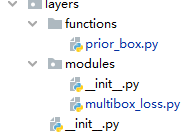

PyTorch RetinaFace: the layer files

(Modified from the SSD source code, with landmark (landm) support added)


prior_box.py

    import torch
    from itertools import product
    import numpy as np
    from math import ceil


    # Generates the prior (anchor) boxes
    class PriorBox(object):
        def __init__(self, cfg, image_size=None, phase='train'):
            super(PriorBox, self).__init__()
            self.min_sizes = cfg['min_sizes']
            self.steps = cfg['steps']
            self.clip = cfg['clip']
            self.image_size = image_size
            # Feature-map (height, width) for each stride in cfg['steps']
            self.feature_maps = [[ceil(self.image_size[0]/step), ceil(self.image_size[1]/step)] for step in self.steps]
            self.name = "s"

        def forward(self):
            anchors = []
            # Iterate over the feature maps, one per scale
            for k, f in enumerate(self.feature_maps):
                min_sizes = self.min_sizes[k]
                # Iterate over every cell of the feature map
                for i, j in product(range(f[0]), range(f[1])):
                    for min_size in min_sizes:
                        # Anchor width and height, normalized by the image size
                        s_kx = min_size / self.image_size[1]
                        s_ky = min_size / self.image_size[0]
                        # Normalized anchor center, i.e. the center of cell (i, j)
                        dense_cx = [x * self.steps[k] / self.image_size[1] for x in [j + 0.5]]
                        dense_cy = [y * self.steps[k] / self.image_size[0] for y in [i + 0.5]]
                        for cy, cx in product(dense_cy, dense_cx):
                            anchors += [cx, cy, s_kx, s_ky]

            # back to torch land: a Tensor of shape [num_priors, 4]
            output = torch.Tensor(anchors).view(-1, 4)
            # Optionally clamp every value to [0, 1]
            if self.clip:
                output.clamp_(max=1, min=0)
            return output
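
To make the anchor layout concrete, here is a minimal plain-Python sketch of the same loop, without torch. The function name `generate_priors` and the values in `example_cfg` are assumptions chosen for illustration; the post does not show the actual config. With three strides and two anchor sizes per cell on a 640x640 input, the loop yields (80*80 + 40*40 + 20*20) * 2 = 16800 anchors.

```python
from itertools import product
from math import ceil


def generate_priors(min_sizes, steps, image_size):
    """Return a list of [cx, cy, w, h] anchors, normalized by the image size."""
    height, width = image_size
    anchors = []
    for sizes, step in zip(min_sizes, steps):
        # Feature-map size at this stride
        rows, cols = ceil(height / step), ceil(width / step)
        for i, j in product(range(rows), range(cols)):
            for size in sizes:
                # Anchor center is the center of cell (i, j)
                cx = (j + 0.5) * step / width
                cy = (i + 0.5) * step / height
                anchors.append([cx, cy, size / width, size / height])
    return anchors


# Assumed, illustrative configuration (not taken from the post)
example_cfg = {
    "min_sizes": [[16, 32], [64, 128], [256, 512]],
    "steps": [8, 16, 32],
}
priors = generate_priors(example_cfg["min_sizes"], example_cfg["steps"], (640, 640))
print(len(priors))  # number of anchors over all three feature maps
print(priors[0])    # first anchor: top-left cell at stride 8, smallest size
```

Every anchor is square in pixels (`size` x `size`); the normalized width and height differ only when the image is not square.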

multibox_loss.py

    import torch
    import torch.nn as nn
    import torch.nn.functional as F
    from torch.autograd import Variable
    from utils.box_utils import match, log_sum_exp
    from data import cfg_mnet

    # Whether the targets are moved to the GPU (read from the config)
    GPU = cfg_mnet['gpu_train']

    class MultiBoxLoss(nn.Module):
        """SSD Weighted Loss Function
        Compute Targets:
            1) Produce Confidence Target Indices by matching ground truth boxes
               with (default) 'priorboxes' that have jaccard index > threshold parameter
               (default threshold: 0.5).
            2) Produce localization target by 'encoding' variance into offsets of ground
               truth boxes and their matched 'priorboxes'.
            3) Hard negative mining to filter the excessive number of negative examples
               that comes with using a large number of default bounding boxes.
               (default negative:positive ratio 3:1)
        Objective Loss:
            L(x,c,l,g) = (Lconf(x, c) + αLloc(x,l,g)) / N
            Where, Lconf is the CrossEntropy Loss and Lloc is the SmoothL1 Loss
            weighted by α which is set to 1 by cross val.
            Args:
                c: class confidences,
                l: predicted boxes,
                g: ground truth boxes
                N: number of matched default boxes
            See: https://arxiv.org/pdf/1512.02325.pdf for more details.
        """

        def __init__(self, num_classes, overlap_thresh, prior_for_matching, bkg_label, neg_mining, neg_pos, neg_overlap, encode_target):
            super(MultiBoxLoss, self).__init__()
            self.num_classes = num_classes
            self.threshold = overlap_thresh
            self.background_label = bkg_label
            self.encode_target = encode_target
            self.use_prior_for_matching = prior_for_matching
            self.do_neg_mining = neg_mining
            self.negpos_ratio = neg_pos
            self.neg_overlap = neg_overlap
            self.variance = [0.1, 0.2]

        def forward(self, predictions, priors, targets):
            """Multibox Loss
            Args:
                predictions (tuple): A tuple containing loc preds, conf preds,
                    and landmark preds from the net.
                    loc shape: torch.size(batch_size,num_priors,4)
                    conf shape: torch.size(batch_size,num_priors,num_classes)
                    landm shape: torch.size(batch_size,num_priors,10)
                priors (tensor): Prior boxes, shape: torch.size(num_priors,4)
                targets: Ground truth for a batch, indexed per image. Each entry
                    has one row per object: columns 0-3 are the box, columns 4-13
                    the five landmarks, and the last column is the label.
            """
            # loc: box offsets, conf: class scores, landm: five facial landmarks
            loc_data, conf_data, landm_data = predictions
            priors = priors  # prior boxes generated by PriorBox
            num = loc_data.size(0)  # batch size
            num_priors = (priors.size(0))  # number of priors per image

            # match priors (default boxes) and ground truth boxes
            # loc_t, conf_t and landm_t hold, for every prior, the matched
            # box target, label and landmark target
            loc_t = torch.Tensor(num, num_priors, 4)
            landm_t = torch.Tensor(num, num_priors, 10)
            conf_t = torch.LongTensor(num, num_priors)
            for idx in range(num):  # one image at a time
                truths = targets[idx][:, :4].data  # ground truth boxes
                labels = targets[idx][:, -1].data  # ground truth labels
                landms = targets[idx][:, 4:14].data  # ground truth landmarks
                defaults = priors.data  # prior boxes
                # Match ground truth to priors; the results for image idx are
                # written into loc_t, conf_t and landm_t
                match(self.threshold, truths, defaults, self.variance, labels, landms, loc_t, conf_t, landm_t, idx)
            if GPU:
                loc_t = loc_t.cuda()
                conf_t = conf_t.cuda()
                landm_t = landm_t.cuda()

            # Scalar zero on the same device as conf_t, so the comparisons
            # below also work on a CPU-only run
            zeros = torch.tensor(0, device=conf_t.device)

            # landm Loss (Smooth L1)
            # Shape: [batch,num_priors,10]
            # Mask of priors whose matched label is > 0, shape [batch, num_priors]
            pos1 = conf_t > zeros
            # Number of such priors per image
            num_pos_landm = pos1.long().sum(1, keepdim=True)
            # Normalizer for the landmark loss (at least 1)
            N1 = max(num_pos_landm.data.sum().float(), 1)
            # Add a trailing dimension and expand to the shape of landm_data
            pos_idx1 = pos1.unsqueeze(pos1.dim()).expand_as(landm_data)
            landm_p = landm_data[pos_idx1].view(-1, 10)  # predicted landmarks of those priors
            landm_t = landm_t[pos_idx1].view(-1, 10)  # target landmarks of those priors
            loss_landm = F.smooth_l1_loss(landm_p, landm_t, reduction='sum')

            # Positives for box regression and classification: any non-zero
            # label, which also keeps negative labels that pos1 excludes
            pos = conf_t != zeros
            conf_t[pos] = 1

            # Localization Loss (Smooth L1)
            # Shape: [batch,num_priors,4]
            # Add a trailing dimension and expand to the shape of loc_data
            pos_idx = pos.unsqueeze(pos.dim()).expand_as(loc_data)
            loc_p = loc_data[pos_idx].view(-1, 4)  # predicted offsets of positive priors
            loc_t = loc_t[pos_idx].view(-1, 4)  # target offsets of positive priors
            loss_l = F.smooth_l1_loss(loc_p, loc_t, reduction='sum')

            # Compute max conf across batch for hard negative mining
            batch_conf = conf_data.view(-1, self.num_classes)
            # Per-prior classification loss: log-sum-exp of the logits minus the
            # target logit, shape [batch*num_priors, 1]
            loss_c = log_sum_exp(batch_conf) - batch_conf.gather(1, conf_t.view(-1, 1))

            # Hard Negative Mining
            loss_c[pos.view(-1, 1)] = 0  # zero out positives so only negatives are ranked
            loss_c = loss_c.view(num, -1)  # back to [batch, num_priors]
            # Sorting twice gives each prior's rank in the descending order of loss
            _, loss_idx = loss_c.sort(1, descending=True)
            _, idx_rank = loss_idx.sort(1)  # rank per prior, 0 = largest loss, [batch, num_priors]
            # Number of positives per image, shape [batch, 1]
            num_pos = pos.long().sum(1, keepdim=True)
            # At most negpos_ratio negatives per positive
            num_neg = torch.clamp(self.negpos_ratio*num_pos, max=pos.size(1)-1)
            # Keep the num_neg highest-loss negatives, shape [batch, num_priors]
            neg = idx_rank < num_neg.expand_as(idx_rank)

            # Confidence Loss Including Positive and Negative Examples
            # Both masks are expanded to [batch, num_priors, num_classes]
            pos_idx = pos.unsqueeze(2).expand_as(conf_data)  # True for positive priors
            neg_idx = neg.unsqueeze(2).expand_as(conf_data)  # True for mined negatives
            # Gather predictions and targets of the selected positives and
            # negatives; priors in neither mask are dropped
            conf_p = conf_data[(pos_idx+neg_idx).gt(0)].view(-1,self.num_classes)
            targets_weighted = conf_t[(pos+neg).gt(0)]
            # Cross-entropy over the selected priors
            loss_c = F.cross_entropy(conf_p, targets_weighted, reduction='sum')

            # Sum of losses: L(x,c,l,g) = (Lconf(x, c) + αLloc(x,l,g)) / N
            # N is the number of positive priors in the batch (at least 1)
            N = max(num_pos.data.sum().float(), 1)
            loss_l /= N
            loss_c /= N
            loss_landm /= N1

            return loss_l, loss_c, loss_landm
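
The double sort used for hard negative mining can be hard to follow, so here is a small plain-Python sketch of the same idea for a single image. The helper names `rank_descending` and `select_hard_negatives` are hypothetical and exist only for this illustration; the real code does the same thing on batched tensors with `sort`.

```python
def rank_descending(values):
    """Rank of each element when sorted from largest to smallest (0 = largest)."""
    # First sort: indices ordered by descending value
    order = sorted(range(len(values)), key=lambda i: values[i], reverse=True)
    # Second "sort": invert the permutation to get each element's position
    rank = [0] * len(values)
    for position, index in enumerate(order):
        rank[index] = position
    return rank


def select_hard_negatives(conf_loss, pos_mask, neg_pos_ratio=3):
    """Return a mask of the highest-loss negatives for one image."""
    # Zero out positives so that only negatives compete for the top ranks
    masked = [0.0 if is_pos else loss for loss, is_pos in zip(conf_loss, pos_mask)]
    rank = rank_descending(masked)
    num_pos = sum(pos_mask)
    # At most neg_pos_ratio negatives per positive, capped at num_priors - 1
    num_neg = min(neg_pos_ratio * num_pos, len(pos_mask) - 1)
    return [r < num_neg for r in rank]


conf_loss = [0.2, 0.9, 0.7, 0.5, 0.1]          # made-up per-prior losses
pos_mask = [False, False, True, False, False]  # prior 2 is the only positive
print(select_hard_negatives(conf_loss, pos_mask))
# [True, True, False, True, False]
```

With one positive and a 3:1 ratio, the three negatives with the largest loss (priors 1, 3 and 0) are kept and the easiest negative (prior 4) is dropped.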

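The expression `log_sum_exp(batch_conf) - batch_conf.gather(...)` is the softmax cross-entropy of each prior, written in terms of logits. The sketch below checks this for a single prior in plain Python. The `log_sum_exp` here is an assumed, numerically stable stand-in: the post imports the real helper from `utils.box_utils` but does not show its body.

```python
import math


def log_sum_exp(logits):
    """Stable log(sum(exp(x))): subtract the max before exponentiating."""
    m = max(logits)
    return m + math.log(sum(math.exp(v - m) for v in logits))


def per_prior_conf_loss(logits, target):
    """Log-sum-exp of the logits minus the target logit."""
    return log_sum_exp(logits) - logits[target]


def softmax_cross_entropy(logits, target):
    """Reference value: -log(softmax(logits)[target])."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    return -math.log(exps[target] / sum(exps))


logits = [2.0, -1.0]  # made-up background/face scores for one prior
print(per_prior_conf_loss(logits, 0), softmax_cross_entropy(logits, 0))
print(per_prior_conf_loss(logits, 1), softmax_cross_entropy(logits, 1))
```

Both columns agree up to floating-point error. Subtracting the maximum keeps `exp` from overflowing when a logit is large.
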