
Other articles in this series:

- A survey of papers on incremental few-shot object detection.

- Bug-fix notes from setting up the code for the paper "Sylph: A Hypernetwork Framework for Incremental Few-shot Object Detection".

- To be continued…

Single-GPU vs. multi-GPU training on one machine with the same batch_size

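1. Background

- My research area is incremental few-shot object detection.

- I am reproducing the paper "Sylph: A Hypernetwork Framework for Incremental Few-shot Object Detection".

- Paper code: https://github.com/facebookresearch/sylph-few-shot-detection

Although the code is open source, no training logs were released, and when asked in an issue the authors replied that they could not help either.

The paper's code is built on AdelaiDet + detectron2 + mobile-vision + d2go, so it differs from a plain detectron2 codebase. If the checks below look trivial to you, feel free to skip them, and please go easy on me.

The configs in the paper mostly assume a cluster of 64 GPUs, and most of us do not have that much compute to reproduce them. One of these config files is shown below.

Its path is: sylph-few-shot-detection/configs/COCO-Detection/Meta-FCOS-pretrain.yaml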

# Note: REFERENCE_WORLD_SIZE is the number of gpus, IMS_PER_BATCH/REFERENCE_WORLD_SIZE is the images/gpu
# modify this to your use
_BASE_: "Base-FCOS.yaml"
......
SOLVER:
  IMS_PER_BATCH: 128 # total batch size per iteration = number of GPUs * batch size per GPU
  BASE_LR: 0.01 # Note that RetinaNet uses a different default learning rate
  STEPS: (60000, 80000)
  MAX_ITER: 90000
  REFERENCE_WORLD_SIZE: 64 # trained on a cluster of 64 GPUs
  CHECKPOINT_PERIOD: 10000
  # LR_MULTIPLIER_OVERWRITE, [{'backbone': 0.1}, {'reference_points': 0.1, 'sampling_offsets': 0.1}]
......

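If only 1 GPU is used for training, the auto_scale_world_size function in d2go rescales the run parameters according to the actual number of GPUs.

The auto_scale_world_size function is implemented as follows: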

def auto_scale_world_size(cfg, new_world_size):
    """
    Usually the config file is written for a specific number of devices, this method
    scales the config (in-place!) according to the actual world size using the
    pre-registered scaling methods specified as cfg.SOLVER.AUTO_SCALING_METHODS.

    Note for registering scaling methods:
        - The method will only be called when scaling is needed. It won't be called
            if SOLVER.REFERENCE_WORLD_SIZE is 0 or equal to target world size. Thus
            cfg.SOLVER.REFERENCE_WORLD_SIZE will always be positive.
        - The method updates cfg in-place, no return is required.
        - No need for changing SOLVER.REFERENCE_WORLD_SIZE.

    Args:
        cfg (CfgNode): original config which contains SOLVER.REFERENCE_WORLD_SIZE and
            SOLVER.AUTO_SCALING_METHODS.
        new_world_size: the target world size
    """
    old_world_size = cfg.SOLVER.REFERENCE_WORLD_SIZE
    if old_world_size == 0 or old_world_size == new_world_size:
        return cfg

    if len(cfg.SOLVER.AUTO_SCALING_METHODS) == 0:
        return cfg

    original_cfg = cfg.clone()
    frozen = original_cfg.is_frozen()
    cfg.defrost()

    assert len(cfg.SOLVER.AUTO_SCALING_METHODS) > 0, cfg.SOLVER.AUTO_SCALING_METHODS
    for scaling_method in cfg.SOLVER.AUTO_SCALING_METHODS:
        logger.info("Applying auto scaling method: {}".format(scaling_method))
        CONFIG_SCALING_METHOD_REGISTRY.get(scaling_method)(cfg, new_world_size)

    assert (
        cfg.SOLVER.REFERENCE_WORLD_SIZE == cfg.SOLVER.REFERENCE_WORLD_SIZE
    ), "Runner's scale_world_size shouldn't change SOLVER.REFERENCE_WORLD_SIZE"
    cfg.SOLVER.REFERENCE_WORLD_SIZE = new_world_size

    if frozen:
        cfg.freeze()

    from d2go.config.utils import get_cfg_diff_table

    table = get_cfg_diff_table(cfg, original_cfg)
    logger.info("Auto-scaled the config according to the actual world size: \n" + table)

When running on 1 GPU, the converted parameters are:

[06/28 21:26:30] d2go.config.config INFO: Applying auto scaling method: default_scale_d2_configs

[06/28 21:26:30] d2go.config.config INFO: Applying auto scaling method: default_scale_quantization_configs

[06/28 21:26:30] d2go.config.config INFO: Auto-scaled the config according to the actual world size:

| config key | old value | new value |

|:------------------------------------------------|:---------------|:-------------------|

| QUANTIZATION.QAT.DISABLE_OBSERVER_ITER | 38000 | 2432000 |

| QUANTIZATION.QAT.ENABLE_LEARNABLE_OBSERVER_ITER | 36000 | 2304000 |

| QUANTIZATION.QAT.ENABLE_OBSERVER_ITER | 35000 | 2240000 |

| QUANTIZATION.QAT.FREEZE_BN_ITER | 37000 | 2368000 |

| QUANTIZATION.QAT.START_ITER | 35000 | 2240000 |

| SOLVER.BASE_LR | 0.01 | 0.00015625 |

| SOLVER.IMS_PER_BATCH | 128 | 2 |

| SOLVER.MAX_ITER | 90000 | 5760000 |

| SOLVER.REFERENCE_WORLD_SIZE | 64 | 1 |

| SOLVER.STEPS | (60000, 80000) | (3840000, 5120000) |

| SOLVER.WARMUP_ITERS | 1000 | 64000 |
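The SOLVER rows of the table above can be reproduced with a few lines of arithmetic. The sketch below is not d2go's implementation: `SolverCfg` and `scale_for_world_size` are hypothetical names used only for illustration, and the warmup value of 1000 is taken from the "old value" column of the log. It assumes the batch size and learning rate are multiplied by new_world_size / old_world_size while all iteration counts are multiplied by the inverse.

```python
from dataclasses import dataclass, replace
from typing import Tuple


@dataclass(frozen=True)
class SolverCfg:
    """Hypothetical stand-in for the SOLVER section of the config."""
    ims_per_batch: int
    base_lr: float
    max_iter: int
    steps: Tuple[int, ...]
    warmup_iters: int
    reference_world_size: int


def scale_for_world_size(cfg: SolverCfg, new_world_size: int) -> SolverCfg:
    """Rescale the solver settings so the per-GPU batch size stays constant."""
    old = cfg.reference_world_size
    if old == 0 or old == new_world_size:
        return cfg  # nothing to scale, same early exit as in the quoted function
    gpu_scale = new_world_size / old   # 1 / 64 in the example below
    iter_scale = old / new_world_size  # 64 in the example below
    return replace(
        cfg,
        ims_per_batch=int(round(cfg.ims_per_batch * gpu_scale)),
        base_lr=cfg.base_lr * gpu_scale,
        max_iter=int(round(cfg.max_iter * iter_scale)),
        steps=tuple(int(round(s * iter_scale)) for s in cfg.steps),
        warmup_iters=int(round(cfg.warmup_iters * iter_scale)),
        reference_world_size=new_world_size,
    )


# Values from the 64-GPU config and the "old value" column of the log.
paper_cfg = SolverCfg(128, 0.01, 90000, (60000, 80000), 1000, 64)
single_gpu_cfg = scale_for_world_size(paper_cfg, 1)
print(single_gpu_cfg)
```

Under these assumptions the result matches the "new value" column: 2 images per batch, a learning rate of 0.00015625, and 5,760,000 iterations.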

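The table shows that the per-GPU batch size is the same before and after the conversion. The idea is to split what used to be one large-batch step into many small-batch steps.

The batch size works out as follows. The total batch size shrinks because there are fewer GPUs, but the batch size on each GPU does not change:

$$128 / 64 = 2 / 1 = 2$$

The number of iterations follows from keeping the total number of processed images constant:

$$128 \times 90000 = 2 \times \text{new\_iters}, \qquad \text{new\_iters} = 5760000$$

The other parameters are scaled proportionally in the same way. The key ones are Batch_Size, Base_LR, and the iteration-related settings. For the reasoning behind this scaling, see the paper "Accurate, Large Minibatch SGD: Training ImageNet in 1 Hour".

At more than five million iterations, this approach takes far too long, so the paper cannot realistically be reproduced this way. Note, however, that the per-GPU batch size here is very small. If you have only a few GPUs but each has plenty of memory, this setup wastes most of that memory, so the utilization of each GPU should be raised. How should the parameters be configured in that case?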

Tips: I have two Quadro RTX 5000 cards (16 GB of memory each).

2. Tasks

The experiments below verify the following:

- Keep the original total Batch_Size (SOLVER.IMS_PER_BATCH in the config file), learning rate (SOLVER.BASE_LR), number of iterations (SOLVER.MAX_ITER), and number of GPUs (SOLVER.REFERENCE_WORLD_SIZE) to obtain a baseline result.

- With the total Batch_Size (SOLVER.IMS_PER_BATCH), learning rate (SOLVER.BASE_LR), and number of iterations (SOLVER.MAX_ITER) unchanged, reduce the number of GPUs (SOLVER.REFERENCE_WORLD_SIZE) and check whether the result changes much.

- Use each GPU as fully as possible: increase the per-GPU Batch_Size, which increases the total Batch_Size (SOLVER.IMS_PER_BATCH), then scale the learning rate (SOLVER.BASE_LR) up and the number of iterations (SOLVER.MAX_ITER) down linearly, and check whether the result changes much.

The config file used is sylph-few-shot-detection/configs/COCO-Detection/TFA/FCOS_pretrain.yaml, with the following contents:

MODEL:
  META_ARCHITECTURE: "MetaOneStageDetector"
  BACKBONE:
    NAME: "build_fcos_resnet_fpn_backbone"
    FREEZE: False # freeze backbone
  RESNETS:
    OUT_FEATURES: ["res3", "res4", "res5"]
  FPN:
    IN_FEATURES: ["res3", "res4", "res5"]
  PROPOSAL_GENERATOR:
    NAME: "MetaFCOS"
  META_LEARN:
    EPISODIC_LEARNING: False # pretraining
  TFA:
    TRAIN_SHOT: 10
  FCOS:
    NUM_CLASSES: 60 #80
  # PIXEL_MEAN: [102.9801, 115.9465, 122.7717]
DATASETS:
  # TRAIN: ("coco_pretrain_train_all",)
  # TEST: ("coco_pretrain_val_all", ) # "coco_pretrain_val_novel", )
  TRAIN: ("coco_pretrain_train_base",)
  TEST: ("coco_pretrain_val_base", ) # "coco_pretrain_val_novel", )
TEST:
  EVAL_PERIOD: 10000
SOLVER:
  IMS_PER_BATCH: 16
  BASE_LR: 0.01 # Note that RetinaNet uses a different default learning rate
  STEPS: (60000, 80000)
  MAX_ITER: 10 #90000
  # LR_MULTIPLIER_OVERWRITE, [{'backbone': 0.1}, {'reference_points': 0.1, 'sampling_offsets': 0.1}]
INPUT:
  MIN_SIZE_TRAIN: (640, 672, 704, 736, 768, 800)

If SOLVER.REFERENCE_WORLD_SIZE is not set, its default value is 8.

2.1 Baseline result with two GPUs:

IMS_PER_BATCH: 16, BASE_LR: 0.01, MAX_ITER: 30000, REFERENCE_WORLD_SIZE: 2, STEPS: (20000, 25000)

The config is:

DATASETS:
  TEST:
  - coco_pretrain_val_base
  TRAIN:
  - coco_pretrain_train_base
INPUT:
  MIN_SIZE_TRAIN:
  - 640
  - 672
  - 704
  - 736
  - 768
  - 800
MODEL:
  BACKBONE:
    NAME: build_fcos_resnet_fpn_backbone
  DDP_FIND_UNUSED_PARAMETERS: true
  FCOS:
    NUM_CLASSES: 60
  FPN:
    IN_FEATURES:
    - res3
    - res4
    - res5
  META_ARCHITECTURE: MetaOneStageDetector
  PROPOSAL_GENERATOR:
    NAME: MetaFCOS
  RESNETS:
    OUT_FEATURES:
    - res3
    - res4
    - res5
  WEIGHTS: detectron2://ImageNetPretrained/MSRA/R-50.pkl
OUTPUT_DIR: output_sylph/TFA_shuangka/coco/pretrain/
SOLVER:
  IMS_PER_BATCH: 16
  BASE_LR: 0.01
  MAX_ITER: 30000
  REFERENCE_WORLD_SIZE: 2
  STEPS:
  - 20000
  - 25000
TEST:
  EVAL_PERIOD: 10000

Training results:

[09/02 03:46:48] d2.engine.hooks INFO: Overall training speed: 29998 iterations in 11:05:49 (1.3317 s / it)

[09/02 03:46:48] d2.engine.hooks INFO: Total training time: 11:17:36 (0:11:47 on hooks) # total training time

[09/02 03:50:43] d2.evaluation.coco_evaluation INFO: Preparing results for COCO format ...

[09/02 03:50:43] d2.evaluation.coco_evaluation INFO: Saving results to output_sylph/TFA_shuangka/coco/pretrain/inference/default/final/coco_pretrain_val_base/coco_instances_results.json

[09/02 03:50:45] d2.evaluation.coco_evaluation INFO: Evaluating predictions with official COCO API...

[09/02 03:52:09] d2.evaluation.coco_evaluation INFO: Evaluation results for bbox:

| AP | AP50 | AP75 | APs | APm | APl | ARdet1 | ARdet10 | ARdet100 | ARs | ARm | ARl |

|:------:|:------:|:------:|:------:|:------:|:------:|:--------:|:---------:|:----------:|:------:|:------:|:------:|

| 32.887 | 50.833 | 35.163 | 19.228 | 37.868 | 42.126 | 29.625 | 50.698 | 54.897 | 38.173 | 60.524 | 69.414 |

[09/02 03:52:09] d2.evaluation.coco_evaluation INFO: Per-category bbox AP:

| category | AP | category | AP | category | AP |

|:---------------|:-------|:--------------|:-------|:-------------|:-------|

| truck | 25.783 | traffic light | 24.838 | fire hydrant | 58.756 |

| stop sign | 60.566 | parking meter | 36.520 | bench | 18.567 |

| elephant | 53.398 | bear | 59.048 | zebra | 60.039 |

| giraffe | 60.111 | backpack | 13.367 | umbrella | 34.310 |

| handbag | 12.899 | tie | 27.715 | suitcase | 30.286 |

| frisbee | 62.595 | skis | 15.911 | snowboard | 21.826 |

| sports ball | 43.707 | kite | 32.891 | baseball bat | 25.121 |

| baseball glove | 34.357 | skateboard | 44.367 | surfboard | 31.114 |

| tennis racket | 44.440 | wine glass | 33.262 | cup | 37.429 |

| fork | 20.322 | knife | 11.687 | spoon | 9.416 |

| bowl | 38.036 | banana | 20.496 | apple | 17.709 |

| sandwich | 29.315 | orange | 29.050 | broccoli | 20.888 |

| carrot | 17.289 | hot dog | 24.932 | pizza | 46.245 |

| donut | 41.060 | cake | 30.549 | bed | 33.140 |

| toilet | 52.188 | laptop | 51.783 | mouse | 57.023 |

| remote | 23.473 | keyboard | 41.127 | cell phone | 30.168 |

| microwave | 49.580 | oven | 27.093 | toaster | 9.304 |

| sink | 31.164 | refrigerator | 44.687 | book | 11.876 |

| clock | 48.607 | vase | 35.526 | scissors | 17.430 |

| teddy bear | 35.318 | hair drier | 0.712 | toothbrush | 12.794 |

[09/02 03:52:10] d2.evaluation.testing INFO: copypaste: Task: bbox

[09/02 03:52:10] d2.evaluation.testing INFO: copypaste: AP,AP50,AP75,APs,APm,APl,ARdet1,ARdet10,ARdet100,ARs,ARm,ARl

[09/02 03:52:10] d2.evaluation.testing INFO: copypaste: 32.8868,50.8326,35.1630,19.2278,37.8680,42.1257,29.6249,50.6976,54.8969,38.1732,60.5238,69.4136

[09/02 03:52:10] d2go.utils.misc INFO: Dumping trained config file: output_sylph/TFA_shuangka/coco/pretrain/trained_model_configs/model_final.yaml

[09/02 03:52:10] d2go.utils.misc INFO: Finished dumping trained config file

[09/02 03:52:10] mobile_cv.torch.utils_pytorch.distributed_helper INFO: Save tools.setup.new_main_func return to: /tmp/mcvdh_detectron2.utils.serialize.new_main_func_returnpriwfza6.pth.rank0

Tips: DDP_FIND_UNUSED_PARAMETERS: true must be set for the two-GPU run.

2.2 Result with one GPU:

IMS_PER_BATCH: 16, BASE_LR: 0.01, MAX_ITER: 30000, REFERENCE_WORLD_SIZE: 1, STEPS: (20000, 25000)

The config is:

DATASETS:
  TEST:
  - coco_pretrain_val_base
  TRAIN:
  - coco_pretrain_train_base
INPUT:
  MIN_SIZE_TRAIN:
  - 640
  - 672
  - 704
  - 736
  - 768
  - 800
MODEL:
  BACKBONE:
    NAME: build_fcos_resnet_fpn_backbone
  FCOS:
    NUM_CLASSES: 60
  FPN:
    IN_FEATURES:
    - res3
    - res4
    - res5
  META_ARCHITECTURE: MetaOneStageDetector
  PROPOSAL_GENERATOR:
    NAME: MetaFCOS
  RESNETS:
    OUT_FEATURES:
    - res3
    - res4
    - res5
  WEIGHTS: detectron2://ImageNetPretrained/MSRA/R-50.pkl
OUTPUT_DIR: output_sylph/TFA_danka/coco/pretrain/
SOLVER:
  IMS_PER_BATCH: 16
  BASE_LR: 0.01
  MAX_ITER: 30000
  REFERENCE_WORLD_SIZE: 1
  STEPS:
  - 20000
  - 25000
TEST:
  EVAL_PERIOD: 10000

Training results:

[09/01 15:07:15] d2.engine.hooks INFO: Overall training speed: 29998 iterations in 19:58:49 (2.3978 s / it)

[09/01 15:07:15] d2.engine.hooks INFO: Total training time: 20:15:22 (0:16:32 on hooks) # total training time

[09/01 15:13:40] d2.evaluation.coco_evaluation INFO: Preparing results for COCO format ...

[09/01 15:13:41] d2.evaluation.coco_evaluation INFO: Saving results to output_sylph/TFA_danka/coco/pretrain/inference/default/final/coco_pretrain_val_base/coco_instances_results.json

[09/01 15:13:43] d2.evaluation.coco_evaluation INFO: Evaluating predictions with official COCO API...

[09/01 15:15:05] d2.evaluation.coco_evaluation INFO: Evaluation results for bbox:

| AP | AP50 | AP75 | APs | APm | APl | ARdet1 | ARdet10 | ARdet100 | ARs | ARm | ARl |

|:------:|:------:|:------:|:------:|:------:|:------:|:--------:|:---------:|:----------:|:------:|:------:|:------:|

| 32.882 | 50.836 | 35.346 | 19.495 | 37.770 | 41.777 | 30.014 | 50.698 | 55.017 | 38.052 | 60.512 | 69.699 |

[09/01 15:15:05] d2.evaluation.coco_evaluation INFO: Per-category bbox AP:

| category | AP | category | AP | category | AP |

|:---------------|:-------|:--------------|:-------|:-------------|:-------|

| truck | 26.234 | traffic light | 24.540 | fire hydrant | 57.536 |

| stop sign | 61.126 | parking meter | 39.529 | bench | 19.172 |

| elephant | 52.451 | bear | 57.825 | zebra | 59.816 |

| giraffe | 60.008 | backpack | 13.684 | umbrella | 33.598 |

| handbag | 12.576 | tie | 27.643 | suitcase | 30.193 |

| frisbee | 60.575 | skis | 16.626 | snowboard | 22.355 |

| sports ball | 42.712 | kite | 35.412 | baseball bat | 24.698 |

| baseball glove | 33.945 | skateboard | 44.483 | surfboard | 31.571 |

| tennis racket | 44.272 | wine glass | 32.958 | cup | 37.498 |

| fork | 21.523 | knife | 10.945 | spoon | 8.277 |

| bowl | 37.415 | banana | 20.202 | apple | 16.854 |

| sandwich | 28.217 | orange | 29.647 | broccoli | 21.222 |

| carrot | 18.313 | hot dog | 25.558 | pizza | 46.495 |

| donut | 42.006 | cake | 31.377 | bed | 32.272 |

| toilet | 51.671 | laptop | 51.830 | mouse | 58.112 |

| remote | 23.474 | keyboard | 41.571 | cell phone | 28.927 |

| microwave | 48.750 | oven | 26.837 | toaster | 10.041 |

| sink | 32.883 | refrigerator | 44.787 | book | 12.040 |

| clock | 47.192 | vase | 35.346 | scissors | 17.160 |

| teddy bear | 34.904 | hair drier | 0.921 | toothbrush | 13.110 |

[09/01 15:15:06] d2.evaluation.testing INFO: copypaste: Task: bbox

[09/01 15:15:06] d2.evaluation.testing INFO: copypaste: AP,AP50,AP75,APs,APm,APl,ARdet1,ARdet10,ARdet100,ARs,ARm,ARl

[09/01 15:15:06] d2.evaluation.testing INFO: copypaste: 32.8819,50.8364,35.3460,19.4948,37.7705,41.7771,30.0138,50.6982,55.0174,38.0516,60.5119,69.6988

[09/01 15:15:06] d2go.utils.misc INFO: Dumping trained config file: output_sylph/TFA_danka/coco/pretrain/trained_model_configs/model_final.yaml

[09/01 15:15:06] d2go.utils.misc INFO: Finished dumping trained config file

[09/01 15:15:06] mobile_cv.torch.utils_pytorch.distributed_helper INFO: Launched jobs finished, dist_url: file:///tmp/d2go_dist_file_1725101434.1924598

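The two runs give nearly identical results (AP 32.887 vs. 32.882), which leads to this conclusion:

When the total batch size, learning rate, and number of iterations are kept fixed, the training result is essentially independent of the number of GPUs, so a single GPU with a lot of memory can stand in for several GPUs with less memory.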
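One way to see why the GPU count should not matter is that data-parallel training averages the per-GPU gradients, and the average of the gradients over equal-sized shards equals the gradient over the whole batch. The toy sketch below checks this for a one-dimensional linear model in plain Python; the model, the data, and the function name `mse_grad` are made up for illustration and have nothing to do with the detector itself.

```python
import random


def mse_grad(w, b, xs, ys):
    """Gradient of the mean squared error of y ~ w*x + b over one (sub)batch."""
    n = len(xs)
    gw = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
    gb = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
    return gw, gb


random.seed(0)
xs = [random.uniform(-1, 1) for _ in range(16)]  # a total batch of 16 samples
ys = [3 * x + 0.5 + random.gauss(0, 0.1) for x in xs]
w, b = 0.0, 0.0

# "One GPU": gradient over all 16 samples at once.
full = mse_grad(w, b, xs, ys)

# "Two GPUs": each worker sees 8 samples, then the two gradients are averaged.
g0 = mse_grad(w, b, xs[:8], ys[:8])
g1 = mse_grad(w, b, xs[8:], ys[8:])
averaged = tuple((a + c) / 2 for a, c in zip(g0, g1))

print(full)
print(averaged)
```

The two printed gradients agree up to floating-point rounding. In a real detector, data ordering, nondeterministic kernels, and any layer that uses per-device batch statistics can still introduce small differences, which is consistent with the tiny gap between the two runs above.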

The loss curves visualized with TensorBoard support the same conclusion (see the end of the article).

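2.3 Result with two GPUs and the largest per-GPU batch size:

IMS_PER_BATCH: 30, BASE_LR: 0.02, MAX_ITER: 15000, REFERENCE_WORLD_SIZE: 2, STEPS: (10000, 12500)

Tips: IMS_PER_BATCH should have been 32, but a per-GPU batch size of 16 runs out of GPU memory, so it is set to 30 (15 images per GPU).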
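To make the effect of using 30 instead of 32 concrete, the sketch below applies the linear scaling rule to the baseline settings (16, 0.01, 30000) for both batch sizes. `scale_by_batch` is a hypothetical helper written for this note, not part of d2go or detectron2.

```python
def scale_by_batch(base_batch, base_lr, base_iters, new_batch):
    """Linear scaling rule: lr grows with the batch, iterations shrink with it."""
    k = new_batch / base_batch
    return base_lr * k, round(base_iters / k)


baseline = (16, 0.01, 30000)  # IMS_PER_BATCH, BASE_LR, MAX_ITER from section 2.1

lr_32, iters_32 = scale_by_batch(*baseline, new_batch=32)
lr_30, iters_30 = scale_by_batch(*baseline, new_batch=30)

images_baseline = 16 * 30000
images_used = 30 * 15000  # settings actually used in this experiment

print(lr_32, iters_32)
print(lr_30, iters_30)
print(images_used / images_baseline)
```

For a batch of 32 the rule gives a learning rate of 0.02 and 15000 iterations, which are the values used here. For a batch of 30 it would give 0.01875 and 16000 iterations, so this run sees 93.75% of the images that the baseline sees, with a slightly higher learning rate per image.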

The config is:

DATASETS:
  TEST:
  - coco_pretrain_val_base
  TRAIN:
  - coco_pretrain_train_base
INPUT:
  MIN_SIZE_TRAIN:
  - 640
  - 672
  - 704
  - 736
  - 768
  - 800
MODEL:
  BACKBONE:
    NAME: build_fcos_resnet_fpn_backbone
  DDP_FIND_UNUSED_PARAMETERS: true
  FCOS:
    NUM_CLASSES: 60
  FPN:
    IN_FEATURES:
    - res3
    - res4
    - res5
  META_ARCHITECTURE: MetaOneStageDetector
  PROPOSAL_GENERATOR:
    NAME: MetaFCOS
  RESNETS:
    OUT_FEATURES:
    - res3
    - res4
    - res5
  WEIGHTS: detectron2://ImageNetPretrained/MSRA/R-50.pkl
OUTPUT_DIR: output_sylph/TFA_shuangbeibs/coco/pretrain/
SOLVER:
  BASE_LR: 0.02
  IMS_PER_BATCH: 30
  MAX_ITER: 15000
  REFERENCE_WORLD_SIZE: 2
  STEPS:
  - 10000
  - 12500
TEST:
  EVAL_PERIOD: 10000

Training results:

[09/02 19:14:36] d2.engine.hooks INFO: Overall training speed: 14998 iterations in 9:43:15 (2.3333 s / it)

[09/02 19:14:36] d2.engine.hooks INFO: Total training time: 9:49:10 (0:05:55 on hooks) # total training time

[09/02 19:18:28] d2.evaluation.coco_evaluation INFO: Preparing results for COCO format ...

[09/02 19:18:29] d2.evaluation.coco_evaluation INFO: Saving results to output_sylph/TFA_shuangbeibs/coco/pretrain/inference/default/final/coco_pretrain_val_base/coco_instances_results.json

[09/02 19:18:31] d2.evaluation.coco_evaluation INFO: Evaluating predictions with official COCO API...

[09/02 19:19:53] d2.evaluation.coco_evaluation INFO: Evaluation results for bbox:

| AP | AP50 | AP75 | APs | APm | APl | ARdet1 | ARdet10 | ARdet100 | ARs | ARm | ARl |

|:------:|:------:|:------:|:------:|:------:|:------:|:--------:|:---------:|:----------:|:------:|:------:|:------:|

| 32.662 | 50.715 | 35.003 | 19.986 | 37.365 | 41.966 | 29.936 | 50.815 | 54.903 | 39.312 | 60.476 | 69.437 |

[09/02 19:19:53] d2.evaluation.coco_evaluation INFO: Per-category bbox AP:

| category | AP | category | AP | category | AP |

|:---------------|:-------|:--------------|:-------|:-------------|:-------|

| truck | 24.719 | traffic light | 25.109 | fire hydrant | 56.087 |

| stop sign | 59.856 | parking meter | 36.393 | bench | 18.575 |

| elephant | 52.799 | bear | 55.483 | zebra | 60.004 |

| giraffe | 58.331 | backpack | 12.968 | umbrella | 33.007 |

| handbag | 12.360 | tie | 27.676 | suitcase | 31.035 |

| frisbee | 61.520 | skis | 16.335 | snowboard | 24.008 |

| sports ball | 43.596 | kite | 36.135 | baseball bat | 24.210 |

| baseball glove | 34.805 | skateboard | 44.214 | surfboard | 31.307 |

| tennis racket | 45.242 | wine glass | 32.089 | cup | 37.601 |

| fork | 20.472 | knife | 10.713 | spoon | 9.313 |

| bowl | 37.740 | banana | 20.521 | apple | 18.616 |

| sandwich | 27.233 | orange | 30.228 | broccoli | 21.418 |

| carrot | 16.801 | hot dog | 24.280 | pizza | 46.001 |

| donut | 39.685 | cake | 31.516 | bed | 30.078 |

| toilet | 49.645 | laptop | 51.554 | mouse | 57.478 |

| remote | 24.332 | keyboard | 41.024 | cell phone | 29.425 |

| microwave | 46.203 | oven | 26.922 | toaster | 16.231 |

| sink | 31.143 | refrigerator | 43.622 | book | 11.921 |

| clock | 47.546 | vase | 34.431 | scissors | 17.359 |

| teddy bear | 36.482 | hair drier | 0.428 | toothbrush | 13.893 |

[09/02 19:19:54] d2.evaluation.testing INFO: copypaste: Task: bbox

[09/02 19:19:54] d2.evaluation.testing INFO: copypaste: AP,AP50,AP75,APs,APm,APl,ARdet1,ARdet10,ARdet100,ARs,ARm,ARl

[09/02 19:19:54] d2.evaluation.testing INFO: copypaste: 32.6619,50.7154,35.0027,19.9863,37.3647,41.9661,29.9360,50.8152,54.9029,39.3122,60.4762,69.4366

[09/02 19:19:54] d2go.utils.misc INFO: Dumping trained config file: output_sylph/TFA_shuangbeibs/coco/pretrain/trained_model_configs/model_final.yaml

[09/02 19:19:54] d2go.utils.misc INFO: Finished dumping trained config file

[09/02 19:19:54] mobile_cv.torch.utils_pytorch.distributed_helper INFO: Save tools.setup.new_main_func return to: /tmp/mcvdh_detectron2.utils.serialize.new_main_func_returnp17qhdor.pth.rank0

3. Results and conclusions

Definitions:

- Method 1: the baseline, using 2 GPUs with the total batch size, iterations, and base_lr unchanged.

- Method 2: 1 GPU, with the total Batch_Size, iterations, and base_lr unchanged.

- Method 3: 2 GPUs, with iterations halved, Batch_Size roughly doubled, and base_lr doubled.

3.1 Training results:

| Method | AP | AP50 | AP75 | APs | APm | APl | ARdet1 | ARdet10 | ARdet100 | ARs | ARm | ARl |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Method 1 | 32.887 | 50.833 | 35.163 | 19.228 | 37.868 | 42.126 | 29.625 | 50.698 | 54.897 | 38.173 | 60.524 | 69.414 |
| Method 2 | 32.882 | 50.836 | 35.346 | 19.495 | 37.770 | 41.777 | 30.014 | 50.698 | 55.017 | 38.052 | 60.512 | 69.699 |
| Method 3 | 32.662 | 50.715 | 35.003 | 19.986 | 37.365 | 41.966 | 29.936 | 50.815 | 54.903 | 39.312 | 60.476 | 69.437 |

The three results are close, especially Method 1 and Method 2. Method 3 trails by about 0.2 AP, most likely because the total Batch_Size should have been 32 but was set to 30 to avoid running out of GPU memory.

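3.2 Total training time:

| Baseline (2 GPUs) | Iterations unchanged (1 GPU) | Iterations changed (2 GPUs) |
|---|---|---|
| 11:17:36 | 20:15:22 | 9:49:10 |

Using a larger Batch_Size does shorten training (9:49:10 versus 11:17:36 for the baseline, about 13% less) while reaching nearly the same accuracy.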
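The times above can be turned into relative cost and throughput with a short script. This is only a convenience sketch: the run names are labels made up here, and the times, batch sizes, and seconds per iteration are copied from the training logs in section 2.

```python
def to_seconds(hms: str) -> int:
    """Convert 'H:MM:SS' (as printed in the training logs) to seconds."""
    h, m, s = (int(part) for part in hms.split(":"))
    return h * 3600 + m * 60 + s


runs = {
    # label: (total training time, images per iteration, seconds per iteration)
    "method1_2gpu_bs16": ("11:17:36", 16, 1.3317),
    "method2_1gpu_bs16": ("20:15:22", 16, 2.3978),
    "method3_2gpu_bs30": ("9:49:10", 30, 2.3333),
}

baseline_s = to_seconds(runs["method1_2gpu_bs16"][0])
for name, (total, batch, sec_per_it) in runs.items():
    rel = to_seconds(total) / baseline_s  # wall-clock time relative to Method 1
    throughput = batch / sec_per_it       # images processed per second
    print(f"{name}: {rel:.2f}x baseline time, {throughput:.1f} images/s")
```

By this measure the single-GPU run takes roughly 1.8 times as long as the baseline, and the large-batch run roughly 0.87 times as long, with a slightly higher image throughput than the baseline.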

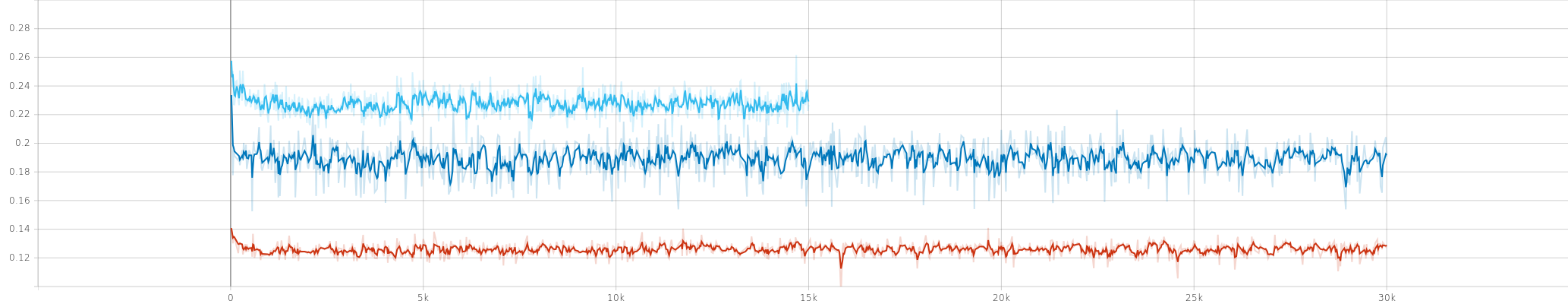

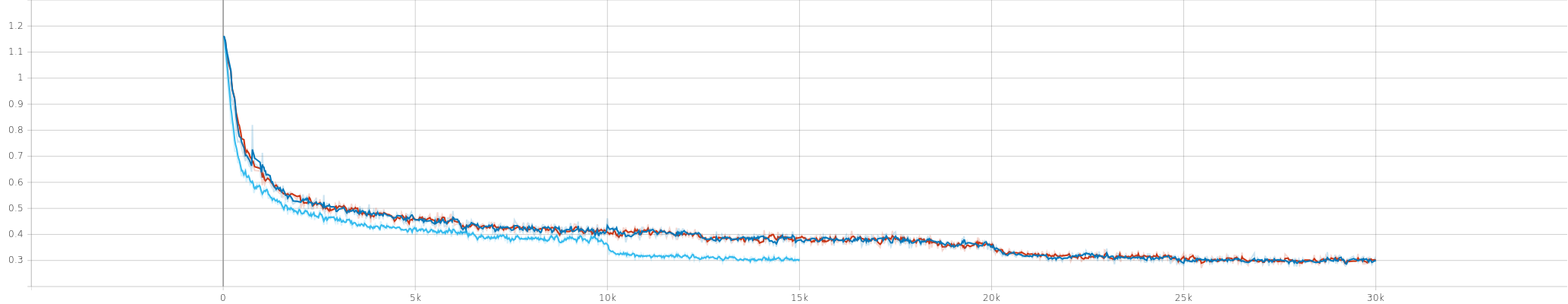

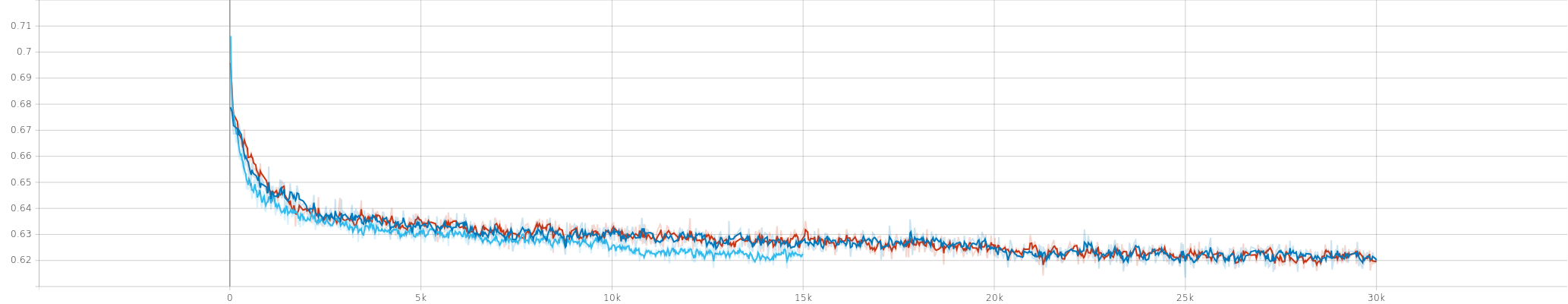

3.3 TensorBoard visualizations:

- Cyan/indigo is Method 3: iterations changed (2 GPUs)

- Light blue is Method 2: iterations unchanged (1 GPU)

- Orange is Method 1: Baseline (2 GPUs)

Training time

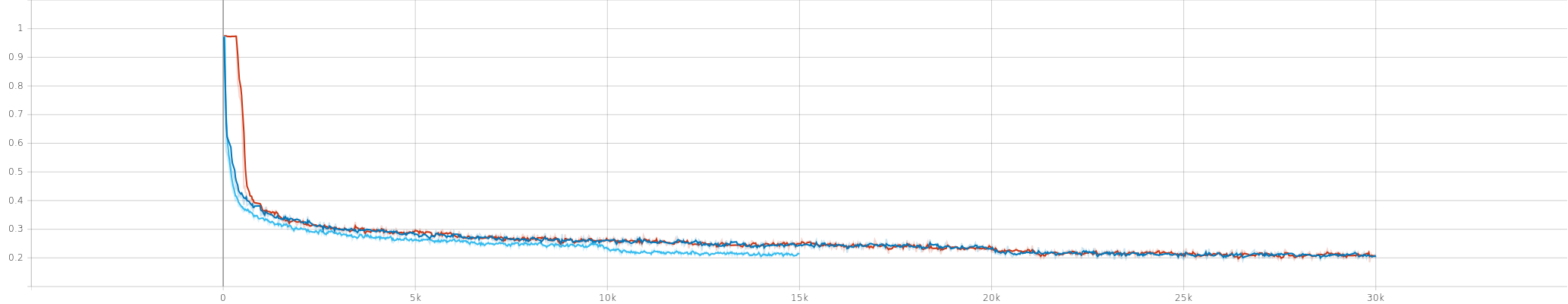

loss_fcos_cls

loss_fcos_ctr

loss_fcos_loc

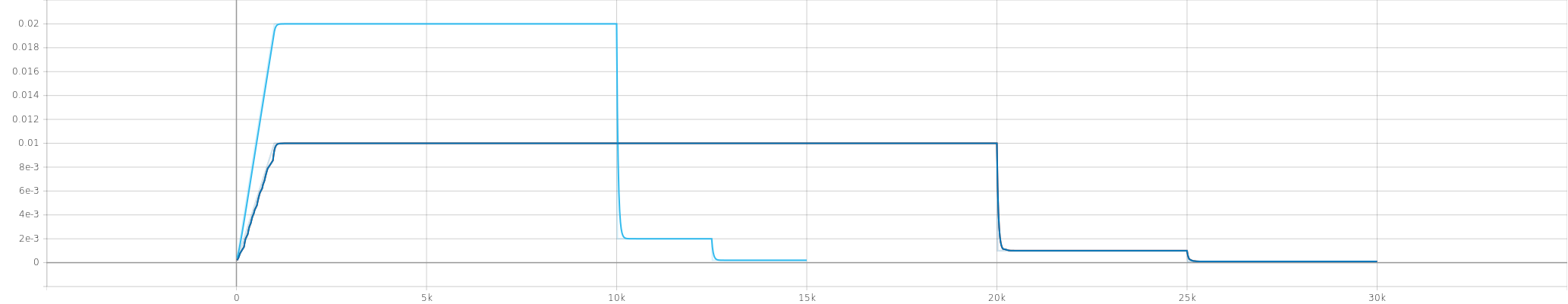

lr

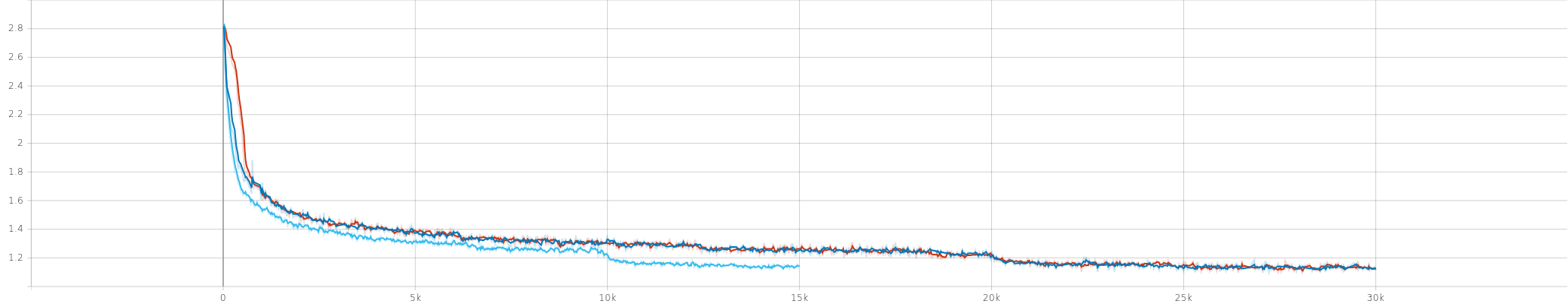

total_loss

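3.4 Conclusion:

In the visualizations of section 3.3, the loss curves of the three runs are all similar, which agrees with the training results in section 3.1.

Therefore, a large Batch_Size can be used to reduce the total number of iterations, replacing the cluster-style training setup of the original code and shortening the training time.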
