Paper: [NeurIPS 2021] Low-Rank Subspaces in GANs

zhujiapeng.github.io/lowrankgan/

Paper translation (Chinese): https://blog.csdn.net/qq_43620967/article/details/121561323

Code: https://github.com/zhujiapeng/LowRankGAN

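README

Figure: Image editing results using LowRankGAN on StyleGAN2 (first three columns) and BigGAN (last column).

In this repository, we propose LowRankGAN, which locally controls GAN image synthesis with novel low-rank subspaces. Specifically, we first relate the image regions to the latent space with the Jacobian. We then perform low-rank factorization on the Jacobian to obtain the principal space and the null space. Finally, we project the principal space of the region of interest onto the null space of the remaining region. By shifting the latent code along directions within this projected space, which we call the low-rank subspace, we can precisely control the region of interest while barely affecting the rest of the image.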
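The projection step described above can be sketched in NumPy. This is only an illustration under stated assumptions, not the authors' implementation: a plain SVD with a singular-value threshold stands in for the low-rank factorization, and the names `low_rank_directions`, `jac_roi`, `jac_rest`, and `null_tol` are hypothetical.

```python
import numpy as np


def low_rank_directions(jac_roi, jac_rest, null_tol=1e-3):
    """Projects principal directions of the ROI onto the null space of the rest.

    jac_roi:  (num_roi_pixels, latent_dim) Jacobian rows of the region of interest.
    jac_rest: (num_rest_pixels, latent_dim) Jacobian rows of the remaining pixels.
    """
    # Principal space of the ROI: right singular vectors of its Jacobian.
    _, _, vt_roi = np.linalg.svd(jac_roi, full_matrices=False)

    # Null space of the rest: right singular vectors whose singular values
    # are (close to) zero. Pad the singular values up to latent_dim first.
    _, s_rest, vt_rest = np.linalg.svd(jac_rest, full_matrices=True)
    s_full = np.zeros(vt_rest.shape[0])
    s_full[:s_rest.size] = s_rest
    null_basis = vt_rest[s_full <= null_tol * s_rest.max()]

    # Orthogonal projector onto the null space, applied to every direction.
    projector = null_basis.T @ null_basis
    directions = vt_roi @ projector
    norms = np.linalg.norm(directions, axis=1, keepdims=True)
    return directions / np.maximum(norms, 1e-12)


# Toy data: 8-dim latent space, "rest" Jacobian of rank 3.
rng = np.random.RandomState(0)
jac_rest = rng.randn(20, 3) @ rng.randn(3, 8)
jac_roi = rng.randn(5, 8)
directions = low_rank_directions(jac_roi, jac_rest)

print('change in rest region:', np.abs(jac_rest @ directions.T).max())
print('change in ROI        :', np.linalg.norm(jac_roi @ directions[0]))
```

To first order, moving along any returned direction leaves the rest region unchanged while still changing the region of interest.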

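Manipulation with Provided Directions

We have already provided some directions under the directory directions/. Users can easily use these directions for local image editing.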

MODEL_PATH='stylegan2-ffhq-config-f-1024x1024.pkl'

DIRECTION='directions/ffhq1024/eyes_size.npy'

python manipulate.py $MODEL_PATH $DIRECTION
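What such a direction file is used for can be sketched as a linear shift of the latent code on selected layers. The function `linear_edit` below is a hypothetical stand-in, not the repository's `utils.editor.manipulate_codes`; the layer indices, distances, and step count mirror the defaults in the manipulate.py listing further down, and the 18 x 512 latent shape is an assumption for the FFHQ 1024 model.

```python
import numpy as np


def linear_edit(latent_w, direction, layers, start=-4.0, end=4.0, steps=7):
    """Moves a layer-wise latent code along `direction` on the given layers.

    latent_w:  (num_layers, dim) latent code of one image.
    direction: (dim,) editing direction.
    Returns an array of shape (steps, num_layers, dim).
    """
    distances = np.linspace(start, end, steps)
    # One copy of the code per editing step.
    edited = np.repeat(latent_w[np.newaxis], steps, axis=0)
    for layer in layers:
        edited[:, layer] += distances[:, np.newaxis] * direction
    return edited


latent_w = np.zeros((18, 512))              # assumed shape, illustrative values
direction = np.ones(512) / np.sqrt(512)     # illustrative unit direction
edited = linear_edit(latent_w, direction, layers=[4, 5, 6, 7])
print(edited.shape)
```

Layers that are not listed keep their original code, which is why the choice of layers affects how local the edit stays.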

Find More Directions

We also provide the code for users to find customized directions. Please follow the steps below.

Step-0: Prepare the pre-trained generator

Here, we take the FFHQ model officially released with StyleGAN2 as an example. Please download it first.

Step-1: Compute Jacobian with random syntheses

MODEL_PATH='stylegan2-ffhq-config-f-1024x1024.pkl'

python compute_jacobian.py $MODEL_PATH

Step-2: Compute the directions from the Jacobian

# compute_jacobian.py (listed below) writes to ./outputs/jacobians_seed_<seed> by default

JACOBIAN_PATH='outputs/jacobians_seed_4/w_dataset_ffhq.npy'

python compute_directions.py $JACOBIAN_PATH

Step-3: Verify the directions through image manipulation

MODEL_PATH='stylegan2-ffhq-config-f-1024x1024.pkl'

DIRECTION_PATH="outputs/directions/${DIRECTION_NAME}"

python manipulate.py $MODEL_PATH $DIRECTION_PATH

Environment: Python 3.7, TensorFlow 1.14.0

Set up a new environment

conda create -n py37 python=3.7

The cv2 package

pip install opencv-python

MODEL_PATH='stylegan2-ffhq-config-f-1024x1024.pkl'

DIRECTION='directions/ffhq1024/eyes_size.npy'

python manipulate.py $MODEL_PATH $DIRECTION

Run in the terminal:

python manipulate.py 'stylegan2-ffhq-config-f-1024x1024.pkl' 'directions/ffhq1024/eyes_size.npy'

The two trailing arguments correspond to the following two parser entries:

parser.add_argument('restore_path', type=str, default='',
                    help='The pre-trained encoder pkl file path')
parser.add_argument('matrix_path', type=str,
                    help='Path to the low rank matrix.')

'stylegan2-ffhq-config-f-1024x1024.pkl' must be downloaded from https://drive.google.com/open?id=1QHc-yF5C3DChRwSdZKcx1w6K8JvSxQi7

Running the script without these two arguments raises an error:

usage: manipulate.py [-h] [--batch_size BATCH_SIZE] [--output_dir OUTPUT_DIR]
                     [--total_num TOTAL_NUM] [--gpu_id GPU_ID] [--start START]
                     [--end END] [--mani_layers MANI_LAYERS]
                     [--start_distance START_DISTANCE]
                     [--end_distance END_DISTANCE] [--step STEP] [--save_raw]
                     [--seed SEED] [--name NAME] [--latent_path LATENT_PATH]
                     restore_path matrix_path
manipulate.py: error: the following arguments are required: restore_path, matrix_path

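There are three ways around this:

1. Run the script from the command line and pass the two arguments.

2. Give the two argument names a -- prefix so that they become optional arguments.

3. In PyCharm, choose "Edit Configurations…" and enter the arguments there.

For option 2, change the two entries as follows: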

parser.add_argument('--restore_path', type=str, default='',
                    help='The pre-trained encoder pkl file path')
parser.add_argument('--matrix_path', type=str,
                    help='Path to the low rank matrix.')
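The difference between the positional form and the -- form can be checked in isolation with a few lines of argparse. This is a minimal sketch; the helper `build_parser` is hypothetical, and the default values are the ones used in the manipulate.py listing further down.

```python
import argparse


def build_parser(optional):
    """Builds a parser with either optional (--name) or positional arguments."""
    parser = argparse.ArgumentParser()
    if optional:
        # Optional arguments may be omitted; argparse then uses the default.
        parser.add_argument('--restore_path', type=str,
                            default='stylegan2-ffhq-config-f-1024x1024.pkl')
        parser.add_argument('--matrix_path', type=str,
                            default='directions/ffhq1024/eyes_size.npy')
    else:
        # Positional arguments are required; omitting them exits with an error.
        parser.add_argument('restore_path', type=str)
        parser.add_argument('matrix_path', type=str)
    return parser


# Optional form: works with an empty command line, e.g. a bare "Run" in an IDE.
args = build_parser(optional=True).parse_args([])
print(args.restore_path, args.matrix_path)

# Positional form: both values must be given, in order.
args_pos = build_parser(optional=False).parse_args(['model.pkl', 'direction.npy'])
print(args_pos.restore_path, args_pos.matrix_path)
```

With the -- form, values given on the command line must be named explicitly, for example `python manipulate.py --restore_path model.pkl --matrix_path direction.npy`.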

Downgrading TensorFlow 2 to TensorFlow 1:

pip install --upgrade https://storage.googleapis.com/tensorflow/mac/cpu/tensorflow-1.14.0-py3-none-any.whl

Check whether TensorFlow can use the GPU:

import tensorflow as tf

print(tf.test.is_gpu_available())

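Installing tensorflow-gpu: I did not know how to do this myself and asked someone more experienced to install it for me.

Once the check prints True:

Fixing the StyleGAN2 error "undefined symbol: _ZN10tensorflow12OpDefBuilder6OutputESs"

This error comes from the gcc compiler options used when building the custom-op .so file. gcc versions newer than 4 already support the C++11 ABI, so the option -D_GLIBCXX_USE_CXX11_ABI=0 is not needed. Open custom_ops.py, go to line 127, and change --compiler-options '-fPIC -D_GLIBCXX_USE_CXX11_ABI=0' to --compiler-options '-fPIC -D_GLIBCXX_USE_CXX11_ABI=1'.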
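Which value of the macro is right depends on how the installed TensorFlow binary was built, because a custom op has to be compiled with the same ABI setting as the library it is loaded into. The sketch below extracts the setting from a list of compiler flags; the helper `cxx11_abi_from_flags` and the sample flag list are hypothetical.

```python
import re


def cxx11_abi_from_flags(flags):
    """Returns 0 or 1 for the _GLIBCXX_USE_CXX11_ABI macro, or None if absent."""
    for flag in flags:
        match = re.fullmatch(r'-D_GLIBCXX_USE_CXX11_ABI=([01])', flag)
        if match:
            return int(match.group(1))
    return None


# Illustrative flag list; a real one comes from the TensorFlow installation.
sample_flags = ['-I/path/to/tensorflow/include', '-D_GLIBCXX_USE_CXX11_ABI=1']
print('ABI setting:', cxx11_abi_from_flags(sample_flags))
```

On a machine with TensorFlow installed, the real flags can be obtained with `tf.sysconfig.get_compile_flags()` and passed to the same helper; the value it reports is the one to use in custom_ops.py.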

Failed to get convolution algorithm

CSDN no longer lets me paste images directly, which is annoying, so see the link below.

https://www.yuque.com/docs/share/5902b0bf-a5a5-4bf4-b829-5c096be20514?# 《LowRankGAN》

manipulate.py

# python 3.7
"""Manipulates the latent codes using existing model and directions."""

import os
import sys
import argparse
import signal
import pickle
from tqdm import tqdm
import tensorflow as tf
import numpy as np

import dnnlib.tflib as tflib
from utils.visualizer import save_image, adjust_pixel_range
from utils.visualizer import HtmlPageVisualizer
from utils.editor import parse_indices
from utils.editor import manipulate_codes

import warnings  # pylint: disable=wrong-import-order
warnings.filterwarnings('ignore', category=FutureWarning)


def parse_args():
    """Parses arguments."""
    signal.signal(signal.SIGINT, lambda x, y: sys.exit(0))
    parser = argparse.ArgumentParser()
    parser.add_argument('--restore_path', type=str,
                        default='stylegan2-ffhq-config-f-1024x1024.pkl',
                        help='The pre-trained encoder pkl file path')
    parser.add_argument('--matrix_path', type=str,
                        default='directions/ffhq1024/eyes_size.npy',
                        help='Path to the low rank matrix.')
    parser.add_argument("--batch_size", type=int,
                        default=1, help="size of the input batch")
    parser.add_argument('--output_dir', type=str, default='',
                        help='Directory to save the results. If not specified,'
                             '`./outputs/manipulation` will be used '
                             'by default.')
    parser.add_argument('--total_num', type=int, default=10,
                        help='number of loops for sampling')
    parser.add_argument('--gpu_id', type=str, default='0',
                        help='Which GPU(s) to use. (default: `0`)')
    parser.add_argument('--start', type=int, default=0,
                        help='Start index of the manipulation direction')
    parser.add_argument('--end', type=int, default=1,
                        help='Number of direction will be used in VH')
    parser.add_argument('--mani_layers', type=str, default='4,5,6,7',
                        help='The layer will be manipulated')
    parser.add_argument('--start_distance', type=float, default=-4.0,
                        help='Start distance for manipulation. (default: -4.0)')
    parser.add_argument('--end_distance', type=float, default=4.0,
                        help='End distance for manipulation. (default: 4.0)')
    parser.add_argument('--step', type=int, default=7,
                        help='Number of steps for manipulation. (default: 7)')
    parser.add_argument('--save_raw', action='store_true',
                        help='Whether to save raw images (default: False)')
    parser.add_argument('--seed', type=int, default=4,
                        help='random seed')
    parser.add_argument('--name', type=str, default='lowrankgan',
                        help='The name to help save the file')
    parser.add_argument('--latent_path', type=str, default='',
                        help='The path to the existing latent codes.')
    return parser.parse_args()

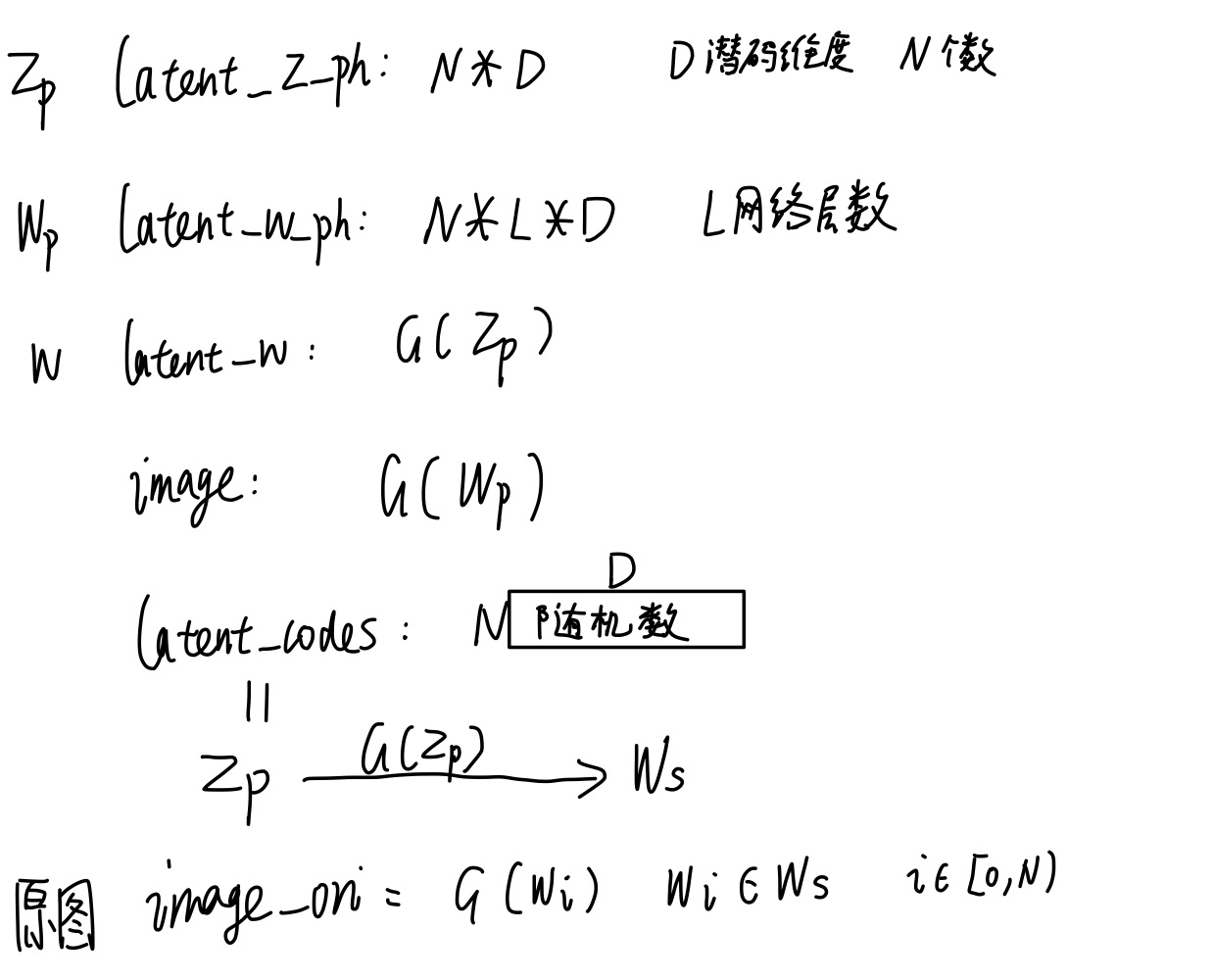

def main():
    """Main function."""
    args = parse_args()
    os.environ["CUDA_VISIBLE_DEVICES"] = args.gpu_id
    tf_config = {'rnd.np_random_seed': 1000}
    tflib.init_tf(tf_config)
    np.random.seed(args.seed)
    assert os.path.exists(args.restore_path)
    assert os.path.exists(args.matrix_path)
    with open(args.restore_path, 'rb') as f:
        _, _, Gs = pickle.load(f)
    directions = np.load(args.matrix_path)
    num_layers, latent_dim = Gs.components.synthesis.input_shape[1:3]

    # Building graph
    latent_z_ph = tf.placeholder(
        tf.float32, [None, latent_dim], name='latent_z')
    latent_w_ph = tf.placeholder(
        tf.float32, [None, num_layers, latent_dim], name='latent_w')
    sess = tf.get_default_session()
    latent_w = Gs.components.mapping.get_output_for(latent_z_ph, None)
    images = Gs.components.synthesis.get_output_for(
        latent_w_ph, randomize_noise=False)

    print(f'Load or Generate latent codes.')
    if os.path.exists(args.latent_path):
        latent_codes = np.load(args.latent_path)
        latent_codes = latent_codes[:args.total_num]
    else:
        latent_codes = np.random.randn(args.total_num, latent_dim)
    feed_dict = {latent_z_ph: latent_codes}
    latent_ws = sess.run(latent_w, feed_dict)
    num_images = latent_ws.shape[0]
    image_list = []
    for i in range(num_images):
        image_list.append(f'{i:06d}')

    save_dir = args.output_dir or f'./outputs/manipulations'
    os.makedirs(save_dir, exist_ok=True)
    delta_num = args.end - args.start
    visualizer = HtmlPageVisualizer(num_rows=num_images * delta_num,
                                    num_cols=args.step + 2,
                                    viz_size=256)
    layer_index = parse_indices(args.mani_layers)
    print(f'Manipulate on layers {layer_index}')

    for row in tqdm(range(num_images)):
        latent_code = latent_ws[row:row+1]
        images_ori = sess.run(images, {latent_w_ph: latent_code})
        images_ori = adjust_pixel_range(images_ori)
        if args.save_raw:
            save_image(f'{save_dir}/ori_{row:06d}.png', images_ori[0])
        for num_direc in range(args.start, args.end):
            html_row = num_direc - args.start
            direction = directions[num_direc:num_direc + 1][:, np.newaxis]
            direction = np.tile(direction, [1, num_layers, 1])
            visualizer.set_cell(row * delta_num + html_row, 0,
                                text=f'{image_list[row]}_{num_direc:03d}')
            visualizer.set_cell(row * delta_num + html_row, 1,
                                image=images_ori[0])
            mani_codes = manipulate_codes(latent_code=latent_code,
                                          boundary=direction,
                                          layer_index=layer_index,
                                          start_distance=args.start_distance,
                                          end_distance=args.end_distance,
                                          steps=args.step)
            mani_images = sess.run(images, {latent_w_ph: mani_codes})
            mani_images = adjust_pixel_range(mani_images)
            for i in range(mani_images.shape[0]):
                visualizer.set_cell(row * delta_num + html_row, i + 2,
                                    image=mani_images[i])
                if args.save_raw:
                    save_name = (
                        f'mani_{row:06d}_ind_{num_direc:06d}_{i:06d}.png')
                    save_image(f'{save_dir}/{save_name}', mani_images[i])
    visualizer.save(f'{save_dir}/manipulate_results_{args.name}.html')


if __name__ == "__main__":
    main()

compute_jacobian.py

# python 3.7
"""Computes the Jacobian matrix with the help of random syntheses."""

import os
import sys
import time
import argparse
import signal
import pickle
from tqdm import tqdm
import tensorflow as tf
import numpy as np
from tensorflow.python.ops.parallel_for.gradients import jacobian

import dnnlib
import dnnlib.tflib as tflib
from utils.visualizer import save_image

import warnings  # pylint: disable=wrong-import-order
warnings.filterwarnings('ignore', category=FutureWarning)


def parse_args():
    """Parses arguments."""
    signal.signal(signal.SIGINT, lambda x, y: sys.exit(0))
    parser = argparse.ArgumentParser()
    parser.add_argument('restore_path', type=str, default='',
                        help='The pre-trained encoder pkl file path')
    parser.add_argument("--image_size", type=int,
                        default=256, help="size of the images")
    parser.add_argument("--batch_size", type=int,
                        default=1, help="size of the input batch")
    parser.add_argument('--output_dir', type=str, default='',
                        help='Directory to save the results. If not specified,'
                             '`./outputs/jacobian_seed_{}` will be used '
                             'by default.')
    parser.add_argument('--total_num', type=int, default=5,
                        help='Number of latent codes to sample')
    parser.add_argument('--gpu_id', type=str, default='0',
                        help='Which GPU(s) to use. (default: `0`)')
    parser.add_argument('--compute_z', action='store_true',
                        help='Whether to compute jacobian on z '
                             '(default: False)')
    parser.add_argument('--save_png', action='store_false',
                        help='Whether or not to save the images '
                             '(default: True)')
    parser.add_argument('--fused_channel', action='store_false',
                        help='Whether or not to mean RGB channel '
                             '(default: True)')
    parser.add_argument('--seed', type=int, default=4,
                        help='Random seed')
    parser.add_argument('--d_name', type=str, default='ffhq',
                        help='Name of the dataset.')
    return parser.parse_args()


def resize_image(images, size=256):
    """Resizes the image with data format NCHW."""
    images = tf.transpose(images, perm=[0, 2, 3, 1])
    images = tf.image.resize_images(images, size=[size, size], method=1)
    images = tf.transpose(images, perm=[0, 3, 1, 2])
    return images

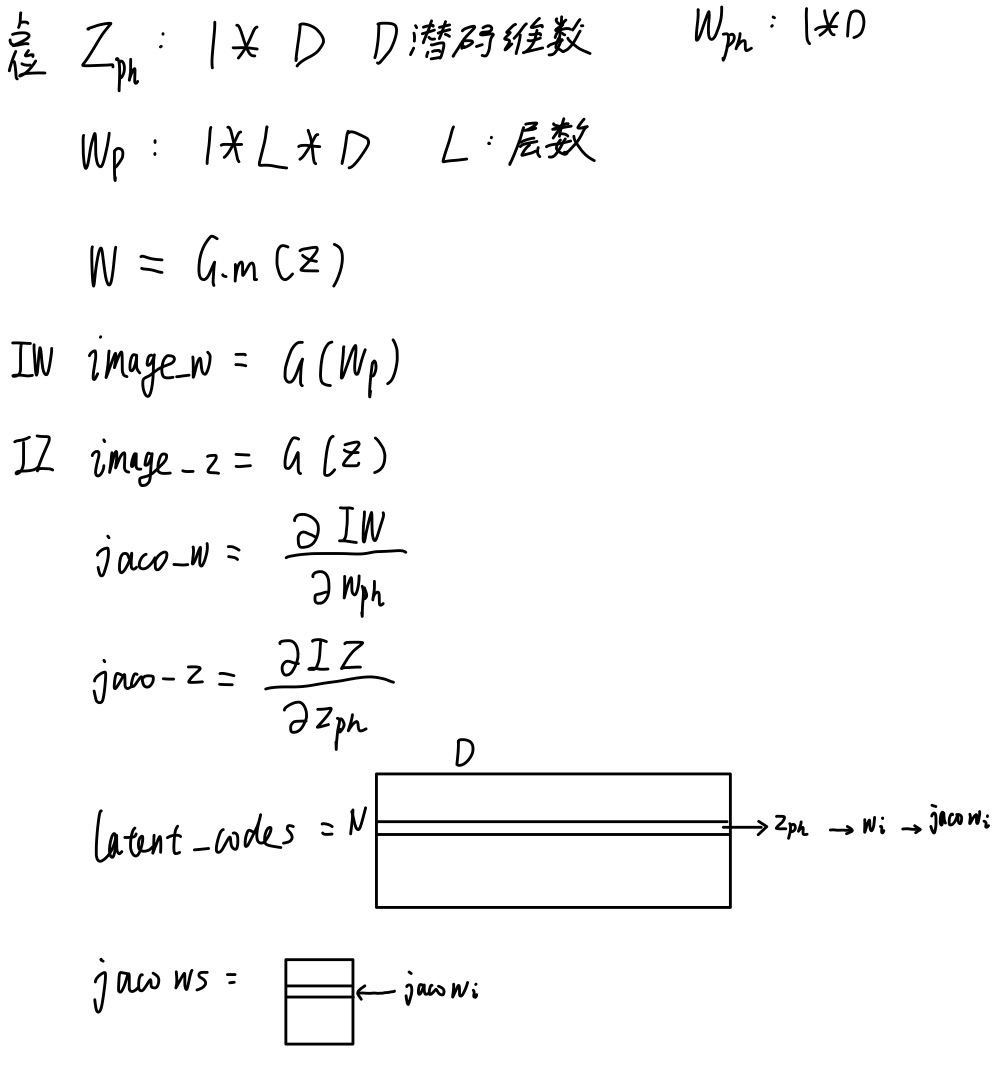

def main():
    """Main function."""
    args = parse_args()
    os.environ["CUDA_VISIBLE_DEVICES"] = args.gpu_id
    tf_config = {'rnd.np_random_seed': 1000}
    tflib.init_tf(tf_config)
    assert os.path.exists(args.restore_path)
    with open(args.restore_path, 'rb') as f:
        _, _, Gs = pickle.load(f)
    num_layers, latent_dim = Gs.components.synthesis.input_shape[1:3]

    # Building graph
    latent_z_ph = tf.placeholder(tf.float32, [1, latent_dim], name='latent_z')
    latent_w_ph = tf.placeholder(tf.float32, [1, latent_dim], name='latent_w')
    sess = tf.get_default_session()
    latent_w_temp = tf.expand_dims(latent_w_ph, axis=1)
    latent_wp = tf.tile(latent_w_temp, [1, num_layers, 1])

    # Sampling w from z
    latent_w = Gs.components.mapping.get_output_for(latent_z_ph, None)

    # Build graph for w
    images_w = Gs.components.synthesis.get_output_for(
        latent_wp, randomize_noise=False)
    output_size = images_w.shape[-1]
    if output_size != args.image_size:
        images_w = resize_image(images=images_w, size=args.image_size)
    images_w_255 = tflib.convert_images_to_uint8(images_w, nchw_to_nhwc=True)
    if args.fused_channel:
        images_w = tf.reduce_mean(images_w, axis=1)

    # Build graph for z
    images_z = Gs.get_output_for(
        latent_z_ph, None, is_validation=True, randomize_noise=False)
    if output_size != args.image_size:
        images_z = resize_image(images=images_z, size=args.image_size)
    if args.fused_channel:
        images_z = tf.reduce_mean(images_z, axis=1)

    jaco_w = jacobian(images_w, latent_w_ph, use_pfor=False)
    print(f'Jacobian w shape: {jaco_w.shape}')
    if args.compute_z:
        jaco_z = jacobian(images_z, latent_z_ph, use_pfor=False)
        print(f'Jacobian z shape: {jaco_z.shape}')

    save_dir = args.output_dir or f'./outputs/jacobians_seed_{args.seed}'
    os.makedirs(save_dir, exist_ok=True)
    np.random.seed(args.seed)
    print(f'Starting calculating jacobian...')
    sys.stdout.flush()
    jaco_ws = []
    jaco_zs = []
    start_time = time.time()
    latent_codes = np.random.randn(args.total_num, latent_dim)
    for num in tqdm(range(latent_codes.shape[0])):
        latent_code = latent_codes[num:num+1]
        feed_dict = {latent_z_ph: latent_code}
        latent_w_i = sess.run(latent_w, feed_dict)
        feed_dict = {latent_w_ph: latent_w_i[:, 0]}
        jaco_w_i = sess.run(jaco_w, feed_dict)
        if args.compute_z:
            jaco_z_i = sess.run(jaco_z, {latent_z_ph: latent_code})
            jaco_zs.append(jaco_z_i)
        jaco_ws.append(jaco_w_i)
        if args.save_png:
            img_i = sess.run(images_w_255, {latent_w_ph: latent_w_i[:, 0]})
            save_path0 = (f'{save_dir}/syn_dataset_{args.d_name}_'
                          f'number_{num:04d}.png')
            save_image(save_path0, img_i[0])

    jaco_w = np.concatenate(jaco_ws, axis=0)
    print(f'jaco_w shape {jaco_w.shape}')
    np.save(f'{save_dir}/w_dataset_{args.d_name}.npy', jaco_w)
    if args.compute_z:
        jaco_z = np.concatenate(jaco_zs, axis=0)
        print(f'jaco_z shape {jaco_z.shape}')
        np.save(f'{save_dir}/z_dataset_{args.d_name}.npy', jaco_z)
    print(f'Finished! and Time: '
          f'{dnnlib.util.format_time(time.time() - start_time):12s}')


if __name__ == "__main__":
    main()
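How the saved Jacobian relates to an image region can be sketched with a toy array. With the default fused channel, the script above differentiates an image tensor of shape [1, H, W] with respect to a latent of shape [1, D], so the saved array should have shape (N, H, W, 1, D); that layout is an assumption here, and the helper `region_gram` and the rectangular mask are hypothetical.

```python
import numpy as np


def region_gram(jacobian, mask):
    """Computes J^T J using only the pixels selected by `mask`.

    jacobian: (H, W, D) Jacobian of one image with respect to its latent code.
    mask:     (H, W) boolean array, True inside the region.
    """
    rows = jacobian[mask]          # (num_selected_pixels, D)
    return rows.T @ rows           # (D, D)


# Toy stand-in for an array such as w_dataset_ffhq.npy: (N, H, W, 1, D).
rng = np.random.RandomState(0)
jaco_w = rng.randn(2, 16, 16, 1, 8)
jac = jaco_w[0, :, :, 0, :]        # first sample, shape (16, 16, 8)

# Hypothetical rectangular region of interest.
mask = np.zeros((16, 16), dtype=bool)
mask[4:8, 4:12] = True

gram_roi = region_gram(jac, mask)
gram_rest = region_gram(jac, ~mask)
print(gram_roi.shape, gram_rest.shape)
```

The two matrices describe how strongly each latent direction changes the region and the rest of the image, which is the information the direction-finding step works from.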
