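Kubernetes Container Resource Limits, Resource Monitoring, and the Dashboard Web UI

I. Kubernetes container resource limits

1. Introduction

Kubernetes uses two settings, request and limit, to allocate resources.

request (resource request): the minimum amount of a resource the Pod needs; a Pod is only scheduled onto a node that can satisfy it.

limit (resource limit): usage may grow while the Pod runs (memory, for example); the limit is the most it is allowed to use.

Resource types:

CPU is measured in cores; memory is measured in bytes.

A container that requests 0.5 CPU asks for half of one CPU. You can also use the suffix m, meaning one thousandth: 100m CPU, 100 millicores, and 0.1 CPU are all the same amount.

Memory units:

k, M, G, T, P, E #decimal suffixes, powers of 1000

Ki, Mi, Gi, Ti, Pi, Ei #binary suffixes, powers of 1024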
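To make the unit rules concrete, here is a minimal Python sketch that converts CPU quantities to millicores and memory quantities to bytes. It is not Kubernetes' own quantity parser, and the function names are made up for illustration. Note that Kubernetes spells the decimal kilo suffix as a lowercase k.

```python
from decimal import Decimal

# Decimal suffixes are powers of 1000, binary suffixes are powers of 1024.
DECIMAL_SUFFIXES = {"k": 1000, "M": 1000**2, "G": 1000**3,
                    "T": 1000**4, "P": 1000**5, "E": 1000**6}
BINARY_SUFFIXES = {"Ki": 1024, "Mi": 1024**2, "Gi": 1024**3,
                   "Ti": 1024**4, "Pi": 1024**5, "Ei": 1024**6}


def cpu_to_millicores(quantity: str) -> int:
    """Convert a CPU quantity such as "0.5" or "100m" to millicores."""
    if quantity.endswith("m"):
        return int(quantity[:-1])
    return int(Decimal(quantity) * 1000)


def memory_to_bytes(quantity: str) -> int:
    """Convert a memory quantity such as "100Mi" or "100M" to bytes."""
    # Binary suffixes are checked first; a plain integer is already bytes.
    for suffix, factor in {**BINARY_SUFFIXES, **DECIMAL_SUFFIXES}.items():
        if quantity.endswith(suffix):
            return int(Decimal(quantity[: -len(suffix)]) * factor)
    return int(quantity)


if __name__ == "__main__":
    print(cpu_to_millicores("0.5"))   # half a core, in millicores
    print(cpu_to_millicores("100m"))  # the same amount as "0.1"
    print(memory_to_bytes("100Mi"))   # binary megabytes
    print(memory_to_bytes("100M"))    # decimal megabytes
```

The gap between 100Mi and 100M is roughly 5%, which is worth keeping in mind when a limit looks "just big enough".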

2. Upload the image

[root@foundation15 ~]# lftp 172.25.254.250

lftp 172.25.254.250:~> cd pub/docs/k8s/

lftp 172.25.254.250:/pub/docs/k8s> get stress.tar

291106304 bytes transferred in 3 seconds (96.81 MiB/s)

lftp 172.25.254.250:/pub/docs/k8s> exit

[root@foundation15 ~]# scp stress.tar server1:

root@server1's password:

stress.tar 100% 278MB 75.5MB/s 00:03

[root@foundation15 ~]#

[root@server1 ~]# docker load -i stress.tar

[root@server1 ~]# docker push reg.westos.org/library/stress:latest

3. Memory limits

[root@server4 ~]# mkdir limit

[root@server4 ~]# cd limit/

[root@server4 limit]# ls

[root@server4 limit]# vim pod.yaml

[root@server4 limit]# cat pod.yaml

apiVersion: v1
kind: Pod
metadata:
  name: memory-demo
spec:
  containers:
  - name: memory-demo
    image: stress
    args:
    - --vm
    - "1"
    - --vm-bytes
    - 200M
    resources:
      requests:
        memory: 50Mi
      limits:
        memory: 100Mi #the limit is 100Mi, while stress tries to allocate 200M

[root@server4 limit]# kubectl apply -f pod.yaml

pod/memory-demo created

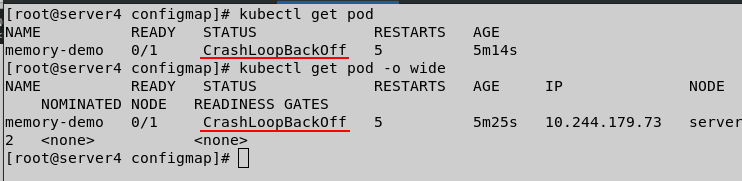

[root@server4 configmap]# kubectl get pod

NAME READY STATUS RESTARTS AGE

memory-demo 0/1 CrashLoopBackOff 5 5m14s

[root@server4 configmap]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

memory-demo 0/1 CrashLoopBackOff 5 5m25s 10.244.179.73 server2 <none> <none>

[root@server4 configmap]#

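If a container exceeds its memory limit, it is terminated. If it is restartable, the kubelet restarts it, as with any other kind of runtime failure.

If a container exceeds its memory request, its Pod may be evicted when the node runs short of memory.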
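The two rules above can be summarised in a few lines of Python. This is only a toy model of the decision, not how the kubelet or the kernel actually implements it; the function name and return labels are invented for illustration, and the 200M that stress allocates is treated as roughly 200 MiB.

```python
def memory_outcome(usage_mib: int, request_mib: int, limit_mib: int) -> str:
    """Classify a container's memory usage against its request and limit."""
    if usage_mib > limit_mib:
        # Over the limit: the container is terminated (and restarted if restartable).
        return "oom-killed"
    if usage_mib > request_mib:
        # Over the request only: it keeps running, but is a candidate for
        # eviction if the node itself runs short of memory.
        return "eviction-candidate"
    return "ok"


if __name__ == "__main__":
    # Request 50Mi, limit 100Mi, workload of about 200 MiB.
    print(memory_outcome(usage_mib=200, request_mib=50, limit_mib=100))
    # Same workload with a limit of 300Mi.
    print(memory_outcome(usage_mib=200, request_mib=50, limit_mib=300))
```

With a 100Mi limit the workload lands in the first branch, which matches the CrashLoopBackOff status shown earlier; with a 300Mi limit it only exceeds its request.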

[root@server4 limit]# vim pod.yaml

[root@server4 limit]# cat pod.yaml

apiVersion: v1
kind: Pod
metadata:
  name: memory-demo
spec:
  containers:
  - name: memory-demo
    image: stress
    args:
    - --vm
    - "1"
    - --vm-bytes
    - 200M
    resources:
      requests:
        memory: 50Mi
      limits:
        memory: 300Mi

[root@server4 limit]# kubectl delete -f pod.yaml

pod "memory-demo" deleted

[root@server4 limit]# kubectl apply -f pod.yaml

pod/memory-demo created

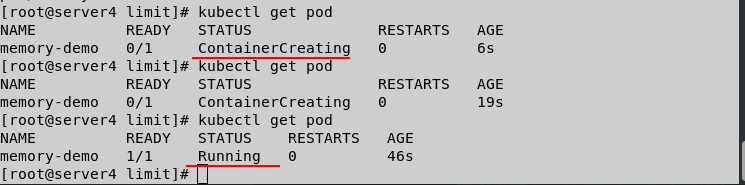

[root@server4 limit]# kubectl get pod

4. CPU limits

[root@server4 limit]# kubectl delete -f pod.yaml

pod "memory-demo" deleted

[root@server4 limit]# vim pod1.yaml

[root@server4 limit]# cat pod1.yaml

apiVersion: v1
kind: Pod
metadata:
  name: cpu-demo
spec:
  containers:
  - name: cpu-demo
    image: stress
    resources:
      limits:
        cpu: "10"
      requests:
        cpu: "5"
    args:
    - -c
    - "2"

[root@server4 limit]# kubectl apply -f pod1.yaml

pod/cpu-demo created

[root@server4 limit]# kubectl get pod

NAME READY STATUS RESTARTS AGE

cpu-demo 0/1 Pending 0 28s

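Scheduling fails because the requested CPU (5 cores) exceeds what any node in the cluster can provide.

A container whose CPU usage is too high, on the other hand, is not killed; it is throttled at its limit.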
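The Pending state follows from how scheduling works: the scheduler compares the Pod's CPU request (not its limit) with the CPU still unreserved on each node. Below is a minimal sketch of that check. The per-node free CPU figures are an assumption for illustration, since the article does not state the nodes' capacity, and the function name is hypothetical.

```python
from typing import Dict, List


def schedulable_nodes(request_millicores: int,
                      free_millicores: Dict[str, int]) -> List[str]:
    """Return the nodes whose unreserved CPU can hold the request."""
    return [node for node, free in free_millicores.items()
            if free >= request_millicores]


# Assumed values: each worker has about 2 cores left to reserve.
FREE_CPU = {"server2": 2000, "server3": 2000}

if __name__ == "__main__":
    # requests.cpu: "5" fits nowhere, so the Pod stays Pending.
    print(schedulable_nodes(5000, FREE_CPU))
    # requests.cpu: "0.1" fits on every node.
    print(schedulable_nodes(100, FREE_CPU))
```

The limit of "10" plays no part in this check, which is why lowering only the request is enough to get the Pod scheduled.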

[root@server4 limit]# kubectl delete -f pod1.yaml

pod "cpu-demo" deleted

[root@server4 limit]# vim pod1.yaml

[root@server4 limit]# cat pod1.yaml

apiVersion: v1
kind: Pod
metadata:
  name: cpu-demo
spec:
  containers:
  - name: cpu-demo
    image: stress
    resources:
      limits:
        cpu: "2"
      requests:
        cpu: "0.1"
    args:
    - -c
    - "2"

[root@server4 limit]# kubectl apply -f pod1.yaml

pod/cpu-demo created

[root@server4 limit]# kubectl get pod

NAME READY STATUS RESTARTS AGE

cpu-demo 1/1 Running 0 6s

5. Setting resource limits for a namespace:

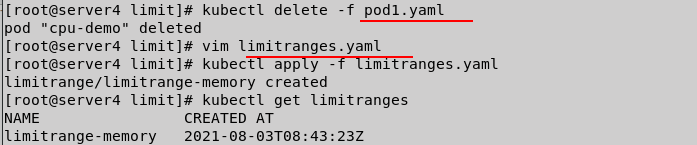

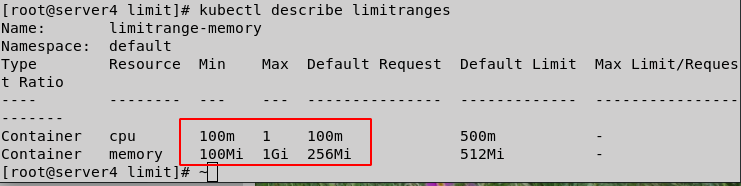

[root@server4 limit]# kubectl delete -f pod1.yaml

pod "cpu-demo" deleted

[root@server4 limit]# vim limitranges.yaml

apiVersion: v1
kind: LimitRange
metadata:
  name: limitrange-memory
spec:
  limits:
  - default:
      cpu: 0.5
      memory: 512Mi
    defaultRequest:
      cpu: 0.1
      memory: 256Mi
    max:
      cpu: 1
      memory: 1Gi
    min:
      cpu: 0.1
      memory: 100Mi
    type: Container

[root@server4 limit]# kubectl apply -f limitranges.yaml

limitrange/limitrange-memory created

[root@server4 limit]# kubectl get limitranges

NAME CREATED AT

limitrange-memory 2021-08-03T08:43:23Z

[root@server4 limit]# kubectl describe limitranges limitrange-memory

Name: limitrange-memory

Namespace: default

Type Resource Min Max Default Request Default Limit Max Limit/Request Ratio

---- -------- --- --- --------------- ------------- -----------------------

Container cpu 100m 1 100m 500m -

Container memory 100Mi 1Gi 256Mi 512Mi -

[root@server4 limit]# vim pod.yaml

[root@server4 limit]# cat pod.yaml

apiVersion: v1
kind: Pod
metadata:
  name: cpu-demo
spec:
  containers:
  - name: cpu-demo
    image: nginx

[root@server4 limit]# kubectl apply -f pod.yaml

pod/cpu-demo created

[root@server4 limit]# kubectl get pod

NAME READY STATUS RESTARTS AGE

cpu-demo 1/1 Running 0 29s

[root@server4 limit]# kubectl describe pod cpu-demo

[root@server4 limit]# kubectl describe limitranges

Edit pod.yaml and set the memory request to 50Mi

[root@server4 ~]# cd limit/

[root@server4 limit]# ls

components.yaml limitranges.yaml pod1.yaml pod.yaml

[root@server4 limit]# vim pod.yaml

[root@server4 limit]# cat pod.yaml

apiVersion: v1
kind: Pod
metadata:
  name: memory-demo-1
spec:
  containers:
  - name: memory-demo
    image: nginx
    resources:
      requests:
        memory: 50Mi
      limits:
        memory: 300Mi

## Creation fails: the LimitRange sets a memory minimum of 100Mi, and the 50Mi request here is below it

[root@server4 limit]# kubectl apply -f pod.yaml

Error from server (Forbidden): error when creating "pod.yaml": pods "memory-demo-1" is forbidden: minimum memory usage per Container is 100Mi, but request is 50Mi

[root@server4 limit]#
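The two LimitRange behaviours seen so far (defaults filled in for the bare nginx Pod, and the 50Mi request rejected) can be sketched as follows. This is a simplified model for memory only, with values in MiB taken from the LimitRange above. It covers just the two cases used in this article (no resources set, or both request and limit set); the function name and the messages are illustrative, not the API server's code.

```python
from typing import Optional, Tuple

# Memory values in MiB, copied from the LimitRange in this section.
LIMIT_RANGE = {"min": 100, "max": 1024,
               "default_request": 256, "default_limit": 512}


def admit(request_mib: Optional[int] = None,
          limit_mib: Optional[int] = None) -> Tuple[bool, str]:
    """Apply LimitRange defaults, then check the min/max constraints."""
    if request_mib is None and limit_mib is None:
        request_mib = LIMIT_RANGE["default_request"]
        limit_mib = LIMIT_RANGE["default_limit"]
    elif request_mib is None or limit_mib is None:
        raise ValueError("partially specified resources are not modelled here")

    low, high = LIMIT_RANGE["min"], LIMIT_RANGE["max"]
    if request_mib < low:
        return False, f"request {request_mib}Mi is below the minimum {low}Mi"
    if limit_mib > high:
        return False, f"limit {limit_mib}Mi is above the maximum {high}Mi"
    return True, f"request={request_mib}Mi limit={limit_mib}Mi"


if __name__ == "__main__":
    print(admit())         # bare Pod: defaults are injected
    print(admit(50, 300))  # the rejected memory-demo-1 Pod
    print(admit(100, 300)) # raising the request to the minimum is enough
```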

6. Setting a resource quota for a namespace:

[root@server4 limit]# vim limitranges.yaml

[root@server4 limit]# cat limitranges.yaml

apiVersion: v1
kind: LimitRange
metadata:
  name: limitrange-memory
spec:
  limits:
  - default:
      cpu: 0.5
      memory: 512Mi
    defaultRequest:
      cpu: 0.1
      memory: 256Mi
    max:
      cpu: 1
      memory: 1Gi
    min:
      cpu: 0.1
      memory: 100Mi
    type: Container
---
apiVersion: v1
kind: ResourceQuota
metadata:
  name: mem-cpu-demo
spec:
  hard:
    requests.cpu: "1"
    requests.memory: 1Gi
    limits.cpu: "2"
    limits.memory: 2Gi

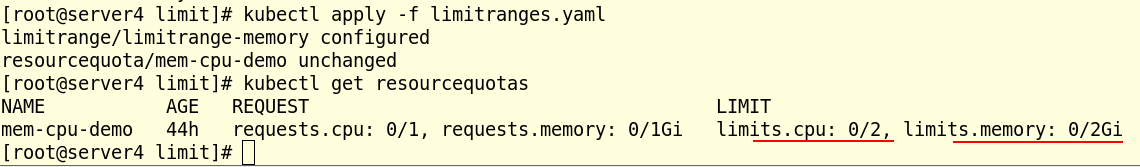

[root@server4 limit]# kubectl apply -f limitranges.yaml

limitrange/limitrange-memory configured

resourcequota/mem-cpu-demo unchanged

[root@server4 limit]# kubectl get resourcequotas

NAME AGE REQUEST LIMIT

mem-cpu-demo 44h requests.cpu: 0/1, requests.memory: 0/1Gi limits.cpu: 0/2, limits.memory: 0/2Gi

[root@server4 limit]#

The resource quota has been set successfully

[root@server4 limit]# vim pod.yaml

[root@server4 limit]# cat pod.yaml

apiVersion: v1
kind: Pod
metadata:
  name: memory-demo
spec:
  containers:
  - name: memory-demo
    image: nginx
    resources:
      requests:
        cpu: 0.1
        memory: 100Mi
      limits:
        cpu: 0.5
        memory: 300Mi

[root@server4 limit]# kubectl apply -f pod.yaml

pod/memory-demo created

[root@server4 limit]# kubectl describe resourcequotas

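7. Configuring a Pod quota for a namespace:

The ResourceQuota object created above adds the following constraints to the default namespace:

Every container must have a memory request, a memory limit, a CPU request, and a CPU limit.

The memory requests of all containers must not total more than 1 GiB.

The memory limits of all containers must not total more than 2 GiB.

The CPU requests of all containers must not total more than 1 CPU.

The CPU limits of all containers must not total more than 2 CPU.

A Pod quota additionally limits how many Pods can run in the namespace.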

[root@server4 limit]# vim limitranges.yaml

spec:
  hard:
    requests.cpu: "1"
    requests.memory: 1Gi
    limits.cpu: "2"
    limits.memory: 2Gi
    pods: "3" #maximum number of Pods

[root@server4 limit]# kubectl apply -f limitranges.yaml

limitrange/limitrange-memory configured

resourcequota/mem-cpu-demo configured

[root@server4 limit]# kubectl describe resourcequotas

Name: mem-cpu-demo

Namespace: default

Resource Used Hard

-------- ---- ----

limits.cpu 500m 2

limits.memory 300Mi 2Gi

pods 1 3

requests.cpu 100m 1

requests.memory 100Mi 1Gi

[root@server4 limit]#
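To see how the quota numbers interact, the sketch below takes the Used and Hard columns from the output above and counts how many more Pods shaped like memory-demo would still be admitted. It is a simplified accounting model with hypothetical helper names, not the quota controller itself. CPU is in millicores and memory in MiB.

```python
from typing import Dict

# Hard and Used values from `kubectl describe resourcequotas` above.
HARD = {"requests.cpu": 1000, "requests.memory": 1024,
        "limits.cpu": 2000, "limits.memory": 2048, "pods": 3}
USED = {"requests.cpu": 100, "requests.memory": 100,
        "limits.cpu": 500, "limits.memory": 300, "pods": 1}
# One more Pod with the same resources as memory-demo.
POD = {"requests.cpu": 100, "requests.memory": 100,
       "limits.cpu": 500, "limits.memory": 300, "pods": 1}


def fits(used: Dict[str, int], hard: Dict[str, int], pod: Dict[str, int]) -> bool:
    """A Pod is admitted only if no tracked resource would exceed its hard cap."""
    return all(used[key] + pod.get(key, 0) <= hard[key] for key in hard)


def max_additional(used: Dict[str, int], hard: Dict[str, int],
                   pod: Dict[str, int]) -> int:
    """Count how many identical Pods can be added before the quota rejects one."""
    if not any(pod.get(key, 0) > 0 for key in hard):
        raise ValueError("the Pod consumes none of the tracked resources")
    count = 0
    while fits(used, hard, pod):
        used = {key: used[key] + pod.get(key, 0) for key in used}
        count += 1
    return count


if __name__ == "__main__":
    print(max_additional(USED, HARD, POD))
```

Here the pods: "3" entry is the binding constraint; without it, limits.cpu would be the first cap reached.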

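II. Kubernetes resource monitoring

1. Introduction

Metrics-Server is the aggregator of the cluster's core monitoring data; it replaces the earlier Heapster.

Container metrics come mainly from the cAdvisor service built into the kubelet. With Metrics-Server in place, users can access this monitoring data through the standard Kubernetes API.

The Metrics API only serves current measurements; it does not store historical data.

The Metrics API URI is /apis/metrics.k8s.io/, and it is maintained in k8s.io/metrics.

metrics-server must be deployed for this API to be available; it obtains the data by calling the kubelet Summary API.

Examples: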

http://127.0.0.1:8001/apis/metrics.k8s.io/v1beta1/nodes

http://127.0.0.1:8001/apis/metrics.k8s.io/v1beta1/nodes/<node-name>

http://127.0.0.1:8001/apis/metrics.k8s.io/v1beta1/namespaces/<namespace-name>/pods/<pod-name>
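The example URLs share one structure, which the following Python sketch makes explicit. It assumes `kubectl proxy` is serving the API on 127.0.0.1:8001, as in the URLs above; the helper names are made up for illustration. Note that the path segment is `namespaces` (plural), as everywhere else in the Kubernetes API.

```python
import json
import urllib.request
from typing import Optional

API_ROOT = "/apis/metrics.k8s.io/v1beta1"


def metrics_path(kind: str, name: Optional[str] = None,
                 namespace: Optional[str] = None) -> str:
    """Build a Metrics API path for nodes or pods."""
    if kind == "nodes":
        path = f"{API_ROOT}/nodes"
    elif kind == "pods":
        if name and not namespace:
            raise ValueError("a pod name needs a namespace")
        path = (f"{API_ROOT}/namespaces/{namespace}/pods"
                if namespace else f"{API_ROOT}/pods")
    else:
        raise ValueError("kind must be 'nodes' or 'pods'")
    return f"{path}/{name}" if name else path


def fetch(path: str, base: str = "http://127.0.0.1:8001") -> dict:
    """GET a Metrics API path through a local `kubectl proxy` (assumed running)."""
    with urllib.request.urlopen(base + path) as response:
        return json.load(response)
```

With the proxy running, `fetch(metrics_path("nodes"))` would return the current node metrics as a dictionary; as noted above, only current values are available, not history.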

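Metrics Server is not part of kube-apiserver. It is deployed separately and, through the Aggregator plugin mechanism, is served to clients together with kube-apiserver behind one unified API.

kube-aggregator is essentially a proxy server that picks the API backend based on the URL.

Metrics-server belongs to the core metrics pipeline. It provides the metrics.k8s.io API and reports only CPU and memory usage for Nodes and Pods. Other, custom metrics are handled by components such as Prometheus.

Download: https://github.com/kubernetes-incubator/metrics-server

Deploying Metrics-server: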

$ kubectl apply -f https://github.com/kubernetes-sigs/metrics-server/releases/download/v0.3.6/components.yaml

2. Upload the image

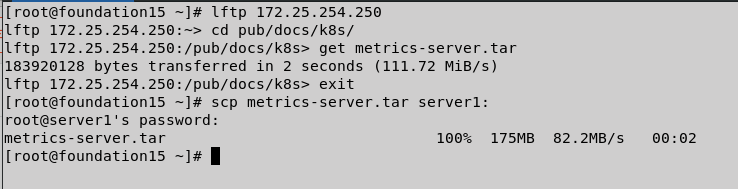

[root@foundation15 ~]# lftp 172.25.254.250

lftp 172.25.254.250:~> cd pub/docs/k8s/

lftp 172.25.254.250:/pub/docs/k8s> get metrics-server.tar

183920128 bytes transferred in 2 seconds (111.72 MiB/s)

lftp 172.25.254.250:/pub/docs/k8s> exit

[root@foundation15 ~]# scp metrics-server.tar server1:

root@server1's password:

metrics-server.tar 100% 175MB 82.2MB/s 00:02

[root@foundation15 ~]#

[root@server1 ~]# docker load -i metrics-server.tar

3fa01eaf81a5: Loading layer 70.8MB/70.8MB

eade1f59b7c7: Loading layer 6.656kB/6.656kB

f42d4f3f41f7: Loading layer 18.55MB/18.55MB

e7ad03a7fd89: Loading layer 47.26MB/47.26MB

b17c160cf0a8: Loading layer 2.048kB/2.048kB

0369974182df: Loading layer 47.26MB/47.26MB

Loaded image: reg.westos.org/library/metrics-server:v0.5.0

[root@server1 ~]# docker push reg.westos.org/library/metrics-server:v0.5.0

3. Deploying Metrics-Server

[root@server4 limit]# vim components.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    k8s-app: metrics-server
    rbac.authorization.k8s.io/aggregate-to-admin: "true"
    rbac.authorization.k8s.io/aggregate-to-edit: "true"
    rbac.authorization.k8s.io/aggregate-to-view: "true"
  name: system:aggregated-metrics-reader
rules:
- apiGroups:
  - metrics.k8s.io
  resources:
  - pods
  - nodes
  verbs:
  - get
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    k8s-app: metrics-server
  name: system:metrics-server
rules:
- apiGroups:
  - ""
  resources:
  - pods
  - nodes
  - nodes/stats
  - namespaces
  - configmaps
  verbs:
  - get
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server-auth-reader
  namespace: kube-system
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: extension-apiserver-authentication-reader
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server:system:auth-delegator
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:auth-delegator
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    k8s-app: metrics-server
  name: system:metrics-server
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:metrics-server
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system
---
apiVersion: v1
kind: Service
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server
  namespace: kube-system
spec:
  ports:
  - name: https
    port: 443
    protocol: TCP
    targetPort: https
  selector:
    k8s-app: metrics-server
---
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server
  namespace: kube-system
spec:
  selector:
    matchLabels:
      k8s-app: metrics-server
  strategy:
    rollingUpdate:
      maxUnavailable: 0
  template:
    metadata:
      labels:
        k8s-app: metrics-server
    spec:
      containers:
      - args:
        - --cert-dir=/tmp
        - --secure-port=4443
        - --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname
        - --kubelet-use-node-status-port
        - --metric-resolution=15s
        image: metrics-server:v0.5.0
        imagePullPolicy: IfNotPresent
        livenessProbe:
          failureThreshold: 3
          httpGet:
            path: /livez
            port: https
            scheme: HTTPS
          periodSeconds: 10
        name: metrics-server
        ports:
        - containerPort: 4443
          name: https
          protocol: TCP
        readinessProbe:
          failureThreshold: 3
          httpGet:
            path: /readyz
            port: https
            scheme: HTTPS
          initialDelaySeconds: 20
          periodSeconds: 10
        resources:
          requests:
            cpu: 100m
            memory: 200Mi
        securityContext:
          readOnlyRootFilesystem: true
          runAsNonRoot: true
          runAsUser: 1000
        volumeMounts:
        - mountPath: /tmp
          name: tmp-dir
      nodeSelector:
        kubernetes.io/os: linux
      priorityClassName: system-cluster-critical
      serviceAccountName: metrics-server
      volumes:
      - emptyDir: {}
        name: tmp-dir
---
apiVersion: apiregistration.k8s.io/v1
kind: APIService
metadata:
  labels:
    k8s-app: metrics-server
  name: v1beta1.metrics.k8s.io
spec:
  group: metrics.k8s.io
  groupPriorityMinimum: 100
  insecureSkipTLSVerify: true
  service:
    name: metrics-server
    namespace: kube-system
  version: v1beta1
  versionPriority: 100

[root@server4 limit]# kubectl apply -f components.yaml

serviceaccount/metrics-server created

clusterrole.rbac.authorization.k8s.io/system:aggregated-metrics-reader created

clusterrole.rbac.authorization.k8s.io/system:metrics-server created

rolebinding.rbac.authorization.k8s.io/metrics-server-auth-reader created

clusterrolebinding.rbac.authorization.k8s.io/metrics-server:system:auth-delegator created

clusterrolebinding.rbac.authorization.k8s.io/system:metrics-server created

service/metrics-server created

deployment.apps/metrics-server created

apiservice.apiregistration.k8s.io/v1beta1.metrics.k8s.io created

[root@server4 limit]# kubectl get pod -n kube-system

NAME READY STATUS RESTARTS AGE

calico-kube-controllers-784b4f4c9-g6cvs 1/1 Running 6 5d1h

calico-node-7qbw6 1/1 Running 1 2d6h

calico-node-875tv 1/1 Running 5 5d1h

calico-node-c56c4 1/1 Running 5 5d1h

coredns-7777df944c-9lxhb 1/1 Running 9 9d

coredns-7777df944c-bzmfr 1/1 Running 9 9d

etcd-server4 1/1 Running 9 9d

kube-apiserver-server4 1/1 Running 0 149m

kube-controller-manager-server4 1/1 Running 12 9d

kube-proxy-bp8kp 1/1 Running 8 7d

kube-proxy-p8v9j 1/1 Running 7 7d

kube-proxy-zmtrh 1/1 Running 1 2d6h

kube-scheduler-server4 1/1 Running 12 9d

metrics-server-86d6b8bbcc-vtsk2 0/1 Running 0 19s #not fully started (0/1 ready); something is wrong

4. Check the logs and fix the errors

4.1 Error 1

[root@server4 limit]# kubectl -n kube-system logs metrics-server-86d6b8bbcc-vtsk2

I0803 09:48:42.602054 1 serving.go:341] Generated self-signed cert (/tmp/apiserver.crt, /tmp/apiserver.key)

E0803 09:48:43.854104 1 scraper.go:139] "Failed to scrape node" err="Get \"https://172.25.15.2:10250/stats/summary?only_cpu_and_memory=true\": x509: cannot validate certificate for 172.25.15.2 because it doesn't contain any IP SANs" node="server2"

E0803 09:48:43.868844 1 scraper.go:139] "Failed to scrape node" err="Get \"https://172.25.15.3:10250/stats/summary?only_cpu_and_memory=true\": x509: cannot validate certificate for 172.25.15.3 because it doesn't contain any IP SANs" node="server3"

E0803 09:48:43.874546 1 scraper.go:139] "Failed to scrape node" err="Get \"https://172.25.15.4:10250/stats/summary?only_cpu_and_memory=true\": x509: cannot validate certificate for 172.25.15.4 because it doesn't contain any IP SANs" node="server4"

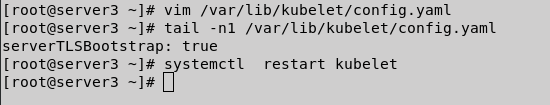

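Metrics Server supports a flag, --kubelet-insecure-tls, that skips this check. However, the official documentation explicitly states that this is not recommended for production use.

Enable TLS Bootstrap certificate issuance instead

Fixing error 1

Apply the following on every node (server2, server3, and server4)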

[root@server3 ~]# vim /var/lib/kubelet/config.yaml

[root@server3 ~]# tail -n1 /var/lib/kubelet/config.yaml

serverTLSBootstrap: true

[root@server3 ~]# systemctl restart kubelet

4.2 Error 2: name resolution

[root@server4 limit]# kubectl edit configmap coredns -n kube-system

configmap/coredns edited

hosts {
    172.25.15.2 server2
    172.25.15.3 server3
    172.25.15.4 server4
    fallthrough
}

4.3 Error 3: certificate approval

[root@server4 limit]# kubectl get csr

[root@server4 limit]# kubectl certificate approve csr-74hhl csr-njplr csr-p95hw #approve the pending CSRs

5. Successful deployment

[root@server4 limit]# kubectl get pod -n kube-system

[root@server4 limit]# kubectl top node

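III. Web UI (Dashboard)

Dashboard gives users a visual web interface for viewing information about the current cluster. With Kubernetes Dashboard, users can deploy containerized applications, monitor application status, troubleshoot problems, and manage Kubernetes resources.

URL: https://github.com/kubernetes/dashboard

1. Pull the images and upload them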

[root@server1 ~]# docker pull kubernetesui/dashboard:v2.3.1

v2.3.1: Pulling from kubernetesui/dashboard

b82bd84ec244: Pull complete

21c9e94e8195: Pull complete

Digest: sha256:ec27f462cf1946220f5a9ace416a84a57c18f98c777876a8054405d1428cc92e

Status: Downloaded newer image for kubernetesui/dashboard:v2.3.1

docker.io/kubernetesui/dashboard:v2.3.1

[root@server1 ~]# docker pull kubernetesui/metrics-scraper:v1.0.6

v1.0.6: Pulling from kubernetesui/metrics-scraper

47a33a630fb7: Pull complete

62498b3018cb: Pull complete

Digest: sha256:1f977343873ed0e2efd4916a6b2f3075f310ff6fe42ee098f54fc58aa7a28ab7

Status: Downloaded newer image for kubernetesui/metrics-scraper:v1.0.6

docker.io/kubernetesui/metrics-scraper:v1.0.6

[root@server1 ~]# docker tag docker.io/kubernetesui/dashboard:v2.3.1 reg.westos.org/kubernetesui/dashboard:v2.3.1 #tag the image

[root@server1 ~]# docker push reg.westos.org/kubernetesui/dashboard:v2.3.1

[root@server1 ~]# docker tag docker.io/kubernetesui/metrics-scraper:v1.0.6 reg.westos.org/kubernetesui/metrics-scraper:v1.0.6

[root@server1 ~]# docker push reg.westos.org/kubernetesui/metrics-scraper:v1.0.6

The push refers to repository [reg.westos.org/kubernetesui/metrics-scraper]

a652c34ae13a: Pushed

6de384dd3099: Pushed

v1.0.6: digest: sha256:c09adb7f46e1a9b5b0bde058713c5cb47e9e7f647d38a37027cd94ef558f0612 size: 736

2. Download the deployment manifest and configure the deployment

[root@server4 dashboard]# vi recommended.yaml

[root@server4 dashboard]# cat recommended.yaml

# Copyright 2017 The Kubernetes Authors.
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
#     http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.

apiVersion: v1
kind: Namespace
metadata:
  name: kubernetes-dashboard
---
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
---
kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  ports:
    - port: 443
      targetPort: 8443
  selector:
    k8s-app: kubernetes-dashboard
---
apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-certs
  namespace: kubernetes-dashboard
type: Opaque
---
apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-csrf
  namespace: kubernetes-dashboard
type: Opaque
data:
  csrf: ""
---
apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-key-holder
  namespace: kubernetes-dashboard
type: Opaque
---
kind: ConfigMap
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-settings
  namespace: kubernetes-dashboard
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
rules:
  # Allow Dashboard to get, update and delete Dashboard exclusive secrets.
  - apiGroups: [""]
    resources: ["secrets"]
    resourceNames: ["kubernetes-dashboard-key-holder", "kubernetes-dashboard-certs", "kubernetes-dashboard-csrf"]
    verbs: ["get", "update", "delete"]
    # Allow Dashboard to get and update 'kubernetes-dashboard-settings' config map.
  - apiGroups: [""]
    resources: ["configmaps"]
    resourceNames: ["kubernetes-dashboard-settings"]
    verbs: ["get", "update"]
    # Allow Dashboard to get metrics.
  - apiGroups: [""]
    resources: ["services"]
    resourceNames: ["heapster", "dashboard-metrics-scraper"]
    verbs: ["proxy"]
  - apiGroups: [""]
    resources: ["services/proxy"]
    resourceNames: ["heapster", "http:heapster:", "https:heapster:", "dashboard-metrics-scraper", "http:dashboard-metrics-scraper"]
    verbs: ["get"]
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
rules:
  # Allow Metrics Scraper to get metrics from the Metrics server
  - apiGroups: ["metrics.k8s.io"]
    resources: ["pods", "nodes"]
    verbs: ["get", "list", "watch"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: kubernetes-dashboard
subjects:
  - kind: ServiceAccount
    name: kubernetes-dashboard
    namespace: kubernetes-dashboard
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kubernetes-dashboard
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: kubernetes-dashboard
subjects:
  - kind: ServiceAccount
    name: kubernetes-dashboard
    namespace: kubernetes-dashboard
---
kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s-app: kubernetes-dashboard
  template:
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
    spec:
      containers:
        - name: kubernetes-dashboard
          image: kubernetesui/dashboard:v2.2.0
          imagePullPolicy: Always
          ports:
            - containerPort: 8443
              protocol: TCP
          args:
            - --auto-generate-certificates
            - --namespace=kubernetes-dashboard
            # Uncomment the following line to manually specify Kubernetes API server Host
            # If not specified, Dashboard will attempt to auto discover the API server and connect
            # to it. Uncomment only if the default does not work.
            # - --apiserver-host=http://my-address:port
          volumeMounts:
            - name: kubernetes-dashboard-certs
              mountPath: /certs
              # Create on-disk volume to store exec logs
            - mountPath: /tmp
              name: tmp-volume
          livenessProbe:
            httpGet:
              scheme: HTTPS
              path: /
              port: 8443
            initialDelaySeconds: 30
            timeoutSeconds: 30
          securityContext:
            allowPrivilegeEscalation: false
            readOnlyRootFilesystem: true
            runAsUser: 1001
            runAsGroup: 2001
      volumes:
        - name: kubernetes-dashboard-certs
          secret:
            secretName: kubernetes-dashboard-certs
        - name: tmp-volume
          emptyDir: {}
      serviceAccountName: kubernetes-dashboard
      nodeSelector:
        "kubernetes.io/os": linux
      # Comment the following tolerations if Dashboard must not be deployed on master
      tolerations:
        - key: node-role.kubernetes.io/master
          effect: NoSchedule
---
kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: dashboard-metrics-scraper
  name: dashboard-metrics-scraper
  namespace: kubernetes-dashboard
spec:
  ports:
    - port: 8000
      targetPort: 8000
  selector:
    k8s-app: dashboard-metrics-scraper
---
kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    k8s-app: dashboard-metrics-scraper
  name: dashboard-metrics-scraper
  namespace: kubernetes-dashboard
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s-app: dashboard-metrics-scraper
  template:
    metadata:
      labels:
        k8s-app: dashboard-metrics-scraper
      annotations:
        seccomp.security.alpha.kubernetes.io/pod: 'runtime/default'
    spec:
      containers:
        - name: dashboard-metrics-scraper
          image: kubernetesui/metrics-scraper:v1.0.6
          ports:
            - containerPort: 8000
              protocol: TCP
          livenessProbe:
            httpGet:
              scheme: HTTP
              path: /
              port: 8000
            initialDelaySeconds: 30
            timeoutSeconds: 30
          volumeMounts:
            - mountPath: /tmp
              name: tmp-volume
          securityContext:
            allowPrivilegeEscalation: false
            readOnlyRootFilesystem: true
            runAsUser: 1001
            runAsGroup: 2001
      serviceAccountName: kubernetes-dashboard
      nodeSelector:
        "kubernetes.io/os": linux
      # Comment the following tolerations if Dashboard must not be deployed on master
      tolerations:
        - key: node-role.kubernetes.io/master
          effect: NoSchedule
      volumes:
        - name: tmp-volume
          emptyDir: {}

[root@server4 dashboard]# kubectl apply -f recommended.yaml #运行

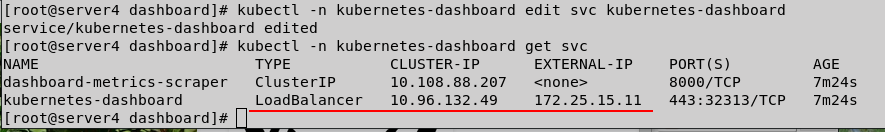

[root@server4 dashboard]# kubectl get ns 使用metallb为了从外部访问,也可以使用nortport,ingress

[root@server4 dashboard]# kubectl -n kubernetes-dashboard get pod #查看metallb对应的pod

[root@server4 dashboard]# kubectl -n kubernetes-dashboard get all

[root@server4 dashboard]# kubectl -n kubernetes-dashboard edit svc kubernetes-dashboard

service/kubernetes-dashboard edited

[root@server4 dashboard]# kubectl -n kubernetes-dashboard get svc #查看分配的vip

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

dashboard-metrics-scraper ClusterIP 10.108.88.207 <none> 8000/TCP 7m24s

kubernetes-dashboard LoadBalancer 10.96.132.49 172.25.15.11 443:32313/TCP 7m24s

[root@server4 dashboard]#

3. Open https://172.25.15.11

- Logging in to the dashboard requires authentication; you need the token of the kubernetes-dashboard ServiceAccount that the dashboard pod runs as

[root@server4 dashboard]# kubectl -n kubernetes-dashboard get sa

[root@server4 dashboard]# kubectl -n kubernetes-dashboard describe sa kubernetes-dashboard

[root@server4 dashboard]# kubectl -n kubernetes-dashboard describe secrets kubernetes-dashboard-token-q9q5m #view the token and log in with it

4. By default the dashboard has no permission to operate on the cluster, so it must be authorized

[root@server4 dashboard]# vim rbac.yaml

[root@server4 dashboard]# cat rbac.yaml

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kubernetes-dashboard-admin
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard

[root@server4 dashboard]# kubectl apply -f rbac.yaml

clusterrolebinding.rbac.authorization.k8s.io/kubernetes-dashboard-admin created

[root@server4 dashboard]#

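The permission was added without errors

5. Operating the cluster from the web UI

5.1 Create a pod

5.2 The replica count is 1

5.3 Scale the replica count

5.4 Check the replica count; access works normally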

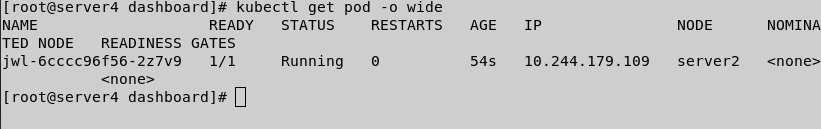

[root@server4 dashboard]# kubectl get pod -o wide

5.5 Update the image

The image was changed successfully
