大数据系列之运维(自主搭建的大数据平台)

(6)Sqoop运维

- 在 master 节点查看Sqoop 的版本信息。

[root@master ~]# sqoop version

20/03/31 16:15:45 INFO sqoop.Sqoop: Running Sqoop version: 1.4.7

Sqoop 1.4.7

git commit id 2328971411f57f0cb683dfb79d19d4d19d185dd8

Compiled by maugli on Thu Dec 21 15:59:58 STD 2017

- 使用 Sqoop 工具列出 master 节点中 MySQL 中所有数据库。(我的数据库装在了slave2节点)

这里要注意一下:在先电版大数据中,有时候执行下面相关语句会报错,可能是root用户的权限不够,就算su - sqoop下也会报错。这时应该到数据库里操作,为相关用户赋予相关权限。这里以root用户为例。

MariaDB [(none)]> grant all privileges on *.* to 'root'@'localhost' identified by '000000';

MariaDB [(none)]> grant all privileges on *.* to 'root'@'%' identified by '000000';

[root@master ~]# sqoop list-databases --connect jdbc:mysql://slave2:3306 --username root --password 000000

20/03/31 16:18:37 INFO manager.MySQLManager: Preparing to use a MySQL streaming resultset.

information_schema

hive

mysql

performance_schema

- 使用Sqoop工具列出master 节点中MySQL中mysql数据库中所有的数据表。

[root@master ~]# sqoop list-tables --connect jdbc:mysql://slave2:3306/mysql --username root --password 000000

Warning: /usr/sqoop/sqoop-1.4.7/../hcatalog does not exist! HCatalog jobs will fail.

Please set $HCAT_HOME to the root of your HCatalog installation.

Warning: /usr/sqoop/sqoop-1.4.7/../accumulo does not exist! Accumulo imports will fail.

Please set $ACCUMULO_HOME to the root of your Accumulo installation.

20/03/31 16:27:55 INFO sqoop.Sqoop: Running Sqoop version: 1.4.7

20/03/31 16:27:55 WARN tool.BaseSqoopTool: Setting your password on the command-line is insecure. Consider using -P instead.

20/03/31 16:27:56 INFO manager.MySQLManager: Preparing to use a MySQL streaming resultset.

columns_priv

db

event

func

general_log

help_category

help_keyword

help_relation

help_topic

host

ndb_binlog_index

plugin

proc

procs_priv

proxies_priv

servers

slow_log

tables_priv

time_zone

time_zone_leap_second

time_zone_name

time_zone_transition

time_zone_transition_type

user

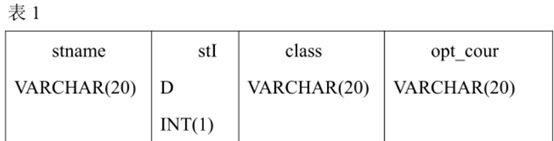

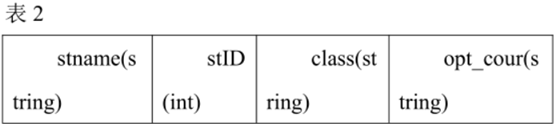

- 在 MySQL 中创建名为 xiandian 的数据库,在 xiandian 数据库中创建xd_phy_course 数据表,其数据表结构如表 1 所示。使用 Hive 工具来创建数据表 xd_phy_course,将 phy_course_xd.txt 导入到该表中,其中 xd_phy_course表的数据结构如表 2 所示。使用 Sqoop 工具将 hive 数据仓库中的xd_phy_course 数据导出到 master 节点的 MySQL 中 xiandain 数据库的xd_phy_course 表内。

MariaDB [xiandian]> create table xd_phy_course(

-> stname VARCHAR(20),

-> stID INT(1),

-> class VARCHAR(20),

-> opt_cour VARCHAR(20)

-> );

Query OK, 0 rows affected (0.00 sec)

hive> create table xd_phy_course (stname string,stID int,class string,opt_cour string) row format delimited fields terminated by '\t' lines terminated by '\n' stored as textfile;

OK

Time taken: 3.45 seconds

hive> load data local inpath'/root/tiku/Hive/phy_course_xd.txt' into table xd_phy_course;

Loading data to table default.xd_phy_course

OK

Time taken: 1.442 seconds

[root@master ~]# sqoop export --connect jdbc:mysql://slave2:3306/xiandian --username root --password 000000 --table xd_phy_course --hcatalog-database default --hcatalog-table xd_phy_course

20/03/31 17:19:19 INFO mapred.FileInputFormat: Total input paths to process : 1

20/03/31 17:19:19 INFO mapreduce.JobSubmitter: number of splits:1

20/03/31 17:19:19 INFO Configuration.deprecation: mapred.map.tasks.speculative.execution is deprecated. Instead, use mapreduce.map.speculative

20/03/31 17:19:20 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1585642380845_0001

20/03/31 17:19:22 INFO impl.YarnClientImpl: Submitted application application_1585642380845_0001

20/03/31 17:19:22 INFO mapreduce.Job: The url to track the job: http://master:18088/proxy/application_1585642380845_0001/

20/03/31 17:19:22 INFO mapreduce.Job: Running job: job_1585642380845_0001

20/03/31 17:19:57 INFO mapreduce.Job: Job job_1585642380845_0001 running in uber mode : false

20/03/31 17:19:57 INFO mapreduce.Job: map 0% reduce 0%

20/03/31 17:20:16 INFO mapreduce.Job: map 100% reduce 0%

20/03/31 17:20:18 INFO mapreduce.Job: Job job_1585642380845_0001 completed successfully

20/03/31 17:20:18 INFO mapreduce.Job: Counters: 30

File System Counters

FILE: Number of bytes read=0

FILE: Number of bytes written=307992

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=92951

HDFS: Number of bytes written=0

HDFS: Number of read operations=2

HDFS: Number of large read operations=0

HDFS: Number of write operations=0

Job Counters

Launched map tasks=1

Data-local map tasks=1

Total time spent by all maps in occupied slots (ms)=13249

Total time spent by all reduces in occupied slots (ms)=0

Total time spent by all map tasks (ms)=13249

Total vcore-milliseconds taken by all map tasks=13249

Total megabyte-milliseconds taken by all map tasks=13566976

Map-Reduce Framework

Map input records=2050

Map output records=2050

Input split bytes=1457

Spilled Records=0

Failed Shuffles=0

Merged Map outputs=0

GC time elapsed (ms)=1223

CPU time spent (ms)=3290

Physical memory (bytes) snapshot=195649536

Virtual memory (bytes) snapshot=2117246976

Total committed heap usage (bytes)=96468992

File Input Format Counters

Bytes Read=0

File Output Format Counters

Bytes Written=0

20/03/31 17:20:18 INFO mapreduce.ExportJobBase: Transferred 90.7725 KB in 78.4333 seconds (1.1573 KB/sec)

20/03/31 17:20:18 INFO mapreduce.ExportJobBase: Exported 2050 records.

20/03/31 17:20:18 INFO mapreduce.ExportJobBase: Publishing HCatalog export job data to Listeners

到数据库区验证:

MariaDB [xiandian]> select * from xd_phy_course;

| student2036 | 10122035 | ClOfih_1407 | football |

| student2037 | 10122036 | ClOfih_1407 | football |

| student2038 | 10122037 | ClOfih_1407 | table tennis |

| student2039 | 10122038 | ClOfih_1407 | badminton |

| student2040 | 10122039 | ClOfih_1407 | volleyball |

| student2041 | 10122040 | ClOfih_1407 | tennis |

| student2042 | 10122041 | ClOfih_1407 | volleyball |

| student2043 | 10122042 | ClOfih_1407 | volleyball |

| student2044 | 10122043 | ClOfih_1407 | tennis |

| student2045 | 10122044 | ClOfih_1407 | volleyball |

| student2046 | 10122045 | ClOfih_1407 | basketball |

| student2047 | 10122046 | ClOfih_1407 | taekwondo |

| student2048 | 10122047 | ClOfih_1407 | taekwondo |

| student2049 | 10122048 | ClOfih_1407 | table tennis |

+-------------+----------+---------------+--------------+

2050 rows in set (0.01 sec)

如果出现问题:

Exception in thread “main” java.lang.NoClassDefFoundError: org/apache/hive/hcatalog/mapreduce/HCatOutputFormat

原因:

您正面临此问题,因为未设置HCAT_HOME路径。

解决方法:

到hive的安装目录下查看是否有hcatalog目录,和bin目录在同一级。

将此目录的路径放在/etc/profile文件里:

export HCAT_HOME=/usr/hive/apache-hive-2.1.1-bin/hcatalog

然后source。有sqoop的各个主机都得配置。

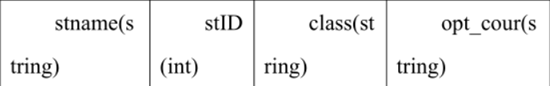

- 在 Hive 中创建 xd_phy_course 数据表,其数据表结构如下表所示。使用Sqoop 工具将 MySQL 中 xiandian 数据库下 xd_phy_course 表导入到 Hive 数据仓库中的 xd_phy_course 表中。

hive> create table xd_phy_course (stname string,stID int,class string,opt_cour string) row format delimited fields terminated by '\t' lines terminated by '\n' stored as textfile;

OK

Time taken: 0.663 seconds

[root@master ~]# sqoop import --connect jdbc:mysql://slave2:3306/xiandian --username root --password 000000 --table xd_phy_course --hive-import --hive-database default --hive-table xd_phy_course --fields-terminated-by '\t' --lines-terminated-by '\n' -m 1;

20/03/31 17:50:58 INFO hive.HiveImport: Export directory is contains the _SUCCESS file only, removing the directory.

如果出现问题:

2020-03-31 17:50:47,328 main ERROR Could not register mbeans java.security.AccessControlException: access denied (“javax.management.MBeanTrustPermission” “register”)

原因:java 的安全策略问题

解决方法:

到Java安装的目录下的jre/lib/security目录里有个java.policy文件(这是我的路径/usr/java/jdk1.8.0_171/jre/lib/security)

在java.policy文件里面的grant部分添加如下内容:

permission javax.management.MBeanTrustPermission "register";

运行Sqoop报错:Exception in thread "main" java.lang.NoClassDefFoundError: org/apache/avro/Logical

将avro-1.8.1.jar包放到Sqoop的lib目录下,问题解决。

运行Sqoop报错:找不到或无法加载主类 org.apache.sqoop.sqoop

将sqoop-1.4.7.jar包放到Sqoop的lib目录下,问题解决。

运行Sqoop报错:ERROR sqoop.Sqoop: Got exception running Sqoop: java.lang.RuntimeException: Could not load db driver class:

com.mysql.jdbc.Driver

java.lang.RuntimeException: Could not load db driver class: com.mysql.jdbc.Driver

将mysql的Java驱动包sqoop的lib 目录下,问题解决。

在此感谢先电云提供的题库。

感谢Apache开源技术服务支持

感谢抛物线、mn525520、菜鸟一枚2019三位博主的相关博客。

3750

3750

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?