1 接着day3的kafka 之前安装好了

在/home/atguigu/bin目录下创建脚本kf.sh

[atguigu@hadoop102 bin]$ vim kf.sh

在脚本中填写如下内容

#! /bin/bash

case $1 in

"start"){

for i in hadoop102 hadoop103 hadoop104

do

echo " --------启动 $i kafka-------"

# 用于KafkaManager监控

ssh $i "export JMX_PORT=9988 && /opt/module/kafka/bin/kafka-server-start.sh -daemon /opt/module/kafka/config/server.properties "

done

};;

"stop"){

for i in hadoop102 hadoop103 hadoop104

do

echo " --------停止 $i kafka-------"

ssh $i "ps -ef | grep server.properties | grep -v grep| awk '{print $2}' | xargs kill >/dev/null 2>&1 &"

done

};;

esac

注意:启动Kafka时要先开启JMX端口,是用于后续KafkaManager监控。

2)增加脚本执行权限

[atguigu@hadoop102 bin]$ chmod 777 kf.sh

3)kf集群启动脚本

[atguigu@hadoop102 module]$ kf.sh start

4)kf集群停止脚本

[atguigu@hadoop102 module]$ kf.sh stop

创建 Kafka topic

进入到/opt/module/kafka/目录下分别创建:启动日志主题、事件日志主题。

1)创建启动日志主题

[atguigu@hadoop102 kafka]$ bin/kafka-topics.sh --zookeeper hadoop102:2181,hadoop103:2181,hadoop104:2181 --create --replication-factor 1 --partitions 1 --topic topic_start

2)创建事件日志主题

[atguigu@hadoop102 kafka]$ bin/kafka-topics.sh --zookeeper hadoop102:2181,hadoop103:2181,hadoop104:2181 --create --replication-factor 1 --partitions 1 --topic topic_event

删除 Kafka topic

[atguigu@hadoop102 kafka]$ bin/kafka-topics.sh --delete --zookeeper hadoop102:2181,hadoop103:2181,hadoop104:2181 --topic topic_start

[atguigu@hadoop102 kafka]$ bin/kafka-topics.sh --delete --zookeeper hadoop102:2181,hadoop103:2181,hadoop104:2181 --topic topic_event

查看某个Topic的详情

[atguigu@hadoop102 kafka]$ bin/kafka-topics.sh --zookeeper hadoop102:2181 \

--describe --topic topic_start

2Kafka Manager安装

1)下载地址

https://github.com/yahoo/kafka-manager

下载之后编译源码,编译完成后,拷贝出:kafka-manager-1.3.3.22.zip

2)拷贝kafka-manager-1.3.3.22.zip到hadoop102的/opt/module目录

[atguigu@hadoop102 module]$ pwd

/opt/module

3)解压kafka-manager-1.3.3.22.zip到/opt/module目录

[atguigu@hadoop102 module]$ unzip kafka-manager-1.3.3.22.zip

4)进入到/opt/module/kafka-manager-1.3.3.22

[atguigu@hadoop102 module]$ cd /opt/module/kafka-manager-1.3.3.22/

5)启动KafkaManager

[atguigu@hadoop102 kafka-manager-1.3.3.22]$ export ZK_HOSTS="hadoop102:2181,hadoop103:2181,hadoop104:2181"

nohup bin/kafka-manager -Dhttp.port=7456 >start.log 2>&1 &

3Kafka Manager启动停止脚本

1)在/home/atguigu/bin目录下创建脚本km.sh

[atguigu@hadoop102 bin]$ vim km.sh

在脚本中填写如下内容

#! /bin/bash

case $1 in

"start"){

echo " -------- 启动 KafkaManager -------"

cd /opt/module/kafka-manager-1.3.3.22/

export ZK_HOSTS="hadoop102:2181,hadoop103:2181,hadoop104:2181"

nohup bin/kafka-manager -Dhttp.port=7456 >start.log 2>&1 &

};;

"stop"){

echo " -------- 停止 KafkaManager -------"

ps -ef | grep ProdServerStart | grep -v grep |awk '{print $2}' | xargs kill

};;

esac

2)增加脚本执行权限

[atguigu@hadoop102 bin]$ chmod 777 km.sh

3)km集群启动脚本

[atguigu@hadoop102 module]$ km.sh start

4)km集群停止脚本

[atguigu@hadoop102 module]$ km.sh stop

3.1Kafka Producer压力测试

1)在/opt/module/kafka/bin目录下面有这两个文件。我们来测试一下

[atguigu@hadoop102 kafka]$ bin/kafka-producer-perf-test.sh --topic test --record-size 100 --num-records 100000 --throughput 1000 --producer-props bootstrap.servers=hadoop102:9092,hadoop103:9092,hadoop104:9092

说明:throughput 是每秒多少条信息,record size是一条信息有多大,单位是字节。num-records是总共发送多少条信息。

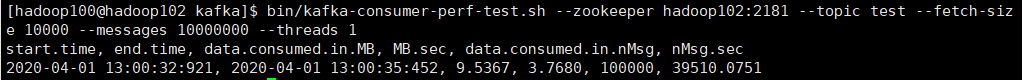

3.2Kafka Consumer压力测试

bin/kafka-consumer-perf-test.sh --zookeeper hadoop102:2181 --topic test --fetch-size 10000 --messages 10000000 --threads 1

4Flume消费Kafka数据写到HDFS

在hadoop104的/opt/module/flume/conf目录下创建kafka-flume-hdfs.conf文件

[atguigu@hadoop102 conf]$ vim kafka-flume-hdfs.conf

在文件配置如下内容

## 组件

a1.sources=r1 r2

a1.channels=c1 c2

a1.sinks=k1 k2

## source1

a1.sources.r1.type = org.apache.flume.source.kafka.KafkaSource

a1.sources.r1.batchSize = 5000

a1.sources.r1.batchDurationMillis = 2000

a1.sources.r1.kafka.bootstrap.servers = hadoop102:9092,hadoop103:9092,hadoop104:9092

a1.sources.r1.kafka.zookeeperConnect = hadoop102:2181,hadoop103:2181,hadoop104:2181

a1.sources.r1.kafka.topics=topic_start

## source2

a1.sources.r2.type = org.apache.flume.source.kafka.KafkaSource

a1.sources.r2.batchSize = 5000

a1.sources.r2.batchDurationMillis = 2000

a1.sources.r2.kafka.bootstrap.servers = hadoop102:9092,hadoop103:9092,hadoop104:9092

a1.sources.r2.kafka.zookeeperConnect = hadoop102:2181,hadoop103:2181,hadoop104:2181

a1.sources.r2.kafka.topics=topic_event

## channel1

a1.channels.c1.type=memory

a1.channels.c1.capacity=100000

a1.channels.c1.transactionCapacity=10000

## channel2

a1.channels.c2.type=memory

a1.channels.c2.capacity=100000

a1.channels.c2.transactionCapacity=10000

## sink1

a1.sinks.k1.type = hdfs

a1.sinks.k1.hdfs.path = /origin_data/gmall/log/topic_start/%Y-%m-%d

a1.sinks.k1.hdfs.filePrefix = logstart-

a1.sinks.k1.hdfs.round = true

a1.sinks.k1.hdfs.roundValue = 30

a1.sinks.k1.hdfs.roundUnit = second

##sink2

a1.sinks.k2.type = hdfs

a1.sinks.k2.hdfs.path = /origin_data/gmall/log/topic_event/%Y-%m-%d

a1.sinks.k2.hdfs.filePrefix = logevent-

a1.sinks.k2.hdfs.round = true

a1.sinks.k2.hdfs.roundValue = 30

a1.sinks.k2.hdfs.roundUnit = second

## 不要产生大量小文件

a1.sinks.k1.hdfs.rollInterval = 30

a1.sinks.k1.hdfs.rollSize = 0

a1.sinks.k1.hdfs.rollCount = 0

a1.sinks.k2.hdfs.rollInterval = 30

a1.sinks.k2.hdfs.rollSize = 0

a1.sinks.k2.hdfs.rollCount = 0

## 控制输出文件是原生文件。

a1.sinks.k1.hdfs.fileType = CompressedStream

a1.sinks.k2.hdfs.fileType = CompressedStream

a1.sinks.k1.hdfs.codeC = lzop

a1.sinks.k2.hdfs.codeC = lzop

## 拼装

a1.sources.r1.channels = c1

a1.sinks.k1.channel= c1

a1.sources.r2.channels = c2

a1.sinks.k2.channel= c24日志消费Flume启动停止脚本

1)在/home/atguigu/bin目录下创建脚本f2.sh

[atguigu@hadoop102 bin]$ vim f2.sh

在脚本中填写如下内容

#! /bin/bash

case $1 in

"start"){

for i in hadoop104

do

echo " --------启动 $i 消费flume-------"

ssh $i "nohup /opt/module/flume/bin/flume-ng agent --conf-file /opt/module/flume/conf/kafka-flume-hdfs.conf --name a1 -Dflume.root.logger=INFO,LOGFILE >/opt/module/flume/log.txt 2>&1 &"

done

};;

"stop"){

for i in hadoop104

do

echo " --------停止 $i 消费flume-------"

ssh $i "ps -ef | grep kafka-flume-hdfs | grep -v grep |awk '{print \$2}' | xargs kill"

done

};;

esac

2)增加脚本执行权限

[atguigu@hadoop102 bin]$ chmod 777 f2.sh

3)f2集群启动脚本

[atguigu@hadoop102 module]$ f2.sh start

4)f2集群停止脚本

[atguigu@hadoop102 module]$ f2.sh stop

5 集群的启动停止脚本

1)在/home/atguigu/bin目录下创建脚本cluster.sh

[atguigu@hadoop102 bin]$ vim cluster.sh

在脚本中填写如下内容

#! /bin/bash

case $1 in

"start"){

echo " -------- 启动 集群 -------"

echo " -------- 启动 hadoop集群 -------"

/opt/module/hadoop-2.7.2/sbin/start-dfs.sh

ssh hadoop103 "/opt/module/hadoop-2.7.2/sbin/start-yarn.sh"

#启动 Zookeeper集群

zk.sh start

#启动 Flume采集集群

f1.sh start

#启动 Kafka采集集群

kf.sh start

sleep 4s;

#启动 Flume消费集群

f2.sh start

#启动 KafkaManager

km.sh start

};;

"stop"){

echo " -------- 停止 集群 -------"

#停止 KafkaManager

km.sh stop

#停止 Flume消费集群

f2.sh stop

#停止 Kafka采集集群

kf.sh stop

sleep 4s;

#停止 Flume采集集群

f1.sh stop

#停止 Zookeeper集群

zk.sh stop

echo " -------- 停止 hadoop集群 -------"

ssh hadoop103 "/opt/module/hadoop-2.7.2/sbin/stop-yarn.sh"

/opt/module/hadoop-2.7.2/sbin/stop-dfs.sh

};;

esac

2)增加脚本执行权限

[atguigu@hadoop102 bin]$ chmod 777 cluster.sh

3)cluster集群启动脚本

[atguigu@hadoop102 module]$ cluster.sh start

4)cluster集群停止脚本

[atguigu@hadoop102 module]$ cluster.sh stop

关于flume无法消费到hdfs问题

去core-site.xml配置

<configuration>

<!-- 指定HDFS中NameNode的地址 -->

<property>

<name>fs.defaultFS</name>

<value>hdfs://hadoop102:9000</value>

</property>

<!-- 指定Hadoop运行时产生文件的存储目录 -->

<property>

<name>hadoop.tmp.dir</name>

<value>/opt/module/hadoop-2.7.2/data/tmp</value>

</property>

<property>

<name>io.compression.codecs</name>

<value>

org.apache.hadoop.io.compress.GzipCodec,

org.apache.hadoop.io.compress.DefaultCodec,

org.apache.hadoop.io.compress.BZip2Codec,

org.apache.hadoop.io.compress.SnappyCodec,

com.hadoop.compression.lzo.LzoCodec,

com.hadoop.compression.lzo.LzopCodec

</value>

</property>

<property>

<name>io.compression.codec.lzo.class</name>

<value>com.hadoop.compression.lzo.LzoCodec</value>

</property>

</configuration>

589

589

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?