因为本科论文需要,所以写个blog留个纪念。代码只有核心推荐系统部分代码,对于其中文本繁体转简体的部分需要自己实现,如需要请联系我。

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

import jieba

import nltk

import re

import numpy as np

from langconv import *

# df = pd.read_csv(r"C:\Users\heng\Desktop\数据新\douban_movies.csv")

df = pd.read_csv(r'E:\python\论文\数据\douban_movies.csv')

df.info()

# 去除简介为空列表的数据

df=df[(df.movie_comment!="[]")]

df.info()

# 去掉重复的数据标题

df = df.drop_duplicates(subset='movie_title')

df.info()

df = df[['movie_title', 'movie_director', 'movie_rating', 'movie_comment', 'movie_type']]

# 去掉含有空白数据

df.movie_comment.fillna('', inplace=True)

df.dropna(inplace=True)

df.info()

with open(r"E:\python\论文\文本聚类\stop_words.txt", encoding="utf8") as f:

stop_words = f.read()

#去掉数字,字母和特殊符号和括号中的演员

# def remove_special_characters(text):

# #pattern1 = '[0-9|a-z|A-Z|]'

# #text = re.sub(pattern1, '', text)

# #pattern2 = re.compile('[{}]'.format(re.escape(string.punctuation)))

# #text = re.sub(pattern2, '', text)

# pattern = re.compile(u'[^\u4E00-\u9FA5]')

# text = pattern.sub('',text)

# return text

def remove_special_characters(text):

#pattern1 = '[0-9|a-z|A-Z|]'

#text = re.sub(pattern1, '', text)

#pattern2 = re.compile('[{}]'.format(re.escape(string.punctuation)))

#text = re.sub(pattern2, '', text)

text = re.sub(u"\\(.*?\\)", "", str(text))

pattern = re.compile(u'[^\u4E00-\u9FA5]')

text = pattern.sub('',text)

return text

def normalize_document(doc):

doc = remove_special_characters(doc)

# lower case and remove special characters\whitespaces

# doc = re.sub(r'[^a-zA-Z0-9\s]', '', doc, re.I|re.A)

doc = doc.strip()

# 繁体转简体

doc = Converter("zh-hans").convert(doc)

# tokenize document

tokens = jieba.lcut(doc)

# filter stopwords out of document

filtered_tokens = [token for token in tokens if token not in stop_words]

# re-create document from filtered tokens

doc = ' '.join(filtered_tokens)

return doc

normalize_corpus = np.vectorize(normalize_document)

norm_corpus = normalize_corpus(list(df['movie_comment']))

len(norm_corpus)

from sklearn.feature_extraction.text import TfidfVectorizer

# stop_word_list = []

# for i in stop_words:

# stop_word_list.append(i)

# stop_words_add = stop_word_list+['故事', '影片', '导演', '拍摄', '配音','最佳', '本片', '电影', '荣获', '第届','一位']

tf = TfidfVectorizer(ngram_range=(1, 2), min_df=2)

tfidf_matrix = tf.fit_transform(norm_corpus)

tfidf_matrix.shape

# tv_ma = tfidf_matrix.toarray()

# vocab = tf.get_feature_names()

# a = pd.DataFrame(np.round(tv_ma,5),columns = vocab)

#############################################################################

#Compute Pairwise Document Similarity

from sklearn.metrics.pairwise import cosine_similarity

doc_sim = cosine_similarity(tfidf_matrix)

doc_sim_df = pd.DataFrame(doc_sim,index = df["movie_title"],columns = df["movie_title"])

doc_sim_df.head()

##############################################################################

#Get List of Movie Titles

movies_list = df['movie_title'].values

movies_list, movies_list.shape

###########################################################################

#Find Top Similar Movies for a Sample Movie

#Find movie ID

# movie_idx = np.where(movies_list == '红楼梦')[0][0]

# movie_idx

# #Get movie similarities

# movie_similarities = doc_sim_df.iloc[movie_idx].values

# movie_similarities

# #Get top 5 similar movie IDs

# similar_movie_idxs = np.argsort(-movie_similarities)[1:6]

# similar_movie_idxs

# #Get top 5 similar movies

# similar_movies = movies_list[similar_movie_idxs]

# similar_movies

############################################################################

#Build a movie recommender function to recommend top 5 similar movies for any movie

def movie_recommender(movie_titles, movies=movies_list, doc_sims=doc_sim_df):

# find movie id

movie_idx = np.where(movies == movie_titles)[0][0]

# get movie similarities

movie_similarities = doc_sims.iloc[movie_idx].values

# get top 5 similar movie IDs

similar_movie_idxs = np.argsort(-movie_similarities)[1:7]

# get top 5 movies

similar_movies = movies[similar_movie_idxs]

# return the top 5 movies

return similar_movies

#Sort Dataset by Popular Movies

pop_movies = df.sort_values(by='movie_rating', ascending=False)

pop_movies.head()

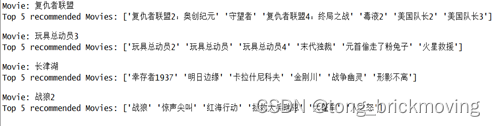

popular_movies = ["复仇者联盟","玩具总动员3","长津湖","战狼2"]

#Get Popular Movie Recommendations

for movie in popular_movies:

print('Movie:', movie)

recommd_movies = movie_recommender(movie_titles=movie)

# advise_mov = [i.encode('utf-8').decode('utf-8-sig') for i in recommd_movies]

# print('Top 5 recommended Movies:', advise_mov)

print('Top 5 recommended Movies:', recommd_movies)

print()

推荐效果如下:

3472

3472

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?