Sqoop

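Introduction

Sqoop is an open-source tool for transferring data between Hadoop (Hive) and traditional relational databases (MySQL, PostgreSQL, ...). It can import data from a relational database (Oracle, MySQL, PostgreSQL) into Hadoop, and it can export data from HDFS into a relational database.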

How it works

Sqoop translates each import or export command into a MapReduce program, mainly by customizing the InputFormat and OutputFormat.

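Installation

- Download the installation package and extract it on Linux. The later steps use /opt/module/sqoop, so they assume the extracted directory was renamed to that path.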

tar -zxvf sqoop-1.4.6.bin__hadoop-2.0.4-alpha.tar.gz -C /opt/module/

- Edit the configuration file

[yyx@hadoop01 conf]$ mv sqoop-env-template.sh sqoop-env.sh

[yyx@hadoop01 conf]$ vim sqoop-env.sh

export HADOOP_COMMON_HOME=/opt/module/hadoop-2.7.2

export HADOOP_MAPRED_HOME=/opt/module/hadoop-2.7.2

export HIVE_HOME=/opt/module/hive

export ZOOKEEPER_HOME=/opt/module/zookeeper-3.4.10

export ZOOCFGDIR=/opt/module/zookeeper-3.4.10

export HBASE_HOME=/opt/module/hbase
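
A mistyped path in sqoop-env.sh is easy to miss, so it can help to confirm that every configured directory exists before going further. The following is a minimal sketch that assumes the paths shown above; check_dirs is a hypothetical helper written for this note, not part of Sqoop.

```shell
#!/usr/bin/env bash
# check_dirs: print every argument that is not an existing directory.
# Returns 0 when all directories exist, 1 otherwise.
check_dirs() {
  local dir missing=0
  for dir in "$@"; do
    if [ ! -d "$dir" ]; then
      echo "missing: $dir"
      missing=1
    fi
  done
  return "$missing"
}

# Paths copied from sqoop-env.sh above; adjust them to your own layout.
check_dirs /opt/module/hadoop-2.7.2 /opt/module/hive \
  /opt/module/zookeeper-3.4.10 /opt/module/hbase \
  || echo "fix sqoop-env.sh before continuing"
```

If nothing is printed, all four directories were found.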

- Copy the JDBC driver

[yyx@hadoop01 mysql-connector-java-5.1.27]$ ll

total 1264
-rw-r--r--. 1 root root  47173 Oct 24  2013 build.xml
-rw-r--r--. 1 root root 222520 Oct 24  2013 CHANGES
-rw-r--r--. 1 root root  18122 Oct 24  2013 COPYING
drwxr-xr-x. 2 root root     71 Feb  4 17:18 docs
-rw-r--r--. 1 root root 872303 Oct 24  2013 mysql-connector-java-5.1.27-bin.jar
-rw-r--r--. 1 root root  61423 Oct 24  2013 README
-rw-r--r--. 1 root root  63674 Oct 24  2013 README.txt
drwxr-xr-x. 7 root root     67 Oct 24  2013 src

[yyx@hadoop01 mysql-connector-java-5.1.27]$ cp mysql-connector-java-5.1.27-bin.jar /opt/module/sqoop/lib/

- Verify the installation

[yyx@hadoop01 sqoop]$ bin/sqoop help

Warning: /opt/module/sqoop/bin/../../hcatalog does not exist! HCatalog jobs will fail.

Please set $HCAT_HOME to the root of your HCatalog installation.

Warning: /opt/module/sqoop/bin/../../accumulo does not exist! Accumulo imports will fail.

Please set $ACCUMULO_HOME to the root of your Accumulo installation.

21/03/26 16:47:39 INFO sqoop.Sqoop: Running Sqoop version: 1.4.6

usage: sqoop COMMAND [ARGS]

Available commands:

codegen Generate code to interact with database records

create-hive-table Import a table definition into Hive

eval Evaluate a SQL statement and display the results

export Export an HDFS directory to a database table

help List available commands

import Import a table from a database to HDFS

import-all-tables Import tables from a database to HDFS

import-mainframe Import datasets from a mainframe server to HDFS

job Work with saved jobs

list-databases List available databases on a server

list-tables List available tables in a database

merge Merge results of incremental imports

metastore Run a standalone Sqoop metastore

version Display version information

See 'sqoop help COMMAND' for information on a specific command.

Success! Sqoop prints its list of commands, so the installation works.

Test the database connection:

[yyx@hadoop01 sqoop]$ bin/sqoop list-databases --connect jdbc:mysql://hadoop01:3306/ --username root --password 000000

Warning: /opt/module/sqoop/bin/../../hcatalog does not exist! HCatalog jobs will fail.

Please set $HCAT_HOME to the root of your HCatalog installation.

Warning: /opt/module/sqoop/bin/../../accumulo does not exist! Accumulo imports will fail.

Please set $ACCUMULO_HOME to the root of your Accumulo installation.

21/03/26 16:48:29 INFO sqoop.Sqoop: Running Sqoop version: 1.4.6

21/03/26 16:48:29 WARN tool.BaseSqoopTool: Setting your password on the command-line is insecure. Consider using -P instead.

21/03/26 16:48:29 INFO manager.MySQLManager: Preparing to use a MySQL streaming resultset.

information_schema

metastore

mysql

performance_schema

test
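
The log above warns that passing the password on the command line is insecure and suggests -P, which prompts for it interactively. For non-interactive runs, Sqoop also accepts --password-file. The sketch below assumes the password file lives on the local filesystem (hence the file:// prefix) and that the file name is free to choose; write_password_file is a hypothetical helper, not a Sqoop command.

```shell
#!/usr/bin/env bash
# write_password_file FILE PASSWORD
# Stores the password without a trailing newline, readable only by its owner.
write_password_file() {
  local file=$1 password=$2
  rm -f "$file"
  (umask 077; printf '%s' "$password" > "$file")
  chmod 400 "$file"
}

# Only runs from the Sqoop installation directory.
if [ -x bin/sqoop ]; then
  pw_file="$HOME/.sqoop-mysql.password"   # hypothetical location
  write_password_file "$pw_file" 000000
  bin/sqoop list-databases \
    --connect jdbc:mysql://hadoop01:3306/ \
    --username root \
    --password-file "file://$pw_file"
fi
```

The interactive variant is the same command with -P in place of the --password-file option.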

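Simple examples

Importing data

Transferring data from an RDBMS into the big-data cluster (HDFS, Hive, HBase) is called importing and uses the import command.

RDBMS to HDFS

MySQL already has a table: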

mysql> select * from teacher;

+-------------+------+
| name        | sum  |
+-------------+------+
| chenfei     |    2 |
| ddddd       |    1 |
| lihua       |    4 |
| rongzhuqing |    3 |
+-------------+------+

4 rows in set (0.00 sec)

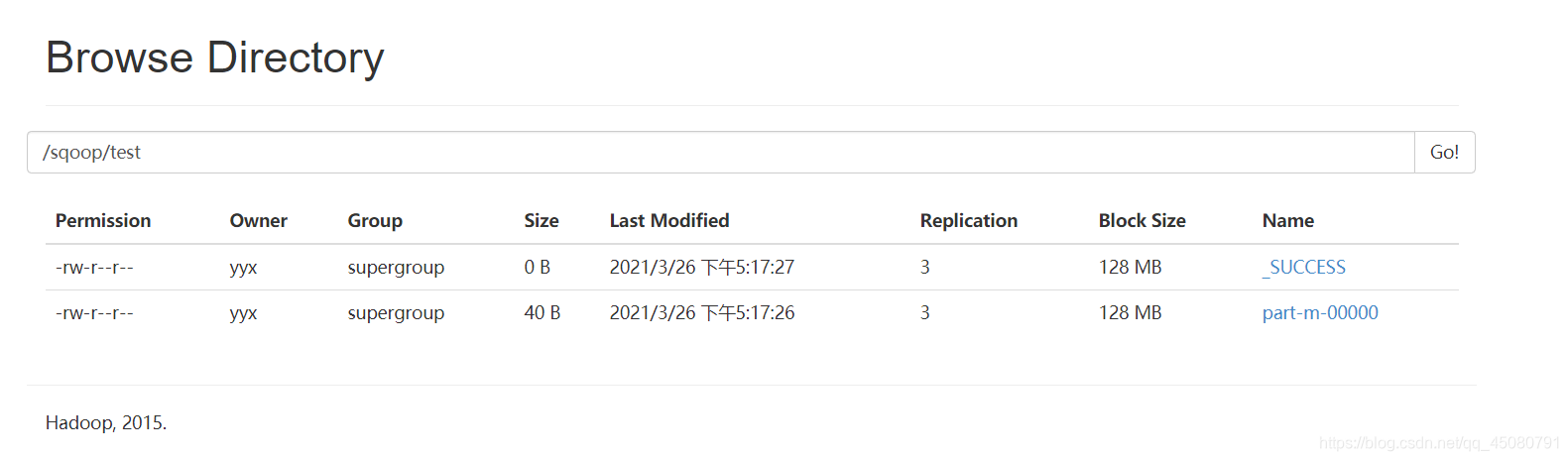

Import the whole table into HDFS

Create the target location first:

[yyx@hadoop01 hadoop-2.7.2]$ hdfs dfs -mkdir -p /sqoop/test/

Run the import:

bin/sqoop import \
--connect jdbc:mysql://hadoop01:3306/test \
--username root \
--password 000000 \
--table teacher \
--target-dir /sqoop/test \
--delete-target-dir \
--num-mappers 1 \
--fields-terminated-by "\t"

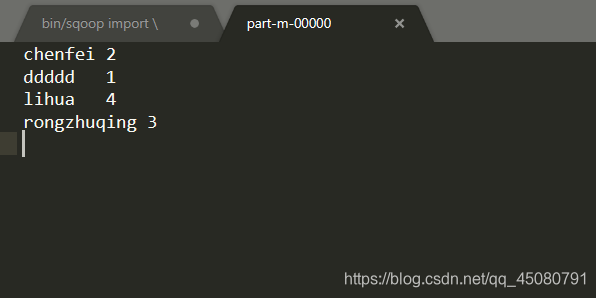

Import with a query

bin/sqoop import \
--connect jdbc:mysql://hadoop01:3306/test \
--username root \
--password 000000 \
--target-dir /sqoop/test \
--delete-target-dir \
--num-mappers 1 \
--fields-terminated-by "," \
--query 'select name,sum from teacher where sum <= 5 and $CONDITIONS'

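--delete-target-dir deletes the target directory if it already exists, so the import starts from an empty location.

$CONDITIONS must appear in the WHERE clause of the query. Sqoop replaces it with its own conditions at run time, and the import fails with an error if it is missing.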
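
Because $CONDITIONS looks like a shell variable, the quoting of the --query string decides whether Sqoop ever sees it. The sketch below uses only plain shell strings (no Sqoop call) to show the three cases; the empty CONDITIONS assignment stands in for the usual situation where no such shell variable is set.

```shell
#!/usr/bin/env bash
CONDITIONS=""   # stands in for "no such shell variable is set"

# Single quotes: the shell leaves $CONDITIONS untouched.
single='select name,sum from teacher where sum <= 5 and $CONDITIONS'
# Double quotes with a backslash: also passed through literally.
escaped="select name,sum from teacher where sum <= 5 and \$CONDITIONS"
# Double quotes without a backslash: the shell expands it to nothing.
expanded="select name,sum from teacher where sum <= 5 and $CONDITIONS"

printf '%s\n' "$single" "$escaped" "$expanded"
```

The first two lines printed still contain the literal $CONDITIONS token, while the third ends in a dangling "and", which is the form that makes the import fail. If the query is imported with more than one mapper, Sqoop additionally needs --split-by to know which column to partition on.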

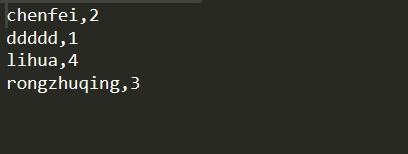

Import specific columns

bin/sqoop import \
--connect jdbc:mysql://hadoop01:3306/test \
--username root \
--password 000000 \
--target-dir /sqoop/test \
--delete-target-dir \
--num-mappers 1 \
--fields-terminated-by "\t" \
--columns name \
--table teacher

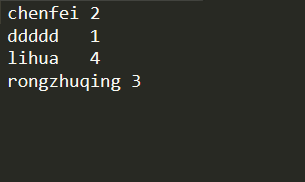

Filter the imported rows with Sqoop's --where option

bin/sqoop import \
--connect jdbc:mysql://hadoop01:3306/test \
--username root \
--password 000000 \
--target-dir /sqoop/test \
--delete-target-dir \
--num-mappers 1 \
--fields-terminated-by "\t" \
--table teacher \
--where "sum<5"

RDBMS to Hive

bin/sqoop import \
--connect jdbc:mysql://hadoop01:3306/test \
--username root \
--password 000000 \
--table teacher \
--num-mappers 1 \
--hive-import \
--fields-terminated-by "\t" \
--hive-overwrite \
--hive-table countteacher

The Hive table does not have to exist beforehand; the import creates it:

0: jdbc:hive2://hadoop01:10000> show tables;

+---------------+--+
|   tab_name    |
+---------------+--+
| countteacher  |
| student       |
| test          |
+---------------+--+

3 rows selected (0.37 seconds)

0: jdbc:hive2://hadoop01:10000> select * from countteacher

0: jdbc:hive2://hadoop01:10000> ;

+--------------------+-------------------+--+
| countteacher.name  | countteacher.sum  |
+--------------------+-------------------+--+
| chenfei            | 2                 |
| ddddd              | 1                 |
| lihua              | 4                 |
| rongzhuqing        | 3                 |
+--------------------+-------------------+--+

4 rows selected (1.559 seconds)

The import runs in two steps: the data is first imported into HDFS, and only then loaded from HDFS into Hive.

RDBMS to HBase

bin/sqoop import \
--connect jdbc:mysql://hadoop01:3306/test \
--username root \
--password 000000 \
--table teacher \
--columns "name,sum" \
--column-family "info" \
--hbase-create-table \
--hbase-row-key "sum" \
--hbase-table "hbase_teacher" \
--num-mappers 1 \
--split-by name

hbase(main):001:0> scan 'hbase_teacher'

ROW COLUMN+CELL

1 column=info:name, timestamp=1616811676595, value=ddddd

2 column=info:name, timestamp=1616811676595, value=chenfei

3 column=info:name, timestamp=1616811676595, value=rongzhuqing

4 column=info:name, timestamp=1616811676595, value=lihua

4 row(s) in 0.2690 seconds

Exporting data

Transferring data from HDFS, Hive, or HBase to an RDBMS is called exporting and uses the export command.

Hive to RDBMS

bin/sqoop export \
--connect jdbc:mysql://hadoop01:3306/company \
--username root \
--password 000000 \
--table teacher \
--num-mappers 1 \
--export-dir /user/hive/warehouse/staff_hive \
--input-fields-terminated-by "\t"

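If the target table does not exist in MySQL, it is not created automatically, so create it before running the export.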
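
Since the export needs an existing table, one option is to create it from the shell first. The sketch below only prints a DDL statement. The column types are assumptions, because the original does not show the table definition, and teacher_ddl is a hypothetical helper. The column order is chosen to match the name and sum fields used throughout this post.

```shell
#!/usr/bin/env bash
# teacher_ddl: print a CREATE TABLE statement for the export target.
# VARCHAR(255) and INT are assumed types; adjust them to the real data.
teacher_ddl() {
  cat <<'SQL'
CREATE TABLE IF NOT EXISTS teacher (
  `name` VARCHAR(255),
  `sum`  INT
);
SQL
}

teacher_ddl
# To apply it to the company database used above:
#   teacher_ddl | mysql -uroot -p company
```

Printing the statement first makes it easy to review before it is piped into the mysql client.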

Scripts

A Sqoop command can be packaged in an .opt options file.

1. Create an .opt file

$ mkdir opt

$ touch opt/job_HDFS2RDBMS.opt

2. Write the script, with one option or value per line

$ vi opt/job_HDFS2RDBMS.opt

export

--connect

jdbc:mysql://hadoop01:3306/test

--username

root

--password

000000

--table

teacher

--num-mappers

1

--export-dir

/user/hive/warehouse/staff_hive

--input-fields-terminated-by

"\t"

3. Run it:

bin/sqoop --options-file opt/job_HDFS2RDBMS.opt
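
The options file can also be generated non-interactively, which is convenient when the job is set up from another script. This is a minimal sketch that writes the same content as above with a quoted here-document, so that "\t" reaches the file unchanged; write_options_file is a hypothetical helper, and the leading comment line relies on Sqoop treating lines that start with # as comments.

```shell
#!/usr/bin/env bash
# write_options_file FILE: create the export options file shown above.
write_options_file() {
  local file=$1
  mkdir -p "$(dirname "$file")"
  cat > "$file" <<'EOF'
# Export the Hive warehouse directory to the MySQL table teacher
export
--connect
jdbc:mysql://hadoop01:3306/test
--username
root
--password
000000
--table
teacher
--num-mappers
1
--export-dir
/user/hive/warehouse/staff_hive
--input-fields-terminated-by
"\t"
EOF
}

# Only runs from the Sqoop installation directory.
if [ -x bin/sqoop ]; then
  write_options_file opt/job_HDFS2RDBMS.opt
  bin/sqoop --options-file opt/job_HDFS2RDBMS.opt
fi
```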

Common parameters

Parameters:

Command parameters:
