需求

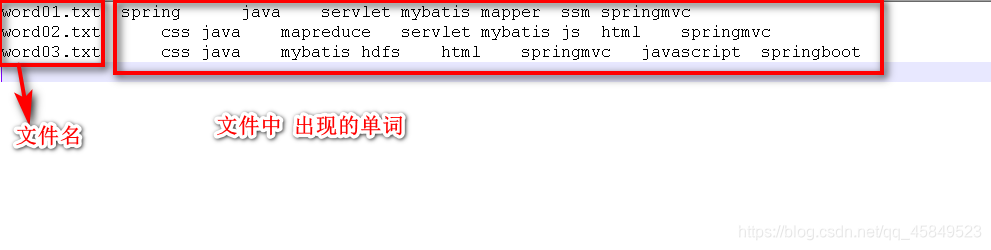

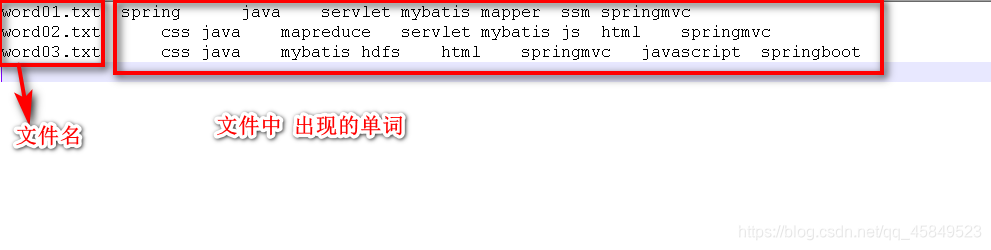

输入三个文件、统计每个文件出现的单词

word01.txt

java mapper

servlet

ssm

spring

springmvc

mybatis

word02.txt

java mapreduce

servlet

js

css

html

springmvc

mybatis

word03.txt

java hdfs

springboot

javascript

css

html

springmvc

mybatis

想要输出结果

编写Mapper类

import org.apache.hadoop.io.LongWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.InputSplit;

import org.apache.hadoop.mapreduce.Mapper;

import org.apache.hadoop.mapreduce.lib.input.FileSplit;

import java.io.IOException;

public class WordMapper extends Mapper<LongWritable, Text, Text, Text> {

private final Text word = new Text();

private Text fileName;

@Override

protected void setup(Context context) throws IOException, InterruptedException {

FileSplit fileSplit = (FileSplit) context.getInputSplit();

String name = fileSplit.getPath().getName();

fileName = new Text(name);

}

@Override

protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {

String[] words = value.toString().split(" ");

for (String word : words) {

this.word.set(word);

context.write(fileName, this.word);

}

}

}

编写Reducer类

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Reducer;

import java.io.IOException;

import java.util.HashSet;

public class WordReducer extends Reducer<Text, Text, Text, Text> {

private final Text words = new Text();

@Override

protected void reduce(Text key, Iterable<Text> values, Context context) throws IOException, InterruptedException {

HashSet<String> words = new HashSet<>();

for (Text value : values) {

words.add(value.toString());

}

StringBuilder builder = new StringBuilder();

for (String word : words) {

builder.append(word).append("\t");

}

this.words.set(builder.toString());

context.write(key, this.words);

}

}

编写Driver类

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;

import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

import java.io.IOException;

public class WordDriver {

public static void main(String[] args) throws IOException, ClassNotFoundException, InterruptedException {

Job job = Job.getInstance(new Configuration());

job.setJarByClass(WordDriver.class);

job.setMapperClass(WordMapper.class);

job.setReducerClass(WordReducer.class);

job.setMapOutputKeyClass(Text.class);

job.setMapOutputValueClass(Text.class);

job.setOutputKeyClass(Text.class);

job.setOutputValueClass(Text.class);

FileInputFormat.setInputPaths(job, new Path(args[0]));

FileOutputFormat.setOutputPath(job, new Path(args[1]));

boolean b = job.waitForCompletion(true);

System.exit(b ? 0 : 1 );

}

}

输入文件

1498

1498

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?