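Virtual machine installation and hardware configuration

controller

2 CPUs, 1.5 GB RAM, one NIC, 100 GB disk

NIC 1: VMnet 1 (host-only), eno16777736, 192.168.222.5

neutron (network node)

2 CPUs, 1.5 GB RAM, three NICs, 20 GB disk

NIC 1: VMnet 1 (host-only), eno16777736, 192.168.222.6

NIC 2: VMnet 2 (host-only), eno33554960, 172.168.0.6

NIC 3: VMnet 3 (host-only), eno50332184, 100.100.100.10

compute

As many CPUs and as much RAM as the host allows (MAX), two NICs, 100 GB disk

NIC 1: VMnet 1 (host-only), eno16777736, 192.168.222.10

NIC 2: VMnet 2 (host-only), eno33554960, 172.168.0.10

block

2 CPUs, 1.5 GB RAM, one NIC, 20 GB disk (a second 100 GB disk is added in a later lab)

NIC 1: VMnet 1 (host-only), eno16777736, 192.168.222.20

Lab environment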

vi /etc/sysconfig/network-scripts/ifcfg-eno16777736 # edit this file to set the IP address

BOOTPROTO=static

IPADDR=192.168.222.5

NETMASK=255.255.255.0

ONBOOT=yes

vi /etc/sysconfig/network-scripts/ifcfg-eno33554960

vi /etc/sysconfig/network-scripts/ifcfg-eno50332184
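The same four settings are repeated for every interface on every node, so they can be generated rather than typed. The sketch below only prints the file content; `render_ifcfg` is a hypothetical helper, and the `TYPE` and `DEVICE` keys are assumptions that do not appear in the original listing.

```shell
#!/bin/sh
# Hypothetical helper: print a static ifcfg file for one interface.
# TYPE and DEVICE are assumed additions; the other keys come from the text above.
render_ifcfg() {
    device=$1 ipaddr=$2 netmask=${3:-255.255.255.0}
    cat <<EOF
TYPE=Ethernet
DEVICE=$device
BOOTPROTO=static
IPADDR=$ipaddr
NETMASK=$netmask
ONBOOT=yes
EOF
}

# Example: the three interfaces of the network node (printed to stdout only).
render_ifcfg eno16777736 192.168.222.6
render_ifcfg eno33554960 172.168.0.6
render_ifcfg eno50332184 100.100.100.10
```

To apply a result, redirect one call into the matching file, for example `render_ifcfg eno33554960 172.168.0.6 > /etc/sysconfig/network-scripts/ifcfg-eno33554960`.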

systemctl stop NetworkManager && systemctl disable NetworkManager

systemctl stop firewalld && systemctl disable firewalld

setenforce 0

vi /etc/selinux/config

# change to

SELINUX=disabled

hostnamectl set-hostname xxxx.nice.com # set the hostname, e.g. controller.nice.com or compute.nice.com

vim /etc/hosts

# add

192.168.222.5 controller.nice.com

192.168.222.6 network.nice.com

192.168.222.10 compute.nice.com

192.168.222.20 block.nice.com
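Because the same four entries go into /etc/hosts on every node, an idempotent append avoids duplicate lines when the step is repeated. This is a minimal sketch; `add_host_entry` and the `HOSTS_FILE` variable are hypothetical names, and the demo writes to a temporary file rather than to /etc/hosts.

```shell
#!/bin/sh
# Hypothetical helper: append "IP NAME" to a hosts file unless NAME is already there.
add_host_entry() {
    file=$1 ip=$2 name=$3
    # -w matches whole words only, -F treats the name as a fixed string
    if ! grep -qwF "$name" "$file" 2>/dev/null; then
        printf '%s %s\n' "$ip" "$name" >> "$file"
    fi
}

# Demo against a scratch file; set HOSTS_FILE=/etc/hosts to apply it for real.
hosts_file=${HOSTS_FILE:-$(mktemp)}
add_host_entry "$hosts_file" 192.168.222.5 controller.nice.com
add_host_entry "$hosts_file" 192.168.222.6 network.nice.com
add_host_entry "$hosts_file" 192.168.222.10 compute.nice.com
add_host_entry "$hosts_file" 192.168.222.20 block.nice.com
cat "$hosts_file"
```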

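Set up a local yum repository with Serv-U (Serv-U-Tray)

Press Win + R, run ncpa.cpl, and add the IP address 192.168.222.240

Set the name to "openstack", then click "Next" twice

For the IPv4 address, select the IP that was just added (192.168.222.240), then click "Next"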

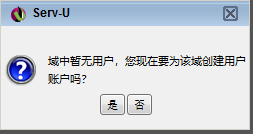

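Create a user: choose "Yes", then "Yes" again

Set the user name to "a", click "Next", and set the password to "a" as well

For the root directory, select the local yum source directory, i.e. the parent of the directory named 7, then click "Next" and "Finish"

Configure the yum repository on the virtual machines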

cd /etc/yum.repos.d/

mkdir back

mv *.repo back

vi ftp.repo

[base]

name=base

baseurl=ftp://a:a@192.168.222.240/7/os/x86_64/

enabled=1

gpgcheck=0

[updates]

name=updates

baseurl=ftp://a:a@192.168.222.240/7/updates/x86_64/

enabled=1

gpgcheck=0

[extras]

name=extras

baseurl=ftp://a:a@192.168.222.240/7/extras/x86_64/

enabled=1

gpgcheck=0

[epel]

name=epel

baseurl=ftp://a:a@192.168.222.240/7/epel

enabled=1

gpgcheck=0

[rdo]

name=rdo

baseurl=ftp://a:a@192.168.222.240/7/rdo

enabled=1

gpgcheck=0

:%s/10.0.0.200/192.168.222.240\/7/g # vim command to replace the server address throughout the file

yum clean all

yum makecache
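The five repository stanzas above differ only in name and path, so the file can be generated from a short list. The sketch below prints the content of ftp.repo; `render_repo` is a hypothetical helper, and the defaults for user and password simply mirror the "a"/"a" account created earlier.

```shell
#!/bin/sh
# Hypothetical helper: print the five repo stanzas for a given FTP server.
render_repo() {
    server=$1 user=${2:-a} pass=${3:-a}
    base="ftp://$user:$pass@$server/7"
    # Each entry is "repo-name:path-below-/7".
    for entry in base:os/x86_64/ updates:updates/x86_64/ extras:extras/x86_64/ epel:epel rdo:rdo; do
        name=${entry%%:*}
        path=${entry#*:}
        printf '[%s]\nname=%s\nbaseurl=%s/%s\nenabled=1\ngpgcheck=0\n\n' \
            "$name" "$name" "$base" "$path"
    done
}

render_repo 192.168.222.240
```

Redirecting the output to /etc/yum.repos.d/ftp.repo and then running `yum clean all` and `yum makecache` reproduces the manual steps.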

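Install the OpenStack prerequisite packages

1. Install the yum-plugin-priorities package so that packages from a higher-priority repository are not overridden by packages from a lower-priority one

yum -y install yum-plugin-priorities

2. Install the EPEL repository (skip this command if you use the local yum repository)

yum -y install http://dl.fedoraproject.org/pub/epel/7/x86_64/e/epel-release-7-2.noarch.rpm

3. Install the OpenStack repository (skip this command if you use the local yum repository)

yum -y install http://rdo.fedorapeople.org/openstack-juno/rdo-release-juno.rpm

4. Update the operating system

yum update

# after the update, CentOS recreates its stock repo files; delete them

cd /etc/yum.repos.d/

rm -rf C*

yum clean all

5. Install openstack-selinux, which manages SELinux policies for OpenStack automatically (not needed when SELinux is disabled)

yum -y install openstack-selinux

6. Configure the time synchronization service on controller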

[root@controller ~]$ yum -y install ntp

[root@controller ~]$ vim /etc/ntp.conf

restrict 192.168.222.0 mask 255.255.255.0 nomodify notrap # uncomment and edit

#server 0.centos.pool.ntp.org iburst # comment out

#server 1.centos.pool.ntp.org iburst # comment out

#server 2.centos.pool.ntp.org iburst # comment out

#server 3.centos.pool.ntp.org iburst # comment out

server 127.127.1.0 # add

fudge 127.127.1.0 stratum 10 # add

[root@controller ~]$ systemctl start ntpd && systemctl enable ntpd # start now and enable at boot

7. Synchronize the time on the other servers

yum -y install ntpdate

crontab -e

*/1 * * * * /sbin/ntpdate -u controller.nice.com &> /dev/null

systemctl restart crond && systemctl enable crond

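Shut down and take a snapshot

OpenStack Identity (keystone)

Install and configure the identity service on the controller node

•Configure prerequisites

•Install and configure the identity service components

•Finalize the installation

1. Install the mariadb packages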

[root@controller ~]$ yum -y install mariadb mariadb-server MySQL-python

[root@controller ~]$ yum -y install vim net-tools

2. Edit /etc/my.cnf to set the bind address, the default storage engine, and UTF-8 as the default character set

[root@controller ~]$ vim /etc/my.cnf

[mysqld] # add the following under this section

bind-address = 192.168.222.5

default-storage-engine = innodb

innodb_file_per_table

collation-server = utf8_general_ci

init-connect = 'SET NAMES utf8'

character-set-server = utf8

3. Start the database, enable it at boot, and run the secure-installation script

[root@controller ~]$ systemctl start mariadb && systemctl enable mariadb # start now and enable at boot

[root@controller ~]$ mysql_secure_installation # initialize the database

………………

Enter current password for root (enter for none): # no password yet, just press Enter

Set root password? [Y/n] y # set a root password

New password: # password: 123

Re-enter new password: # password: 123

………………

Remove anonymous users? [Y/n] y # remove anonymous users

………………

Disallow root login remotely? [Y/n] y # disallow remote root login

………………

Remove test database and access to it? [Y/n] y # remove the test database

………………

Reload privilege tables now? [Y/n] y # reload the privilege tables now

………………

Thanks for using MariaDB!

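[root@controller ~]$ mysql -uroot -p123 # log in to test the database

………………

MariaDB [(none)]> exit

`Install the messaging server`

1. Purpose: coordinates operations and status information among services

2. Common message broker software

RabbitMQ

Qpid

ZeroMQ

3. Install RabbitMQ on the controller node

a. Install the RabbitMQ package

[root@controller ~]$ yum -y install rabbitmq-server

b. Start the service and enable it at boot

[root@controller ~]$ systemctl start rabbitmq-server && systemctl enable rabbitmq-server

c. The default RabbitMQ user name and password are both guest; the password can be changed with the following command

#rabbitmqctl change_password guest new_password # not changed in this lab

Configure prerequisites

1. Create the identity service database

a. Log in to the MySQL database

[root@controller ~]# mysql -uroot -p123

b. Create the keystone database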

CREATE DATABASE keystone;

c. Create the keystone database user and grant it full control of the keystone database

GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'localhost' IDENTIFIED BY 'KEYSTONE_DBPASS';

GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'%' IDENTIFIED BY 'KEYSTONE_DBPASS';

exit
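The same CREATE DATABASE and GRANT pattern recurs for glance, nova, and neutron, with only the name and password changing. The sketch below prints the statements for one service; `render_db_sql` is a hypothetical helper, and `IF NOT EXISTS` is an addition (not in the original) so that the statement can be re-run safely.

```shell
#!/bin/sh
# Hypothetical helper: print the SQL that creates a service database and its user.
# The database name doubles as the user name, as in the statements above.
render_db_sql() {
    db=$1 pass=$2
    cat <<EOF
CREATE DATABASE IF NOT EXISTS $db;
GRANT ALL PRIVILEGES ON $db.* TO '$db'@'localhost' IDENTIFIED BY '$pass';
GRANT ALL PRIVILEGES ON $db.* TO '$db'@'%' IDENTIFIED BY '$pass';
EOF
}

render_db_sql keystone KEYSTONE_DBPASS
```

Piping the output into the client, for example `render_db_sql keystone KEYSTONE_DBPASS | mysql -uroot -p`, would apply it in one step.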

2. Generate a random value to use as the administration token during initial configuration

[root@controller ~]# openssl rand -hex 10

b98e5dd20659d0f2ff64

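Install and configure the identity components

1. Install the packages

[root@controller ~]# yum -y install openstack-keystone python-keystoneclient

2. Edit /etc/keystone/keystone.conf and make the following changes:

a. In the [DEFAULT] section, define the initial administration token.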

[DEFAULT]

…

# the random value generated above

admin_token=b98e5dd20659d0f2ff64

b. In the [database] section, configure database access

[database]

…

connection=mysql://keystone:KEYSTONE_DBPASS@controller.nice.com/keystone

c. In the [token] section, configure the UUID token provider and the SQL driver

[token]

…

provider=keystone.token.providers.uuid.Provider

driver=keystone.token.persistence.backends.sql.Token

d. (Optional) Enable verbose logging to help with troubleshooting

[DEFAULT]

…

verbose=True
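Every service in this guide is configured by setting a few keys in specific sections of an INI file, so a small scripted editor can replace the manual vim steps. The following is a minimal sketch using awk; `set_ini_option` is a hypothetical helper and not a tool shipped with OpenStack, and the demo works on a scratch file rather than on /etc/keystone/keystone.conf.

```shell
#!/bin/sh
# Hypothetical helper: set KEY=VALUE inside [SECTION] of an INI file.
# An existing or commented-out KEY line in that section is replaced; otherwise
# the key is appended to the section, and the section is created if missing.
# Note: awk -v interprets backslash escapes, so avoid backslashes in VALUE.
set_ini_option() {
    file=$1 section=$2 key=$3 value=$4
    tmp=$(mktemp) || return 1
    awk -v section="$section" -v key="$key" -v value="$value" '
        function emit() { print key "=" value; written = 1 }
        /^\[.*\]$/ {
            if (in_section && !written) emit()   # leaving the target section
            in_section = ($0 == "[" section "]")
            if (in_section) seen = 1
            print
            next
        }
        in_section && !written && ($0 ~ ("^[# \t]*" key "[ \t]*=")) { emit(); next }
        { print }
        END {
            if (!written) {
                if (!seen) print "[" section "]"
                emit()
            }
        }
    ' "$file" > "$tmp" && cat "$tmp" > "$file"
    status=$?
    rm -f "$tmp"
    return $status
}

# Demo on a scratch file that imitates a stock keystone.conf.
conf=$(mktemp)
printf '[DEFAULT]\n#admin_token=<None>\n\n[database]\n' > "$conf"
set_ini_option "$conf" DEFAULT admin_token b98e5dd20659d0f2ff64
set_ini_option "$conf" database connection mysql://keystone:KEYSTONE_DBPASS@controller.nice.com/keystone
set_ini_option "$conf" token provider keystone.token.providers.uuid.Provider
cat "$conf"
rm -f "$conf"
```

Writing through a temporary file and copying the result back keeps the original file's ownership and permissions, which matters for files owned by the keystone user.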

3. Create generic certificates and keys, and restrict access to the related files

[root@controller ~]# keystone-manage pki_setup --keystone-user keystone --keystone-group keystone

[root@controller ~]# chown -R keystone:keystone /var/log/keystone

[root@controller ~]# chown -R keystone:keystone /etc/keystone/ssl

[root@controller ~]# chmod -R o-rwx /etc/keystone/ssl

4. Populate the keystone database

[root@controller ~]$ su -s /bin/sh -c "keystone-manage db_sync" keystone

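Finalize the installation

1. Start the identity service and enable it at boot

[root@controller ~]# systemctl start openstack-keystone.service && systemctl enable openstack-keystone.service

2. By default, the identity service stores expired tokens indefinitely, which can seriously degrade server performance when resources are limited. Use a scheduled task to flush expired tokens every hour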

[root@controller ~]$ (crontab -l -u keystone 2>&1 | grep -q token_flush) || echo '@hourly /usr/bin/keystone-manage token_flush > /var/log/keystone/keystone-tokenflush.log 2>&1' >> /var/spool/cron/keystone

Create tenants, users, and roles

Configure prerequisites

1. Set the administration token

[root@controller ~]# export OS_SERVICE_TOKEN=b98e5dd20659d0f2ff64 # the string generated earlier

2. Set the endpoint

[root@controller ~]# export OS_SERVICE_ENDPOINT=http://controller.nice.com:35357/v2.0

Create tenants, users, and roles

1. Create the administrative tenant, user, and role

`a. Create the admin tenant`

[root@controller ~]$ keystone tenant-create --name admin --description "Admin Tenant"

+-------------+----------------------------------+

| Property | Value |

+-------------+----------------------------------+

| description | Admin Tenant |

| enabled | True |

| id | e8b7d41aa54a4d4287c4706d90c8696c |

| name | admin |

+-------------+----------------------------------+

`b. Create the admin user`

[root@controller ~]$ keystone user-create --name admin --pass ADMIN_PASS --email EMAIL_ADDRESS

+----------+----------------------------------+

| Property | Value |

+----------+----------------------------------+

| email | EMAIL_ADDRESS |

| enabled | True |

| id | b8011e2729554a6f94d105a1228dcc85 |

| name | admin |

| username | admin |

+----------+----------------------------------+

`c. Create the admin role`

[root@controller ~]$ keystone role-create --name admin

+----------+----------------------------------+

| Property | Value |

+----------+----------------------------------+

| id | b9b8626ae51e4688926a82a9df484dba |

| name | admin |

+----------+----------------------------------+

`d. Add the admin tenant and user to the admin role`

[root@controller ~]$ keystone user-role-add --tenant admin --user admin --role admin

`e. Create the "_member_" role used for dashboard access`

[root@controller ~]$ keystone role-create --name _member_

+----------+----------------------------------+

| Property | Value |

+----------+----------------------------------+

| id | 8d8e5ad9951043c5a357d49533aa0dcc |

| name | _member_ |

+----------+----------------------------------+

`f. Add the admin tenant and user to the _member_ role`

[root@controller ~]$ keystone user-role-add --tenant admin --user admin --role _member_

2. Create a demo tenant and user for demonstration purposes

`a. Create the demo tenant`

[root@controller ~]$ keystone tenant-create --name demo --description "Demo Tenant"

+-------------+----------------------------------+

| Property | Value |

+-------------+----------------------------------+

| description | Demo Tenant |

| enabled | True |

| id | 87f80a3b9d524ccc9930773a364d2358 |

| name | demo |

+-------------+----------------------------------+

`b. Create the demo user`

[root@controller ~]$ keystone user-create --name demo --pass DEMO_PASS --email EMAIL_ADDRESS

+----------+----------------------------------+

| Property | Value |

+----------+----------------------------------+

| email | EMAIL_ADDRESS |

| enabled | True |

| id | 7421850fb60d46e6965406baa336a3f1 |

| name | demo |

| username | demo |

+----------+----------------------------------+

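`c. Add the demo tenant and user to the _member_ role`

[root@controller ~]$ keystone user-role-add --tenant demo --user demo --role _member_

3. OpenStack services also need a tenant, user, and role to interact with other services. We therefore create a service tenant; every OpenStack service is associated with it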

[root@controller ~]$ keystone tenant-create --name service --description "Service Tenant"

+-------------+----------------------------------+

| Property | Value |

+-------------+----------------------------------+

| description | Service Tenant |

| enabled | True |

| id | 20c39c5e876649bf82bd6faccef60ba9 |

| name | service |

+-------------+----------------------------------+

Create the service entity and API endpoint

1. In an OpenStack environment, the identity service manages a catalog of services and uses it to locate the other services in the environment.

Create a service entity for the identity service

[root@controller ~]$ keystone service-create --name keystone --type identity --description "OpenStack Identity"

+-------------+----------------------------------+

| Property | Value |

+-------------+----------------------------------+

| description | OpenStack Identity |

| enabled | True |

| id | b2398f0f1d2341b4b251a65a3e9bf6c2 |

| name | keystone |

| type | identity |

+-------------+----------------------------------+

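2. In an OpenStack environment, the identity service also manages a catalog of the API endpoints associated with each service. Services use this catalog to communicate with one another.

OpenStack provides three API endpoint variants for each service: admin, internal, and public

Create the API endpoint for the identity service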

[root@controller ~]$ keystone endpoint-create \

--service-id $(keystone service-list | awk '/ identity / {print $2}') \

--publicurl http://controller.nice.com:5000/v2.0 \

--internalurl http://controller.nice.com:5000/v2.0 \

--adminurl http://controller.nice.com:35357/v2.0 \

--region regionOne

+-------------+---------------------------------------+

| Property | Value |

+-------------+---------------------------------------+

| adminurl | http://controller.nice.com:35357/v2.0 |

| id | b180f06c7cb94124bf0d690652e5488a |

| internalurl | http://controller.nice.com:5000/v2.0 |

| publicurl | http://controller.nice.com:5000/v2.0 |

| region | regionOne |

| service_id | b2398f0f1d2341b4b251a65a3e9bf6c2 |

+-------------+---------------------------------------+
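The `--service-id` argument above relies on awk to pull the ID out of the `keystone service-list` table: the pattern selects the row whose type column matches, and `$2` is the ID because `$1` is the leading `|`. A small sketch of that extraction, run against a sample row instead of a live service (`service_id` is a hypothetical wrapper name):

```shell
#!/bin/sh
# Hypothetical wrapper: read a keystone table on stdin and print the ID of the
# row that contains the given service type surrounded by spaces.
service_id() {
    awk -v type="$1" '$0 ~ (" " type " ") { print $2 }'
}

# Sample rows shaped like the output of "keystone service-list".
sample='|                id                |   name   |   type   |    description     |
| b2398f0f1d2341b4b251a65a3e9bf6c2 | keystone | identity | OpenStack Identity |'

printf '%s\n' "$sample" | service_id identity
# prints: b2398f0f1d2341b4b251a65a3e9bf6c2
```

On the controller the live equivalent would be `keystone service-list | service_id identity`.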

Verify operation

1. Unset the temporary OS_SERVICE_TOKEN and OS_SERVICE_ENDPOINT variables

[root@controller ~]# unset OS_SERVICE_TOKEN OS_SERVICE_ENDPOINT

2. Request an authentication token as the admin tenant and user

[root@controller ~]$ keystone --os-tenant-name admin --os-username admin --os-password ADMIN_PASS --os-auth-url http://controller.nice.com:35357/v2.0 token-get

+-----------+----------------------------------+

| Property | Value |

+-----------+----------------------------------+

| expires | 2021-03-30T12:25:44Z |

| id | 18aca812b5ff439a8332deab8bb8c414 |

| tenant_id | e8b7d41aa54a4d4287c4706d90c8696c |

| user_id | b8011e2729554a6f94d105a1228dcc85 |

+-----------+----------------------------------+

3. List tenants as the admin tenant and user

[root@controller ~]$ keystone --os-tenant-name admin --os-username admin --os-password ADMIN_PASS --os-auth-url http://controller.nice.com:35357/v2.0 tenant-list

+----------------------------------+---------+---------+

| id | name | enabled |

+----------------------------------+---------+---------+

| e8b7d41aa54a4d4287c4706d90c8696c | admin | True |

| 87f80a3b9d524ccc9930773a364d2358 | demo | True |

| 20c39c5e876649bf82bd6faccef60ba9 | service | True |

+----------------------------------+---------+---------+

4. List users as the admin tenant and user

[root@controller ~]$ keystone --os-tenant-name admin --os-username admin --os-password ADMIN_PASS --os-auth-url http://controller.nice.com:35357/v2.0 user-list

+----------------------------------+-------+---------+---------------+

| id | name | enabled | email |

+----------------------------------+-------+---------+---------------+

| b8011e2729554a6f94d105a1228dcc85 | admin | True | EMAIL_ADDRESS |

| 7421850fb60d46e6965406baa336a3f1 | demo | True | EMAIL_ADDRESS |

+----------------------------------+-------+---------+---------------+

5. List roles as the admin tenant and user

[root@controller ~]$ keystone --os-tenant-name admin --os-username admin --os-password ADMIN_PASS --os-auth-url http://controller.nice.com:35357/v2.0 role-list

+----------------------------------+----------+

| id | name |

+----------------------------------+----------+

| 8d8e5ad9951043c5a357d49533aa0dcc | _member_ |

| b9b8626ae51e4688926a82a9df484dba | admin |

+----------------------------------+----------+

6. Request an authentication token as the demo tenant and user

[root@controller ~]$ keystone --os-tenant-name demo --os-username demo --os-password DEMO_PASS --os-auth-url http://controller.nice.com:35357/v2.0 token-get

+-----------+----------------------------------+

| Property | Value |

+-----------+----------------------------------+

| expires | 2021-03-30T12:37:57Z |

| id | 11e8c7c3447a498e9110f6e6892e89a9 |

| tenant_id | 87f80a3b9d524ccc9930773a364d2358 |

| user_id | 7421850fb60d46e6965406baa336a3f1 |

+-----------+----------------------------------+

7. List users as the demo tenant and user; the request is rejected because demo does not have the admin role

[root@controller ~]$ keystone --os-tenant-name demo --os-username demo --os-password DEMO_PASS --os-auth-url http://controller.nice.com:35357/v2.0 user-list

You are not authorized to perform the requested action: admin_required (HTTP 403)

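Create OpenStack client environment scripts

To avoid retyping the environment variables and command options used above, we create environment scripts for the admin and demo tenants and users.

1. Create admin.sh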

export OS_TENANT_NAME=admin

export OS_USERNAME=admin

export OS_PASSWORD=ADMIN_PASS

export OS_AUTH_URL=http://controller.nice.com:35357/v2.0

2. Create demo.sh

export OS_TENANT_NAME=demo

export OS_USERNAME=demo

export OS_PASSWORD=DEMO_PASS

export OS_AUTH_URL=http://controller.nice.com:5000/v2.0

Load a client environment script

source admin.sh
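A common failure in the later steps is running a `keystone`, `glance`, or `nova` command in a shell where the environment script was never sourced. The sketch below checks for the four variables first; `require_os_env` is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: succeed only if all four client variables are set and non-empty.
require_os_env() {
    missing=0
    for var in OS_TENANT_NAME OS_USERNAME OS_PASSWORD OS_AUTH_URL; do
        eval "value=\${$var:-}"          # indirect lookup of the variable named in $var
        if [ -z "$value" ]; then
            echo "missing: $var" >&2
            missing=1
        fi
    done
    return $missing
}

if require_os_env; then
    echo "client environment looks complete"
else
    echo "run 'source admin.sh' (or demo.sh) first"
fi
```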

OpenStack Image(glance)

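Install and configure the OpenStack image service on the controller node

•Configure prerequisites

•Install and configure the image service components

•Finalize the installation

Configure prerequisites

1. Create the database

a. Log in to the database as the administrator root

mysql -u root -p

b. Create the glance database

CREATE DATABASE glance;

c. Create the glance database user and grant it full privileges on the glance database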

GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'localhost' IDENTIFIED BY 'GLANCE_DBPASS';

GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'%' IDENTIFIED BY 'GLANCE_DBPASS';

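d. Exit the database session

exit

2. Source the admin environment script

source admin.sh

3. Create the identity service credentials by completing the following steps:

a. Create the glance user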

[root@controller ~]$ keystone user-create --name glance --pass GLANCE_PASS

+----------+----------------------------------+

| Property | Value |

+----------+----------------------------------+

| email | |

| enabled | True |

| id | 9666a04863eb42eba6b879904dcda33a |

| name | glance |

| username | glance |

+----------+----------------------------------+

b. Link the glance user to the service tenant and the admin role

keystone user-role-add --user glance --tenant service --role admin

c. Create the glance service

[root@controller ~]$ keystone service-create --name glance --type image --description "OpenStack Image Service"

+-------------+----------------------------------+

| Property | Value |

+-------------+----------------------------------+

| description | OpenStack Image Service |

| enabled | True |

| id | 9f04e56ad5ef4efc9604fe108c032a84 |

| name | glance |

| type | image |

+-------------+----------------------------------+

4. Create the identity service endpoint for the OpenStack image service

[root@controller ~]$ keystone endpoint-create \

--service-id $(keystone service-list | awk '/ image / {print $2}') \

--publicurl http://controller.nice.com:9292 \

--internalurl http://controller.nice.com:9292 \

--adminurl http://controller.nice.com:9292 \

--region regionOne

+-------------+----------------------------------+

| Property | Value |

+-------------+----------------------------------+

| adminurl | http://controller.nice.com:9292 |

| id | 4f97020c55144a22b014b933dd71c191 |

| internalurl | http://controller.nice.com:9292 |

| publicurl | http://controller.nice.com:9292 |

| region | regionOne |

| service_id | 9f04e56ad5ef4efc9604fe108c032a84 |

+-------------+----------------------------------+

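Install and configure the image service components

1. Install the packages

yum -y install openstack-glance python-glanceclient

2. Edit /etc/glance/glance-api.conf and complete the following steps

a. In the [database] section, configure the database connection: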

[database]

…

connection=mysql://glance:GLANCE_DBPASS@controller.nice.com/glance

b. In the [keystone_authtoken] and [paste_deploy] sections, configure identity service access:

[keystone_authtoken]

…

auth_uri=http://controller.nice.com:5000/v2.0

identity_uri=http://controller.nice.com:35357

admin_tenant_name=service

admin_user=glance

admin_password=GLANCE_PASS

[paste_deploy]

…

flavor=keystone

c. (Optional) Enable verbose logging in the [DEFAULT] section to help with troubleshooting.

[DEFAULT]

…

verbose=True

3. Edit /etc/glance/glance-registry.conf and complete the following configuration:

a. In the [database] section, configure the database connection:

[database]

…

connection=mysql://glance:GLANCE_DBPASS@controller.nice.com/glance

b. In the [keystone_authtoken] and [paste_deploy] sections, configure identity service access

[keystone_authtoken]

…

auth_uri=http://controller.nice.com:5000/v2.0

identity_uri=http://controller.nice.com:35357

admin_tenant_name=service

admin_user=glance

admin_password=GLANCE_PASS

[paste_deploy]

…

flavor=keystone

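c. Append a [glance_store] section that selects the local file system store and sets the directory where image files are kept (these options are read by glance-api, so the section belongs in /etc/glance/glance-api.conf)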

[glance_store]

default_store=file

filesystem_store_datadir=/var/lib/glance/images/

d. (Optional) Enable verbose logging in the [DEFAULT] section to help with troubleshooting.

[DEFAULT]

…

verbose=True

4. Populate the image service database

su -s /bin/sh -c "glance-manage db_sync" glance

Finalize the installation

Start the image services and enable them at boot:

systemctl start openstack-glance-api.service openstack-glance-registry.service

systemctl enable openstack-glance-api.service openstack-glance-registry.service

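Verify the installation

This section shows how to verify the image service installation with CirrOS, a small Linux image that is convenient for testing.

1. Download the CirrOS image file

wget http://cdn.download.cirros-cloud.net/0.3.3/cirros-0.3.3-x86_64-disk.img (real environment)

wget ftp://USER:PASSWORD@FTP_SERVER_IP/cirros-0.3.3-x86_64-disk.img (lab environment) ` # the file can also be uploaded directly`

Download example:

wget ftp://a:a@192.168.222.240/cirros-0.3.3-x86_64-disk.img

2. Source the admin environment script so that administrative commands can be run.

source admin.sh

3. Upload the image file to the image service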

glance image-create --name "cirros-0.3.3-x86_64" --file cirros-0.3.3-x86_64-disk.img --disk-format qcow2 --container-format bare --is-public True --progress

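Meaning of the glance image-create options:

--name <NAME> Name of the image.

--file <FILE> Path of the file to upload.

--disk-format <DISK_FORMAT> Disk format of the image. Supported values: ami, ari, aki, vhd, vmdk, raw, qcow2, vdi, iso.

--container-format <CONTAINER_FORMAT> Container format of the image. Supported values: ami, ari, aki, bare, ovf.

--is-public {True,False} Whether the image is publicly accessible.

--progress Show upload progress.

4. Confirm that the image was uploaded and check its attributes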

glance image-list

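5. Delete the local image file

rm -r cirros-0.3.3-x86_64-disk.img

Compute (nova) configuration

Install and configure the controller node

•Configure prerequisites

•Install and configure the compute controller components

•Finalize the installation

Configure prerequisites

1. Create the database by completing the following steps:

a. Log in to the database as the administrator root

mysql -u root -p

b. Create the nova database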

CREATE DATABASE nova;

c. Create the nova database user and grant it full control of the nova database.

GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'localhost' IDENTIFIED BY 'NOVA_DBPASS';

GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'%' IDENTIFIED BY 'NOVA_DBPASS';

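d. Exit the database session

exit

2. Source the admin environment script

source admin.sh

3. Create the compute service credentials in the identity service by completing the following steps:

a. Create the nova user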

[root@controller ~]$ keystone user-create --name nova --pass NOVA_PASS

+----------+----------------------------------+

| Property | Value |

+----------+----------------------------------+

| email | |

| enabled | True |

| id | 4d7b81ade9f64a4a814d01dcc7b92a63 |

| name | nova |

| username | nova |

+----------+----------------------------------+

b. Link the nova user to the service tenant and the admin role

keystone user-role-add --user nova --tenant service --role admin

c. Create the nova service

[root@controller ~]$ keystone service-create --name nova --type compute --description "OpenStack Compute"

+-------------+----------------------------------+

| Property | Value |

+-------------+----------------------------------+

| description | OpenStack Compute |

| enabled | True |

| id | 3c1fa52fff6f4117be55e6a46043c848 |

| name | nova |

| type | compute |

+-------------+----------------------------------+

4. Create the compute service endpoint

keystone endpoint-create \

--service-id $(keystone service-list | awk '/ compute / {print $2}') \

--publicurl http://controller.nice.com:8774/v2/%\(tenant_id\)s \

--internalurl http://controller.nice.com:8774/v2/%\(tenant_id\)s \

--adminurl http://controller.nice.com:8774/v2/%\(tenant_id\)s \

--region regionOne

+-------------+--------------------------------------------------+

| Property | Value |

+-------------+--------------------------------------------------+

| adminurl | http://controller.nice.com:8774/v2/%(tenant_id)s |

| id | 0b08755d4eeb4d99af4bdeed432e097b |

| internalurl | http://controller.nice.com:8774/v2/%(tenant_id)s |

| publicurl | http://controller.nice.com:8774/v2/%(tenant_id)s |

| region | regionOne |

| service_id | 3c1fa52fff6f4117be55e6a46043c848 |

+-------------+--------------------------------------------------+

Install and configure the compute controller components on controller

1. Install the packages

[root@controller ~]# yum -y install openstack-nova-api openstack-nova-cert openstack-nova-conductor openstack-nova-console openstack-nova-novncproxy openstack-nova-scheduler python-novaclient # seven packages

2. Edit /etc/nova/nova.conf and complete the following steps:

a. Append a [database] section and configure database access:

[database]

connection=mysql://nova:NOVA_DBPASS@controller.nice.com/nova

b. In the [DEFAULT] section, configure RabbitMQ message queue access:

[DEFAULT]

…

rabbit_host=controller.nice.com

rabbit_password=guest

rpc_backend=rabbit

c. In the [DEFAULT] and [keystone_authtoken] sections, configure identity service access

[DEFAULT]

…

auth_strategy=keystone

[keystone_authtoken]

…

auth_uri=http://controller.nice.com:5000/v2.0

identity_uri=http://controller.nice.com:35357

admin_tenant_name=service

admin_user=nova

admin_password=NOVA_PASS

d. In the [DEFAULT] section, set my_ip to the IP address of the controller node's management interface:

[DEFAULT]

…

my_ip=192.168.222.5

e. In the [DEFAULT] section, configure the VNC proxy to use the IP address of the controller node's management interface:

[DEFAULT]

…

vncserver_listen=192.168.222.5

vncserver_proxyclient_address=192.168.222.5

f. In the [glance] section, configure the location of the image service:

[glance]

…

host=controller.nice.com

g. (Optional) Enable verbose logging in the [DEFAULT] section to help with troubleshooting.

[DEFAULT]

…

verbose=True

3. Populate the compute database

su -s /bin/sh -c "nova-manage db sync" nova

Finalize the installation

Start the compute services and enable them at boot:

[root@controller ~]# systemctl start openstack-nova-api.service openstack-nova-cert.service openstack-nova-consoleauth.service openstack-nova-scheduler.service openstack-nova-conductor.service openstack-nova-novncproxy.service

# six services

[root@controller ~]# systemctl enable openstack-nova-api.service openstack-nova-cert.service openstack-nova-consoleauth.service openstack-nova-scheduler.service openstack-nova-conductor.service openstack-nova-novncproxy.service

Check that the services are running normally

[root@controller ~]$ nova service-list

+----+------------------+---------------------+----------+---------+-------+----------------------------+-----------------+

| Id | Binary | Host | Zone | Status | State | Updated_at | Disabled Reason |

+----+------------------+---------------------+----------+---------+-------+----------------------------+-----------------+

| 1 | nova-consoleauth | controller.nice.com | internal | enabled | up | 2021-04-06T09:34:13.000000 | - |

| 2 | nova-conductor | controller.nice.com | internal | enabled | up | 2021-04-06T09:34:13.000000 | - |

| 4 | nova-scheduler | controller.nice.com | internal | enabled | up | 2021-04-06T09:34:13.000000 | - |

| 5 | nova-cert | controller.nice.com | internal | enabled | up | 2021-04-06T09:34:13.000000 | - |

+----+------------------+---------------------+----------+---------+-------+----------------------------+-----------------+

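Install and configure a compute node

•Install and configure the compute hypervisor components

•Finalize the installation

•Verify the installation from controller

Install and configure the compute hypervisor components

1. Install the packages

[root@compute ~]# yum -y install openstack-nova-compute sysfsutils

2. Edit /etc/nova/nova.conf and complete the following steps:

a. In the [DEFAULT] section, configure RabbitMQ message queue access: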

[DEFAULT]

…

rabbit_host=controller.nice.com

rabbit_password=guest

rpc_backend=rabbit

b. In the [DEFAULT] and [keystone_authtoken] sections, configure identity service access:

[DEFAULT]

…

auth_strategy=keystone

[keystone_authtoken]

…

auth_uri=http://controller.nice.com:5000/v2.0

identity_uri=http://controller.nice.com:35357

admin_tenant_name=service

admin_user=nova

admin_password=NOVA_PASS

c. In the [DEFAULT] section, set the my_ip option:

[DEFAULT]

…

# IP address of the management interface

my_ip=192.168.222.10

d. In the [DEFAULT] section, enable and configure remote console access

[DEFAULT]

…

vnc_enabled=True

vncserver_listen=0.0.0.0

# IP address of the management interface

vncserver_proxyclient_address=192.168.222.10

novncproxy_base_url=http://controller.nice.com:6080/vnc_auto.html

e. In the [glance] section, configure the location of the image service

[glance]

…

host=controller.nice.com

f. (Optional) Enable verbose logging in the [DEFAULT] section to help with troubleshooting.

[DEFAULT]

…

verbose=True

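Finalize the installation

1. Check whether your compute node supports hardware virtualization

egrep -c '(vmx|svm)' /proc/cpuinfo

If the command returns 1 or more, the compute node supports hardware virtualization and needs no extra configuration.

If it returns 0, the compute node does not support hardware virtualization, and you must configure libvirt to use QEMU instead of KVM.

Edit the [libvirt] section of /etc/nova/nova.conf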

[libvirt]

…

virt_type=qemu
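The check and the resulting choice can be combined into one step. The sketch below counts the vmx/svm flags and prints the value that would go into `virt_type`; `choose_virt_type` is a hypothetical helper, and treating "kvm" as the value for the accelerated case is an assumption based on the text above saying KVM is used when no change is made.

```shell
#!/bin/sh
# Hypothetical helper: print "kvm" if the CPU flags include vmx or svm, else "qemu".
# An alternative cpuinfo path can be passed as the first argument.
choose_virt_type() {
    cpuinfo=${1:-/proc/cpuinfo}
    # grep -c prints 0 and exits non-zero when nothing matches, so ignore the status
    count=$(grep -Ec '(vmx|svm)' "$cpuinfo" 2>/dev/null) || true
    if [ "${count:-0}" -ge 1 ]; then
        echo kvm
    else
        echo qemu
    fi
}

echo "virt_type=$(choose_virt_type)"
```

Inside a nested VMware guest the result depends on whether virtualization passthrough is enabled for the VM, so the output may differ between the lab and real hardware.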

2. Start the compute service and its dependencies, and enable them at boot.

systemctl start libvirtd.service

systemctl start openstack-nova-compute.service

systemctl enable libvirtd.service openstack-nova-compute.service

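Verify the installation on the controller node

1. Source the admin environment script

[root@controller ~]# source admin.sh

2. List the service components to confirm that every process started successfully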

[root@controller ~]$ nova service-list

+----+------------------+---------------------+----------+---------+-------+----------------------------+-----------------+

| Id | Binary | Host | Zone | Status | State | Updated_at | Disabled Reason |

+----+------------------+---------------------+----------+---------+-------+----------------------------+-----------------+

| 1 | nova-cert | controller.nice.com | internal | enabled | up | 2021-04-01T15:15:36.000000 | - |

| 2 | nova-consoleauth | controller.nice.com | internal | enabled | up | 2021-04-01T15:15:36.000000 | - |

| 3 | nova-scheduler | controller.nice.com | internal | enabled | up | 2021-04-01T15:15:36.000000 | - |

| 4 | nova-conductor | controller.nice.com | internal | enabled | up | 2021-04-01T15:15:45.000000 | - |

| 5 | nova-compute | compute.nice.com | nova | enabled | up | 2021-04-01T15:15:39.000000 | - |

+----+------------------+---------------------+----------+---------+-------+----------------------------+-----------------+
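Reading the State column by eye gets tedious once more nodes are added. The sketch below scans a `nova service-list` table on stdin and reports every service whose state is not "up"; `down_services` is a hypothetical helper, and the sample rows are shaped like the output above with one state changed for illustration.

```shell
#!/bin/sh
# Hypothetical helper: print "binary@host" for each row whose State column is not "up".
# Exits non-zero if at least one such row was found.
down_services() {
    awk -F'|' '
        function trim(s) { gsub(/^[ \t]+|[ \t]+$/, "", s); return s }
        /^\|/ {
            if (trim($2) == "Id") next          # skip the header row
            if (trim($7) != "up") { print trim($3) "@" trim($4); bad = 1 }
        }
        END { exit bad }
    '
}

# Illustrative input; on the controller use: nova service-list | down_services
sample='| Id | Binary | Host | Zone | Status | State | Updated_at | Disabled Reason |
| 1 | nova-cert | controller.nice.com | internal | enabled | up | 2021-04-01T15:15:36.000000 | - |
| 5 | nova-compute | compute.nice.com | nova | enabled | down | 2021-04-01T15:15:39.000000 | - |'

if printf '%s\n' "$sample" | down_services; then
    echo "all services are up"
else
    echo "the services listed above are not up"
fi
```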

3. List the images in the image service to confirm connectivity with the identity service and the image service

[root@controller ~]$ nova image-list

+--------------------------------------+---------------------+--------+--------+

| ID | Name | Status | Server |

+--------------------------------------+---------------------+--------+--------+

| 105ce1dd-3e20-4d3d-9c02-3419033e0acf | cirros-0.3.3-x86_64 | ACTIVE | |

+--------------------------------------+---------------------+--------+--------+

OpenStack Networking(neutron)

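Install and configure the controller node

•Configure prerequisites

•Install the networking components

•Configure the networking server component

•Configure the Modular Layer 2 (ML2) plug-in

•Configure compute to use Neutron

•Finalize the installation

•Verify

Configure prerequisites

1. Create the database by completing the following steps:

a. Connect to the MySQL database as root

mysql -u root -p

b. Create the neutron database

CREATE DATABASE neutron;

c. Create the neutron database user and grant it full control of the neutron database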

GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'localhost' IDENTIFIED BY 'NEUTRON_DBPASS';

GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'%' IDENTIFIED BY 'NEUTRON_DBPASS';

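d. Exit the database session

exit

2. Source the admin environment script

source admin.sh

3. Create the networking service credentials in the identity service by completing the following steps:

a. Create the neutron user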

[root@controller ~]$ keystone user-create --name neutron --pass NEUTRON_PASS

+----------+----------------------------------+

| Property | Value |

+----------+----------------------------------+

| email | |

| enabled | True |

| id | c3c0b0037e524324849d4d3921c3ac2b |

| name | neutron |

| username | neutron |

+----------+----------------------------------+

b. Link the neutron user to the service tenant and the admin role

keystone user-role-add --user neutron --tenant service --role admin

c. Create the neutron service

[root@controller ~]$ keystone service-create --name neutron --type network --description "OpenStack Networking"

+-------------+----------------------------------+

| Property | Value |

+-------------+----------------------------------+

| description | OpenStack Networking |

| enabled | True |

| id | f4068d5fe464457f8d6b12cca7581995 |

| name | neutron |

| type | network |

+-------------+----------------------------------+

d. Create the neutron service endpoint

[root@controller ~]$ keystone endpoint-create \

--service-id $(keystone service-list | awk '/ network / {print $2}') \

--publicurl http://controller.nice.com:9696 \

--adminurl http://controller.nice.com:9696 \

--internalurl http://controller.nice.com:9696 \

--region regionOne

+-------------+----------------------------------+

| Property | Value |

+-------------+----------------------------------+

| adminurl | http://controller.nice.com:9696 |

| id | f2c7049c1e374e91a5072554c292dd6e |

| internalurl | http://controller.nice.com:9696 |

| publicurl | http://controller.nice.com:9696 |

| region | regionOne |

| service_id | f4068d5fe464457f8d6b12cca7581995 |

+-------------+----------------------------------+

Install the networking components

yum -y install openstack-neutron openstack-neutron-ml2 python-neutronclient which

Configure the networking server component

Edit /etc/neutron/neutron.conf and complete the following steps:

a. In the [database] section, configure database access

[database]

…

connection=mysql://neutron:NEUTRON_DBPASS@controller.nice.com/neutron

b. In the [DEFAULT] section, configure RabbitMQ message queue access:

[DEFAULT]

…

rpc_backend=rabbit

rabbit_host=controller.nice.com

rabbit_password=guest

c. In the [DEFAULT] and [keystone_authtoken] sections, configure identity service access:

[DEFAULT]

…

auth_strategy=keystone

[keystone_authtoken]

…

auth_uri=http://controller.nice.com:5000/v2.0

identity_uri=http://controller.nice.com:35357

admin_tenant_name=service

admin_user=neutron

admin_password=NEUTRON_PASS

d. In the [DEFAULT] section, enable the Modular Layer 2 (ML2) plug-in, the router service, and overlapping IP addresses:

[DEFAULT]

…

core_plugin=ml2

service_plugins=router

allow_overlapping_ips=True

e. In the [DEFAULT] section, configure networking to notify compute when the network topology changes:

[DEFAULT]

…

notify_nova_on_port_status_changes=True

notify_nova_on_port_data_changes=True

nova_url=http://controller.nice.com:8774/v2

nova_admin_auth_url=http://controller.nice.com:35357/v2.0

nova_region_name=regionOne

nova_admin_username=nova

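# replace SERVICE_TENANT_ID with the ID of the service tenant; see the note below

nova_admin_tenant_id=SERVICE_TENANT_ID

nova_admin_password=NOVA_PASS

Note: run keystone tenant-get service to obtain the ID of the service tenant.

Example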

[root@controller ~]$ source admin.sh

[root@controller ~]$ keystone tenant-get service

+-------------+----------------------------------+

| Property | Value |

+-------------+----------------------------------+

| description | Service Tenant |

| enabled | True |

| id | 20c39c5e876649bf82bd6faccef60ba9 |

| name | service |

+-------------+----------------------------------+
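Instead of copying the ID by hand, it can be read from the table with awk: in the `id` row, `$2` is the property name and `$4` is the value. A minimal sketch, run here on a sample row (`tenant_id` is a hypothetical wrapper name):

```shell
#!/bin/sh
# Hypothetical wrapper: read "keystone tenant-get" output on stdin and print the id value.
tenant_id() {
    awk '$2 == "id" { print $4 }'
}

# Sample rows shaped like the table above.
sample='|   Property  |              Value               |
|     name    |             service              |
|      id     | 20c39c5e876649bf82bd6faccef60ba9 |'

printf '%s\n' "$sample" | tenant_id
# prints: 20c39c5e876649bf82bd6faccef60ba9
```

On the controller, `keystone tenant-get service | tenant_id` would yield the value to put in nova_admin_tenant_id.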

f. (Optional) Enable verbose logging in the [DEFAULT] section to help with troubleshooting.

[DEFAULT]

…

verbose=True

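Configure the Modular Layer 2 (ML2) plug-in

Edit /etc/neutron/plugins/ml2/ml2_conf.ini and complete the following steps:

a. In the [ml2] section, enable the flat and generic routing encapsulation (GRE) network type drivers, GRE tenant networks, and the OVS mechanism driver.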

[ml2]

…

type_drivers=flat,gre

tenant_network_types=gre

mechanism_drivers=openvswitch

b. In the [ml2_type_gre] section, configure the tunnel identifier (ID) range:

[ml2_type_gre]

…

tunnel_id_ranges=1:1000

c. In the [securitygroup] section, enable security groups, enable ipset, and configure the OVS iptables firewall driver:

[securitygroup]

…

enable_security_group=True

enable_ipset=True

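# add this line

firewall_driver=neutron.agent.linux.iptables_firewall.OVSHybridIptablesFirewallDriver

Configure compute to use Neutron

By default, the compute service uses legacy networking, so it must be reconfigured.

Edit /etc/nova/nova.conf and complete the following steps:

a. In the [DEFAULT] section, configure the APIs and drivers: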

[DEFAULT]

...

network_api_class=nova.network.neutronv2.api.API

security_group_api=neutron

linuxnet_interface_driver=nova.network.linux_net.LinuxOVSInterfaceDriver

firewall_driver=nova.virt.firewall.NoopFirewallDriver

b.编辑[neutron]小节,配置访问参数:

[neutron]

...

# line 3148
url=http://controller.nice.com:9696

# line 3198
auth_strategy=keystone

# line 3189
admin_auth_url=http://controller.nice.com:35357/v2.0

# line 3179
admin_tenant_name=service

# line 3162
admin_username=neutron

# line 3167 (the Keystone password of the neutron user, not the database password)
admin_password=NEUTRON_PASS
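A note that follows a value on the same line is risky in these INI files: as far as I know, the configuration parser used by the OpenStack services does not strip a trailing # remark, so it may end up as part of the option value. The following sketch is one way to spot such lines. It runs against a throwaway sample file, and the file contents are made up for illustration.

```shell
# Build a small sample file; on a real node you would point this at nova.conf.
conf=$(mktemp)
cat > "$conf" <<'EOF'
[neutron]
url=http://controller.nice.com:9696# line 3148
auth_strategy=keystone
# a full-line comment is fine
admin_tenant_name=service
EOF

# Keep lines where a '#' follows an '=', then drop lines that are
# full-line comments (grep -n prefixes each hit with "LINENO:").
suspect=$(grep -n '=.*#' "$conf" | grep -v '^[0-9]*:[[:space:]]*#')
echo "$suspect"

rm -f "$conf"
```

Any line the check prints is worth rewriting so that the remark sits on its own line above the option.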

Finalize the configuration

1. Create a symbolic link to the ML2 plug-in configuration file:

[root@controller ~]$ ln -s /etc/neutron/plugins/ml2/ml2_conf.ini /etc/neutron/plugin.ini

2. Populate the database:

[root@controller ~]$ su -s /bin/sh -c "neutron-db-manage --config-file /etc/neutron/neutron.conf --config-file /etc/neutron/plugins/ml2/ml2_conf.ini upgrade juno" neutron

INFO [alembic.migration] Context impl MySQLImpl.

………………

INFO [alembic.migration] Running upgrade 544673ac99ab -> juno, juno

3. Restart the Compute services:

[root@controller ~]$ systemctl restart openstack-nova-api.service openstack-nova-scheduler.service openstack-nova-conductor.service

4. Start the Networking service and configure it to start at boot:

systemctl enable neutron-server.service

systemctl start neutron-server.service

Verification

1. Source the admin credentials script:

[root@controller ~]# source admin.sh

2. List the loaded extensions to confirm that the neutron-server process started successfully:

[root@controller ~]$ neutron ext-list

+-----------------------+-----------------------------------------------+

| alias | name |

+-----------------------+-----------------------------------------------+

| security-group | security-group |

| l3_agent_scheduler | L3 Agent Scheduler |

| ext-gw-mode | Neutron L3 Configurable external gateway mode |

| binding | Port Binding |

| provider | Provider Network |

| agent | agent |

| quotas | Quota management support |

| dhcp_agent_scheduler | DHCP Agent Scheduler |

| l3-ha | HA Router extension |

| multi-provider | Multi Provider Network |

| external-net | Neutron external network |

| router | Neutron L3 Router |

| allowed-address-pairs | Allowed Address Pairs |

| extraroute | Neutron Extra Route |

| extra_dhcp_opt | Neutron Extra DHCP opts |

| dvr | Distributed Virtual Router |

+-----------------------+-----------------------------------------------+

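Install and configure the network node

Configure prerequisites

Install the Networking components

Configure the Networking common components

Configure the Modular Layer 2 (ML2) plug-in

Configure the Layer-3 (L3) agent

Configure the DHCP agent

Configure the metadata agent

Configure the Open vSwitch (OVS) service

Finalize the installation

Verification

Configure prerequisites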

[root@network ~]# vim /etc/sysctl.conf

net.ipv4.ip_forward=1

net.ipv4.conf.all.rp_filter=0

net.ipv4.conf.default.rp_filter=0

[root@network ~]# sysctl -p

Install the Networking components

[root@network ~]# yum -y install openstack-neutron openstack-neutron-ml2 openstack-neutron-openvswitch

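Configure the Networking common components

The Networking common component configuration covers the authentication mechanism, the message queue, and the plug-in.

Edit /etc/neutron/neutron.conf (vim /etc/neutron/neutron.conf) and complete the following steps:

a. In the [database] section, comment out any connection options, because the network node does not access the database directly.

b. Edit the [DEFAULT] section and configure RabbitMQ message queue access:

[DEFAULT]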

…

rpc_backend=rabbit

rabbit_host=controller.nice.com

rabbit_password=guest

c. Edit the [DEFAULT] and [keystone_authtoken] sections and configure Identity service access:

[DEFAULT]

…

auth_strategy= keystone

[keystone_authtoken]

…

auth_uri=http://controller.nice.com:5000/v2.0

identity_uri=http://controller.nice.com:35357

admin_tenant_name=service

admin_user=neutron

admin_password=NEUTRON_PASS

d. Edit the [DEFAULT] section to enable the Modular Layer 2 (ML2) plug-in, the router service, and overlapping IP addresses:

[DEFAULT]

…

core_plugin= ml2

service_plugins= router

allow_overlapping_ips=True

e. (Optional) Enable verbose logging in the [DEFAULT] section to simplify troubleshooting:

[DEFAULT]

…

verbose =True

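Configure the Modular Layer 2 (ML2) plug-in

The ML2 plug-in uses the Open vSwitch (OVS) mechanism to build the virtual networking framework for instances.

Edit /etc/neutron/plugins/ml2/ml2_conf.ini (vim /etc/neutron/plugins/ml2/ml2_conf.ini) and complete the following steps:

a. Edit the [ml2] section to enable the flat and generic routing encapsulation (GRE) network type drivers, GRE tenant networks, and the OVS mechanism driver: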

[ml2]

…

type_drivers=flat,gre

tenant_network_types=gre

mechanism_drivers=openvswitch

b. Edit the [ml2_type_flat] section and configure the external network:

[ml2_type_flat]

…

flat_networks=external

c. Edit the [ml2_type_gre] section and configure the tunnel ID range:

[ml2_type_gre]

…

tunnel_id_ranges=1:1000

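d. Edit the [securitygroup] section to enable security groups, enable ipset, and configure the OVS iptables firewall driver (add the firewall_driver line if it is missing):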

[securitygroup]

…

enable_security_group=True

enable_ipset=True

firewall_driver=neutron.agent.linux.iptables_firewall.OVSHybridIptablesFirewallDriver

e. Append an [ovs] section to the end of the file and configure the Open vSwitch (OVS) agent:

[ovs]

…

local_ip=INSTANCE_TUNNELS_INTERFACE_IP_ADDRESS

tunnel_type=gre

enable_tunneling=True

bridge_mappings=external:br-ex
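INSTANCE_TUNNELS_INTERFACE_IP_ADDRESS is a placeholder for the address of the NIC that carries GRE tunnel traffic. In this lab that is presumably 172.168.0.6, the network node's second NIC on VMnet 2 in the hardware list; this is an assumption based on the compute node using its own VMnet 2 address as local_ip. The sketch below fills in the placeholder on a scratch copy of the section rather than on the real file.

```shell
# Work on a scratch copy so the sketch is safe to run anywhere.
conf=$(mktemp)
cat > "$conf" <<'EOF'
[ovs]
local_ip=INSTANCE_TUNNELS_INTERFACE_IP_ADDRESS
tunnel_type=gre
enable_tunneling=True
bridge_mappings=external:br-ex
EOF

# Assumption: the network node's second NIC (172.168.0.6) carries GRE traffic.
tunnel_ip=172.168.0.6

# Replace the placeholder and show the resulting line.
sed "s/^local_ip=.*/local_ip=${tunnel_ip}/" "$conf" > "${conf}.new"
result=$(grep '^local_ip=' "${conf}.new")
echo "$result"

rm -f "$conf" "${conf}.new"
```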

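Configure the Layer-3 (L3) agent

Edit /etc/neutron/l3_agent.ini (vim /etc/neutron/l3_agent.ini) and complete the following steps:

a. Edit the [DEFAULT] section to configure the interface driver, enable network namespaces, and set the external network bridge: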

[DEFAULT]

…

interface_driver=neutron.agent.linux.interface.OVSInterfaceDriver

use_namespaces=True

external_network_bridge=br-ex

b. (Optional) Enable debug logging in the [DEFAULT] section to simplify troubleshooting:

[DEFAULT]

…

debug=True

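Configure the DHCP agent

1. Edit /etc/neutron/dhcp_agent.ini (vim /etc/neutron/dhcp_agent.ini) and complete the following steps:

a. Edit the [DEFAULT] section to configure the drivers and enable namespaces: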

[DEFAULT]

…

interface_driver=neutron.agent.linux.interface.OVSInterfaceDriver

dhcp_driver=neutron.agent.linux.dhcp.Dnsmasq

use_namespaces=True

b. (Optional) Enable debug logging in the [DEFAULT] section to simplify troubleshooting:

[DEFAULT]

…

debug=True

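2. (Optional, but possibly required inside VMware virtual machines) Configure a DHCP option that lowers the instance MTU to 1454 bytes to improve network performance.

a. Edit /etc/neutron/dhcp_agent.ini (vim /etc/neutron/dhcp_agent.ini) and complete the following step:

Edit the [DEFAULT] section and enable the dnsmasq configuration file: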

[DEFAULT]

…

dnsmasq_config_file=/etc/neutron/dnsmasq-neutron.conf

b. Create /etc/neutron/dnsmasq-neutron.conf (vim /etc/neutron/dnsmasq-neutron.conf) and add the following settings:

Enable the DHCP MTU option (26) and set it to 1454 bytes:

dhcp-option-force=26,1454

user=neutron

group=neutron

c. Kill any existing dnsmasq processes:

pkill dnsmasq

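Configure the metadata agent

1. Edit /etc/neutron/metadata_agent.ini (vim /etc/neutron/metadata_agent.ini) and complete the following steps:

a. Edit the [DEFAULT] section and configure the access parameters: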

[DEFAULT]

…

auth_url=http://controller.nice.com:5000/v2.0

auth_region=regionOne

admin_tenant_name=service

admin_user=neutron

admin_password=NEUTRON_PASS

b. Edit the [DEFAULT] section and configure the metadata host:

[DEFAULT]

…

nova_metadata_ip=controller.nice.com

c. Edit the [DEFAULT] section and configure the metadata proxy shared secret:

[DEFAULT]

…

metadata_proxy_shared_secret=METADATA_SECRET

d. (Optional) Enable debug logging in the [DEFAULT] section to simplify troubleshooting:

[DEFAULT]

…

debug=True

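2. On the controller node, edit /etc/nova/nova.conf (vim /etc/nova/nova.conf) and complete the following step:

Edit the [neutron] section to enable the metadata proxy and configure the same shared secret: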

[neutron]

…

service_metadata_proxy=True

metadata_proxy_shared_secret= METADATA_SECRET

3. On the controller node, restart the Compute API service:

systemctl restart openstack-nova-api.service

Configure the Open vSwitch (OVS) service

1. On the network node, start the OVS service and configure it to start at boot:

[root@network ~]# systemctl enable openvswitch.service

[root@network ~]# systemctl start openvswitch.service

2. Add the external bridge:

ovs-vsctl add-br br-ex

3. Add a port to the external bridge that connects it to the physical external network interface:

ovs-vsctl add-port br-ex eno50332184

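Note: replace eno50332184 with the name of the interface that actually connects to the external network on your system, for example eth2 or eno50332208. In this lab, ifconfig shows that interface as: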

eno50332184: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 100.100.100.10 netmask 255.255.255.0 broadcast 100.100.100.255

………………

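Finalize the installation

1. The Networking service initialization scripts expect a symbolic link /etc/neutron/plugin.ini that points to the ML2 plug-in configuration file. Create the link, back up the OVS agent unit file, and point the unit file at the link: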

[root@network ~]# ln -s /etc/neutron/plugins/ml2/ml2_conf.ini /etc/neutron/plugin.ini

[root@network ~]# cp /usr/lib/systemd/system/neutron-openvswitch-agent.service /usr/lib/systemd/system/neutron-openvswitch-agent.service.orig

[root@network ~]# sed -i 's,plugins/openvswitch/ovs_neutron_plugin.ini,plugin.ini,g' /usr/lib/systemd/system/neutron-openvswitch-agent.service

2. Start the Networking services and configure them to start at boot:

[root@network ~]# systemctl enable neutron-openvswitch-agent.service neutron-l3-agent.service neutron-dhcp-agent.service neutron-metadata-agent.service neutron-ovs-cleanup.service

[root@network ~]# systemctl start neutron-openvswitch-agent.service neutron-l3-agent.service neutron-dhcp-agent.service neutron-metadata-agent.service neutron-ovs-cleanup.service

Verification (run the following commands on the controller node)

1. Source the admin credentials script:

source admin.sh

2. List the neutron agents to confirm that they started successfully:

neutron agent-list

+--------------------------------------+--------------------+------------------+-------+----------------+---------------------------+

| id | agent_type | host | alive | admin_state_up | binary |

+--------------------------------------+--------------------+------------------+-------+----------------+---------------------------+

| 114fdfd6-47e9-4542-81a5-a35b900cd20b | Open vSwitch agent | network.nice.com | :-) | True | neutron-openvswitch-agent |

| 18df3c1b-0fdf-45f7-bc39-2198e7303df9 | L3 agent | network.nice.com | :-) | True | neutron-l3-agent |

| 2201f580-a555-4838-ad79-09c3d44c1698 | Metadata agent | network.nice.com | :-) | True | neutron-metadata-agent |

| 28bff0dd-84b3-4ee1-b1be-ae76c7e5e579 | DHCP agent | network.nice.com | :-) | True | neutron-dhcp-agent |

+--------------------------------------+--------------------+------------------+-------+----------------+---------------------------+

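Install and configure the compute node

•Configure prerequisites

•Install the Networking components

•Configure the Networking common components

•Configure the Modular Layer 2 (ML2) plug-in

•Configure the Open vSwitch (OVS) service

•Configure Compute to use Networking

•Finalize the installation

•Verification

Configure prerequisites

1. Edit /etc/sysctl.conf (vim /etc/sysctl.conf) so that it contains the following parameters: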

net.ipv4.conf.all.rp_filter=0

net.ipv4.conf.default.rp_filter=0

2. Apply the changes made to /etc/sysctl.conf:

sysctl -p

Install the Networking components

yum -y install openstack-neutron-ml2 openstack-neutron-openvswitch

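Configure the Networking common components

Edit /etc/neutron/neutron.conf (vim /etc/neutron/neutron.conf) and complete the following steps:

a. In the [database] section, comment out all connection options, because the compute node does not access the database directly.

b. Edit the [DEFAULT] section and configure RabbitMQ message broker access: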

[DEFAULT]

…

rpc_backend=rabbit

rabbit_host=controller.nice.com

rabbit_password=guest

c. Edit the [DEFAULT] and [keystone_authtoken] sections and configure Identity service access:

[DEFAULT]

…

auth_strategy= keystone

[keystone_authtoken]

…

auth_uri=http://controller.nice.com:5000/v2.0

identity_uri=http://controller.nice.com:35357

admin_tenant_name=service

admin_user=neutron

admin_password=NEUTRON_PASS

d. Edit the [DEFAULT] section to enable the Modular Layer 2 (ML2) plug-in, the router service, and overlapping IP addresses:

[DEFAULT]

…

core_plugin=ml2

service_plugins=router

allow_overlapping_ips=True

e. (Optional) Enable verbose logging in the [DEFAULT] section to simplify troubleshooting:

[DEFAULT]

…

verbose =True

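Configure the Modular Layer 2 (ML2) plug-in

Edit /etc/neutron/plugins/ml2/ml2_conf.ini (vim /etc/neutron/plugins/ml2/ml2_conf.ini) and complete the following steps:

a. Edit the [ml2] section to enable the flat and generic routing encapsulation (GRE) network type drivers, GRE tenant networks, and the OVS mechanism driver: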

[ml2]

…

type_drivers=flat,gre

tenant_network_types=gre

mechanism_drivers=openvswitch

b. Edit the [ml2_type_gre] section and configure the tunnel ID range:

[ml2_type_gre]

…

tunnel_id_ranges=1:1000

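c. Edit the [securitygroup] section to enable security groups, enable ipset, and configure the OVS iptables firewall driver (add the firewall_driver line if it is missing):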

[securitygroup]

…

enable_security_group=True

enable_ipset=True

firewall_driver=neutron.agent.linux.iptables_firewall.OVSHybridIptablesFirewallDriver

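d. Append an [ovs] section to the end of the file and configure the Open vSwitch (OVS) agent. local_ip is the compute node's address on the tunnel network (its second NIC on VMnet 2):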

[ovs]

local_ip=172.168.0.10

tunnel_type=gre

enable_tunneling=True

Configure the Open vSwitch (OVS) service

Start the OVS service and configure it to start at boot:

[root@compute ~]# systemctl enable openvswitch.service

[root@compute ~]# systemctl start openvswitch.service

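Configure Compute to use Networking

Edit /etc/nova/nova.conf (vim /etc/nova/nova.conf) and complete the following steps:

a. Edit the [DEFAULT] section and configure the APIs and drivers: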

[DEFAULT]

…

network_api_class=nova.network.neutronv2.api.API

security_group_api=neutron

linuxnet_interface_driver=nova.network.linux_net.LinuxOVSInterfaceDriver

firewall_driver=nova.virt.firewall.NoopFirewallDriver

b. Edit the [neutron] section and configure the access parameters:

[neutron]

…

url=http://controller.nice.com:9696

auth_strategy=keystone

admin_auth_url=http://controller.nice.com:35357/v2.0

admin_tenant_name=service

admin_username=neutron

admin_password=NEUTRON_PASS

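Finalize the installation

1. The Networking service initialization scripts expect a symbolic link /etc/neutron/plugin.ini that points to the ML2 plug-in configuration file. Create the link, back up the OVS agent unit file, and point the unit file at the link: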

[root@compute ~]# ln -s /etc/neutron/plugins/ml2/ml2_conf.ini /etc/neutron/plugin.ini

[root@compute ~]# cp /usr/lib/systemd/system/neutron-openvswitch-agent.service /usr/lib/systemd/system/neutron-openvswitch-agent.service.orig

[root@compute ~]# sed -i 's,plugins/openvswitch/ovs_neutron_plugin.ini,plugin.ini,g' /usr/lib/systemd/system/neutron-openvswitch-agent.service

2. Restart the Compute service:

[root@compute ~]# systemctl restart openstack-nova-compute.service

3. Start the OVS agent and configure it to start at boot:

[root@compute ~]# systemctl enable neutron-openvswitch-agent.service

[root@compute ~]# systemctl start neutron-openvswitch-agent.service

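Verification (run the following commands on the controller node)

1. Source the admin credentials script:

[root@controller ~]# source admin.sh

2. List the neutron agents to confirm that they started successfully. The compute node's Open vSwitch agent should now appear as well: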

[root@controller ~]$ neutron agent-list

+--------------------------------------+--------------------+------------------+-------+----------------+---------------------------+

| id | agent_type | host | alive | admin_state_up | binary |

+--------------------------------------+--------------------+------------------+-------+----------------+---------------------------+

| 114fdfd6-47e9-4542-81a5-a35b900cd20b | Open vSwitch agent | network.nice.com | :-) | True | neutron-openvswitch-agent |

| 18df3c1b-0fdf-45f7-bc39-2198e7303df9 | L3 agent | network.nice.com | :-) | True | neutron-l3-agent |

| 2201f580-a555-4838-ad79-09c3d44c1698 | Metadata agent | network.nice.com | :-) | True | neutron-metadata-agent |

| 28bff0dd-84b3-4ee1-b1be-ae76c7e5e579 | DHCP agent | network.nice.com | :-) | True | neutron-dhcp-agent |

| aa2ab5c3-2da1-4a90-ad58-4de706b64ac1 | Open vSwitch agent | compute.nice.com | :-) | True | neutron-openvswitch-agent |

+--------------------------------------+--------------------+------------------+-------+----------------+---------------------------+
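With more agents in the list, checking the alive column by eye gets tedious. The sketch below counts agents and flags any whose alive column is not the ":-)" marker. It runs on the sample rows from the listing above; on a live controller you would presumably feed it the output of neutron agent-list instead. The variable names are illustrative.

```shell
# Data rows copied from the neutron agent-list output above.
agent_rows='| 114fdfd6-47e9-4542-81a5-a35b900cd20b | Open vSwitch agent | network.nice.com | :-) | True | neutron-openvswitch-agent |
| 18df3c1b-0fdf-45f7-bc39-2198e7303df9 | L3 agent | network.nice.com | :-) | True | neutron-l3-agent |
| 2201f580-a555-4838-ad79-09c3d44c1698 | Metadata agent | network.nice.com | :-) | True | neutron-metadata-agent |
| 28bff0dd-84b3-4ee1-b1be-ae76c7e5e579 | DHCP agent | network.nice.com | :-) | True | neutron-dhcp-agent |
| aa2ab5c3-2da1-4a90-ad58-4de706b64ac1 | Open vSwitch agent | compute.nice.com | :-) | True | neutron-openvswitch-agent |'

# Split on "|": $2=id, $3=agent_type, $4=host, $5=alive, $6=admin_state_up, $7=binary.
# Border lines contain no "|" and therefore have too few fields to count.
total=$(printf '%s\n' "$agent_rows" | awk -F'|' 'NF > 6 {n++} END {print n+0}')
not_alive=$(printf '%s\n' "$agent_rows" | awk -F'|' 'NF > 6 && $5 !~ /:-\)/ {n++} END {print n+0}')

echo "agents=${total} not_alive=${not_alive}"
```

For this lab the expected picture is five agents, four on network.nice.com and one on compute.nice.com, with none reported as not alive.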

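Create the first networks

Configure the external network (run the following commands on the controller node)

•Create the external network

•Create a subnet on the external network

Create the external network

1. Source the admin credentials script:

[root@controller ~]# source admin.sh

2. Create the network: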

[root@controller ~]$ neutron net-create ext-net --shared --router:external True --provider:physical_network external --provider:network_type flat

Created a new network:

+---------------------------+--------------------------------------+

| Field | Value |

+---------------------------+--------------------------------------+

| admin_state_up | True |

| id | e25da901-9f50-4ba1-ae5d-e2ab00b62a34 |

| name | ext-net |

| provider:network_type | flat |

| provider:physical_network | external |

| provider:segmentation_id | |

| router:external | True |

| shared | True |

| status | ACTIVE |

| subnets | |

| tenant_id | c2226f07e2464f45a2d9f547170242ba |

+---------------------------+--------------------------------------+

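Create a subnet on the external network

Create the subnet. In this lab the external network is 100.100.100.0/24, the floating IP range is 100.100.100.11 to 100.100.100.200, and the gateway is 100.100.100.10: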

[root@controller ~]$ neutron subnet-create ext-net --name ext-subnet \

--allocation-pool start=100.100.100.11,end=100.100.100.200 \

--disable-dhcp --gateway 100.100.100.10 100.100.100.0/24

Created a new subnet:

+-------------------+-------------------------------------------------------+

| Field | Value |

+-------------------+-------------------------------------------------------+

| allocation_pools | {"start": "100.100.100.11", "end": "100.100.100.200"} |

| cidr | 100.100.100.0/24 |

| dns_nameservers | |

| enable_dhcp | False |

| gateway_ip | 100.100.100.10 |

| host_routes | |

| id | 7f91305e-ef28-4df8-9eb6-95ff9b867d8f |

| ip_version | 4 |

| ipv6_address_mode | |

| ipv6_ra_mode | |

| name | ext-subnet |

| network_id | e25da901-9f50-4ba1-ae5d-e2ab00b62a34 |

| tenant_id | c2226f07e2464f45a2d9f547170242ba |

+-------------------+-------------------------------------------------------+

External subnet template:

neutron subnet-create ext-net --name ext-subnet \

--allocation-pool start=FLOATING_IP_START,end=FLOATING_IP_END \

--disable-dhcp --gateway EXTERNAL_NETWORK_GATEWAY EXTERNAL_NETWORK_CIDR

FLOATING_IP_START = first floating IP address

FLOATING_IP_END = last floating IP address

EXTERNAL_NETWORK_GATEWAY = gateway of the external network

EXTERNAL_NETWORK_CIDR = CIDR of the external network
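One way to avoid typos when filling in the template is to keep the four values in shell variables and build the command from them. The sketch below only prints the resulting command (a dry run) using this lab's values; it does not call neutron. Running the printed command on the controller is left to the reader.

```shell
# Values used in this lab; change them to match your external network.
FLOATING_IP_START=100.100.100.11
FLOATING_IP_END=100.100.100.200
EXTERNAL_NETWORK_GATEWAY=100.100.100.10
EXTERNAL_NETWORK_CIDR=100.100.100.0/24

# Inside double quotes a backslash-newline is a line continuation,
# so cmd ends up as a single line.
cmd="neutron subnet-create ext-net --name ext-subnet \
--allocation-pool start=${FLOATING_IP_START},end=${FLOATING_IP_END} \
--disable-dhcp --gateway ${EXTERNAL_NETWORK_GATEWAY} ${EXTERNAL_NETWORK_CIDR}"

# Dry run: show the command instead of executing it.
echo "$cmd"
```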

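Configure the tenant network (run the following commands on the controller node)

•Create the tenant network

•Create a subnet on the tenant network

•Create a router on the tenant network to connect the external and tenant networks

1. Source the demo credentials script:

[root@controller ~]# source demo.sh

2. Create the tenant network: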

[root@controller ~]$ neutron net-create demo-net

Created a new network:

+---------------------------+--------------------------------------+

| Field | Value |

+---------------------------+--------------------------------------+

| admin_state_up | True |

| id | 25134ad0-a7b7-4a62-ac57-5059011e5a9c |

| name | demo-net |

| provider:network_type | gre |

| provider:physical_network | |

| provider:segmentation_id | 1 |

| router:external | False |

| shared | False |

| status | ACTIVE |

| subnets | |

| tenant_id | c2226f07e2464f45a2d9f547170242ba |

+---------------------------+--------------------------------------+

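Create a subnet on the tenant network

Subnet template:

neutron subnet-create demo-net --name demo-subnet \

--gateway TENANT_NETWORK_GATEWAY TENANT_NETWORK_CIDR

TENANT_NETWORK_GATEWAY = gateway of the tenant network

TENANT_NETWORK_CIDR = CIDR of the tenant network

Example: the tenant network is 192.168.2.0/24 and the gateway is 192.168.2.1 (by convention the gateway is usually the .1 address):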

[root@controller ~]$ neutron subnet-create demo-net --name demo-subnet \

--gateway 192.168.2.1 192.168.2.0/24

Created a new subnet:

+-------------------+--------------------------------------------------+

| Field | Value |

+-------------------+--------------------------------------------------+

| allocation_pools | {"start": "192.168.2.2", "end": "192.168.2.254"} |

| cidr | 192.168.2.0/24 |

| dns_nameservers | |

| enable_dhcp | True |

| gateway_ip | 192.168.2.1 |

| host_routes | |

| id | 651b7045-437e-45a7-86f2-cffec328113e |

| ip_version | 4 |

| ipv6_address_mode | |

| ipv6_ra_mode | |

| name | demo-subnet |

| network_id | 25134ad0-a7b7-4a62-ac57-5059011e5a9c |

| tenant_id | c2226f07e2464f45a2d9f547170242ba |

+-------------------+--------------------------------------------------+

Create a router on the tenant network to connect the external and tenant networks

1. Create the router:

[root@controller ~]$ neutron router-create demo-router

Created a new router:

+-----------------------+--------------------------------------+

| Field | Value |

+-----------------------+--------------------------------------+

| admin_state_up | True |

| external_gateway_info | |

| id | ea143fe7-10f3-45f1-af91-9cc72e6c810a |

| name | demo-router |

| routes | |

| status | ACTIVE |

| tenant_id | c2226f07e2464f45a2d9f547170242ba |

+-----------------------+--------------------------------------+

2. Attach the router to the demo tenant subnet:

[root@controller ~]# neutron router-interface-add demo-router demo-subnet

Added interface 262d2432-96bb-4d41-a3a0-5fa6df074baa to router demo-router.

3. Attach the router to the external network by setting it as the gateway:

[root@controller ~]# neutron router-gateway-set demo-router ext-net

Set gateway for router demo-router

Verify connectivity

Check the external IP address that the router obtained:

[root@controller ~]$ neutron router-list

+--------------------------------------+-------------+--------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+-------------+-------+

| id | name | external_gateway_info | distributed | ha |

+--------------------------------------+-------------+--------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+-------------+-------+

| ea143fe7-10f3-45f1-af91-9cc72e6c810a | demo-router | {"network_id": "e25da901-9f50-4ba1-ae5d-e2ab00b62a34", "enable_snat": true, "external_fixed_ips": [{"subnet_id": "7f91305e-ef28-4df8-9eb6-95ff9b867d8f", "ip_address": "100.100.100.11"}]} | False | False |

+--------------------------------------+-------------+--------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+-------------+-------+

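On the network node (listed as neutron in the hardware table), 100.100.100.10 is the address of the third NIC, which is attached to VMnet 3. On the physical host, give the VMnet 3 adapter an address in the same 100.100.100.0/24 network so that the two can reach each other.

Then ping the router's external address (100.100.100.11 in the output above) from any host on the external network.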
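The router's external address is buried in the external_gateway_info column. The following sketch extracts it with sed from the sample value shown in the router-list output above and prints the ping command to run; it is a text-processing illustration only and does not contact the router.

```shell
# external_gateway_info value copied from the neutron router-list output above.
gateway_info='{"network_id": "e25da901-9f50-4ba1-ae5d-e2ab00b62a34", "enable_snat": true, "external_fixed_ips": [{"subnet_id": "7f91305e-ef28-4df8-9eb6-95ff9b867d8f", "ip_address": "100.100.100.11"}]}'

# Capture the dotted address that follows "ip_address": and print only that.
router_ip=$(printf '%s\n' "$gateway_info" | sed -n 's/.*"ip_address": "\([0-9.]*\)".*/\1/p')

# Show the check to run from a host on the external network.
echo "ping -c 4 ${router_ip}"
```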

OpenStack Dashboard(Horizon)

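Prerequisites

•Install the OpenStack Compute (nova) and Identity (keystone) services.

•Install Python 2.6 or 2.7 with Django support.

•Your browser must support HTML5 and have cookies and JavaScript enabled.

Install and configure

•Install the dashboard components

•Configure the dashboard

•Finalize the installation

Install the dashboard components (controller node)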

[root@controller ~]# yum -y install openstack-dashboard httpd mod_wsgi memcached python-memcached

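Configure the dashboard

Edit /etc/openstack-dashboard/local_settings (vim /etc/openstack-dashboard/local_settings) and complete the following steps:

a. Configure the dashboard to use the OpenStack services on the controller node:

OPENSTACK_HOST = "controller.nice.com" # line 124

b. Allow hosts from all networks to access the dashboard:

ALLOWED_HOSTS = ['controller.nice.com', '*'] # line 15

c. Configure the memcached session storage service (comment out the existing CACHES block first):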

CACHES = {

    'default': {

        'BACKEND': 'django.core.cache.backends.memcached.MemcachedCache',

        'LOCATION': '127.0.0.1:11211',

    }

}


d. (Optional) Configure the time zone:

TIME_ZONE = "TIME_ZONE"

For example, for China:

TIME_ZONE = "Asia/Shanghai"

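Finalize the installation

1. On RHEL or CentOS, configure SELinux to allow the web server to connect to OpenStack services (skip this step if SELinux is disabled, as it is earlier in this guide):

setsebool -P httpd_can_network_connect on

2. Change the ownership of the static files so that the dashboard CSS can be loaded:

chown -R apache:apache /usr/share/openstack-dashboard/static

3. Start the web server and the session storage service, and configure them to start at boot: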

systemctl enable httpd.service memcached.service

systemctl start httpd.service memcached.service

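Verification

1. Open the dashboard in a browser at http://controller.nice.com/dashboard

Note: in the author's environment the page did not render in Google Chrome but worked in Internet Explorer.

2. Log in as the admin or demo user:

User name: admin

Password: ADMIN_PASS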

OpenStack Block Storage(cinder)

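Install and configure the controller node

•Configure prerequisites

•Install and configure the Block Storage controller components

•Finalize the installation

Configure prerequisites

1. Create the database by completing the following steps:

a. Connect to the database server as the root user: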

mysql -u root -p123

b. Create the cinder database:

CREATE DATABASE cinder;

c. Create the cinder database user and grant it full access to the cinder database:

GRANT ALL PRIVILEGES ON cinder.* TO 'cinder'@'localhost' IDENTIFIED BY 'CINDER_DBPASS';

GRANT ALL PRIVILEGES ON cinder.* TO 'cinder'@'%' IDENTIFIED BY 'CINDER_DBPASS';

d. Exit the database client:

exit

2. Source the admin credentials script:

source admin.sh

3. Create the Block Storage credentials in the Identity service by completing the following steps:

a. Create the cinder user:

[root@controller ~]$ keystone user-create --name cinder --pass CINDER_PASS

+----------+----------------------------------+

| Property | Value |

+----------+----------------------------------+

| email | |

| enabled | True |

| id | e2a24d5140154f108e1bcdb60bbd2c57 |

| name | cinder |

| username | cinder |

+----------+----------------------------------+

b. Add the cinder user to the service tenant with the admin role:

keystone user-role-add --user cinder --tenant service --role admin

c. Create the cinder services, one for API version 1 (type volume) and one for API version 2 (type volumev2):

[root@controller ~]$ keystone service-create --name cinder --type volume --description "OpenStack Block Storage"

+-------------+----------------------------------+

| Property | Value |

+-------------+----------------------------------+

| description | OpenStack Block Storage |

| enabled | True |

| id | 26dc151b1b86442191bfcb7af4d0c981 |

| name | cinder |

| type | volume |

+-------------+----------------------------------+

[root@controller ~]$ keystone service-create --name cinderv2 --type volumev2 --description "OpenStack Block Storage"

+-------------+----------------------------------+

| Property | Value |

+-------------+----------------------------------+

| description | OpenStack Block Storage |

| enabled | True |

| id | b82b0e123a2043878aa0e7424b815299 |

| name | cinderv2 |

| type | volumev2 |

+-------------+----------------------------------+

d. Create the Block Storage service endpoints, one for each of the two services:

[root@controller ~]$ keystone endpoint-create \

--service-id $(keystone service-list | awk '/ volume / {print $2}') \

--publicurl http://controller.nice.com:8776/v1/%\(tenant_id\)s \

--internalurl http://controller.nice.com:8776/v1/%\(tenant_id\)s \

--adminurl http://controller.nice.com:8776/v1/%\(tenant_id\)s \

--region regionOne

+-------------+--------------------------------------------------+

| Property | Value |

+-------------+--------------------------------------------------+

| adminurl | http://controller.nice.com:8776/v1/%(tenant_id)s |

| id | 6ab91a43bc3f4122a9ce40337034f721 |

| internalurl | http://controller.nice.com:8776/v1/%(tenant_id)s |

| publicurl | http://controller.nice.com:8776/v1/%(tenant_id)s |

| region | regionOne |

| service_id | 26dc151b1b86442191bfcb7af4d0c981 |

+-------------+--------------------------------------------------+

[root@controller ~]$ keystone endpoint-create \

--service-id $(keystone service-list | awk '/ volumev2 / {print $2}') \

--publicurl http://controller.nice.com:8776/v2/%\(tenant_id\)s \

--internalurl http://controller.nice.com:8776/v2/%\(tenant_id\)s \

--adminurl http://controller.nice.com:8776/v2/%\(tenant_id\)s \

--region regionOne

+-------------+--------------------------------------------------+

| Property | Value |

+-------------+--------------------------------------------------+

| adminurl | http://controller.nice.com:8776/v2/%(tenant_id)s |

| id | 43b51db7484743a1ad4519386b1ec817 |

| internalurl | http://controller.nice.com:8776/v2/%(tenant_id)s |

| publicurl | http://controller.nice.com:8776/v2/%(tenant_id)s |

| region | regionOne |

| service_id | b82b0e123a2043878aa0e7424b815299 |

+-------------+--------------------------------------------------+

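Install and configure the Block Storage controller components

1. Install the packages:

yum -y install openstack-cinder python-cinderclient python-oslo-db

2. Edit /etc/cinder/cinder.conf (vim /etc/cinder/cinder.conf) and complete the following steps:

a. Edit the [database] section and configure database access: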

[database]

…

connection=mysql://cinder:CINDER_DBPASS@controller.nice.com/cinder

b. Edit the [DEFAULT] section and configure RabbitMQ message broker access:

[DEFAULT]

…

rpc_backend= rabbit

rabbit_host=controller.nice.com

rabbit_password=guest

c. Edit the [DEFAULT] and [keystone_authtoken] sections and configure Identity service access:

[DEFAULT]

…

auth_strategy=keystone

[keystone_authtoken]

…

auth_uri=http://controller.nice.com:5000/v2.0

identity_uri=http://controller.nice.com:35357

admin_tenant_name=service

admin_user=cinder

admin_password=CINDER_PASS

d. Edit the [DEFAULT] section and set my_ip to the management interface IP address of the controller node:

[DEFAULT]

…

my_ip=192.168.222.5

e. (Optional) Enable verbose logging in the [DEFAULT] section to simplify troubleshooting:

[DEFAULT]

…

verbose =True

3. Populate the Block Storage database:

[root@controller ~]$ su -s /bin/sh -c "cinder-manage db sync" cinder

2021-04-07 03:42:46.863 130652 INFO migrate.versioning.api [-] 0 -> 1...

………………

2021-04-07 03:42:47.688 130652 INFO migrate.versioning.api [-] done

Finalize the installation

Start the Block Storage services and configure them to start at boot:

systemctl enable openstack-cinder-api.service openstack-cinder-scheduler.service

systemctl start openstack-cinder-api.service openstack-cinder-scheduler.service

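Install and configure the block storage node (block.nice.com)

•Configure prerequisites

•Install and configure the Block Storage volume components

•Finalize the installation

Configure prerequisites

1. Add a new disk (for example, sdb). The commands below use the whole disk /dev/sdb; if you create a single primary partition on it instead, substitute /dev/sdb1.

2. Configure the network interface (values for this lab, taken from the hardware list at the top of the guide):

IP address: 192.168.222.20

Network mask: 255.255.255.0 (or /24)

Default gateway: not set in this lab (VMnet 1 is a host-only network)

3. Set the hostname to block.nice.com and make sure every node can resolve it (the /etc/hosts entries added earlier already cover this). Configure NTP.

4. Install the LVM packages (if they are not already installed):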

yum -y install lvm2

5. Start the LVM metadata service and configure it to start at boot (if it is not already running):

systemctl enable lvm2-lvmetad.service

systemctl start lvm2-lvmetad.service

6. Create the physical volume on /dev/sdb:

pvcreate /dev/sdb

7. Create the volume group cinder-volumes (do not change the name; the rest of this guide relies on it):

vgcreate cinder-volumes /dev/sdb

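8. Edit /etc/lvm/lvm.conf (vim /etc/lvm/lvm.conf) so that the system scans only the disks that are used for LVM. This keeps other, non-LVM disks from interfering with the Block Storage service.

In the devices section, add a filter that accepts the /dev/sdb disk and rejects all other devices: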

devices {

...

filter = [ "a/sdb/", "r/.*/"]

Warning: if the operating system disk of this node also uses LVM, you must add that disk to the filter as well:

filter = [ "a/sda/", "a/sdb/", "r/.*/"]

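Similarly, if the operating system disk of the compute node uses LVM, edit /etc/lvm/lvm.conf on that node too (vim /etc/lvm/lvm.conf) and add a filter that accepts only the operating system disk. For example, if the system is on /dev/sda:

filter = [ "a/sda/", "r/.*/"]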
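To make the filter semantics concrete: LVM tests each device path against the patterns in order, the first pattern that matches decides (a accepts, r rejects), and a device that matches no pattern is accepted. The sketch below imitates that rule in plain shell so the effect of a filter can be reasoned about before editing lvm.conf. The function lvm_filter_decision and its "a:REGEX" / "r:REGEX" argument format are made up for this illustration and are not part of LVM.

```shell
# lvm_filter_decision DEVICE RULE...
# Each RULE is "a:REGEX" (accept) or "r:REGEX" (reject).
# The first rule whose regex matches the device path decides the outcome.
lvm_filter_decision() {
  dev=$1
  shift
  for rule in "$@"; do
    action=${rule%%:*}   # text before the first colon: a or r
    regex=${rule#*:}     # text after the first colon: the pattern
    if printf '%s\n' "$dev" | grep -Eq "$regex"; then
      if [ "$action" = a ]; then echo accept; else echo reject; fi
      return 0
    fi
  done
  echo accept            # no pattern matched: the device is accepted
}

# filter = [ "a/sdb/", "r/.*/"]
lvm_filter_decision /dev/sdb a:sdb 'r:.*'
lvm_filter_decision /dev/sda a:sdb 'r:.*'

# filter = [ "a/sda/", "a/sdb/", "r/.*/"]
lvm_filter_decision /dev/sda a:sda a:sdb 'r:.*'
```

The second call shows why a system disk on LVM needs its own accept entry: with only the sdb pattern in place, /dev/sda falls through to the catch-all reject.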

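Install and configure the Block Storage volume components

1. Install the packages:

yum -y install openstack-cinder targetcli python-oslo-db MySQL-python

2. Edit /etc/cinder/cinder.conf (vim /etc/cinder/cinder.conf) and complete the following steps:

a. Edit the [database] section and configure database access: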

[database]

…

connection=mysql://cinder:CINDER_DBPASS@controller.nice.com/cinder

b. Edit the [DEFAULT] section and configure RabbitMQ message broker access:

[DEFAULT]

…

rpc_backend=rabbit

rabbit_host=controller.nice.com

rabbit_password=guest

c. Edit the [DEFAULT] and [keystone_authtoken] sections and configure Identity service access:

[DEFAULT]

…

auth_strategy=keystone

[keystone_authtoken]

…

auth_uri=http://controller.nice.com:5000/v2.0

identity_uri=http://controller.nice.com:35357

admin_tenant_name=service

admin_user=cinder

admin_password=CINDER_PASS

d. Edit the [DEFAULT] section and set my_ip to the management interface IP address of the block storage node:

[DEFAULT]

…

my_ip=192.168.222.20

e. Edit the [DEFAULT] section and configure the location of the Image service:

[DEFAULT]

…

glance_host=controller.nice.com

f. Edit the [DEFAULT] section and configure Block Storage to use the lioadm iSCSI helper:

[DEFAULT]

…

iscsi_helper=lioadm

g. (Optional) Enable verbose logging in the [DEFAULT] section to simplify troubleshooting:

[DEFAULT]

…

verbose=True

Finalize the installation

Start the Block Storage volume service and the iSCSI target service, and configure them to start at boot:

systemctl enable openstack-cinder-volume.service target.service

systemctl start openstack-cinder-volume.service target.service

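Verification (complete the following steps on the controller node)

1. Source the admin credentials script:

[root@controller ~]# source admin.sh

2. List the service components to confirm that each process started successfully:

[root@controller ~]$ cinder service-list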

+------------------+---------------------+------+---------+-------+----------------------------+-----------------+

| Binary | Host | Zone | Status | State | Updated_at | Disabled Reason |

+------------------+---------------------+------+---------+-------+----------------------------+-----------------+

| cinder-scheduler | controller.nice.com | nova | enabled | up | 2021-04-06T20:20:09.000000 | None |

| cinder-volume | block.nice.com | nova | enabled | up | 2021-04-06T20:20:15.000000 | None |

+------------------+---------------------+------+---------+-------+----------------------------+-----------------+

3. Source the demo credentials script:

source demo.sh

4. Create a 1 GB volume:

[root@controller ~]$ cinder create --display-name demo-volume1 1

+---------------------+--------------------------------------+

| Property | Value |

+---------------------+--------------------------------------+

| attachments | [] |

| availability_zone | nova |

| bootable | false |

| created_at | 2021-04-06T20:22:33.034598 |

| display_description | None |

| display_name | demo-volume1 |

| encrypted | False |

| id | d3faf3c1-aa4c-490a-b319-2eeb1f5a1d52 |

| metadata | {} |

| size | 1 |

| snapshot_id | None |

| source_volid | None |

| status | creating |

| volume_type | None |

+---------------------+--------------------------------------+

5. Confirm that the volume was created and that its status is available:

[root@controller ~]$ cinder list

+--------------------------------------+-----------+--------------+------+-------------+----------+-------------+

| ID | Status | Display Name | Size | Volume Type | Bootable | Attached to |

+--------------------------------------+-----------+--------------+------+-------------+----------+-------------+

| d3faf3c1-aa4c-490a-b319-2eeb1f5a1d52 | available | demo-volume1 | 1 | None | false | |

+--------------------------------------+-----------+--------------+------+-------------+----------+-------------+

OpenStack Launch an instance

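Launch an instance with OpenStack Networking (neutron)

•Create a key pair

•Launch an instance

•Access your instance through the virtual console

•Access your instance remotely

•Attach an additional volume to your instance

Create a key pair

Most cloud images use public key authentication rather than conventional user name and password authentication. Before launching an instance, you must generate a key pair with ssh-keygen and add the public key to your OpenStack environment.

1. Source the demo credentials script:

source demo.sh

2. Generate a key pair: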

ssh-keygen

3. Add the public key to your OpenStack environment:

nova keypair-add --pub-key ~/.ssh/id_rsa.pub demo-key

4. Verify that the public key was added:

nova keypair-list

+----------+-------------------------------------------------+

| Name | Fingerprint |

+----------+-------------------------------------------------+

| demo-key | db:a8:79:91:18:75:83:44:b0:4f:af:1c:e4:23:97:76 |

+----------+-------------------------------------------------+

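Launch an instance

To launch an instance, you must specify at least the flavor, image name, network, security group, key, and instance name.

1. A flavor specifies a virtual resource allocation profile that includes CPU, memory, and storage.

List the available flavors: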

[root@controller ~]$ nova flavor-list

+----+-----------+-----------+------+-----------+------+-------+-------------+-----------+

| ID | Name | Memory_MB | Disk | Ephemeral | Swap | VCPUs | RXTX_Factor | Is_Public |

+----+-----------+-----------+------+-----------+------+-------+-------------+-----------+

| 1 | m1.tiny | 512 | 1 | 0 | | 1 | 1.0 | True |

| 2 | m1.small | 2048 | 20 | 0 | | 1 | 1.0 | True |

| 3 | m1.medium | 4096 | 40 | 0 | | 2 | 1.0 | True |

| 4 | m1.large | 8192 | 80 | 0 | | 4 | 1.0 | True |

| 5 | m1.xlarge | 16384 | 160 | 0 | | 8 | 1.0 | True |

+----+-----------+-----------+------+-----------+------+-------+-------------+-----------+

2. List the available images:

[root@controller ~]$ nova image-list

+--------------------------------------+---------------------+--------+--------+

| ID | Name | Status | Server |

+--------------------------------------+---------------------+--------+--------+

| 105ce1dd-3e20-4d3d-9c02-3419033e0acf | cirros-0.3.3-x86_64 | ACTIVE | |

+--------------------------------------+---------------------+--------+--------+

3. List the available networks:

[root@controller ~]$ neutron net-list

+--------------------------------------+----------+-------------------------------------------------------+

| id | name | subnets |

+--------------------------------------+----------+-------------------------------------------------------+

| c5ecb67f-10c0-4a66-a3d0-b65e8baf7c07 | demo-net | 3e625d44-f8f5-4cd4-8197-73550eff52ff 192.168.2.0/24 |

| e25da901-9f50-4ba1-ae5d-e2ab00b62a34 | ext-net | 7f91305e-ef28-4df8-9eb6-95ff9b867d8f 100.100.100.0/24 |

+--------------------------------------+----------+-------------------------------------------------------+

4. List the available security groups:

[root@controller ~]$ nova secgroup-list

+--------------------------------------+---------+-------------+

| Id | Name | Description |

+--------------------------------------+---------+-------------+

| c192c9a4-d996-4a05-8310-53b14fe1cc8e | default | default |

+--------------------------------------+---------+-------------+

5. Launch the instance, replacing DEMO_NET_ID with the ID of the demo-net network from step 3:

nova boot --flavor m1.tiny --image cirros-0.3.3-x86_64 --nic net-id=DEMO_NET_ID --security-group default --key-name demo-key demo-instance1

For example:

[root@controller ~]$ nova boot --flavor m1.tiny --image cirros-0.3.3-x86_64 --nic net-id=c5ecb67f-10c0-4a66-a3d0-b65e8baf7c07 --security-group default --key-name demo-key demo-instance

+--------------------------------------+------------------------------------------------------------+

| Property | Value |

+--------------------------------------+------------------------------------------------------------+

| OS-DCF:diskConfig | MANUAL |

| OS-EXT-AZ:availability_zone | nova |

| OS-EXT-STS:power_state | 0 |

| OS-EXT-STS:task_state | scheduling |

| OS-EXT-STS:vm_state | building |

| OS-SRV-USG:launched_at | - |

| OS-SRV-USG:terminated_at | - |

| accessIPv4 | |

| accessIPv6 | |

| adminPass | Zf7FGNUnug5E |

| config_drive | |

| created | 2021-04-07T08:24:45Z |

| flavor | m1.tiny (1) |

| hostId | |

| id | 3f8eab7b-1d01-4169-856a-2e4bbb21a457 |

| image | cirros-0.3.3-x86_64 (105ce1dd-3e20-4d3d-9c02-3419033e0acf) |

| key_name | demo-key |

| metadata | {} |

| name | demo-instance |

| os-extended-volumes:volumes_attached | [] |

| progress | 0 |

| security_groups | default |

| status | BUILD |

| tenant_id | 9f5b0b3758164bc6bd2a6dbd2b012d5d |

| updated | 2021-04-07T08:24:45Z |

| user_id | 80e503c2a70d463fab77a10753e7285f |

+--------------------------------------+------------------------------------------------------------+
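Copying the network ID by hand is easy to get wrong. Following the same awk approach this guide uses for service IDs, the sketch below extracts the demo-net ID from the net-list rows shown in step 3. It works on that sample text; on a live controller you would presumably pipe neutron net-list into the same filter and pass the result to --nic net-id=.

```shell
# Data rows copied from the neutron net-list output in step 3.
net_rows='| c5ecb67f-10c0-4a66-a3d0-b65e8baf7c07 | demo-net | 3e625d44-f8f5-4cd4-8197-73550eff52ff 192.168.2.0/24 |
| e25da901-9f50-4ba1-ae5d-e2ab00b62a34 | ext-net | 7f91305e-ef28-4df8-9eb6-95ff9b867d8f 100.100.100.0/24 |'

# Whitespace-split fields: $1="|" $2=id $3="|" $4=name.
DEMO_NET_ID=$(printf '%s\n' "$net_rows" | awk '$4 == "demo-net" {print $2}')

echo "--nic net-id=${DEMO_NET_ID}"
```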

6. Check the status of the instance:

[root@controller ~]$ nova list

+--------------------------------------+----------------+--------+------------+-------------+----------+

| ID | Name | Status | Task State | Power State | Networks |

+--------------------------------------+----------------+--------+------------+-------------+----------+

| 3f8eab7b-1d01-4169-856a-2e4bbb21a457 | demo-instance | BUILD | spawning | NOSTATE | |

+--------------------------------------+----------------+--------+------------+-------------+----------+

To be continued...
