Extracting Oracle data with Flink CDC

First, the Oracle database itself must be configured for CDC. See the following article:

https://blog.csdn.net/weixin_46580067/article/details/124985447?ops_request_misc=%257B%2522request%255Fid%2522%253A%2522169323165516800185874882%2522%252C%2522scm%2522%253A%252220140713.130102334.pc%255Fall.%2522%257D&request_id=169323165516800185874882&biz_id=0&utm_medium=distribute.pc_search_result.none-task-blog-2allfirst_rank_ecpm_v1~rank_v31_ecpm-2-124985447-null-null.142v93insert_down28v1&utm_term=flinkcdc%E6%8A%BD%E5%8F%96oracle%E6%95%B0%E6%8D%AE%E4%BB%A3%E7%A0%81&spm=1018.2226.3001.4187

Here is the code:

```java
package flinkcdc;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;
import org.apache.flink.table.api.TableResult;
import org.apache.flink.table.api.bridge.java.StreamTableEnvironment;

public class FlinkCdcOracle {

    public static void main(String[] args) throws Exception {
        // Create the Flink environment
        StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();
        env.setParallelism(1);
        env.disableOperatorChaining();
        StreamTableEnvironment tenv = StreamTableEnvironment.create(env);

        tenv.executeSql("create table employees_flink(\n" +
                "EMPLOYEE_ID int, \n" +
                "FIRST_NAME string, \n" +
                "LAST_NAME string, \n" +
                "EMAIL string, \n" +
                "PHONE_NUMBER string, \n" +
                "JOB_ID string, \n" +
                "SALARY double, \n" +
                "COMMISSION_PCT double, \n" +
                "MANAGER_ID int, \n" +
                "DEPARTMENT_ID int\n" +
                ") WITH (\n" +
                " 'connector' = 'oracle-cdc', \n" +
                " 'hostname' = 'localhost', \n" +
                " 'port' = '1521', \n" +
                " 'username' = 'test', \n" +
                " 'password' = 'test', \n" +
                " 'database-name' = 'ORCL', \n" +
                " 'schema-name' = 'TEST', \n" + // the schema name must be uppercase
                " 'table-name' = 'employees', \n" +
                " 'debezium.log.mining.continuous.mine' = 'true', \n" +
                " 'debezium.log.mining.strategy' = 'online_catalog', \n" +
                " 'debezium.database.tablename.case.insensitive' = 'false', \n" +
                " 'scan.startup.mode' = 'initial')");

        TableResult tableResult = tenv.executeSql("select * from employees_flink");
        tableResult.print();
    }
}
```
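The DDL above is assembled by string concatenation, which makes it easy to leak a Java `//` comment into the SQL text itself (Flink SQL only recognizes `--` and `/* */` comments). A minimal sketch of a hypothetical helper, `CdcDdlBuilder`, that builds the same `CREATE TABLE ... WITH (...)` string from ordered maps, so that notes like "the schema name must be uppercase" stay on the Java side. The class and method names are illustrative and not part of Flink or Flink CDC.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.StringJoiner;

public class CdcDdlBuilder {

    /** Wraps a value in single quotes, doubling embedded quotes as SQL string literals require. */
    static String quote(String s) {
        return "'" + s.replace("'", "''") + "'";
    }

    /** Builds CREATE TABLE name (col type, ...) WITH ('key' = 'value', ...) from ordered maps. */
    static String buildDdl(String table, Map<String, String> columns, Map<String, String> options) {
        StringJoiner cols = new StringJoiner(",\n  ", "(\n  ", "\n)");
        for (Map.Entry<String, String> c : columns.entrySet()) {
            cols.add(c.getKey() + " " + c.getValue());
        }
        StringJoiner opts = new StringJoiner(",\n  ", " WITH (\n  ", "\n)");
        for (Map.Entry<String, String> o : options.entrySet()) {
            opts.add(quote(o.getKey()) + " = " + quote(o.getValue()));
        }
        return "CREATE TABLE " + table + " " + cols + opts;
    }

    /** The DDL from this post, rebuilt with the helper; comments never reach the SQL text. */
    static String exampleDdl() {
        Map<String, String> columns = new LinkedHashMap<>();
        columns.put("EMPLOYEE_ID", "INT");
        columns.put("FIRST_NAME", "STRING");
        columns.put("LAST_NAME", "STRING");
        columns.put("EMAIL", "STRING");
        columns.put("PHONE_NUMBER", "STRING");
        columns.put("JOB_ID", "STRING");
        columns.put("SALARY", "DOUBLE");
        columns.put("COMMISSION_PCT", "DOUBLE");
        columns.put("MANAGER_ID", "INT");
        columns.put("DEPARTMENT_ID", "INT");

        Map<String, String> options = new LinkedHashMap<>();
        options.put("connector", "oracle-cdc");
        options.put("hostname", "localhost");
        options.put("port", "1521");
        options.put("username", "test");
        options.put("password", "test");
        options.put("database-name", "ORCL");
        options.put("schema-name", "TEST"); // the schema name must be uppercase
        options.put("table-name", "employees");
        options.put("debezium.log.mining.continuous.mine", "true");
        options.put("debezium.log.mining.strategy", "online_catalog");
        options.put("debezium.database.tablename.case.insensitive", "false");
        options.put("scan.startup.mode", "initial");
        return buildDdl("employees_flink", columns, options);
    }

    public static void main(String[] args) {
        System.out.println(exampleDdl());
    }
}
```

In the Flink job, the result would simply be passed to `tenv.executeSql(CdcDdlBuilder.exampleDdl())`. A `LinkedHashMap` is used so the columns and options keep their declaration order.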

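Parameter details

Debezium's developers uniformly define "case-insensitive" as "convert table names to lowercase and database names to uppercase".

For Oracle, however, "case-insensitive" means that table names are converted to uppercase when they are stored in the internal metadata.

So once Debezium reads a "case-insensitive" setting, it follows its own logic, tries to read the lowercased table name, and fails with an error.

Solution: to work with Oracle's case-insensitive identifiers, add the following option to the WITH clause of the CREATE TABLE statement:

```sql
'debezium.database.tablename.case.insensitive'='false',
```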
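The mismatch explained above can be reduced to a toy model: Oracle stores the unquoted name as `TEST.EMPLOYEES`, and a lookup only succeeds if the name is not lowercased first. The sketch below is a hypothetical simulation (a `Set` stands in for Oracle's data dictionary, and `tableId` stands in for Debezium's normalization); it is not Debezium's actual code.

```java
import java.util.Collections;
import java.util.Locale;
import java.util.Set;

public class CaseInsensitiveLookupDemo {

    // Toy stand-in for Oracle's data dictionary: unquoted identifiers are stored in uppercase.
    static final Set<String> ORACLE_DICTIONARY = Collections.singleton("TEST.EMPLOYEES");

    /** Toy normalization: with "case-insensitive" on, the table name is lowercased before use. */
    static String tableId(String schema, String table, boolean caseInsensitive) {
        String t = caseInsensitive ? table.toLowerCase(Locale.ROOT) : table;
        return schema + "." + t;
    }

    /** Exact-match lookup, like comparing against the names stored in Oracle's metadata. */
    static boolean found(String schema, String table, boolean caseInsensitive) {
        return ORACLE_DICTIONARY.contains(tableId(schema, table, caseInsensitive));
    }

    public static void main(String[] args) {
        System.out.println(found("TEST", "EMPLOYEES", true));  // false: looks for TEST.employees
        System.out.println(found("TEST", "EMPLOYEES", false)); // true: name stays TEST.EMPLOYEES
    }
}
```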

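Take Flink CDC reading MySQL data as an example. Reading happens in two phases.

The first is the snapshot phase, in which snapshot data is read in parallel: the table is split into multiple chunks based on its primary key and row count, and the chunks are assigned to different readers.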
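A minimal sketch of the splitting idea, assuming a numeric, densely populated primary key: the key range is cut into chunks of at most `chunkSize` keys, and chunks are handed to readers round-robin. Both simplifications are assumptions for illustration; the real connector's splitter (tuned by options such as `scan.incremental.snapshot.chunk.size`) and its chunk assignment are more involved.

```java
import java.util.ArrayList;
import java.util.List;

public class ChunkSplitDemo {

    /** A snapshot chunk covering primary keys in the closed range [start, end]. */
    static final class Chunk {
        final long start;
        final long end;

        Chunk(long start, long end) {
            this.start = start;
            this.end = end;
        }

        @Override
        public String toString() {
            return "[" + start + ", " + end + "]";
        }
    }

    /** Splits keys minKey..maxKey into consecutive chunks of at most chunkSize keys each. */
    static List<Chunk> split(long minKey, long maxKey, long chunkSize) {
        if (chunkSize <= 0 || minKey > maxKey) {
            throw new IllegalArgumentException("need chunkSize > 0 and minKey <= maxKey");
        }
        List<Chunk> chunks = new ArrayList<>();
        long start = minKey;
        while (true) {
            long end = (maxKey - start < chunkSize) ? maxKey : start + chunkSize - 1;
            chunks.add(new Chunk(start, end));
            if (end == maxKey) {
                break;
            }
            start = end + 1;
        }
        return chunks;
    }

    /** Round-robin assignment: chunk i goes to reader i % readers. */
    static List<List<Chunk>> assign(List<Chunk> chunks, int readers) {
        List<List<Chunk>> perReader = new ArrayList<>();
        for (int r = 0; r < readers; r++) {
            perReader.add(new ArrayList<>());
        }
        for (int i = 0; i < chunks.size(); i++) {
            perReader.get(i % readers).add(chunks.get(i));
        }
        return perReader;
    }

    public static void main(String[] args) {
        List<Chunk> chunks = split(1, 10, 4);
        System.out.println(chunks);            // [[1, 4], [5, 8], [9, 10]]
        System.out.println(assign(chunks, 2)); // [[[1, 4], [9, 10]], [[5, 8]]]
    }
}
```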

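The snapshot phase supports chunk-level checkpoints: after a failure, the job resumes from the last successful checkpoint.

The source moves on to the binlog-reading phase only after all snapshot chunks have been fully read and checkpointed.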
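The two rules just described (resume from the last checkpoint at chunk granularity, and switch to binlog reading only when every chunk is checkpointed) can be sketched as a small state machine. This is a hypothetical, single-process simulation with illustrative names; in Flink the "checkpointed" set would live in operator state.

```java
import java.util.HashSet;
import java.util.Set;
import java.util.TreeSet;

public class SnapshotProgressDemo {
    private final int totalChunks;                       // chunk ids are 0..totalChunks-1
    private final Set<Integer> finished = new HashSet<>(); // progress held in memory
    private Set<Integer> checkpointed = new HashSet<>();   // progress saved by the last completed checkpoint

    SnapshotProgressDemo(int totalChunks) {
        this.totalChunks = totalChunks;
    }

    void finishChunk(int chunkId) {
        finished.add(chunkId);
    }

    /** A completed checkpoint records which chunks are done. */
    void checkpoint() {
        checkpointed = new HashSet<>(finished);
    }

    /** After a failure, in-memory progress is replaced by the last checkpoint. */
    void failAndRestore() {
        finished.clear();
        finished.addAll(checkpointed);
    }

    /** Chunks that still have to be (re)read. */
    Set<Integer> pendingChunks() {
        Set<Integer> pending = new TreeSet<>();
        for (int id = 0; id < totalChunks; id++) {
            if (!finished.contains(id)) {
                pending.add(id);
            }
        }
        return pending;
    }

    /** Binlog reading may only start once every chunk is covered by a completed checkpoint. */
    boolean canStartBinlogPhase() {
        return checkpointed.size() == totalChunks;
    }

    public static void main(String[] args) {
        SnapshotProgressDemo p = new SnapshotProgressDemo(3);
        p.finishChunk(0);
        p.finishChunk(1);
        p.checkpoint();                              // chunks 0 and 1 are now durable
        p.finishChunk(2);
        System.out.println(p.canStartBinlogPhase()); // false: chunk 2 not checkpointed yet
        p.failAndRestore();
        System.out.println(p.pendingChunks());       // [2]: only chunk 2 is re-read
        p.finishChunk(2);
        p.checkpoint();
        System.out.println(p.canStartBinlogPhase()); // true
    }
}
```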

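The binlog phase starts only one task (that is, a parallelism of 1) to guarantee the ordering between snapshot data and binlog data.

The binlog reader records the binlog position in Flink state, so the binlog phase supports row-level checkpoints.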
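A minimal sketch of why storing the position in state gives row-level recovery: after a failure, reading resumes right after the checkpointed position instead of from the beginning. The sketch assumes a hypothetical `Event` type and simplifies a real binlog position (file name plus offset) to a single increasing `long`.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

public class BinlogOffsetDemo {

    /** A hypothetical change event; the position is simplified to one increasing long (> 0). */
    static final class Event {
        final long pos;
        final String row;

        Event(long pos, String row) {
            this.pos = pos;
            this.row = row;
        }
    }

    private long currentPos = 0;      // position of the last event read (in memory)
    private long checkpointedPos = 0; // position saved in (simulated) Flink state

    /** Reads at most maxEvents events after currentPos and returns their rows. */
    List<String> read(List<Event> log, int maxEvents) {
        List<String> out = new ArrayList<>();
        for (Event e : log) {
            if (out.size() == maxEvents) {
                break;
            }
            if (e.pos > currentPos) {
                out.add(e.row);
                currentPos = e.pos;
            }
        }
        return out;
    }

    void checkpoint() {
        checkpointedPos = currentPos;
    }

    void failAndRestore() {
        currentPos = checkpointedPos;
    }

    public static void main(String[] args) {
        List<Event> log = Arrays.asList(
                new Event(1, "a"), new Event(2, "b"), new Event(3, "c"),
                new Event(4, "d"), new Event(5, "e"));
        BinlogOffsetDemo reader = new BinlogOffsetDemo();
        System.out.println(reader.read(log, 2));  // [a, b]
        reader.checkpoint();                       // state now holds position 2
        System.out.println(reader.read(log, 2));  // [c, d]
        reader.failAndRestore();                   // back to position 2, not to the beginning
        System.out.println(reader.read(log, 10)); // [c, d, e]
    }
}
```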

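If a table has no primary key, incremental snapshot reading fails. In that case, change the configuration and set `scan.incremental.snapshot.enabled` to `false` to fall back to the legacy snapshot mechanism:

```sql
'scan.incremental.snapshot.enabled'='false'
```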

Version 2 (with checkpointing enabled)

```java
package flinkcdc;

import org.apache.flink.streaming.api.CheckpointingMode;
import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;
import org.apache.flink.table.api.TableResult;
import org.apache.flink.table.api.bridge.java.StreamTableEnvironment;

public class FlinkCdcOracle {

    public static void main(String[] args) throws Exception {
        // Create the Flink environment
        StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();
        env.setParallelism(1);
        StreamTableEnvironment tenv = StreamTableEnvironment.create(env);

        // Enable checkpointing, triggering a checkpoint every 5000 ms
        env.enableCheckpointing(5000);
        // Where checkpoints are stored
        env.getCheckpointConfig().setCheckpointStorage("hdfs://hdfs-cluster/flink/flinkcheckpoint");
        // Checkpointing mode
        env.getCheckpointConfig().setCheckpointingMode(CheckpointingMode.EXACTLY_ONCE);
        // Minimum pause of 1000 ms between the end of one checkpoint and the start of the next
        env.getCheckpointConfig().setMinPauseBetweenCheckpoints(1000);
        // Checkpoint timeout: 60000 ms
        env.getCheckpointConfig().setCheckpointTimeout(60000);

        tenv.executeSql("create table employees_flink(\n" +
                "EMPLOYEE_ID int, \n" +
                "FIRST_NAME string, \n" +
                "LAST_NAME string, \n" +
                "EMAIL string, \n" +
                "PHONE_NUMBER string, \n" +
                "HIRE_DATE string, \n" +
                "JOB_ID string, \n" +
                "SALARY double, \n" +
                "COMMISSION_PCT double, \n" +
                "MANAGER_ID int, \n" +
                "DEPARTMENT_ID int\n" +
                ") WITH (\n" +
                " 'connector' = 'oracle-cdc', \n" +
                " 'hostname' = 'localhost', \n" +
                " 'port' = '1521', \n" +
                " 'username' = 'test', \n" +
                " 'password' = 'test', \n" +
                " 'database-name' = 'ORCL', \n" +
                " 'schema-name' = 'TEST', \n" + // the schema name must be uppercase
                " 'table-name' = 'employees', \n" +
                " 'debezium.log.mining.continuous.mine' = 'true', \n" +
                " 'debezium.log.mining.strategy' = 'online_catalog', \n" +
                " 'debezium.database.tablename.case.insensitive' = 'false', \n" +
                " 'scan.startup.mode' = 'initial')");

        TableResult tableResult = tenv.executeSql("select * from employees_flink");
        tableResult.print();
    }
}
```
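To see how the three timing settings above interact, here is a simplified model under stated assumptions: the next checkpoint is due at the regular 5000 ms interval, but never earlier than 1000 ms after the previous one finished, and a checkpoint still running after 60000 ms is aborted. Flink's real scheduler (concurrent checkpoints, barrier alignment, and so on) is more involved; the class and method names are hypothetical.

```java
public class CheckpointTimingDemo {

    // Values taken from the configuration above
    static final long INTERVAL_MS = 5000;  // enableCheckpointing(5000)
    static final long MIN_PAUSE_MS = 1000; // setMinPauseBetweenCheckpoints(1000)
    static final long TIMEOUT_MS = 60000;  // setCheckpointTimeout(60000)

    /** Earliest start of the next checkpoint: regular interval, but respecting the minimum pause. */
    static long nextStart(long lastStart, long lastEnd) {
        return Math.max(lastStart + INTERVAL_MS, lastEnd + MIN_PAUSE_MS);
    }

    /** A checkpoint that has run longer than TIMEOUT_MS is treated as failed. */
    static boolean timedOut(long start, long now) {
        return now - start > TIMEOUT_MS;
    }

    public static void main(String[] args) {
        System.out.println(nextStart(0, 2000)); // 5000: a fast checkpoint keeps the 5 s rhythm
        System.out.println(nextStart(0, 4500)); // 5500: a slow one pushes the next start back
        System.out.println(timedOut(0, 30000)); // false
        System.out.println(timedOut(0, 61000)); // true
    }
}
```

The point of the minimum pause is that a slow checkpoint does not cause the next one to start immediately, which leaves the job some time to process records between checkpoints.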

Note: running the program at this point fails with the following error:

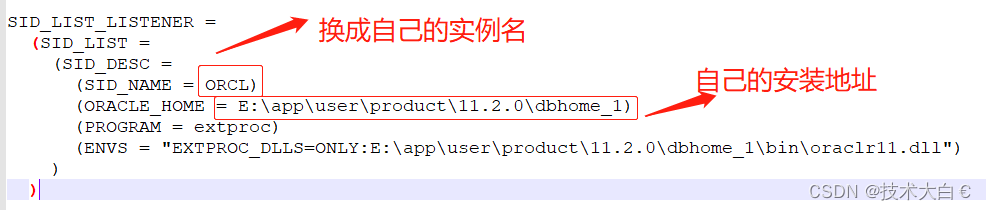

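"The listener does not currently know of the service requested in the connect descriptor" (ORA-12514). This points to a listener problem, so let's check the listener configuration.

My Oracle instance is installed locally; the listener configuration file is E:\app\user\product\11.2.0\dbhome_1\NETWORK\ADMIN\listener.ora

Modify it as follows: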

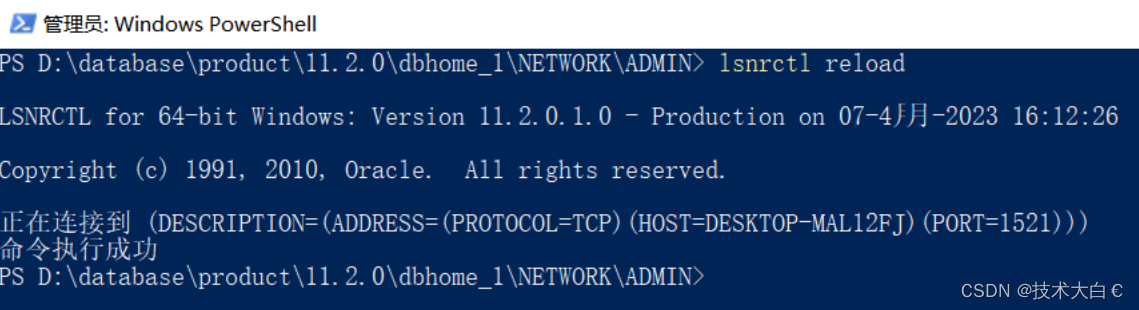

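Then, in the E:\app\user\product\11.2.0\dbhome_1\NETWORK\ADMIN directory, hold Shift and right-click to open a PowerShell window there, and run: `lsnrctl reload`

Then restart the Oracle service (in my own testing I could not confirm whether the restart is actually necessary) and rerun the Java demo.

Reference for fixing this error: https://blog.csdn.net/theweather/article/details/130014045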

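The CDC setup above handles most data types, but DATE columns still fail. The rest of this post focuses on the DATE type.

The error is:

Caused by: java.time.DateTimeException: Invalid value for Year (valid values -999999999 - 999999999)

I did not find out how to fix this properly. I declared the column as string instead of date, and with that the job ran without errors: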
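One plausible explanation, offered as an assumption rather than something verified against the connector source: an Oracle DATE also carries a time of day, so Debezium emits it as milliseconds since the epoch, while a Flink `date` column expects a number of days since the epoch. Reading a millisecond count as a day count pushes the year far out of range. On Java 8, `LocalDate.ofEpochDay` reports this as an `Invalid value for Year` error like the one above; newer JDKs fail an earlier range check with a different message, but still throw `DateTimeException`. The pure-JDK sketch below reproduces the mismatch with a hypothetical value.

```java
import java.time.DateTimeException;
import java.time.Instant;
import java.time.LocalDate;
import java.time.LocalDateTime;
import java.time.ZoneOffset;

public class DateConversionDemo {

    // Hypothetical DATE value 2023-08-29 00:00:00, encoded as milliseconds since 1970-01-01 UTC
    static final long VALUE = 1693267200000L;

    /** Mismatched reading: treats the number as days since the epoch. */
    static LocalDate asEpochDays(long value) {
        return LocalDate.ofEpochDay(value);
    }

    /** Matching reading: treats the number as milliseconds since the epoch (UTC). */
    static LocalDateTime asEpochMillis(long value) {
        return Instant.ofEpochMilli(value).atOffset(ZoneOffset.UTC).toLocalDateTime();
    }

    public static void main(String[] args) {
        try {
            asEpochDays(VALUE);
            System.out.println("unexpected: no error");
        } catch (DateTimeException e) {
            // The message text depends on the JDK version
            System.out.println("as days -> " + e.getMessage());
        }
        System.out.println("as millis -> " + asEpochMillis(VALUE)); // as millis -> 2023-08-29T00:00
    }
}
```

If this assumption holds, declaring the column as `timestamp(3)` instead of `string` might also work and keep a temporal type; I have not tested that here.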

```java
package flinkcdc;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;
import org.apache.flink.table.api.TableResult;
import org.apache.flink.table.api.bridge.java.StreamTableEnvironment;

public class FlinkCdcOracle {

    public static void main(String[] args) throws Exception {
        // Create the Flink environment
        StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();
        env.setParallelism(1);
        env.disableOperatorChaining();
        StreamTableEnvironment tenv = StreamTableEnvironment.create(env);

        tenv.executeSql("create table employees_flink(\n" +
                "EMPLOYEE_ID int, \n" +
                "FIRST_NAME string, \n" +
                "LAST_NAME string, \n" +
                "EMAIL string, \n" +
                "PHONE_NUMBER string, \n" +
                "HIRE_DATE string, \n" + // originally date; declaring it as date caused the error above, so string is used
                "JOB_ID string, \n" +
                "SALARY double, \n" +
                "COMMISSION_PCT double, \n" +
                "MANAGER_ID int, \n" +
                "DEPARTMENT_ID int\n" +
                ") WITH (\n" +
                " 'connector' = 'oracle-cdc', \n" +
                " 'hostname' = 'localhost', \n" +
                " 'port' = '1521', \n" +
                " 'username' = 'test', \n" +
                " 'password' = 'test', \n" +
                " 'database-name' = 'ORCL', \n" +
                " 'schema-name' = 'TEST', \n" + // the schema name must be uppercase
                " 'table-name' = 'employees', \n" +
                " 'debezium.log.mining.continuous.mine' = 'true', \n" +
                " 'debezium.log.mining.strategy' = 'online_catalog', \n" +
                " 'debezium.database.tablename.case.insensitive' = 'false', \n" +
                " 'scan.startup.mode' = 'initial')");

        TableResult tableResult = tenv.executeSql("select * from employees_flink");
        tableResult.print();
    }
}
```
