HW2-Classification

Reference Code:https://colab.research.google.com/drive/1u20e6ifVg6hUdIoe8jMnkZ4sX4juMuJS?usp=drive_open#scrollTo=84HU5GGjPqR0

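Assignment link: https://speech.ee.ntu.edu.tw/~hylee/ml/ml2022-course-data/hw2_slides%202022.pdf

Assignment code

The code below is the Colab `.ipynb` notebook converted to a `.py` file.

This assignment is a classification task; the loss for classification is usually CrossEntropyLoss (cross-entropy).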
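As a small illustration of what CrossEntropyLoss computes, the sketch below compares it with an explicit log-softmax followed by negative log-likelihood. The tensors are random stand-ins (4 samples, 39 classes to match the model's output size), not data from the assignment.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(0)
logits = torch.randn(4, 39)              # raw, unnormalized scores: 4 samples x 39 classes
targets = torch.tensor([0, 5, 12, 38])   # class indices (int64), one per sample

# CrossEntropyLoss takes raw logits; no softmax layer is needed in the model
loss_ce = nn.CrossEntropyLoss()(logits, targets)

# The same value computed in two explicit steps
loss_manual = F.nll_loss(F.log_softmax(logits, dim=1), targets)
```

This is why the model's last layer can be a plain `nn.Linear` without a softmax after it.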

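The code is generally written in the following steps:

- Data processing: load the train data & test data with numpy's `np.load`, split the train data into a train set & validation set, and define a dataset class that wraps the data and labels so they can later be passed to `DataLoader(dataset, batch_size, shuffle)`.
- Model construction: stack a suitable number of layers, with an activation function between layers.
- Training: set the training parameters (random seed | compute device, cpu or gpu | loss function | optimizer), train on the training set (zero the gradients | predict | compute the loss | backpropagate | update the parameters), evaluate on the validation set (remember to switch the model to the corresponding mode), and save the best model parameters.
- Prediction: load the model, set the model mode, and predict.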

"""2.Classifier.ipynb

Automatically generated by Colab.

Original file is located at

https://colab.research.google.com/drive/1wN4kqDFsaZpZYc3ORVsBALvRwI8vRlOR

"""

!gdown --id '1HPkcmQmFGu-3OknddKIa5dNDsR05lIQR' --output data.zip

!unzip data.zip

!ls

"""## Preparing Data

Loading the Training and Testing data from `.npy` file (Numpy array)

"""

import numpy as np

print('Loading Data')

data_root = './timit_11/'

train = np.load(data_root + 'train_11.npy')

train_label = np.load(data_root + 'train_label_11.npy')

test = np.load(data_root + 'test_11.npy')

print(f"Size of training data:{train.shape}")

print(f"Size of testing data:{test.shape}")

"""## Create Dataset

Set up the dataset class

"""

import torch

from torch.utils.data import Dataset

class TIMITDataset(Dataset):
    def __init__(self, X, y=None):
        self.data = torch.from_numpy(X).float()
        if y is None:
            self.label = None
        else:
            y = y.astype(np.int32) # convert the labels to np.int32
            self.label = torch.LongTensor(y)

    def __getitem__(self, idx):
        if self.label is not None:
            return self.data[idx], self.label[idx]
        else:
            return self.data[idx]

    def __len__(self):
        return len(self.data)

"""Split the train data into a training dataset and a validation dataset. You can modify VAL_RATIO to change the ratio of validation data."""

VAL_RATIO = 0.2

percent = int((1 - VAL_RATIO) * train.shape[0])

train_x, train_y, val_x, val_y = train[:percent], train_label[:percent], train[percent:], train_label[percent:]

print(f"Size of train dataset: {train_x.shape}")

print(f"Size of validation dataset: {val_x.shape}")
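The split above is sequential: the first `1 - VAL_RATIO` of the rows go to training and the rest to validation, with no shuffling. A minimal sketch of the same slicing on a toy array follows; `split_train_val` is a hypothetical helper name, not part of the original code.

```python
import numpy as np

def split_train_val(X, y, val_ratio=0.2):
    """Sequential split: first rows for training, remaining rows for validation."""
    n_train = int((1 - val_ratio) * X.shape[0])
    return X[:n_train], y[:n_train], X[n_train:], y[n_train:]

# Toy stand-in data: 10 samples with 2 features each
X = np.arange(20).reshape(10, 2)
y = np.arange(10)
tr_x, tr_y, va_x, va_y = split_train_val(X, y, val_ratio=0.2)
```

With 10 samples and a ratio of 0.2, this gives 8 training rows and 2 validation rows.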

from torch.utils.data import DataLoader

train_set = TIMITDataset(train_x, train_y)

val_set = TIMITDataset(val_x, val_y)

BATCH_SIZE = 64

train_loader = DataLoader(train_set, batch_size = BATCH_SIZE, shuffle = True) # only the training set needs to be shuffled

val_loader = DataLoader(val_set, batch_size = BATCH_SIZE, shuffle = False)

print(len(val_loader))

print(len(val_set))
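The two prints above report different quantities: `len(val_loader)` is the number of batches, while `len(val_set)` is the number of samples. The sketch below shows the relationship on a toy dataset; the size 130 is arbitrary and chosen only for illustration.

```python
import math
import torch
from torch.utils.data import DataLoader, TensorDataset

# Toy dataset: 130 samples with 429 features each, all-zero labels
dataset = TensorDataset(torch.zeros(130, 429), torch.zeros(130, dtype=torch.long))
loader = DataLoader(dataset, batch_size=64, shuffle=False)

num_samples = len(dataset)   # number of samples
num_batches = len(loader)    # number of batches = ceil(num_samples / batch_size)
expected_batches = math.ceil(num_samples / 64)
```

Accuracy should therefore be averaged over `len(dataset)`, while a per-batch mean loss is averaged over `len(loader)`.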

"""## Create Model

Define model architecture.

"""

import torch

import torch.nn as nn

class Classifier(nn.Module):
    def __init__(self):
        super(Classifier, self).__init__()
        self.layers = nn.Sequential(
            nn.Linear(429, 1024),
            nn.Sigmoid(),
            nn.Linear(1024, 512),
            nn.Sigmoid(),
            nn.Linear(512, 128),
            nn.Sigmoid(),
            nn.Linear(128, 39)
        )

    def forward(self, x):
        x = self.layers(x)
        return x
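A quick way to sanity-check an architecture like this is to push a dummy batch through it and inspect the output shape. The sketch below rebuilds the same layer stack on its own so that it runs standalone; the batch size of 8 and the random input are arbitrary.

```python
import torch
import torch.nn as nn

# Same layer sizes as the Classifier above, rebuilt so this snippet is self-contained
layers = nn.Sequential(
    nn.Linear(429, 1024), nn.Sigmoid(),
    nn.Linear(1024, 512), nn.Sigmoid(),
    nn.Linear(512, 128), nn.Sigmoid(),
    nn.Linear(128, 39),
)

dummy = torch.randn(8, 429)      # a fake batch: 8 samples, 429 features
with torch.no_grad():
    logits = layers(dummy)       # one row of 39 class scores per sample

# Total number of trainable parameters (weights + biases)
num_params = sum(p.numel() for p in layers.parameters())
```

The output has one score per class for each sample, which is the shape `nn.CrossEntropyLoss` expects.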

"""Training"""

def get_device():
    return 'cuda' if torch.cuda.is_available() else 'cpu'

"""Fix the random seed for reproducibility."""

def same_seed(seed):
    torch.manual_seed(seed)
    if torch.cuda.is_available():
        torch.cuda.manual_seed(seed)
        torch.cuda.manual_seed_all(seed)
    np.random.seed(seed)
    torch.backends.cudnn.benchmark = False
    torch.backends.cudnn.deterministic = True # use deterministic cuDNN algorithms for reproducibility

# fix random seed for reproducibility.

same_seed(0)

# get the device

device = get_device()

print(f"device is {device}")
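To see what fixing the seed buys, the sketch below reseeds the PyTorch generator and draws the same random tensor twice. It only demonstrates CPU random number generation; it makes no claim about full determinism of GPU training.

```python
import torch

torch.manual_seed(0)
a = torch.rand(3)    # first draw after seeding

torch.manual_seed(0)
b = torch.rand(3)    # reseeding restarts the sequence, so this repeats the first draw

c = torch.rand(3)    # a further draw without reseeding continues the sequence
```

Because weight initialization and `DataLoader` shuffling draw from these generators, seeding before creating the model makes runs easier to compare.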

# training parameters

num_epoch = 20

learning_rate = 0.0001

# the save path of model

model_path = './model.ckpt'

# create model, loss function and optimizer

model = Classifier().to(device)

criterion = nn.CrossEntropyLoss()

optimizer = torch.optim.Adam(model.parameters(), lr=learning_rate)

# start training

best_acc = 0.0

for epoch in range(num_epoch):
    train_acc, val_acc = 0.0, 0.0
    train_loss, val_loss = 0.0, 0.0

    # training
    model.train() # set the model to training mode
    for i, data in enumerate(train_loader): # zero the gradients, predict, compute the loss, backpropagate, update the parameters
        inputs, labels = data
        inputs, labels = inputs.to(device), labels.to(device)
        optimizer.zero_grad()
        outputs = model(inputs)
        batch_loss = criterion(outputs, labels)
        _, train_pred = torch.max(outputs, 1) # reduce over dim 1 (the classes): returns each row's max value and its index
        # if epoch == 0 and i == 0:
        #     print(train_pred)
        #     print('``````````````````')
        #     print(f'{outputs.shape}')
        #     print(outputs)
        batch_loss.backward()
        optimizer.step()

        train_loss += batch_loss.item()
        train_acc += (train_pred.cpu() == labels.cpu()).sum().item() # compare on the CPU; both tensors must be on the same device

    # validation
    if len(val_set) > 0:
        model.eval() # set the model to evaluation mode
        with torch.no_grad():
            for i, data in enumerate(val_loader): # predict, compute the loss
                inputs, labels = data
                inputs, labels = inputs.to(device), labels.to(device)
                outputs = model(inputs)
                batch_loss = criterion(outputs, labels)
                _, val_pred = torch.max(outputs, 1)

                val_loss += batch_loss.item()
                val_acc += (val_pred.cpu() == labels.cpu()).sum().item()

        print(f"{epoch+1}/{num_epoch} Train Acc:{train_acc/len(train_set)} Loss:{train_loss/len(train_loader)} Val Acc:{val_acc/len(val_set)} loss:{val_loss/len(val_loader)}")

        # if the model improves, then save the checkpoint at this epoch
        if val_acc > best_acc:
            best_acc = val_acc
            torch.save(model.state_dict(), model_path)
            # val_acc counts correct samples, so divide by the number of samples, not the number of batches
            print(f"saving model with acc{best_acc / len(val_set)}")
    else: # len(val_set)==0
        print(f"{epoch+1}/{num_epoch} Train Acc:{train_acc/len(train_set)} Loss:{train_loss/len(train_loader)}")

# if there is no validation set, save the model from the last epoch
if len(val_set) == 0:
    torch.save(model.state_dict(), model_path)
    print('saving the model at the last epoch')
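The accuracy bookkeeping in the loop relies on `torch.max(outputs, 1)`, which reduces over dimension 1 (the class scores) and returns a `(values, indices)` pair. A minimal sketch on hand-written scores, with made-up numbers, shows how the predicted classes and the correct count come out.

```python
import torch

# Two samples, three classes (made-up scores)
outputs = torch.tensor([[0.1, 2.0, -1.0],
                        [3.0, 0.5, 0.2]])
labels = torch.tensor([1, 2])

# For each row: the largest score and the column (class) where it occurs
values, pred = torch.max(outputs, 1)

# Number of samples whose predicted class matches the label
num_correct = (pred == labels).sum().item()
```

Here the first sample is classified correctly and the second is not, so `num_correct` is 1. `outputs.argmax(dim=1)` gives the same indices when the max values are not needed.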

"""## Testing

Create a testing dataset and load model from the saved checkpoint

"""

# create testing dataset

test_set = TIMITDataset(test, None)

test_loader = DataLoader(test_set, batch_size=BATCH_SIZE, shuffle=False)

# create testing model

model = Classifier().to(device)

model.load_state_dict(torch.load(model_path))

"""## Make prediction."""

predict = []

model.eval()

with torch.no_grad():
    for i, data in enumerate(test_loader):
        inputs = data
        inputs = inputs.to(device)
        outputs = model(inputs)
        _, test_pred = torch.max(outputs, 1)
        for y in test_pred.cpu().numpy():
            predict.append(y)

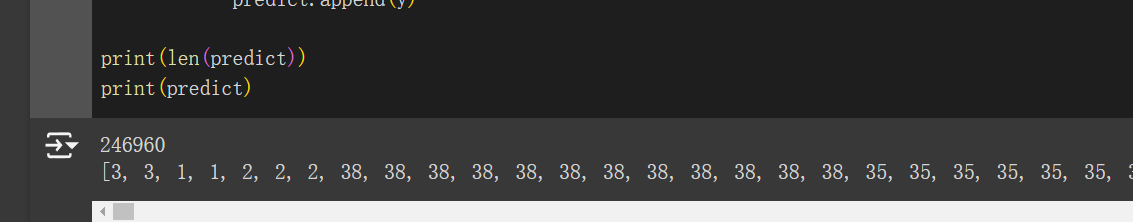

print(len(predict))

print(predict)
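Printing a long prediction list is hard to inspect, so one option is to write it to a CSV file instead. The sketch below is an assumption rather than part of the original post: the helper names, the `prediction.csv` file name and the `Id,Class` header are all hypothetical and should be adjusted to whatever format the submission actually requires.

```python
import csv

def format_predictions(predict):
    """Build CSV rows: a header row, then one (index, class) row per prediction."""
    rows = [["Id", "Class"]]                 # hypothetical header
    for i, y in enumerate(predict):
        rows.append([i, int(y)])             # int() also converts numpy integer types
    return rows

def save_predictions(predict, path="prediction.csv"):  # hypothetical file name
    """Write the formatted rows to a CSV file."""
    with open(path, "w", newline="") as f:
        csv.writer(f).writerows(format_predictions(predict))

# Example with made-up predictions
example_rows = format_predictions([3, 1, 38])
```

Calling `save_predictions(predict)` after the prediction loop would write one row per test sample.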

Free memory

import gc

del train, train_label, train_x, train_y, val_x, val_y

gc.collect()

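Training results

Note: the log below was produced with the checkpoint message dividing the number of correct validation predictions by the number of validation batches (`len(val_loader)`) rather than by the number of samples, so each `saving model with acc` value is roughly BATCH_SIZE (64) times the `Val Acc` on the line before it.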

1/20 Train Acc:0.553800263226095 Loss:1.472851456878631 Val Acc:0.6044425111896157 loss:1.303201699038264

saving model with acc38.67976066597294

2/20 Train Acc:0.6241182179898267 Loss:1.2256297460726606 Val Acc:0.6520303918499758 loss:1.131981437868835

saving model with acc41.72502601456816

3/20 Train Acc:0.6606883514830605 Loss:1.0953975728663004 Val Acc:0.6684093061828471 loss:1.0660503320047063

saving model with acc42.77315296566077

4/20 Train Acc:0.6828481266737471 Loss:1.013523457833422 Val Acc:0.6831336615349591 loss:1.0137166906531967

saving model with acc43.715400624349634

5/20 Train Acc:0.6985705501831911 Loss:0.9553361429160203 Val Acc:0.6878656189148207 loss:0.9889491811611774

saving model with acc44.018210197710715

6/20 Train Acc:0.7113791929426949 Loss:0.9101641085244776 Val Acc:0.690943017313923 loss:0.9730646285605418

saving model with acc44.215140478668054

7/20 Train Acc:0.7214061761582202 Loss:0.8737438883432528 Val Acc:0.6944025497282377 loss:0.9590833547266353

saving model with acc44.43652445369407

8/20 Train Acc:0.7301109309971594 Loss:0.8429551047949287 Val Acc:0.6973661209738726 loss:0.9509904165495415

saving model with acc44.626170655567115

9/20 Train Acc:0.7378349399610751 Loss:0.8157875570398037 Val Acc:0.6975002744047449 loss:0.9488426514442828

saving model with acc44.634755463059314

10/20 Train Acc:0.7446147904608489 Loss:0.7921370646100703 Val Acc:0.7000776463796867 loss:0.9399689289622016

saving model with acc44.799687825182104

11/20 Train Acc:0.7509840489051726 Loss:0.7703462002044771 Val Acc:0.6970815530902039 loss:0.9535724747941503

12/20 Train Acc:0.7563217456260258 Loss:0.7505239899856289 Val Acc:0.7014029196664865 loss:0.9416483208250243

saving model with acc44.884495317377734

13/20 Train Acc:0.7620974749604906 Loss:0.7322820587526492 Val Acc:0.7012321789362852 loss:0.9396959225124797

14/20 Train Acc:0.7670286448937694 Loss:0.7152666749624702 Val Acc:0.7021753182078728 loss:0.9379927765938544

saving model with acc44.93392299687825

15/20 Train Acc:0.7720319733318427 Loss:0.6993599714283052 Val Acc:0.7008175228772252 loss:0.9452622350107619

16/20 Train Acc:0.7762984719674372 Loss:0.684039143942236 Val Acc:0.7016061824405355 loss:0.9465395380955085

17/20 Train Acc:0.7805893622102862 Loss:0.6695845226272334 Val Acc:0.6987970909031778 loss:0.9622827009719473

18/20 Train Acc:0.7847369517605151 Loss:0.655958962944465 Val Acc:0.7029273904718542 loss:0.9497037854270465

saving model with acc44.982049947970864

19/20 Train Acc:0.7890339399051777 Loss:0.6425860358292494 Val Acc:0.7001955387886352 loss:0.9658824376234713

20/20 Train Acc:0.7926540609485286 Loss:0.6300522697679396 Val Acc:0.7004719761613418 loss:0.9665208166129848

Prediction results
