AUTOWARE

第一章 Autoware概述

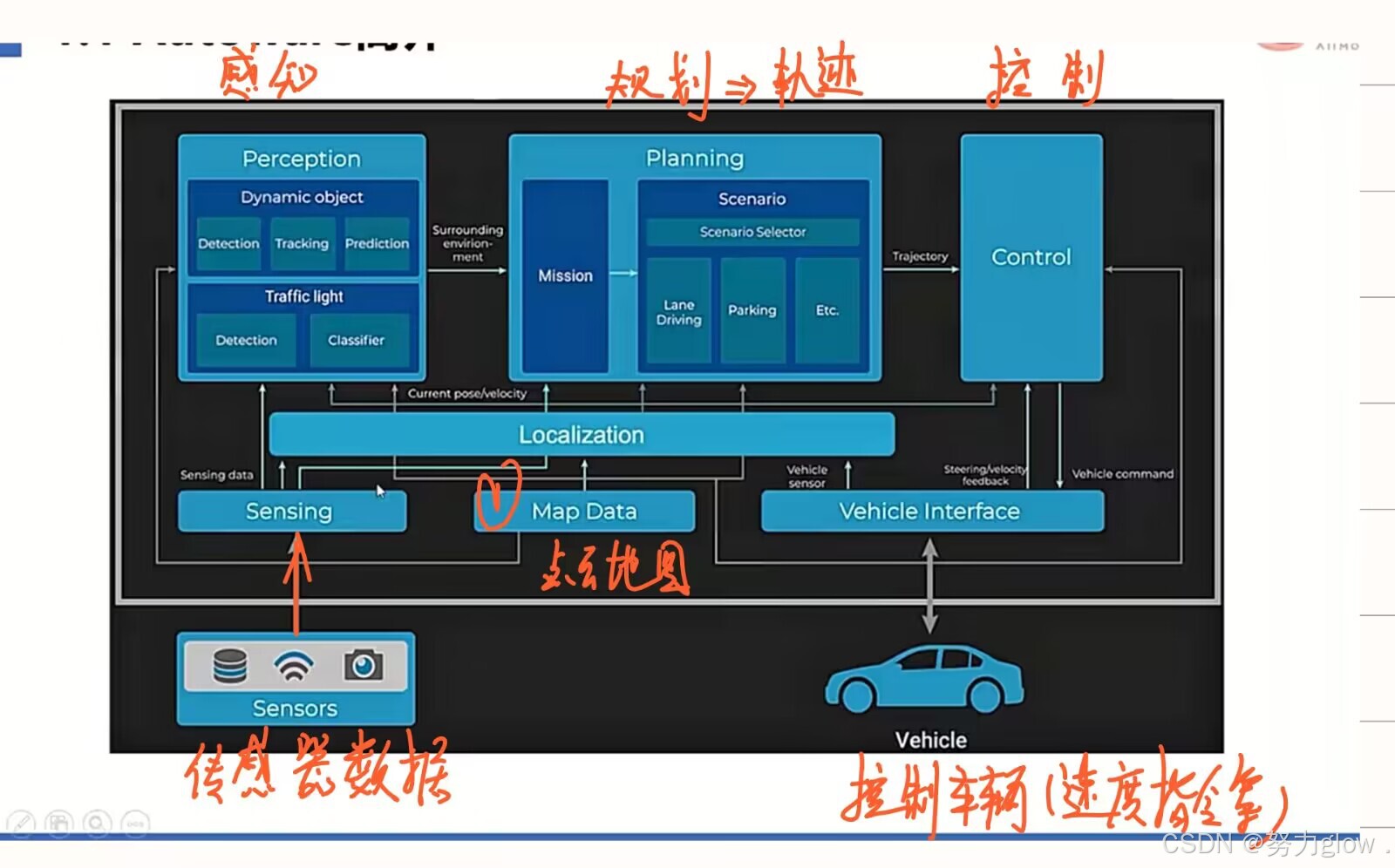

1. 框架

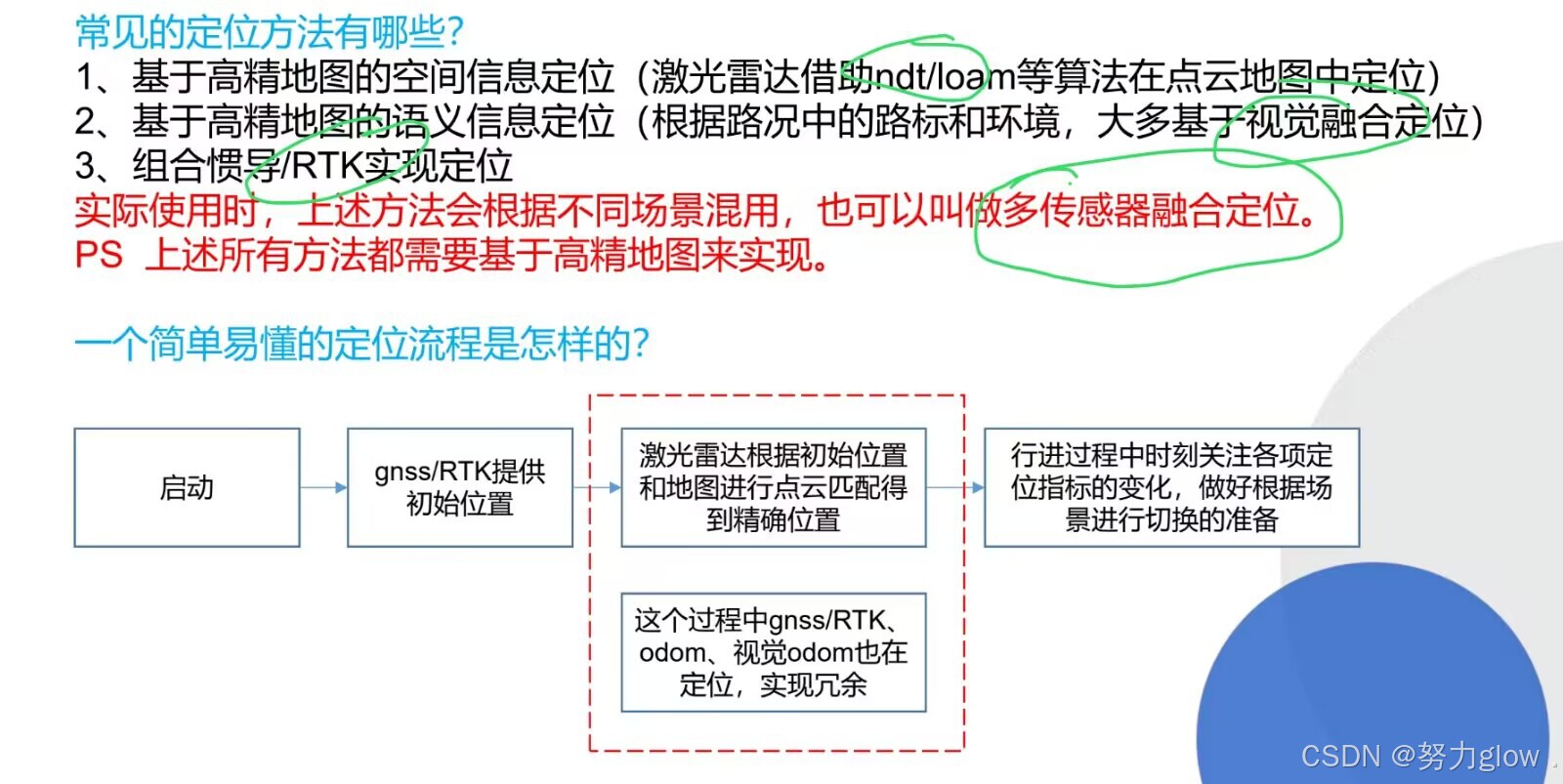

- 定位(Localization ):通过结合GNSS和IMU传感器的3D地图和SLAM算法来实现定位。

- 检测(Detection ):使用具有传感器融合算法和深度神经网络的摄像机以及LiDAR。

- 预测和规划(Prediction and Planning ):基于概率机器人技术和基于规则的系统,部分还使用深度神经网络。

- 控制(Control):Autoware向车辆输出的是速度和角速度的扭曲量。尽管控制量的主要部分通常位于车辆的线控控制器中,但这些是Control的一部分。

**注:**Autoware主要采用了离线的建图方式进行建图,即利用录制好了数据包进行建图

2. demo

本来是个视频,这里就不放了,反正就一个实现效果。

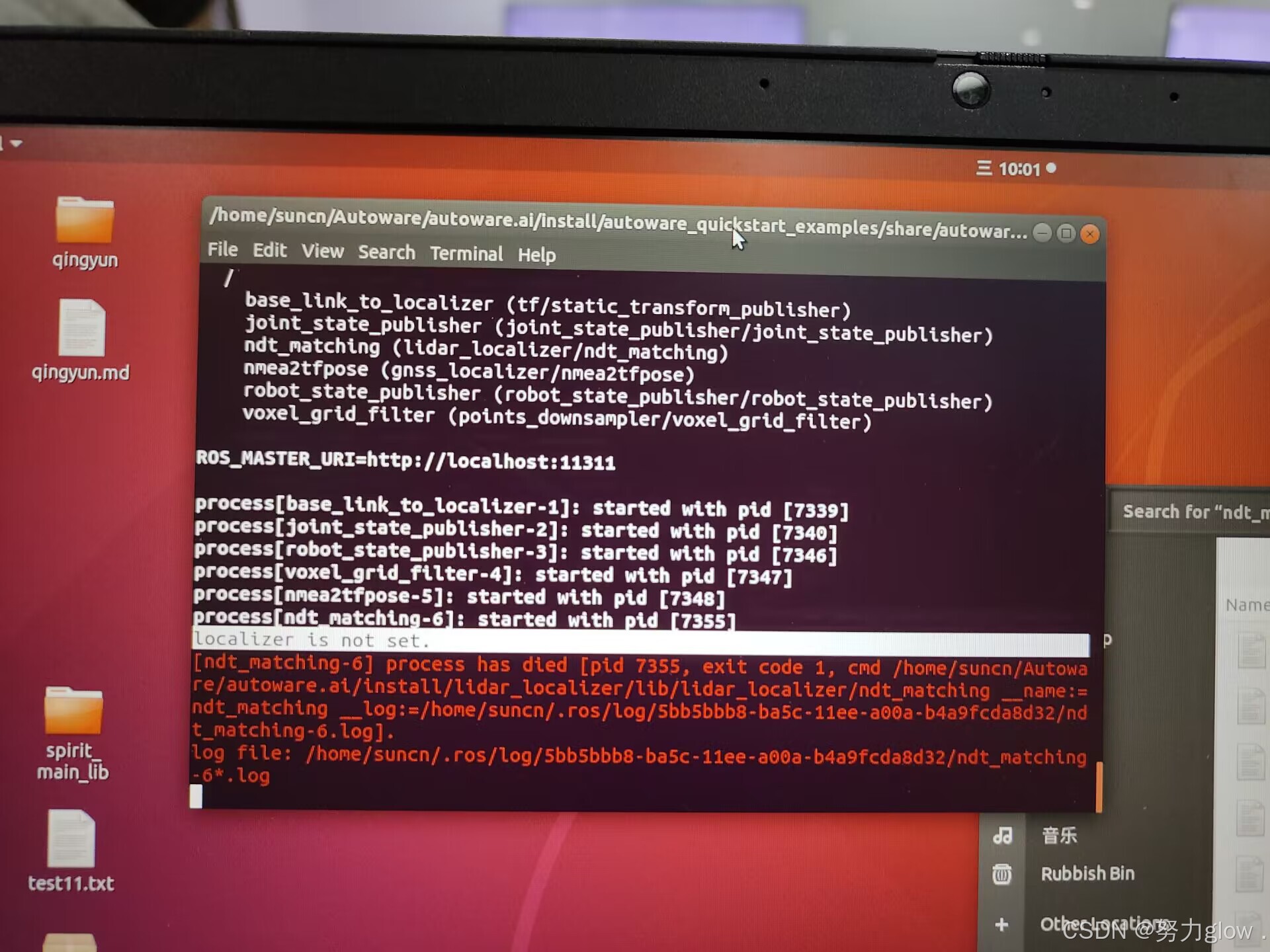

这种错误需要自己定位错误的代码片段修改,一般可以直接注释,不影响运行。

第二章 建图模块

1. 高精地图与经典建图算法

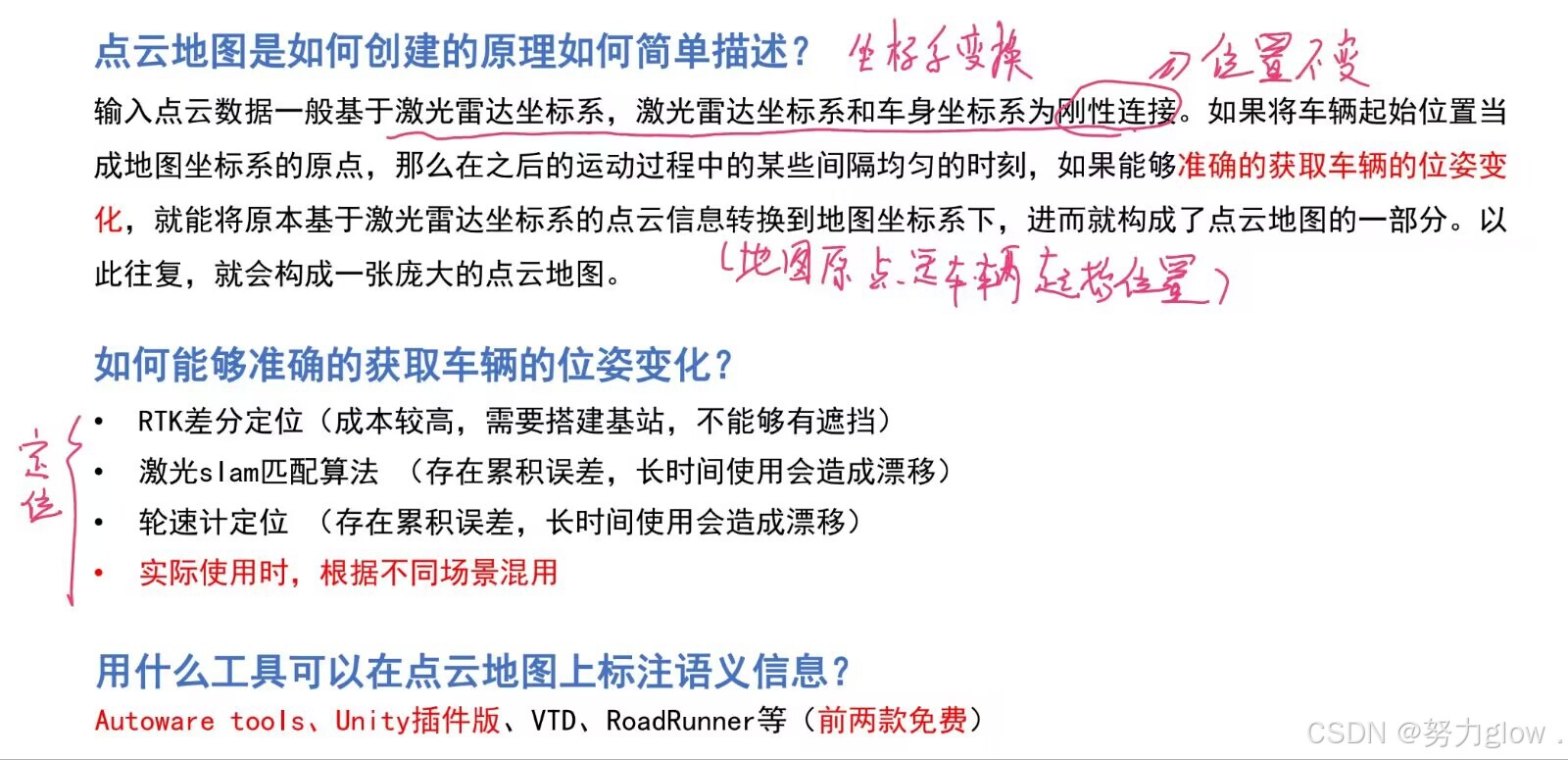

什么是高精地图(HD map)?

(1)数据精度为分米甚至**厘米级**,包含空间信息、语义信息和时间信息的数据体。

(2)空间信息—点云地图

(3)语义信息—车道线、停止线、转向路标、速度标识、人行横道、路牙

(4)时间信息—红绿灯信息,早晚可变车道信息

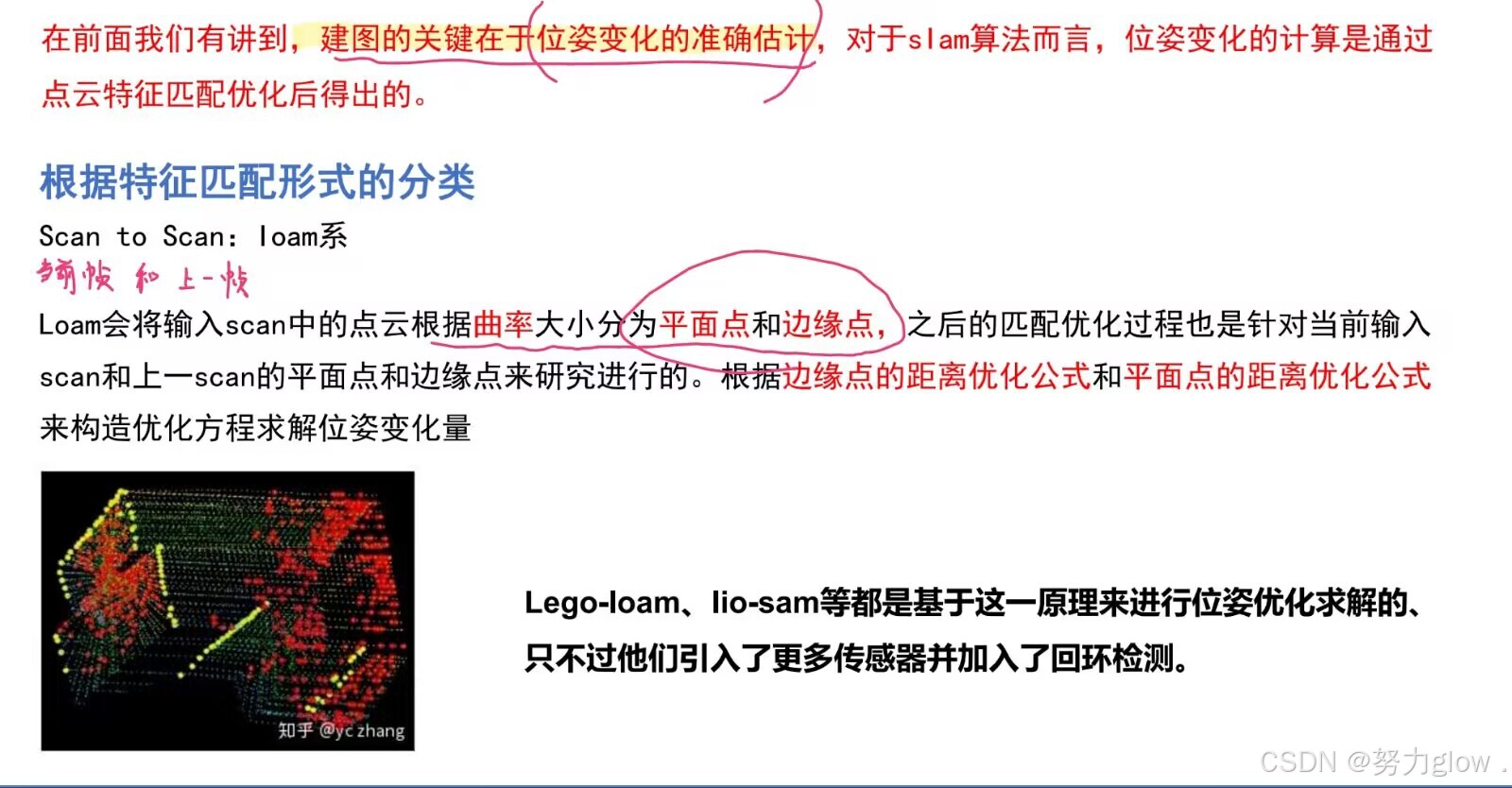

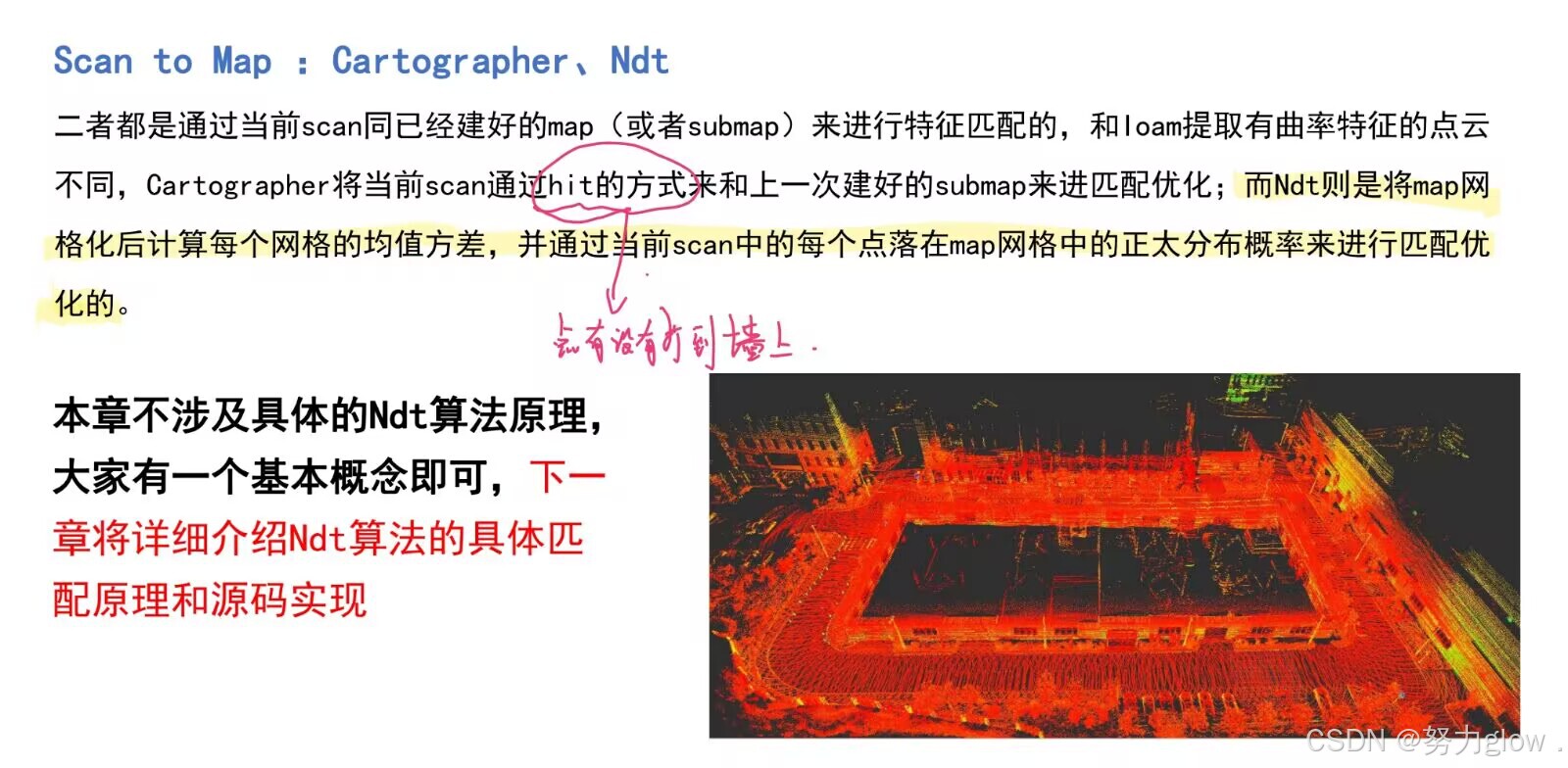

将激光slam算法分类

以cartographer为例

1.submaps

Cartographer 能产生一个精度为5cm的2D栅格地图。

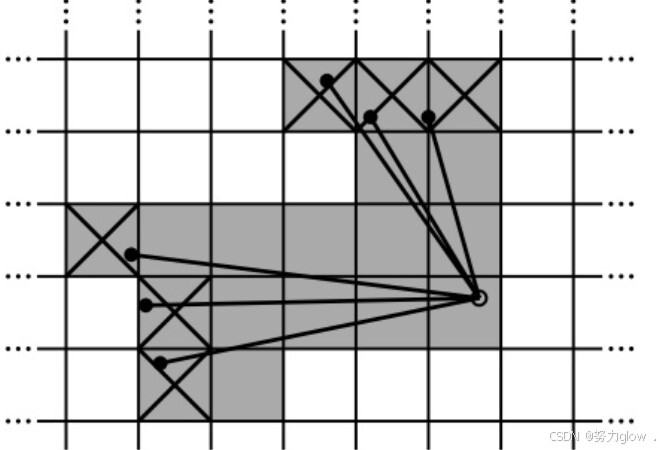

Cartographer在前端匹配环节区别与其它建图算法的主要是使用了Submap这一概念,每当或得一次laser scan的数据后,便与当前最近建立的Submap去进行匹配,使这一帧的laser scan数据插入到Submap上最优的位置。(这里用的是高斯牛顿解最小二乘问题)在不断插入新数据帧的同时该Submap也得到了更新。一定量的数据组合成为一个Submap,当不再有新的scan插入到Submap时,就认为这个submap已经创建完成,接着会去创建下一个submap,具体过程如下图。(这里没有理解什么情况下新的scan不会再匹配到该Submap上)

因此这里scan matching的本质是当前laser scan与多个邻近的laser scan之间进行的。

通过scan matching得到的位姿估计在短时间内是可靠的,但是长时间会有累积误差。因此Cartographer应用了回环检测对累积误差进行优化。==所有创建完成的submap以及当前的laser scan都会用作回环检测的scan matching。如果当前的scan和所有已创建完成的submap在距离上足够近,则进行回环检测。==这里为了减少计算量,提高实时回环检测的效率,Cartographer应用了branch and bound(分支定界)优化方法进行优化搜索。如果得到一个足够好的匹配,则会将该匹配的闭环约束加入到所有Submap的姿态优化上。

连续的scan用来构造submap,这里submap以概率格网的形式表现。每一个scan,在插入格网(submap)时,每一个grid有hits和miss两种情况。离scan终点最近的grid为hits,在scan原点和终点之间相交的grid为miss。之前未观察的grid分配一个概率,已观察的grid进行概率更新。

一些连续的scans组成submap。采用概率栅格地图的形式表示这些submaps,以给定的分辨率(例如5cm)将离散栅格地图点映射到值,这些值可以记作栅格地图点被占用的概率。对于每个栅格地图点,都定义一个相应的pixel,这个piexl是针对于分辨率来说的,对于5cm的分辨率来说,一个pixel相当于一个5*5的方格,那么对应于scan中应该有很多个point,即论文中定义的:pixel包含了所有靠近这个栅格地图点的points。

注:栅格地图点就是上图中的叉号处,像素定义为叉号周围所有的点的集合,即小方框。

submaps是一系列地图,scans与其进行匹配的同时插入其中。

除了在初始化过程中只有一个submap存在外,始终存在两个Submap供scans进行插入:旧的submap进行匹配时候,新的submap进行初始化用来下一步的匹配。

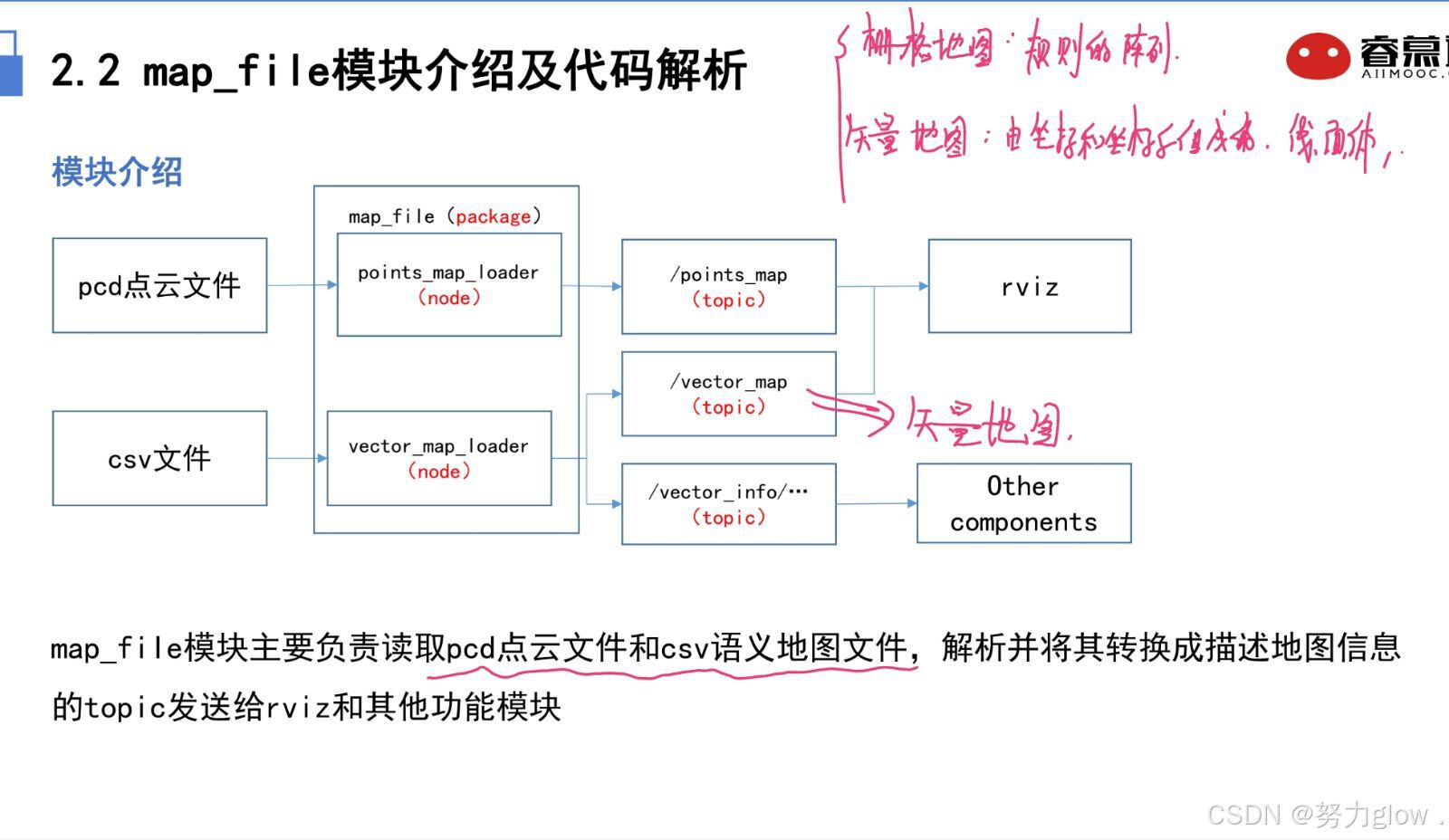

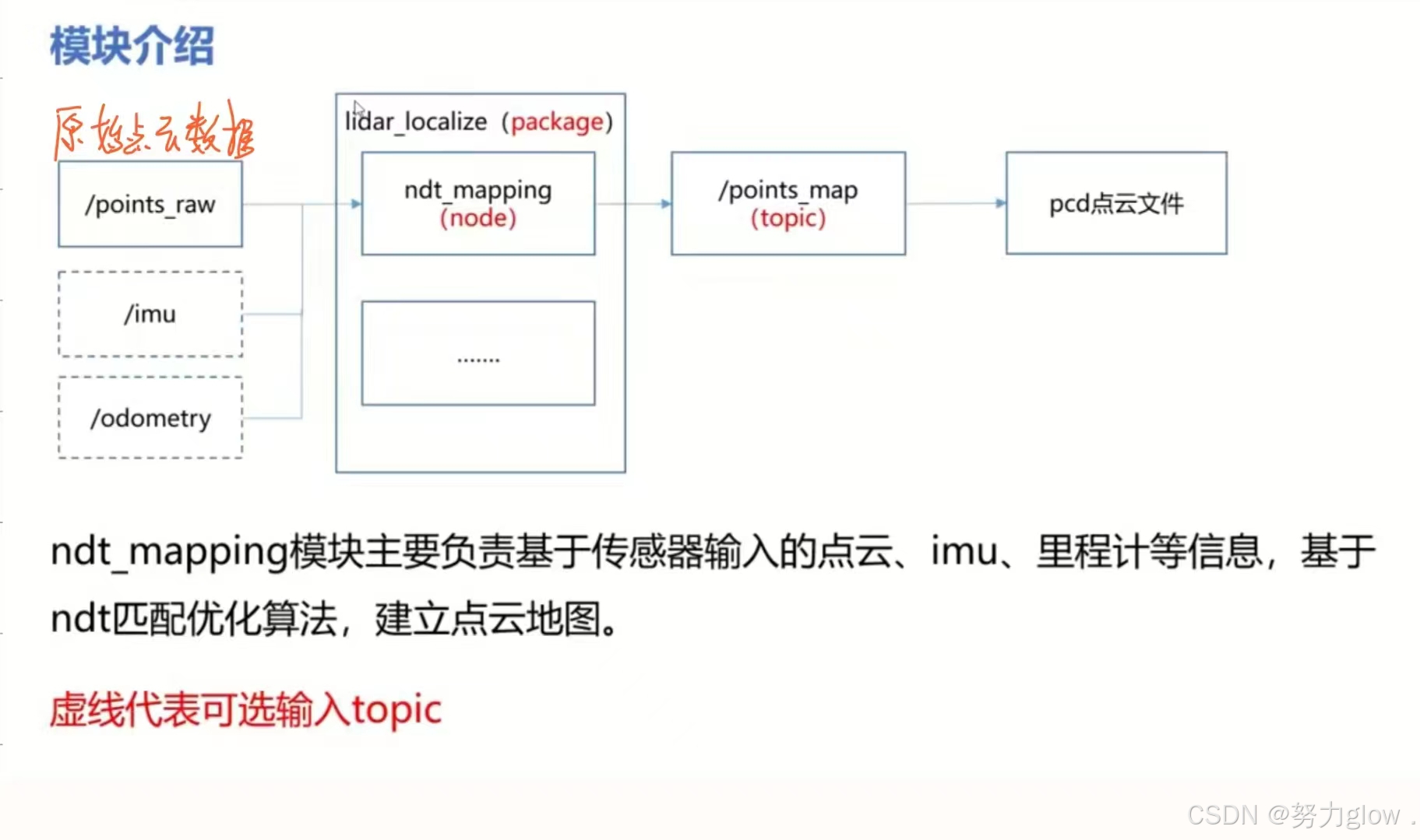

2. map_file模块

后面auto ware tools也是绘制vector map。

具体的map_file和ndt_mapping模块需要读代码,把握关键函数。

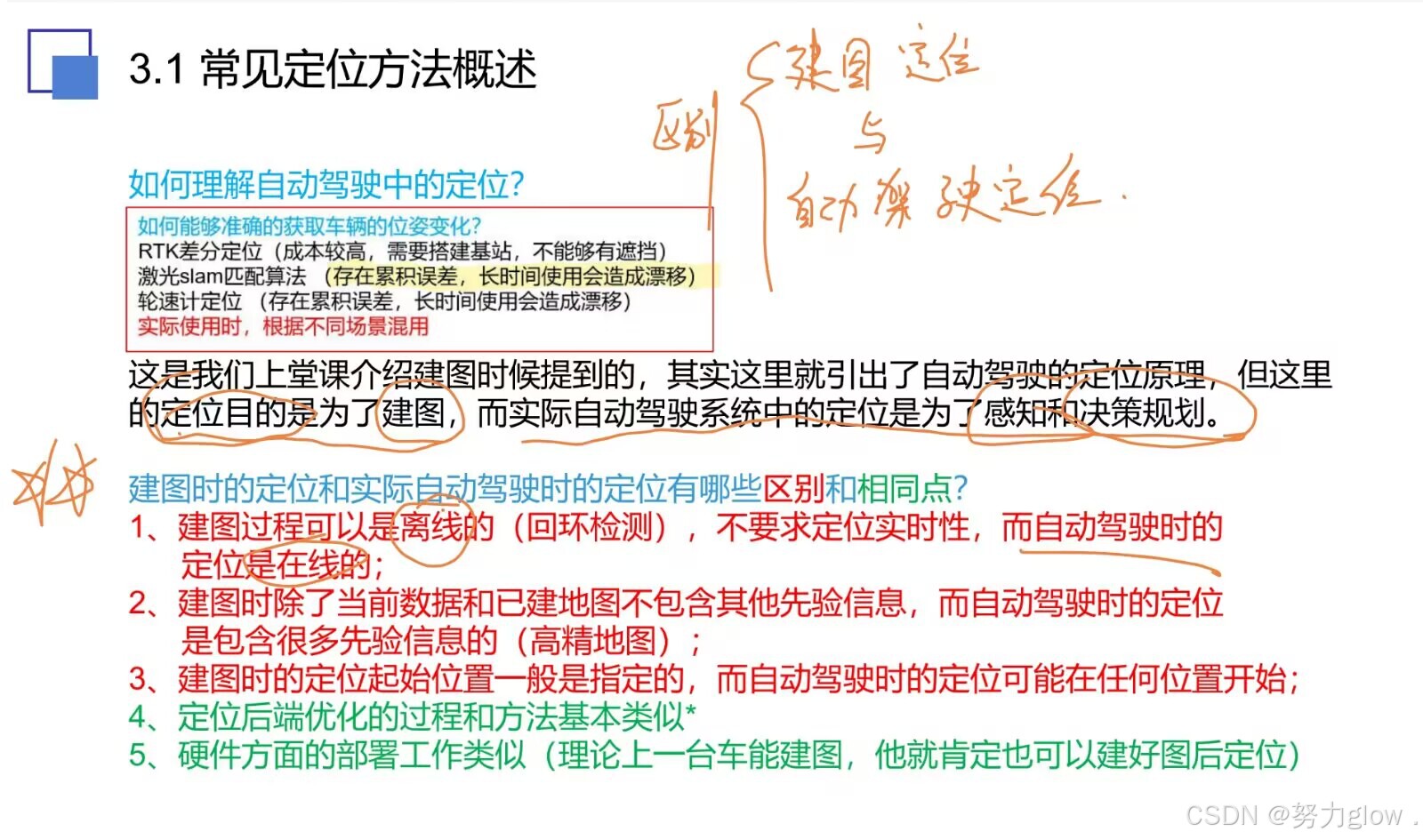

第三章 定位模块

1. 建图定位与自动驾驶定位

剩下的都是代码研读

第四章 感知模块

作业 欧式聚类

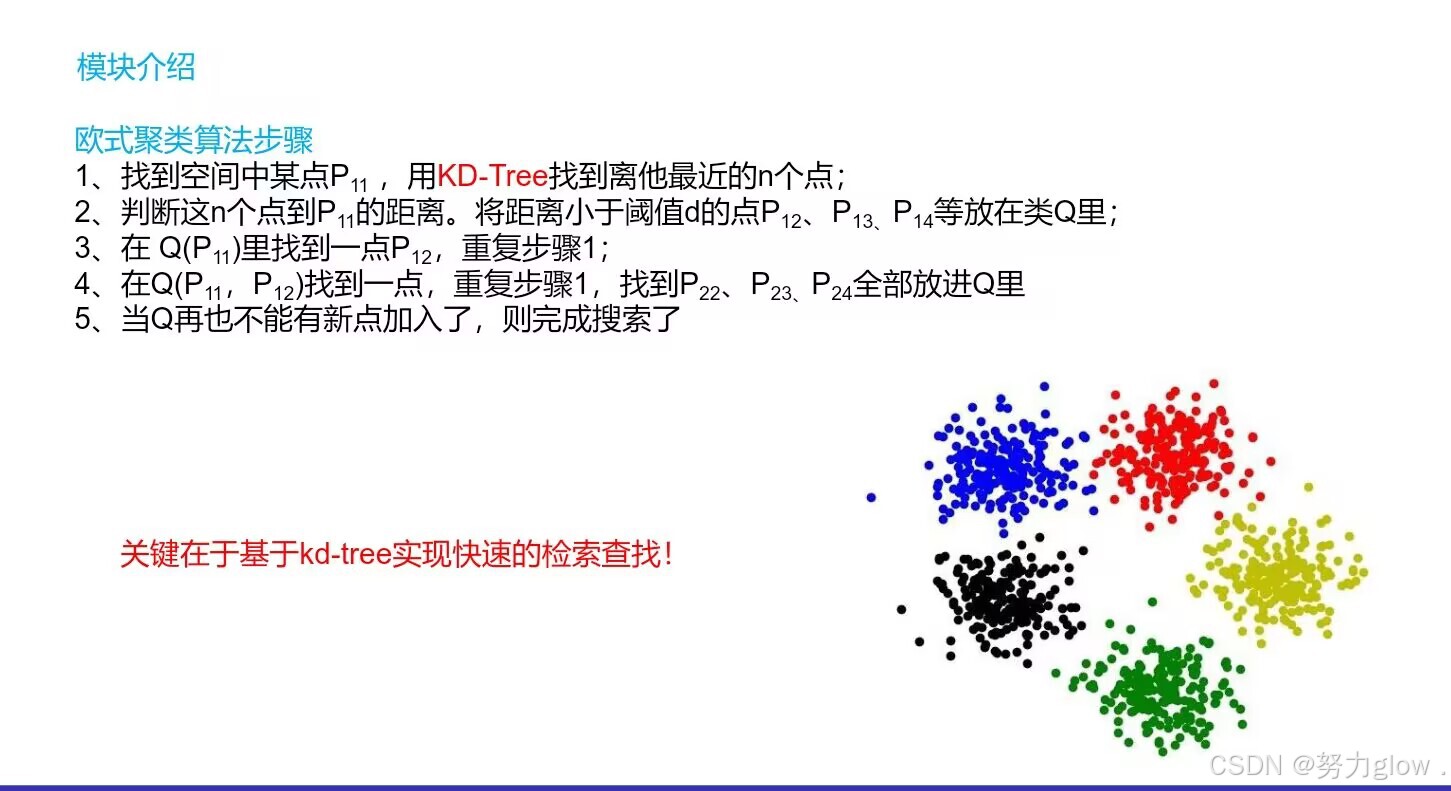

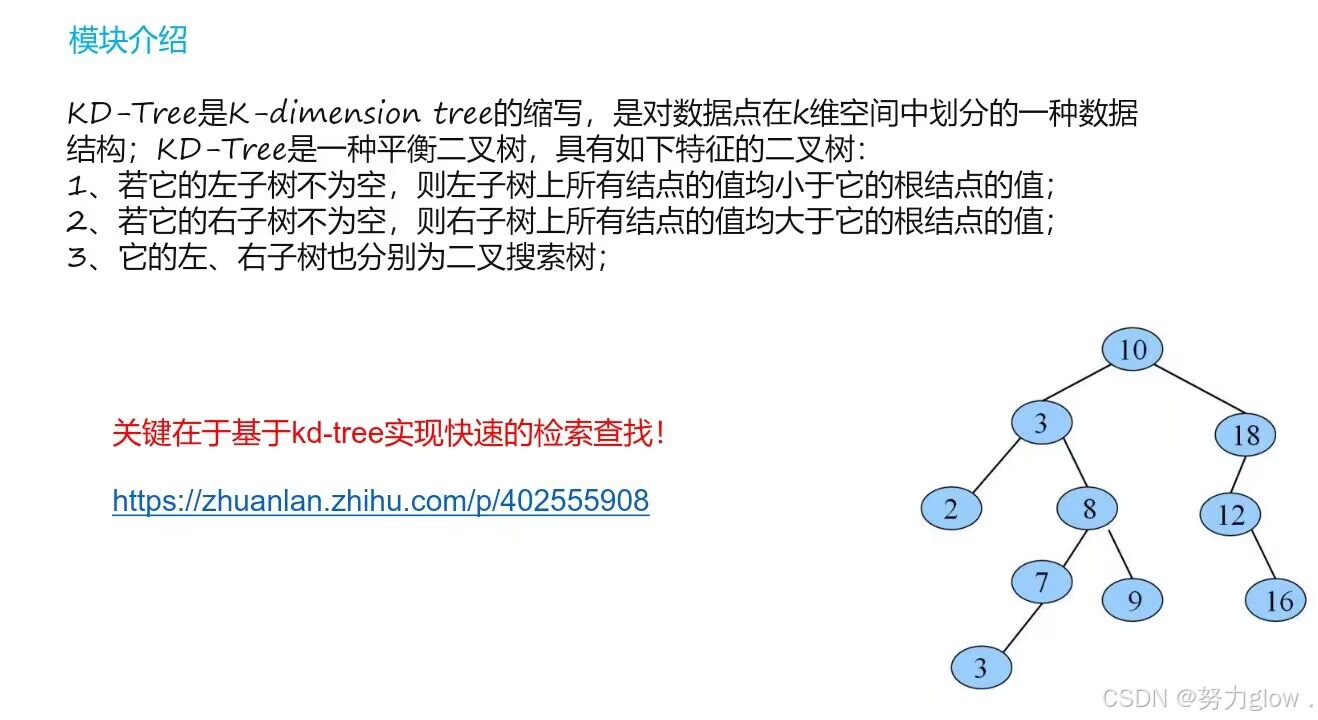

假设我们使用Kd-tree结构去查找最近邻,算法步骤如下:

- 为输入的点云数据集P创建Kd-tree的表示

- 设置一个空的聚类列表C,以及一个需要被检查的点云队列Q

- 然后对P中的每一个点p_i进行如下步骤:

- 将p_i添加到当前队列Q

- 对每个Q中的 p_i 进行如下步骤:

- 设置半径 r<d_th,在此范围内搜索 p_i 的 最近邻 点云集 P_i_k.

- 对于每个最近邻点云集 P_i_k ,检查其中的点是否被处理过,如果没有被处理过,就添加到Q中。

- 当Q中列表中的所有点都被处理完成了,把Q添加到聚类列表C中,并将Q清空。

- 当数据集P中的所有点都被处理过了,并且成了聚类列表中的一部分,算法完成。

算法流程简述:

- 找到空间中某点p,有kdTree找到离他最近的n个点,判断这n个点到p的距离。将距离小于阈值r的点 p1,p2,p3…放在类Q里

- 在 Q里找到一点p1,重复1,找到p22,p23,p24…全部放进Q里

- 当 Q 再也不能有新点加入了,则完成搜索了

下面是代码

#include <pcl/ModelCoefficients.h>

#include <pcl/point_types.h>

#include <pcl/io/pcd_io.h>

#include <pcl/filters/extract_indices.h>

#include <pcl/filters/voxel_grid.h>

#include <pcl/features/normal_3d.h>

#include <pcl/kdtree/kdtree.h>

#include <pcl/sample_consensus/method_types.h>

#include <pcl/sample_consensus/model_types.h>

#include <pcl/segmentation/sac_segmentation.h>

#include <pcl/segmentation/extract_clusters.h>

#include<pcl/visualization/pcl_visualizer.h>

bool isPushSpace = false;

//键盘事件

void keyboard_event_occurred(const pcl::visualization::KeyboardEvent& event, void * nothing)

{

if (event.getKeySym() == "space" && event.keyDown())

{

isPushSpace = true;

}

}

int main(int argc, char** argv)

{

// 从PCD文件中读取点云数据

pcl::PCDReader reader;

pcl::PointCloud<pcl::PointXYZ>::Ptr cloud(new pcl::PointCloud<pcl::PointXYZ>), cloud_f(new pcl::PointCloud<pcl::PointXYZ>);

reader.read("home/suncn/test_ws/cluster_extraction/src/table_scene_lms400.pcd", *cloud);

std::cout << "PointCloud before filtering has: " << cloud->points.size() << " data points." << std::endl; //*

pcl::visualization::PCLVisualizer viewer("Cluster Extraction");

// 注册键盘事件

viewer.registerKeyboardCallback(&keyboard_event_occurred, (void*)NULL);

int v1(1);// 直接赋值

int v2(2);

viewer.createViewPort(0, 0, 0.5, 1, v1);

viewer.createViewPort(0.5, 0, 1, 1, v2);

//创建滤波对象: 使用下采样,叶子的大小为 1cm

pcl::VoxelGrid<pcl::PointXYZ> vg;

pcl::PointCloud<pcl::PointXYZ>::Ptr cloud_filtered(new pcl::PointCloud<pcl::PointXYZ>);

vg.setInputCloud(cloud);

vg.setLeafSize(0.01f, 0.01f, 0.01f);

vg.filter(*cloud_filtered);

std::cout << "PointCloud after filtering has: " << cloud_filtered->points.size() << " data points." << std::endl; //*

viewer.addPointCloud(cloud, "cloud1", v1);

viewer.addPointCloud(cloud_filtered, "cloud2", v2);

//渲染10秒再继续

viewer.spinOnce(10000);

// 创建平面分割对象

pcl::SACSegmentation<pcl::PointXYZ> seg;

pcl::PointIndices::Ptr inliers(new pcl::PointIndices);

pcl::ModelCoefficients::Ptr coefficients(new pcl::ModelCoefficients);

pcl::PointCloud<pcl::PointXYZ>::Ptr cloud_plane(new pcl::PointCloud<pcl::PointXYZ>());

pcl::PCDWriter writer;

seg.setOptimizeCoefficients(true);

seg.setModelType(pcl::SACMODEL_PLANE);

seg.setMethodType(pcl::SAC_RANSAC);

seg.setMaxIterations(100);

seg.setDistanceThreshold(0.02);

// 把点云中所有的平面全部过滤掉,重复过滤,直到点云数量小于原来的0.3倍

int i = 0, nr_points = (int)cloud_filtered->points.size();

while (cloud_filtered->points.size() > 0.3 * nr_points)

{

//从剩余的点云中分割最大的平面分量

seg.setInputCloud(cloud_filtered);

seg.segment(*inliers, *coefficients);

if (inliers->indices.size() == 0)

{

std::cout << "Could not estimate a planar model for the given dataset." << std::endl;

break;

}

// 从输入点云中提取平面量

pcl::ExtractIndices<pcl::PointXYZ> extract;

extract.setInputCloud(cloud_filtered);

extract.setIndices(inliers);

extract.setNegative(false);

extract.filter(*cloud_plane);

std::cout << "PointCloud representing the planar component: " << cloud_plane->points.size() << " data points." << std::endl;

// 移除平面量,提取剩余的

extract.setNegative(true);

extract.filter(*cloud_f);

//更新显示点云

viewer.updatePointCloud(cloud_filtered, "cloud1");

viewer.updatePointCloud(cloud_f, "cloud2");

//渲染3秒再继续

viewer.spinOnce(3000);

cloud_filtered = cloud_f;

}

viewer.removePointCloud("cloud2", v2);

// 创建KdTreee对象作为搜索方法(使用Kd-tree作为抽取算法的搜索方法

)

pcl::search::KdTree<pcl::PointXYZ>::Ptr tree(new pcl::search::KdTree<pcl::PointXYZ>);

tree->setInputCloud(cloud_filtered);

/*创建EuclideanClusterExtraction对象,并设置参数

点云类型为:PointXYZ

setClusterTolerance(0.02):设置聚类容忍度为2cm,如果设置的值过小,可能会将一个对象误判为多 个聚类,反之,如果过大,则可能会将多个多个对象误判为一个聚类。所以需根据实际情况进行设置。

setMinClusterSize()、setMaxClusterSize() :设置最大聚类和最小聚类的点云数量大小

结果保存在cluster_indices,一个vector类型的数组,每个元素代表一个聚类的所有点云下标。

*/

std::vector<pcl::PointIndices> cluster_indices;

pcl::EuclideanClusterExtraction<pcl::PointXYZ> ec;

ec.setClusterTolerance(0.02); // 2cm

ec.setMinClusterSize(100);

ec.setMaxClusterSize(25000);

ec.setSearchMethod(tree);

ec.setInputCloud(cloud_filtered);

//聚类抽取结果保存在一个数组中,数组中每个元素代表抽取的一个组件点云的下标

ec.extract(cluster_indices);

//遍历抽取结果,将其显示并保存

int j = 0;

for (std::vector<pcl::PointIndices>::const_iterator it = cluster_indices.begin(); it != cluster_indices.end(); ++it)

{

//创建临时保存点云族的点云

pcl::PointCloud<pcl::PointXYZ>::Ptr cloud_cluster(new pcl::PointCloud<pcl::PointXYZ>);

//通过下标,逐个填充

for (std::vector<int>::const_iterator pit = it->indices.begin(); pit != it->indices.end(); pit++)

cloud_cluster->points.push_back(cloud_filtered->points[*pit]); //*

//设置点云属性

cloud_cluster->width = cloud_cluster->points.size();

cloud_cluster->height = 1;

cloud_cluster->is_dense = true;

std::cout << "当前聚类 "<<j<<" 包含的点云数量: " << cloud_cluster->points.size() << " data points." << std::endl;

std::stringstream ss;

ss << "cloud_cluster_" << j << ".pcd";

writer.write<pcl::PointXYZ>(ss.str(), *cloud_cluster, false); //*

j++;

//显示,随机设置不同颜色,以区分不同的聚类

pcl::visualization::PointCloudColorHandlerCustom<pcl::PointXYZ> cluster_color(cloud_cluster, rand()*100 + j * 80, rand() * 50 + j * 90, rand() * 200 + j * 100);

viewer.addPointCloud(cloud_cluster,cluster_color, ss.str(), v2);

viewer.spinOnce(5000);

}

while (!viewer.wasStopped())

{

viewer.spinOnce();

}

return (0);

}

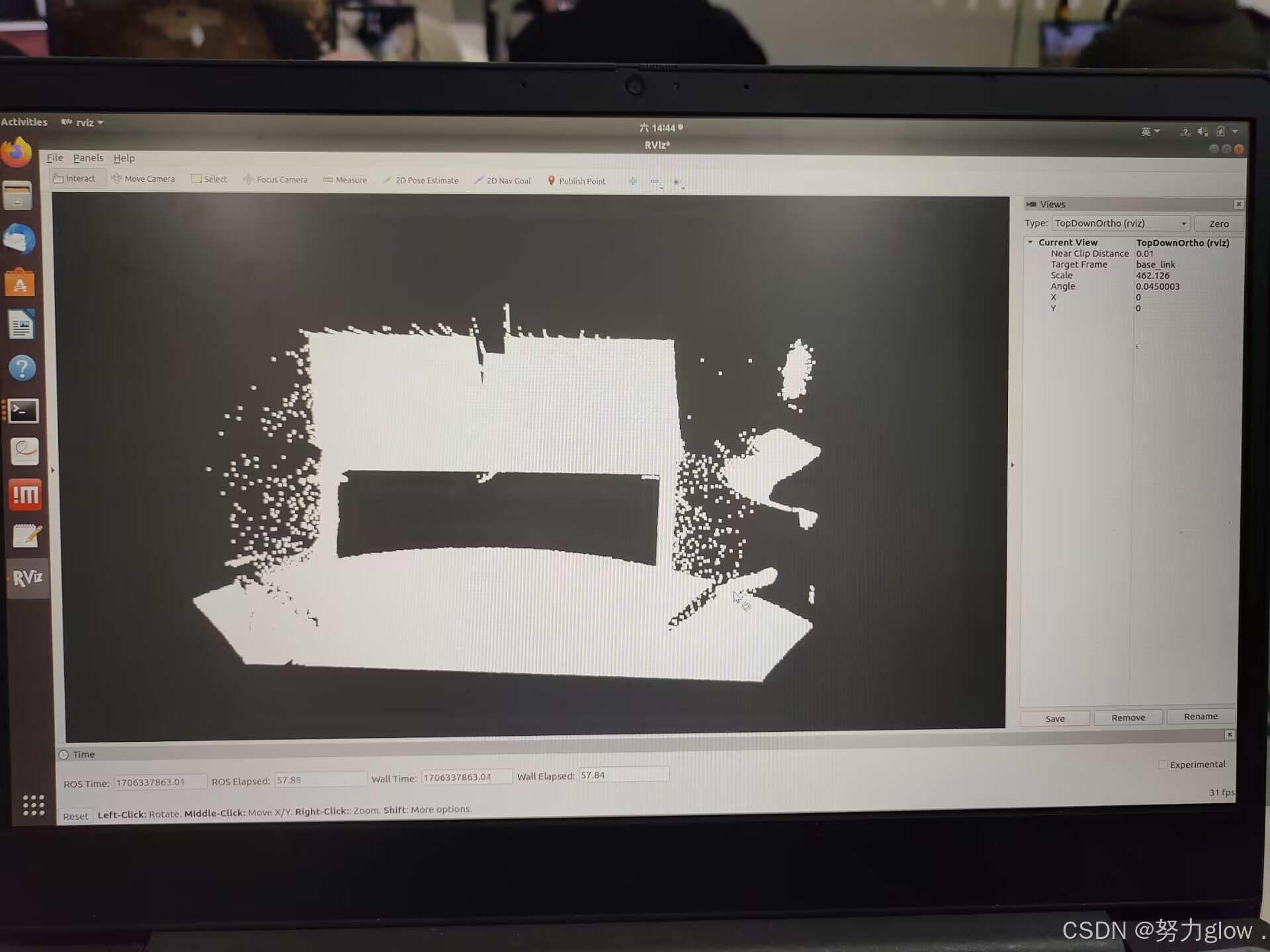

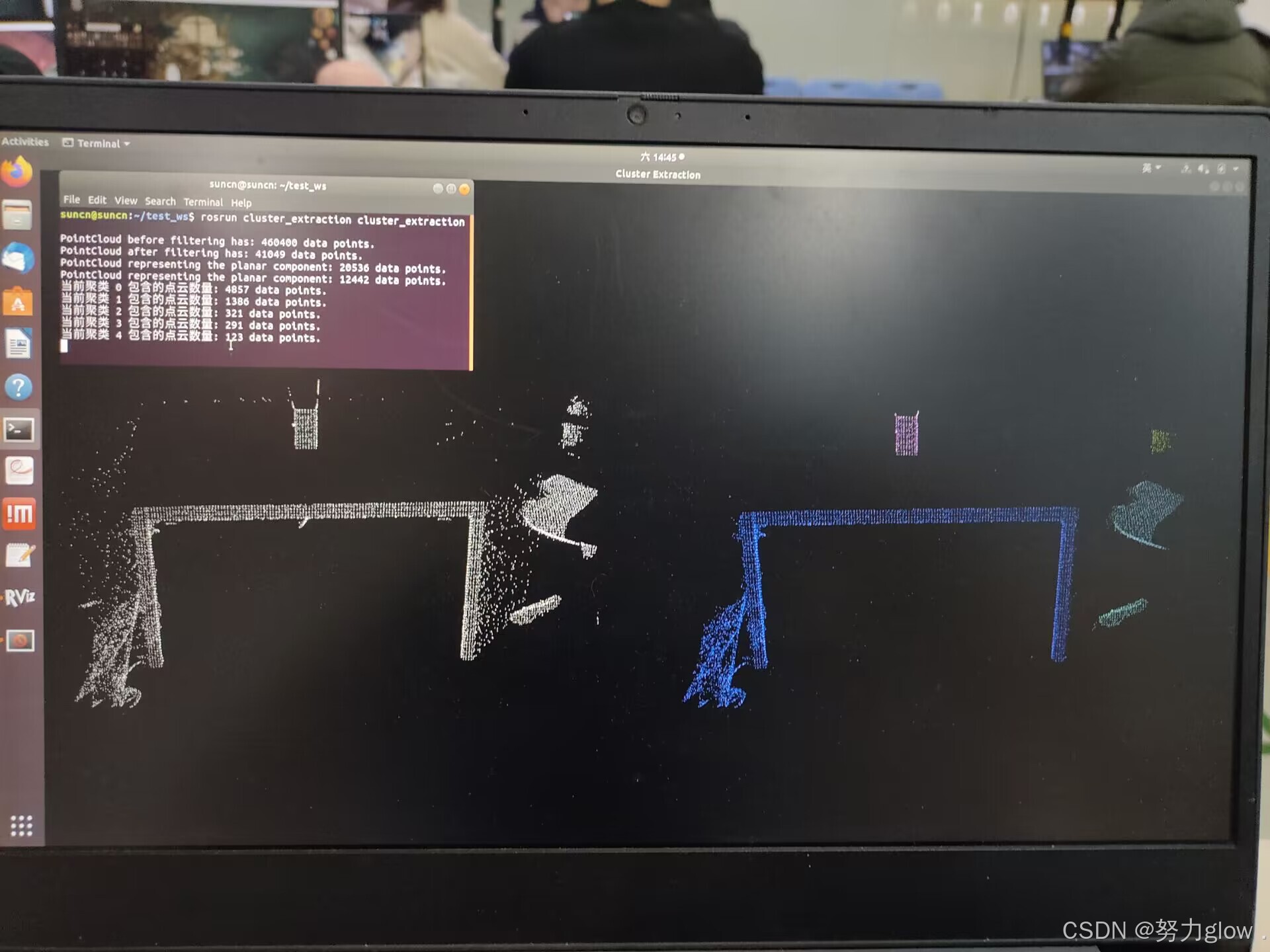

效果演示

平面分割并去除

将点云中所有平面都分割出来,并剔除:

autoware定位——NDT 正态分布变换

(Normal Distributions Transform)

引言:无人车的定位问题,实际上就是要找出无人车当前在地图的那个位置(并且要求精度在厘米级别)。其中有一类方法被称为即时定位与地图构建(simultaneous localization and mapping,SLAM),它能够实现导航定位而不需要地图(同时建图和定位),但是该方法实现代价高,难度大,难以应用到自动驾驶领域。自动驾驶车辆行驶速度快,距离远,环境复杂,使得SLAM的精度下降,同时远距离的行驶将导致实时构建的地图偏移过大。所以,在已经拥有高精度地图的前提下去做无人车的定位,是更加现实,简单的。NDT就是一类利用已有的高精度地图和激光雷达实时测量数据实现高精度定位的技术。(先验信息)

因此,将问题分为独立的两部分:建图Mapping和定位Matching。NDT是一种点云配准算法,可同时用于点云的建图和定位。

NDT配准的原理(scan to map)

通过不断的比对实时扫描到的点云和已经建好的全局点云地图,我们就可以持续获得我们当前的位置。ICP(迭代最近点)等配准算法通过对所有的点或者提取的特征点进行匹配配准以确定当前的位置,但是这样就有一个问题:我们所处的环境是在不断变化的,比如树木的稀疏程度,或者环境中车辆及行人的移动,乃至固有的测量误差,这些都会导致我们实时扫描到的点云与已建立的点云地图有些许的差别,从而导致较大匹配误差。

而NDT可以在很大程序上消除这种不确定性。NDT没有计算两个点云中点与点之间的差距,而是先将参考点云(即高精度地图)转换为多维变量的正态分布,如果变换参数能使得两幅激光数据匹配的很好,那么变换点在参考系中的概率密度将会很大。因此,可以考虑用优化的方法,比如牛顿法,求出使得概率密度之和最大的变换参数,此时两幅激光点云数据将匹配的最好。

可以这样来做一个通俗的理解:NDT把我们所处的三维世界按照一定长度的立方体(比如30cm30cm30cm)进行了划分,类似于一个魔方,每个立方体内并不是存储一个或一些确切的点,而且存储这个立方体被占据的概率密度。当接收到需要匹配的点云时,也按照这样的划分方式进行划分,然后进行配准。

因此,NDT具有以下的特征:

- 支持更大的地图,更稠密的点云 --因为最终还是要划分成voxel的形式

- 相比于ICP等基于点的匹配算法,速度更快

- 更加容忍环境的细微变化

在此不做数学理论推导。

ndt_mapping模块

//2.这个函数是计算在上一帧ndt精确定位的位姿基础上加上一个时间差内imu的精准变化,作为下一帧变化的初始值的过程

static void imu_calc(ros::Time current_time)

{

static ros::Time previous_time = current_time;//当前帧的时间戳

double diff_time = (current_time - previous_time).toSec();//时间变化量

double diff_imu_roll = imu.angular_velocity.x * diff_time;

double diff_imu_pitch = imu.angular_velocity.y * diff_time;

double diff_imu_yaw = imu.angular_velocity.z * diff_time;

current_pose_imu.roll += diff_imu_roll;

current_pose_imu.pitch += diff_imu_pitch;

current_pose_imu.yaw += diff_imu_yaw;

//note-tianyu 计算imu在的线加速度在地图坐标系下xyz方向的线加速度分量 l_acc_map = RzRyRx * l_acc_imu

//计算公式参考roll、pitch和yaw的旋转矩阵

double accX1 = imu.linear_acceleration.x;

double accY1 = std::cos(current_pose_imu.roll) * imu.linear_acceleration.y -

std::sin(current_pose_imu.roll) * imu.linear_acceleration.z;

double accZ1 = std::sin(current_pose_imu.roll) * imu.linear_acceleration.y +

std::cos(current_pose_imu.roll) * imu.linear_acceleration.z;

double accX2 = std::sin(current_pose_imu.pitch) * accZ1 + std::cos(current_pose_imu.pitch) * accX1;

double accY2 = accY1;

double accZ2 = std::cos(current_pose_imu.pitch) * accZ1 - std::sin(current_pose_imu.pitch) * accX1;

double accX = std::cos(current_pose_imu.yaw) * accX2 - std::sin(current_pose_imu.yaw) * accY2;

double accY = std::sin(current_pose_imu.yaw) * accX2 + std::cos(current_pose_imu.yaw) * accY2;

double accZ = accZ2;

//note-tianyu 高中物理公式s = v0*t + (a*t^2)/2

offset_imu_x += current_velocity_imu_x * diff_time + accX * diff_time * diff_time / 2.0;

offset_imu_y += current_velocity_imu_y * diff_time + accY * diff_time * diff_time / 2.0;

offset_imu_z += current_velocity_imu_z * diff_time + accZ * diff_time * diff_time / 2.0;

current_velocity_imu_x += accX * diff_time;

current_velocity_imu_y += accY * diff_time;

current_velocity_imu_z += accZ * diff_time;

offset_imu_roll += diff_imu_roll;

offset_imu_pitch += diff_imu_pitch;

offset_imu_yaw += diff_imu_yaw;

guess_pose_imu.x = previous_pose.x + offset_imu_x;

guess_pose_imu.y = previous_pose.y + offset_imu_y;

guess_pose_imu.z = previous_pose.z + offset_imu_z;

guess_pose_imu.roll = previous_pose.roll + offset_imu_roll;

guess_pose_imu.pitch = previous_pose.pitch + offset_imu_pitch;

guess_pose_imu.yaw = previous_pose.yaw + offset_imu_yaw;

previous_time = current_time;

}

//3.

static void imu_callback(const sensor_msgs::Imu::Ptr& input) //这里输入的是四元数

{

// std::cout << __func__ << std::endl;

//note-tianyu imu是否需要调整方向,有时候可能涉及到imu正装倒装的问题

if (_imu_upside_down)

imuUpsideDown(input);

const ros::Time current_time = input->header.stamp;

static ros::Time previous_time = current_time;

const double diff_time = (current_time - previous_time).toSec();

//note-tianyu 将四元数转成欧拉角 (ros里输入的是四元数)

double imu_roll, imu_pitch, imu_yaw;

tf::Quaternion imu_orientation;

tf::quaternionMsgToTF(input->orientation, imu_orientation);

tf::Matrix3x3(imu_orientation).getRPY(imu_roll, imu_pitch, imu_yaw);

//note-tianyu 限制欧拉角大小不超过M_PI

imu_roll = wrapToPmPi(imu_roll);

imu_pitch = wrapToPmPi(imu_pitch);

imu_yaw = wrapToPmPi(imu_yaw);

//计算欧拉角的便宜

static double previous_imu_roll = imu_roll, previous_imu_pitch = imu_pitch, previous_imu_yaw = imu_yaw;

const double diff_imu_roll = calcDiffForRadian(imu_roll, previous_imu_roll);

const double diff_imu_pitch = calcDiffForRadian(imu_pitch, previous_imu_pitch);

const double diff_imu_yaw = calcDiffForRadian(imu_yaw, previous_imu_yaw);

imu.header = input->header;

imu.linear_acceleration.x = input->linear_acceleration.x;

// imu.linear_acceleration.y = input->linear_acceleration.y;

// imu.linear_acceleration.z = input->linear_acceleration.z;

//车往前开,不存在左右晃,所以只有向前的线速度,防止噪声过大造成干扰

imu.linear_acceleration.y = 0;

imu.linear_acceleration.z = 0;

//note-sixu 根据欧拉角变换来更新角速度, 不相信imu输入的角速度值

if (diff_time != 0)

{

imu.angular_velocity.x = diff_imu_roll / diff_time;

imu.angular_velocity.y = diff_imu_pitch / diff_time;

imu.angular_velocity.z = diff_imu_yaw / diff_time;

}

else

{

imu.angular_velocity.x = 0;

imu.angular_velocity.y = 0;

imu.angular_velocity.z = 0;

}

imu_calc(input->header.stamp);

previous_time = current_time;

previous_imu_roll = imu_roll;

previous_imu_pitch = imu_pitch;

previous_imu_yaw = imu_yaw;

}

//1. note-tianyu-main

static void points_callback(const sensor_msgs::PointCloud2::ConstPtr& input)

{

double r;

pcl::PointXYZI p;

pcl::PointCloud<pcl::PointXYZI> tmp, scan;

pcl::PointCloud<pcl::PointXYZI>::Ptr filtered_scan_ptr(new pcl::PointCloud<pcl::PointXYZI>());

pcl::PointCloud<pcl::PointXYZI>::Ptr transformed_scan_ptr(new pcl::PointCloud<pcl::PointXYZI>());

tf::Quaternion q;

Eigen::Matrix4f t_localizer(Eigen::Matrix4f::Identity());

Eigen::Matrix4f t_base_link(Eigen::Matrix4f::Identity());

static tf::TransformBroadcaster br;

tf::Transform transform;

current_scan_time = input->header.stamp;

pcl::fromROSMsg(*input, tmp);

//note-tianyu 点云过滤

for (pcl::PointCloud<pcl::PointXYZI>::const_iterator item = tmp.begin(); item != tmp.end(); item++)

{

p.x = (double)item->x;

p.y = (double)item->y;

p.z = (double)item->z;

p.intensity = (double)item->intensity;

r = sqrt(pow(p.x, 2.0) + pow(p.y, 2.0));

if (min_scan_range < r && r < max_scan_range)

{

scan.push_back(p);

}

}

pcl::PointCloud<pcl::PointXYZI>::Ptr scan_ptr(new pcl::PointCloud<pcl::PointXYZI>(scan));

// Add initial point cloud to velodyne_map

// note-tianyu 第一帧scan输入,会将其当成直接插入到map中,作为组成map的第一帧子图

if (initial_scan_loaded == 0)

{

//tf_btol 是坐标系转换 baselink to lidar

pcl::transformPointCloud(*scan_ptr, *transformed_scan_ptr, tf_btol);

map += *transformed_scan_ptr;

initial_scan_loaded = 1;

}

// Apply voxelgrid filter 滤波

// pcl库中的VoxelGrid对点云进行体素化,主要就是创建一个三维体素栅格(就是每个比较小的立方体组成的体素栅格)。在每个体素(三维立方体)里面,求取该立方体内的所有点云重心点来代表这个立方体的表示,以此达到下采样的目的。

pcl::VoxelGrid<pcl::PointXYZI> voxel_grid_filter;

voxel_grid_filter.setLeafSize(voxel_leaf_size, voxel_leaf_size, voxel_leaf_size);//室外可以稍微大些,如0.5-2.0;室内需要小一些

voxel_grid_filter.setInputCloud(scan_ptr);

voxel_grid_filter.filter(*filtered_scan_ptr);// filtered_scan_ptr接收降采样后的点云

//note-tianyu 根据对ndt优化的相关参数进行配置

pcl::PointCloud<pcl::PointXYZI>::Ptr map_ptr(new pcl::PointCloud<pcl::PointXYZI>(map));

//四种方式

if (_method_type == MethodType::PCL_GENERIC) //调用pcl库

{

//设置ndt

ndt.setTransformationEpsilon(trans_eps); // 两次变换之间允许的最大值,用于判断是否收敛,作为迭代计算完成的阈值; =0.01

ndt.setMaximumIterations(max_iter); // 最大迭代次数,超过则停止计算; =30

ndt.setStepSize(step_size); // 牛顿法优化的最大步长; =0.1

ndt.setResolution(ndt_res); // voxel的边长大小,过小造成内存占用过高,过大会导致误差偏大; =1.0

ndt.setInputSource(filtered_scan_ptr); // 输入source点云

/*注:ndt.setInputTarget()在更新global_map的时候进行,即直接将global_map输入到ndt_target中*/

}

else if (_method_type == MethodType::PCL_ANH) //cpu ndt

{

anh_ndt.setTransformationEpsilon(trans_eps);

anh_ndt.setStepSize(step_size);

anh_ndt.setResolution(ndt_res);

anh_ndt.setMaximumIterations(max_iter);

anh_ndt.setInputSource(filtered_scan_ptr);//找这一帧输入点云在地图中的匹配

}

#ifdef CUDA_FOUND

else if (_method_type == MethodType::PCL_ANH_GPU) //gpu ndt

{

anh_gpu_ndt.setTransformationEpsilon(trans_eps);

anh_gpu_ndt.setStepSize(step_size);

anh_gpu_ndt.setResolution(ndt_res);

anh_gpu_ndt.setMaximumIterations(max_iter);

anh_gpu_ndt.setInputSource(filtered_scan_ptr);

}

#endif

#ifdef USE_PCL_OPENMP

else if (_method_type == MethodType::PCL_OPENMP) //pcl_OPENMP

{

omp_ndt.setTransformationEpsilon(trans_eps);

omp_ndt.setStepSize(step_size);

omp_ndt.setResolution(ndt_res);

omp_ndt.setMaximumIterations(max_iter);

omp_ndt.setInputSource(filtered_scan_ptr);

}

#endif

//判断是否为第一帧:如果为第一帧就把第一帧设置为target_map,保持完整性

static bool is_first_map = true;

if (is_first_map == true)

{

if (_method_type == MethodType::PCL_GENERIC)

ndt.setInputTarget(map_ptr);

else if (_method_type == MethodType::PCL_ANH)

anh_ndt.setInputTarget(map_ptr);

#ifdef CUDA_FOUND

else if (_method_type == MethodType::PCL_ANH_GPU)

anh_gpu_ndt.setInputTarget(map_ptr);

#endif

#ifdef USE_PCL_OPENMP

else if (_method_type == MethodType::PCL_OPENMP)

omp_ndt.setInputTarget(map_ptr);

#endif

is_first_map = false;

}

//第一帧则以下数值均为零

guess_pose.x = previous_pose.x + diff_x;

guess_pose.y = previous_pose.y + diff_y;

guess_pose.z = previous_pose.z + diff_z;

guess_pose.roll = previous_pose.roll;

guess_pose.pitch = previous_pose.pitch;

guess_pose.yaw = previous_pose.yaw + diff_yaw;

if (_use_imu == true && _use_odom == true)

imu_odom_calc(current_scan_time);

if (_use_imu == true && _use_odom == false)

imu_calc(current_scan_time);

if (_use_imu == false && _use_odom == true)

odom_calc(current_scan_time);

//note-tianyu 根据imu和odo等传感器的配置情况来定义guess_pose_for_ndt

pose guess_pose_for_ndt;

if (_use_imu == true && _use_odom == true)

guess_pose_for_ndt = guess_pose_imu_odom;//下一帧的先验信息

else if (_use_imu == true && _use_odom == false)

guess_pose_for_ndt = guess_pose_imu;//更新初始值

else if (_use_imu == false && _use_odom == true)

guess_pose_for_ndt = guess_pose_odom;

else

guess_pose_for_ndt = guess_pose;

Eigen::AngleAxisf init_rotation_x(guess_pose_for_ndt.roll, Eigen::Vector3f::UnitX());

Eigen::AngleAxisf init_rotation_y(guess_pose_for_ndt.pitch, Eigen::Vector3f::UnitY());

Eigen::AngleAxisf init_rotation_z(guess_pose_for_ndt.yaw, Eigen::Vector3f::UnitZ());

Eigen::Translation3f init_translation(guess_pose_for_ndt.x, guess_pose_for_ndt.y, guess_pose_for_ndt.z);

//note-tianyu ndt优化的初始值预测

Eigen::Matrix4f init_guess =

(init_translation * init_rotation_z * init_rotation_y * init_rotation_x).matrix() * tf_btol;

t3_end = ros::Time::now();

d3 = t3_end - t3_start;

t4_start = ros::Time::now();

pcl::PointCloud<pcl::PointXYZI>::Ptr output_cloud(new pcl::PointCloud<pcl::PointXYZI>);

if (_method_type == MethodType::PCL_GENERIC)

{

//进行ndt配准,计算变换矩阵

ndt.align(*output_cloud, init_guess);// 由此进入正式的ndt算法运算,output_cloud: 存储source经过配准后的点云(由于source_pointcloud被极大的降采样了,因此这个数据没什么用)

//init_guess: ndt计算的初始估计位置,在不使用gnss/imu/odom的情况下,以上一帧车辆位置的变换矩阵作为init_guess 注:ndt对位置不敏感,通常在3m以内都可以迭代计算过去,但是ndt对角度比较敏感,因此初始初始的角度不能与实际相差过大(最好在±45°之内)

fitness_score = ndt.getFitnessScore();

t_localizer = ndt.getFinalTransformation();

has_converged = ndt.hasConverged();

final_num_iteration = ndt.getFinalNumIteration();

transformation_probability = ndt.getTransformationProbability();

}

else if (_method_type == MethodType::PCL_ANH)

{

anh_ndt.align(init_guess);

fitness_score = anh_ndt.getFitnessScore();

t_localizer = anh_ndt.getFinalTransformation();

has_converged = anh_ndt.hasConverged();

final_num_iteration = anh_ndt.getFinalNumIteration();

}

#ifdef CUDA_FOUND

else if (_method_type == MethodType::PCL_ANH_GPU)

{

anh_gpu_ndt.align(init_guess);

fitness_score = anh_gpu_ndt.getFitnessScore();

t_localizer = anh_gpu_ndt.getFinalTransformation();

has_converged = anh_gpu_ndt.hasConverged();

final_num_iteration = anh_gpu_ndt.getFinalNumIteration();

}

#endif

#ifdef USE_PCL_OPENMP

else if (_method_type == MethodType::PCL_OPENMP)

{

omp_ndt.align(*output_cloud, init_guess);

fitness_score = omp_ndt.getFitnessScore();

t_localizer = omp_ndt.getFinalTransformation();

has_converged = omp_ndt.hasConverged();

final_num_iteration = omp_ndt.getFinalNumIteration();

}

#endif

t_base_link = t_localizer * tf_ltob;//note-tianyu 坐标转换

//note-tianyu 根据ndt匹配优化结果将scan的坐标由lidar坐标系换算到map坐标系下

pcl::transformPointCloud(*scan_ptr, *transformed_scan_ptr, t_localizer);//对原始点云进行变换(framd_id: /velodyne->/map)

tf::Matrix3x3 mat_l, mat_b;

//note-tianyu 提取出两个R

mat_l.setValue(static_cast<double>(t_localizer(0, 0)), static_cast<double>(t_localizer(0, 1)),

static_cast<double>(t_localizer(0, 2)), static_cast<double>(t_localizer(1, 0)),

static_cast<double>(t_localizer(1, 1)), static_cast<double>(t_localizer(1, 2)),

static_cast<double>(t_localizer(2, 0)), static_cast<double>(t_localizer(2, 1)),

static_cast<double>(t_localizer(2, 2)));

mat_b.setValue(static_cast<double>(t_base_link(0, 0)), static_cast<double>(t_base_link(0, 1)),

static_cast<double>(t_base_link(0, 2)), static_cast<double>(t_base_link(1, 0)),

static_cast<double>(t_base_link(1, 1)), static_cast<double>(t_base_link(1, 2)),

static_cast<double>(t_base_link(2, 0)), static_cast<double>(t_base_link(2, 1)),

static_cast<double>(t_base_link(2, 2)));

// Update localizer_pose.

localizer_pose.x = t_localizer(0, 3);

localizer_pose.y = t_localizer(1, 3);

localizer_pose.z = t_localizer(2, 3);

mat_l.getRPY(localizer_pose.roll, localizer_pose.pitch, localizer_pose.yaw, 1);

// Update ndt_pose.

// note-tianyu ndt_pose为地图坐标系到车身坐标系的位姿

ndt_pose.x = t_base_link(0, 3);

ndt_pose.y = t_base_link(1, 3);

ndt_pose.z = t_base_link(2, 3);

mat_b.getRPY(ndt_pose.roll, ndt_pose.pitch, ndt_pose.yaw, 1);

current_pose.x = ndt_pose.x;

current_pose.y = ndt_pose.y;

current_pose.z = ndt_pose.z;

current_pose.roll = ndt_pose.roll;

current_pose.pitch = ndt_pose.pitch;

current_pose.yaw = ndt_pose.yaw;

transform.setOrigin(tf::Vector3(current_pose.x, current_pose.y, current_pose.z));

q.setRPY(current_pose.roll, current_pose.pitch, current_pose.yaw);

transform.setRotation(q);

br.sendTransform(tf::StampedTransform(transform, current_scan_time, "map", "base_link"));

scan_duration = current_scan_time - previous_scan_time;

double secs = scan_duration.toSec();

// Calculate the offset (curren_pos - previous_pos)

// note-tianyu 计算前后两帧pose的diff量

diff_x = current_pose.x - previous_pose.x;

diff_y = current_pose.y - previous_pose.y;

diff_z = current_pose.z - previous_pose.z;

diff_yaw = calcDiffForRadian(current_pose.yaw, previous_pose.yaw);

diff = sqrt(diff_x * diff_x + diff_y * diff_y + diff_z * diff_z);

current_velocity_x = diff_x / secs;

current_velocity_y = diff_y / secs;

current_velocity_z = diff_z / secs;

current_pose_imu.x = current_pose.x;

current_pose_imu.y = current_pose.y;

current_pose_imu.z = current_pose.z;

current_pose_imu.roll = current_pose.roll;

current_pose_imu.pitch = current_pose.pitch;

current_pose_imu.yaw = current_pose.yaw;

current_pose_odom.x = current_pose.x;

current_pose_odom.y = current_pose.y;

current_pose_odom.z = current_pose.z;

current_pose_odom.roll = current_pose.roll;

current_pose_odom.pitch = current_pose.pitch;

current_pose_odom.yaw = current_pose.yaw;

current_pose_imu_odom.x = current_pose.x;

current_pose_imu_odom.y = current_pose.y;

current_pose_imu_odom.z = current_pose.z;

current_pose_imu_odom.roll = current_pose.roll;

current_pose_imu_odom.pitch = current_pose.pitch;

current_pose_imu_odom.yaw = current_pose.yaw;

current_velocity_imu_x = current_velocity_x;

current_velocity_imu_y = current_velocity_y;

current_velocity_imu_z = current_velocity_z;

// Update position and posture. current_pos -> previous_pos

previous_pose.x = current_pose.x;

previous_pose.y = current_pose.y;

previous_pose.z = current_pose.z;

previous_pose.roll = current_pose.roll;

previous_pose.pitch = current_pose.pitch;

previous_pose.yaw = current_pose.yaw;

previous_scan_time.sec = current_scan_time.sec;

previous_scan_time.nsec = current_scan_time.nsec;

offset_imu_x = 0.0;

offset_imu_y = 0.0;

offset_imu_z = 0.0;

offset_imu_roll = 0.0;

offset_imu_pitch = 0.0;

offset_imu_yaw = 0.0;

offset_odom_x = 0.0;

offset_odom_y = 0.0;

offset_odom_z = 0.0;

offset_odom_roll = 0.0;

offset_odom_pitch = 0.0;

offset_odom_yaw = 0.0;

offset_imu_odom_x = 0.0;

offset_imu_odom_y = 0.0;

offset_imu_odom_z = 0.0;

offset_imu_odom_roll = 0.0;

offset_imu_odom_pitch = 0.0;

offset_imu_odom_yaw = 0.0;

// Calculate the shift between added_pos and current_pos

//note-tianyu 计算两frame之间的距离间隔,当大于一定阈值时,才像向地图中插入新的点云(子地图)

double shift = sqrt(pow(current_pose.x - added_pose.x, 2.0) + pow(current_pose.y - added_pose.y, 2.0));

// 每n米更新一次全局地图

if (shift >= min_add_scan_shift)

{

map += *transformed_scan_ptr;

added_pose.x = current_pose.x;

added_pose.y = current_pose.y;

added_pose.z = current_pose.z;

added_pose.roll = current_pose.roll;

added_pose.pitch = current_pose.pitch;

added_pose.yaw = current_pose.yaw;

if (_method_type == MethodType::PCL_GENERIC)

//

ndt.setInputTarget(map_ptr); //note-tianyu

else if (_method_type == MethodType::PCL_ANH)

{

if (_incremental_voxel_update == true)

anh_ndt.updateVoxelGrid(transformed_scan_ptr);

else

anh_ndt.setInputTarget(map_ptr);

}

#ifdef CUDA_FOUND

else if (_method_type == MethodType::PCL_ANH_GPU)

anh_gpu_ndt.setInputTarget(map_ptr);

#endif

#ifdef USE_PCL_OPENMP

else if (_method_type == MethodType::PCL_OPENMP)

omp_ndt.setInputTarget(map_ptr);

#endif

}

sensor_msgs::PointCloud2::Ptr map_msg_ptr(new sensor_msgs::PointCloud2);

pcl::toROSMsg(*map_ptr, *map_msg_ptr);

ndt_map_pub.publish(*map_msg_ptr);

q.setRPY(current_pose.roll, current_pose.pitch, current_pose.yaw);

current_pose_msg.header.frame_id = "map";

current_pose_msg.header.stamp = current_scan_time;

current_pose_msg.pose.position.x = current_pose.x;

current_pose_msg.pose.position.y = current_pose.y;

current_pose_msg.pose.position.z = current_pose.z;

current_pose_msg.pose.orientation.x = q.x();

current_pose_msg.pose.orientation.y = q.y();

current_pose_msg.pose.orientation.z = q.z();

current_pose_msg.pose.orientation.w = q.w();

current_pose_pub.publish(current_pose_msg);

// Write log

if (!ofs)

{

std::cerr << "Could not open " << filename << "." << std::endl;

exit(1);

}

ofs << input->header.seq << ","

<< input->header.stamp << ","

<< input->header.frame_id << ","

<< scan_ptr->size() << ","

<< filtered_scan_ptr->size() << ","

<< std::fixed << std::setprecision(5) << current_pose.x << ","

<< std::fixed << std::setprecision(5) << current_pose.y << ","

<< std::fixed << std::setprecision(5) << current_pose.z << ","

<< current_pose.roll << ","

<< current_pose.pitch << ","

<< current_pose.yaw << ","

<< final_num_iteration << ","

<< fitness_score << ","

<< ndt_res << ","

<< step_size << ","

<< trans_eps << ","

<< max_iter << ","

<< voxel_leaf_size << ","

<< min_scan_range << ","

<< max_scan_range << ","

<< min_add_scan_shift << std::endl;

std::cout << "-----------------------------------------------------------------" << std::endl;

std::cout << "Sequence number: " << input->header.seq << std::endl;

std::cout << "Number of scan points: " << scan_ptr->size() << " points." << std::endl;

std::cout << "Number of filtered scan points: " << filtered_scan_ptr->size() << " points." << std::endl;

std::cout << "transformed_scan_ptr: " << transformed_scan_ptr->points.size() << " points." << std::endl;

std::cout << "map: " << map.points.size() << " points." << std::endl;

std::cout << "NDT has converged: " << has_converged << std::endl;

std::cout << "Fitness score: " << fitness_score << std::endl;

std::cout << "Number of iteration: " << final_num_iteration << std::endl;

std::cout << "(x,y,z,roll,pitch,yaw):" << std::endl;

std::cout << "(" << current_pose.x << ", " << current_pose.y << ", " << current_pose.z << ", " << current_pose.roll

<< ", " << current_pose.pitch << ", " << current_pose.yaw << ")" << std::endl;

std::cout << "Transformation Matrix:" << std::endl;

std::cout << t_localizer << std::endl;

std::cout << "shift: " << shift << std::endl;

std::cout << "-----------------------------------------------------------------" << std::endl;

}

int main(int argc, char** argv)

{

...

//4.

ros::Subscriber points_sub = nh.subscribe("points_raw", 100000, points_callback);

//ros::Subscriber points_sub = nh.subscribe("/filtered_points", 100000, points_callback);

ros::Subscriber odom_sub = nh.subscribe("/vehicle/odom", 100000, odom_callback);

ros::Subscriber imu_sub = nh.subscribe(_imu_topic, 100000, imu_callback);

ros::spin();

return 0;

}

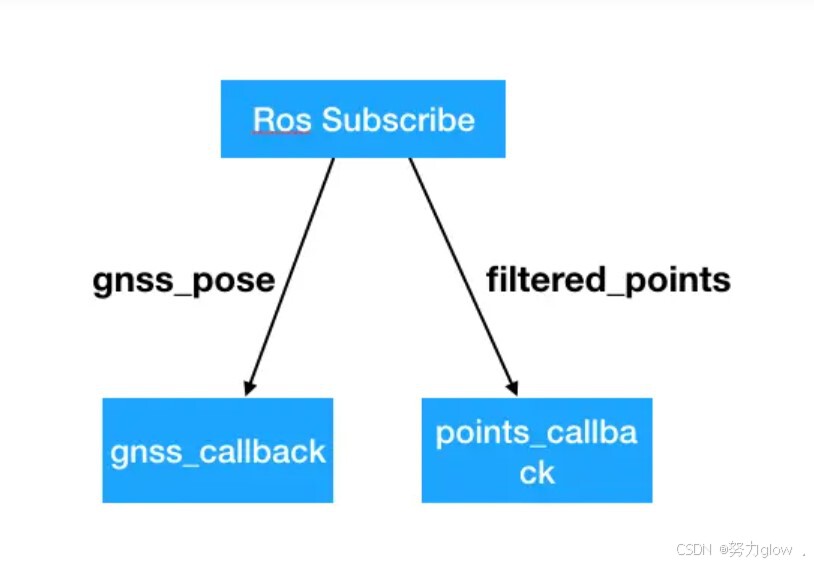

ndt_matching

autoware的定位模块ndt_matching,其中融合了gnss卫星信息,IMU惯导信息,odom里程计,lidar激光雷达点云数据,进行了综合的定位判断。在我们的实验中,只使用GNSS和激光雷达。主要有以下两个topic的回调控制。

Lidar定位

- points_callback:这里主要做的是各种数据的融合定位,首先会结合IMU和odom数据进行一个基本位置预测,然后就是根据,filtered_points消息传过来的点云数据进行矩阵变化,加入到ndt的算法内进行计算得到一个ndt的预测位置。得到了点云匹配算法加入后的位置,与之前利用IMU和里程计计算的位置进行比较

predict_pose_error = sqrt((ndt_pose.x - predict_pose_for_ndt.x) * (ndt_pose.x - predict_pose_for_ndt.x) +

(ndt_pose.y - predict_pose_for_ndt.y) * (ndt_pose.y - predict_pose_for_ndt.y) +

(ndt_pose.z - predict_pose_for_ndt.z) * (ndt_pose.z - predict_pose_for_ndt.z));

if (predict_pose_error <= PREDICT_POSE_THRESHOLD)//默认值0.5

小于0.5说明和计算匹配值相近,则说明定位没有跳跃,设当前位置为点云计算匹配值,否则使用惯导和IMU物理计算综合值

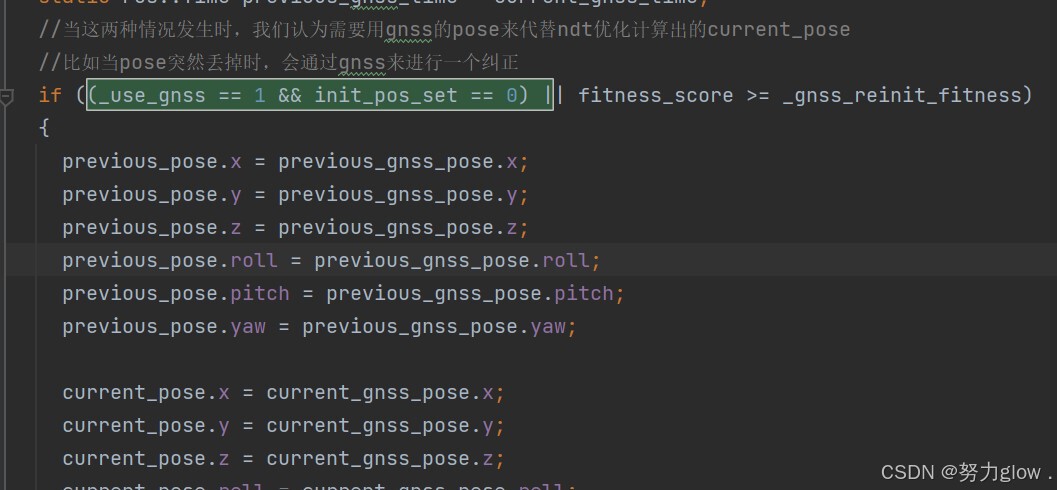

GNSS定位

- gnss_callback:接收到gnss_pose消息,进行判断rosparam参数是否设置使用_use_gnss,init_pos_set是否已经有初始化位置,fitness_score为points_callback中ndt算法点云匹配的结果值,

_gnss_reinit_fitness为静态值500.

(_use_gnss == 1 && init_pos_set == 0) || fitness_score >= _gnss_reinit_fitness

当满足以上条件时,则对当前位置进行重新设置,判断ndt可能存在失准,改用GNSS位置为当前正确位置。这也是对激光雷达定位的一个辅助,这里将会重新设置点云的起始位置,本身点云匹配就会有累计误差出现的,加上gnss后使点云匹配恢复准确度,接下来继续使用以激光雷达定位为主的定位方式。

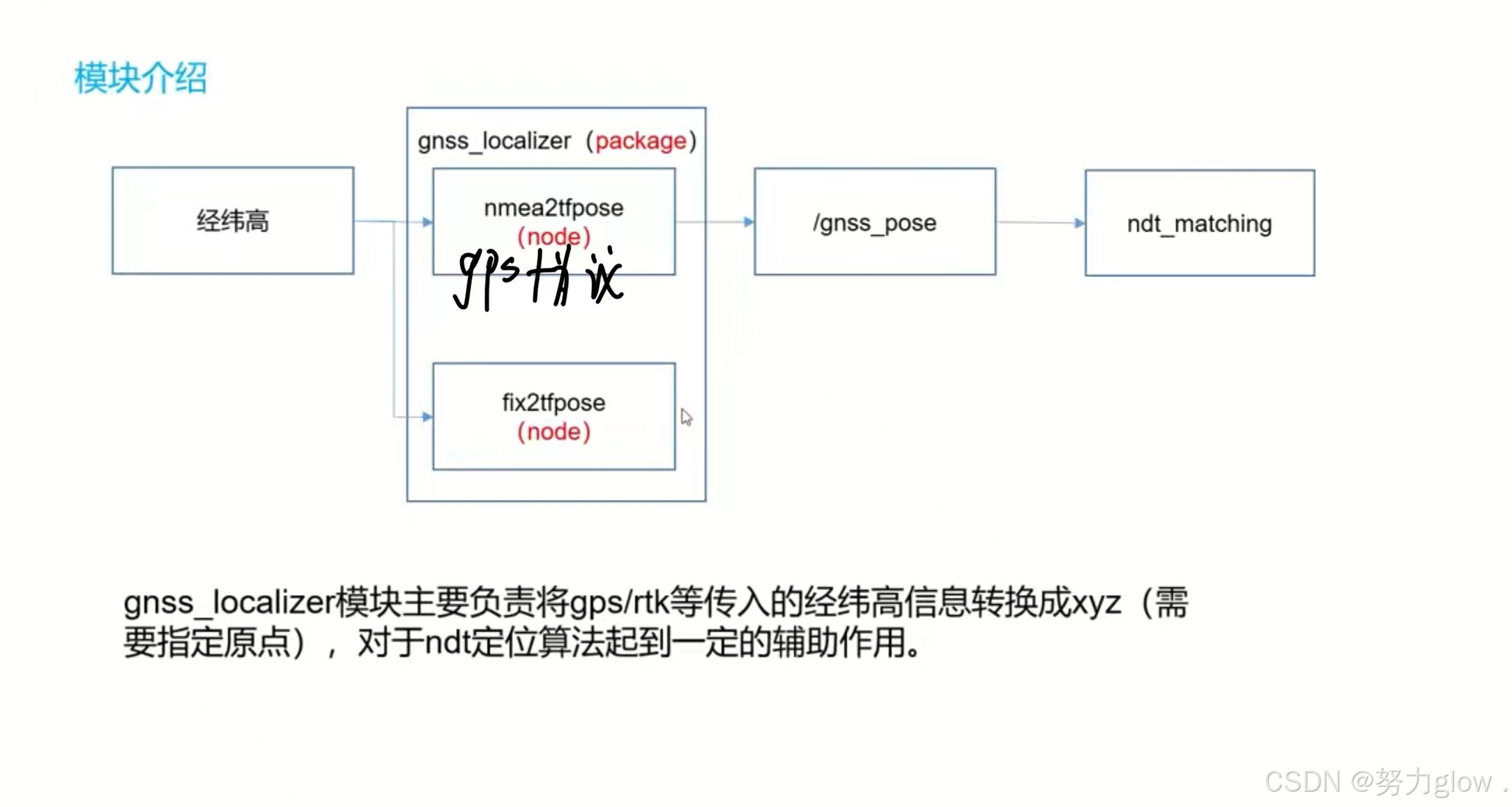

处理GNSS消息(协议)

autoware中对gnss进行处理的包在gnss和gnss_localizer,订阅的topic为/nmea_sentence。

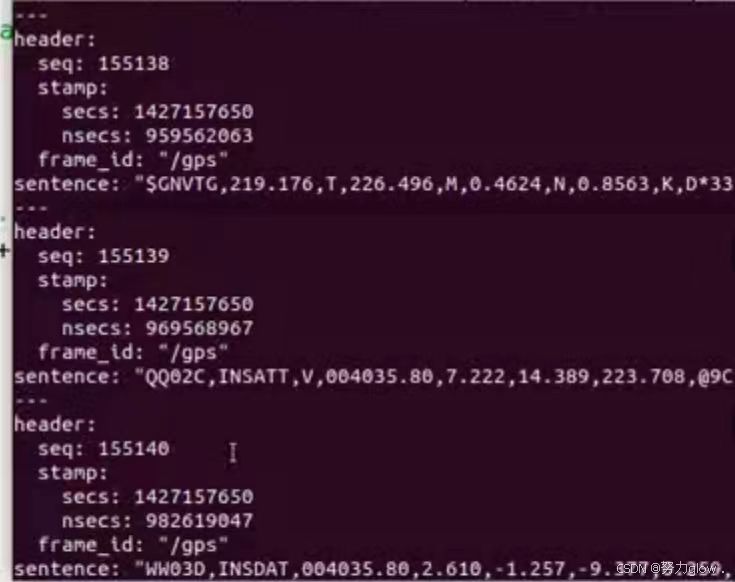

nmea为国际标准的卫星定位传输信号,可以通过华测等定位设备直接读取出来。如一个标准的信息为:

$GPGGA,063201.60,3016.3898531,N,12004.0198533,E,4,19,0.7,6.795,M,7.038,M,1.6,1792*78 $GPRMC,063201.60,A,3016.3898531,N,12004.0198533,E,0.04,190.24,311219,5.6,W,D,C*46

这里就发送了两个标准信息GPGGA和GPRMC,其中会有经纬度,高度,卫星质量等数据,我们使用其中的经纬度即可。

目前主要记录到这,可供简单学习和参考,参考的是autoware的课程~

1174

1174

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?