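This is a record of my first complete competition experience. Through Datawhale's open-source learning program and the discussions with many strong competitors, I learned a great deal.

Competition website: https://www.xuelangyun.com/#/sign-up-statistics?tab=0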

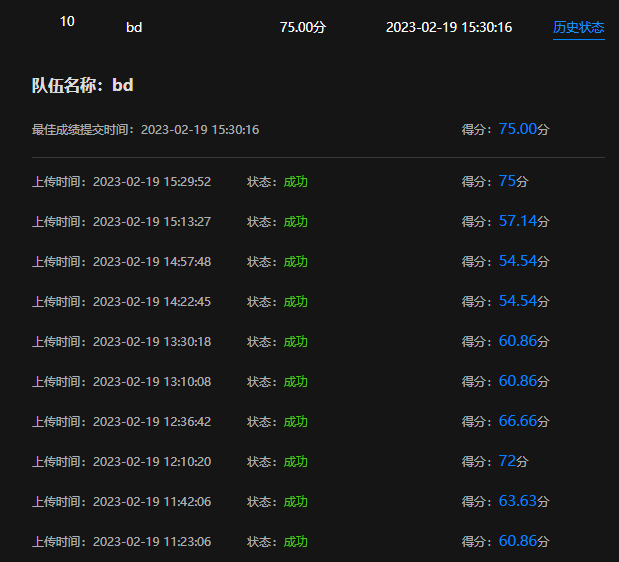

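Final rank: 55/263

Task: pass/fail inspection of products on a valve-body assembly line (binary classification on time-series data, anomaly detection)

My approach was as follows:

Read the CSV data and build features

Statistical features: minimum, maximum, standard deviation, variance, range, mean, root mean square;

Time-domain features: total energy, skewness, kurtosis, shape factor, crest factor, impulse factor, clearance factor;

Frequency-domain features: total energy, maximum, minimum, standard deviation, variance, range, mean, skewness, and root mean square of the data after an FFT (fast Fourier transform).

For detailed code for these features, see this post on time- and frequency-domain features: http://t.csdn.cn/qP8cx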
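The frequency-domain features are only listed here, so the following is a minimal sketch of how they could be computed with NumPy. It is not the competition code: the helper name `fft_features`, the `fft_` key prefix, the use of the one-sided amplitude spectrum, and the definition of "total energy" as the sum of squared amplitudes are all assumptions made for illustration.

```python
import numpy as np
from scipy.stats import skew


def fft_features(signal):
    """Summary statistics of the one-sided FFT amplitude spectrum (hypothetical helper)."""
    x = np.asarray(signal, dtype=float)
    amp = np.abs(np.fft.rfft(x))  # amplitude of each non-negative frequency bin
    return {
        'fft_energy': float(np.sum(amp ** 2)),   # assumed definition of total energy
        'fft_max': float(amp.max()),
        'fft_min': float(amp.min()),
        'fft_std': float(amp.std(ddof=1)),
        'fft_var': float(amp.var(ddof=1)),
        'fft_pk': float(amp.max() - amp.min()),  # range of the spectrum
        'fft_mean': float(amp.mean()),
        'fft_skew': float(skew(amp)),
        'fft_rms': float(np.sqrt(np.mean(amp ** 2))),
    }


# Toy signal: a 5 Hz sine sampled at 100 Hz for one second
t = np.arange(100) / 100.0
feats = fft_features(np.sin(2 * np.pi * 5 * t))
print(feats)
```

For a real sensor file, the same function would be applied to the single measured column of each per-sample CSV.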

# Feature-extraction function
import os
import pickle
import json

import numpy as np
import pandas as pd
from tqdm import tqdm

def get_each_sample_feature(ori_path):
    data = {}
    feature_name_list = []
    columns_feature_name = ['sum','min','max','std','var','pk','mean','skew','kurt','rms','boxing','fengzhi','maichong','yudu','25','50','75']
    # Row of NaN used when a sample has no data for a given sensor
    default_parameters = [float('nan') for i in range(len(columns_feature_name))]
    columns = ['id']
    # Sample IDs are taken from the file names in one reference sensor folder
    sample_list = os.listdir(os.path.join(ori_path,'P1000','Report_P1000_Time'))
    for sample in sample_list:
        sample_name = sample.replace('.csv','')
        data[sample_name] = []
    # Every station/sensor folder contributes one group of features
    station_list = os.listdir(ori_path)
    for station in station_list:
        for sensor in os.listdir(os.path.join(ori_path,station)):
            feature_name_list.append(ori_path+'/%s/%s'%(station,sensor))
    for feature_name in tqdm(feature_name_list):
        tmp_name = '_'.join(feature_name.split('/')[-2:])
        for k in columns_feature_name:
            columns.append(tmp_name+'_'+k)
        for key in data.keys():
            sample_path = feature_name+'/'+key+'.csv'
            if os.path.exists(sample_path):
                sample_onesensor_pd = pd.read_csv(sample_path)
                if sample_onesensor_pd.shape[0] == 0:
                    sample_ = default_parameters
                else:
                    x = sample_onesensor_pd.iloc[:, 0]   # measured series (first column of the CSV)
                    pk = x.max() - x.min()               # peak-to-peak range
                    rms = np.sqrt(np.mean(x ** 2))       # root mean square over all points
                    sample_ = [x.sum(),
                               x.min(),
                               x.max(),
                               x.std(),
                               x.var(),
                               pk,
                               x.mean(),
                               x.skew(),
                               x.kurt(),
                               rms,
                               rms / x.mean(),                         # boxing (shape factor)
                               pk / rms,                               # fengzhi (crest factor)
                               pk / x.mean(),                          # maichong (impulse factor)
                               pk / np.mean(np.sqrt(np.abs(x))) ** 2,  # yudu (clearance factor)
                               np.percentile(x, 25),
                               np.percentile(x, 50),
                               np.percentile(x, 75)]
            else:
                sample_ = default_parameters
            for i in sample_:
                data[key].append(i)
    return data,columns

The code above follows the approach of Datawhale's open-source baseline, extended with features of my own. Datawhale's write-up and open-source code are here:

https://datawhaler.feishu.cn/docx/T3Stdh8nFo4FSwxpTX8cFI0rnnd
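For reference, the four dimensionless factors that the extraction function stores as `boxing`, `fengzhi`, `maichong` and `yudu` are usually defined from the absolute peak and the mean absolute value, whereas the function above uses the peak-to-peak range and the signed mean. The sketch below shows the common textbook form; the helper name `dimensionless_factors` and the English key names are my own and are not part of the competition code.

```python
import numpy as np


def dimensionless_factors(signal):
    """Textbook time-domain factors (hypothetical helper, not the formulas used above)."""
    x = np.asarray(signal, dtype=float)
    abs_x = np.abs(x)
    peak = abs_x.max()              # absolute peak value
    rms = np.sqrt(np.mean(x ** 2))  # root mean square
    abs_mean = abs_x.mean()         # mean absolute value
    return {
        'shape_factor': rms / abs_mean,                           # boxing
        'crest_factor': peak / rms,                               # fengzhi
        'impulse_factor': peak / abs_mean,                        # maichong
        'clearance_factor': peak / np.mean(np.sqrt(abs_x)) ** 2,  # yudu
    }


# A +/-1 square wave: peak, RMS and mean absolute value are all 1
factors = dimensionless_factors([1.0, -1.0, 1.0, -1.0])
print(factors)
```

An all-zero signal would divide by zero here, so a real pipeline would need to guard against that case.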

Next, extract the features from the NG and OK samples and save them locally as OK.csv and NG.csv for later use.

OK,columns = get_each_sample_feature('train/OK')
pd.DataFrame(list(OK.values()),columns=columns[1:]).to_csv('OK.csv',index = False)
NG,columns = get_each_sample_feature('train/NG')
pd.DataFrame(list(NG.values()),columns=columns[1:]).to_csv('NG.csv',index = False)

The code above turns the per-sample CSVs scattered across the inspection-station folders into a single feature CSV for each class.

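Load the feature data, preprocess it, then select features

Load the feature data

# Load the OK features, drop rows and columns that are entirely empty, and assign label 0
OK = pd.read_csv('OK.csv')
OK = OK.dropna(axis = 0,how = 'all')
OK = OK.dropna(axis = 1,how = 'all')
OK['label'] = 0
OK

# Same for the NG features, with label 1
NG = pd.read_csv('NG.csv')
NG = NG.dropna(axis = 0,how = 'all')
NG = NG.dropna(axis = 1,how = 'all')
NG['label'] = 1
NG

# Concatenate the OK and NG features
data = pd.concat([OK,NG])
data

# Count the missing values per column and in total
print(data.isna().sum())
print(data.isna().sum().sum())

Some of the features I built have many missing values, so features with too many missing values need to be dropped. A feature that is 50% missing, for example, can be considered too sparse.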
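To make the 50% rule of thumb concrete, here is a small sketch on a toy table. The frame `toy` and the cut-off name `max_missing` are made up for illustration; the only pandas calls used are `isna()`, `mean()` and boolean column selection with `loc`.

```python
import numpy as np
import pandas as pd

toy = pd.DataFrame({
    'a': [1.0, 2.0, 3.0, 4.0],           # no missing values
    'b': [np.nan, np.nan, np.nan, 1.0],  # 75% missing
    'c': [1.0, np.nan, 3.0, 4.0],        # 25% missing
})

max_missing = 0.5                         # hypothetical cut-off
missing_frac = toy.isna().mean()          # per-column fraction of NaN
kept = toy.loc[:, missing_frac < max_missing]
print(missing_frac)
print(list(kept.columns))
```

Expressing the cut-off directly as the largest tolerated missing fraction avoids having to reason about a complementary ratio.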

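# Fraction of missing values in each column
data.isna().mean()

# Function that finds the columns whose missing fraction is too high.
# The ratio parameter sets the cut-off: a column is flagged when its missing fraction is >= 1 - ratio,
# so ratio=0.01 removes features that are at least 99% missing.
def filter_col_by_nan(df, ratio=0.05):
    cols = []
    for col in df.columns:
        if df[col].isna().mean() >= (1-ratio):
            cols.append(col)
    return cols

# For example, to drop the columns whose missing fraction is at least 0.02:
nanfeature = filter_col_by_nan(data, ratio=0.98)
nanfeature

data = data.drop(labels = nanfeature,axis = 1)
data

Filling missing values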

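The usual choices are to fill missing values with the mean, the median, or the mode (or even simply with a constant).

But this scenario is different:

For an NG sample, as soon as a sensor at an inspection station detects abnormal data, the valve body is judged defective and the remaining measurements are never taken. Some valve bodies therefore have missing values, i.e. they do not have the full set of valid features.

For this case I fill each missing value with a value drawn at random from the OK samples. In other words, a feature that was never measured is assumed by default to be one that would have passed the inspection station.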
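The fill strategy just described can be sketched on a toy example. This is a simplified, vectorized illustration under my own assumptions: the frames `ok_toy` and `ng_toy`, the helper name `fill_from_ok`, and the seeded NumPy `Generator` are all hypothetical and chosen only to keep the example reproducible.

```python
import numpy as np
import pandas as pd

rng = np.random.default_rng(2023)

ok_toy = pd.DataFrame({'f1': [1.0, 2.0, 3.0], 'f2': [10.0, np.nan, 30.0]})
ng_toy = pd.DataFrame({'f1': [9.0, np.nan], 'f2': [np.nan, np.nan]})


def fill_from_ok(df, ok_df, rng):
    """Fill NaN column by column with values drawn from the non-missing OK values."""
    out = df.copy()
    for col in out.columns:
        pool = ok_df[col].dropna().to_numpy()  # candidate values from OK samples
        mask = out[col].isna().to_numpy()      # positions that need a value
        if mask.any() and pool.size > 0:
            out.loc[mask, col] = rng.choice(pool, size=int(mask.sum()))
    return out


filled = fill_from_ok(ng_toy, ok_toy, rng)
print(filled)
```

Measured values such as the 9.0 in `f1` are left untouched; only the unmeasured cells receive a value sampled from the OK pool.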

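# Missing-value fill function
def fill_with_random(df, column):
    # Candidate values: the non-missing values of this column among the OK samples
    pool = OK[column].dropna().values
    df[column] = df[column].apply(lambda x: np.random.choice(pool) if np.isnan(x) else x)
    return df

for i in tqdm(data.columns):
    data=fill_with_random(data,i)

# Check again whether any missing values remain
print(data.isna().sum())

Feature selection

Variance filter + Pearson correlation coefficient (only picks up linear relationships)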

train_data=data.drop(labels='label', axis=1)
train_data

from sklearn.feature_selection import VarianceThreshold
# Keep only the features whose variance exceeds the median variance
var = VarianceThreshold(threshold = np.median(train_data.var().values))
# var = VarianceThreshold(threshold = 0)
var.fit(train_data)
is_select = var.get_support()
var_feature = train_data.iloc[:,is_select]
var_feature

x_array = np.array(var_feature)
x_array
y_array = np.array(data['label'])
y_array

from scipy.stats import pearsonr
# Score function for SelectKBest: |Pearson r| of every column against the label
def multivariate_pearsonr(X, Y):
    scores, pvalues = [], []
    for column in range(X.shape[1]):
        cur_score, cur_p = pearsonr(X[:,column], Y)
        scores.append(abs(cur_score))
        pvalues.append(cur_p)
    return (np.array(scores), np.array(pvalues))

from sklearn.feature_selection import SelectKBest
m_pearsonr = SelectKBest(score_func=multivariate_pearsonr, k=50)
X_pearson = m_pearsonr.fit_transform(x_array, y_array)
print(m_pearsonr.scores_)

# Named pearson_scores so that the imported pearsonr function is not overwritten
pearson_scores = pd.DataFrame(m_pearsonr.scores_, columns = ["pearsonr"], index=var_feature.columns)
pearson_scores = pearson_scores.reset_index()
pearson_scores.sort_values('pearsonr',ascending=False)

Random-forest feature importance (negative samples are scarce, so the importances may be unreliable)

x = data.drop(labels='label', axis=1)
y = data['label']

from sklearn.feature_selection import SelectFromModel
from sklearn.ensemble import RandomForestClassifier as rfc
from sklearn.model_selection import cross_val_score
import numpy as np
import matplotlib.pyplot as plt

rfc_ = rfc(n_estimators=88,random_state=2023)
# Fit once and reuse the importances
importances = rfc_.fit(x,y).feature_importances_
max_fi = max(importances)
max_fi

# 20 candidate importance thresholds between 0 and the largest importance
threshold = np.linspace(0,max_fi,20)
threshold

scores = []
for i in threshold:
    X_embedded = SelectFromModel(rfc_,threshold=i).fit_transform(x,y)
    score = cross_val_score(rfc_,X_embedded,y,cv=5).mean()
    scores.append(score)
plt.plot(threshold,scores)
plt.show()
print("Best cross-validation score:",max(scores))
print("Threshold at the best score:",threshold[scores.index(max(scores))])

a = pd.DataFrame({'feature':x.columns.to_list()})
a
b = pd.DataFrame({'feature_importances_':importances})
b
c = pd.concat([a,b],axis=1)
c = c.sort_values(by='feature_importances_',ascending=False)
#c = c[:60]
c

LightGBM feature importance

from sklearn.model_selection import StratifiedKFold
import lightgbm as lgb

def select_by_lgb(train_data,train_label,random_state=2023,n_splits=5,metric='auc',num_round=10000,early_stopping_rounds=100):
    kfold = StratifiedKFold(n_splits=n_splits, shuffle=True, random_state=random_state)
    feature_importances = pd.DataFrame()
    feature_importances['feature'] = train_data.columns
    for fold, (train_idx, val_idx) in enumerate(kfold.split(train_data,train_label)):
        train_x = train_data.iloc[train_idx]
        train_y = train_label.iloc[train_idx]
        test_x = train_data.iloc[val_idx]
        test_y = train_label.iloc[val_idx]
        train_matrix=lgb.Dataset(train_x,label=train_y)
        test_matrix=lgb.Dataset(test_x,label=test_y)
        params={
            'boosting_type': 'gbdt',
            'objective': 'binary',
            'learning_rate': 0.1,
            'metric': metric,
            'seed': 2020,
            'nthread':-1 ,
            'verbose': -1}
        # Early stopping is passed as a callback; recent LightGBM releases
        # no longer accept early_stopping_rounds as an argument of train()
        model=lgb.train(params,train_matrix,num_round,valid_sets=[test_matrix],
                        callbacks=[lgb.early_stopping(stopping_rounds=early_stopping_rounds)])
        feature_importances['fold_{}'.format(fold + 1)] = model.feature_importance()
    # Average the importances over the folds
    feature_importances['average']=feature_importances[['fold_{}'.format(i) for i in range(1,n_splits+1)]].mean(axis=1)
    return feature_importances

train_data = data.drop(labels='label', axis=1)
train_data
target = data['label']
target

feature_importances=select_by_lgb(train_data,target)
feature_importances=feature_importances.sort_values(by='average',ascending=False)
feature_importances

Mutual-information feature selection (picks up both linear and nonlinear relationships)

X = data.drop(labels='label', axis=1)
y = data['label']

from sklearn.feature_selection import mutual_info_classif as MIC
result = MIC(X,y)
# Number of features whose mutual information with the label is greater than 0
k = result.shape[0] - sum(result <= 0)

a = pd.DataFrame({'feature':X.columns.to_list()})
a
b = pd.DataFrame({'feature_importances_':result})
b
c = pd.concat([a,b],axis=1)
c = c.sort_values(by='feature_importances_',ascending=False)
c

A brief summary of feature-selection methods: https://cloud.tencent.com/developer/article/1848218#
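The remark that Pearson correlation only picks up linear relationships while mutual information also picks up nonlinear ones can be checked on synthetic data. The sketch below is a toy illustration, not competition data: the label depends on `|x|`, so it is strongly related to `x` without being linearly related to it, and `noise` is an unrelated feature added for comparison.

```python
import numpy as np
from scipy.stats import pearsonr
from sklearn.feature_selection import mutual_info_classif

rng = np.random.default_rng(0)
x = rng.uniform(-1, 1, 2000)
label = (np.abs(x) > 0.5).astype(int)  # depends on x, but not linearly
noise = rng.normal(size=2000)          # unrelated feature

r_x, _ = pearsonr(x, label)
mi = mutual_info_classif(np.column_stack([x, noise]), label, random_state=0)

print(f"Pearson r of x with the label: {r_x:.3f}")
print(f"Mutual information: x={mi[0]:.3f}, noise={mi[1]:.3f}")
```

The Pearson coefficient of `x` stays close to zero, while its mutual-information score is clearly larger than that of the noise feature, which is why the two filters can rank the same feature very differently.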

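Because the competition allows at most 50 features, a little more code is needed to check whether the limit is exceeded. The code below strips the statistic suffix from each selected feature name and counts the distinct station/sensor sources that remain.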

suffixes = ['sum','min','max','std','var','pk','mean','skew','kurt','rms','boxing','fengzhi','maichong','yudu','25','50','75']
feature_list = []
for feature in list(c['feature'][:100]):
    # Strip the trailing statistic suffix to recover the station_sensor source name
    for suffix in suffixes:
        if feature.endswith('_' + suffix):
            feature = feature[:-(len(suffix) + 1)]
            break
    feature_list.append(feature)

# De-duplicate while keeping the original order
new_feature_list = sorted(set(feature_list),key = feature_list.index)
new_feature_list
len(new_feature_list)

allfeature = list(c['feature'][:100])
allfeature

Model building

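Any of the usual machine-learning models can be used here, such as random forest, LightGBM, XGBoost, or CatBoost. However, my features overfit the validation data and these models did not perform well, so I switched to a GBDT+LR model for training and prediction. The results were still poor, so I never got as far as hyperparameter tuning or anything beyond it. I won't include the code here; if you are interested in the GBDT+LR model, see http://t.csdn.cn/t6ZKI . The code is in the GitHub repository linked at the end of the post.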
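For readers who want a quick picture of the GBDT+LR idea without following the link, here is a minimal scikit-learn sketch. It is not the code used in the competition: the synthetic data from `make_classification`, the hyperparameters, and all variable names are assumptions for illustration. The GBDT is fitted first, each sample is then described by the index of the leaf it reaches in every tree, and a logistic regression is trained on the one-hot encoding of those leaf indices.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import GradientBoostingClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import OneHotEncoder

# Synthetic, imbalanced stand-in for the competition features
X, y = make_classification(n_samples=600, n_features=20,
                           weights=[0.9, 0.1], random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, stratify=y, random_state=0)

# Step 1: fit the GBDT
gbdt = GradientBoostingClassifier(n_estimators=30, max_depth=3, random_state=0)
gbdt.fit(X_train, y_train)

# Step 2: leaf index of every sample in every tree, shape (n_samples, n_estimators)
train_leaves = gbdt.apply(X_train)[:, :, 0]
test_leaves = gbdt.apply(X_test)[:, :, 0]

# Step 3: one-hot encode the leaf indices and fit the logistic regression on them
encoder = OneHotEncoder(handle_unknown='ignore')
lr = LogisticRegression(max_iter=1000)
lr.fit(encoder.fit_transform(train_leaves), y_train)

# Predicted probability of the positive (NG) class
proba = lr.predict_proba(encoder.transform(test_leaves))[:, 1]
print(proba[:5])
```

In this sketch the GBDT and the logistic regression see the same training rows for brevity; fitting them on separate splits is a common way to reduce overfitting.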

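The k-fold cross-validation is still worth writing down, because until now I had only used k-fold cross-validation for tuning random forests. Since the classes are imbalanced, I did not use train_test_split or KFold here. Instead I used StratifiedKFold, which splits the dataset according to the label proportions.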
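The difference between the two splitters is easy to see on a toy label vector. In this sketch the 90/10 class split and the names `y_toy` and `X_toy` are made up for illustration; the labels are sorted, which is the worst case for a plain `KFold` without shuffling.

```python
import numpy as np
from sklearn.model_selection import KFold, StratifiedKFold

# 90 OK (0) and 10 NG (1) samples, sorted by label
y_toy = np.array([0] * 90 + [1] * 10)
X_toy = np.zeros((100, 1))

skf = StratifiedKFold(n_splits=5, shuffle=True, random_state=0)
strat_counts = [int(y_toy[test].sum()) for _, test in skf.split(X_toy, y_toy)]

kf_plain = KFold(n_splits=5)
plain_counts = [int(y_toy[test].sum()) for _, test in kf_plain.split(X_toy)]

print(strat_counts)  # [2, 2, 2, 2, 2]
print(plain_counts)  # [0, 0, 0, 0, 10]
```

With stratification every test fold contains NG samples, so F1 and recall are defined for each fold. With the plain split, four of the five folds contain no NG samples at all.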

from sklearn.model_selection import StratifiedKFold
from sklearn.metrics import f1_score, recall_score
import xgboost as xgb

kf = StratifiedKFold(n_splits=5, shuffle=True)
f1_scores1 = []
recall_scores1 = []
# train_data and target are the feature table and the label column defined earlier
samples = np.array(train_data)
labels = np.array(target)
for train_index, test_index in kf.split(samples,labels):
    X_train, X_test = samples[train_index], samples[test_index]
    y_train, y_test = labels[train_index], labels[test_index]
    # Number of NG samples in this test fold
    ng_nums = y_test.sum()
    print('Test ok nums: {}'.format(len(y_test) - ng_nums))
    print('Test NG nums: {}'.format(ng_nums))
    model1 = xgb.XGBClassifier(max_depth=8, learning_rate=0.05,n_estimators=100, objective='binary:logistic',
                               eval_metric=['logloss', 'auc', 'error'],
                               use_label_encoder=False)
    model1.fit(X_train,y_train)
    test_pred1 = model1.predict(X_test)
    f1_scores1.append(f1_score(y_test,test_pred1))
    recall_scores1.append(recall_score(y_test,test_pred1,average='binary'))
print(f1_scores1)
print(recall_scores1)

Model-ensembling code:

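from sklearn.ensemble import RandomForestClassifier, VotingClassifier
from catboost import CatBoostClassifier
import xgboost as xgb

# VotingClassifier needs estimator instances. The hyperparameters below are placeholders,
# and cbc is assumed to stand for a CatBoost classifier.
rfc_clf = RandomForestClassifier(n_estimators=88, random_state=2023)
cbc_clf = CatBoostClassifier(verbose=0)
xgb_clf = xgb.XGBClassifier()
voting_clf = VotingClassifier(estimators=[('rfc',rfc_clf),('cbc',cbc_clf),('xgb',xgb_clf)],
                              voting='soft')
for clf in (rfc_clf, cbc_clf, xgb_clf, voting_clf):
    clf.fit(X_train, y_train)
    y_pred = clf.predict(X_test)

Common Docker operations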

# Check disk space on Linux
df -h
# Install dependencies
pip install -r requirements.txt
# Build the Docker image
chmod +x build.sh
sudo ./build.sh
# Mount the data into a container
sudo docker run -it -v /home/wyp/data:/code/components/data components-demo:1.0.0 /bin/bash
sudo docker run -it -v /home/wyp/train/NG:/code/components/data components-demo:1.0.0 /bin/bash
# Run the code
chmod +x run.sh
./run.sh

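Summary

First, because my understanding of the business background was not accurate enough, and I never fully understood the abnormal NG samples, I built features by mindlessly piling them up. Some were unnecessary and some were redundant, and I selected and used them without examining them. After the competition I saw that the top competitors had done EDA, found useful facts such as some features being identical, and built their features on that basis. That taught me how much feature engineering matters: good features are the foundation of a good result. I also learned new ways to handle and filter missing values, stopped clinging to a single model, tried several models, ran k-fold cross-validation, and tried a voting ensemble. Overall I learned a lot.

Second, it really is better not to watch the leaderboard during a competition, because comparing yourself with others is infuriating. Just do your own work, and when you have learned something or have a result, submit it and see. Ruining your health over a competition is not worth it. Competitions do involve a good deal of luck: late in the competition, by pure coincidence, my score went from 50-odd to 75 points, which would not have happened without some luck.

Finally, thanks to Fei-ge for the discussions and shared learning throughout the whole competition, to the experts in the group chat for answering questions, to Jun-ge for his guidance, and to my teammate Kaikai for the collaboration.

Next up are the 2023 CCF DCIC fraud-risk identification task (risk control, binary classification) and the product measurement prediction track (regression) of the 6th National Industrial Internet Data Innovation and Application Competition. On to the next battle!!!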
