问题

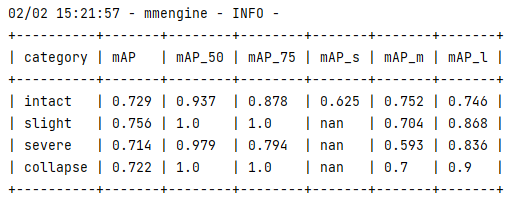

在训练自己的Mask-R-CNN模型时,正常情况下只能打印coocapi评估指标,形式如下

开启打印每一类的评估数据时,仅仅只能打印mAP的值

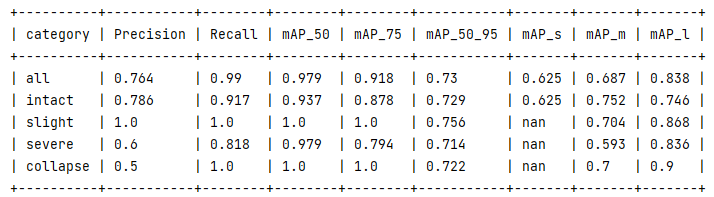

修改后如下

可以打印真实的平均准确率(Precision)、召回率(Recall)、mAP

注:下图的mAP_50_95即是上图的mAP值

实验环境

mmdetection==3.3.0

修改步骤

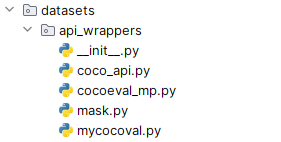

mmdet/datasets/api_wrappers项目最终目录

1.粘贴pycocotools工具包中的mask.py至mmdet/datasets/api_wrappers下,其代码如下,新建mask.py将下面代码粘贴即可

mask.py

__author__ = 'tsungyi'

import pycocotools._mask as _mask

# Interface for manipulating masks stored in RLE format.

#

# RLE is a simple yet efficient format for storing binary masks. RLE

# first divides a vector (or vectorized image) into a series of piecewise

# constant regions and then for each piece simply stores the length of

# that piece. For example, given M=[0 0 1 1 1 0 1] the RLE counts would

# be [2 3 1 1], or for M=[1 1 1 1 1 1 0] the counts would be [0 6 1]

# (note that the odd counts are always the numbers of zeros). Instead of

# storing the counts directly, additional compression is achieved with a

# variable bitrate representation based on a common scheme called LEB128.

#

# Compression is greatest given large piecewise constant regions.

# Specifically, the size of the RLE is proportional to the number of

# *boundaries* in M (or for an image the number of boundaries in the y

# direction). Assuming fairly simple shapes, the RLE representation is

# O(sqrt(n)) where n is number of pixels in the object. Hence space usage

# is substantially lower, especially for large simple objects (large n).

#

# Many common operations on masks can be computed directly using the RLE

# (without need for decoding). This includes computations such as area,

# union, intersection, etc. All of these operations are linear in the

# size of the RLE, in other words they are O(sqrt(n)) where n is the area

# of the object. Computing these operations on the original mask is O(n).

# Thus, using the RLE can result in substantial computational savings.

#

# The following API functions are defined:

# encode - Encode binary masks using RLE.

# decode - Decode binary masks encoded via RLE.

# merge - Compute union or intersection of encoded masks.

# iou - Compute intersection over union between masks.

# area - Compute area of encoded masks.

# toBbox - Get bounding boxes surrounding encoded masks.

# frPyObjects - Convert polygon, bbox, and uncompressed RLE to encoded RLE mask.

#

# Usage:

# Rs = encode( masks )

# masks = decode( Rs )

# R = merge( Rs, intersect=false )

# o = iou( dt, gt, iscrowd )

# a = area( Rs )

# bbs = toBbox( Rs )

# Rs = frPyObjects( [pyObjects], h, w )

#

# In the API the following formats are used:

# Rs - [dict] Run-length encoding of binary masks

# R - dict Run-length encoding of binary mask

# masks - [hxwxn] Binary mask(s) (must have type np.ndarray(dtype=uint8) in column-major order)

# iscrowd - [nx1] list of np.ndarray. 1 indicates corresponding gt image has crowd region to ignore

# bbs - [nx4] Bounding box(es) stored as [x y w h]

# poly - Polygon stored as [[x1 y1 x2 y2...],[x1 y1 ...],...] (2D list)

# dt,gt - May be either bounding boxes or encoded masks

# Both poly and bbs are 0-indexed (bbox=[0 0 1 1] encloses first pixel).

#

# Finally, a note about the intersection over union (iou) computation.

# The standard iou of a ground truth (gt) and detected (dt) object is

# iou(gt,dt) = area(intersect(gt,dt)) / area(union(gt,dt))

# For "crowd" regions, we use a modified criteria. If a gt object is

# marked as "iscrowd", we allow a dt to match any subregion of the gt.

# Choosing gt' in the crowd gt that best matches the dt can be done using

# gt'=intersect(dt,gt). Since by definition union(gt',dt)=dt, computing

# iou(gt,dt,iscrowd) = iou(gt',dt) = area(intersect(gt,dt)) / area(dt)

# For crowd gt regions we use this modified criteria above for the iou.

#

# To compile run "python setup.py build_ext --inplace"

# Please do not contact us for help with compiling.

#

# Microsoft COCO Toolbox. version 2.0

# Data, paper, and tutorials available at: http://mscoco.org/

# Code written by Piotr Dollar and Tsung-Yi Lin, 2015.

# Licensed under the Simplified BSD License [see coco/license.txt]

iou = _mask.iou

merge = _mask.merge

frPyObjects = _mask.frPyObjects

def encode(bimask):

if len(bimask.shape) == 3:

return _mask.encode(bimask)

elif len(bimask.shape) == 2:

h, w = bimask.shape

return _mask.encode(bimask.reshape((h, w, 1), order='F'))[0]

def decode(rleObjs):

if type(rleObjs) == list:

return _mask.decode(rleObjs)

else:

return _mask.decode([rleObjs])[:,:,0]

def area(rleObjs):

if type(rleObjs) == list:

return _mask.area(rleObjs)

else:

return _mask.area([rleObjs])[0]

def toBbox(rleObjs):

if type(rleObjs) == list:

return _mask.toBbox(rleObjs)

else:

return _mask.toBbox([rleObjs])[0]

2.新建自己的cocoval.py,命名为mycocoval.py,路径:mmdet/datasets/api_wrappers/mycocoval.py

mycocoval.py

__author__ = 'tsungyi'

import numpy as np

import datetime

import time

from collections import defaultdict

from . import mask as maskUtils

import copy

class COCOeval:

# Interface for evaluating detection on the Microsoft COCO dataset.

#

# The usage for CocoEval is as follows:

# cocoGt=..., cocoDt=... # load dataset and results

# E = CocoEval(cocoGt,cocoDt); # initialize CocoEval object

# E.params.recThrs = ...; # set parameters as desired

# E.evaluate(); # run per image evaluation

# E.accumulate(); # accumulate per image results

# E.summarize(); # display summary metrics of results

# For example usage see evalDemo.m and http://mscoco.org/.

#

# The evaluation parameters are as follows (defaults in brackets):

# imgIds - [all] N img ids to use for evaluation

# catIds - [all] K cat ids to use for evaluation

# iouThrs - [.5:.05:.95] T=10 IoU thresholds for evaluation

# recThrs - [0:.01:1] R=101 recall thresholds for evaluation

# areaRng - [...] A=4 object area ranges for evaluation

# maxDets - [1 10 100] M=3 thresholds on max detections per image

# iouType - ['segm'] set iouType to 'segm', 'bbox' or 'keypoints'

# iouType replaced the now DEPRECATED useSegm parameter.

# useCats - [1] if true use category labels for evaluation

# Note: if useCats=0 category labels are ignored as in proposal scoring.

# Note: multiple areaRngs [Ax2] and maxDets [Mx1] can be specified.

#

# evaluate(): evaluates detections on every image and every category and

# concats the results into the "evalImgs" with fields:

# dtIds - [1xD] id for each of the D detections (dt)

# gtIds - [1xG] id for each of the G ground truths (gt)

# dtMatches - [TxD] matching gt id at each IoU or 0

# gtMatches - [TxG] matching dt id at each IoU or 0

# dtScores - [1xD] confidence of each dt

# gtIgnore - [1xG] ignore flag for each gt

# dtIgnore - [TxD] ignore flag for each dt at each IoU

#

# accumulate(): accumulates the per-image, per-category evaluation

# results in "evalImgs" into the dictionary "eval" with fields:

# params - parameters used for evaluation

# date - date evaluation was performed

# counts - [T,R,K,A,M] parameter dimensions (see above)

# precision - [TxRxKxAxM] precision for every evaluation setting

# recall - [TxKxAxM] max recall for every evaluation setting

# Note: precision and recall==-1 for settings with no gt objects.

#

# See also coco, mask, pycocoDemo, pycocoEvalDemo

#

# Microsoft COCO Toolbox. version 2.0

# Data, paper, and tutorials available at: http://mscoco.org/

# Code written by Piotr Dollar and Tsung-Yi Lin, 2015.

# Licensed under the Simplified BSD License [see coco/license.txt]

def __init__(self, cocoGt=None, cocoDt=None, iouType='segm'):

'''

Initialize CocoEval using coco APIs for gt and dt

:param cocoGt: coco object with ground truth annotations

:param cocoDt: coco object with detection results

:return: None

'''

if not iouType:

print('iouType not specified. use default iouType segm')

self.cocoGt = cocoGt # ground truth COCO API

self.cocoDt = cocoDt # detections COCO API

self.evalImgs = defaultdict(list) # per-image per-category evaluation results [KxAxI] elements

self.eval = {} # accumulated evaluation results

self._gts = defaultdict(list) # gt for evaluation

self._dts = defaultdict(list) # dt for evaluation

self.params = Params(iouType=iouType) # parameters

self._paramsEval = {} # parameters for evaluation

self.stats = [] # result summarization

self.ious = {} # ious between all gts and dts

if not cocoGt is None:

self.params.imgIds = sorted(cocoGt.getImgIds())

self.params.catIds = sorted(cocoGt.getCatIds())

def _prepare(self):

'''

Prepare ._gts and ._dts for evaluation based on params

:return: None

'''

def _toMask(anns, coco):

# modify ann['segmentation'] by reference

for ann in anns:

rle = coco.annToRLE(ann)

ann['segmentation'] = rle

p = self.params

if p.useCats:

gts=self.cocoGt.loadAnns(self.cocoGt.getAnnIds(imgIds=p.imgIds, catIds=p.catIds))

dts=self.cocoDt.loadAnns(self.cocoDt.getAnnIds(imgIds=p.imgIds, catIds=p.catIds))

else:

gts=self.cocoGt.loadAnns(self.cocoGt.getAnnIds(imgIds=p.imgIds))

dts=self.cocoDt.loadAnns(self.cocoDt.getAnnIds(imgIds=p.imgIds))

# convert ground truth to mask if iouType == 'segm'

if p.iouType == 'segm':

_toMask(gts, self.cocoGt)

_toMask(dts, self.cocoDt)

# set ignore flag

for gt in gts:

gt['ignore'] = gt['ignore'] if 'ignore' in gt else 0

gt['ignore'] = 'iscrowd' in gt and gt['iscrowd']

if p.iouType == 'keypoints':

gt['ignore'] = (gt['num_keypoints'] == 0) or gt['ignore']

self._gts = defaultdict(list) # gt for evaluation

self._dts = defaultdict(list) # dt for evaluation

for gt in gts:

self._gts[gt['image_id'], gt['category_id']].append(gt)

for dt in dts:

self._dts[dt['image_id'], dt['category_id']].append(dt)

self.evalImgs = defaultdict(list) # per-image per-category evaluation results

self.eval = {} # accumulated evaluation results

def evaluate(self):

'''

Run per image evaluation on given images and store results (a list of dict) in self.evalImgs

:return: None

'''

tic = time.time()

print('Running per image evaluation...')

p = self.params

# add backward compatibility if useSegm is specified in params

if not p.useSegm is None:

p.iouType = 'segm' if p.useSegm == 1 else 'bbox'

print('useSegm (deprecated) is not None. Running {} evaluation'.format(p.iouType))

print('Evaluate annotation type *{}*'.format(p.iouType))

p.imgIds = list(np.unique(p.imgIds))

if p.useCats:

p.catIds = list(np.unique(p.catIds))

p.maxDets = sorted(p.maxDets)

self.params=p

self._prepare()

# loop through images, area range, max detection number

catIds = p.catIds if p.useCats else [-1]

if p.iouType == 'segm' or p.iouType == 'bbox':

computeIoU = self.computeIoU

elif p.iouType == 'keypoints':

computeIoU = self.computeOks

self.ious = {(imgId, catId): computeIoU(imgId, catId) \

for imgId in p.imgIds

for catId in catIds}

evaluateImg = self.evaluateImg

maxDet = p.maxDets[-1]

self.evalImgs = [evaluateImg(imgId, catId, areaRng, maxDet)

for catId in catIds

for areaRng in p.areaRng

for imgId in p.imgIds

]

self._paramsEval = copy.deepcopy(self.params)

toc = time.time()

print('DONE (t={:0.2f}s).'.format(toc-tic))

def computeIoU(self, imgId, catId):

p = self.params

if p.useCats:

gt = self._gts[imgId,catId]

dt = self._dts[imgId,catId]

else:

gt = [_ for cId in p.catIds for _ in self._gts[imgId,cId]]

dt = [_ for cId in p.catIds for _ in self._dts[imgId,cId]]

if len(gt) == 0 and len(dt) ==0:

return []

inds = np.argsort([-d['score'] for d in dt], kind='mergesort')

dt = [dt[i] for i in inds]

if len(dt) > p.maxDets[-1]:

dt=dt[0:p.maxDets[-1]]

if p.iouType == 'segm':

g = [g['segmentation'] for g in gt]

d = [d['segmentation'] for d in dt]

elif p.iouType == 'bbox':

g = [g['bbox'] for g in gt]

d = [d['bbox'] for d in dt]

else:

raise Exception('unknown iouType for iou computation')

# compute iou between each dt and gt region

iscrowd = [int(o['iscrowd']) for o in gt]

ious = maskUtils.iou(d,g,iscrowd)

return ious

def computeOks(self, imgId, catId):

p = self.params

# dimention here should be Nxm

gts = self._gts[imgId, catId]

dts = self._dts[imgId, catId]

inds = np.argsort([-d['score'] for d in dts], kind='mergesort')

dts = [dts[i] for i in inds]

if len(dts) > p.maxDets[-1]:

dts = dts[0:p.maxDets[-1]]

# if len(gts) == 0 and len(dts) == 0:

if len(gts) == 0 or len(dts) == 0:

return []

ious = np.zeros((len(dts), len(gts)))

sigmas = p.kpt_oks_sigmas

vars = (sigmas * 2)**2

k = len(sigmas)

# compute oks between each detection and ground truth object

for j, gt in enumerate(gts):

# create bounds for ignore regions(double the gt bbox)

g = np.array(gt['keypoints'])

xg = g[0::3]; yg = g[1::3]; vg = g[2::3]

k1 = np.count_nonzero(vg > 0)

bb = gt['bbox']

x0 = bb[0] - bb[2]; x1 = bb[0] + bb[2] * 2

y0 = bb[1] - bb[3]; y1 = bb[1] + bb[3] * 2

for i, dt in enumerate(dts):

d = np.array(dt['keypoints'])

xd = d[0::3]; yd = d[1::3]

if k1>0:

# measure the per-keypoint distance if keypoints visible

dx = xd - xg

dy = yd - yg

else:

# measure minimum distance to keypoints in (x0,y0) & (x1,y1)

z = np.zeros((k))

dx = np.max((z, x0-xd),axis=0)+np.max((z, xd-x1),axis=0)

dy = np.max((z, y0-yd),axis=0)+np.max((z, yd-y1),axis=0)

e = (dx**2 + dy**2) / vars / (gt['area']+np.spacing(1)) / 2

if k1 > 0:

e=e[vg > 0]

ious[i, j] = np.sum(np.exp(-e)) / e.shape[0]

return ious

def evaluateImg(self, imgId, catId, aRng, maxDet):

'''

perform evaluation for single category and image

:return: dict (single image results)

'''

p = self.params

if p.useCats:

gt = self._gts[imgId,catId]

dt = self._dts[imgId,catId]

else:

gt = [_ for cId in p.catIds for _ in self._gts[imgId,cId]]

dt = [_ for cId in p.catIds for _ in self._dts[imgId,cId]]

if len(gt) == 0 and len(dt) ==0:

return None

for g in gt:

if g['ignore'] or (g['area']<aRng[0] or g['area']>aRng[1]):

g['_ignore'] = 1

else:

g['_ignore'] = 0

# sort dt highest score first, sort gt ignore last

gtind = np.argsort([g['_ignore'] for g in gt], kind='mergesort')

gt = [gt[i] for i in gtind]

dtind = np.argsort([-d['score'] for d in dt], kind='mergesort')

dt = [dt[i] for i in dtind[0:maxDet]]

iscrowd = [int(o['iscrowd']) for o in gt]

# load computed ious

ious = self.ious[imgId, catId][:, gtind] if len(self.ious[imgId, catId]) > 0 else self.ious[imgId, catId]

T = len(p.iouThrs)

G = len(gt)

D = len(dt)

gtm = np.zeros((T,G))

dtm = np.zeros((T,D))

gtIg = np.array([g['_ignore'] for g in gt])

dtIg = np.zeros((T,D))

if not len(ious)==0:

for tind, t in enumerate(p.iouThrs):

for dind, d in enumerate(dt):

# information about best match so far (m=-1 -> unmatched)

iou = min([t,1-1e-10])

m = -1

for gind, g in enumerate(gt):

# if this gt already matched, and not a crowd, continue

if gtm[tind,gind]>0 and not iscrowd[gind]:

continue

# if dt matched to reg gt, and on ignore gt, stop

if m>-1 and gtIg[m]==0 and gtIg[gind]==1:

break

# continue to next gt unless better match made

if ious[dind,gind] < iou:

continue

# if match successful and best so far, store appropriately

iou=ious[dind,gind]

m=gind

# if match made store id of match for both dt and gt

if m ==-1:

continue

dtIg[tind,dind] = gtIg[m]

dtm[tind,dind] = gt[m]['id']

gtm[tind,m] = d['id']

# set unmatched detections outside of area range to ignore

a = np.array([d['area']<aRng[0] or d['area']>aRng[1] for d in dt]).reshape((1, len(dt)))

dtIg = np.logical_or(dtIg, np.logical_and(dtm==0, np.repeat(a,T,0)))

# store results for given image and category

return {

'image_id': imgId,

'category_id': catId,

'aRng': aRng,

'maxDet': maxDet,

'dtIds': [d['id'] for d in dt],

'gtIds': [g['id'] for g in gt],

'dtMatches': dtm,

'gtMatches': gtm,

'dtScores': [d['score'] for d in dt],

'gtIgnore': gtIg,

'dtIgnore': dtIg,

}

def accumulate(self, p = None):

'''

Accumulate per image evaluation results and store the result in self.eval

:param p: input params for evaluation

:return: None

'''

print('Accumulating evaluation results...')

tic = time.time()

if not self.evalImgs:

print('Please run evaluate() first')

# allows input customized parameters

if p is None:

p = self.params

p.catIds = p.catIds if p.useCats == 1 else [-1]

T = len(p.iouThrs) ##设置的iou阈值的个数

R = len(p.recThrs) ##设置的召回的recThrs阈值的个数

K = len(p.catIds) if p.useCats else 1

A = len(p.areaRng)

M = len(p.maxDets)

precision = -np.ones(

(T, R, K, A, M)) # -1 for the precision of absent categories ##这个是存储不同的rec值下的p值,相当于存储了pr曲线的采样点

recall = -np.ones((T, K, A, M))

precision_s = -np.ones((T, K, A, M)) ##真实的精确率值

scores = -np.ones((T, R, K, A, M))

# create dictionary for future indexing

_pe = self._paramsEval

catIds = _pe.catIds if _pe.useCats else [-1]

setK = set(catIds)

setA = set(map(tuple, _pe.areaRng))

setM = set(_pe.maxDets)

setI = set(_pe.imgIds)

# get inds to evaluate

k_list = [n for n, k in enumerate(p.catIds) if k in setK]

m_list = [m for n, m in enumerate(p.maxDets) if m in setM]

a_list = [n for n, a in enumerate(map(lambda x: tuple(x), p.areaRng)) if a in setA]

i_list = [n for n, i in enumerate(p.imgIds) if i in setI]

I0 = len(_pe.imgIds)

A0 = len(_pe.areaRng)

##根据self.evalImgs的存储形式,遍历时最里层是img_id、次外层是aRng、最外层是类别id

'''

self.evalImgs = [evaluateImg(imgId, catId, areaRng, maxDet)

for catId in catIds

for areaRng in p.areaRng

for imgId in p.imgIds

]

'''

# retrieve E at each category, area range, and max number of detections

for k, k0 in enumerate(k_list): ##类别的索引下标遍历

Nk = k0 * A0 * I0

for a, a0 in enumerate(a_list): ##aRng的遍历

Na = a0 * I0

for m, maxDet in enumerate(m_list):

E = [self.evalImgs[Nk + Na + i] for i in i_list]

E = [e for e in E if not e is None]

if len(E) == 0:

continue

##特定类别、特定aRng的所有图片中每一张图片的maxDet个预测框

dtScores = np.concatenate([e['dtScores'][0:maxDet] for e in E])

# different sorting method generates slightly different results.

# mergesort is used to be consistent as Matlab implementation.

inds = np.argsort(-dtScores, kind='mergesort')

##是将特定类别,特定aRng的所有图片的预测框

# (每张图片特定类别、aRng取置信度从大到小的maxDet个框)

# 的置信度拉成一位数组,然后再次从大到小排列;

dtScoresSorted = dtScores[inds]

##dtm、dtIg维度是(T,maxDet个数*图片个数)

dtm = np.concatenate([e['dtMatches'][:, 0:maxDet] for e in E], axis=1)[:, inds]

dtIg = np.concatenate([e['dtIgnore'][:, 0:maxDet] for e in E], axis=1)[:, inds]

gtIg = np.concatenate([e['gtIgnore'] for e in E]) ##gtIg维度是(图片个数,G)

npig = np.count_nonzero(gtIg == 0) ##gt不ignore的个数

if npig == 0:

continue

##dtm、dtIg维度是(T,maxDet个数*图片个数)

tps = np.logical_and(dtm, np.logical_not(dtIg))

fps = np.logical_and(np.logical_not(dtm), np.logical_not(dtIg))

tp_sum = np.cumsum(tps, axis=1).astype(dtype=float)

fp_sum = np.cumsum(fps, axis=1).astype(dtype=float)

for t, (tp, fp) in enumerate(zip(tp_sum, fp_sum)):

tp = np.array(tp)

fp = np.array(fp)

nd = len(tp)

rc = tp / npig

pr = tp / (fp + tp + np.spacing(1))

q = np.zeros((R,)) ##特定召回率下的precision值(pr曲线)

ss = np.zeros((R,)) ##特定召回率下的对应的bbox的置信度

if nd:

recall[t, k, a, m] = rc[-1]

precision_s[t, k, a, m] = pr[-1]

else:

recall[t, k, a, m] = 0

precision_s[t, k, a, m] = 0

# numpy is slow without cython optimization for accessing elements

# use python array gets significant speed improvement

pr = pr.tolist()

q = q.tolist()

for i in range(nd - 1, 0, -1):

if pr[i] > pr[i - 1]:

pr[i - 1] = pr[i]

##这里调用的np.searchsorted表示p.recThrs中每一个值能插入到rc中的位置索引,其中rc必须是升序

inds = np.searchsorted(rc, p.recThrs, side='left')

try:

for ri, pi in enumerate(inds):

q[ri] = pr[pi]

ss[ri] = dtScoresSorted[pi]

except:

pass

precision[t, :, k, a, m] = np.array(q)

scores[t, :, k, a, m] = np.array(ss)

self.eval = {

'params': p,

'counts': [T, R, K, A, M],

'date': datetime.datetime.now().strftime('%Y-%m-%d %H:%M:%S'),

'precision': precision, ##(T,R,K,A,M)

'recall': recall, ##(T,K,A,M)

'precision_s': precision_s,

'scores': scores, ##(T,R,K,A,M)

}

toc = time.time()

print('DONE (t={:0.2f}s).'.format(toc - tic))

def summarize(self):

'''

Compute and display summary metrics for evaluation results.

Note this functin can *only* be applied on the default parameter setting

'''

def _summarize(ap=1, iouThr=None, areaRng='all', maxDets=100, catId=self.params.catIds):

'''

precision = -np.ones((T,R,K,A,M)) # -1 for the precision of absent categories ##这个是存储不同的rec值下的p值,相当于存储了pr曲线的采样点

recall = -np.ones((T,K,A,M))

precision_s = -np.ones((T,K,A,M)) ##真实的精确率值

'''

p = self.params

iStr = ' {:<18} {} @[ IoU={:<9} | area={:>6s} | maxDets={:>3d} ] = {:0.3f}'

# titleStr = 'Average Precision' if ap == 1 else 'Average Recall'

# typeStr = '(AP)' if ap==1 else '(AR)'

# titleStr = 'Average Precision' if ap == 1 else 'Average Recall'

# typeStr = '(AP)' if ap==1 else '(AR)'

iouStr = '{:0.2f}:{:0.2f}'.format(p.iouThrs[0], p.iouThrs[-1]) \

if iouThr is None else '{:0.2f}'.format(iouThr)

aind = [i for i, aRng in enumerate(p.areaRngLbl) if aRng == areaRng]

mind = [i for i, mDet in enumerate(p.maxDets) if mDet == maxDets]

cind = [i for i, cat in enumerate(p.catIds) if cat in catId]

if ap == 1:

titleStr = 'Average P-R curve Area'

typeStr = '(mAP)'

# dimension of precision: [TxRxKxAxM]

s = self.eval['precision']

# IoU

if iouThr is not None:

t = np.where(iouThr == p.iouThrs)[0]

s = s[t]

# s = s[:,:,:,aind,mind]

s = s[:, :, cind, aind, mind]

elif ap == 0:

titleStr = 'Average Recall'

typeStr = '(AR)'

# dimension of recall: [TxKxAxM]

s = self.eval['recall']

if iouThr is not None:

t = np.where(iouThr == p.iouThrs)[0]

s = s[t]

# s = s[:,:,aind,mind]

s = s[:, cind, aind, mind]

else:

titleStr = 'Average Precision'

typeStr = '(AP)'

# dimension of precision: [TxKxAxM]

s = self.eval['precision_s']

# IoU

if iouThr is not None:

t = np.where(iouThr == p.iouThrs)[0]

s = s[t]

# s = s[:,:,aind,mind]

s = s[:, cind, aind, mind]

if len(s[s > -1]) == 0:

mean_s = -1

else:

mean_s = np.mean(s[s > -1])

# print(iStr.format(titleStr, typeStr, iouStr, areaRng, maxDets, mean_s))

if s.shape[-1] > 1:

iStr += ", per category = {}"

mean_axis = (0,)

if ap == 1:

mean_axis = (0, 1)

# elif ap == 2:

# mean_axis=(0,1)

per_category_mean_s = np.mean(s, axis=mean_axis).flatten()

with np.printoptions(precision=3, suppress=True, sign=" ", floatmode="fixed"):

print(iStr.format(titleStr, typeStr, iouStr, areaRng, maxDets, mean_s, per_category_mean_s))

else:

print(iStr.format(titleStr, typeStr, iouStr, areaRng, maxDets, mean_s, ""))

return mean_s

def _summarizeDets():

stats = np.zeros((18,))

iou=.5

stats[0] = _summarize(1)

stats[1] = _summarize(1, iouThr=.5, maxDets=self.params.maxDets[2])

stats[2] = _summarize(1, iouThr=.75, maxDets=self.params.maxDets[2])

stats[3] = _summarize(1, areaRng='small', maxDets=self.params.maxDets[2])

stats[4] = _summarize(1, areaRng='medium', maxDets=self.params.maxDets[2])

stats[5] = _summarize(1, areaRng='large', maxDets=self.params.maxDets[2])

stats[6] = _summarize(0, iouThr=iou,maxDets=self.params.maxDets[0])

stats[7] = _summarize(0, iouThr=iou,maxDets=self.params.maxDets[1])

stats[8] = _summarize(0, iouThr=iou,maxDets=self.params.maxDets[2])

stats[9] = _summarize(0, areaRng='small', maxDets=self.params.maxDets[2])

stats[10] = _summarize(0, areaRng='medium', maxDets=self.params.maxDets[2])

stats[11] = _summarize(0, areaRng='large', maxDets=self.params.maxDets[2])

stats[12] = _summarize(2, iouThr=iou,maxDets=self.params.maxDets[0])

stats[13] = _summarize(2, iouThr=iou,maxDets=self.params.maxDets[1])

stats[14] = _summarize(2, iouThr=iou,maxDets=self.params.maxDets[2])

stats[15] = _summarize(2, areaRng='small', maxDets=self.params.maxDets[2])

stats[16] = _summarize(2, areaRng='medium', maxDets=self.params.maxDets[2])

stats[17] = _summarize(2, areaRng='large', maxDets=self.params.maxDets[2])

return stats

def _summarizeKps():

stats = np.zeros((10,))

stats[0] = _summarize(1, maxDets=20)

stats[1] = _summarize(1, maxDets=20, iouThr=.5)

stats[2] = _summarize(1, maxDets=20, iouThr=.75)

stats[3] = _summarize(1, maxDets=20, areaRng='medium')

stats[4] = _summarize(1, maxDets=20, areaRng='large')

stats[5] = _summarize(0, maxDets=20)

stats[6] = _summarize(0, maxDets=20, iouThr=.5)

stats[7] = _summarize(0, maxDets=20, iouThr=.75)

stats[8] = _summarize(0, maxDets=20, areaRng='medium')

stats[9] = _summarize(0, maxDets=20, areaRng='large')

return stats

if not self.eval:

raise Exception('Please run accumulate() first')

iouType = self.params.iouType

if iouType == 'segm' or iouType == 'bbox':

summarize = _summarizeDets

elif iouType == 'keypoints':

summarize = _summarizeKps

self.stats = summarize()

def __str__(self):

self.summarize()

class Params:

'''

Params for coco evaluation api

'''

def setDetParams(self):

self.imgIds = []

self.catIds = []

# np.arange causes trouble. the data point on arange is slightly larger than the true value

self.iouThrs = np.linspace(.5, 0.95, int(np.round((0.95 - .5) / .05)) + 1, endpoint=True)

self.recThrs = np.linspace(.0, 1.00, int(np.round((1.00 - .0) / .01)) + 1, endpoint=True)

self.maxDets = [1, 10, 100]

self.areaRng = [[0 ** 2, 1e5 ** 2], [0 ** 2, 32 ** 2], [32 ** 2, 96 ** 2], [96 ** 2, 1e5 ** 2]]

self.areaRngLbl = ['all', 'small', 'medium', 'large']

self.useCats = 1

def setKpParams(self):

self.imgIds = []

self.catIds = []

# np.arange causes trouble. the data point on arange is slightly larger than the true value

self.iouThrs = np.linspace(.5, 0.95, int(np.round((0.95 - .5) / .05)) + 1, endpoint=True)

self.recThrs = np.linspace(.0, 1.00, int(np.round((1.00 - .0) / .01)) + 1, endpoint=True)

self.maxDets = [20]

self.areaRng = [[0 ** 2, 1e5 ** 2], [32 ** 2, 96 ** 2], [96 ** 2, 1e5 ** 2]]

self.areaRngLbl = ['all', 'medium', 'large']

self.useCats = 1

self.kpt_oks_sigmas = np.array([.26, .25, .25, .35, .35, .79, .79, .72, .72, .62,.62, 1.07, 1.07, .87, .87, .89, .89])/10.0

def __init__(self, iouType='segm'):

if iouType == 'segm' or iouType == 'bbox':

self.setDetParams()

elif iouType == 'keypoints':

self.setKpParams()

else:

raise Exception('iouType not supported')

self.iouType = iouType

# useSegm is deprecated

self.useSegm = None

3.修改mmdet/datasets/api_wrappers路径下的coco_api.py,主要是修改导包处

from pycocotools.cocoeval import COCOeval as _COCOeval改为from .mycocoval import COCOeval as _COCOeval

修改后代码如下

coco_api.py

# Copyright (c) OpenMMLab. All rights reserved.

# This file add snake case alias for coco api

import warnings

from collections import defaultdict

from typing import List, Optional, Union

import pycocotools

from pycocotools.coco import COCO as _COCO

# from pycocotools.cocoeval import COCOeval as _COCOeval

from .mycocoval import COCOeval as _COCOeval

class COCO(_COCO):

"""This class is almost the same as official pycocotools package.

It implements some snake case function aliases. So that the COCO class has

the same interface as LVIS class.

"""

def __init__(self, annotation_file=None):

if getattr(pycocotools, '__version__', '0') >= '12.0.2':

warnings.warn(

'mmpycocotools is deprecated. Please install official pycocotools by "pip install pycocotools"', # noqa: E501

UserWarning)

super().__init__(annotation_file=annotation_file)

self.img_ann_map = self.imgToAnns

self.cat_img_map = self.catToImgs

def get_ann_ids(self, img_ids=[], cat_ids=[], area_rng=[], iscrowd=None):

return self.getAnnIds(img_ids, cat_ids, area_rng, iscrowd)

def get_cat_ids(self, cat_names=[], sup_names=[], cat_ids=[]):

return self.getCatIds(cat_names, sup_names, cat_ids)

def get_img_ids(self, img_ids=[], cat_ids=[]):

return self.getImgIds(img_ids, cat_ids)

def load_anns(self, ids):

return self.loadAnns(ids)

def load_cats(self, ids):

return self.loadCats(ids)

def load_imgs(self, ids):

return self.loadImgs(ids)

# just for the ease of import

COCOeval = _COCOeval

class COCOPanoptic(COCO):

"""This wrapper is for loading the panoptic style annotation file.

The format is shown in the CocoPanopticDataset class.

Args:

annotation_file (str, optional): Path of annotation file.

Defaults to None.

"""

def __init__(self, annotation_file: Optional[str] = None) -> None:

super(COCOPanoptic, self).__init__(annotation_file)

def createIndex(self) -> None:

"""Create index."""

# create index

print('creating index...')

# anns stores 'segment_id -> annotation'

anns, cats, imgs = {}, {}, {}

img_to_anns, cat_to_imgs = defaultdict(list), defaultdict(list)

if 'annotations' in self.dataset:

for ann in self.dataset['annotations']:

for seg_ann in ann['segments_info']:

# to match with instance.json

seg_ann['image_id'] = ann['image_id']

img_to_anns[ann['image_id']].append(seg_ann)

# segment_id is not unique in coco dataset orz...

# annotations from different images but

# may have same segment_id

if seg_ann['id'] in anns.keys():

anns[seg_ann['id']].append(seg_ann)

else:

anns[seg_ann['id']] = [seg_ann]

# filter out annotations from other images

img_to_anns_ = defaultdict(list)

for k, v in img_to_anns.items():

img_to_anns_[k] = [x for x in v if x['image_id'] == k]

img_to_anns = img_to_anns_

if 'images' in self.dataset:

for img_info in self.dataset['images']:

img_info['segm_file'] = img_info['file_name'].replace(

'.jpg', '.png')

imgs[img_info['id']] = img_info

if 'categories' in self.dataset:

for cat in self.dataset['categories']:

cats[cat['id']] = cat

if 'annotations' in self.dataset and 'categories' in self.dataset:

for ann in self.dataset['annotations']:

for seg_ann in ann['segments_info']:

cat_to_imgs[seg_ann['category_id']].append(ann['image_id'])

print('index created!')

self.anns = anns

self.imgToAnns = img_to_anns

self.catToImgs = cat_to_imgs

self.imgs = imgs

self.cats = cats

def load_anns(self,

ids: Union[List[int], int] = []) -> Optional[List[dict]]:

"""Load anns with the specified ids.

``self.anns`` is a list of annotation lists instead of a

list of annotations.

Args:

ids (Union[List[int], int]): Integer ids specifying anns.

Returns:

anns (List[dict], optional): Loaded ann objects.

"""

anns = []

if hasattr(ids, '__iter__') and hasattr(ids, '__len__'):

# self.anns is a list of annotation lists instead of

# a list of annotations

for id in ids:

anns += self.anns[id]

return anns

elif type(ids) == int:

return self.anns[ids]

4.修改mmdet/evaluation/metrics/coco_metric.py文件

coco_metric.py

# Copyright (c) OpenMMLab. All rights reserved.

import datetime

import itertools

import os.path as osp

import tempfile

from collections import OrderedDict

from typing import Dict, List, Optional, Sequence, Union

import numpy as np

import torch

from mmengine.evaluator import BaseMetric

from mmengine.fileio import dump, get_local_path, load

from mmengine.logging import MMLogger

from terminaltables import AsciiTable

from mmdet.datasets.api_wrappers import COCO, COCOeval, COCOevalMP

from mmdet.registry import METRICS

from mmdet.structures.mask import encode_mask_results

from ..functional import eval_recalls

@METRICS.register_module()

class CocoMetric(BaseMetric):

"""COCO evaluation metric.

Evaluate AR, AP, and mAP for detection tasks including proposal/box

detection and instance segmentation. Please refer to

https://cocodataset.org/#detection-eval for more details.

Args:

ann_file (str, optional): Path to the coco format annotation file.

If not specified, ground truth annotations from the dataset will

be converted to coco format. Defaults to None.

metric (str | List[str]): Metrics to be evaluated. Valid metrics

include 'bbox', 'segm', 'proposal', and 'proposal_fast'.

Defaults to 'bbox'.

classwise (bool): Whether to evaluate the metric class-wise.

Defaults to False.

proposal_nums (Sequence[int]): Numbers of proposals to be evaluated.

Defaults to (100, 300, 1000).

iou_thrs (float | List[float], optional): IoU threshold to compute AP

and AR. If not specified, IoUs from 0.5 to 0.95 will be used.

Defaults to None.

metric_items (List[str], optional): Metric result names to be

recorded in the evaluation result. Defaults to None.

format_only (bool): Format the output results without perform

evaluation. It is useful when you want to format the result

to a specific format and submit it to the test server.

Defaults to False.

outfile_prefix (str, optional): The prefix of json files. It includes

the file path and the prefix of filename, e.g., "a/b/prefix".

If not specified, a temp file will be created. Defaults to None.

file_client_args (dict, optional): Arguments to instantiate the

corresponding backend in mmdet <= 3.0.0rc6. Defaults to None.

backend_args (dict, optional): Arguments to instantiate the

corresponding backend. Defaults to None.

collect_device (str): Device name used for collecting results from

different ranks during distributed training. Must be 'cpu' or

'gpu'. Defaults to 'cpu'.

prefix (str, optional): The prefix that will be added in the metric

names to disambiguate homonymous metrics of different evaluators.

If prefix is not provided in the argument, self.default_prefix

will be used instead. Defaults to None.

sort_categories (bool): Whether sort categories in annotations. Only

used for `Objects365V1Dataset`. Defaults to False.

use_mp_eval (bool): Whether to use mul-processing evaluation

"""

default_prefix: Optional[str] = 'coco'

def __init__(self,

ann_file: Optional[str] = None,

metric: Union[str, List[str]] = 'bbox',

classwise: bool = True,

proposal_nums: Sequence[int] = (100, 300, 1000),

iou_thrs: Optional[Union[float, Sequence[float]]] = None,

metric_items: Optional[Sequence[str]] = None,

format_only: bool = False,

outfile_prefix: Optional[str] = None,

file_client_args: dict = None,

backend_args: dict = None,

collect_device: str = 'cpu',

prefix: Optional[str] = None,

sort_categories: bool = False,

use_mp_eval: bool = False) -> None:

super().__init__(collect_device=collect_device, prefix=prefix)

# coco evaluation metrics

self.metrics = metric if isinstance(metric, list) else [metric]

allowed_metrics = ['bbox', 'segm', 'proposal', 'proposal_fast']

for metric in self.metrics:

if metric not in allowed_metrics:

raise KeyError(

"metric should be one of 'bbox', 'segm', 'proposal', "

f"'proposal_fast', but got {metric}.")

# do class wise evaluation, default False

self.classwise = classwise

# whether to use multi processing evaluation, default False

self.use_mp_eval = use_mp_eval

# proposal_nums used to compute recall or precision.

self.proposal_nums = list(proposal_nums)

# iou_thrs used to compute recall or precision.

if iou_thrs is None:

iou_thrs = np.linspace(

.5, 0.95, int(np.round((0.95 - .5) / .05)) + 1, endpoint=True)

self.iou_thrs = iou_thrs

self.metric_items = metric_items

self.format_only = format_only

if self.format_only:

assert outfile_prefix is not None, 'outfile_prefix must be not'

'None when format_only is True, otherwise the result files will'

'be saved to a temp directory which will be cleaned up at the end.'

self.outfile_prefix = outfile_prefix

self.backend_args = backend_args

if file_client_args is not None:

raise RuntimeError(

'The `file_client_args` is deprecated, '

'please use `backend_args` instead, please refer to'

'https://github.com/open-mmlab/mmdetection/blob/main/configs/_base_/datasets/coco_detection.py' # noqa: E501

)

# if ann_file is not specified,

# initialize coco api with the converted dataset

if ann_file is not None:

with get_local_path(

ann_file, backend_args=self.backend_args) as local_path:

self._coco_api = COCO(local_path)

if sort_categories:

# 'categories' list in objects365_train.json and

# objects365_val.json is inconsistent, need sort

# list(or dict) before get cat_ids.

cats = self._coco_api.cats

sorted_cats = {i: cats[i] for i in sorted(cats)}

self._coco_api.cats = sorted_cats

categories = self._coco_api.dataset['categories']

sorted_categories = sorted(

categories, key=lambda i: i['id'])

self._coco_api.dataset['categories'] = sorted_categories

else:

self._coco_api = None

# handle dataset lazy init

self.cat_ids = None

self.img_ids = None

def fast_eval_recall(self,

results: List[dict],

proposal_nums: Sequence[int],

iou_thrs: Sequence[float],

logger: Optional[MMLogger] = None) -> np.ndarray:

"""Evaluate proposal recall with COCO's fast_eval_recall.

Args:

results (List[dict]): Results of the dataset.

proposal_nums (Sequence[int]): Proposal numbers used for

evaluation.

iou_thrs (Sequence[float]): IoU thresholds used for evaluation.

logger (MMLogger, optional): Logger used for logging the recall

summary.

Returns:

np.ndarray: Averaged recall results.

"""

gt_bboxes = []

pred_bboxes = [result['bboxes'] for result in results]

for i in range(len(self.img_ids)):

ann_ids = self._coco_api.get_ann_ids(img_ids=self.img_ids[i])

ann_info = self._coco_api.load_anns(ann_ids)

if len(ann_info) == 0:

gt_bboxes.append(np.zeros((0, 4)))

continue

bboxes = []

for ann in ann_info:

if ann.get('ignore', False) or ann['iscrowd']:

continue

x1, y1, w, h = ann['bbox']

bboxes.append([x1, y1, x1 + w, y1 + h])

bboxes = np.array(bboxes, dtype=np.float32)

if bboxes.shape[0] == 0:

bboxes = np.zeros((0, 4))

gt_bboxes.append(bboxes)

recalls = eval_recalls(

gt_bboxes, pred_bboxes, proposal_nums, iou_thrs, logger=logger)

ar = recalls.mean(axis=1)

return ar

def xyxy2xywh(self, bbox: np.ndarray) -> list:

"""Convert ``xyxy`` style bounding boxes to ``xywh`` style for COCO

evaluation.

Args:

bbox (numpy.ndarray): The bounding boxes, shape (4, ), in

``xyxy`` order.

Returns:

list[float]: The converted bounding boxes, in ``xywh`` order.

"""

_bbox: List = bbox.tolist()

return [

_bbox[0],

_bbox[1],

_bbox[2] - _bbox[0],

_bbox[3] - _bbox[1],

]

def results2json(self, results: Sequence[dict],

outfile_prefix: str) -> dict:

"""Dump the detection results to a COCO style json file.

There are 3 types of results: proposals, bbox predictions, mask

predictions, and they have different data types. This method will

automatically recognize the type, and dump them to json files.

Args:

results (Sequence[dict]): Testing results of the

dataset.

outfile_prefix (str): The filename prefix of the json files. If the

prefix is "somepath/xxx", the json files will be named

"somepath/xxx.bbox.json", "somepath/xxx.segm.json",

"somepath/xxx.proposal.json".

Returns:

dict: Possible keys are "bbox", "segm", "proposal", and

values are corresponding filenames.

"""

bbox_json_results = []

segm_json_results = [] if 'masks' in results[0] else None

for idx, result in enumerate(results):

image_id = result.get('img_id', idx)

labels = result['labels']

bboxes = result['bboxes']

scores = result['scores']

# bbox results

for i, label in enumerate(labels):

data = dict()

data['image_id'] = image_id

data['bbox'] = self.xyxy2xywh(bboxes[i])

data['score'] = float(scores[i])

data['category_id'] = self.cat_ids[label]

bbox_json_results.append(data)

if segm_json_results is None:

continue

# segm results

masks = result['masks']

mask_scores = result.get('mask_scores', scores)

for i, label in enumerate(labels):

data = dict()

data['image_id'] = image_id

data['bbox'] = self.xyxy2xywh(bboxes[i])

data['score'] = float(mask_scores[i])

data['category_id'] = self.cat_ids[label]

if isinstance(masks[i]['counts'], bytes):

masks[i]['counts'] = masks[i]['counts'].decode()

data['segmentation'] = masks[i]

segm_json_results.append(data)

result_files = dict()

result_files['bbox'] = f'{outfile_prefix}.bbox.json'

result_files['proposal'] = f'{outfile_prefix}.bbox.json'

dump(bbox_json_results, result_files['bbox'])

if segm_json_results is not None:

result_files['segm'] = f'{outfile_prefix}.segm.json'

dump(segm_json_results, result_files['segm'])

return result_files

def gt_to_coco_json(self, gt_dicts: Sequence[dict],

outfile_prefix: str) -> str:

"""Convert ground truth to coco format json file.

Args:

gt_dicts (Sequence[dict]): Ground truth of the dataset.

outfile_prefix (str): The filename prefix of the json files. If the

prefix is "somepath/xxx", the json file will be named

"somepath/xxx.gt.json".

Returns:

str: The filename of the json file.

"""

categories = [

dict(id=id, name=name)

for id, name in enumerate(self.dataset_meta['classes'])

]

image_infos = []

annotations = []

for idx, gt_dict in enumerate(gt_dicts):

img_id = gt_dict.get('img_id', idx)

image_info = dict(

id=img_id,

width=gt_dict['width'],

height=gt_dict['height'],

file_name='')

image_infos.append(image_info)

for ann in gt_dict['anns']:

label = ann['bbox_label']

bbox = ann['bbox']

coco_bbox = [

bbox[0],

bbox[1],

bbox[2] - bbox[0],

bbox[3] - bbox[1],

]

annotation = dict(

id=len(annotations) +

1, # coco api requires id starts with 1

image_id=img_id,

bbox=coco_bbox,

iscrowd=ann.get('ignore_flag', 0),

category_id=int(label),

area=coco_bbox[2] * coco_bbox[3])

if ann.get('mask', None):

mask = ann['mask']

# area = mask_util.area(mask)

if isinstance(mask, dict) and isinstance(

mask['counts'], bytes):

mask['counts'] = mask['counts'].decode()

annotation['segmentation'] = mask

# annotation['area'] = float(area)

annotations.append(annotation)

info = dict(

date_created=str(datetime.datetime.now()),

description='Coco json file converted by mmdet CocoMetric.')

coco_json = dict(

info=info,

images=image_infos,

categories=categories,

licenses=None,

)

if len(annotations) > 0:

coco_json['annotations'] = annotations

converted_json_path = f'{outfile_prefix}.gt.json'

dump(coco_json, converted_json_path)

return converted_json_path

# TODO: data_batch is no longer needed, consider adjusting the

# parameter position

def process(self, data_batch: dict, data_samples: Sequence[dict]) -> None:

"""Process one batch of data samples and predictions. The processed

results should be stored in ``self.results``, which will be used to

compute the metrics when all batches have been processed.

Args:

data_batch (dict): A batch of data from the dataloader.

data_samples (Sequence[dict]): A batch of data samples that

contain annotations and predictions.

"""

for data_sample in data_samples:

result = dict()

pred = data_sample['pred_instances']

result['img_id'] = data_sample['img_id']

result['bboxes'] = pred['bboxes'].cpu().numpy()

result['scores'] = pred['scores'].cpu().numpy()

result['labels'] = pred['labels'].cpu().numpy()

# encode mask to RLE

if 'masks' in pred:

result['masks'] = encode_mask_results(

pred['masks'].detach().cpu().numpy()) if isinstance(

pred['masks'], torch.Tensor) else pred['masks']

# some detectors use different scores for bbox and mask

if 'mask_scores' in pred:

result['mask_scores'] = pred['mask_scores'].cpu().numpy()

# parse gt

gt = dict()

gt['width'] = data_sample['ori_shape'][1]

gt['height'] = data_sample['ori_shape'][0]

gt['img_id'] = data_sample['img_id']

if self._coco_api is None:

# TODO: Need to refactor to support LoadAnnotations

assert 'instances' in data_sample, \

'ground truth is required for evaluation when ' \

'`ann_file` is not provided'

gt['anns'] = data_sample['instances']

# add converted result to the results list

self.results.append((gt, result))

def compute_metrics(self, results: list) -> Dict[str, float]:

"""Compute the metrics from processed results.

Args:

results (list): The processed results of each batch.

Returns:

Dict[str, float]: The computed metrics. The keys are the names of

the metrics, and the values are corresponding results.

"""

logger: MMLogger = MMLogger.get_current_instance()

# split gt and prediction list

gts, preds = zip(*results)

tmp_dir = None

if self.outfile_prefix is None:

tmp_dir = tempfile.TemporaryDirectory()

outfile_prefix = osp.join(tmp_dir.name, 'results')

else:

outfile_prefix = self.outfile_prefix

if self._coco_api is None:

# use converted gt json file to initialize coco api

logger.info('Converting ground truth to coco format...')

coco_json_path = self.gt_to_coco_json(

gt_dicts=gts, outfile_prefix=outfile_prefix)

self._coco_api = COCO(coco_json_path)

# handle lazy init

if self.cat_ids is None:

self.cat_ids = self._coco_api.get_cat_ids(

cat_names=self.dataset_meta['classes'])

if self.img_ids is None:

self.img_ids = self._coco_api.get_img_ids()

# convert predictions to coco format and dump to json file

result_files = self.results2json(preds, outfile_prefix)

eval_results = OrderedDict()

if self.format_only:

logger.info('results are saved in '

f'{osp.dirname(outfile_prefix)}')

return eval_results

for metric in self.metrics:

logger.info(f'Evaluating {metric}...')

# TODO: May refactor fast_eval_recall to an independent metric?

# fast eval recall

if metric == 'proposal_fast':

ar = self.fast_eval_recall(

preds, self.proposal_nums, self.iou_thrs, logger=logger)

log_msg = []

for i, num in enumerate(self.proposal_nums):

eval_results[f'AR@{num}'] = ar[i]

log_msg.append(f'\nAR@{num}\t{ar[i]:.4f}')

log_msg = ''.join(log_msg)

logger.info(log_msg)

continue

# evaluate proposal, bbox and segm

iou_type = 'bbox' if metric == 'proposal' else metric

if metric not in result_files:

raise KeyError(f'{metric} is not in results')

try:

predictions = load(result_files[metric])

if iou_type == 'segm':

# Refer to https://github.com/cocodataset/cocoapi/blob/master/PythonAPI/pycocotools/coco.py#L331 # noqa

# When evaluating mask AP, if the results contain bbox,

# cocoapi will use the box area instead of the mask area

# for calculating the instance area. Though the overall AP

# is not affected, this leads to different

# small/medium/large mask AP results.

for x in predictions:

x.pop('bbox')

coco_dt = self._coco_api.loadRes(predictions)

except IndexError:

logger.error(

'The testing results of the whole dataset is empty.')

break

if self.use_mp_eval:

coco_eval = COCOevalMP(self._coco_api, coco_dt, iou_type)

else:

coco_eval = COCOeval(self._coco_api, coco_dt, iou_type)

coco_eval.params.catIds = self.cat_ids

coco_eval.params.imgIds = self.img_ids

coco_eval.params.maxDets = list(self.proposal_nums)

coco_eval.params.iouThrs = self.iou_thrs

# mapping of cocoEval.stats

coco_metric_names = {

'mAP_50_95': 0,

'mAP_50': 1,

'mAP_75': 2,

'mAP_s': 3,

'mAP_m': 4,

'mAP_l': 5,

'AR@100': 6,

'AR@300': 7,

'AR@1000': 8,

'AR_s@1000': 9,

'AR_m@1000': 10,

'AR_l@1000': 11

}

metric_items = self.metric_items

if metric_items is not None:

for metric_item in metric_items:

if metric_item not in coco_metric_names:

raise KeyError(

f'metric item "{metric_item}" is not supported')

if metric == 'proposal':

coco_eval.params.useCats = 0

coco_eval.evaluate()

coco_eval.accumulate()

coco_eval.summarize()

if metric_items is None:

metric_items = [

'AR@100', 'AR@300', 'AR@1000', 'AR_s@1000',

'AR_m@1000', 'AR_l@1000'

]

for item in metric_items:

val = float(

f'{coco_eval.stats[coco_metric_names[item]]:.3f}')

eval_results[item] = val

else:

coco_eval.evaluate()

coco_eval.accumulate()

coco_eval.summarize()

if self.classwise: # Compute per-category AP

# Compute per-category AP

# from https://github.com/facebookresearch/detectron2/

precisions = coco_eval.eval['precision']

precisions_s=coco_eval.eval['precision_s']

recalls=coco_eval.eval['recall']

# precision: (iou, recall, cls, area range, max dets)

assert len(self.cat_ids) == precisions.shape[2]

assert len(self.cat_ids) == precisions_s.shape[2]

assert len(self.cat_ids) == recalls.shape[2]

results_per_category = []

s = []

stats=coco_eval.stats

s.append(f'all')

s.append(f'{round(stats[14], 3)}')

s.append(f'{round(stats[8], 3)}')

s.append(f'{round(stats[1], 3)}')

s.append(f'{round(stats[2], 3)}')

s.append(f'{round(stats[0], 3)}')

s.append(f'{round(stats[3], 3)}')

s.append(f'{round(stats[4], 3)}')

s.append(f'{round(stats[5], 3)}')

results_per_category.append(tuple(s))

for idx, cat_id in enumerate(self.cat_ids):

# area range index 0: all area ranges

# max dets index -1: typically 100 per image

t=[]

nm = self._coco_api.loadCats(cat_id)[0]

precision = precisions[:, :, idx, 0, -1]

precision = precision[precision > -1]

if precision.size:

ap_o = np.mean(precision)

else:

ap_o = float('nan')

t.append(f'{nm["name"]}')

precision_s = precisions_s[0, idx, 0, -1]

recall = recalls[0, idx, 0, -1]

t.append(f'{round(precision_s, 3)}')

t.append(f'{round(recall, 3)}')

eval_results[f'{nm["name"]}_precision'] = round(ap_o, 3)

# indexes of IoU @50 and @75

for iou in [0, 5]:

precision = precisions[iou, :, idx, 0, -1]

precision = precision[precision > -1]

if precision.size:

ap = np.mean(precision)

else:

ap = float('nan')

t.append(f'{round(ap, 3)}')

t.append(f'{round(ap_o, 3)}')

# indexes of area of small, median and large

for area in [1, 2, 3]:

precision = precisions[:, :, idx, area, -1]

precision = precision[precision > -1]

if precision.size:

ap = np.mean(precision)

else:

ap = float('nan')

t.append(f'{round(ap, 3)}')

results_per_category.append(tuple(t))

num_columns = len(results_per_category[0])

results_flatten = list(

itertools.chain(*results_per_category))

headers = [

'category','Precision','Recall', 'mAP_50', 'mAP_75','mAP_50_95', 'mAP_s',

'mAP_m', 'mAP_l'

]

results_2d = itertools.zip_longest(*[

results_flatten[i::num_columns]

for i in range(num_columns)

])

table_data = [headers]

table_data += [result for result in results_2d]

table = AsciiTable(table_data)

logger.info('\n' + table.table)

if metric_items is None:

metric_items = [

'mAP_50_95', 'mAP_50', 'mAP_75', 'mAP_s', 'mAP_m', 'mAP_l'

]

for metric_item in metric_items:

key = f'{metric}_{metric_item}'

val = coco_eval.stats[coco_metric_names[metric_item]]

eval_results[key] = float(f'{round(val, 3)}')

ap = coco_eval.stats[:6]

logger.info(f'{metric}_mAP_copypaste: {ap[0]:.3f} '

f'{ap[1]:.3f} {ap[2]:.3f} {ap[3]:.3f} '

f'{ap[4]:.3f} {ap[5]:.3f}')

if tmp_dir is not None:

tmp_dir.cleanup()

return eval_results

5.mmdet/configs/_base_/datasets/coco_instance.py中开启测试打印format_only=False

test_evaluator = dict(

type=CocoMetric,

metric=['bbox', 'segm'],

format_only=False,

ann_file=data_root + 'annotations.json',

outfile_prefix='./work_dirs/coco_instance/test')

完整效果

运行tools/test.py时输出如下

03/01 16:01:41 - mmengine - INFO - Evaluating bbox...

Loading and preparing results...

DONE (t=0.00s)

creating index...

index created!

Running per image evaluation...

Evaluate annotation type *bbox*

DONE (t=0.01s).

Accumulating evaluation results...

DONE (t=0.01s).

Average P-R curve Area (mAP) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.413, per category = [ 0.600 0.320 0.466 0.266]

Average P-R curve Area (mAP) @[ IoU=0.50 | area= all | maxDets=100 ] = 0.575, per category = [ 0.814 0.477 0.711 0.297]

Average P-R curve Area (mAP) @[ IoU=0.75 | area= all | maxDets=100 ] = 0.419, per category = [ 0.713 0.277 0.451 0.234]

Average P-R curve Area (mAP) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] = -1.000, per category = [-1.000 -1.000 -1.000 -1.000]

Average P-R curve Area (mAP) @[ IoU=0.50:0.95 | area=medium | maxDets=100 ] = 0.353, per category = [ 0.605 0.325 0.482 0.000]

Average P-R curve Area (mAP) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] = 0.444, per category = [ 0.592 0.378 0.473 0.333]

Average Recall (AR) @[ IoU=0.50 | area= all | maxDets= 1 ] = 0.339, per category = [ 0.240 0.357 0.357 0.400]

Average Recall (AR) @[ IoU=0.50 | area= all | maxDets= 10 ] = 0.773, per category = [ 0.920 0.714 0.857 0.600]

Average Recall (AR) @[ IoU=0.50 | area= all | maxDets=100 ] = 0.773, per category = [ 0.920 0.714 0.857 0.600]

Average Recall (AR) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] = -1.000, per category = [-1.000 -1.000 -1.000 -1.000]

Average Recall (AR) @[ IoU=0.50:0.95 | area=medium | maxDets=100 ] = 0.426, per category = [ 0.647 0.483 0.575 0.000]

Average Recall (AR) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] = 0.636, per category = [ 0.720 0.550 0.650 0.625]

Average Precision (AP) @[ IoU=0.50 | area= all | maxDets= 1 ] = 0.673, per category = [ 0.857 0.714 0.833 0.286]

Average Precision (AP) @[ IoU=0.50 | area= all | maxDets= 10 ] = 0.329, per category = [ 0.489 0.286 0.400 0.143]

Average Precision (AP) @[ IoU=0.50 | area= all | maxDets=100 ] = 0.321, per category = [ 0.479 0.286 0.375 0.143]

Average Precision (AP) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] = -1.000, per category = [-1.000 -1.000 -1.000 -1.000]

Average Precision (AP) @[ IoU=0.50:0.95 | area=medium | maxDets=100 ] = 0.251, per category = [ 0.443 0.229 0.333 0.000]

Average Precision (AP) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] = 0.218, per category = [ 0.276 0.196 0.259 0.139]

03/01 16:01:41 - mmengine - INFO -

+----------+-----------+--------+--------+--------+-----------+-------+-------+-------+

| category | Precision | Recall | mAP_50 | mAP_75 | mAP_50_95 | mAP_s | mAP_m | mAP_l |

+----------+-----------+--------+--------+--------+-----------+-------+-------+-------+

| intact | 0.479 | 0.92 | 0.814 | 0.713 | 0.6 | nan | 0.605 | 0.592 |

| slight | 0.286 | 0.714 | 0.477 | 0.277 | 0.32 | nan | 0.325 | 0.378 |

| severe | 0.375 | 0.857 | 0.711 | 0.451 | 0.466 | nan | 0.482 | 0.473 |

| collapse | 0.143 | 0.6 | 0.297 | 0.234 | 0.266 | nan | 0.0 | 0.333 |

| all | 0.321 | 0.773 | 0.575 | 0.419 | 0.413 | -1.0 | 0.353 | 0.444 |

+----------+-----------+--------+--------+--------+-----------+-------+-------+-------+

03/01 16:01:41 - mmengine - INFO - bbox_mAP_copypaste: 0.413 0.575 0.419 -1.000 0.353 0.444

03/01 16:01:41 - mmengine - INFO - Evaluating segm...

Loading and preparing results...

DONE (t=0.00s)

creating index...

index created!

Running per image evaluation...

Evaluate annotation type *segm*

DONE (t=0.02s).

Accumulating evaluation results...

DONE (t=0.01s).

Average P-R curve Area (mAP) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.394, per category = [ 0.565 0.310 0.511 0.189]

Average P-R curve Area (mAP) @[ IoU=0.50 | area= all | maxDets=100 ] = 0.542, per category = [ 0.746 0.477 0.711 0.234]

Average P-R curve Area (mAP) @[ IoU=0.75 | area= all | maxDets=100 ] = 0.460, per category = [ 0.713 0.222 0.671 0.234]

Average P-R curve Area (mAP) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] = -1.000, per category = [-1.000 -1.000 -1.000 -1.000]

Average P-R curve Area (mAP) @[ IoU=0.50:0.95 | area=medium | maxDets=100 ] = 0.303, per category = [ 0.567 0.135 0.509 0.000]

Average P-R curve Area (mAP) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] = 0.460, per category = [ 0.588 0.465 0.537 0.248]

Average Recall (AR) @[ IoU=0.50 | area= all | maxDets= 1 ] = 0.289, per category = [ 0.240 0.357 0.357 0.200]

Average Recall (AR) @[ IoU=0.50 | area= all | maxDets= 10 ] = 0.693, per category = [ 0.800 0.714 0.857 0.400]

Average Recall (AR) @[ IoU=0.50 | area= all | maxDets=100 ] = 0.693, per category = [ 0.800 0.714 0.857 0.400]

Average Recall (AR) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] = -1.000, per category = [-1.000 -1.000 -1.000 -1.000]

Average Recall (AR) @[ IoU=0.50:0.95 | area=medium | maxDets=100 ] = 0.410, per category = [ 0.600 0.417 0.625 0.000]

Average Recall (AR) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] = 0.574, per category = [ 0.650 0.550 0.670 0.425]

Average Precision (AP) @[ IoU=0.50 | area= all | maxDets= 1 ] = 0.637, per category = [ 0.857 0.714 0.833 0.143]

Average Precision (AP) @[ IoU=0.50 | area= all | maxDets= 10 ] = 0.302, per category = [ 0.426 0.286 0.400 0.095]

Average Precision (AP) @[ IoU=0.50 | area= all | maxDets=100 ] = 0.293, per category = [ 0.417 0.286 0.375 0.095]

Average Precision (AP) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] = -1.000, per category = [-1.000 -1.000 -1.000 -1.000]

Average Precision (AP) @[ IoU=0.50:0.95 | area=medium | maxDets=100 ] = 0.161, per category = [ 0.321 0.132 0.192 0.000]

Average Precision (AP) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] = 0.271, per category = [ 0.326 0.275 0.353 0.131]

03/01 16:01:41 - mmengine - INFO -

+----------+-----------+--------+--------+--------+-----------+-------+-------+-------+

| category | Precision | Recall | mAP_50 | mAP_75 | mAP_50_95 | mAP_s | mAP_m | mAP_l |

+----------+-----------+--------+--------+--------+-----------+-------+-------+-------+

| intact | 0.417 | 0.8 | 0.746 | 0.713 | 0.565 | nan | 0.567 | 0.588 |

| slight | 0.286 | 0.714 | 0.477 | 0.222 | 0.31 | nan | 0.135 | 0.465 |

| severe | 0.375 | 0.857 | 0.711 | 0.671 | 0.511 | nan | 0.509 | 0.537 |

| collapse | 0.095 | 0.4 | 0.234 | 0.234 | 0.189 | nan | 0.0 | 0.248 |

| all | 0.293 | 0.693 | 0.542 | 0.46 | 0.394 | -1.0 | 0.303 | 0.46 |

+----------+-----------+--------+--------+--------+-----------+-------+-------+-------+

03/01 16:01:41 - mmengine - INFO - segm_mAP_copypaste: 0.394 0.542 0.460 -1.000 0.303 0.460

03/01 16:01:41 - mmengine - INFO - Results has been saved to results.pkl.

03/01 16:01:41 - mmengine - INFO - Epoch(test) [7/7] coco/intact_precision: 0.5650 coco/slight_precision: 0.3100 coco/severe_precision: 0.5110 coco/collapse_precision: 0.1890 coco/bbox_mAP_50_95: 0.4130 coco/bbox_mAP_50: 0.5750 coco/bbox_mAP_75: 0.4190 coco/bbox_mAP_s: -1.0000 coco/bbox_mAP_m: 0.3530 coco/bbox_mAP_l: 0.4440 coco/segm_mAP_50_95: 0.3940 coco/segm_mAP_50: 0.5420 coco/segm_mAP_75: 0.4600 coco/segm_mAP_s: -1.0000 coco/segm_mAP_m: 0.3030 coco/segm_mAP_l: 0.4600 data_time: 1.7243 time: 2.2589

473

473

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?