Problems with benchmark.py in mmdetection 3.x and how to fix them

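1. Background

While measuring model FPS with mmdetection, I ran into a series of errors. I resolved them by going through the issues in the mmdetection repository and by making some changes of my own.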

Test environment

Key packages

mmdetection==3.3.0+

mmcv==2.0.0

python==3.8.18

PyTorch==2.0.1+cu117

TorchVision==0.15.2+cu117

Full environment information:

sys.platform: win32

Python: 3.8.18 (default, Sep 11 2023, 13:39:12) [MSC v.1916 64 bit (AMD64)]

CUDA available: True

MUSA available: False

numpy_random_seed: 2147483648

GPU 0: NVIDIA GeForce RTX 3050 Laptop GPU

CUDA_HOME: C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v11.7

NVCC: Cuda compilation tools, release 11.7, V11.7.99

MSVC: Microsoft (R) C/C++ Optimizing Compiler Version 19.29.30152 for x64

GCC: n/a

PyTorch: 2.0.1+cu117

PyTorch compiling details: PyTorch built with:

- C++ Version: 199711

- MSVC 193431937

- Intel(R) Math Kernel Library Version 2020.0.2 Product Build 20200624 for Intel(R) 64 architecture applications

- Intel(R) MKL-DNN v2.7.3 (Git Hash 6dbeffbae1f23cbbeae17adb7b5b13f1f37c080e)

- OpenMP 2019

- LAPACK is enabled (usually provided by MKL)

- CPU capability usage: AVX2

- CUDA Runtime 11.7

- NVCC architecture flags: -gencode;arch=compute_37,code=sm_37;-gencode;arch=compute_50,code=sm_50;-gencode;arch=compute_60,code=sm_60;-gencode;arch=compute_61,code=sm_61;-gencode;arch=compute_70,code=sm_70;-gencode;arch=compute_75,code=sm_75;-gencode;arch=compute_80,code=sm_80;-gencode;arch=compute_86,code=sm_86;-gencode;arch=compute_37,code=compute_37

- CuDNN 8.5

- Magma 2.5.4

- Build settings: BLAS_INFO=mkl, BUILD_TYPE=Release, CUDA_VERSION=11.7, CUDNN_VERSION=8.5.0, CXX_COMPILER=C:/actions-runner/_work/pytorch/pytorch/builder/windows/tmp_bin/sccache-cl.exe, CXX_FLAGS=/DWIN32 /D_WINDOWS /GR /EHsc /w /bigobj /FS -DUSE_PTHREADPOOL -DNDEBUG -DUSE_KINETO -DLIBKINETO_NOCUPTI -DLIBKINETO_NOROCTRACER -DUSE_FBGEMM -DUSE_XNNPACK -DSYMBOLICATE_MOBILE_DEBUG_HANDLE, LAPACK_INFO=mkl, PERF_WITH_AVX=1, PERF_WITH_AVX2=1, PERF_WITH_AVX512=1, TORCH_DISABLE_GPU_ASSERTS=OFF, TORCH_VERSION=2.0.1, USE_CUDA=ON, USE_CUDNN=ON, USE_EXCEPTION_PTR=1, USE_GFLAGS=OFF, USE_GLOG=OFF, USE_MKL=ON, USE_MKLDNN=ON, USE_MPI=OFF, USE_NCCL=OFF, USE_NNPACK=OFF, USE_OPENMP=ON, USE_ROCM=OFF,

TorchVision: 0.15.2+cu117

OpenCV: 4.9.0

MMEngine: 0.10.3

MMDetection: 3.3.0+

2. Problems

The benchmark script in the official repository, tools/analysis_tools/benchmark.py:

# Copyright (c) OpenMMLab. All rights reserved.
import argparse
import os

from mmengine import MMLogger
from mmengine.config import Config, DictAction
from mmengine.dist import init_dist
from mmengine.registry import init_default_scope
from mmengine.utils import mkdir_or_exist

from mmdet.utils.benchmark import (DataLoaderBenchmark, DatasetBenchmark,
                                   InferenceBenchmark)


def parse_args():
    parser = argparse.ArgumentParser(description='MMDet benchmark')
    parser.add_argument('config', help='test config file path')
    parser.add_argument('--checkpoint', help='checkpoint file')
    parser.add_argument(
        '--task',
        choices=['inference', 'dataloader', 'dataset'],
        default='dataloader',
        help='Which task do you want to go to benchmark')
    parser.add_argument(
        '--repeat-num',
        type=int,
        default=1,
        help='number of repeat times of measurement for averaging the results')
    parser.add_argument(
        '--max-iter', type=int, default=2000, help='num of max iter')
    parser.add_argument(
        '--log-interval', type=int, default=50, help='interval of logging')
    parser.add_argument(
        '--num-warmup', type=int, default=5, help='Number of warmup')
    parser.add_argument(
        '--fuse-conv-bn',
        action='store_true',
        help='Whether to fuse conv and bn, this will slightly increase'
        'the inference speed')
    parser.add_argument(
        '--dataset-type',
        choices=['train', 'val', 'test'],
        default='test',
        help='Benchmark dataset type. only supports train, val and test')
    parser.add_argument(
        '--work-dir',
        help='the directory to save the file containing '
        'benchmark metrics')
    parser.add_argument(
        '--cfg-options',
        nargs='+',
        action=DictAction,
        help='override some settings in the used config, the key-value pair '
        'in xxx=yyy format will be merged into config file. If the value to '
        'be overwritten is a list, it should be like key="[a,b]" or key=a,b '
        'It also allows nested list/tuple values, e.g. key="[(a,b),(c,d)]" '
        'Note that the quotation marks are necessary and that no white space '
        'is allowed.')
    parser.add_argument(
        '--launcher',
        choices=['none', 'pytorch', 'slurm', 'mpi'],
        default='none',
        help='job launcher')
    parser.add_argument('--local_rank', type=int, default=0)
    args = parser.parse_args()
    if 'LOCAL_RANK' not in os.environ:
        os.environ['LOCAL_RANK'] = str(args.local_rank)
    return args


def inference_benchmark(args, cfg, distributed, logger):
    benchmark = InferenceBenchmark(
        cfg,
        args.checkpoint,
        distributed,
        args.fuse_conv_bn,
        args.max_iter,
        args.log_interval,
        args.num_warmup,
        logger=logger)
    return benchmark


def dataloader_benchmark(args, cfg, distributed, logger):
    benchmark = DataLoaderBenchmark(
        cfg,
        distributed,
        args.dataset_type,
        args.max_iter,
        args.log_interval,
        args.num_warmup,
        logger=logger)
    return benchmark


def dataset_benchmark(args, cfg, distributed, logger):
    benchmark = DatasetBenchmark(
        cfg,
        args.dataset_type,
        args.max_iter,
        args.log_interval,
        args.num_warmup,
        logger=logger)
    return benchmark


def main():
    args = parse_args()
    cfg = Config.fromfile(args.config)
    if args.cfg_options is not None:
        cfg.merge_from_dict(args.cfg_options)

    init_default_scope(cfg.get('default_scope', 'mmdet'))

    distributed = False
    if args.launcher != 'none':
        init_dist(args.launcher, **cfg.get('env_cfg', {}).get('dist_cfg', {}))
        distributed = True

    log_file = None
    if args.work_dir:
        log_file = os.path.join(args.work_dir, 'benchmark.log')
        mkdir_or_exist(args.work_dir)

    logger = MMLogger.get_instance(
        'mmdet', log_file=log_file, log_level='INFO')

    benchmark = eval(f'{args.task}_benchmark')(args, cfg, distributed, logger)
    benchmark.run(args.repeat_num)


if __name__ == '__main__':
    main()

The run command given in the official documentation (see the "Log Analysis" page of the MMDetection 3.3.0 documentation):

python -m torch.distributed.launch --nproc_per_node=1 --master_port=29500 tools/analysis_tools/benchmark.py \
    configs/faster_rcnn/faster-rcnn_r50_fpn_1x_coco.py \
    checkpoints/faster_rcnn_r50_fpn_1x_coco_20200130-047c8118.pth \
    --launcher pytorch

Since the goal is to measure FPS, I changed the default of the --task option in benchmark.py from dataloader to inference. Before:

parser.add_argument(
    '--task',
    choices=['inference', 'dataloader', 'dataset'],
    default='dataloader',
    help='Which task do you want to go to benchmark')

After:

parser.add_argument(
    '--task',
    choices=['inference', 'dataloader', 'dataset'],
    default='inference',
    help='Which task do you want to go to benchmark')

The command I ran:

python -m torch.distributed.launch --nproc_per_node=1 --master_port=29500 tools/analysis_tools/benchmark.py D:\projects\python\mmdetection\tools\work_dirs\mask_rcnn_r50_fpn_1x_coco\mask_rcnn_r50_fpn_1x_coco.py D:\Users\13055\Documents\BaiduDisk\epoch_80.pth --launcher pytorch

1. unrecognized arguments

usage: benchmark.py [-h] [--checkpoint CHECKPOINT] [--task {inference,dataloader,dataset}] [--repeat-num REPEAT_NUM] [--max-iter MAX_ITER] [--log-interval LOG_INTERVAL] [--num-warmup NUM_WARMUP] [--fuse-conv-bn]

[--dataset-type {train,val,test}] [--work-dir WORK_DIR] [--cfg-options CFG_OPTIONS [CFG_OPTIONS ...]] [--launcher {none,pytorch,slurm,mpi}] [--local_rank LOCAL_RANK]

config

benchmark.py: error: unrecognized arguments: --local-rank=0 D:\Users\13055\Documents\BaiduDisk\epoch_80.pth

ERROR:torch.distributed.elastic.multiprocessing.api:failed (exitcode: 2) local_rank: 0 (pid: 17436) of binary: D:\software\anaconda3\envs\mmdetection\python.exe

Traceback (most recent call last):

File "D:\software\anaconda3\envs\mmdetection\lib\runpy.py", line 194, in _run_module_as_main

return _run_code(code, main_globals, None,

File "D:\software\anaconda3\envs\mmdetection\lib\runpy.py", line 87, in _run_code

exec(code, run_globals)

File "D:\software\anaconda3\envs\mmdetection\lib\site-packages\torch\distributed\launch.py", line 196, in <module>

main()

File "D:\software\anaconda3\envs\mmdetection\lib\site-packages\torch\distributed\launch.py", line 192, in main

launch(args)

File "D:\software\anaconda3\envs\mmdetection\lib\site-packages\torch\distributed\launch.py", line 177, in launch

run(args)

File "D:\software\anaconda3\envs\mmdetection\lib\site-packages\torch\distributed\run.py", line 785, in run

elastic_launch(

File "D:\software\anaconda3\envs\mmdetection\lib\site-packages\torch\distributed\launcher\api.py", line 134, in __call__

return launch_agent(self._config, self._entrypoint, list(args))

File "D:\software\anaconda3\envs\mmdetection\lib\site-packages\torch\distributed\launcher\api.py", line 250, in launch_agent

raise ChildFailedError(

torch.distributed.elastic.multiprocessing.errors.ChildFailedError:

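Cause: the launcher passes the option as --local-rank=0 (with a hyphen, as the error message shows), while benchmark.py declares it with an underscore:

parser.add_argument('--local_rank', type=int, default=0)

Change the underscore to a hyphen: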

parser.add_argument('--local-rank', type=int, default=0)
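A more tolerant variant is to register both spellings on the same option, so the script works whichever form the launcher passes. The snippet below is a minimal sketch using only argparse; `build_parser` is a hypothetical helper name for illustration, not part of benchmark.py.

```python
import argparse


def build_parser() -> argparse.ArgumentParser:
    """Hypothetical helper: a parser that accepts both spellings."""
    parser = argparse.ArgumentParser(description='local rank demo')
    # Both option strings map to the same destination. argparse derives
    # the attribute name from the first long option, i.e. `local_rank`.
    parser.add_argument('--local_rank', '--local-rank', type=int, default=0)
    return parser


old_style = build_parser().parse_args(['--local_rank=1'])
new_style = build_parser().parse_args(['--local-rank=2'])
print(old_style.local_rank, new_style.local_rank)
```

Either way the value ends up in `args.local_rank`, so the rest of the script does not need to change.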

After changing the option name, a new error appears:

usage: benchmark.py [-h] [--checkpoint CHECKPOINT] [--task {inference,dataloader,dataset}] [--repeat-num REPEAT_NUM] [--max-iter MAX_ITER] [--log-interval LOG_INTERVAL] [--num-warmup NUM_WARMUP] [--fuse-conv-bn]

[--dataset-type {train,val,test}] [--work-dir WORK_DIR] [--cfg-options CFG_OPTIONS [CFG_OPTIONS ...]] [--launcher {none,pytorch,slurm,mpi}] [--local-rank LOCAL_RANK]

config

benchmark.py: error: unrecognized arguments: D:\Users\13055\Documents\BaiduDisk\epoch_80.pth

ERROR:torch.distributed.elastic.multiprocessing.api:failed (exitcode: 2) local_rank: 0 (pid: 28144) of binary: D:\software\anaconda3\envs\mmdetection\python.exe

Traceback (most recent call last):

File "D:\software\anaconda3\envs\mmdetection\lib\runpy.py", line 194, in _run_module_as_main

return _run_code(code, main_globals, None,

File "D:\software\anaconda3\envs\mmdetection\lib\runpy.py", line 87, in _run_code

exec(code, run_globals)

File "D:\software\anaconda3\envs\mmdetection\lib\site-packages\torch\distributed\launch.py", line 196, in <module>

main()

File "D:\software\anaconda3\envs\mmdetection\lib\site-packages\torch\distributed\launch.py", line 192, in main

launch(args)

File "D:\software\anaconda3\envs\mmdetection\lib\site-packages\torch\distributed\launch.py", line 177, in launch

run(args)

File "D:\software\anaconda3\envs\mmdetection\lib\site-packages\torch\distributed\run.py", line 785, in run

elastic_launch(

File "D:\software\anaconda3\envs\mmdetection\lib\site-packages\torch\distributed\launcher\api.py", line 134, in __call__

return launch_agent(self._config, self._entrypoint, list(args))

File "D:\software\anaconda3\envs\mmdetection\lib\site-packages\torch\distributed\launcher\api.py", line 250, in launch_agent

raise ChildFailedError(

torch.distributed.elastic.multiprocessing.errors.ChildFailedError:

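Cause: the checkpoint path was passed as a positional argument, but benchmark.py only accepts it through the --checkpoint option.

The corrected command: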

python -m torch.distributed.launch --nproc_per_node=1 --master_port=29500 tools/analysis_tools/benchmark.py D:\projects\python\mmdetection\tools\work_dirs\mask_rcnn_r50_fpn_1x_coco\mask_rcnn_r50_fpn_1x_coco.py --checkpoint D:\Users\13055\Documents\BaiduDisk\epoch_80.pth --launcher pytorch
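The behaviour can be reproduced with a stripped-down parser. This is only a sketch: it copies the two relevant arguments from benchmark.py and uses `parse_known_args` so that the leftover argument is returned instead of aborting the program. The file names are placeholders.

```python
import argparse

parser = argparse.ArgumentParser(prog='benchmark.py')
parser.add_argument('config', help='test config file path')
parser.add_argument('--checkpoint', help='checkpoint file')

# Checkpoint passed positionally: argparse has no second positional slot,
# so the path is left over (parse_args would exit with
# "unrecognized arguments").
first_args, first_left = parser.parse_known_args(['cfg.py', 'epoch_80.pth'])
print(first_args.checkpoint, first_left)

# Checkpoint passed through the option: nothing is left over.
second_args, second_left = parser.parse_known_args(
    ['cfg.py', '--checkpoint', 'epoch_80.pth'])
print(second_args.checkpoint, second_left)
```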

2. type of name should be str, but got <class 'mmengine.utils.manager.ManagerMeta'>

The error:

D:\software\anaconda3\envs\mmdetection\lib\site-packages\numpy\.libs\libopenblas64__v0.3.21-gcc_10_3_0.dll

warnings.warn("loaded more than 1 DLL from .libs:"

Traceback (most recent call last):

File "tools/analysis_tools/benchmark.py", line 133, in <module>

main()

File "tools/analysis_tools/benchmark.py", line 113, in main

init_default_scope(cfg.get('default_scope', 'mmdet'))

File "D:\software\anaconda3\envs\mmdetection\lib\site-packages\mmengine\registry\utils.py", line 106, in init_default_scope

DefaultScope.get_instance(scope, scope_name=scope)

File "D:\software\anaconda3\envs\mmdetection\lib\site-packages\mmengine\utils\manager.py", line 105, in get_instance

assert isinstance(name, str), \

AssertionError: type of name should be str, but got <class 'mmengine.utils.manager.ManagerMeta'>

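The error is caused by default_scope = None in the config file D:\projects\python\mmdetection\tools\work_dirs\mask_rcnn_r50_fpn_1x_coco\mask_rcnn_r50_fpn_1x_coco.py. Because the key is present, the 'mmdet' fallback passed to cfg.get is not used and None reaches init_default_scope.

Comment the line out:

# default_scope = None

or set it explicitly: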

default_scope = 'mmdet'
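The pitfall is easy to see with a plain dict. The sketch below assumes that the config object's `get` behaves like `dict.get` here, which is consistent with the traceback above but is not checked against the mmengine source. The `or` fallback is one possible way to make the call in benchmark.py tolerate a None value.

```python
# Stand-in for the loaded config: the key exists but holds None.
cfg = {'default_scope': None}

# The default is only used when the key is missing, so this is still None.
scope_from_get = cfg.get('default_scope', 'mmdet')

# Falling back on any falsy value yields the expected scope name.
scope_with_fallback = cfg.get('default_scope') or 'mmdet'

print(scope_from_get, scope_with_fallback)
```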

3. KeyError: mmdet.models.losses.smooth_l1_loss.L1Loss is not in the mmdet::model registry

The error:

KeyError: 'mmdet.models.losses.smooth_l1_loss.L1Loss is not in the mmdet::model registry. Please check whether the value of `mmdet.models.losses.smooth_l1_loss.L1Loss` is correct or it was registered as expected. More details can be found at https://mmengine.readthedocs.io/en/latest/advanced_tutorials/config.html#import-the-custom-module'

ERROR:torch.distributed.elastic.multiprocessing.api:failed (exitcode: 1) local_rank: 0 (pid: 28624) of binary: D:\software\anaconda3\envs\mmdetection\python.exe

Traceback (most recent call last):

File "D:\software\anaconda3\envs\mmdetection\lib\runpy.py", line 194, in _run_module_as_main

return _run_code(code, main_globals, None,

File "D:\software\anaconda3\envs\mmdetection\lib\runpy.py", line 87, in _run_code

exec(code, run_globals)

File "D:\software\anaconda3\envs\mmdetection\lib\site-packages\torch\distributed\launch.py", line 196, in <module>

main()

File "D:\software\anaconda3\envs\mmdetection\lib\site-packages\torch\distributed\launch.py", line 192, in main

launch(args)

File "D:\software\anaconda3\envs\mmdetection\lib\site-packages\torch\distributed\launch.py", line 177, in launch

run(args)

File "D:\software\anaconda3\envs\mmdetection\lib\site-packages\torch\distributed\run.py", line 785, in run

elastic_launch(

File "D:\software\anaconda3\envs\mmdetection\lib\site-packages\torch\distributed\launcher\api.py", line 134, in __call__

return launch_agent(self._config, self._entrypoint, list(args))

File "D:\software\anaconda3\envs\mmdetection\lib\site-packages\torch\distributed\launcher\api.py", line 250, in launch_agent

raise ChildFailedError(

torch.distributed.elastic.multiprocessing.errors.ChildFailedError:

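Solution

The error is caused by type='mmdet.models.losses.smooth_l1_loss.L1Loss' in the config file D:\projects\python\mmdetection\tools\work_dirs\mask_rcnn_r50_fpn_1x_coco\mask_rcnn_r50_fpn_1x_coco.py:

loss_bbox=dict(
    loss_weight=1.0,
    type='mmdet.models.losses.smooth_l1_loss.L1Loss'),

As a rough workaround I simply swapped in a different loss type. The only goal is to get past the registry lookup: the loss should not be evaluated during inference, so this should not affect the FPS measurement, but the edited config should not be reused for training.

loss_bbox=dict(
    loss_weight=1.0,
    type='mmdet.models.losses.cross_entropy_loss.CrossEntropyLoss'),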
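An alternative that keeps the original loss may be to shorten the dotted type string to the bare class name. The sketch below does this on a plain nested dict; `shorten_types` is a hypothetical helper, and it rests on the assumption that each class is registered under its short name (for example `L1Loss`), which should be checked for every entry before relying on it.

```python
from typing import Any


def shorten_types(node: Any) -> Any:
    """Return a copy of a nested config in which every dotted `type`
    string keeps only its last component (hypothetical helper)."""
    if isinstance(node, dict):
        out = {}
        for key, value in node.items():
            if key == 'type' and isinstance(value, str):
                out[key] = value.rsplit('.', 1)[-1]
            else:
                out[key] = shorten_types(value)
        return out
    if isinstance(node, (list, tuple)):
        # Rebuild the same container type with processed items.
        return type(node)(shorten_types(item) for item in node)
    return node


loss_bbox = dict(
    loss_weight=1.0,
    type='mmdet.models.losses.smooth_l1_loss.L1Loss')
shortened = shorten_types(loss_bbox)
print(shortened)
```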

4. AttributeError: 'DistributedDataParallel' object has no attribute 'test_step'

Traceback (most recent call last):

File "tools/analysis_tools/benchmark.py", line 133, in <module>

main()

File "tools/analysis_tools/benchmark.py", line 129, in main

benchmark.run(args.repeat_num)

File "D:\software\anaconda3\envs\mmdetection\lib\site-packages\mmdet\utils\benchmark.py", line 107, in run

results.append(self.run_once())

File "D:\software\anaconda3\envs\mmdetection\lib\site-packages\mmdet\utils\benchmark.py", line 222, in run_once

self.model.test_step(data)

File "D:\software\anaconda3\envs\mmdetection\lib\site-packages\torch\nn\modules\module.py", line 1614, in __getattr__

raise AttributeError("'{}' object has no attribute '{}'".format(

AttributeError: 'DistributedDataParallel' object has no attribute 'test_step'

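I did not track down the root cause. The traceback shows that the benchmark calls test_step on a DistributedDataParallel wrapper, and that wrapper does not expose the wrapped model's test_step. My workaround was to drop --launcher pytorch from the command, so that the benchmark runs in non-distributed mode.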
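For reference, PyTorch's DistributedDataParallel keeps the wrapped model in its `module` attribute, so custom methods remain reachable after unwrapping. The sketch below illustrates that pattern with plain stand-in classes rather than real torch objects; `ToyDetector`, `ToyWrapper` and `unwrap` are hypothetical names, and this is not the fix applied in this post.

```python
class ToyDetector:
    """Hypothetical stand-in for a detector that implements test_step."""

    def test_step(self, data):
        return f'predicted {len(data)} samples'


class ToyWrapper:
    """Hypothetical stand-in for a parallel wrapper: it stores the model
    in `.module` and does not forward custom methods."""

    def __init__(self, module):
        self.module = module


def unwrap(model):
    """Return the inner model if `model` is a wrapper, else `model`."""
    return getattr(model, 'module', model)


wrapped = ToyWrapper(ToyDetector())
wrapper_has_method = hasattr(wrapped, 'test_step')
result = unwrap(wrapped).test_step([1, 2, 3])
print(wrapper_has_method, result)
```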

The command without --launcher pytorch:

python -m torch.distributed.launch --nproc_per_node=1 --master_port=29500 tools/analysis_tools/benchmark.py D:\projects\python\mmdetection\tools\work_dirs\mask_rcnn_r50_fpn_1x_coco\mask_rcnn_r50_fpn_1x_coco.py --checkpoint D:\Users\13055\Documents\BaiduDisk\epoch_80.pth

Running it leads to the next error:

Traceback (most recent call last):

File "tools/analysis_tools/benchmark.py", line 133, in <module>

main()

File "tools/analysis_tools/benchmark.py", line 128, in main

benchmark = eval(f'{args.task}_benchmark')(args, cfg, distributed, logger)

File "tools/analysis_tools/benchmark.py", line 72, in inference_benchmark

benchmark = InferenceBenchmark(

File "D:\software\anaconda3\envs\mmdetection\lib\site-packages\mmdet\utils\benchmark.py", line 170, in __init__

self.model = self._init_model(checkpoint, is_fuse_conv_bn)

File "D:\software\anaconda3\envs\mmdetection\lib\site-packages\mmdet\utils\benchmark.py", line 186, in _init_model

model = MODELS.build(self.cfg.model)

File "D:\software\anaconda3\envs\mmdetection\lib\site-packages\mmengine\registry\registry.py", line 570, in build

return self.build_func(cfg, *args, **kwargs, registry=self)

File "D:\software\anaconda3\envs\mmdetection\lib\site-packages\mmengine\registry\build_functions.py", line 232, in build_model_from_cfg

return build_from_cfg(cfg, registry, default_args)

File "D:\software\anaconda3\envs\mmdetection\lib\site-packages\mmengine\registry\build_functions.py", line 121, in build_from_cfg

obj = obj_cls(**args) # type: ignore

File "D:\software\anaconda3\envs\mmdetection\lib\site-packages\mmdet\models\detectors\mask_rcnn.py", line 22, in __init__

super().__init__(

File "D:\software\anaconda3\envs\mmdetection\lib\site-packages\mmdet\models\detectors\two_stage.py", line 61, in __init__

self.roi_head = MODELS.build(roi_head)

File "D:\software\anaconda3\envs\mmdetection\lib\site-packages\mmengine\registry\registry.py", line 570, in build

return self.build_func(cfg, *args, **kwargs, registry=self)

File "D:\software\anaconda3\envs\mmdetection\lib\site-packages\mmengine\registry\build_functions.py", line 232, in build_model_from_cfg

return build_from_cfg(cfg, registry, default_args)

File "D:\software\anaconda3\envs\mmdetection\lib\site-packages\mmengine\registry\build_functions.py", line 121, in build_from_cfg

obj = obj_cls(**args) # type: ignore

File "D:\software\anaconda3\envs\mmdetection\lib\site-packages\mmdet\models\roi_heads\base_roi_head.py", line 32, in __init__

self.init_bbox_head(bbox_roi_extractor, bbox_head)

File "D:\software\anaconda3\envs\mmdetection\lib\site-packages\mmdet\models\roi_heads\standard_roi_head.py", line 38, in init_bbox_head

self.bbox_roi_extractor = MODELS.build(bbox_roi_extractor)

File "D:\software\anaconda3\envs\mmdetection\lib\site-packages\mmengine\registry\registry.py", line 570, in build

return self.build_func(cfg, *args, **kwargs, registry=self)

File "D:\software\anaconda3\envs\mmdetection\lib\site-packages\mmengine\registry\build_functions.py", line 232, in build_model_from_cfg

return build_from_cfg(cfg, registry, default_args)

File "D:\software\anaconda3\envs\mmdetection\lib\site-packages\mmengine\registry\build_functions.py", line 121, in build_from_cfg

obj = obj_cls(**args) # type: ignore

File "D:\software\anaconda3\envs\mmdetection\lib\site-packages\mmdet\models\roi_heads\roi_extractors\single_level_roi_extractor.py", line 37, in __init__

super().__init__(

File "D:\software\anaconda3\envs\mmdetection\lib\site-packages\mmdet\models\roi_heads\roi_extractors\base_roi_extractor.py", line 32, in __init__

self.roi_layers = self.build_roi_layers(roi_layer, featmap_strides)

File "D:\software\anaconda3\envs\mmdetection\lib\site-packages\mmdet\models\roi_heads\roi_extractors\base_roi_extractor.py", line 64, in build_roi_layers

assert hasattr(ops, layer_type)

AssertionError

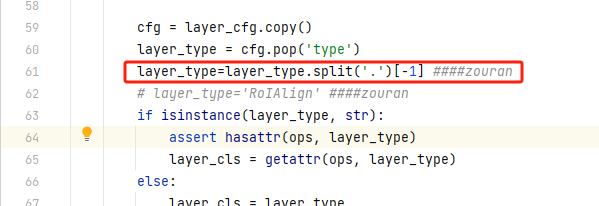

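This may be a bug in the library code itself; patching the installed file works around it.

Open the file named in the last frame of the traceback:

D:\software\anaconda3\envs\mmdetection\lib\site-packages\mmdet\models\roi_heads\roi_extractors\base_roi_extractor.py

Before line 64 of that file, add the following line (the addition marked in the screenshot):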

layer_type=layer_type.split('.')[-1] ####zouran
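The effect of this one-line patch can be shown without mmcv. In the sketch below, `ops` is a stand-in namespace and the dotted value of `layer_type` is an assumed example, since the post does not show the actual string from the config.

```python
from types import SimpleNamespace

# Stand-in for the ops module that the assertion inspects.
ops = SimpleNamespace(RoIAlign=object)

# Assumed example of a fully qualified type name from a dumped config.
layer_type = 'mmcv.ops.RoIAlign'

# hasattr looks for an attribute literally named 'mmcv.ops.RoIAlign'.
found_dotted = hasattr(ops, layer_type)

# Keeping only the last component gives the attribute that exists.
short_type = layer_type.split('.')[-1]
found_short = hasattr(ops, short_type)

print(found_dotted, short_type, found_short)
```

A name that contains no dot passes through `split('.')[-1]` unchanged, so the patch should not affect configs that already use short names.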

5. NameError: name 'mmcv' is not defined

The error:

Traceback (most recent call last):

File "tools/analysis_tools/benchmark.py", line 133, in <module>

main()

File "tools/analysis_tools/benchmark.py", line 129, in main

benchmark.run(args.repeat_num)

File "D:\software\anaconda3\envs\mmdetection\lib\site-packages\mmdet\utils\benchmark.py", line 107, in run

results.append(self.run_once())

File "D:\software\anaconda3\envs\mmdetection\lib\site-packages\mmdet\utils\benchmark.py", line 222, in run_once

self.model.test_step(data)

File "D:\software\anaconda3\envs\mmdetection\lib\site-packages\mmengine\model\base_model\base_model.py", line 145, in test_step

return self._run_forward(data, mode='predict') # type: ignore

File "D:\software\anaconda3\envs\mmdetection\lib\site-packages\mmengine\model\base_model\base_model.py", line 361, in _run_forward

results = self(**data, mode=mode)

File "D:\software\anaconda3\envs\mmdetection\lib\site-packages\torch\nn\modules\module.py", line 1501, in _call_impl

return forward_call(*args, **kwargs)

File "D:\software\anaconda3\envs\mmdetection\lib\site-packages\mmdet\models\detectors\base.py", line 94, in forward

return self.predict(inputs, data_samples)

File "D:\software\anaconda3\envs\mmdetection\lib\site-packages\mmdet\models\detectors\two_stage.py", line 231, in predict

rpn_results_list = self.rpn_head.predict(

File "D:\software\anaconda3\envs\mmdetection\lib\site-packages\mmdet\models\dense_heads\base_dense_head.py", line 197, in predict

predictions = self.predict_by_feat(

File "D:\software\anaconda3\envs\mmdetection\lib\site-packages\mmdet\models\dense_heads\base_dense_head.py", line 279, in predict_by_feat

results = self._predict_by_feat_single(

File "D:\software\anaconda3\envs\mmdetection\lib\site-packages\mmdet\models\dense_heads\rpn_head.py", line 233, in _predict_by_feat_single

return self._bbox_post_process(

File "D:\software\anaconda3\envs\mmdetection\lib\site-packages\mmdet\models\dense_heads\rpn_head.py", line 284, in _bbox_post_process

det_bboxes, keep_idxs = batched_nms(bboxes, results.scores,

File "D:\software\anaconda3\envs\mmdetection\lib\site-packages\mmcv\ops\nms.py", line 300, in batched_nms

nms_op = eval(nms_type)

File "<string>", line 1, in <module>

NameError: name 'mmcv' is not defined

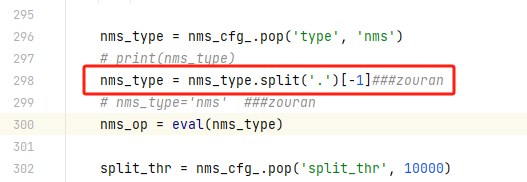

Open the file D:\software\anaconda3\envs\mmdetection\lib\site-packages\mmcv\ops\nms.py

and add the following line just before the line that raises the error:

nms_type = nms_type.split('.')[-1]###zouran
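The NameError comes from evaluating a dotted name in a scope where its first component is not bound. The sketch below reproduces this with a local stand-in function; the value of `nms_type` is an assumed example, because the post does not show the string from the config.

```python
def nms(boxes):
    """Hypothetical stand-in for an NMS op defined in the current module."""
    return boxes


# Assumed example of a fully qualified op name from a dumped config.
nms_type = 'mmcv.ops.nms'

# eval resolves the leading name `mmcv` first, and it is not bound here.
try:
    eval(nms_type)
    dotted_resolved = True
except NameError:
    dotted_resolved = False

# The last component is a name that exists in this scope.
short_op = eval(nms_type.split('.')[-1])

print(dotted_resolved, short_op is nms)
```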

6. RuntimeError: Distributed package doesn't have NCCL built in

Traceback (most recent call last):

File "D:\zr\projects\mmdetection\tools\analysis_tools\benchmark.py", line 133, in <module>

main()

File "D:\zr\projects\mmdetection\tools\analysis_tools\benchmark.py", line 117, in main

init_dist(args.launcher, **cfg.get('env_cfg', {}).get('dist_cfg', {}))

File "D:\Users\lenovo\anaconda3\envs\mmdetection\lib\site-packages\mmengine\dist\utils.py", line 85, in init_dist

_init_dist_pytorch(backend, init_backend=init_backend, **kwargs)

File "D:\Users\lenovo\anaconda3\envs\mmdetection\lib\site-packages\mmengine\dist\utils.py", line 133, in _init_dist_pytorch

torch_dist.init_process_group(backend=backend, **kwargs)

File "D:\Users\lenovo\anaconda3\envs\mmdetection\lib\site-packages\torch\distributed\distributed_c10d.py", line 907, in init_process_group

default_pg = _new_process_group_helper(

File "D:\Users\lenovo\anaconda3\envs\mmdetection\lib\site-packages\torch\distributed\distributed_c10d.py", line 1013, in _new_process_group_helper

raise RuntimeError("Distributed package doesn't have NCCL " "built in")

RuntimeError: Distributed package doesn't have NCCL built in

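This is reportedly because NCCL is not supported on Windows (the PyTorch build settings listed above also show USE_NCCL=OFF). My workaround was to edit the file

D:\Users\lenovo\anaconda3\envs\mmdetection\lib\site-packages\torch\distributed\distributed_c10d.py

and add this line: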

backend = "gloo" ###zouran
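A less invasive option may be to select the backend from the config instead of editing PyTorch. benchmark.py forwards `env_cfg.dist_cfg` to `init_dist` as keyword arguments, so a `backend` entry there should reach the process-group setup. The sketch below shows the config fragment and mirrors the lookup from `main()` on a plain dict. That `init_dist` accepts a `backend` keyword is inferred from the traceback above, so treat it as an assumption.

```python
# Config fragment (assumption: placed in, or merged into, the model config).
env_cfg = dict(dist_cfg=dict(backend='gloo'))

# Stand-in for the loaded config object.
cfg = dict(env_cfg=env_cfg)

# Same lookup as in benchmark.py's main(); the result is what would be
# passed on as init_dist(args.launcher, **dist_kwargs).
dist_kwargs = cfg.get('env_cfg', {}).get('dist_cfg', {})
print(dist_kwargs)
```

The same override can probably be given on the command line with --cfg-options env_cfg.dist_cfg.backend=gloo, using the xxx=yyy format described in the script's help text.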

3. Results

4. References

1. [Bug] fps benchmark tool can't work · Issue #9387 · open-mmlab/mmdetection (github.com)

2. https://blog.csdn.net/m0_61787307/article/details/129638108
