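Background

When building application-level service platforms, users sometimes need to upload a large file, and if the upload fails they should be able to continue from the point of failure next time. This calls for resumable, chunked uploads. This article describes one strategy for that scenario, using nginx, Spring Boot, Vue, MinIO, and the Amazon S3 SDK.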

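Preparation

1. Set the request body size limit in nginx

This step ensures that a file chunk is not rejected because of its size.

client_max_body_size 100m; # our project currently needs this, so it is set to 100m here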
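As a quick sanity check on this limit, the sketch below converts an nginx-style size string to bytes and compares it with a chunk size. `parseNginxSize` and `chunkFits` are hypothetical helper names, only the `k` and `m` suffixes are handled, and the 5 MiB chunk size is the value the upload component later in this article passes when it initializes a task.

```javascript
// Convert an nginx-style size string ("100m", "512k", "1024") to bytes.
// Assumption: only the k/K and m/M suffixes are handled in this sketch.
function parseNginxSize(value) {
  const match = /^(\d+)([kKmM]?)$/.exec(String(value).trim());
  if (!match) {
    throw new Error(`Unsupported size: ${value}`);
  }
  const amount = Number(match[1]);
  const unit = match[2].toLowerCase();
  if (unit === 'k') return amount * 1024;
  if (unit === 'm') return amount * 1024 * 1024;
  return amount; // no suffix means plain bytes
}

// True when a single chunk request body stays within the configured limit.
function chunkFits(chunkSizeBytes, limit) {
  return chunkSizeBytes <= parseNginxSize(limit);
}

const chunkSize = 5 * 1024 * 1024; // 5 MiB, as used by the upload component
console.log(chunkFits(chunkSize, '100m'));
```

With a 100m limit there is plenty of headroom for 5 MiB chunks, so the limit only matters if the chunk size is raised considerably.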

http {

include /etc/nginx/mime.types;

default_type application/octet-stream;

log_format main '$remote_addr - $remote_user [$time_local] "$request" '

'$status $body_bytes_sent "$http_referer" '

'"$http_user_agent" "$http_x_forwarded_for"';

access_log /var/log/nginx/access.log main;

# Set the maximum supported upload size here

client_max_body_size 100m;

sendfile on;

#tcp_nopush on;

keepalive_timeout 65;

....

}

2. Spring Boot configuration

spring:
  servlet:
    multipart:
      max-file-size: 100MB
      # maximum supported request size
      max-request-size: 500MB

Integration

Front-end upload component: UploadParallel.vue

<script setup>

import { UploadFilled } from '@element-plus/icons-vue'

import md5 from "../lib/md5";

import { taskInfo, initTask, preSignUrl, merge } from '../lib/api';

import {ElNotification} from "element-plus";

import Queue from 'promise-queue-plus';

import axios from 'axios'

import { ref } from 'vue'

// Chunk-upload queues per file (used to stop a file's upload queue when the file is removed). key: file uid, value: queue object

const fileUploadChunkQueue = ref({}).value

/**

* Get an upload task; initialize one if none exists

*/

const getTaskInfo = async (file) => {

let task;

const identifier = await md5(file)

const { code, data, msg } = await taskInfo(identifier)

if (code === 200) {

task = data

if (!task || Object.keys(task).length === 0) {

const initTaskData = {

identifier,

fileName: file.name,

totalSize: file.size,

chunkSize: 5 * 1024 * 1024

}

const { code, data, msg } = await initTask(initTaskData)

if (code === 200) {

task = data

} else {

ElNotification.error({

title: 'File upload error',

message: msg

})

}

}

} else {

ElNotification.error({

title: 'File upload error',

message: msg

})

}

return task

}

/**

* Upload logic. This method is not entered if the file has already been fully uploaded (chunks merged)

*/

const handleUpload = (file, taskRecord, options) => {

let lastUploadedSize = 0; // bytes already uploaded in earlier sessions (resume)

let uploadedSize = 0 // bytes uploaded so far

const totalSize = file.size || 0 // total file size

let startMs = new Date().getTime(); // time this upload session started

const { exitPartList, chunkSize, chunkNum, fileIdentifier } = taskRecord

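// Average speed since this upload session started (bytes/s)

const getSpeed = () => {

// total uploaded - bytes uploaded in earlier sessions (resume) = bytes uploaded in this session

const intervalSize = uploadedSize - lastUploadedSize

const nowMs = new Date().getTime()

// elapsed time (s)

const intervalTime = (nowMs - startMs) / 1000

// avoid dividing by zero right after the upload starts

return intervalTime > 0 ? intervalSize / intervalTime : 0

}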

const uploadNext = async (partNumber) => {

const start = Number(chunkSize) * (partNumber - 1)

const end = start + Number(chunkSize)

const blob = file.slice(start, end)

const { code, data, msg } = await preSignUrl({ identifier: fileIdentifier, partNumber: partNumber} )

if (code === 200 && data) {

await axios.request({

url: data,

method: 'PUT',

data: blob,

headers: {'Content-Type': 'application/octet-stream'}

})

return Promise.resolve({ partNumber: partNumber, uploadedSize: blob.size })

}

return Promise.reject(`Failed to get the upload URL for part ${partNumber}`)

}

/**

* Update the upload progress

* @param increment number of bytes to add to the uploaded total

*/

const updateProcess = (increment) => {

increment = Number(increment)

const { onProgress } = options

const factor = 1000; // advance by at most 1000 bytes per step

let from = 0;

// increase the progress gradually in a loop

while (from < increment) {

// never count more bytes than were actually uploaded

const step = Math.min(factor, increment - from)

from += step

uploadedSize += step

// keep percent numeric and within 0..100

const percent = totalSize > 0 ? Math.min(100, Number((uploadedSize / totalSize * 100).toFixed(2))) : 100

onProgress({percent: percent})

}

const speed = getSpeed();

const remainingTime = speed > 0 ? Math.ceil((totalSize - uploadedSize) / speed) + 's' : 'unknown'

console.log('Remaining:', ((totalSize - uploadedSize) / 1024 / 1024).toFixed(2), 'MB');

console.log('Current speed:', (speed / 1024 / 1024).toFixed(2), 'MB/s');

console.log('Estimated time left:', remainingTime);

}

return new Promise(resolve => {

const failArr = [];

const queue = Queue(5, {

"retry": 3, //Number of retries

"retryIsJump": false, //retry now?

"workReject": function(reason,queue){

failArr.push(reason)

},

"queueEnd": function(queue){

resolve(failArr);

}

})

fileUploadChunkQueue[file.uid] = queue

for (let partNumber = 1; partNumber <= chunkNum; partNumber++) {

const exitPart = (exitPartList || []).find(part => Number(part.partNumber) === partNumber)

if (exitPart) {

// This part is already uploaded: add it to the uploaded total and record it as resumed bytes so the speed calculation ignores it

lastUploadedSize += Number(exitPart.size)

updateProcess(exitPart.size)

} else {

queue.push(() => uploadNext(partNumber).then(res => {

// update the progress only after the whole part has been uploaded

updateProcess(res.uploadedSize)

}))

}

}

if (queue.getLength() == 0) {

// all parts are uploaded but not merged yet: resolve right away so the merge can proceed

resolve(failArr);

return;

}

queue.start()

})

}

/**

* Entry point of the custom el-upload upload method

*/

const handleHttpRequest = async (options) => {

const file = options.file

const task = await getTaskInfo(file)

if (task) {

const { finished, path, taskRecord } = task

const { fileIdentifier: identifier } = taskRecord

if (finished) {

return path

} else {

const errorList = await handleUpload(file, taskRecord, options)

if (errorList.length > 0) {

ElNotification.error({

title: 'File upload error',

message: 'Some chunks failed to upload; please try uploading the file again'

})

return;

}

const { code, data, msg } = await merge(identifier)

if (code === 200) {

return path;

} else {

ElNotification.error({

title: 'File upload error',

message: msg

})

}

}

} else {

ElNotification.error({

title: 'File upload error',

message: 'Failed to get the upload task'

})

}

}

/**

* Remove a file from the file list

* If the file has an upload queue object, stop that queue

*/

const handleRemoveFile = (uploadFile, uploadFiles) => {

const queueObject = fileUploadChunkQueue[uploadFile.uid]

if (queueObject) {

queueObject.stop()

fileUploadChunkQueue[uploadFile.uid] = undefined

}

}

</script>

<template>

<el-card style="width: 80%; margin: 80px auto" header="Chunked file upload">

<el-upload

class="upload-demo"

drag

action="/"

multiple

:http-request="handleHttpRequest"

:on-remove="handleRemoveFile">

<el-icon class="el-icon--upload"><upload-filled /></el-icon>

<div class="el-upload__text">

Drag a file here or <em>click here to upload</em>

</div>

</el-upload>

</el-card>

</template>
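The resume logic in `handleUpload` boils down to two questions: which part numbers are still missing, and which byte range does each one cover. The sketch below isolates that calculation as a pure function. `planPendingParts` is a hypothetical name that does not appear in the component, and the shape of `uploadedParts` (`partNumber`, `size`) is assumed to mirror the part list returned by the server.

```javascript
// Compute which parts still need uploading and the byte range of each one.
// `uploadedParts` is assumed to look like [{ partNumber, size }, ...].
function planPendingParts(totalSize, chunkSize, uploadedParts = []) {
  const chunkNum = Math.ceil(totalSize / chunkSize);
  const done = new Set(uploadedParts.map((part) => Number(part.partNumber)));
  const pending = [];
  for (let partNumber = 1; partNumber <= chunkNum; partNumber++) {
    if (done.has(partNumber)) continue; // already stored by an earlier attempt
    const start = chunkSize * (partNumber - 1);
    const end = Math.min(start + chunkSize, totalSize); // the last part may be shorter
    pending.push({ partNumber, start, end });
  }
  return pending;
}

// A 12 MiB file in 5 MiB chunks has 3 parts; part 1 was uploaded earlier.
const MiB = 1024 * 1024;
console.log(planPendingParts(12 * MiB, 5 * MiB, [{ partNumber: 1, size: 5 * MiB }]));
```

Each returned range can be passed straight to `file.slice(start, end)`, which is what the component does for every part it queues.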

Front-end API file

import axios from 'axios'

import axiosExtra from 'axios-extra'

const baseUrl = 'http://172.16.10.74:10003/dsj-file'

const http = axios.create({

baseURL: baseUrl,

headers: {

'Dsj-Auth': 'bearer <access-token>' // placeholder: obtain the token at runtime instead of hard-coding a real one

}

})

const httpExtra = axiosExtra.create({

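maxConcurrent: 5, // at most 5 concurrent requests

queueOptions: {

retry: 3, // retry a failed request up to 3 times

retryIsJump: false // whether to retry immediately; otherwise the retry is appended to the end of the request queue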

}

})

http.interceptors.response.use(response => {

return response.data

})

/**

* Get the unfinished task for a file by its md5

* @param identifier file md5

* @returns {Promise<AxiosResponse<any>>}

*/

const taskInfo = (identifier) => {

return http.get(`/parallel-upload/${identifier}`)

}

/**

* Initialize a chunked upload task

* @param identifier file md5

* @param fileName file name

* @param totalSize file size

* @param chunkSize chunk size

* @returns {Promise<AxiosResponse<any>>}

*/

const initTask = ({identifier, fileName, totalSize, chunkSize}) => {

return http.post('/parallel-upload/init-task', {identifier, fileName, totalSize, chunkSize})

}

/**

* Get the pre-signed upload URL for a part

* @param identifier file md5

* @param partNumber part number

* @returns {Promise<AxiosResponse<any>>}

*/

const preSignUrl = ({identifier, partNumber}) => {

return http.get(`/parallel-upload/${identifier}/${partNumber}`)

}

/**

* Merge the parts

* @param identifier

* @returns {Promise<AxiosResponse<any>>}

*/

const merge = (identifier) => {

return http.post(`/parallel-upload/merge/${identifier}`)

}

export {

taskInfo,

initTask,

preSignUrl,

merge,

httpExtra

}
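Both the upload component and `httpExtra` rely on the same idea: run at most five requests at a time and retry failures up to three times. The sketch below shows that idea in plain JavaScript without any library. `runWithLimit` is a hypothetical helper, not the API of `promise-queue-plus` or `axios-extra`, and unlike `retryIsJump: false` it retries a failed task immediately instead of re-queuing it at the tail.

```javascript
// Run async task functions with at most `limit` in flight. Each task gets
// up to `retries` extra attempts. Resolves with the list of final failures.
async function runWithLimit(tasks, limit = 5, retries = 3) {
  const failures = [];
  let next = 0;

  async function worker() {
    while (next < tasks.length) {
      const index = next++; // claim the next task (JS is single-threaded, so no race)
      let attempt = 0;
      for (;;) {
        try {
          await tasks[index]();
          break; // success, move on to the next task
        } catch (error) {
          if (attempt++ >= retries) {
            failures.push({ index, error }); // retries exhausted
            break;
          }
        }
      }
    }
  }

  // Start `limit` workers; each one processes tasks one at a time.
  const workers = Array.from({ length: Math.min(limit, tasks.length) }, worker);
  await Promise.all(workers);
  return failures;
}

// A task that fails twice before succeeding still ends up with no failure.
let calls = 0;
const flaky = async () => {
  if (++calls < 3) throw new Error('temporary');
};
runWithLimit([flaky, async () => {}], 2, 3).then((failures) => {
  console.log(failures.length);
});
```

Returning the failures instead of rejecting matches how the component treats its queue: it collects rejected parts and only decides after the queue ends whether the merge may run.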

MinIO configuration for the file service

# nested under dsj because MinioProperties binds the prefix "dsj.minio"
dsj:
  minio:
    endpoint: http://172.16.10.74:9000
    address: http://172.16.10.74
    port: 9000
    secure: false
    access-key: minioadmin
    secret-key: XXXXXXXXXX
    bucket-name: gpd
    internet-address: http://XXXXXXXXX:9000

MinioProperties.java

package com.dsj.prod.file.biz.properties;

import lombok.Data;

import org.springframework.boot.context.properties.ConfigurationProperties;

import org.springframework.cloud.context.config.annotation.RefreshScope;

import org.springframework.stereotype.Component;

import java.util.List;

@Data

@RefreshScope

@Component

@ConfigurationProperties(prefix = "dsj.minio")

public class MinioProperties {

/**

* The constant endpoint.

*/

public String endpoint;

/**

* The constant address.

*/

public String address;

/**

* The constant port.

*/

public String port;

/**

* The constant accessKey.

*/

public String accessKey;

/**

* The constant secretKey.

*/

public String secretKey;

/**

* The constant bucketName.

*/

public String bucketName;

/**

* The constant internetAddress.

*/

public String internetAddress;

/**

* The Limit file extension.

* doc docx xls xlsx images pdf

*/

public List<String> limitFileExtension;

}

Controller: ParallelUploadController.java

package com.dsj.prod.file.biz.controller;

import com.dsj.plf.arch.tool.api.R;

import com.dsj.prod.file.api.dto.parallelUpload.InitTaskParam;

import com.dsj.prod.file.api.dto.parallelUpload.TaskInfoDTO;

import com.dsj.prod.file.api.entity.ParallelUploadTask;

import com.dsj.prod.file.biz.service.ParallelUploadTaskService;

import com.github.xiaoymin.knife4j.annotations.ApiOperationSupport;

import io.swagger.annotations.Api;

import io.swagger.annotations.ApiOperation;

import org.springframework.web.bind.annotation.*;

import javax.annotation.Resource;

import javax.validation.Valid;

import java.util.HashMap;

import java.util.Map;

@Api(value = "Chunked file upload API", tags = "Chunked file upload API")

@RestController

@RequestMapping("/parallel-upload")

public class ParallelUploadController {

@Resource

private ParallelUploadTaskService sysUploadTaskService;

@ApiOperationSupport(order = 1)

@ApiOperation(value = "Get upload progress", notes = "Pass identifier: the file md5")

@GetMapping("/{identifier}")

public R<TaskInfoDTO> taskInfo(@PathVariable("identifier") String identifier) {

TaskInfoDTO result = sysUploadTaskService.getTaskInfo(identifier);

return R.data(result);

}

/**

* Create an upload task

*

* @param param the param

* @return result

*/

@ApiOperationSupport(order = 2)

@ApiOperation(value = "Create an upload task", notes = "Pass param")

@PostMapping("/init-task")

public R<TaskInfoDTO> initTask(@Valid @RequestBody InitTaskParam param) {

return R.data(sysUploadTaskService.initTask(param));

}

@ApiOperationSupport(order = 3)

@ApiOperation(value = "Get the pre-signed upload URL for a part", notes = "Pass identifier (file md5) and partNumber (part index)")

@GetMapping("/{identifier}/{partNumber}")

public R preSignUploadUrl(@PathVariable("identifier") String identifier, @PathVariable("partNumber") Integer partNumber) {

ParallelUploadTask task = sysUploadTaskService.getByIdentifier(identifier);

if (task == null) {

return R.fail("Upload task does not exist");

}

Map<String, String> params = new HashMap<>();

params.put("partNumber", partNumber.toString());

params.put("uploadId", task.getUploadId());

return R.data(sysUploadTaskService.genPreSignUploadUrl(task.getBucketName(), task.getObjectKey(), params));

}

@ApiOperationSupport(order = 4)

@ApiOperation(value = "Merge parts", notes = "Pass identifier: the file md5")

@PostMapping("/merge/{identifier}")

public R merge(@PathVariable("identifier") String identifier) {

sysUploadTaskService.merge(identifier);

return R.success("Merge succeeded");

}

}
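The third endpoint adds `partNumber` and `uploadId` as request parameters, so they end up in the query string of the pre-signed URL the browser later sends its `PUT` to. The sketch below reads them back on the client, which can help when debugging a failing part. `describePartUrl` is a hypothetical helper, and the example URL (host, object key, upload ID, and the shortened signature parameter) is invented for illustration.

```javascript
// Read the multipart-related query parameters from a pre-signed part URL.
function describePartUrl(preSignedUrl) {
  const url = new URL(preSignedUrl);
  return {
    host: url.host,
    objectPath: decodeURIComponent(url.pathname),
    partNumber: Number(url.searchParams.get('partNumber')),
    uploadId: url.searchParams.get('uploadId'),
  };
}

// Hypothetical URL for illustration only; a real one carries full signature parameters.
const example =
  'http://minio.example.com:9000/gpd/2023-09-01/demo.zip' +
  '?partNumber=2&uploadId=abc123&X-Amz-Expires=600';
console.log(describePartUrl(example));
```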

Service interface: ParallelUploadTaskService.java

package com.dsj.prod.file.biz.service;

import com.baomidou.mybatisplus.extension.service.IService;

import com.dsj.prod.file.api.dto.parallelUpload.InitTaskParam;

import com.dsj.prod.file.api.dto.parallelUpload.TaskInfoDTO;

import com.dsj.prod.file.api.entity.ParallelUploadTask;

import java.util.Map;

/**

* Service interface for the chunked-upload task record table (ParallelUploadTask)

*

* @since 2022-08-22 17:47:30

*/

public interface ParallelUploadTaskService extends IService<ParallelUploadTask> {

/**

* Get the chunked-upload task by its md5 identifier

* @param identifier

* @return

*/

ParallelUploadTask getByIdentifier (String identifier);

/**

* Initialize a task

*/

TaskInfoDTO initTask (InitTaskParam param);

/**

* Get the file URL

* @param bucket

* @param objectKey

* @return

*/

String getPath (String bucket, String objectKey);

/**

* Get the upload progress

* @param identifier

* @return

*/

TaskInfoDTO getTaskInfo (String identifier);

/**

* Generate a pre-signed upload URL

* @param bucket bucket name

* @param objectKey object key

* @param params extra request parameters

* @return

*/

String genPreSignUploadUrl (String bucket, String objectKey, Map<String, String> params);

/**

* Merge the parts

* @param identifier

*/

void merge (String identifier);

}

Implementation: ParallelUploadTaskServiceImpl

package com.dsj.prod.file.biz.service.impl;

import cn.hutool.core.date.DateUtil;

import cn.hutool.core.util.IdUtil;

import cn.hutool.core.util.StrUtil;

import com.amazonaws.HttpMethod;

import com.amazonaws.services.s3.AmazonS3;

import com.amazonaws.services.s3.model.*;

import com.baomidou.mybatisplus.core.conditions.query.QueryWrapper;

import com.baomidou.mybatisplus.extension.service.impl.ServiceImpl;

import com.dsj.prod.file.api.constants.MinioConstant;

import com.dsj.prod.file.api.dto.parallelUpload.InitTaskParam;

import com.dsj.prod.file.api.dto.parallelUpload.TaskInfoDTO;

import com.dsj.prod.file.api.dto.parallelUpload.TaskRecordDTO;

import com.dsj.prod.file.api.entity.ParallelUploadTask;

import com.dsj.prod.file.biz.mapper.ParallelUploadMapper;

import com.dsj.prod.file.biz.properties.MinioProperties;

import com.dsj.prod.file.biz.service.ParallelUploadTaskService;

import lombok.extern.slf4j.Slf4j;

import org.springframework.http.MediaType;

import org.springframework.http.MediaTypeFactory;

import org.springframework.stereotype.Service;

import javax.annotation.Resource;

import java.net.URL;

import java.util.Date;

import java.util.List;

import java.util.Map;

import java.util.stream.Collectors;

/**

* Service implementation for the chunked-upload task record table (ParallelUploadTask)

*

* @since 2022-08-22 17:47:31

*/

@Slf4j

@Service("sysUploadTaskService")

public class ParallelUploadTaskServiceImpl extends ServiceImpl<ParallelUploadMapper, ParallelUploadTask> implements ParallelUploadTaskService {

@Resource

private AmazonS3 amazonS3;

@Resource

private MinioProperties minioProperties;

@Resource

private ParallelUploadMapper sysUploadTaskMapper;

@Override

public ParallelUploadTask getByIdentifier(String identifier) {

return sysUploadTaskMapper.selectOne(new QueryWrapper<ParallelUploadTask>().lambda().eq(ParallelUploadTask::getFileIdentifier, identifier));

}

@Override

public TaskInfoDTO initTask(InitTaskParam param) {

Date currentDate = new Date();

String bucketName = minioProperties.getBucketName();

String fileName = param.getFileName();

String suffix = fileName.substring(fileName.lastIndexOf(".") + 1);

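// "yyyy" is the calendar year; "YYYY" is the week-based year and gives wrong dates around New Year

String key = StrUtil.format("{}/{}.{}", DateUtil.format(currentDate, "yyyy-MM-dd"), IdUtil.randomUUID(), suffix);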

String contentType = MediaTypeFactory.getMediaType(key).orElse(MediaType.APPLICATION_OCTET_STREAM).toString();

ObjectMetadata objectMetadata = new ObjectMetadata();

objectMetadata.setContentType(contentType);

InitiateMultipartUploadResult initiateMultipartUploadResult = amazonS3.initiateMultipartUpload(new InitiateMultipartUploadRequest(bucketName, key)

.withObjectMetadata(objectMetadata));

String uploadId = initiateMultipartUploadResult.getUploadId();

ParallelUploadTask task = new ParallelUploadTask();

int chunkNum = (int) Math.ceil(param.getTotalSize() * 1.0 / param.getChunkSize());

task.setBucketName(minioProperties.getBucketName())

.setChunkNum(chunkNum)

.setChunkSize(param.getChunkSize())

.setTotalSize(param.getTotalSize())

.setFileIdentifier(param.getIdentifier())

.setFileName(fileName)

.setObjectKey(key)

.setUploadId(uploadId);

sysUploadTaskMapper.insert(task);

return new TaskInfoDTO().setFinished(false).setTaskRecord(TaskRecordDTO.convertFromEntity(task)).setPath(getPath(bucketName, key));

}

@Override

public String getPath(String bucket, String objectKey) {

return StrUtil.format("{}/{}/{}", minioProperties.getEndpoint(), bucket, objectKey);

}

@Override

public TaskInfoDTO getTaskInfo(String identifier) {

ParallelUploadTask task = getByIdentifier(identifier);

if (task == null) {

return null;

}

TaskInfoDTO result = new TaskInfoDTO().setFinished(true).setTaskRecord(TaskRecordDTO.convertFromEntity(task)).setPath(getPath(task.getBucketName(), task.getObjectKey()));

boolean doesObjectExist = amazonS3.doesObjectExist(task.getBucketName(), task.getObjectKey());

if (!doesObjectExist) {

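// Upload not finished: return the parts uploaded so far (listParts returns at most 1,000 parts per call, so larger uploads would need pagination)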

ListPartsRequest listPartsRequest = new ListPartsRequest(task.getBucketName(), task.getObjectKey(), task.getUploadId());

PartListing partListing = amazonS3.listParts(listPartsRequest);

result.setFinished(false).getTaskRecord().setExitPartList(partListing.getParts());

}

return result;

}

@Override

public String genPreSignUploadUrl(String bucket, String objectKey, Map<String, String> params) {

Date currentDate = new Date();

Date expireDate = DateUtil.offsetMillisecond(currentDate, MinioConstant.PRE_SIGN_URL_EXPIRE.intValue());

GeneratePresignedUrlRequest request = new GeneratePresignedUrlRequest(bucket, objectKey)

.withExpiration(expireDate).withMethod(HttpMethod.PUT);

if (params != null) {

params.forEach(request::addRequestParameter);

}

URL preSignedUrl = amazonS3.generatePresignedUrl(request);

return preSignedUrl.toString();

}

@Override

public void merge(String identifier) {

ParallelUploadTask task = getByIdentifier(identifier);

if (task == null) {

log.error("Upload task does not exist, identifier: {}", identifier);

throw new RuntimeException("Upload task does not exist");

}

log.info("Start merging parts, task id: {}", task.getId());

ListPartsRequest listPartsRequest = new ListPartsRequest(task.getBucketName(), task.getObjectKey(), task.getUploadId());

PartListing partListing = amazonS3.listParts(listPartsRequest);

List<PartSummary> parts = partListing.getParts();

if (!task.getChunkNum().equals(parts.size())) {

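// The number of uploaded parts does not match the recorded count, so the parts cannot be merged

log.error("Parts missing, task id: {}, uploaded parts: {}, recorded parts: {}", task.getId(), parts.size(), task.getChunkNum());

throw new RuntimeException("Parts missing, please upload again");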

}

CompleteMultipartUploadRequest completeMultipartUploadRequest = new CompleteMultipartUploadRequest()

.withUploadId(task.getUploadId())

.withKey(task.getObjectKey())

.withBucketName(task.getBucketName())

.withPartETags(parts.stream().map(partSummary -> new PartETag(partSummary.getPartNumber(), partSummary.getETag())).collect(Collectors.toList()));

CompleteMultipartUploadResult result = amazonS3.completeMultipartUpload(completeMultipartUploadRequest);

log.info("Parts merged, result: {}", result);

}

}
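`merge` only compares the number of uploaded parts with `chunkNum`. A slightly more informative check reports which part numbers are missing, so that only those need to be uploaded again. The sketch below is written in JavaScript to match the front-end code. `findMissingParts` is a hypothetical helper that is not part of the service above, and the `partNumber` field is assumed to match the part list the server returns.

```javascript
// Return the part numbers in 1..chunkNum that are absent from the listed parts.
function findMissingParts(chunkNum, listedParts) {
  const present = new Set(listedParts.map((part) => Number(part.partNumber)));
  const missing = [];
  for (let partNumber = 1; partNumber <= chunkNum; partNumber++) {
    if (!present.has(partNumber)) missing.push(partNumber);
  }
  return missing;
}

// Part 3 of 4 has not arrived yet.
console.log(findMissingParts(4, [{ partNumber: 1 }, { partNumber: 2 }, { partNumber: 4 }]));
```

An empty result means every part from 1 to `chunkNum` is present and the merge can proceed.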

Amazon S3 client configuration: AmazonS3Config.java

package com.dsj.prod.file.biz.config;

import com.amazonaws.ClientConfiguration;

import com.amazonaws.Protocol;

import com.amazonaws.auth.AWSCredentials;

import com.amazonaws.auth.AWSStaticCredentialsProvider;

import com.amazonaws.auth.BasicAWSCredentials;

import com.amazonaws.client.builder.AwsClientBuilder;

import com.amazonaws.regions.Regions;

import com.amazonaws.services.s3.AmazonS3;

import com.amazonaws.services.s3.AmazonS3ClientBuilder;

import com.dsj.prod.file.biz.properties.MinioProperties;

import org.springframework.context.annotation.Bean;

import org.springframework.context.annotation.Configuration;

import javax.annotation.Resource;

@Configuration

public class AmazonS3Config {

@Resource

private MinioProperties minioProperties;

@Bean(name = "amazonS3Client")

public AmazonS3 amazonS3Client () {

ClientConfiguration config = new ClientConfiguration();

config.setProtocol(Protocol.HTTP);

config.setConnectionTimeout(60000);

config.setUseExpectContinue(true);

AWSCredentials credentials = new BasicAWSCredentials(minioProperties.getAccessKey(), minioProperties.getSecretKey());

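// getName() returns the region id "cn-north-1"; name() would return the enum constant "CN_NORTH_1"

AwsClientBuilder.EndpointConfiguration end_point = new AwsClientBuilder.EndpointConfiguration(minioProperties.getEndpoint(), Regions.CN_NORTH_1.getName());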

AmazonS3 amazonS3 = AmazonS3ClientBuilder.standard()

.withClientConfiguration(config)

.withCredentials(new AWSStaticCredentialsProvider(credentials))

.withEndpointConfiguration(end_point)

.withPathStyleAccessEnabled(true).build();

return amazonS3;

}

}

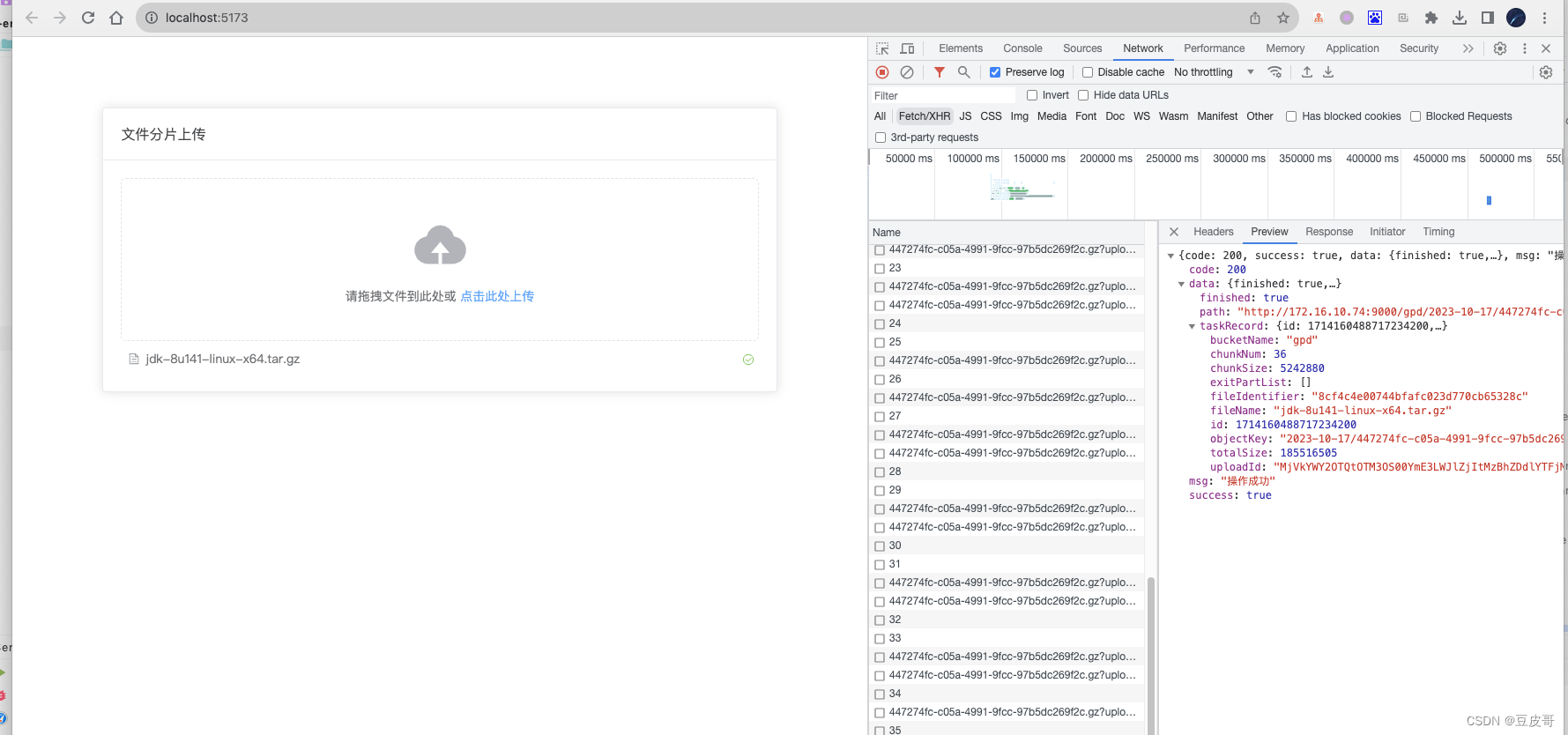

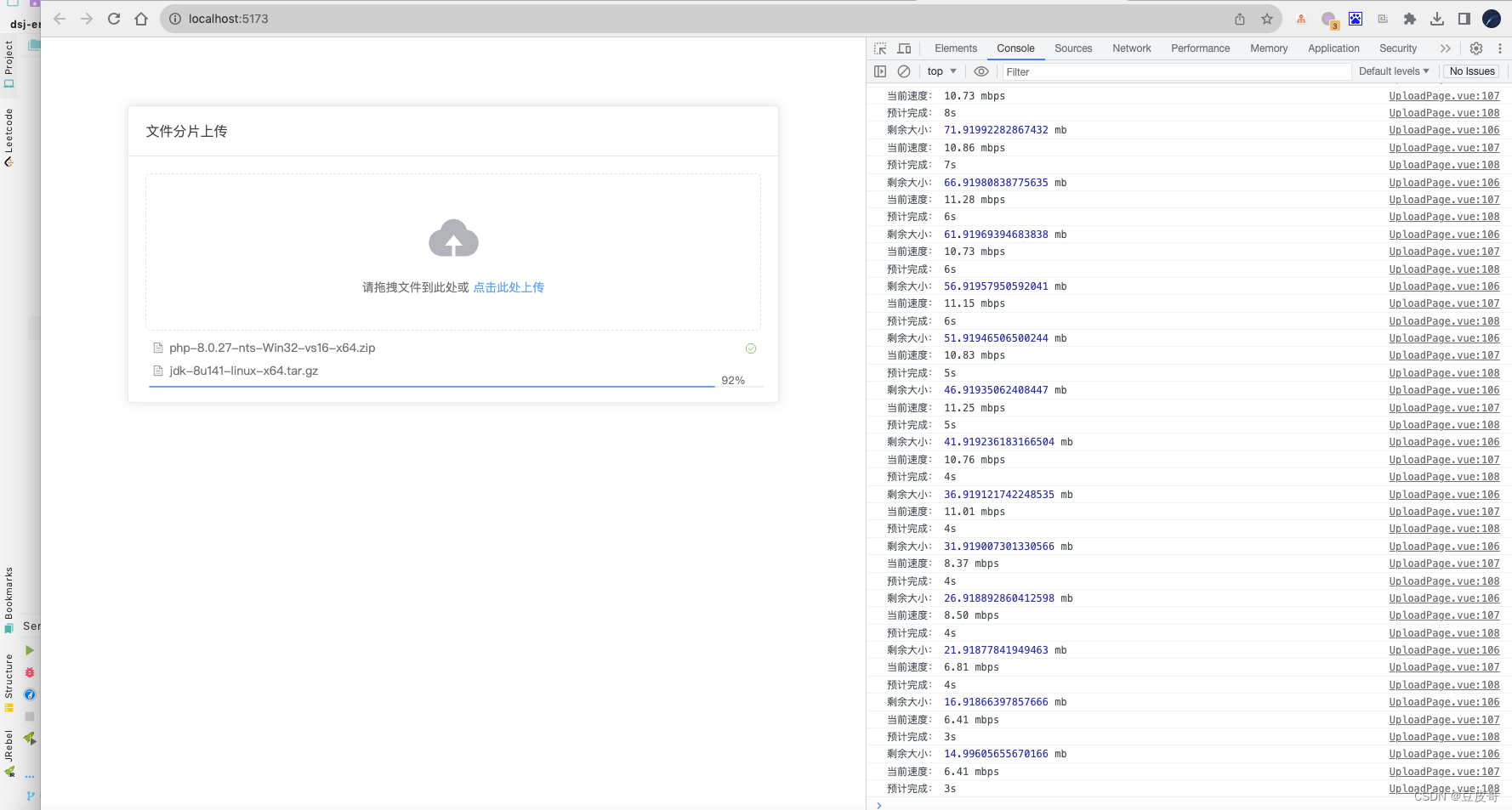

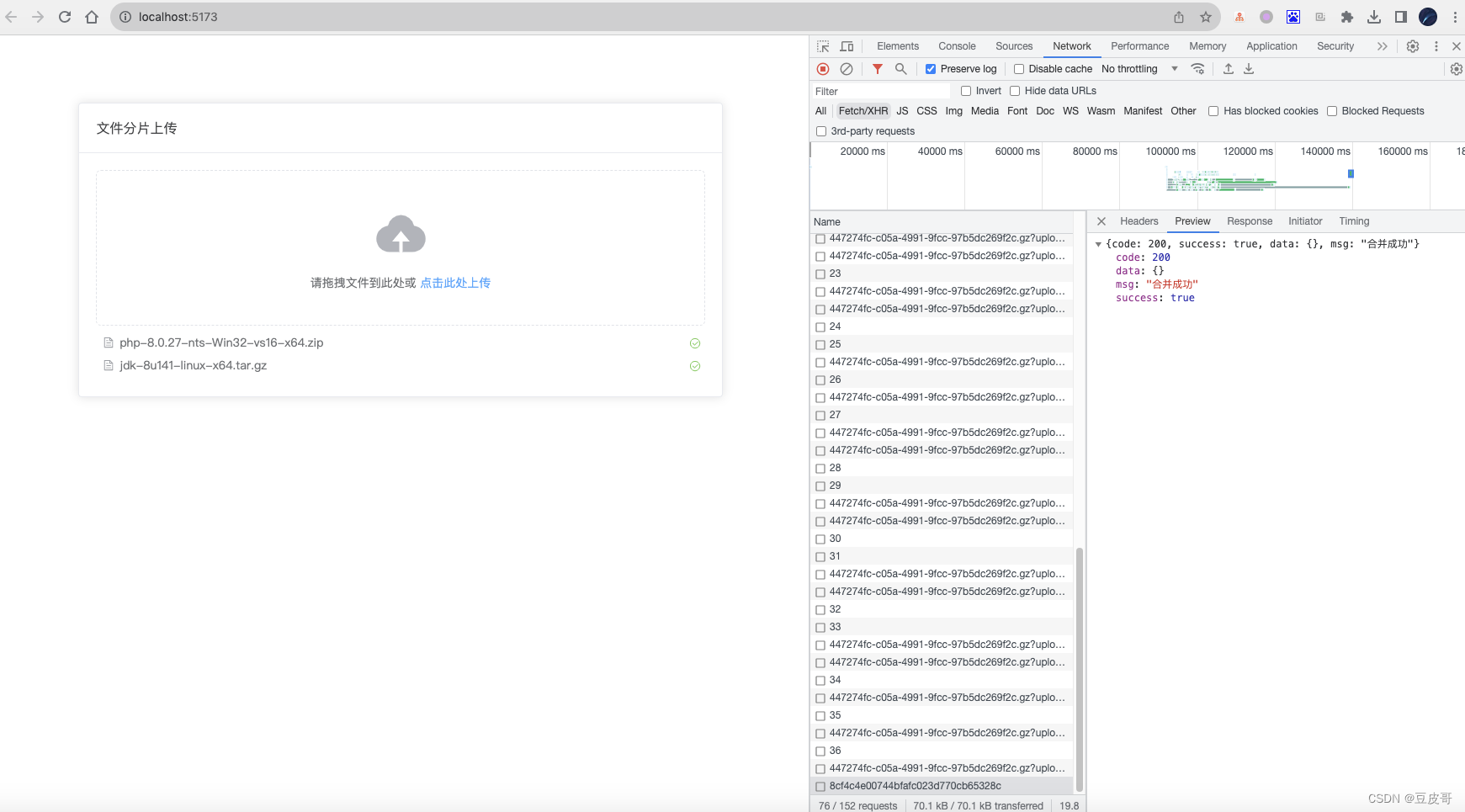

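Overall result preview

The general flow:

1. Initialize a task and obtain the expected file URL. (An md5 check determines whether the object storage already holds some chunks or the whole file. If chunks from an interrupted upload exist, the upload resumes with the parts that are still missing; if the whole file exists, its URL is returned directly.)

2. Upload the file in chunks

3. Merge the chunks

4. Finish and report that the merge succeeded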
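Step 1 depends on an md5 of the file, but the `../lib/md5` helper imported by the component is not shown in this article. The sketch below illustrates one way such a helper might work, hashing the file slice by slice so that it never has to be held in memory as a whole. It is a Node.js sketch under stated assumptions: `md5OfBlob` is a hypothetical name, the 2 MiB slice size is arbitrary, and Node's `crypto` module stands in for whatever incremental MD5 library the browser build uses.

```javascript
const { createHash } = require('node:crypto');

// Hash a Blob/File piece by piece so the whole file never sits in memory at once.
async function md5OfBlob(blob, sliceSize = 2 * 1024 * 1024) {
  const hash = createHash('md5');
  for (let start = 0; start < blob.size; start += sliceSize) {
    const piece = await blob.slice(start, start + sliceSize).arrayBuffer();
    hash.update(Buffer.from(piece)); // feed the next slice into the running hash
  }
  return hash.digest('hex');
}

md5OfBlob(new Blob(['hello'])).then((digest) => console.log(digest));
```

Because the digest depends only on the file's bytes, uploading the same file again yields the same identifier, which is what lets the server find the earlier task.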
