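There are many algorithms for license plate localization, so let's start with the simplest. A license plate in an image is generally treated as a rectangle, although depending on the camera angle it may also appear as a general quadrilateral. We can use the OpenCV sample C:/Program Files/OpenCV/samples/c.squares.c, an algorithm that searches an image for rectangles; with a few small modifications it can be used to locate license plates.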

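This sample first smooths the image with a pyramid down/up step. Then, for each color plane, it produces binary images either with Canny edge detection (first pass) or by thresholding (remaining passes), searches them for contours with cvFindContours, and finally decides which contours are rectangles based on the number of vertices after polygon approximation, the angles between adjacent edges, and the contour area. The result is shown below: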

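This algorithm finds the plate in some images, but against a complex, noisy background, or when the plate's gray level differs little from the background, it has difficulty locating the plate.

So we need a better localization algorithm. Below is the squares code: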

```c
#ifdef _CH_
#pragma package <opencv>
#endif

#ifndef _EiC
#include "cv.h"
#include "highgui.h"
#include <stdio.h>
#include <math.h>
#include <string.h>
#endif

int thresh = 50;
IplImage* img = 0;
IplImage* img0 = 0;
CvMemStorage* storage = 0;
CvPoint pt[4];
const char* wndname = "Square Detection Demo";

// helper function:
// finds the cosine of the angle between vectors
// from pt0->pt1 and from pt0->pt2
double angle( CvPoint* pt1, CvPoint* pt2, CvPoint* pt0 )
{
    double dx1 = pt1->x - pt0->x;
    double dy1 = pt1->y - pt0->y;
    double dx2 = pt2->x - pt0->x;
    double dy2 = pt2->y - pt0->y;
    return (dx1*dx2 + dy1*dy2)/sqrt((dx1*dx1 + dy1*dy1)*(dx2*dx2 + dy2*dy2) + 1e-10);
}

// returns a sequence of squares detected in the image.
// the sequence is stored in the specified memory storage
CvSeq* findSquares4( IplImage* img, CvMemStorage* storage )
{
    CvSeq* contours;
    int i, c, l, N = 11;
    CvSize sz = cvSize( img->width & -2, img->height & -2 );
    IplImage* timg = cvCloneImage( img ); // make a copy of the input image
    IplImage* gray = cvCreateImage( sz, 8, 1 );
    IplImage* pyr = cvCreateImage( cvSize(sz.width/2, sz.height/2), 8, 3 );
    IplImage* tgray;
    CvSeq* result;
    double s, t;

    // create an empty sequence that will contain points -
    // 4 points per square (the square's vertices)
    CvSeq* squares = cvCreateSeq( 0, sizeof(CvSeq), sizeof(CvPoint), storage );

    // select the maximum ROI in the image
    // with the width and height divisible by 2
    cvSetImageROI( timg, cvRect( 0, 0, sz.width, sz.height ));

    // down-scale and up-scale the image to filter out the noise
    cvPyrDown( timg, pyr, 7 );
    cvPyrUp( pyr, timg, 7 );
    tgray = cvCreateImage( sz, 8, 1 );

    // find squares in every color plane of the image
    for( c = 0; c < 3; c++ )
    {
        // extract the c-th color plane
        cvSetImageCOI( timg, c+1 );
        cvCopy( timg, tgray, 0 );

        // try several threshold levels
        for( l = 0; l < N; l++ )
        {
            // hack: use Canny instead of zero threshold level.
            // Canny helps to catch squares with gradient shading
            if( l == 0 )
            {
                // apply Canny with fixed thresholds (low 60, high 180)
                // and a 3x3 aperture; the slider value is not used here
                cvCanny( tgray, gray, 60, 180, 3 );
                // dilate the Canny output to close potential
                // holes between edge segments
                cvDilate( gray, gray, 0, 1 );
            }
            else
            {
                // apply a threshold if l != 0:
                // gray(x,y) = tgray(x,y) > level ? 255 : 0
                // the original sample uses a different level on each pass:
                //cvThreshold( tgray, gray, (l+1)*255/N, 255, CV_THRESH_BINARY );
                // this modified version always uses level 50
                cvThreshold( tgray, gray, 50, 255, CV_THRESH_BINARY );
            }

            // find contours and store them all as a list
            cvFindContours( gray, storage, &contours, sizeof(CvContour),
                            CV_RETR_LIST, CV_CHAIN_APPROX_SIMPLE, cvPoint(0,0) );

            // test each contour
            while( contours )
            {
                // approximate the contour with accuracy proportional
                // to the contour perimeter
                result = cvApproxPoly( contours, sizeof(CvContour), storage,
                                       CV_POLY_APPROX_DP, cvContourPerimeter(contours)*0.02, 0 );

                // square contours should have 4 vertices after approximation,
                // a relatively large area (to filter out noisy contours)
                // and be convex.
                // Note: the absolute value of the area is used because
                // the area may be positive or negative, depending on the
                // contour orientation
                if( result->total == 4 &&
                    fabs(cvContourArea(result,CV_WHOLE_SEQ)) > 1000 &&
                    cvCheckContourConvexity(result) )
                {
                    s = 0;
                    for( i = 0; i < 5; i++ )
                    {
                        // find the minimum angle between joint
                        // edges (maximum of cosine);
                        // cvGetSeqElem( result, 4 ) wraps around to vertex 0
                        if( i >= 2 )
                        {
                            t = fabs(angle(
                                (CvPoint*)cvGetSeqElem( result, i ),
                                (CvPoint*)cvGetSeqElem( result, i-2 ),
                                (CvPoint*)cvGetSeqElem( result, i-1 )));
                            s = s > t ? s : t;
                        }
                    }

                    // if the cosines of all angles are small
                    // (all angles are ~90 degrees) then write the quadrangle
                    // vertices to the resultant sequence
                    if( s < 0.3 )
                        for( i = 0; i < 4; i++ )
                            cvSeqPush( squares,
                                       (CvPoint*)cvGetSeqElem( result, i ));
                }

                // take the next contour
                contours = contours->h_next;
            }
        }
    }

    // release all the temporary images
    cvReleaseImage( &gray );
    cvReleaseImage( &pyr );
    cvReleaseImage( &tgray );
    cvReleaseImage( &timg );

    return squares;
}

// the function draws all the squares in the image
void drawSquares( IplImage* img, CvSeq* squares )
{
    CvSeqReader reader;
    IplImage* cpy = cvCloneImage( img );
    int i;

    // initialize the reader of the sequence
    cvStartReadSeq( squares, &reader, 0 );

    // read 4 sequence elements at a time (all vertices of a square)
    for( i = 0; i < squares->total; i += 4 )
    {
        CvPoint* rect = pt;
        int count = 4;

        // read 4 vertices
        memcpy( pt, reader.ptr, squares->elem_size );
        CV_NEXT_SEQ_ELEM( squares->elem_size, reader );
        memcpy( pt + 1, reader.ptr, squares->elem_size );
        CV_NEXT_SEQ_ELEM( squares->elem_size, reader );
        memcpy( pt + 2, reader.ptr, squares->elem_size );
        CV_NEXT_SEQ_ELEM( squares->elem_size, reader );
        memcpy( pt + 3, reader.ptr, squares->elem_size );
        CV_NEXT_SEQ_ELEM( squares->elem_size, reader );

        // draw the square as a closed polyline
        cvPolyLine( cpy, &rect, &count, 1, 1, CV_RGB(0,255,0), 3, CV_AA, 0 );
    }

    // show the resultant image
    cvShowImage( wndname, cpy );
    cvReleaseImage( &cpy );
}

void on_trackbar( int a )
{
    if( img )
        drawSquares( img, findSquares4( img, storage ) );
}

char* names[] = { "pic1.png", "pic2.png", "pic3.png",
                  "pic4.png", "pic5.png", "pic6.png", 0 };

int main(int argc, char** argv)
{
    int i, c;

    // create memory storage that will contain all the dynamic data
    storage = cvCreateMemStorage(0);

    for( i = 0; names[i] != 0; i++ )
    {
        // load the i-th image
        img0 = cvLoadImage( names[i], 1 );
        if( !img0 )
        {
            printf("Couldn't load %s\n", names[i] );
            continue;
        }
        img = cvCloneImage( img0 );

        // create a window and a trackbar (slider) attached to it, and set the callback
        // (in the original sample the slider sets the upper Canny threshold; the
        // modified findSquares4 above uses fixed thresholds, so moving it only re-runs detection)
        cvNamedWindow( wndname, 0 );
        cvCreateTrackbar( "canny thresh", wndname, &thresh, 1000, on_trackbar );

        // force the image processing
        on_trackbar(0);

        // wait for a key.
        // cvWaitKey also takes care of event processing
        c = cvWaitKey(0);

        // release both images
        cvReleaseImage( &img );
        cvReleaseImage( &img0 );

        // clear memory storage - reset free space position
        cvClearMemStorage( storage );

        if( c == 27 )
            break;
    }

    cvDestroyWindow( wndname );
    return 0;
}

#ifdef _EiC
main(1,"squares.c");
#endif
```

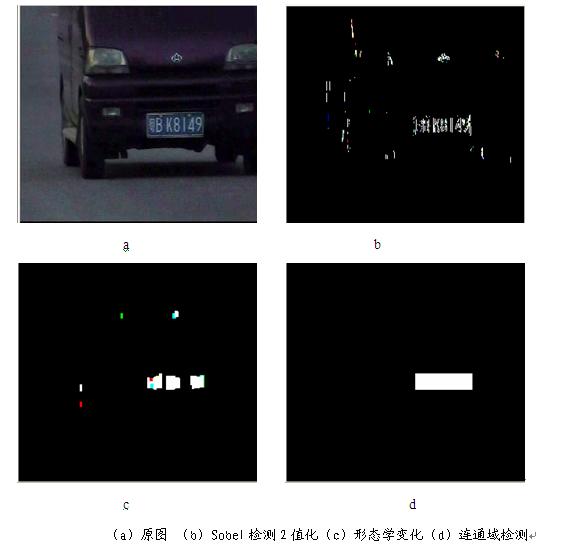

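The previous section described locating the plate with OpenCV's squares sample, and the results were not very satisfactory. There are many plate localization methods; here we start from the texture features of vehicle images, since the key to localization is finding features that distinguish the plate from the background. Looking at many vehicle images, we find that the car body and background mostly have horizontal textures, while the plate characters have vertical textures. Based on this texture feature we can pick out the plate position. Plate localization can be divided into the following steps: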

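1 Preprocessing

Preprocessing applies the filtering and gradient enhancement that later steps need, in order to suppress noise and enhance features in the vertical direction.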

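2 Vertical Sobel edge detection and binarization

General-purpose edge detectors return a great deal of irrelevant edge information. Here we apply the Sobel operator to detect vertical edges; because plate characters have vertical texture, this picks up the characters. The resulting edge image is then binarized to remove some noise and strengthen the character edges.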

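3 Morphological operations

Vehicle images also contain other vertical textures (the car body, headlights, etc.), so we need to remove this interference and connect adjacent character edges. This stage applies morphological operations to the image: a closing (dilation followed by erosion) and an opening (erosion followed by dilation). Dilation joins nearby edges into a single region, and erosion removes small fragmented edges (a 3×1 structuring element is recommended). After these operations the noise edges are filtered out and the plate region remains.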

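4 Screening

For most images the morphological operations give satisfactory results. However, some special background textures may leave edges that were not filtered out; large gaps between characters may split the plate region into several parts; and slogans or lettering on the car body may be mistaken for a plate. We therefore still need clustering and screening.

Choose a criterion for joining connected regions (for example, two regions overlap by more than 10 pixels vertically and are less than 10 pixels apart horizontally), then decide whether a region is a plate based on the plate's shape and size (width, height).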

The program's output is shown below:
