Natural Language Processing Specialization

Introduction

https://www.coursera.org/specializations/natural-language-processing

Certificate

Natural Language Processing with Attention Models

Course Certificate

本文是学习这门课 Natural Language Processing with Attention Models的学习笔记,如有侵权,请联系删除。

文章目录

- Natural Language Processing Specialization

- Natural Language Processing with Attention Models

- Week 03: Question Answering

- Week 3 Overview

- Transfer Learning in NLP

- ELMo, GPT, BERT, T5

- Reading: ELMo, GPT, BERT, T5

- Bidirectional Encoder Representations from Transformers (BERT)

- BERT Objective

- Reading: BERT Objective

- Fine tuning BERT

- Transformer: T5

- Reading: Transformer T5

- Multi-Task Training Strategy

- GLUE Benchmark

- Lab: SentencePiece and BPE

- Welcome to Hugging Face 🤗

- Hugging Face Introduction

- Hugging Face I

- Hugging Face II

- Hugging Face III

- Lab: Question Answering with HuggingFace - Using a base model

- Lab: Question Answering with HuggingFace 2 - Fine-tuning a model

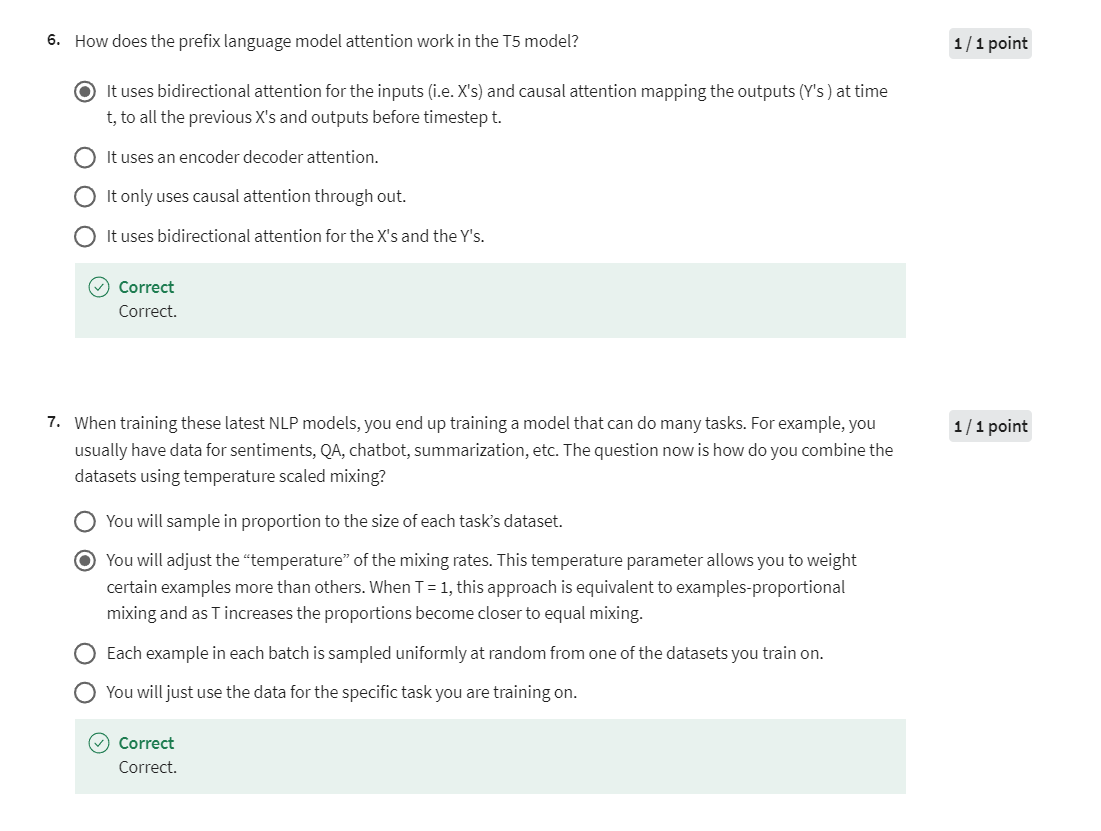

- Quiz: Question Answering

- Programming Assignment: Question Answering

- 后记

Week 03: Question Answering

Explore transfer learning with state-of-the-art models like T5 and BERT, then build a model that can answer questions.

Learning Objectives

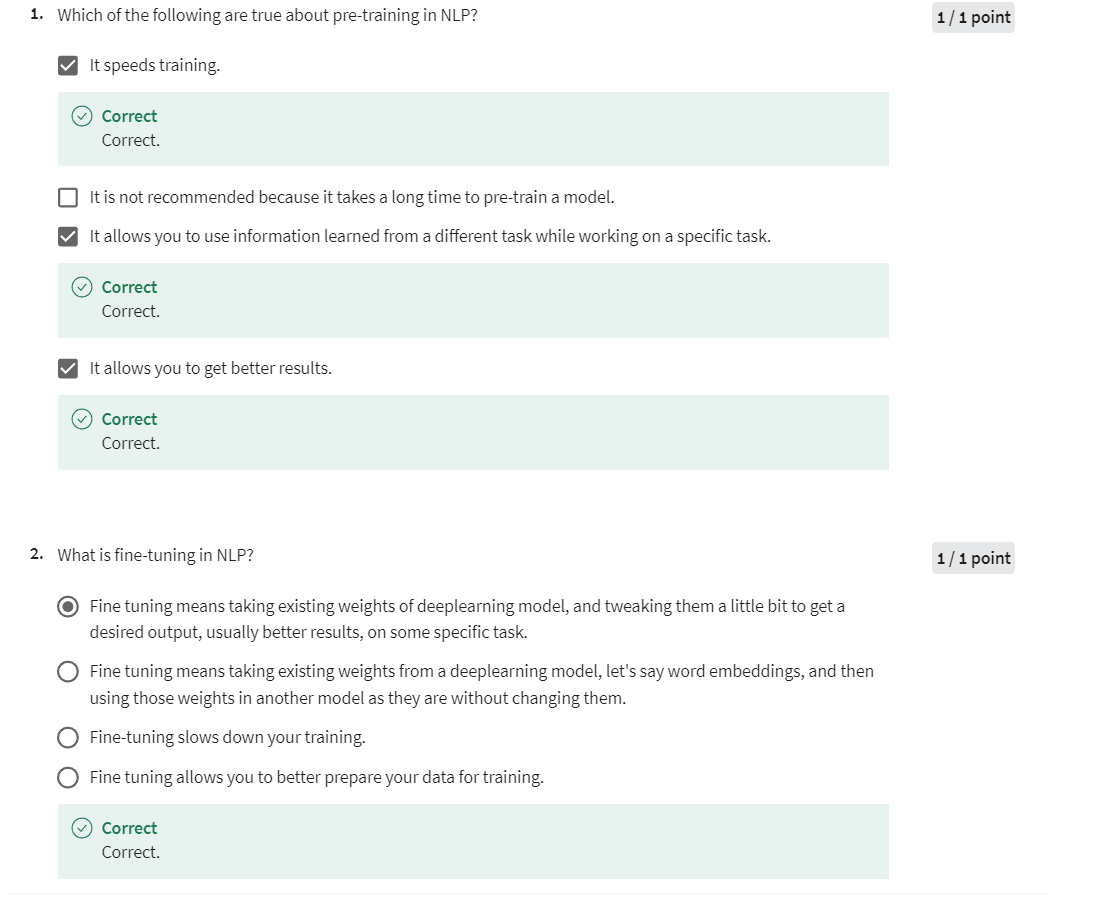

- Gain intuition for how transfer learning works in the context of NLP

- Identify two approaches to transfer learning

- Discuss the evolution of language models from CBOW to T5 and Bert

- Fine-tune BERT on a dataset

- Implement context-based question answering with T5

- Interpret the GLUE benchmark

Week 3 Overview

Good to see you again. In this week, you will learn

about transfer learning, which is a new concept

in this course. Transfer learning

allows you to get better results and

speeds up training. You’ll also be looking

at question answering. Let’s dive in. In Week 3 of Course 4, you’re going to cover many

different applications of NLP. One thing you are going to

look at is question answering. Given the question

and some context, can you tell us what the answer is going to be

inside that context? Another thing you’re going to

cover is transfer learning. For example, knowing some information by training something

in a specific task, how can you make use of that information and apply

it to a different task? You’re going to look at BERT, which is known as the Bidirectional

Encoder Representation which makes use of transformers. You’ll see how you can use bi-directionality to

improve performance. Then you’re going to

look at the T5 model. Basically, what this model

does, you can see here, it has several possible inputs. It could be a question,

you get an answer. It could be a review, and you’ll get the

rating over here. It’s all being fed

into one model.

Let’s look at

question answering. Over here you have context-based

question answering, meaning you take

in a question and the context and it

tells you where the answer is inside

that context over here. This is the highlighted

stuff which is the answer. Then you have closed book question answering

which only takes the question and it returns the answer without having

access to a context, so it comes up with

its own answer. Previously we’ve

seen how innovations in model architecture improve performance and we’ve also seen how data preparation could help. But over here, you’re going

to see that innovations in the way the training is being done also improves performance. In which case, you will see how transfer learning will

improve performance. This is the classical training that you’re used to seeing. You have a course review, this goes through a model, and let’s say you

predict the rating. Then you just predict

the rating the same way as you’ve

always been doing. Nothing changed here, this is just an overview of the classical training

that you’re used to.

Now, in transfer learning, let’s look at this example. Let’s say that you

have movie reviews and then you feed them into your model and you

predict a rating. Over here you have

the pre-train task, which is on movie reviews. Now, in training, you’re going to take the existing model

for movie reviews, and then you’re going to find two units or train it again, on course reviews, and you’ll predict the

rating for that review. As you can see over here, instead of initializing

the weights from scratch, you start with the

weights that you got from the movie reviews, and you use them as the starter points when training

for the course reviews. At the end, you do some

inference over here, and you do the inference the same way you’re used to doing. You just take the course review, you feed this into your model, and you get your prediction.

You can also use transfer

learning on different tasks. This is another

example where you feed in the ratings

and some review, and this gives you

sentiment classification. Then you can train it on a downstream task like

question answering, where you take the

initial weights over here and you train it on question

answering. When is Pi day. The model answers March 14th. Then you can ask them

model the same question. When’s my birthday over here? It does not know the answer. But this is just

another example of how you can use transfer

learning on different tasks.

Now we’re going to look at BERT, which makes use of

bi-directional context. In this case, you have

learning from deeplearning.ai, is like watching the sunset

with my best friend. Over here the context is

everything that comes before. Then let’s say you’re

trying to predict the next word, deeplearning.ai. Now, when doing bi-directional

representations, you’ll be looking at the

context from this side and from this side to

predict the middle word. This is one of the main

takeaways for bi-directionality.

Now let’s look at single

task versus multitask. Over here you have a single

model which takes in a review and then

predicts a rating. Over here you have

another model which takes in a question and

predicts an answer. This is a single task each, like one model per task. Now, what you can

do here with T5 is, it is the same

model that’s being used to take the review, predict the rating, and

then take the question, and predict the answer. Instead of having two

independent models, you end up having one model.

Let’s look at T5. Over here, the main takeaway is that the more data you have, generally the better

performance there is. For example, the English

Wikipedia dataset is around 13 gigabytes

compared to the C4, Colossal Clean Crawled Corpus

is about 800 gigabytes, which is what T5 was trained on. This is just to

give you like how much larger the C4 dataset is, when compared to the

English Wikipedia.

What are the desirable goals

for transfer learning? First of all, you want to reduce training time because you already had a pre-trained model. Hopefully, once you

use transfer learning, you’ll get faster convergence. It will also improve

predictions because you’ll learn a few things from

different tasks that might be helpful and useful for your currents predictions on

the task you’re training on. Finally, you might

require or need less data because your model

has already learned a lot from other tasks. If you have a smaller dataset, then transfer learning

might help you. You now know what

you’re about to learn. I’m very excited to show

you all these new concepts. In the next video, we’ll start by exploring

transfer learning.

Transfer Learning in NLP

This week, I’ll be talking about transfer learning with

the full transformer. I’ll also talk a

little bit about BERT, which is the Bidirectional

Encoder Representation for Transformers. Then I’ll talk about a special model known

as the T5 model, what you learned about. Now all of these concepts make

use of transfer learning. What is transfer learning?

Let me show you. You’ll now take a look at the

transfer learning options that you will have while

performing your NLP tasks. This is a quick recap where

you have your train data, goes into a model, then you have a prediction. Transfer learning will

come in two basic forms. The first one is using

feature-based learning, and the other one is

using fine-tuning. By feature-based, I mean things like word

vectors being learnt. Fine-tuning is you take an existing model,

existing weights, and then you tweak them a

little bit to make sure that they work on the specific

task you’re working on. There is pre-trained

data which makes use of labeled and

unlabeled data, let’s say you’re training your model on sentiments

for product reviews. Then you can use those

same weights to train your model on course reviews. Then the other thing

is pre-training task, which usually makes use

of language modeling. For example, you mask a word and you try to

predict what that word is, or you try to predict what

the next sentence would be.

Let’s look at

general-purpose learning, and this is something that

you’re already familiar with. Over here you have

I am because I’m learning and you’re trying

to predict the central word. The central word is

happy over here. You used a model known as the continuous

bag-of-words model. Or at least you got the

following embeddings. Now you can use

these embeddings as the inputs features and translation tasks to translate

from English to German.

Let’s look at a more

concrete example of feature-based

versus fine-tuning. Where you have word embeddings, put it in your model, you get a prediction. Then over here you get some type of new features

or new word embeddings. Then you feed them into a

completely different model. This gives you your prediction. Now, on the fine-tuning side, you have your embeddings, you feed it into your model, you get a prediction, and then you fine-tune

on this model, on the downstream task. Then you have some new inputs

on your new weight so that you fine-tune so you feed it

in and you get a prediction.

Let’s look at fine-tuning. This is a way to add

fine-tuning to your model. Let’s say you have movies and

you’re predicting one star, two stars, or three stars. You pre-trained and let’s say now you have course reviews. One way you can do this is you fix all of these weights

that you already have. Then you add a new

feed-forward network while keeping everything

else frozen here. Then you just tune on this new network

that you just added.

Data affects performance a lot. In this case, you have

data being fed into your model and you get

some neutral outcome. But as you have more data and you build a larger

model over here, then you get a much

better outcome. The more data you have, the better it is, and

the more data you have, the bigger the models

you can build will be able to capture the task

you’re trying to predict on.

Let’s look at labeled

versus unlabeled data. Now, this is just

a graphic example that tells you that usually you’ll have way more

unlabeled text data than labeled text data. Here’s an example. In pre-training you

have no labels. You feed this into your model. Then in downstream task, you could have something like, what day is Pi day, feed this into your model

and you get the March 14th. Remember, these are the word vectors we’re talking about.

Which tasks work

with unlabeled data? This is a self-supervised task, so you have the unlabeled data, and then you create

input features. You create targets or labels. This is how it works. You have unlabeled data, learning from

deep-learning AI is like watching the sunset

with my best friend. You create the inputs

and then blank. The sunset with my best blank. You’re trying to predict friend. You feed this into your model. You get your prediction. The target is friend, here prediction goes in. You have the loss

here and then you use this loss to

update your model. This is basic language modeling that you’ve already seen before.

Let’s look at fine-tuning a

model in the downstream task. You have your model here. You did some pre-training

on it either by masking words or predicting

the next sentence. Then use this model to train

it on downstream tasks. You can use this model, fine-tune it on translation or summarization, or

question answering.

Here’s a summary of what

you’ve seen so far. Usually you have

some train data, you have the model,

you make a prediction. We use transfer learning to get feature-based examples

or fine-tuning. Feature-based like word

vectors or word embeddings and fine-tuning is something that’s you can do on downstream tasks. Then we can also use labeled data and unlabeled

data to help us. You can train something on a different task on a

different data set. and that will also

help a little bit. Then you can use

pre-training task like language modeling

or masked words or next sentence prediction. You have now seen some

advantages of transfer learning.

ELMo, GPT, BERT, T5

I’ll show you a

chronological order of when the models

were discovered. We will also see the advantages and the disadvantages

of each model. Let’s start. This is a quick outline where you can see we start here with

continuous bag of words model, then we got ELMo, then we got GPT, then we got BERT AND finally we ended up having

T5 and so more. Like over here we’re

going to end up having many more models coming

up, hopefully soon. This is not a

complete history of all relevant models

and research findings, but it’s useful to see

what problems arise with each model and what

problems each model solves. Let’s look at context over here. Let’s say we have

the word right. Ideally, we want to see

what this word means. We can look at the

context before it. Then we can also look at

the context after it. That’s how we’ve been able to train word

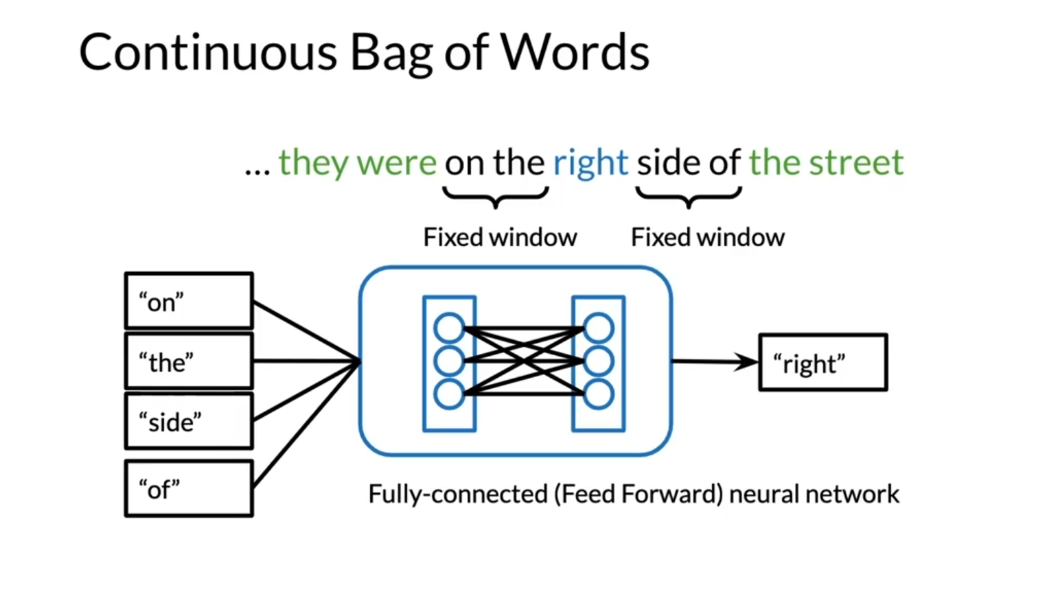

embeddings so far. The continuous bag

of words over here, you have the word right. Previously what

you’ve been doing, you would take a fixed window, say two before and two

after, or three or four, whatever as CS is

for the window size. Then you’ll take the

corresponding words, feed them into a neural network, and predict the central word in this case, which is right. Now the issue over here is that, what if we wanted to look at

not only the fixed window, but all the words before

and all the words after.

How can we do that? If

you want more context. They were on D over here. This is the first left part of the sentence and then all the right parts

of the sentence. Instead of having

the fixed window, we want to add the streets

or history for example. To use all of the context words, what researchers have done, they explored the

following using RNN. They would use an RNN from

the right and from the left. Then they would have a

bi-directional LSTM, which is a version of

recurrent neural network. You feed both of them in. Then you can predict

the center word right. That gives you the word

embedding for the word, right.

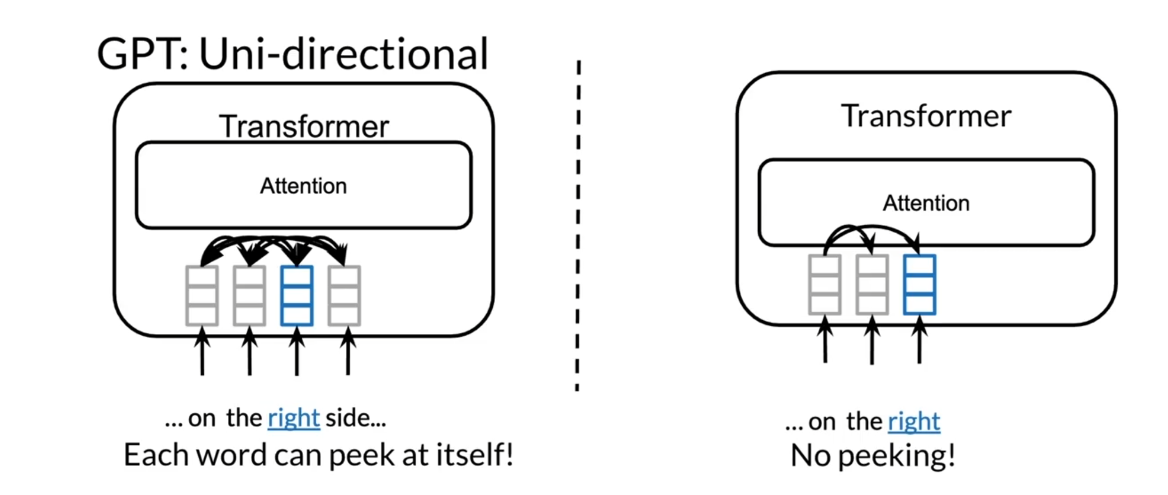

Now, open AI GPT what it did. We had transformer, the encoder decoder architecture that

you’re familiar with. Then we ended up having

this GPT which makes use of a decoder of stacks only. In this case we only

have one decoder, but you can have several

decoders in the picture. ELMo made use of RNNs or LSTMs. We would use these models to

predict the central word.

Why not use a

bi-directional model? In this case, this

is the transformer, and you can see that in

the transformer over here, each word can peek at itself. If we were to use it for under, right, you can only

look at itself. But the issue is that

you cannot peek forward. Remember, you’ve seen

this in causal attention where you don’t look

forward you only look at the previous ones.

BERT came and helped

us solve this problem. This is a recap. Transformers, encoder decoder, GPT makes use of decoders and BERTs makes use of encoders. Over here you have

the Legislature believed that they were on

the blank side of history, so they changed the law. Over here we can make use of bi-directional encoder

representations from transformers and this will

help us solve this issue.

This is an example

of a transformer plus bi-directional context. You feed this into your model and then you get right and of. Because of this,

you’re able to look at the sentence from

the beginning and from the end and make use of the context to predict

the corresponding words. If we’re to look at

words to sentences, meaning instead of trying

to predict just the word, we’ll try to predict what

the next sentence is. Given the sentence over here, the legislators believed that they were on the right

side of history. Is this the next sentence or

is this the next sentence? You have a sentence A and then you try to predict

the next sentence B. In this case it’s obviously

so they changed the law.

BERT pre-training tasks makes use of multi-mask

language modeling. The same thing that

you’ve seen before and it makes use of the next

sentence prediction. It takes two sentences

and it predicts whether it’s a yes

meaning sentence two follow sentence one, or sentence B follow

sentence A or not.

BERT(Bidirectional Encoder Representations from Transformers)预训练模型在预训练过程中使用了两种任务:

-

掩码语言建模(Masked Language Modeling,MLM):在这个任务中,BERT会随机地将输入序列中的一些单词标记为

[MASK],然后尝试根据上下文预测这些被掩码的单词。这个任务可以让模型学习到单词之间的语义关系和上下文信息。 -

下一句预测(Next Sentence Prediction,NSP):在这个任务中,BERT会接收一对句子作为输入,然后预测第二个句子是否是第一个句子的下一个句子。这个任务可以帮助模型学习到句子之间的逻辑关系和连贯性。

通过这两种任务的预训练,BERT模型可以学习到丰富的语言表示,从而在下游任务中取得良好的效果,例如问答、文本分类和命名实体识别等任务。

Let’s look at encoder

versus encoder decoder. Over here you have

the transformer, which had the encoder

and the decoder stack. Then you had GPT, which just had decoder stack, and then BERT, just

the encoder stack. T5 tested the

performance when using the encoder decoder as in the

original transformer model. The researchers

found that the model performed better when it contained both the encoder

and the decoder stacks.

Let’s look at the multi-task

training strategy over here so you have studying

with deeplearning.ai was. It’s being fed into the model and it gives you a

five star rating. Hopefully it’s a

five star rating. Then you have a question and

then you get the answer. But the question here is, how do you make sure that the model knows which

task it’s performing in? Because you can feed

in a review over here. How do you know

that it’s not going to return an answer instead? How do you know it’s

going to return a rating? Or if you feed in a question, how do you know it’s

not going to return some numerical outputs or some text version of

a numerical output? Let’s see how to do this. Over

here is an example where, let’s say you’re trying

to classify whether this is five stars or four

stars or three stars. You append the string,

classify for example. Then it classifies it

and you get five stars. Let’s say you want to summarize, so you add the string

summarize colon. It goes into the model. It’s automatically identifies

that you’re trying to summarize and it says

that it was all right. You have a question you

append question string. It knows that it’s a question

and it returns the answer. Now, this might not be the exact text that

you’ll find in the paper, but it gives you the

overall sense of the idea.

T5(Text-to-Text Transfer Transformer)是一种基于Transformer架构的通用文本生成模型,其预训练任务是文本到文本的转换。具体来说,T5的预训练任务是将输入文本转换为目标文本,可以是生成式任务(如翻译、摘要生成等)或分类任务(如文本分类、命名实体识别等)。

T5的预训练过程包括以下步骤:

-

输入-输出对构建:从大规模文本数据中构建输入-输出对,即将输入文本和目标文本组成的文本对。这些文本对可以是原始文本和重写文本、问题和答案等。

-

文本转换模型:使用Transformer架构构建文本转换模型,该模型由编码器和解码器组成。编码器将输入文本编码为上下文表示,解码器使用这些表示生成目标文本。

-

预训练任务:使用文本对作为输入-输出对,通过最大似然估计(MLE)或其他适当的损失函数来训练模型。模型的目标是最大化生成目标文本的概率。

T5的设计使其适用于各种文本生成任务,通过微调预训练模型,可以在特定的下游任务上取得良好的性能。

In summary, you’ve seen the continuous bag

of words model, which makes use of a

fixed context window. You’ve seen ELMo, which makes use of a

bi-directional LSTM. You’ve seen GPT, which

is just a decoder stack, and you can see

uni-directional in context. Then you’ve seen BERT, which makes use of bi-directional encoder

representation from the transformer, and it also makes use of multi-mask language modeling and next sentence prediction. Then you’ve seen the

T5 which makes use of the encode decoder

stack and also makes use of mask and

multi-task training. You have now seen an

overview of the models. You have seen how the text

to text model has a prefix. With the same model, you

can solve several tasks. In the next video, we will be looking at the birth

model in more detail, also known as the Bidirectional Encoder representation

for Transformers.

Reading: ELMo, GPT, BERT, T5

The models mentioned in the previous video were discovered in the following order.

In CBOW, you want to encode a word as a vector. To do this we used the context before the word and the context after the word and we use that model to learn and create features for the word. CBOW however uses a fixed window C (for the context).

What ElMo does is, it uses a bi-directional LSTM, which is another version of an RNN and you have the inputs from the left and the right.

Then Open AI introduced GPT, which is a uni-directional model that uses transformers. Although ElMo was bi-directional, it suffered from some issues such as capturing longer-term dependencies, which transformers tackle much better.

After that, the Bi-directional Encoder Representation from Transformers (BERT) was introduced which takes advantage of bi-directional transformers as the name suggests.

Last but not least, T5 was introduced which makes use of transfer learning and uses the same model to predict on many tasks. Here is an illustration of how it works.

Bidirectional Encoder Representations from Transformers (BERT)

I’ll now teach you about Bidirectional Encoder

Representations for Transformers, or in short, just BERT. BERT is a model that makes

use of the transformer, but it looks at the inputs

from two directions. Let’s dive in and

see how this works. Today, you’re going

to learn about the BERT architecture

and then you’re going to understand how BERT

pre-training works and see what the inputs

are and the outputs are. What is BERT? BERT is the Bidirectional

Encoder representations from transformers, and it makes use of transfer

learning and pre-training. How does this work?

Usually starts with some inputs embeddings, so E_1, E_2, all the way to some

random number E_n. Then you go through some transformer blocks,

as you can see here. Each blue circle is

a transformer block, goes up furthermore and then you get your T_1, T_2, T_n. Basically, there

are two steps in BERT’s framework,

pre-training and fine-tuning. During pre-training,

the model is trained on unlabeled data over different

pre-training tasks, as you’ve already seen before. For fine tuning,

the BERT model is first initialized with a

pre-trained parameters, and all of the parameters

are fine-tuned using labeled data from

the downstream tasks. For example in the

figure over here, you get the corresponding

embeddings, you run this through a

few transformer blocks and then you make

the prediction.

We’ll discuss some

notation over here. First of all, BERT a multi-layer

bidirectional transformer. It makes use of

positional embeddings. The famous model is BERT’s base, which has 12 layers or

12 transformer blocks, 12 attention heads, and

110 million parameters. These new models that are coming out now like GPT-3 and so forth, they have way more

parameters and way more blocks and layers.

Let’s talk about pre-training. Before feeding the word

sequences to the BERT model, we mask 15 percent of the words. Then the training data generator chooses 15 percent of these positions at

random for prediction. Then FDI token is chosen, we replace the ith token with, one, the mask token, 80 percent of the time, and then, two, a random token 10 percent

of the time, and then, three, the unchanged with either token 10

percent of the time. In this case then Ti, what you’ve seen in

the previous slide, will be used to

predict the original token with cross entropy loss. In this case, this is known

as the masked language model. Over here we have,"

After school, Lucas does his blank in the

library," so maybe work, maybe homework one

of these words that your BERT model is

going to try to predict. To do so usually what you do, you just add a dense layer after the Ti token and use it to classify after the

encoder outputs. You just multiply

the outputs vectors by the embedding

matrix and then to transform them into a

vocabulary dimension and you add a

softmax at the end.

This is another

sentence, “After school, Lucas does his homework in

the library,” and then, “After school blank his

homework in the blank.” You have to predict Lucas does, and then library also. In summary, you choose 15 percent of the

tokens at random. You mask them 80

percent of the time, replace them with a random

token 10 percent of the time, or keep as is 10

percent of the time. Then notice that there could be multiple masked

spans in a sentence. You could mask several

words in the same sentence. In BERT, also next sentence prediction is

also used when pre-training. Given two sentences,

if it’s true, it’s means the two sentences

follow one another. Otherwise, they’re different, they don’t lie in the same

sequence of the text. You have now developed an

intuition for this model. You’ve seen that

BERT makes use of the next sentence prediction and masked language modeling. This allows the model to have a general sense of the language. In the next video, I’m going to formalize this and show you the loss

function for BERT. Please go onto the next video.

BERT Objective

I will be talking about

the BERT objective. You’ll see what you are

trying to minimize. Specifically, I’ll show you how you can combine

word embeddings, sentence embeddings, and

positional embeddings as inputs. Let’s take a look at

how you can do this. You’re going to learn how

BERT inputs are fed into the model and the

different types of inputs and their structures. Then you’re going to visualize

the output and finally, you’re going to learn

about the BERT objective. Formalizing the input, this is the BERT

input representation. You start with

position embeddings. They allow you to indicate

the position in the sentence of the word where each word is in the corresponding

sentence. So you have this. Then you have the

segment embeddings. They allow you to indicate

whether it’s a sentence A or sentence B because remember in BERT you also use next

sentence prediction. Then you have the

token embeddings or the input embeddings. You also have a CLS token, which is used to indicate the

beginning of the sentence, and a SEP token, which is used to indicate

the end of the sentence. Then what you do, you just take the sum of the

token embeddings, the segmentation embeddings, and the position embeddings, and then you get your new input.

Over here you can see you

have masked sentence A, you have masked sentence B, they go into tokens, and then you have the CLS token, which is a special

classification symbol added in front of every input. Then you have the SEP token, which is the special

separator token. You convert them into

the embeddings so then you get your

transformer blocks. Then you can see at the end

you get your T_1 to T_N, your T_1 prime to T_M prime. Each T_I embedding

will be used to predict the masked word

via a simple softmax. You have this C also, embedding, which can be used for next

sentence prediction.

Let’s look at the

BERT objective now. For the Multi-Mask

language model, you use a cross-entropy

loss to predict the word that’s being masked or the

words that are being masked, and then you add this

to a binary loss for the next sentence

prediction so given the two sentences do they

follow one another or not?

In summary, you’ve seen

the BERT objective and you’ve seen the model

inputs and outputs. In the next video, I will show you how you can fine-tune this

pre-trained model. Specifically, I’ll show

you how you can use it to your own tasks

for your own projects. Please go on to the next video.

Reading: BERT Objective

We will first start by visualizing the input.

The input embeddings are the sum of the token embeddings, the segmentation embeddings and the position embeddings.

The input embeddings: you have a CLS token to indicate the beginning of the sentence and a sep to indicate the end of the sentence

The segment embeddings: allows you to indicate whether it is sentence a or b.

Positional embeddings: allows you to indicate the word’s position in the sentence.

The C token in the image above could be used for classification purposes. The unlabeled sentence A/B pair will depend on what you are trying to predict, it could range from question answering to sentiment. (in which case the second sentence could be just empty). The BERT objective is defined as follows:

You just combine the losses!

If you are interested in digging deeper into this topic, we recommend you to look at this article.

Fine tuning BERT

Using BERT can get you state of the art

results on many tasks or problems. In this video, I’m going to show you

how you can fine tune this model so that you can get it to work

on your own data sets. Let’s take a look at how you can do this. So right now you’re going to

see how you fine tune BERT. So during pre training remember you

had sentence A and sentence B, and then you use next sentence prediction and use the mask tokens to predict the mask

tokens that you mask from each sentence. So that’s in pre training. Now if you want to go on to MNLI or

like hypothesis premise scenario, then instead of having sentence A and

sentence B, you’re going to feed in the hypothesis

over here, and the premise over here. For NER you going to feed in

the sentence A over here, and then the corresponding tags over here. For question answering,

you will have SQuAD, for example, you’ll have your question over here and

then your answer over here.

So visually what does this look like? So remember this image

from the BERT paper. So this is what ends up happening. Over here you have the question,

over here you’ll have the paragraph and this will give you like your answer,

that starts in the end for the answer. Then for NER again,

you’ll have the sentence and the correspondent named entities. For MNLI you’ll have the hypothesis and

then the premise and so forth.

So in summary, given the place

of sentence A and sentence B, you can fill it with a text

in parts four sentence A, and then say like a no symbol to say

that you’re trying to classify the text whether it’s for

sentiment analysis, like happy or sad. So that’s the only way to do it. Question passage for question answering. You could have hypothesis premise for

MNLI. You could have sentence with

the named entities for NER, you can have sentence and

a paraphrase of the sentence. You could have an article in

the summary and so forth. So this is just the inputs

into your BERT model. Now that you know how to fine tune

your model on classification tasks, question answering, summarization and

many more things, we’ll take it to the next level and introduce you

to a new model known as the T5. Please go onto the next video

to learn about the T5 model.

Transformer: T5

The T5 model could be used

on several NLP tasks and it also uses similar

training strategy to birds. Concretely, it makes use of transfer

learning and masked language modeling. The T5 model also uses

transformers when training. So let’s take a look at how you can use

this model in your own applications. >> So you’re going to understand how T5

works, then you’re going to recognize the different types of attention used and

see an overview of the model architecture. So T5 transformers known as

the text to text transformer and you can use it in classification,

you can use it for question answering to answer a question. You can use it for machine translation,

you can use it for summarization and you can use it for sentiments. And there are other applications

that you can use T5 for, but we’ll focus on this for now.

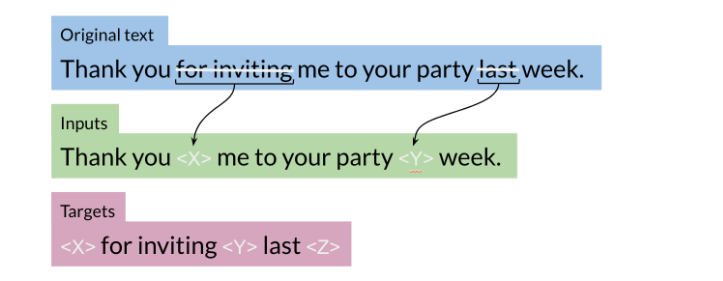

So the model architecture and the way the

pre training is done first of all is you have an original text, something like

this where you have thank you for inviting me to your party last week. So you mask the certain words,

so for inviting me last and then you replace it with these

tokens like brackets X, brackets Y. So brackets X corresponding to for

inviting and brackets why corresponding to last. And your targets are going to be

brackets X for inviting brackets Y last. And these tokens or these brackets like

they keep going in increments order, so then it’s bracket Z, then maybe

brackets A brackets B and so forth.

So each brackets corresponds to a certain

targets, the model architecture and a different transformer architectural

variants that we’re going to consider for the attention party are. So we start with the basic

encoder decoder representation. So you can see over here you have fully

visible attention in the encoder and then causal attention in the decoder and then you have the general encoder decoder

representation just as a notation. So light gray lines correspond

to causal masking and dark gray lines correspond to

the fully visible masking. So on the left, as I said again,

it’s the standard encoder decoder architecture in the middle over here

what we have we have the language model which consists of a single

transformer layers stack and it’s being fed the concatenation

of the input and the target. So it uses causal masking throughout

as you can see because they’re all great lines and you have X1

going inside over here, get a X2. X two goes into the model X three and

so forth. Now over here to the right we

have prefix language model which corresponds to allowing fully visible

masking over the inputs as you can see here in the dark arrows and

then causal masking in the rest. So as you can see over here

it’s doing causal masking.

So the model architecture which

uses encoder decoder stack, it has 12 transformer blocks each. So you can think of it as a dozen X and

then 220 million parameters. So in summary, you’ve seen prefix language

model attention, you’ve seen the model architecture for T5 and you’ve seen how

the pre training is done similar to birds, but we just use masked

language modeling here. >> You now have an overview

of the transformer model, you know how to train it and you’ve seen

that you can use it on multiple tasks. In the next video, I’ll be talking about

a few training strategies for this model. See you there.

Reading: Transformer T5

One of the major techniques that allowed the T5 model to reach state of the art is the concept of masking:

For example, you represent the “for inviting” with <X> and last with <Y> then the model predicts what the X should be and what the Y should be. This is exactly what we saw in the BERT loss. You can also mask out a few positions, not just one. The loss is only on the mask for BERT, for T5 it is on the target.

So we start with the basic encoder-decoder representation. There you have a fully visible attention in the encoder and then causal attention in the decoder. So light gray lines correspond to causal masking. And dark gray lines correspond to the fully visible masking.

In the middle we have the language model which consists of a single transformer layer stack. And it’s being fed the concatenation of the inputs and the target. So it uses causal masking throughout as you can see because they’re all gray lines. And you have X1 going inside, you get X2, X2 goes into the model and you get X3 and so forth.

To the right, we have prefix language model which corresponds to allowing fully visible masking over the inputs as you can see with the dark arrows. And then causal masking in the rest.

Multi-Task Training Strategy

Welcome. You will now see how you can train one

model to get you very good results on several NLP tasks. When training such a model, we usually

append a tag to notify whether we’re training on either machine translation,

question answering, summarization, sentiment,

or some other type of task. Let’s see how you can use this

in your own applications. >> Speaker 2: So the multitask

training strategy works as follow. So if you want to translate from English

to German, you append the prefix translate English to German, and it gives

you the corresponding translation. For cola sentence like,

the course is jumping well, and it says it’s not acceptable because

it’s grammatically incorrect. If you have two sentences and

you want to identify their similarity, you put in the stsp sentence 1, and then sentence 2 inside over here,

sentence 1, sentence 2. And then you get the corresponding score. If you want to summarize, you add

the summarize prefix to the article or the text you want to summarize and

it gives you the summary.

STSB(Semantic Textual Similarity Benchmark)是一个用于衡量两个文本片段之间语义相似性的基准数据集。在STSB数据集中,每个样本包含两个句子(sentence1和sentence2)以及一个相似性分数(similarity score),表示这两个句子之间的语义相似程度。

因此,当提到STSB数据集中的sentence1时,指的是数据集中的第一个句子,用于与sentence2进行比较以计算语义相似性分数。 STSB数据集通常用于训练和评估文本相似度模型,以便这些模型能够根据两个文本片段之间的语义相似程度对它们进行比较。

So this is how it works. Inputs and outputs format, so

for machine translation you just do translate blank to blank and

you add the sentence. To predict entailments, contradiction,

or whether it’s neutral, you would feed in something as follows,

so nnli premise, I hate pigeons, then the hypothesis, my feelings towards

pigeons are filled with animosity, and the target is entailment. So basically over here, this is going to

try to learn the overall structure of entailment, and

by feeding in the entire thing, the model would have full visibility

over the entire input, and then it would be tasked with marking a classification

by outputting the word entailment. So it is easy for the model to learn

to predict one of the correct class labels given the task prefix mnli,

in this case. If you know the main difference

between prefix LM and the BERT architecture is that

the classifier is integrated to the output layer of the transformer

decoder in the prefix LM. And over here you have

the Winograd schema, which is another way to predict whether a

pronoun, for example, over here, the city councilmen refused the demonstrators

a permit because they feared violence.

Winograd schema是一种用于测试自然语言处理系统理解普遍知识和推理能力的句子。这些句子由一个关键词和一个代词组成,根据关键词的不同,代词的指代也会不同,需要系统根据句子的上下文和常识进行正确的指代。

Winograd schema最早由计算机科学家Terry Winograd提出,被认为是对自然语言理解的一种较为困难的测试。这种测试通过评估系统是否能够正确理解和推理句子中的逻辑关系和语义含义来评估其智能程度。Winograd schema在评估人工智能系统的语言理解能力和推理能力方面具有重要意义,被广泛应用于自然语言处理和人工智能领域。

So you’re going to feed

this into your model, and then it will be tasked to predict

they as the city councilmen.

So for multi-task training strategy, this

is a table found in the original paper, and we’ll talk about what

the GLUE benchmark is, and these other benchmarks,

you can check them out. But for the purpose of this week,

we’ll be focusing on the GLUE benchmark, which would be the next video. And we’ll talk about adapter layers and

gradual unfreezing. But these are the scores reported,

and you can see that the T5 paper actually reaches states

of the art in many tasks. So how much data from

each task you train on?

So for the data training strategies,

there is examples proportional mixing, and in this case, what you end up doing,

you take an equal proportion, say, like 10% from each

data that you have. And if the first data sets, for

example, blue, you take 10% of this, then you’ll get 10% over here,

10% of this is larger, and 10% is just a random number I picked,

but you get the point. For the other type of data

training strategy is equal mixing, so regardless of the size of each data,

you take an equal sample. And then there is something in the middle

called temperature-scaled mixing, where you try to play with the parameters

to get something in between.

Now, we’ll talk about gradual

unfreezing versus adapter layers. So in gradual unfreezing,

what ends up happening, you unfreeze one layer at a time. So you say this is your neural network,

unfreezing the last one, you fine-tune using that, you keep the

others fixed, then unfreezing this one, and then you unfreeze this one, so

you keep unfreezing each layer. And for the adapter layers, you basically, add a neural network to each feed-forward

in each block of the transformer. And then, these new feed-forward networks, they’re designed so that the output

dimension matches the input. And this allows them to be inserted

without having any structural change. When fine-tuning only these

new adapter layers and the layer normalization

parameters are being updated.

So we’ll talk now a bit

more about fine-tuning. The approach that’s usually being used

here has the goal of training a single model that can simultaneously perform

many tasks at once, for example, the model, most of its parameters

are shared across all of the tasks. And we might train a single model on many

tasks, but when reporting performance, we can select a different checkpoint for

each task. So over here, the task could be

like translation, summarization, or masked language modeling. And they do the train in 2

to the power of 18 steps. >> Speaker 1: You learned about

multiple training strategies used for your transformer model. Now, that you know how to train this

model, you need the way to evaluate it. Concretely, you’ll be evaluating it using

the GLUE benchmark, which stands for General Language Understanding Evaluation

benchmark. See you there.

Gradual unfreezing是一种用于微调预训练语言模型的技术,特别是在处理少量标记数据时非常有效。在这种技术中,模型的不同层在微调过程中逐渐解冻(unfreeze),允许这些层的权重进行调整,以便适应特定任务的数据。

具体来说,gradual unfreezing通常遵循以下步骤:

-

冻结所有层:首先,将预训练模型中的所有层都冻结,使其权重在微调过程中不会更新。

-

解冻顶层:然后,只解冻模型的顶层(通常是最后几个层),允许这些层的权重在微调过程中进行调整,而其他层仍保持冻结状态。

-

微调顶层:使用少量的标记数据对解冻的顶层进行微调,以适应特定的任务。

-

逐层解冻:接下来,逐渐解冻模型的更多层,每次解冻一层或一组层,并使用更多的标记数据对解冻的层进行微调。

-

完全微调:最后,将所有层都解冻,并使用全部的标记数据对整个模型进行微调。

gradual unfreezing的优点在于,它可以有效地利用有限的标记数据来微调语言模型,避免在微调过程中过度调整预训练模型的权重,从而提高了微调的效果和泛化能力。

Adapter layers是一种用于在预训练语言模型中进行参数微调的轻量级结构。它们被设计用于在不修改整个模型架构的情况下向模型添加新任务。Adapter layers通常位于预训练模型的每个层之后,用于在特定任务上微调模型。

Adapter layers的主要特点包括:

-

轻量级:Adapter layers通常包含一个小的全连接层和一个非线性激活函数,参数数量相对较少,使得在添加新任务时不会显著增加模型的复杂度和计算成本。

-

复用预训练模型:通过使用Adapter layers,可以保持预训练模型的大部分参数不变,只微调添加的Adapter参数,从而可以更好地利用预训练模型学到的知识。

-

任务特定性:每个Adapter layer可以学习适应特定任务的表示,从而使得模型可以在不同的任务上共享预训练的表示,同时在各个任务上保持较好的性能。

-

可插拔性:由于Adapter layers的结构轻量级且模块化,可以方便地添加或删除Adapter layers来适应不同的任务需求,而无需重新训练整个模型。

总的来说,Adapter layers为在预训练语言模型上进行参数微调提供了一种灵活且高效的方法,可以有效地在各种自然语言处理任务上提高模型的性能。

GLUE Benchmark

I will show you one of the most commonly

used Benchmarks in natural language processing. Specifically, you’ll learn

about the GLUE Benchmark. This is used to train, evaluate, analyze NLP tasks. Let’s take a look at this

in some more detail. The glue benchmark stands for General Language

Understanding Evaluation. It is basically a collection

that is used to train, evaluate, analyze natural

language understanding systems. It has a lot of datasets

and each dataset has several genres and there are different sizes and

different difficulties. Some of them are, for example, used for co-reference

resolution. Others are just used for

simple sentiment analysis, others are used for question

answering, and so forth. It is used with a leaderboard, so people can use

the datasets and see how well their models

perform compared to others.

Tasks evaluated on could be, for example, sentence

grammatical or not. For example, if a sentence

makes sense or it does not, is going to be

used on sentiment, it could be used to

paraphrase some texts, it could be used on similarity,

on question duplicates. Whether a question is

answerable or not, whether it’s a contradiction,

whether it’s entailment. Also, for the Winograd schema, which is basically

trying to identify whether a pronoun refers to a certain noun

or to another noun.

It’s used to drive research. As I said, that

researchers usually use the GLUE as a benchmark. It is also a model agnostic, so it doesn’t matter

which model you use. Just evaluate on GLUE and see how well your

model performs. Finally, it allows you to make use of transfer learning

because you have access to several datasets and you can learn

certain things from different datasets

that will help you evaluate on a completely

new datasets within GLUE. You now know not only how to implement states

of the art models, but you also know how

to evaluate them. In the next video, I’ll be talking about

question answering and I’ll show you

how you can use these models to build a sophisticated QA system.

I’ll see you there.

Lab: SentencePiece and BPE

In order to process text in neural network models it is first required to encode text as numbers with ids, since the tensor operations act on numbers. Finally, if the output of the network is to be words, it is required to decode the predicted tokens ids back to text.

SentencePiece and BPE

Introduction to Tokenization

In order to process text in neural network models it is first required to encode text as numbers with ids, since the tensor operations act on numbers. Finally, if the output of the network is to be words, it is required to decode the predicted tokens ids back to text.

To encode text, the first decision that has to be made is to what level of granularity are we going to consider the text? Because ultimately, from these tokens, features are going to be created about them. Many different experiments have been carried out using words, morphological units, phonemic units or characters as tokens. For example,

- Tokens are tricky. (raw text)

- Tokens are tricky . (words)

- Token s _ are _ trick _ y . (morphemes)

- t oʊ k ə n z _ ɑː _ ˈt r ɪ k i. (phonemes, for STT)

- T o k e n s _ a r e _ t r i c k y . (character)

But how to identify these units, such as words, is largely determined by the language they come from. For example, in many European languages a space is used to separate words, while in some Asian languages there are no spaces between words. Compare English and Mandarin.

- Tokens are tricky. (original sentence)

- 标记很棘手 (Mandarin)

- Biāojì hěn jíshǒu (pinyin)

- 标记 很 棘手 (Mandarin with spaces)

So, the ability to tokenize, i.e. split text into meaningful fundamental units, is not always straight-forward.

Also, there are practical issues of how large our vocabulary of words, vocab_size, should be, considering memory limitations vs. coverage. A compromise may be need to be made between:

- the finest-grained models employing characters which can be memory intensive and

- more computationally efficient subword units such as n-grams or larger units.

In SentencePiece unicode characters are grouped together using either a unigram language model (used in this week’s assignment) or BPE, byte-pair encoding. We will discuss BPE, since BERT and many of its variants use a modified version of BPE and its pseudocode is easy to implement and understand… hopefully!

SentencePiece Preprocessing

NFKC Normalization

Unsurprisingly, even using unicode to initially tokenize text can be ambiguous, e.g.,

eaccent = '\u00E9'

e_accent = '\u0065\u0301'

print(f'{eaccent} = {e_accent} : {eaccent == e_accent}')

Output

é = é : False

SentencePiece uses the Unicode standard normalization form, NFKC, so this isn’t an issue. Looking at the example from above but with normalization:

from unicodedata import normalize

norm_eaccent = normalize('NFKC', '\u00E9')

norm_e_accent = normalize('NFKC', '\u0065\u0301')

print(f'{norm_eaccent} = {norm_e_accent} : {norm_eaccent == norm_e_accent}')

Output

é = é : True

Normalization has actually changed the unicode code point (unicode unique id) for one of these two characters.

def get_hex_encoding(s):

return ' '.join(hex(ord(c)) for c in s)

def print_string_and_encoding(s):

print(f'{s} : {get_hex_encoding(s)}')

for s in [eaccent, e_accent, norm_eaccent, norm_e_accent]:

print_string_and_encoding(s)

Output

é : 0xe9

é : 0x65 0x301

é : 0xe9

é : 0xe9

This normalization has other side effects which may be considered useful such as converting curly quotes “ to " their ASCII equivalent. (*Although we now lose directionality of the quote…)

Lossless Tokenization

SentencePiece also ensures that when you tokenize your data and detokenize your data the original position of white space is preserved. However, tabs and newlines are converted to spaces.

To ensure this lossless tokenization, SentencePiece replaces white space with _ (U+2581). So that a simple join of the tokens by replacing underscores with spaces can restore the white space, even if there are consecutive symbols. But remember first to normalize and then replace spaces with _ (U+2581).

s = 'Tokenization is hard.'

sn = normalize('NFKC', s)

sn_ = sn.replace(' ', '\u2581')

print(get_hex_encoding(s))

print(get_hex_encoding(sn))

print(get_hex_encoding(sn_))

Output

0x54 0x6f 0x6b 0x65 0x6e 0x69 0x7a 0x61 0x74 0x69 0x6f 0x6e 0x20 0x69 0x73 0x20 0x68 0x61 0x72 0x64 0x2e

0x54 0x6f 0x6b 0x65 0x6e 0x69 0x7a 0x61 0x74 0x69 0x6f 0x6e 0x20 0x69 0x73 0x20 0x68 0x61 0x72 0x64 0x2e

0x54 0x6f 0x6b 0x65 0x6e 0x69 0x7a 0x61 0x74 0x69 0x6f 0x6e 0x2581 0x69 0x73 0x2581 0x68 0x61 0x72 0x64 0x2e

BPE Algorithm

After discussing the preprocessing that SentencePiece performs, you will get the data, preprocess it, and apply the BPE algorithm. You will see how this reproduces the tokenization produced by training SentencePiece on the example dataset (from this week’s assignment).

Preparing our Data

First, you get the Squad data and process it as above.

import ast

def convert_json_examples_to_text(filepath):

example_jsons = list(map(ast.literal_eval, open(filepath))) # Read in the json from the example file

texts = [example_json['text'].decode('utf-8') for example_json in example_jsons] # Decode the byte sequences

text = '\n\n'.join(texts) # Separate different articles by two newlines

text = normalize('NFKC', text) # Normalize the text

with open('example.txt', 'w') as fw:

fw.write(text)

return text

text = convert_json_examples_to_text('./data/data.txt')

print(text[:900])

Output

Beginners BBQ Class Taking Place in Missoula!

Do you want to get better at making delicious BBQ? You will have the opportunity, put this on your calendar now. Thursday, September 22nd join World Class BBQ Champion, Tony Balay from Lonestar Smoke Rangers. He will be teaching a beginner level class for everyone who wants to get better with their culinary skills.

He will teach you everything you need to know to compete in a KCBS BBQ competition, including techniques, recipes, timelines, meat selection and trimming, plus smoker and fire information.

The cost to be in the class is $35 per person, and for spectators it is free. Included in the cost will be either a t-shirt or apron and you will be tasting samples of each meat that is prepared.

Discussion in 'Mac OS X Lion (10.7)' started by axboi87, Jan 20, 2012.

I've got a 500gb internal drive and a 240gb SSD.

When trying to restore using di

In the algorithm the vocab variable is actually a frequency dictionary of the words. Those words have been prepended with an underscore to indicate that they are the beginning of a word. Finally, the characters have been delimited by spaces so that the BPE algorithm can group the most common characters together in the dictionary in a greedy fashion. You will see how that is done shortly.

from collections import Counter

vocab = Counter(['\u2581' + word for word in text.split()])

vocab = {' '.join([l for l in word]): freq for word, freq in vocab.items()}

解释:vocab = {' '.join([l for l in word]): freq for word, freq in vocab.items()}

这段代码是将一个词典(vocab)中的每个单词按照字母拆分成一个字母的序列,并将序列中的字母用空格分隔开,然后将拆分后的序列作为键,原来单词对应的频率作为值重新构建成一个新的词典。

具体来说,假设原始的词典(vocab)如下所示:

{

'low': 5,

'lower': 3,

'newest': 2,

'widest': 4

}

经过这段代码处理后,生成的新词典如下所示:

{

'l o w': 5,

'l o w e r': 3,

'n e w e s t': 2,

'w i d e s t': 4

}

这种处理方式通常用于将单词拆分成子词(例如,按照字母级别拆分),以便后续进行一些文本处理或者特征提取操作。

def show_vocab(vocab, end='\n', limit=20):

"""Show word frequencys in vocab up to the limit number of words"""

shown = 0

for word, freq in vocab.items():

print(f'{word}: {freq}', end=end)

shown +=1

if shown > limit:

break

show_vocab(vocab)

Output

▁ B e g i n n e r s: 1

▁ B B Q: 3

▁ C l a s s: 2

▁ T a k i n g: 1

▁ P l a c e: 1

▁ i n: 15

▁ M i s s o u l a !: 1

▁ D o: 1

▁ y o u: 13

▁ w a n t: 1

▁ t o: 33

▁ g e t: 2

▁ b e t t e r: 2

▁ a t: 1

▁ m a k i n g: 2

▁ d e l i c i o u s: 1

▁ B B Q ?: 1

▁ Y o u: 1

▁ w i l l: 6

▁ h a v e: 4

▁ t h e: 31

You check the size of the vocabulary (frequency dictionary) because this is the one hyperparameter that BPE depends on crucially on how far it breaks up a word into SentencePieces. It turns out that for your trained model on the small dataset that 60% of 455 merges of the most frequent characters need to be done to reproduce the upperlimit of a 32K vocab_size over the entire corpus of examples.

print(f'Total number of unique words: {len(vocab)}')

print(f'Number of merges required to reproduce SentencePiece training on the whole corpus: {int(0.60*len(vocab))}')

Output

Total number of unique words: 455

Number of merges required to reproduce SentencePiece training on the whole corpus: 273

BPE Algorithm

Directly from the BPE paper you have the following algorithm.

import re, collections

def get_stats(vocab):

pairs = collections.defaultdict(int)

for word, freq in vocab.items():

symbols = word.split()

for i in range(len(symbols) - 1):

pairs[symbols[i], symbols[i+1]] += freq

return pairs

def merge_vocab(pair, v_in):

v_out = {}

bigram = re.escape(' '.join(pair))

p = re.compile(r'(?<!\S)' + bigram + r'(?!\S)')

for word in v_in:

w_out = p.sub(''.join(pair), word)

v_out[w_out] = v_in[word]

return v_out

def get_sentence_piece_vocab(vocab, frac_merges=0.60):

sp_vocab = vocab.copy()

num_merges = int(len(sp_vocab)*frac_merges)

for i in range(num_merges):

pairs = get_stats(sp_vocab)

best = max(pairs, key=pairs.get)

sp_vocab = merge_vocab(best, sp_vocab)

return sp_vocab

BPE(Byte Pair Encoding)算法是一种数据压缩算法,也被广泛应用于自然语言处理中的子词分割和词形还原等任务中。BPE算法由Philip Gage于1994年提出,后来被Sennrich等人在2016年应用于神经机器翻译中。

BPE算法的基本思想是通过迭代地合并数据中最频繁出现的一对连续字节对(byte pair)来构建一个字节对词典,从而实现数据的压缩。在自然语言处理中,字节对通常被视为字符对,因此BPE算法实际上是一种基于字符的合并算法。

BPE算法的步骤如下:

-

初始化:将每个字符视为一个单独的子词。

-

统计频率:统计数据中所有连续字符对(或字节对)的频率。

-

合并频率最高的字符对:将频率最高的字符对合并为一个新的字符,并将这个新字符加入到词典中。

-

更新词典:更新词典中的所有子词,将其中包含被合并的字符对的子词替换为新的字符。

-

重复步骤3和步骤4,直到达到预设的合并次数或者词典大小。

在自然语言处理中,BPE算法通常用于学习一种能够表示单词和子词的词典,从而在词典中生成更少、更具有信息量的单词和子词,以便于模型处理。例如,在神经机器翻译中,BPE算法可以用来构建源语言和目标语言的子词词典,以便于模型处理未登录词和低频词。

To understand what’s going on first take a look at the third function get_sentence_piece_vocab. It takes in the current vocab word-frequency dictionary and the fraction, frac_merges, of the total vocab_size to merge characters in the words of the dictionary, num_merges times. Then for each merge operation it get_stats on how many of each pair of character sequences there are. It gets the most frequent pair of symbols as the best pair. Then it merges that pair of symbols (removes the space between them) in each word in the vocab that contains this best (= pair). Consequently, merge_vocab creates a new vocab, v_out. This process is repeated num_merges times and the result is the set of SentencePieces (keys of the final sp_vocab).

Additional Discussion of BPE Algorithm

Please feel free to skip the below if the above description was enough.

In a little more detail you can see in get_stats you initially create a list of bigram (two character sequence) frequencies from the vocabulary. Later, this may include trigrams, quadgrams, etc. Note that the key of the pairs frequency dictionary is actually a 2-tuple, which is just shorthand notation for a pair.

In merge_vocab you take in an individual pair (of character sequences, note this is the most frequency best pair) and the current vocab as v_in. You create a new vocab, v_out, from the old by joining together the characters in the pair (removing the space), if they are present in a word of the dictionary.

Warning: the expression (?<!\S) means that either a whitespace character follows before the bigram or there is nothing before the bigram (it is the beginning of the word), similarly for (?!\S) for preceding whitespace or the end of the word.

sp_vocab = get_sentence_piece_vocab(vocab)

show_vocab(sp_vocab)

Output

▁B e g in n ers: 1

▁BBQ: 3

▁Cl ass: 2

▁T ak ing: 1

▁P la ce: 1

▁in: 15

▁M is s ou la !: 1

▁D o: 1

▁you: 13

▁w an t: 1

▁to: 33

▁g et: 2

▁be t ter: 2

▁a t: 1

▁mak ing: 2

▁d e l ic i ou s: 1

▁BBQ ?: 1

▁ Y ou: 1

▁will: 6

▁have: 4

▁the: 31

Train SentencePiece BPE Tokenizer on Example Data

Explore SentencePiece Model

First, explore the SentencePiece model provided with this week’s assignment. Remember you can always use Python’s built in help command to see the documentation for any object or method.

import sentencepiece as spm

sp = spm.SentencePieceProcessor(model_file='./data/sentencepiece.model')

Try it out on the first sentence of the example text.

s0 = 'Beginners BBQ Class Taking Place in Missoula!'

# encode: text => id

print(sp.encode_as_pieces(s0))

print(sp.encode_as_ids(s0))

# decode: id => text

print(sp.decode_pieces(sp.encode_as_pieces(s0)))

print(sp.decode_ids([12847, 277]))

Output

['▁Beginn', 'ers', '▁BBQ', '▁Class', '▁', 'Taking', '▁Place', '▁in', '▁Miss', 'oul', 'a', '!']

[12847, 277, 15068, 4501, 3, 12297, 3399, 16, 5964, 7115, 9, 55]

Beginners BBQ Class Taking Place in Missoula!

Beginners

Notice how SentencePiece breaks the words into seemingly odd parts, but you have seen something similar with BPE. But how close was the model trained on the whole corpus of examples with a vocab_size of 32,000 instead of 455? Here you can also test what happens to white space, like ‘\n’.

But first note that SentencePiece encodes the SentencePieces, the tokens, and has reserved some of the ids as can be seen in this week’s assignment.

uid = 15068

spiece = "\u2581BBQ"

unknown = "__MUST_BE_UNKNOWN__"

# id <=> piece conversion

print(f'SentencePiece for ID {uid}: {sp.id_to_piece(uid)}')

print(f'ID for Sentence Piece {spiece}: {sp.piece_to_id(spiece)}')

# returns 0 for unknown tokens (we can change the id for UNK)

print(f'ID for unknown text {unknown}: {sp.piece_to_id(unknown)}')

Output

SentencePiece for ID 15068: ▁BBQ

ID for Sentence Piece ▁BBQ: 15068

ID for unknown text __MUST_BE_UNKNOWN__: 2

print(f'Beginning of sentence id: {sp.bos_id()}')

print(f'Pad id: {sp.pad_id()}')

print(f'End of sentence id: {sp.eos_id()}')

print(f'Unknown id: {sp.unk_id()}')

print(f'Vocab size: {sp.vocab_size()}')

Output

Beginning of sentence id: -1

Pad id: 0

End of sentence id: 1

Unknown id: 2

Vocab size: 32000

You can also check what are the ids for the first part and last part of the vocabulary.

print('\nId\tSentP\tControl?')

print('------------------------')

# <unk>, <s>, </s> are defined by default. Their ids are (0, 1, 2)

# <s> and </s> are defined as 'control' symbol.

for uid in range(10):

print(uid, sp.id_to_piece(uid), sp.is_control(uid), sep='\t')

# for uid in range(sp.vocab_size()-10,sp.vocab_size()):

# print(uid, sp.id_to_piece(uid), sp.is_control(uid), sep='\t')

Output

Id SentP Control?

------------------------

0 <pad> True

1 </s> True

2 <unk> False

3 ▁ False

4 X False

5 . False

6 , False

7 s False

8 ▁the False

9 a False

Train SentencePiece BPE model with our example.txt

Finally, train your own BPE model directly from the SentencePiece library and compare it to the results of the implemention of the algorithm from the BPE paper itself.

spm.SentencePieceTrainer.train('--input=example.txt --model_prefix=example_bpe --vocab_size=450 --model_type=bpe')

sp_bpe = spm.SentencePieceProcessor()

sp_bpe.load('example_bpe.model')

print('*** BPE ***')

print(sp_bpe.encode_as_pieces(s0))

Output

*** BPE ***

['▁B', 'e', 'ginn', 'ers', '▁BBQ', '▁Cl', 'ass', '▁T', 'ak', 'ing', '▁P', 'la', 'ce', '▁in', '▁M', 'is', 's', 'ou', 'la', '!']

show_vocab(sp_vocab, end = ', ')

Output

▁B e g in n ers: 1, ▁BBQ: 3, ▁Cl ass: 2, ▁T ak ing: 1, ▁P la ce: 1, ▁in: 15, ▁M is s ou la !: 1, ▁D o: 1, ▁you: 13, ▁w an t: 1, ▁to: 33, ▁g et: 2, ▁be t ter: 2, ▁a t: 1, ▁mak ing: 2, ▁d e l ic i ou s: 1, ▁BBQ ?: 1, ▁ Y ou: 1, ▁will: 6, ▁have: 4, ▁the: 31,

The implementation of BPE’s code from the paper matches up pretty well with the library itself! The differences are probably accounted for by the vocab_size. There is also another technical difference in that in the SentencePiece implementation of BPE a priority queue is used to more efficiently keep track of the best pairs. Actually, there is a priority queue in the Python standard library called heapq if you would like to give that a try below!

Optionally try to implement BPE using a priority queue below

from heapq import heappush, heappop

def heapsort(iterable):

h = []

for value in iterable:

heappush(h, value)

return [heappop(h) for i in range(len(h))]

a = [1,4,3,1,3,2,1,4,2]

heapsort(a)

Output

[1, 1, 1, 2, 2, 3, 3, 4, 4]

For a more extensive example consider looking at the SentencePiece repo. The last few sections of this code were repurposed from that tutorial. Thanks for your participation! Next stop BERT and T5!

Welcome to Hugging Face 🤗

When it comes to building real-world applications with Transformer models, the vast majority of industry practitioners are working with pre-trained models, rather than building and training them from scratch.

In order to provide you with an opportunity to work with some pre-trained Transformer models, we at DeepLearning.AI have partnered with Hugging Face to create the upcoming videos and labs, where you can get some hands-on practice doing things just like people are doing in industry every day.

Move on to the next video to learn more about all the amazing open-source tools and resources that Hugging Face provides and after that, we’ll get into the labs.

Hugging Face Introduction

Hugging Face I

Hugging Face II

Hugging Face III

Lab: Question Answering with HuggingFace - Using a base model

Question Answering with BERT and HuggingFace

You’ve seen how to use BERT and other transformer models for a wide range of natural language tasks, including machine translation, summarization, and question answering. Transformers have become the standard model for NLP, similar to convolutional models in computer vision. And all started with Attention!

In practice, you’ll rarely train a transformer model from scratch. Transformers tend to be very large, so they take time, money, and lots of data to train fully. Instead, you’ll want to start with a pre-trained model and fine-tune it with your dataset if you need to.

Hugging Face (🤗) is the best resource for pre-trained transformers. Their open-source libraries simplify downloading and using transformer models like BERT, T5, and GPT-2. And the best part, you can use them alongside either TensorFlow, PyTorch or Flax.

In this notebook, you’ll use 🤗 transformers to use the DistilBERT model for question answering.

Pipelines

Before fine-tuning a model, you will look at the pipelines from Hugging Face to use pre-trained transformer models for specific tasks. The transformers library provides pipelines for popular tasks like sentiment analysis, summarization, and text generation. A pipeline consists of a tokenizer, a model, and the model configuration. All these are packaged together into an easy-to-use object. Hugging Face makes life easier.

Pipelines are intended to be used without fine-tuning and will often be immediately helpful in your projects. For example, transformers provides a pipeline for question answering that you can directly use to answer your questions if you give some context. Let’s see how to do just that.

You will import pipeline from transformers for creating pipelines.

import os

os.environ['TF_CPP_MIN_LOG_LEVEL'] = '3'

from transformers import pipeline

Now, you will create the pipeline for question-answering, which uses the DistilBert model for extractive question answering (i.e., answering questions with the exact wording provided in the context).

# The task "question-answering" will return a QuestionAnsweringPipeline object

question_answerer = pipeline(task="question-answering", model="distilbert-base-cased-distilled-squad")

Notice that this environment already has the model stored in the directory distilbert-base-cased-distilled-squad. However if you were to run that exact code on your local computer, Huggingface will download the model for you, which is a great feature!

After running the last cell, you have a pipeline for performing question answering given a context string. The pipeline question_answerer you just created needs you to pass the question and context as strings. It returns an answer to the question from the context you provided. For example, here are the first few paragraphs from the Wikipedia entry for tea that you will use as the context.

context = """

Tea is an aromatic beverage prepared by pouring hot or boiling water over cured or fresh leaves of Camellia sinensis,

an evergreen shrub native to China and East Asia. After water, it is the most widely consumed drink in the world.

There are many different types of tea; some, like Chinese greens and Darjeeling, have a cooling, slightly bitter,

and astringent flavour, while others have vastly different profiles that include sweet, nutty, floral, or grassy

notes. Tea has a stimulating effect in humans primarily due to its caffeine content.

The tea plant originated in the region encompassing today's Southwest China, Tibet, north Myanmar and Northeast India,

where it was used as a medicinal drink by various ethnic groups. An early credible record of tea drinking dates to

the 3rd century AD, in a medical text written by Hua Tuo. It was popularised as a recreational drink during the

Chinese Tang dynasty, and tea drinking spread to other East Asian countries. Portuguese priests and merchants

introduced it to Europe during the 16th century. During the 17th century, drinking tea became fashionable among the

English, who started to plant tea on a large scale in India.

The term herbal tea refers to drinks not made from Camellia sinensis: infusions of fruit, leaves, or other plant

parts, such as steeps of rosehip, chamomile, or rooibos. These may be called tisanes or herbal infusions to prevent

confusion with 'tea' made from the tea plant.

"""

Now, you can ask your model anything related to that passage. For instance, “Where is tea native to?”.

result = question_answerer(question="Where is tea native to?", context=context)

print(result['answer'])

Output

China and East Asia

You can also pass multiple questions to your pipeline within a list so that you can ask:

- “Where is tea native to?”

- “When was tea discovered?”

- “What is the species name for tea?”

at the same time, and your question-answerer will return all the answers.

questions = ["Where is tea native to?",

"When was tea discovered?",

"What is the species name for tea?"]

results = question_answerer(question=questions, context=context)

for q, r in zip(questions, results):

print(f"{q} \n>> {r['answer']}")

Output

Where is tea native to?

>> China and East Asia

When was tea discovered?

>> 3rd century AD

What is the species name for tea?

>> Camellia sinensis

Although the models used in the Hugging Face pipelines generally give outstanding results, sometimes you will have particular examples where they don’t perform so well. Let’s use the following example with a context string about the Golden Age of Comic Books:

context = """

The Golden Age of Comic Books describes an era of American comic books from the

late 1930s to circa 1950. During this time, modern comic books were first published

and rapidly increased in popularity. The superhero archetype was created and many

well-known characters were introduced, including Superman, Batman, Captain Marvel

(later known as SHAZAM!), Captain America, and Wonder Woman.

Between 1939 and 1941 Detective Comics and its sister company, All-American Publications,

introduced popular superheroes such as Batman and Robin, Wonder Woman, the Flash,

Green Lantern, Doctor Fate, the Atom, Hawkman, Green Arrow and Aquaman.[7] Timely Comics,

the 1940s predecessor of Marvel Comics, had million-selling titles featuring the Human Torch,

the Sub-Mariner, and Captain America.[8]

As comic books grew in popularity, publishers began launching titles that expanded

into a variety of genres. Dell Comics' non-superhero characters (particularly the

licensed Walt Disney animated-character comics) outsold the superhero comics of the day.[12]

The publisher featured licensed movie and literary characters such as Mickey Mouse, Donald Duck,

Roy Rogers and Tarzan.[13] It was during this era that noted Donald Duck writer-artist

Carl Barks rose to prominence.[14] Additionally, MLJ's introduction of Archie Andrews

in Pep Comics #22 (December 1941) gave rise to teen humor comics,[15] with the Archie

Andrews character remaining in print well into the 21st century.[16]

At the same time in Canada, American comic books were prohibited importation under

the War Exchange Conservation Act[17] which restricted the importation of non-essential

goods. As a result, a domestic publishing industry flourished during the duration

of the war which were collectively informally called the Canadian Whites.

The educational comic book Dagwood Splits the Atom used characters from the comic

strip Blondie.[18] According to historian Michael A. Amundson, appealing comic-book

characters helped ease young readers' fear of nuclear war and neutralize anxiety

about the questions posed by atomic power.[19] It was during this period that long-running

humor comics debuted, including EC's Mad and Carl Barks' Uncle Scrooge in Dell's Four

Color Comics (both in 1952).[20][21]

"""

Let’s ask the following question: “What popular superheroes were introduced between 1939 and 1941?” The answer is in the fourth paragraph of the context string.

question = "What popular superheroes were introduced between 1939 and 1941?"

result = question_answerer(question=question, context=context)

print(result['answer'])

Output

teen humor comics

Here, the answer should be:

“Batman and Robin, Wonder Woman, the Flash,

Green Lantern, Doctor Fate, the Atom, Hawkman, Green Arrow, and Aquaman”. Instead, the pipeline returned a different answer. You can even try different question wordings:

- “What superheroes were introduced between 1939 and 1941?”

- “What comic book characters were created between 1939 and 1941?”

- “What well-known characters were created between 1939 and 1941?”

- “What well-known superheroes were introduced between 1939 and 1941 by Detective Comics?”

and you will only get incorrect answers.

questions = ["What popular superheroes were introduced between 1939 and 1941?",

"What superheroes were introduced between 1939 and 1941 by Detective Comics and its sister company?",

"What comic book characters were created between 1939 and 1941?",

"What well-known characters were created between 1939 and 1941?",

"What well-known superheroes were introduced between 1939 and 1941 by Detective Comics?"]

results = question_answerer(question=questions, context=context)

for q, r in zip(questions, results):

print(f"{q} \n>> {r['answer']}")

Output

What popular superheroes were introduced between 1939 and 1941?

>> teen humor comics

What superheroes were introduced between 1939 and 1941 by Detective Comics and its sister company?

>> Archie Andrews

What comic book characters were created between 1939 and 1941?

>> Archie

Andrews

What well-known characters were created between 1939 and 1941?

>> Archie

Andrews

What well-known superheroes were introduced between 1939 and 1941 by Detective Comics?

>> Archie Andrews

It seems like this model is a huge fan of Archie Andrews. It even considers him a superhero!

The example that fooled your question_answerer belongs to the TyDi QA dataset, a dataset from Google for question/answering in diverse languages. To achieve better results when you know that the pipeline isn’t working as it should, you need to consider fine-tuning your model.

In the next ungraded lab, you will get the chance to fine-tune the DistilBert model using the TyDi QA dataset.

Lab: Question Answering with HuggingFace 2 - Fine-tuning a model

Question Answering with BERT and HuggingFace 🤗 (Fine-tuning)

In the previous Hugging Face ungraded lab, you saw how to use the pipeline objects to use transformer models for NLP tasks. In that lab, the model didn’t output the desired answers to a series of precise questions for a context related to the history of comic books.

In this lab, you will fine-tune the model from that lab to give better answers for that type of context. To do that, you’ll be using the TyDi QA dataset but on a filtered version with only English examples. Additionally, you will use a lot of the tools that Hugging Face has to offer.

You have to note that, in general, you will fine-tune general-purpose transformer models to work for specific tasks. However, fine-tuning a general-purpose model can take a lot of time. That’s why you will be using the model from the question answering pipeline in this lab.

Begin by importing some libraries and/or objects you will use throughout the lab:

import os

os.environ['TF_CPP_MIN_LOG_LEVEL'] = '3'

import numpy as np

from datasets import load_from_disk