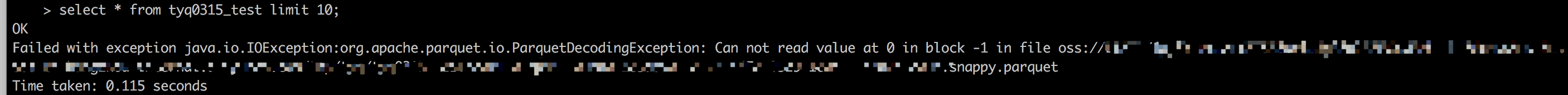

Error message:

Failed with exception java.io.IOException:org.apache.parquet.io.ParquetDecodingException: Can not read value at 0 in block -1 in file oss:/xxxxxxxxxx.snappy.parquet

Fix:

Before running spark-sql, add the following line:

spark.conf.set("spark.sql.parquet.writeLegacyFormat","true")

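With this setting in place, the job runs successfully. Note that the option controls how Spark writes Parquet files, so data written before the setting was applied has to be rewritten for the fix to take effect.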
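As a rough illustration, here is a minimal sketch of rewriting an existing Parquet dataset with the legacy format enabled. According to the Spark documentation, `spark.sql.parquet.writeLegacyFormat` makes Spark write decimal values in the fixed-length byte array layout that systems such as Apache Hive and Apache Impala expect. The object name `RewriteLegacyParquet` and the two path arguments are hypothetical and not part of the original post. The sketch assumes the unreadable files were produced by Spark in the first place.

```scala
import org.apache.spark.sql.SparkSession

// Hypothetical helper: rewrites a Parquet dataset using the legacy layout.
object RewriteLegacyParquet {
  def main(args: Array[String]): Unit = {
    // Assumption: args(0) is the existing dataset, args(1) is a new output location.
    val Array(inputPath, outputPath) = args.take(2)

    val spark = SparkSession.builder()
      .appName("rewrite-legacy-parquet")
      // Same option as in the post, set at session creation instead of via spark.conf.set.
      .config("spark.sql.parquet.writeLegacyFormat", "true")
      .getOrCreate()

    // Read the data with Spark and write it back out; the setting applies to the write.
    spark.read.parquet(inputPath)
      .write
      .mode("overwrite")
      .parquet(outputPath)

    spark.stop()
  }
}
```

When launching from the command line, the same option can be passed as `--conf spark.sql.parquet.writeLegacyFormat=true`, so the job code itself does not need to change.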
