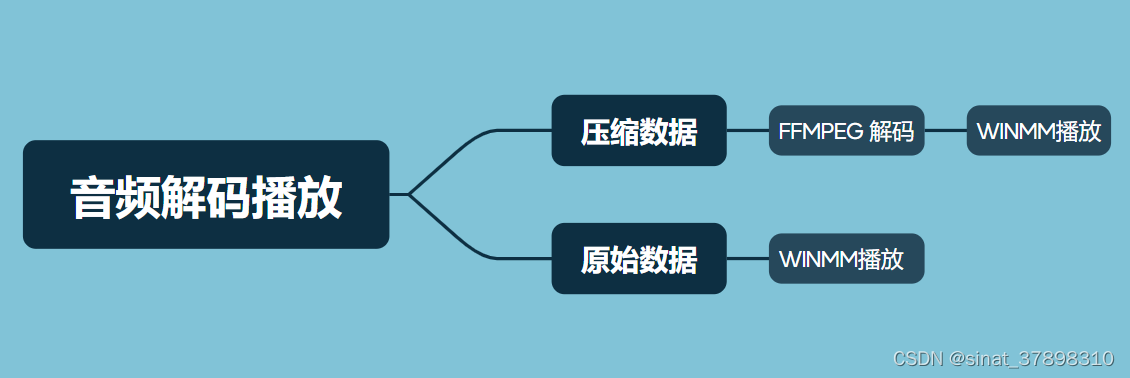

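Continuing from the previous post, which covered the basics of sound and the code for playing pulse-code modulation (PCM) files with SDL, this post covers decoding with FFmpeg and playback with WINMM.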

Software you will need

ffmpeg, ffplay, MediaInfo, VLC

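Transcoding an MP3 file to PCM with ffmpeg

Command line: ffmpeg -i 7.mp3 -f s16le -ar 22050 -ac 2 -acodec pcm_s16le pcm16k.pcm

This writes headerless PCM: signed 16-bit little-endian samples (s16le), 22050 Hz, 2 interleaved channels. Despite its name, pcm16k.pcm is 22050 Hz, not 16 kHz.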
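Because the output has no header, the file is just a run of little-endian 16-bit integers, with the two channels interleaved (L R L R ...). The following is a minimal C++ sketch of how such bytes map to samples and how the file size maps to playback time. decodeS16le and pcmSeconds are hypothetical helpers, not part of ffmpeg:

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Decode raw s16le bytes (as written by "-f s16le") into int16 samples.
// Stereo data stays interleaved: L0 R0 L1 R1 ...
std::vector<int16_t> decodeS16le(const std::vector<uint8_t>& bytes) {
    std::vector<int16_t> samples(bytes.size() / 2);
    for (std::size_t i = 0; i < samples.size(); ++i) {
        // Little-endian: low byte first, then high byte.
        uint16_t u = static_cast<uint16_t>(bytes[2 * i] | (bytes[2 * i + 1] << 8));
        samples[i] = static_cast<int16_t>(u);
    }
    return samples;
}

// Playback length of headerless PCM: bytes / (rate * channels * bytes per sample).
double pcmSeconds(std::size_t bytes, int sampleRate, int channels, int bitsPerSample) {
    return static_cast<double>(bytes) / (sampleRate * channels * (bitsPerSample / 8));
}
```

For example, at 22050 Hz, 2 channels and 16 bits, one second of audio is 22050 × 2 × 2 = 88200 bytes.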

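Playing the PCM file with ffplay

Command line: ffplay -ar 22050 -ac 2 -f s16le -i pcm16k.pcm

Raw PCM has no header, so the sample rate, channel count and sample format must be given on the command line. They must match the values used when transcoding.

The audio parameters of a source file (sample rate, channels, sample format) can be inspected with MediaInfo or VLC.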

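Playing audio data with WINMM

The basic call sequence is:

1. Open the output device: waveOutOpen
2. Prepare (lock) a buffer: waveOutPrepareHeader
3. Write the buffer to the device: waveOutWrite
4. Clean up (unlock) the buffer: waveOutUnprepareHeader
5. Close the device: waveOutClose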
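waveOutOpen describes the data with a WAVEFORMATEX structure. For plain PCM, two of its fields are derived from the others:

- nBlockAlign = nChannels * wBitsPerSample / 8 (bytes per sample frame)
- nAvgBytesPerSec = nSamplesPerSec * nBlockAlign

The sketch below mirrors just those fields in a hypothetical portable struct PcmFormat (the real WAVEFORMATEX is Windows-only). It also computes how much audio one buffer of a given size holds. bufferMillis is a hypothetical helper:

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical portable mirror of the WAVEFORMATEX fields that matter for PCM.
struct PcmFormat {
    uint32_t nSamplesPerSec;
    uint16_t wBitsPerSample;
    uint16_t nChannels;
    // Bytes per sample frame (one sample for every channel).
    uint16_t blockAlign() const { return static_cast<uint16_t>(nChannels * wBitsPerSample / 8); }
    // Bytes consumed per second of playback.
    uint32_t avgBytesPerSec() const { return nSamplesPerSec * blockAlign(); }
};

// Milliseconds of audio that fit in a buffer of `bytes` bytes (rounded down).
uint32_t bufferMillis(uint32_t bytes, const PcmFormat& f) {
    return static_cast<uint32_t>(uint64_t(bytes) * 1000 / f.avgBytesPerSec());
}
```

With the 22050 Hz / 16-bit / stereo format used in this post and a 128 KiB buffer (1024 * 8 * 16 bytes, the MAX_BUFFER_SIZE used by the class below), each buffer holds about 1.49 s of audio. Two queued buffers therefore give roughly 3 s of headroom against stalls, at the cost of that much latency.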

Header file (waveout.h)

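The code below uses double buffering for gap-free playback: two buffers are handed to the audio device in turn, so one can be refilled while the other plays. waveOutPrepareHeader and waveOutUnprepareHeader act somewhat like a "lock". Once a header has been prepared it must not be changed, and in particular the memory of a prepared wave header must not be freed, or the program will crash. "Memory" here means the block that the header's lpData points to; it must stay valid until playback of that buffer has finished. This program simply uses the return value of waveOutUnprepareHeader (WAVERR_STILLPLAYING while the device still owns the buffer) to tell whether playback has finished. A more complex program can be notified when playback finishes through the dwCallback parameter of waveOutOpen. The class below passes an event handle there (CALLBACK_EVENT), which the driver signals each time a buffer is done.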

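#pragma once

#include <Windows.h>
#include <mmsystem.h>

#define MAX_BUFFER_SIZE (1024 * 8 * 16)

#pragma comment(lib, "winmm.lib")

class WaveOut
{
private:
    HANDLE hEventPlay;     /* signaled by the driver when a buffer finishes (CALLBACK_EVENT) */
    HWAVEOUT hWaveOut;
    WAVEHDR wvHeader[2];   /* the two buffers used for double buffering */
    CHAR* bufCaching;      /* staging buffer that collects data until a full buffer is ready */
    INT bufUsed;           /* bytes currently held in 'bufCaching' */
    INT iCurPlaying;       /* index in 'wvHeader' of the next buffer to fill */
    BOOL hasBegan;         /* TRUE once both headers have been queued for the first time */

public:
    WaveOut();
    ~WaveOut();
    WaveOut(const WaveOut&) = delete;             /* owns raw buffers: not copyable */
    WaveOut& operator=(const WaveOut&) = delete;

    int open(DWORD nSamplesPerSec, WORD wBitsPerSample, WORD nChannels);
    void close();
    int push(const CHAR* buf, int size);  /* append to 'bufCaching'; play it whenever it is full */
    int flush();                          /* play whatever is left in 'bufCaching' */

private:
    int play(const CHAR* buf, int size);
};

Implementation file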

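#include "waveout.h"

#include <cstdlib>
#include <cstring>

WaveOut::WaveOut() : hWaveOut(NULL), bufUsed(0), iCurPlaying(0), hasBegan(FALSE)
{
    // lpData, dwBufferLength and dwFlags (= 0) must be set before waveOutPrepareHeader;
    // zero the remaining WAVEHDR fields as well.
    ZeroMemory(wvHeader, sizeof(wvHeader));
    wvHeader[0].lpData = (CHAR*)malloc(MAX_BUFFER_SIZE);
    wvHeader[1].lpData = (CHAR*)malloc(MAX_BUFFER_SIZE);
    wvHeader[0].dwBufferLength = MAX_BUFFER_SIZE;
    wvHeader[1].dwBufferLength = MAX_BUFFER_SIZE;
    bufCaching = (CHAR*)malloc(MAX_BUFFER_SIZE);
    // Auto-reset event that waveOutOpen(..., CALLBACK_EVENT) hands to the driver.
    hEventPlay = CreateEvent(NULL, FALSE, FALSE, NULL);
}

WaveOut::~WaveOut()
{
    close();  // the device must be closed before the buffers it uses are freed
    free(wvHeader[0].lpData);
    free(wvHeader[1].lpData);
    free(bufCaching);
    if (hEventPlay)
        CloseHandle(hEventPlay);
}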

int WaveOut::open(DWORD nSamplesPerSec, WORD wBitsPerSample, WORD nChannels)
{
    WAVEFORMATEX wfx;
    if (!bufCaching || !hEventPlay || !wvHeader[0].lpData || !wvHeader[1].lpData)
    {
        return -1;  // an allocation in the constructor failed
    }
    // Describe plain interleaved PCM.
    wfx.wFormatTag = WAVE_FORMAT_PCM;
    wfx.nChannels = nChannels;
    wfx.nSamplesPerSec = nSamplesPerSec;
    wfx.wBitsPerSample = wBitsPerSample;
    wfx.cbSize = 0;
    wfx.nBlockAlign = (WORD)(wfx.wBitsPerSample * wfx.nChannels / 8);  // bytes per sample frame
    wfx.nAvgBytesPerSec = wfx.nSamplesPerSec * wfx.nBlockAlign;         // bytes per second
    // CALLBACK_EVENT: the driver signals hEventPlay instead of calling a function.
    if (waveOutOpen(&hWaveOut, WAVE_MAPPER, &wfx, (DWORD_PTR)hEventPlay, 0, CALLBACK_EVENT) != MMSYSERR_NOERROR)
    {
        hWaveOut = NULL;
        return -1;
    }
    // Lock both buffers for use by the device.
    if (waveOutPrepareHeader(hWaveOut, &wvHeader[0], sizeof(WAVEHDR)) != MMSYSERR_NOERROR ||
        waveOutPrepareHeader(hWaveOut, &wvHeader[1], sizeof(WAVEHDR)) != MMSYSERR_NOERROR)
    {
        close();  // do not leave the device open on failure
        return -1;
    }
    bufUsed = 0;
    iCurPlaying = 0;
    hasBegan = FALSE;
    return 0;
}

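void WaveOut::close()
{
    if (!hWaveOut)
        return;  // never opened, or already closed
    // waveOutUnprepareHeader returns WAVERR_STILLPLAYING while the device still owns
    // the buffer, so keep retrying until both buffers have finished playing.
    for (int i = 0; i < 2; ++i)
    {
        while (waveOutUnprepareHeader(hWaveOut, &wvHeader[i], sizeof(WAVEHDR)) == WAVERR_STILLPLAYING)
            Sleep(10);
    }
    waveOutClose(hWaveOut);
    hWaveOut = NULL;
}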

int WaveOut::push(const CHAR* buf, int size)
{
    while (size > 0)
    {
        if (bufUsed + size < MAX_BUFFER_SIZE)
        {
            // Not enough for a full buffer yet: just cache the data.
            memcpy(bufCaching + bufUsed, buf, size);
            bufUsed += size;
            return 0;
        }
        // Top the cache up to exactly MAX_BUFFER_SIZE bytes.
        int chunk = MAX_BUFFER_SIZE - bufUsed;
        memcpy(bufCaching + bufUsed, buf, chunk);
        if (!hasBegan)
        {
            // Start-up: fill both headers before queuing either,
            // so the device always has a second buffer waiting.
            if (0 == iCurPlaying)
            {
                memcpy(wvHeader[0].lpData, bufCaching, MAX_BUFFER_SIZE);
                iCurPlaying = 1;
            }
            else
            {
                ResetEvent(hEventPlay);  // clear any pending signal (e.g. from opening the device)
                memcpy(wvHeader[1].lpData, bufCaching, MAX_BUFFER_SIZE);
                if (waveOutWrite(hWaveOut, &wvHeader[0], sizeof(WAVEHDR)) ||
                    waveOutWrite(hWaveOut, &wvHeader[1], sizeof(WAVEHDR)))
                {
                    return -1;
                }
                hasBegan = TRUE;
                iCurPlaying = 0;
            }
        }
        else if (play(bufCaching, MAX_BUFFER_SIZE) < 0)
        {
            return -1;
        }
        size -= chunk;
        buf += chunk;
        bufUsed = 0;
    }
    return 0;
}

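int WaveOut::flush()
{
    // Short input (less than two full buffers): header 0 was filled by push()
    // but never queued, so queue it now or its audio is lost.
    if (!hasBegan && 1 == iCurPlaying)
    {
        if (waveOutWrite(hWaveOut, &wvHeader[0], sizeof(WAVEHDR)))
            return -1;
        hasBegan = TRUE;
    }
    // Play whatever is left in the cache (a partial buffer).
    if (bufUsed > 0 && play(bufCaching, bufUsed) < 0)
        return -1;
    bufUsed = 0;  // a second flush() must not replay the same data
    return 0;
}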

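int WaveOut::play(const CHAR* buf, int size)
{
    // Wait until the driver has handed this buffer back. The event is auto-reset and
    // can carry a stale signal, so re-check the header's WHDR_INQUEUE flag every time.
    while (wvHeader[iCurPlaying].dwFlags & WHDR_INQUEUE)
        WaitForSingleObject(hEventPlay, INFINITE);
    wvHeader[iCurPlaying].dwBufferLength = size;
    memcpy(wvHeader[iCurPlaying].lpData, buf, size);
    if (waveOutWrite(hWaveOut, &wvHeader[iCurPlaying], sizeof(WAVEHDR)))
        return -1;
    iCurPlaying = !iCurPlaying;  // next time, fill the other buffer
    return 0;
}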
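The hand-off between play() and the completion event is hard to see through the Win32 calls. The following is a portable toy model of the same double-buffering idea using std::thread and std::condition_variable. It is not WINMM, and ToyWaveOut is a hypothetical class. A background thread stands in for the driver: it plays queued buffers in order, marks each one free again and notifies. A per-buffer "queued" flag plays the role of WINMM's WHDR_INQUEUE header flag, and play() waits only when the buffer it wants to fill is still queued:

```cpp
#include <array>
#include <cassert>
#include <chrono>
#include <condition_variable>
#include <deque>
#include <mutex>
#include <thread>
#include <vector>

// Toy model of double buffering (NOT the WINMM API).
class ToyWaveOut {
public:
    ToyWaveOut() : device_([this] { run(); }) {}
    ~ToyWaveOut() {
        {
            std::lock_guard<std::mutex> lk(m_);
            stop_ = true;
        }
        cv_.notify_all();
        device_.join();
    }
    // Wait until the current buffer is free, fill it, queue it, switch buffers.
    void play(int payload) {
        std::unique_lock<std::mutex> lk(m_);
        cv_.wait(lk, [this] { return !inQueue_[cur_]; });
        data_[cur_] = payload;
        inQueue_[cur_] = true;
        queue_.push_back(cur_);
        cur_ = 1 - cur_;
        cv_.notify_all();
    }
    // Block until every queued buffer has been "played"; return the play order.
    std::vector<int> drain() {
        std::unique_lock<std::mutex> lk(m_);
        cv_.wait(lk, [this] { return queue_.empty(); });
        return played_;
    }

private:
    // The "driver": plays queued buffers in FIFO order.
    void run() {
        std::unique_lock<std::mutex> lk(m_);
        for (;;) {
            cv_.wait(lk, [this] { return stop_ || !queue_.empty(); });
            if (queue_.empty()) return;  // stop requested and nothing left to play
            int i = queue_.front();
            lk.unlock();
            std::this_thread::sleep_for(std::chrono::milliseconds(1));  // "playing"
            lk.lock();
            played_.push_back(data_[i]);
            queue_.pop_front();
            inQueue_[i] = false;  // buffer handed back to the producer
            cv_.notify_all();     // like the driver signaling the event
        }
    }
    std::mutex m_;
    std::condition_variable cv_;
    std::deque<int> queue_;
    std::array<bool, 2> inQueue_{{false, false}};
    std::array<int, 2> data_{{0, 0}};
    std::vector<int> played_;
    int cur_ = 0;
    bool stop_ = false;
    std::thread device_;  // declared last so it starts after the members above exist
};
```

The producer can never get more than two buffers ahead, so memory use stays fixed. Because it waits only on the specific buffer it is about to reuse, it never overwrites data the "device" has not played yet.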

Usage:

// audio_play.cpp : Defines the entry point for the console application.
//
#include "stdafx.h"   // with precompiled headers, everything above this line is ignored

#define UseSdl 0
#define UseWmm 1

#include <cstdio>
#include <cstdlib>
#include <iostream>
#include <algorithm>

#if UseWmm
#include "waveout.h"
#endif

#if UseSdl
#include "SDL.h"
#pragma comment(lib, "SDL2.lib")
#endif

const static int PCM_BUFFER_SIZE = 4096;

#if UseSdl
unsigned char* audio_chunk;
unsigned int audio_len;
unsigned char* audio_pos;

// Callback SDL invokes whenever the device needs more data
void read_audio_data_cb(void* udata, Uint8* stream, int len)
{
    SDL_memset(stream, 0, len);
    if (audio_len == 0)
        return;
    len = std::min(len, static_cast<int>(audio_len));
    SDL_MixAudio(stream, audio_pos, len, SDL_MIX_MAXVOLUME);
    audio_pos += len;
    audio_len -= len;
}
#endif

#undef main   // SDL.h redefines main as SDL_main

int main(int argc, char *argv[])
{
#if UseSdl
    SDL_Init(SDL_INIT_AUDIO);
    SDL_AudioSpec spec;
    SDL_zero(spec);
    spec.freq = 22050;            // must match the PCM file (ffmpeg -ar 22050)
    spec.format = AUDIO_S16SYS;
    spec.channels = 2;
    spec.samples = 1024;
    spec.callback = read_audio_data_cb;
    spec.userdata = NULL;
    // Open the audio device with these parameters
    if (SDL_OpenAudio(&spec, NULL) < 0)
        return -1;
    // Start playback
    SDL_PauseAudio(0);
#endif

#if UseWmm
    WaveOut wvOut;
    int sampleRate = 22050, bitsPerSample = 16, channels = 2;
    if (wvOut.open(sampleRate, bitsPerSample, channels) < 0)
    {
        std::cout << "waveout open failed.\n";
        return -1;
    }
#endif

    // Open the raw PCM file (read-only)
    FILE *f = fopen("C:\\Users\\Hearo\\Desktop\\pcm16k.pcm", "rb");
    if (f == nullptr) return -1;
    char* pcm_buffer = (char*)malloc(PCM_BUFFER_SIZE);
    size_t n = 0;
    while ((n = fread(pcm_buffer, 1, PCM_BUFFER_SIZE, f)) > 0)
    {
#if UseSdl
        audio_chunk = reinterpret_cast<Uint8*>(pcm_buffer);
        audio_len = (unsigned int)n;     // number of bytes read
        audio_pos = audio_chunk;
        std::cout << "play " << audio_len << " data" << std::endl;
        while (audio_len > 0)            // wait until the callback has consumed this chunk
            SDL_Delay(1);
#endif
#if UseWmm
        if (wvOut.push(pcm_buffer, (int)n) < 0)
        {
            std::cout << "play failed.\n";
        }
#endif
    }
    fclose(f);

#if UseWmm
    if (wvOut.flush() < 0)
    {
        std::cout << "flush failed\n";
    }
    std::cout << "play ok.\n";
#endif

    free(pcm_buffer);

#if UseSdl
    SDL_Quit();
#endif
    return 0;
}

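FFmpeg decoding flow: key structures

AVFormatContext

Format (container) context, the top-level structure that ties everything together. It holds the information about the file's container format.

AVInputFormat

One per container format (e.g. FLV, MKV, MP4, AVI).

AVStream

One per stream in the file; AVFormatContext::streams holds them (a typical video file has two: video and audio).

AVCodecContext

Codec context. It holds the parameters of the encoder or decoder (here, the audio decoder).

AVCodec

One per video or audio codec (e.g. the H.264 decoder).

AVPacket

Holds one frame of compressed (encoded) data.

AVFrame

Holds one frame of decoded data: pixels for video, samples for audio.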

FLTP

32-bit float, planar.

Planar vs. packed (interleaved)

planar, plane 0: LLLLLLLLLLLL

planar, plane 1: RRRRRRRRRRRR

packed: LRLRLRLRLRLR

s16p

Signed 16-bit, planar (the packed form is s16).
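Converting a decoded FLTP frame into the packed s16 layout that WINMM and SDL (AUDIO_S16SYS) expect is one of the jobs swr_convert does in the code below. The following is a simplified sketch of just that format step, with no resampling or dithering. fltpToS16 is a hypothetical helper. Its rounding and clipping (scale by 32768, clamp to the int16 range) are an assumption and may differ in detail from libswresample:

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <vector>

// Planar float (one array per channel, nominal range [-1, 1])
// -> packed signed 16-bit (L R L R ...). Assumes all planes have the same length.
std::vector<int16_t> fltpToS16(const std::vector<std::vector<float>>& planes) {
    const std::size_t channels = planes.size();
    const std::size_t frames = channels ? planes[0].size() : 0;
    std::vector<int16_t> out(frames * channels);
    for (std::size_t n = 0; n < frames; ++n) {
        for (std::size_t c = 0; c < channels; ++c) {
            long s = std::lrint(planes[c][n] * 32768.0f);  // scale and round to nearest
            s = std::max(-32768L, std::min(32767L, s));    // clip to the int16 range
            out[n * channels + c] = static_cast<int16_t>(s);  // interleave
        }
    }
    return out;
}
```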

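Complete code: FFmpeg + WINMM + SDL

There are quite a few examples online of decoding video with FFmpeg and rendering it to a window, but most of them skip details such as releasing resources, flushing the decoder's buffered frames, and multithreading. This is especially inconvenient when you want to put a demo together quickly and end up rewriting a lot of code each time, so here is a more complete example that can be copied and used directly.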
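Flushing matters because a decoder may hold frames back. With FFmpeg's send/receive API, avcodec_receive_frame can return AVERROR(EAGAIN) until more input arrives. The last frames are only released after a NULL packet is sent (draining mode), after which it eventually returns AVERROR_EOF.

The toy model below is not FFmpeg. ToyDecoder and decodeAll are hypothetical, and the fixed one-frame delay is an assumed stand-in for real codec delay. It shows that skipping the drain step loses the tail of the stream:

```cpp
#include <cassert>
#include <vector>

enum class Status { Ok, Again, Eof };

// Toy decoder with a one-frame delay, mimicking the send/receive contract.
class ToyDecoder {
public:
    // packet == nullptr plays the role of avcodec_send_packet(ctx, NULL).
    void send(const int* packet) {
        if (!packet) { draining_ = true; return; }
        pending_.push_back(*packet);
    }
    Status receive(int& frame) {
        // A frame comes out once a newer packet has arrived, or while draining.
        if (pending_.size() >= 2 || (draining_ && !pending_.empty())) {
            frame = pending_.front();
            pending_.erase(pending_.begin());
            return Status::Ok;
        }
        return draining_ ? Status::Eof : Status::Again;
    }
private:
    std::vector<int> pending_;
    bool draining_ = false;
};

// Decode all packets; `drain` selects whether the end-of-stream flush is done.
std::vector<int> decodeAll(const std::vector<int>& packets, bool drain) {
    ToyDecoder dec;
    std::vector<int> out;
    int frame = 0;
    for (int p : packets) {
        dec.send(&p);
        while (dec.receive(frame) == Status::Ok) out.push_back(frame);
    }
    if (drain) {
        dec.send(nullptr);  // enter draining mode
        while (dec.receive(frame) == Status::Ok) out.push_back(frame);
    }
    return out;
}
```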

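The demo is controlled by macros: UseSdl selects SDL playback, UseWmm selects WINMM playback, and UseFfg decodes the MP3 file with FFmpeg into trans.pcm, which is then played.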

// audio_play.cpp : Defines the entry point for the console application.
//
#include "stdafx.h"   // with precompiled headers, everything above this line is ignored

#define UseSdl 0
#define UseWmm 1
#define UseFfg 1

#include <cstdio>
#include <cstdlib>
#include <iostream>
#include <algorithm>

#if UseFfg
// The resampler output buffer is MAX_AUDIO_FRAME_SIZE * 2 bytes
static int MAX_AUDIO_FRAME_SIZE = 192000;

extern "C" {
#include <libavformat/avformat.h>
#include <libavcodec/avcodec.h>
#include <libavutil/frame.h>
#include <libavutil/channel_layout.h>
#include <libavutil/samplefmt.h>
#include <libavutil/error.h>
#include <libswresample/swresample.h>
}

// Decoding and resampling audio needs only these four libraries
#pragma comment(lib, "avformat.lib")
#pragma comment(lib, "avcodec.lib")
#pragma comment(lib, "avutil.lib")
#pragma comment(lib, "swresample.lib")
#endif

#if UseWmm
#include "waveout.h"
#endif

#if UseSdl
#include "SDL.h"
#pragma comment(lib, "SDL2.lib")
#endif

const static int PCM_BUFFER_SIZE = 4096;

#if UseSdl
unsigned char* audio_chunk;
unsigned int audio_len;
unsigned char* audio_pos;

// Callback SDL invokes whenever the device needs more data
void read_audio_data_cb(void* udata, Uint8* stream, int len)
{
    SDL_memset(stream, 0, len);
    if (audio_len == 0)
        return;
    len = std::min(len, static_cast<int>(audio_len));
    SDL_MixAudio(stream, audio_pos, len, SDL_MIX_MAXVOLUME);
    audio_pos += len;
    audio_len -= len;
}
#endif

#undef main   // SDL.h redefines main as SDL_main

int main(int argc, char *argv[])
{
#if UseSdl
    SDL_Init(SDL_INIT_AUDIO);
    SDL_AudioSpec spec;
    SDL_zero(spec);
    spec.freq = 22050;            // must match trans.pcm (outSampleRate in the FFmpeg part)
    spec.format = AUDIO_S16SYS;
    spec.channels = 2;
    spec.samples = 1024;
    spec.callback = read_audio_data_cb;
    spec.userdata = NULL;
    // Open the audio device with these parameters
    if (SDL_OpenAudio(&spec, NULL) < 0)
        return -1;
    // Start playback
    SDL_PauseAudio(0);
#endif

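#if UseFfg
    // Decode the MP3 into raw s16le / 22050 Hz / stereo PCM in trans.pcm.
    // Uses the pre-5.1 channel-layout API (codec_ctx->channels, swr_alloc_set_opts),
    // which newer FFmpeg releases deprecate and later remove.
    AVFormatContext *m_AVFormatContext = nullptr;
    // Open the file and read its container header
    int ret = avformat_open_input(&m_AVFormatContext, "75sVoyage.mp3", nullptr, nullptr);
    if (ret != 0 || m_AVFormatContext == nullptr)
        return -1;
    // Probe the streams and store their info in the AVFormatContext
    if (avformat_find_stream_info(m_AVFormatContext, nullptr) < 0)
        return -1;
    // Pick the audio stream instead of assuming index 0
    // (MP3 files with cover art also contain a picture stream)
    int audio_index = av_find_best_stream(m_AVFormatContext, AVMEDIA_TYPE_AUDIO, -1, -1, nullptr, 0);
    if (audio_index < 0) {
        std::cout << "No audio stream found" << std::endl;
        return -1;
    }
    AVCodecParameters *codecpar = m_AVFormatContext->streams[audio_index]->codecpar;
    const AVCodec *audio_codec = avcodec_find_decoder(codecpar->codec_id);
    if (nullptr == audio_codec) {
        std::cout << "No matching decoder found" << std::endl;
        return -1;
    }
    AVCodecContext *codec_ctx = avcodec_alloc_context3(audio_codec);
    // Copy the stream parameters into the decoder; without this, decoding may fail
    // with "Invalid data found when processing input"
    avcodec_parameters_to_context(codec_ctx, codecpar);
    // Open the decoder
    ret = avcodec_open2(codec_ctx, audio_codec, NULL);
    if (ret < 0) {
        std::cout << "Failed to open decoder" << std::endl;
        return -1;
    }
    // Allocate the packet and frame structures
    AVPacket *avPacket = av_packet_alloc();
    AVFrame *frame = av_frame_alloc();
    std::cout << "sample_fmt: " << av_get_sample_fmt_name(codec_ctx->sample_fmt) << std::endl;
    std::cout << "sample_rate: " << codec_ctx->sample_rate << std::endl;

    // Output parameters: packed signed 16-bit, stereo, 22050 Hz
    uint64_t outChannelLayout = AV_CH_LAYOUT_STEREO;
    AVSampleFormat outSampleFmt = AV_SAMPLE_FMT_S16;
    int outSampleRate = 22050;
    int outChannels = av_get_channel_layout_nb_channels(outChannelLayout);
    int outBufferBytes = MAX_AUDIO_FRAME_SIZE * 2;
    uint8_t *outBuffer = (uint8_t*)av_malloc(outBufferBytes);
    // Capacity of outBuffer in samples per channel, which is what swr_convert expects
    int outCapacity = outBufferBytes / (outChannels * av_get_bytes_per_sample(outSampleFmt));
    int64_t inChannelLayout = codec_ctx->channel_layout ? codec_ctx->channel_layout
                                                        : av_get_default_channel_layout(codec_ctx->channels);
    // Allocate and configure the resampler (sample format, layout and rate conversion)
    SwrContext *swrContext = swr_alloc_set_opts(nullptr, outChannelLayout, outSampleFmt, outSampleRate,
        inChannelLayout, codec_ctx->sample_fmt, codec_ctx->sample_rate, 0, NULL);
    if (!swrContext || swr_init(swrContext) < 0)
        return -1;
    FILE *audio_pcm = fopen("trans.pcm", "wb");
    if (audio_pcm == nullptr)
        return -1;

    // Receive every frame the decoder has ready, resample it and write it out.
    // swr_convert returns the samples per channel actually produced, which differs
    // from frame->nb_samples whenever the sample rate changes.
    auto receiveAndWrite = [&]() -> int {
        while (true) {
            int r = avcodec_receive_frame(codec_ctx, frame);
            if (r == AVERROR(EAGAIN) || r == AVERROR_EOF)
                return 0;
            if (r < 0) {
                std::cout << "Audio decoding failed" << std::endl;
                return r;
            }
            int outSamples = swr_convert(swrContext, &outBuffer, outCapacity,
                                         (const uint8_t**)frame->data, frame->nb_samples);
            if (outSamples > 0)
                fwrite(outBuffer, 1, av_samples_get_buffer_size(NULL, outChannels, outSamples, outSampleFmt, 1), audio_pcm);
        }
    };

    while (av_read_frame(m_AVFormatContext, avPacket) >= 0)
    {
        bool failed = false;
        if (avPacket->stream_index == audio_index)
        {
            ret = avcodec_send_packet(codec_ctx, avPacket);
            if (ret < 0 && ret != AVERROR(EAGAIN)) {
                char error[AV_ERROR_MAX_STRING_SIZE];
                av_strerror(ret, error, sizeof(error));
                std::cout << "Failed to send packet to decoder: " << error << std::endl;
                failed = true;
            }
            else if (receiveAndWrite() < 0) {
                failed = true;
            }
        }
        av_packet_unref(avPacket);  // every packet from av_read_frame must be unreferenced
        if (failed)
            break;
    }
    std::cout << "Finished reading audio" << std::endl;

    // Flush: a NULL packet puts the decoder in draining mode so it returns
    // the frames it is still holding
    avcodec_send_packet(codec_ctx, nullptr);
    receiveAndWrite();
    // Flush the samples still buffered inside the resampler
    int tail = swr_convert(swrContext, &outBuffer, outCapacity, nullptr, 0);
    if (tail > 0)
        fwrite(outBuffer, 1, av_samples_get_buffer_size(NULL, outChannels, tail, outSampleFmt, 1), audio_pcm);
    fclose(audio_pcm);

    // Release resources
    swr_free(&swrContext);
    av_free(outBuffer);
    av_packet_free(&avPacket);
    av_frame_free(&frame);
    avcodec_free_context(&codec_ctx);
    avformat_close_input(&m_AVFormatContext);
#endif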

#if UseWmm
    WaveOut wvOut;
    int sampleRate = 22050, bitsPerSample = 16, channels = 2;   // must match trans.pcm
    if (wvOut.open(sampleRate, bitsPerSample, channels) < 0)
    {
        std::cout << "waveout open failed.\n";
        return -1;
    }
#endif

    // Open the PCM file produced by the decoding step (read-only)
    FILE *f = fopen("trans.pcm", "rb");
    if (f == nullptr) return -1;
    char* pcm_buffer = (char*)malloc(PCM_BUFFER_SIZE);
    size_t n = 0;
    while ((n = fread(pcm_buffer, 1, PCM_BUFFER_SIZE, f)) > 0)
    {
#if UseSdl
        audio_chunk = reinterpret_cast<Uint8*>(pcm_buffer);
        audio_len = (unsigned int)n;     // number of bytes read
        audio_pos = audio_chunk;
        std::cout << "play " << audio_len << " data" << std::endl;
        while (audio_len > 0)            // wait until the callback has consumed this chunk
            SDL_Delay(1);
#endif
#if UseWmm
        if (wvOut.push(pcm_buffer, (int)n) < 0)
        {
            std::cout << "play failed.\n";
        }
#endif
    }
    fclose(f);

#if UseWmm
    if (wvOut.flush() < 0)
    {
        std::cout << "flush failed\n";
    }
    std::cout << "play ok.\n";
#endif

    free(pcm_buffer);

#if UseSdl
    SDL_Quit();
#endif
    return 0;
}
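When the resampler changes the sample rate, the number of output samples differs from frame->nb_samples. The bytes to write must therefore come from what swr_convert returns, not from the decoder's frame size.

The following is a back-of-the-envelope sketch of that size arithmetic. It assumes a 44.1 kHz source and ignores resampler delay (libswresample's swr_get_out_samples() gives an exact upper bound). outSamplesUpperBound and s16Bytes are hypothetical helpers:

```cpp
#include <cassert>
#include <cstdint>

// ceil(inSamples * outRate / inRate): samples per channel after rate conversion,
// ignoring samples buffered inside the resampler.
int64_t outSamplesUpperBound(int64_t inSamples, int64_t inRate, int64_t outRate) {
    return (inSamples * outRate + inRate - 1) / inRate;
}

// Bytes occupied by packed signed 16-bit audio.
int64_t s16Bytes(int64_t samplesPerChannel, int channels) {
    return samplesPerChannel * channels * 2;
}
```

An MPEG-1 Layer III frame carries 1152 samples per channel. Going from 44.1 kHz to 22050 Hz, only about 576 stereo s16 samples (2304 bytes) come out per frame, not the 4608 bytes that frame_size would suggest.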

=============== That wraps up audio ==== video topics come next ====== time for a break ====================
