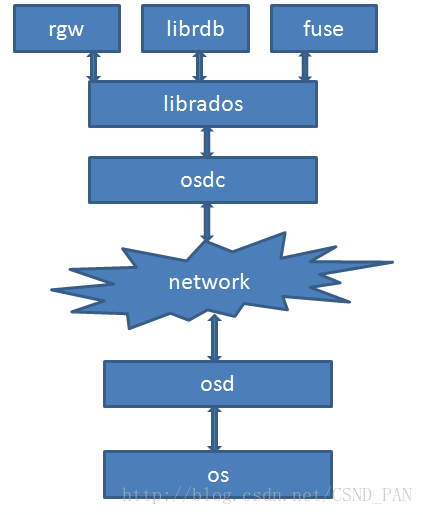

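This article describes the implementation of several modules on the Ceph client side. The client exposes interfaces through which upper layers access Ceph storage. Librados and Osdc sit at a fairly low level of the Ceph client. Librados provides basic interfaces such as creating and deleting pools and creating and deleting objects. Osdc encapsulates operations, computes object addresses, sends requests, and handles timeouts. See the figure: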

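The LIBRADOS architecture diagram suggests the rough flow of events. The book *Ceph分布式存储实战* (Ceph Distributed Storage in Practice) describes it as follows: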

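First, LIBRADOS is called with the configuration file to create a RADOS handle, and a radosclient is then created for it. The radosclient contains three main modules: finisher, Messenger and Objecter. Next, an ioctx is created for the target pool, and the radosclient can be reached from that ioctx. OSDC is then called to build the corresponding OSD request, send it to the OSD, and handle the response. This gives a high-level picture of the roles that librados and osdc play in Ceph as a whole.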
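The flow above can be sketched as a toy object model. This is not Ceph code. `ToyRadosClient`, `ToyIoCtx`, `ToyObjecter` and `create_ioctx` are hypothetical stand-ins, and they model only the ownership and call structure:

- A client is connected first.
- An I/O context is then bound to one pool by name.
- Writes on that context are handed to the client's objecter.

```cpp
#include <cassert>
#include <cerrno>
#include <cstdint>
#include <map>
#include <memory>
#include <string>
#include <utility>
#include <vector>

// Hypothetical, heavily simplified stand-ins for RadosClient, IoCtxImpl and Objecter.
struct ToyOp {
  std::string oid;
  int64_t pool_id;
  std::string data;
};

class ToyObjecter {
 public:
  // Stands in for Objecter::op_submit(); here it only records the op.
  void op_submit(const ToyOp& op) { submitted.push_back(op); }
  std::vector<ToyOp> submitted;
};

class ToyRadosClient {
 public:
  explicit ToyRadosClient(std::map<std::string, int64_t> pools)
      : pools_(std::move(pools)) {}
  int connect() {  // the real connect() talks to monitors, starts the messenger, etc.
    connected_ = true;
    return 0;
  }
  bool connected() const { return connected_; }
  int64_t lookup_pool(const std::string& name) const {
    auto it = pools_.find(name);
    return it == pools_.end() ? -ENOENT : it->second;
  }
  ToyObjecter objecter;  // the client owns the objecter

 private:
  std::map<std::string, int64_t> pools_;
  bool connected_ = false;
};

// An I/O context is bound to one pool and keeps a pointer back to its client.
class ToyIoCtx {
 public:
  ToyIoCtx(ToyRadosClient* client, int64_t pool_id)
      : client_(client), pool_id_(pool_id) {}
  int write(const std::string& oid, const std::string& data) {
    client_->objecter.op_submit(ToyOp{oid, pool_id_, data});
    return 0;
  }
  int64_t pool_id() const { return pool_id_; }

 private:
  ToyRadosClient* client_;
  int64_t pool_id_;
};

// Loosely mirrors create_ioctx(name, &io): resolve the pool name, then bind a context.
int create_ioctx(ToyRadosClient& client, const std::string& pool,
                 std::unique_ptr<ToyIoCtx>* io) {
  if (!client.connected()) return -ENOTCONN;
  int64_t id = client.lookup_pool(pool);
  if (id < 0) return static_cast<int>(id);
  *io = std::make_unique<ToyIoCtx>(&client, id);
  return 0;
}
```

The pointer from the I/O context back to the client is what the book means by "the radosclient can be reached from the ioctx".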

Librados

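This module has two parts: RadosClient and IoCtxImpl.

- RadosClient sits at the top and is the core management class of librados. It handles management both at the level of the whole RADOS system and at the pool level.
- IoCtxImpl manages a single pool, for example controlling read and write operations on its objects.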

RadosClient (Librados module)

IoCtxImpl (Librados module)

Objecter (Osdc module)

The RadosClient class

```cpp
class librados::RadosClient : public Dispatcher,
                              public md_config_obs_t
{
  friend neorados::detail::RadosClient;
public:
  using Dispatcher::cct;
private:
  std::unique_ptr<CephContext,
                  std::function<void(CephContext*)>> cct_deleter;

public:
  const ConfigProxy& conf{cct->_conf};
  ceph::async::io_context_pool poolctx;
private:
  enum {
    DISCONNECTED,
    CONNECTING,
    CONNECTED,
  } state{DISCONNECTED};

  MonClient monclient{cct, poolctx};
  MgrClient mgrclient{cct, nullptr, &monclient.monmap};
  Messenger *messenger{nullptr};  // network messaging interface

  uint64_t instance_id{0};

  bool _dispatch(Message *m);  // message dispatch; the ms_* methods below override Dispatcher
  bool ms_dispatch(Message *m) override;

  void ms_handle_connect(Connection *con) override;
  bool ms_handle_reset(Connection *con) override;
  void ms_handle_remote_reset(Connection *con) override;
  bool ms_handle_refused(Connection *con) override;

  Objecter *objecter{nullptr};  // from the Osdc module: sends the encapsulated op messages

  ceph::mutex lock = ceph::make_mutex("librados::RadosClient::lock");
  ceph::condition_variable cond;
  int refcnt{1};

  version_t log_last_version{0};
  rados_log_callback_t log_cb{nullptr};
  rados_log_callback2_t log_cb2{nullptr};
  void *log_cb_arg{nullptr};
  std::string log_watch;

  bool service_daemon = false;
  std::string daemon_name, service_name;
  std::map<std::string,std::string> daemon_metadata;
  ceph::timespan rados_mon_op_timeout{};

  int wait_for_osdmap();

public:
  boost::asio::io_context::strand finish_strand{poolctx.get_io_context()};

  explicit RadosClient(CephContext *cct);
  ~RadosClient() override;
  int ping_monitor(std::string mon_id, std::string *result);
  int connect();
  void shutdown();

  int watch_flush();
  int async_watch_flush(AioCompletionImpl *c);

  uint64_t get_instance_id();

  int get_min_compatible_osd(int8_t* require_osd_release);
  int get_min_compatible_client(int8_t* min_compat_client,
                                int8_t* require_min_compat_client);

  int wait_for_latest_osdmap();

  int create_ioctx(const char *name, IoCtxImpl **io);
  int create_ioctx(int64_t, IoCtxImpl **io);

  int get_fsid(std::string *s);
  int64_t lookup_pool(const char *name);
  bool pool_requires_alignment(int64_t pool_id);
  int pool_requires_alignment2(int64_t pool_id, bool *requires);
  uint64_t pool_required_alignment(int64_t pool_id);
  int pool_required_alignment2(int64_t pool_id, uint64_t *alignment);
  int pool_get_name(uint64_t pool_id, std::string *name,
                    bool wait_latest_map = false);

  int pool_list(std::list<std::pair<int64_t, std::string> >& ls);
  int get_pool_stats(std::list<std::string>& ls, std::map<std::string,::pool_stat_t> *result,
                     bool *per_pool);
  int get_fs_stats(ceph_statfs& result);
  bool get_pool_is_selfmanaged_snaps_mode(const std::string& pool);

  /*
  -1 was set as the default value and monitor will pickup the right crush rule with below order:
    a) osd pool default crush replicated rule
    b) the first rule
    c) error out if no value find
  */
  int pool_create(std::string& name, int16_t crush_rule=-1);
  int pool_create_async(std::string& name, PoolAsyncCompletionImpl *c,
                        int16_t crush_rule=-1);
  int pool_get_base_tier(int64_t pool_id, int64_t* base_tier);
  int pool_delete(const char *name);

  int pool_delete_async(const char *name, PoolAsyncCompletionImpl *c);

  int blocklist_add(const std::string& client_address, uint32_t expire_seconds);

  int mon_command(const std::vector<std::string>& cmd, const bufferlist &inbl,
                  bufferlist *outbl, std::string *outs);
  void mon_command_async(const std::vector<std::string>& cmd, const bufferlist &inbl,
                         bufferlist *outbl, std::string *outs, Context *on_finish);
  int mon_command(int rank,
                  const std::vector<std::string>& cmd, const bufferlist &inbl,
                  bufferlist *outbl, std::string *outs);
  int mon_command(std::string name,
                  const std::vector<std::string>& cmd, const bufferlist &inbl,
                  bufferlist *outbl, std::string *outs);
  int mgr_command(const std::vector<std::string>& cmd, const bufferlist &inbl,
                  bufferlist *outbl, std::string *outs);
  int mgr_command(
    const std::string& name,
    const std::vector<std::string>& cmd, const bufferlist &inbl,
    bufferlist *outbl, std::string *outs);
  int osd_command(int osd, std::vector<std::string>& cmd, const bufferlist& inbl,
                  bufferlist *poutbl, std::string *prs);
  int pg_command(pg_t pgid, std::vector<std::string>& cmd, const bufferlist& inbl,
                 bufferlist *poutbl, std::string *prs);

  void handle_log(MLog *m);
  int monitor_log(const std::string& level, rados_log_callback_t cb,
                  rados_log_callback2_t cb2, void *arg);

  void get();
  bool put();
  void blocklist_self(bool set);

  std::string get_addrs() const;

  int service_daemon_register(
    const std::string& service,  ///< service name (e.g., 'rgw')
    const std::string& name,     ///< daemon name (e.g., 'gwfoo')
    const std::map<std::string,std::string>& metadata); ///< static metadata about daemon
  int service_daemon_update_status(
    std::map<std::string,std::string>&& status);

  mon_feature_t get_required_monitor_features() const;

  int get_inconsistent_pgs(int64_t pool_id, std::vector<std::string>* pgs);
  const char** get_tracked_conf_keys() const override;
  void handle_conf_change(const ConfigProxy& conf,
                          const std::set <std::string> &changed) override;
};
```
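Two small pieces of bookkeeping in the declaration above are easy to miss. One is the `DISCONNECTED`/`CONNECTING`/`CONNECTED` state. The other is `refcnt{1}`, which backs `get()`/`put()`.

The sketch below models only those two pieces, and it is an assumption about their behaviour rather than a copy of `RadosClient.cc`:

- `connect()` refuses to run twice.
- `put()` reports when the last reference is dropped.

`ToyClientState` is a hypothetical name. The real `connect()` also sets up MonClient, Messenger and Objecter, which is omitted here.

```cpp
#include <cassert>
#include <cerrno>
#include <mutex>

// Hypothetical model of RadosClient's connection state and reference count.
class ToyClientState {
 public:
  enum State { DISCONNECTED, CONNECTING, CONNECTED };

  int connect() {
    std::lock_guard<std::mutex> l(lock_);
    if (state_ == CONNECTING) return -EINPROGRESS;  // another connect is running
    if (state_ == CONNECTED) return -EISCONN;       // already connected
    state_ = CONNECTING;
    // ... monitor authentication, messenger start, waiting for an osdmap ...
    state_ = CONNECTED;
    return 0;
  }
  void shutdown() {
    std::lock_guard<std::mutex> l(lock_);
    state_ = DISCONNECTED;
  }
  void get() {
    std::lock_guard<std::mutex> l(lock_);
    ++refcnt_;
  }
  // Returns true when the last reference is dropped, so the caller may tear down.
  bool put() {
    std::lock_guard<std::mutex> l(lock_);
    assert(refcnt_ > 0);
    return --refcnt_ == 0;
  }
  State state() const { return state_; }  // unlocked read: single-threaded demo only

 private:
  std::mutex lock_;
  State state_ = DISCONNECTED;
  int refcnt_ = 1;  // starts at 1, like `int refcnt{1};`
};
```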

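The IoCtxImpl class

This class holds the context of a pool; each IoCtxImpl object is bound to one pool. All I/O-related APIs of librados are declared in librados::IoCtx, and the actual implementation lives in IoCtxImpl. It processes a request as follows:

1) Wrap the request in an ObjectOperation (a class from osdc).

2) Add the relevant pool information and wrap it into an Objecter::Op object.

3) Call objecter->op_submit to send it to the corresponding OSD.

4) When the operation completes, invoke the corresponding callback.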
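The four steps above can be sketched as a toy pipeline. All types here are hypothetical stand-ins, not Ceph's:

- `ToyObjectOperation` plays `::ObjectOperation`.
- `ToyPipelineOp` plays `Objecter::Op`.
- `ToyPipelineObjecter` plays `Objecter`.

Completion is simulated by calling `complete_all()` rather than by an OSD reply.

```cpp
#include <cassert>
#include <cstdint>
#include <functional>
#include <string>
#include <utility>
#include <vector>

struct ToySubOp {
  std::string name;
  uint64_t off;
  std::string data;
};

// Step 1: the request as a list of sub-operations.
struct ToyObjectOperation {
  std::vector<ToySubOp> ops;
  void write(uint64_t off, std::string data) {
    ops.push_back({"write", off, std::move(data)});
  }
};

// Step 2: sub-ops plus object name and pool; step 4's callback travels with it.
struct ToyPipelineOp {
  std::string oid;
  int64_t pool;
  std::vector<ToySubOp> ops;
  std::function<void(int)> oncommit;
};

struct ToyPipelineObjecter {
  std::vector<ToyPipelineOp> in_flight;
  // Step 3: submission (the real op_submit computes the target OSD and sends).
  void op_submit(ToyPipelineOp op) { in_flight.push_back(std::move(op)); }
  // Simulates replies arriving: step 4 runs each op's callback with result r.
  void complete_all(int r) {
    for (auto& op : in_flight) op.oncommit(r);
    in_flight.clear();
  }
};

struct ToyIoCtxImpl {
  int64_t pool_id;  // the pool this context is bound to
  ToyPipelineObjecter* objecter;
  void aio_write(const std::string& oid, std::string data, uint64_t off,
                 std::function<void(int)> cb) {
    ToyObjectOperation op;
    op.write(off, std::move(data));
    objecter->op_submit(ToyPipelineOp{oid, pool_id, std::move(op.ops), std::move(cb)});
  }
};
```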

OSDC

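This module sits at a fairly low level of the client. It encapsulates operation data, computes object addresses, sends requests, and handles timeouts.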

Client read/write path analysis (write path)

librados::IoCtxImpl::write

librados::IoCtxImpl::operate

Objecter::prepare_mutate_op

Objecter::op_submit

Objecter::_op_submit_with_budget

Objecter::_op_submit

Objecter::_calc_target

Objecter::_get_session

Objecter::_send_op

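Notes:

prepare_mutate_op:

Calls objecter->prepare_mutate_op to wrap the ObjectOperation into an Op.

op_submit:

Sends the encapsulated Op over the network. op_submit calls _op_submit_with_budget, which handles Throttle-related flow control (the op budget) and the timeout. That function in turn calls _op_submit, which does the key work of computing the target address and sending.

_calc_target:

Called to compute the target OSD.

_get_session:

Called to get the session (connection) to the target OSD. If it returns -EAGAIN, the caller upgrades to the write lock and tries again.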

write

Encapsulating the write operation

```cpp
int librados::IoCtxImpl::write(const object_t& oid, bufferlist& bl,
                               size_t len, uint64_t off)
{
  if (len > UINT_MAX/2)
    return -E2BIG;
  ::ObjectOperation op;
  prepare_assert_ops(&op);
  bufferlist mybl;
  mybl.substr_of(bl, 0, len);
  op.write(off, mybl);
  return operate(oid, &op, NULL);  // hand the operation to operate()
}
```

operate

```cpp
int librados::IoCtxImpl::operate(const object_t& oid, ::ObjectOperation *o,
                                 ceph::real_time *pmtime, int flags)
{
  ceph::real_time ut = (pmtime ? *pmtime :
                        ceph::real_clock::now());

  /* can't write to a snapshot */
  if (snap_seq != CEPH_NOSNAP)
    return -EROFS;

  if (!o->size())
    return 0;

  ceph::mutex mylock = ceph::make_mutex("IoCtxImpl::operate::mylock");
  ceph::condition_variable cond;
  bool done;
  int r;
  version_t ver;

  Context *oncommit = new C_SafeCond(mylock, cond, &done, &r);

  int op = o->ops[0].op.op;
  ldout(client->cct, 10) << ceph_osd_op_name(op) << " oid=" << oid
                         << " nspace=" << oloc.nspace << dendl;
  Objecter::Op *objecter_op = objecter->prepare_mutate_op(
    oid, oloc,
    *o, snapc, ut,
    flags | extra_op_flags,
    oncommit, &ver);
  objecter->op_submit(objecter_op);

  {
    std::unique_lock l{mylock};
    cond.wait(l, [&done] { return done;});
  }
  ldout(client->cct, 10) << "Objecter returned from "
                         << ceph_osd_op_name(op) << " r=" << r << dendl;

  set_sync_op_version(ver);

  return r;
}
```
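`operate()` is synchronous on top of an asynchronous submit:

- The completion (`C_SafeCond` in Ceph) sets `done` and `r` under `mylock` and notifies `cond`.
- The caller blocks in `cond.wait(l, pred)` until that happens.

The standard-library sketch below shows the same pattern. `operate_sync` and its `submit_async` parameter are hypothetical; `submit_async` stands in for `objecter->op_submit()`.

```cpp
#include <cassert>
#include <condition_variable>
#include <functional>
#include <mutex>
#include <thread>

// Blocks until the completion passed to submit_async has been called, then
// returns the result it delivered (mirrors the done/r/cond trio in operate()).
int operate_sync(
    const std::function<void(std::function<void(int)>)>& submit_async) {
  std::mutex mylock;
  std::condition_variable cond;
  bool done = false;
  int r = 0;
  // Plays the role of `new C_SafeCond(mylock, cond, &done, &r)`.
  auto oncommit = [&](int result) {
    std::lock_guard<std::mutex> l(mylock);
    r = result;
    done = true;
    cond.notify_all();
  };
  submit_async(oncommit);
  std::unique_lock<std::mutex> l(mylock);
  cond.wait(l, [&done] { return done; });  // predicate guards against spurious wakeups
  return r;
}
```

The predicate overload of `wait` also covers the case where the completion fires before the caller starts waiting.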

prepare_mutate_op

objecter->prepare_mutate_op is called to wrap the ObjectOperation into an Op.

```cpp
Op *prepare_mutate_op(
  const object_t& oid, const object_locator_t& oloc,
  ObjectOperation& op, const SnapContext& snapc,
  ceph::real_time mtime, int flags,
  Context *oncommit, version_t *objver = NULL,
  osd_reqid_t reqid = osd_reqid_t(),
  ZTracer::Trace *parent_trace = nullptr) {
  Op *o = new Op(oid, oloc, std::move(op.ops), flags | global_op_flags |
                 CEPH_OSD_FLAG_WRITE, oncommit, objver,
                 nullptr, parent_trace);
  o->priority = op.priority;
  o->mtime = mtime;
  o->snapc = snapc;
  o->out_rval.swap(op.out_rval);
  o->out_bl.swap(op.out_bl);
  o->out_handler.swap(op.out_handler);
  o->out_ec.swap(op.out_ec);
  o->reqid = reqid;
  op.clear();
  return o;
}
```

op_submit

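This function sends the encapsulated Op over the network. op_submit calls _op_submit_with_budget, which handles Throttle-related flow control (the op budget) and the timeout. That function in turn calls _op_submit, which does the key work of computing the target address and sending.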

```cpp
void Objecter::op_submit(Op *op, ceph_tid_t *ptid, int *ctx_budget)
{
  shunique_lock rl(rwlock, ceph::acquire_shared);
  ceph_tid_t tid = 0;
  if (!ptid)
    ptid = &tid;
  op->trace.event("op submit");
  _op_submit_with_budget(op, rl, ptid, ctx_budget);
}
```

```cpp
void Objecter::_op_submit_with_budget(Op *op,
                                      shunique_lock<ceph::shared_mutex>& sul,
                                      ceph_tid_t *ptid,
                                      int *ctx_budget)
{
  ceph_assert(initialized);

  ceph_assert(op->ops.size() == op->out_bl.size());
  ceph_assert(op->ops.size() == op->out_rval.size());
  ceph_assert(op->ops.size() == op->out_handler.size());

  // throttle.  before we look at any state, because
  // _take_op_budget() may drop our lock while it blocks.
  if (!op->ctx_budgeted || (ctx_budget && (*ctx_budget == -1))) {
    int op_budget = _take_op_budget(op, sul);
    // take and pass out the budget for the first OP
    // in the context session
    if (ctx_budget && (*ctx_budget == -1)) {
      *ctx_budget = op_budget;
    }
  }

  if (osd_timeout > timespan(0)) {
    if (op->tid == 0)
      op->tid = ++last_tid;
    auto tid = op->tid;
    op->ontimeout = timer.add_event(osd_timeout,
                                    [this, tid]() {
                                      op_cancel(tid, -ETIMEDOUT); });
  }

  _op_submit(op, sul, ptid);
}
```
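`_take_op_budget()` charges each op against in-flight limits before any state is examined, and it may block. The sketch below models that idea as a throttle:

- Each op consumes one op slot plus its bytes.
- `take()` waits until the op fits.
- `put()` releases the budget when the op completes or is cancelled (for example with `-ETIMEDOUT` by the timer event above).

`ToyOpThrottle` and its limits are hypothetical. Ceph's own Throttle class and `_take_op_budget()` differ in detail.

```cpp
#include <cassert>
#include <condition_variable>
#include <cstdint>
#include <mutex>

class ToyOpThrottle {
 public:
  ToyOpThrottle(uint64_t max_ops, uint64_t max_bytes)
      : max_ops_(max_ops), max_bytes_(max_bytes) {}

  // Blocks until the op fits under both limits, then charges it.
  uint64_t take(uint64_t bytes) {
    std::unique_lock<std::mutex> l(lock_);
    cond_.wait(l, [&] { return fits(bytes); });
    ops_ += 1;
    bytes_ += bytes;
    return bytes;
  }
  // Non-blocking variant; returns false if the op would exceed a limit.
  bool try_take(uint64_t bytes) {
    std::lock_guard<std::mutex> l(lock_);
    if (!fits(bytes)) return false;
    ops_ += 1;
    bytes_ += bytes;
    return true;
  }
  // Releases an op's budget on completion or cancellation and wakes waiters.
  void put(uint64_t bytes) {
    {
      std::lock_guard<std::mutex> l(lock_);
      ops_ -= 1;
      bytes_ -= bytes;
    }
    cond_.notify_all();
  }
  uint64_t ops_in_flight() {
    std::lock_guard<std::mutex> l(lock_);
    return ops_;
  }

 private:
  bool fits(uint64_t bytes) const {
    // Always admit an op when nothing is in flight, so an oversized op cannot deadlock.
    return ops_ == 0 || (ops_ + 1 <= max_ops_ && bytes_ + bytes <= max_bytes_);
  }
  std::mutex lock_;
  std::condition_variable cond_;
  const uint64_t max_ops_, max_bytes_;
  uint64_t ops_ = 0, bytes_ = 0;
};
```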

```cpp
void Objecter::_op_submit(Op *op, shunique_lock<ceph::shared_mutex>& sul, ceph_tid_t *ptid)
{
  // rwlock is locked

  ldout(cct, 10) << __func__ << " op " << op << dendl;

  // pick target
  ceph_assert(op->session == NULL);
  OSDSession *s = NULL;

  bool check_for_latest_map = false;
  int r = _calc_target(&op->target, nullptr);
  switch(r) {
  case RECALC_OP_TARGET_POOL_DNE:
    check_for_latest_map = true;
    break;
  case RECALC_OP_TARGET_POOL_EIO:
    if (op->has_completion()) {
      op->complete(osdc_errc::pool_eio, -EIO);
    }
    return;
  }

  // Try to get a session, including a retry if we need to take write lock
  r = _get_session(op->target.osd, &s, sul);
  if (r == -EAGAIN ||
      (check_for_latest_map && sul.owns_lock_shared()) ||
      cct->_conf->objecter_debug_inject_relock_delay) {
    epoch_t orig_epoch = osdmap->get_epoch();
    sul.unlock();
    if (cct->_conf->objecter_debug_inject_relock_delay) {
      sleep(1);
    }
    sul.lock();
    if (orig_epoch != osdmap->get_epoch()) {
      // map changed; recalculate mapping
      ldout(cct, 10) << __func__ << " relock raced with osdmap, recalc target"
                     << dendl;
      check_for_latest_map = _calc_target(&op->target, nullptr)
        == RECALC_OP_TARGET_POOL_DNE;
      if (s) {
        put_session(s);
        s = NULL;
        r = -EAGAIN;
      }
    }
  }
  if (r == -EAGAIN) {
    ceph_assert(s == NULL);
    r = _get_session(op->target.osd, &s, sul);
  }
  ceph_assert(r == 0);
  ceph_assert(s);  // may be homeless

  _send_op_account(op);

  // send?

  ceph_assert(op->target.flags & (CEPH_OSD_FLAG_READ|CEPH_OSD_FLAG_WRITE));

  bool need_send = false;
  if (op->target.paused) {
    ldout(cct, 10) << " tid " << op->tid << " op " << op << " is paused"
                   << dendl;
    _maybe_request_map();
  } else if (!s->is_homeless()) {
    need_send = true;
  } else {
    _maybe_request_map();
  }

  unique_lock sl(s->lock);
  if (op->tid == 0)
    op->tid = ++last_tid;

  ldout(cct, 10) << "_op_submit oid " << op->target.base_oid
                 << " '" << op->target.base_oloc << "' '"
                 << op->target.target_oloc << "' " << op->ops << " tid "
                 << op->tid << " osd." << (!s->is_homeless() ? s->osd : -1)
                 << dendl;

  _session_op_assign(s, op);

  if (need_send) {
    _send_op(op);
  }

  // Last chance to touch Op here, after giving up session lock it can
  // be freed at any time by response handler.
  ceph_tid_t tid = op->tid;
  if (check_for_latest_map) {
    _send_op_map_check(op);
  }
  if (ptid)
    *ptid = tid;
  op = NULL;

  sl.unlock();
  put_session(s);

  ldout(cct, 5) << num_in_flight << " in flight" << dendl;
}
```

```cpp
void Objecter::_send_op(Op *op)
{
  // rwlock is locked
  // op->session->lock is locked

  // backoff?
  auto p = op->session->backoffs.find(op->target.actual_pgid);
  if (p != op->session->backoffs.end()) {
    hobject_t hoid = op->target.get_hobj();
    auto q = p->second.lower_bound(hoid);
    if (q != p->second.begin()) {
      --q;
      if (hoid >= q->second.end) {
        ++q;
      }
    }
    if (q != p->second.end()) {
      ldout(cct, 20) << __func__ << " ? " << q->first << " [" << q->second.begin
                     << "," << q->second.end << ")" << dendl;
      int r = cmp(hoid, q->second.begin);
      if (r == 0 || (r > 0 && hoid < q->second.end)) {
        ldout(cct, 10) << __func__ << " backoff " << op->target.actual_pgid
                       << " id " << q->second.id << " on " << hoid
                       << ", queuing " << op << " tid " << op->tid << dendl;
        return;
      }
    }
  }

  ceph_assert(op->tid > 0);
  MOSDOp *m = _prepare_osd_op(op);

  if (op->target.actual_pgid != m->get_spg()) {
    ldout(cct, 10) << __func__ << " " << op->tid << " pgid change from "
                   << m->get_spg() << " to " << op->target.actual_pgid
                   << ", updating and reencoding" << dendl;
    m->set_spg(op->target.actual_pgid);
    m->clear_payload();  // reencode
  }

  ldout(cct, 15) << "_send_op " << op->tid << " to "
                 << op->target.actual_pgid << " on osd." << op->session->osd
                 << dendl;

  ConnectionRef con = op->session->con;
  ceph_assert(con);

#if 0
  // preallocated rx ceph::buffer?
  if (op->con) {
    ldout(cct, 20) << " revoking rx ceph::buffer for " << op->tid << " on "
                   << op->con << dendl;
    op->con->revoke_rx_buffer(op->tid);
  }
  if (op->outbl &&
      op->ontimeout == 0 &&  // only post rx_buffer if no timeout; see #9582
      op->outbl->length()) {
    op->outbl->invalidate_crc();  // messenger writes through c_str()
    ldout(cct, 20) << " posting rx ceph::buffer for " << op->tid << " on " << con
                   << dendl;
    op->con = con;
    op->con->post_rx_buffer(op->tid, *op->outbl);
  }
#endif

  op->incarnation = op->session->incarnation;

  if (op->trace.valid()) {
    m->trace.init("op msg", nullptr, &op->trace);
  }
  op->session->con->send_message(m);  // finally send the message
}
```
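The backoff check at the top of `_send_op()` asks whether the op's object falls inside any half-open range `[begin, end)` that the OSD has asked the client to back off from. If so, the op is queued instead of sent. The sketch below reproduces the same `lower_bound` / step-back logic.

Plain `int` keys stand in for `hobject_t`, and a single map stands in for `OSDSession::backoffs[pgid]`; both are assumptions for illustration. `ToyBackoff` and `find_blocking_backoff` are hypothetical names.

```cpp
#include <cassert>
#include <cstdint>
#include <map>

struct ToyBackoff {
  int begin;    // inclusive
  int end;      // exclusive
  uint64_t id;
};

// Returns the id of the backoff covering hoid, or 0 if the op may be sent.
uint64_t find_blocking_backoff(const std::map<int, ToyBackoff>& backoffs, int hoid) {
  auto q = backoffs.lower_bound(hoid);   // first range whose begin >= hoid
  if (q != backoffs.begin()) {
    --q;                                 // the range starting just before hoid...
    if (hoid >= q->second.end) ++q;      // ...unless hoid lies past its end
  }
  if (q != backoffs.end()) {
    if (hoid == q->second.begin ||
        (hoid > q->second.begin && hoid < q->second.end))
      return q->second.id;               // _send_op() would queue the op here
  }
  return 0;
}
```

Stepping back one entry is needed because `lower_bound` finds the first range that starts at or after the object. The range that actually contains it may start earlier.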

_calc_target

osdmap->object_locator_to_pg obtains the PG that holds the target object.

osdmap->pg_to_up_acting_osds uses the CRUSH algorithm to obtain the list of OSDs for that PG.
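Between those two steps, `_calc_target()` folds the raw placement seed onto the pool's current PG count with `ceph_stable_mod(pgid.ps(), pg_num, pg_num_mask)`. The function is reproduced below. `pg_num_mask` is the next power of two at or above `pg_num`, minus 1.

The other two helpers are hypothetical:

- `pg_num_mask_for` is a stand-in for how the pool derives that mask.
- `toy_object_to_ps` is a toy object-to-PG step. Ceph hashes object names with rjenkins by default; `std::hash` is used here only as a placeholder.

```cpp
#include <cassert>
#include <cstdint>
#include <functional>
#include <string>

// Folds seed x onto [0, b): seeds below b keep their value; the rest fall back
// onto the lower half of the mask, so growing pg_num only splits PGs.
static inline int ceph_stable_mod(int x, int b, int bmask) {
  if ((x & bmask) < b)
    return x & bmask;
  else
    return x & (bmask >> 1);
}

// Hypothetical helper: smallest (power of two - 1) that covers pg_num - 1.
unsigned pg_num_mask_for(unsigned pg_num) {
  unsigned mask = 1;
  while (mask < pg_num) mask <<= 1;
  return mask - 1;
}

// Toy object -> PG seed; std::hash stands in for Ceph's object-name hash.
unsigned toy_object_to_ps(const std::string& oid, unsigned pg_num) {
  unsigned seed = static_cast<unsigned>(std::hash<std::string>{}(oid));
  return static_cast<unsigned>(ceph_stable_mod(static_cast<int>(seed & 0x7fffffff),
                                               static_cast<int>(pg_num),
                                               static_cast<int>(pg_num_mask_for(pg_num))));
}
```

For example, with `pg_num = 12` the mask is 15. Seed 10 stays in PG 10. Seed 13 maps to `13 & 7 = 5`, because PG 13 does not exist yet.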

```cpp
int Objecter::_calc_target(op_target_t *t, Connection *con, bool any_change)
{
  // rwlock is locked
  bool is_read = t->flags & CEPH_OSD_FLAG_READ;
  bool is_write = t->flags & CEPH_OSD_FLAG_WRITE;
  t->epoch = osdmap->get_epoch();
  ldout(cct,20) << __func__ << " epoch " << t->epoch
                << " base " << t->base_oid << " " << t->base_oloc
                << " precalc_pgid " << (int)t->precalc_pgid
                << " pgid " << t->base_pgid
                << (is_read ? " is_read" : "")
                << (is_write ? " is_write" : "")
                << dendl;

  const pg_pool_t *pi = osdmap->get_pg_pool(t->base_oloc.pool);
  if (!pi) {
    t->osd = -1;
    return RECALC_OP_TARGET_POOL_DNE;
  }

  if (pi->has_flag(pg_pool_t::FLAG_EIO)) {
    return RECALC_OP_TARGET_POOL_EIO;
  }

  ldout(cct,30) << __func__ << " base pi " << pi
                << " pg_num " << pi->get_pg_num() << dendl;

  bool force_resend = false;
  if (osdmap->get_epoch() == pi->last_force_op_resend) {
    if (t->last_force_resend < pi->last_force_op_resend) {
      t->last_force_resend = pi->last_force_op_resend;
      force_resend = true;
    } else if (t->last_force_resend == 0) {
      force_resend = true;
    }
  }

  // apply tiering
  t->target_oid = t->base_oid;
  t->target_oloc = t->base_oloc;
  if ((t->flags & CEPH_OSD_FLAG_IGNORE_OVERLAY) == 0) {
    if (is_read && pi->has_read_tier())
      t->target_oloc.pool = pi->read_tier;
    if (is_write && pi->has_write_tier())
      t->target_oloc.pool = pi->write_tier;
    pi = osdmap->get_pg_pool(t->target_oloc.pool);
    if (!pi) {
      t->osd = -1;
      return RECALC_OP_TARGET_POOL_DNE;
    }
  }

  pg_t pgid;
  if (t->precalc_pgid) {
    ceph_assert(t->flags & CEPH_OSD_FLAG_IGNORE_OVERLAY);
    ceph_assert(t->base_oid.name.empty());  // make sure this is a pg op
    ceph_assert(t->base_oloc.pool == (int64_t)t->base_pgid.pool());
    pgid = t->base_pgid;
  } else {
    int ret = osdmap->object_locator_to_pg(t->target_oid, t->target_oloc,
                                           pgid);
    if (ret == -ENOENT) {
      t->osd = -1;
      return RECALC_OP_TARGET_POOL_DNE;
    }
  }
  ldout(cct,20) << __func__ << " target " << t->target_oid << " "
                << t->target_oloc << " -> pgid " << pgid << dendl;
  ldout(cct,30) << __func__ << " target pi " << pi
                << " pg_num " << pi->get_pg_num() << dendl;
  t->pool_ever_existed = true;

  int size = pi->size;
  int min_size = pi->min_size;
  unsigned pg_num = pi->get_pg_num();
  unsigned pg_num_mask = pi->get_pg_num_mask();
  unsigned pg_num_pending = pi->get_pg_num_pending();
  int up_primary, acting_primary;
  vector<int> up, acting;
  ps_t actual_ps = ceph_stable_mod(pgid.ps(), pg_num, pg_num_mask);
  pg_t actual_pgid(actual_ps, pgid.pool());
  if (!lookup_pg_mapping(actual_pgid, osdmap->get_epoch(), &up, &up_primary,
                         &acting, &acting_primary)) {
    osdmap->pg_to_up_acting_osds(actual_pgid, &up, &up_primary,
                                 &acting, &acting_primary);
    pg_mapping_t pg_mapping(osdmap->get_epoch(),
                            up, up_primary, acting, acting_primary);
    update_pg_mapping(actual_pgid, std::move(pg_mapping));
  }
  bool sort_bitwise = osdmap->test_flag(CEPH_OSDMAP_SORTBITWISE);
  bool recovery_deletes = osdmap->test_flag(CEPH_OSDMAP_RECOVERY_DELETES);
  unsigned prev_seed = ceph_stable_mod(pgid.ps(), t->pg_num, t->pg_num_mask);
  pg_t prev_pgid(prev_seed, pgid.pool());
  if (any_change && PastIntervals::is_new_interval(
        t->acting_primary, acting_primary,
        t->acting, acting,
        t->up_primary, up_primary,
        t->up, up,
        t->size, size,
        t->min_size, min_size,
        t->pg_num, pg_num,
        t->pg_num_pending, pg_num_pending,
        t->sort_bitwise, sort_bitwise,
        t->recovery_deletes, recovery_deletes,
        t->peering_crush_bucket_count, pi->peering_crush_bucket_count,
        t->peering_crush_bucket_target, pi->peering_crush_bucket_target,
        t->peering_crush_bucket_barrier, pi->peering_crush_bucket_barrier,
        t->peering_crush_mandatory_member, pi->peering_crush_mandatory_member,
        prev_pgid)) {
    force_resend = true;
  }

  bool unpaused = false;
  bool should_be_paused = target_should_be_paused(t);
  if (t->paused && !should_be_paused) {
    unpaused = true;
  }
  if (t->paused != should_be_paused) {
    ldout(cct, 10) << __func__ << " paused " << t->paused
                   << " -> " << should_be_paused << dendl;
    t->paused = should_be_paused;
  }

  bool legacy_change =
    t->pgid != pgid ||
      is_pg_changed(
        t->acting_primary, t->acting, acting_primary, acting,
        t->used_replica || any_change);
  bool split_or_merge = false;
  if (t->pg_num) {
    split_or_merge =
      prev_pgid.is_split(t->pg_num, pg_num, nullptr) ||
      prev_pgid.is_merge_source(t->pg_num, pg_num, nullptr) ||
      prev_pgid.is_merge_target(t->pg_num, pg_num);
  }

  if (legacy_change || split_or_merge || force_resend) {
    t->pgid = pgid;
    t->acting = std::move(acting);
    t->acting_primary = acting_primary;
    t->up_primary = up_primary;
    t->up = std::move(up);
    t->size = size;
    t->min_size = min_size;
    t->pg_num = pg_num;
    t->pg_num_mask = pg_num_mask;
    t->pg_num_pending = pg_num_pending;
    spg_t spgid(actual_pgid);
    if (pi->is_erasure()) {
      for (uint8_t i = 0; i < t->acting.size(); ++i) {
        if (t->acting[i] == acting_primary) {
          spgid.reset_shard(shard_id_t(i));
          break;
        }
      }
    }
    t->actual_pgid = spgid;
    t->sort_bitwise = sort_bitwise;
    t->recovery_deletes = recovery_deletes;
    t->peering_crush_bucket_count = pi->peering_crush_bucket_count;
    t->peering_crush_bucket_target = pi->peering_crush_bucket_target;
    t->peering_crush_bucket_barrier = pi->peering_crush_bucket_barrier;
    t->peering_crush_mandatory_member = pi->peering_crush_mandatory_member;
    ldout(cct, 10) << __func__ << " "
                   << " raw pgid " << pgid << " -> actual " << t->actual_pgid
                   << " acting " << t->acting
                   << " primary " << acting_primary << dendl;
    t->used_replica = false;
    if ((t->flags & (CEPH_OSD_FLAG_BALANCE_READS |
                     CEPH_OSD_FLAG_LOCALIZE_READS)) &&
        !is_write && pi->is_replicated() && t->acting.size() > 1) {
      int osd;
      ceph_assert(is_read && t->acting[0] == acting_primary);
      if (t->flags & CEPH_OSD_FLAG_BALANCE_READS) {
        int p = rand() % t->acting.size();
        if (p)
          t->used_replica = true;
        osd = t->acting[p];
        ldout(cct, 10) << " chose random osd." << osd << " of " << t->acting
                       << dendl;
      } else {
        // look for a local replica.  prefer the primary if the
        // distance is the same.
        int best = -1;
        int best_locality = 0;
        for (unsigned i = 0; i < t->acting.size(); ++i) {
          int locality = osdmap->crush->get_common_ancestor_distance(
            cct, t->acting[i], crush_location);
          ldout(cct, 20) << __func__ << " localize: rank " << i
                         << " osd." << t->acting[i]
                         << " locality " << locality << dendl;
          if (i == 0 ||
              (locality >= 0 && best_locality >= 0 &&
               locality < best_locality) ||
              (best_locality < 0 && locality >= 0)) {
            best = i;
            best_locality = locality;
            if (i)
              t->used_replica = true;
          }
        }
        ceph_assert(best >= 0);
        osd = t->acting[best];
      }
      t->osd = osd;
    } else {
      t->osd = acting_primary;
    }
  }
  if (legacy_change || unpaused || force_resend) {
    return RECALC_OP_TARGET_NEED_RESEND;
  }
  if (split_or_merge &&
      (osdmap->require_osd_release >= ceph_release_t::luminous ||
       HAVE_FEATURE(osdmap->get_xinfo(acting_primary).features,
                    RESEND_ON_SPLIT))) {
    return RECALC_OP_TARGET_NEED_RESEND;
  }
  return RECALC_OP_TARGET_NO_ACTION;
}
```
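The `CEPH_OSD_FLAG_LOCALIZE_READS` branch near the end of `_calc_target()` picks the acting-set member closest to the client and keeps the primary on ties. The standalone sketch below uses the same selection condition.

`locality[i]` is an assumed input that stands in for `osdmap->crush->get_common_ancestor_distance(cct, t->acting[i], crush_location)`. Negative values are handled exactly as the original condition handles them. `choose_local_replica` is a hypothetical name.

```cpp
#include <cassert>
#include <vector>

// Returns the chosen OSD id; *used_replica is set when a non-primary is picked.
int choose_local_replica(const std::vector<int>& acting,
                         const std::vector<int>& locality,
                         bool* used_replica) {
  assert(!acting.empty() && acting.size() == locality.size());
  int best = -1;
  int best_locality = 0;
  *used_replica = false;
  for (unsigned i = 0; i < acting.size(); ++i) {
    // Strictly closer wins, so an equal distance keeps the earlier (primary) choice.
    if (i == 0 ||
        (locality[i] >= 0 && best_locality >= 0 && locality[i] < best_locality) ||
        (best_locality < 0 && locality[i] >= 0)) {
      best = static_cast<int>(i);
      best_locality = locality[i];
      if (i)
        *used_replica = true;
    }
  }
  return acting[best];
}
```

The `used_replica` flag matters later: `_calc_target()` passes `t->used_replica || any_change` to `is_pg_changed()`, so ops served by a replica are re-evaluated more eagerly when the acting set changes.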

_get_session

```cpp
/**
 * Look up OSDSession by OSD id.
 *
 * @returns 0 on success, or -EAGAIN if the lock context requires
 * promotion to write.
 */
int Objecter::_get_session(int osd, OSDSession **session,
                           shunique_lock<ceph::shared_mutex>& sul)
{
  ceph_assert(sul && sul.mutex() == &rwlock);

  if (osd < 0) {
    *session = homeless_session;
    ldout(cct, 20) << __func__ << " osd=" << osd << " returning homeless"
                   << dendl;
    return 0;
  }

  auto p = osd_sessions.find(osd);
  if (p != osd_sessions.end()) {
    auto s = p->second;
    s->get();
    *session = s;
    ldout(cct, 20) << __func__ << " s=" << s << " osd=" << osd << " "
                   << s->get_nref() << dendl;
    return 0;
  }
  if (!sul.owns_lock()) {
    return -EAGAIN;
  }
  auto s = new OSDSession(cct, osd);
  osd_sessions[osd] = s;
  s->con = messenger->connect_to_osd(osdmap->get_addrs(osd));
  s->con->set_priv(RefCountedPtr{s});
  logger->inc(l_osdc_osd_session_open);
  logger->set(l_osdc_osd_sessions, osd_sessions.size());
  s->get();
  *session = s;
  ldout(cct, 20) << __func__ << " s=" << s << " osd=" << osd << " "
                 << s->get_nref() << dendl;
  return 0;
}
```
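`_get_session()` and `_op_submit()` split the locking work as follows:

- Finding an existing session needs only the shared (read) side of `rwlock`.
- Creating a session modifies `osd_sessions`, so without the exclusive lock it returns `-EAGAIN`.
- On `-EAGAIN`, the caller drops the lock, relocks exclusively, and retries.

The sketch below models this with `std::shared_mutex`. Ceph instead uses `ceph::shunique_lock`, which can hold the same mutex in either mode. `ToySession`, `ToySessionMap` and `get_session_for` are hypothetical names.

```cpp
#include <cassert>
#include <cerrno>
#include <cstddef>
#include <map>
#include <memory>
#include <mutex>
#include <shared_mutex>

struct ToySession {
  int osd;
};

class ToySessionMap {
 public:
  // `exclusive` says which mode the caller currently holds rwlock in.
  int get_session(int osd, bool exclusive, std::shared_ptr<ToySession>* out) {
    auto p = sessions_.find(osd);
    if (p != sessions_.end()) {
      *out = p->second;  // existing session: a shared lock is enough
      return 0;
    }
    if (!exclusive) return -EAGAIN;  // creation mutates the map: need the write lock
    auto s = std::make_shared<ToySession>(ToySession{osd});
    sessions_[osd] = s;
    *out = s;
    return 0;
  }
  std::size_t size() const { return sessions_.size(); }
  std::shared_mutex rwlock;

 private:
  std::map<int, std::shared_ptr<ToySession>> sessions_;
};

std::shared_ptr<ToySession> get_session_for(ToySessionMap& m, int osd) {
  std::shared_ptr<ToySession> s;
  {
    std::shared_lock<std::shared_mutex> rl(m.rwlock);
    if (m.get_session(osd, false, &s) == 0) return s;
  }  // -EAGAIN: the shared lock is released here...
  std::unique_lock<std::shared_mutex> wl(m.rwlock);  // ...and taken exclusively.
  // Ceph also re-checks the osdmap epoch at this point, since the map may have
  // changed while unlocked; that step is omitted in this sketch.
  int r = m.get_session(osd, true, &s);
  assert(r == 0);
  (void)r;
  return s;
}
```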
