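If LCEL is the glue, the things it glues together are components. The most fundamental are the ones the official LangChain documentation groups under Model I/O: Prompts, Chat Models, LLMs, and Output Parsers. Together these four basic components take user input, pass it through a large language model, and return output in the required form.

This chapter covers the first component, Prompts: the prompt text supplied to the model as input.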

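Using prompt templates

Simple str.format syntax

In practice, an LLM application does not pass the user's input straight to the model, because that input is usually incomplete or not well formed. Instead, developers use prompt templates (Prompt Templates): the user's input fills one slot in the template, and the resulting final prompt is what the model receives.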
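The idea can be sketched in plain Python before turning to LangChain's own classes. The template text, the function name `build_prompt`, and the sample input below are all hypothetical and chosen only for illustration; this is not LangChain's implementation.

```python
# Hypothetical template text, used only to illustrate the idea.
TEMPLATE = (
    "You are a helpful assistant.\n"
    "Answer the user's request in one short paragraph.\n"
    "Request: {user_input}"
)


def build_prompt(user_input: str) -> str:
    """Embed the (trimmed) raw user input into the fixed template."""
    return TEMPLATE.format(user_input=user_input.strip())


final_prompt = build_prompt("  tell me about bears ")
print(final_prompt)
```

The user only types the short request; the surrounding instructions come from the template, so every prompt sent to the model has the same shape.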

最简单的用法就像Python中str的format方法:

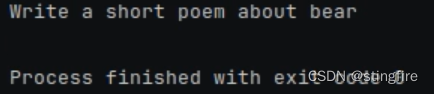

PromptTemplate.from_template("Write a short poem about {topic}").format(topic="bear")模板"Write a short poem about {topic}"将用户的输入("bear")作为topic的替换,最终结果是:

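Partial prompt templates (Partial Prompt Templates)

Sometimes the variables in a prompt template do not all become available at the same time, so they cannot be assigned in a single call. LangChain lets you fill a template step by step, arriving at the final prompt after several assignments.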
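Step-by-step filling can be sketched in plain Python. The class `SimpleTemplate` below is a hypothetical toy written for this illustration, not LangChain's `PromptTemplate`; it only shows the idea of remembering some values now and supplying the rest later.

```python
from typing import Dict, Optional


class SimpleTemplate:
    """Toy template that supports step-by-step variable binding."""

    def __init__(self, template: str, bound: Optional[Dict[str, str]] = None):
        self.template = template
        self.bound = dict(bound or {})

    def partial(self, **values: str) -> "SimpleTemplate":
        # Return a new object so the original template stays reusable.
        return SimpleTemplate(self.template, {**self.bound, **values})

    def format(self, **values: str) -> str:
        # Values bound earlier are merged with the ones supplied now.
        return self.template.format(**{**self.bound, **values})


base = SimpleTemplate("{greeting}, {name}! Today is {day}.")
with_greeting = base.partial(greeting="Hello")
with_name = with_greeting.partial(name="Ada")
result = with_name.format(day="Monday")
print(result)  # Hello, Ada! Today is Monday.
```

Each call to `partial` returns a new object, so an intermediate template can be reused with different remaining values.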

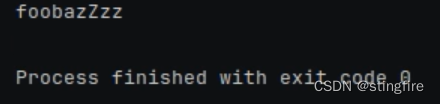

prompt = PromptTemplate.from_template("{var1}{var2}{var3}")

partial_prompt1 = prompt.partial(var1="foo")

partial_prompt2 = partial_prompt1.partial(var2="baz")

print(partial_prompt2.format(var3="Zzz"))上面代码中先定义了模板"{var1}{var2}{var3}",然后第一步通过partial()方法给"{var1}"赋值了"foo",这个中间变量再次通过partial()方法给"{var2}"赋值了"baz",最后通过format给第3个变量"{var3}"赋值"Zzz"后产生最终的提示语字符串输出:

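Providing examples in the prompt

To get more precise results, the input can include examples that show the model the form its answer should take. FewShotPromptTemplate wraps a prompt together with its examples. Its main parameters are:

- examples: the examples to provide.
- example_prompt: the template used to format each example. Its input_variables declare the variables used in its template.
- prefix: custom text placed before the examples.
- suffix: custom text placed after the examples.
- input_variables: the variables used by the few-shot prompt as a whole.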
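How these parameters fit together can be sketched without LangChain. The function `render_few_shot`, the blank-line separator, and the sample data are assumptions made for this illustration; the library's own assembly logic may differ in detail.

```python
from typing import Dict, List


def render_few_shot(
    prefix: str,
    examples: List[Dict[str, str]],
    example_template: str,
    suffix: str,
    separator: str = "\n\n",
    **inputs: str,
) -> str:
    """Join prefix, formatted examples, and suffix into one prompt string."""
    # Each example dict is rendered through the same per-example template.
    example_blocks = [example_template.format(**example) for example in examples]
    # Only prefix and suffix receive the caller's input variables.
    pieces = [prefix.format(**inputs), *example_blocks, suffix.format(**inputs)]
    return separator.join(pieces)


few_shot_text = render_few_shot(
    prefix="Give the antonym of each word.",
    examples=[
        {"word": "happy", "antonym": "sad"},
        {"word": "tall", "antonym": "short"},
    ],
    example_template="Word: {word}\nAntonym: {antonym}",
    suffix="Word: {query}\nAntonym:",
    query="big",
)
print(few_shot_text)
```

The prompt ends with an unanswered item in the same format as the examples, which is what nudges the model to continue the pattern.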

Consider the following code example:

from langchain_community.llms.ollama import Ollama
from langchain_core.prompts.few_shot import FewShotPromptTemplate
from langchain_core.prompts.prompt import PromptTemplate

# template is the template for a single example; input_variables lists the variable names the template expects
example_prompt = PromptTemplate(
    input_variables=["question", "answer"], template="Question: {question}\n{answer}"
)

# The list of examples
examples = [
    {
        "question": "Who lived longer, Muhammad Ali or Alan Turing?",
        "answer": """
Are follow up questions needed here: Yes.
Follow up: How old was Muhammad Ali when he died?
Intermediate answer: Muhammad Ali was 74 years old when he died.
Follow up: How old was Alan Turing when he died?
Intermediate answer: Alan Turing was 41 years old when he died.
So the final answer is: Muhammad Ali
""",
    },
    {
        "question": "When was the founder of craigslist born?",
        "answer": """
Are follow up questions needed here: Yes.
Follow up: Who was the founder of craigslist?
Intermediate answer: Craigslist was founded by Craig Newmark.
Follow up: When was Craig Newmark born?
Intermediate answer: Craig Newmark was born on December 6, 1952.
So the final answer is: December 6, 1952
""",
    },
    {
        "question": "Who was the maternal grandfather of George Washington?",
        "answer": """
Are follow up questions needed here: Yes.
Follow up: Who was the mother of George Washington?
Intermediate answer: The mother of George Washington was Mary Ball Washington.
Follow up: Who was the father of Mary Ball Washington?
Intermediate answer: The father of Mary Ball Washington was Joseph Ball.
So the final answer is: Joseph Ball
""",
    },
    {
        "question": "Are both the directors of Jaws and Casino Royale from the same country?",
        "answer": """
Are follow up questions needed here: Yes.
Follow up: Who is the director of Jaws?
Intermediate Answer: The director of Jaws is Steven Spielberg.
Follow up: Where is Steven Spielberg from?
Intermediate Answer: The United States.
Follow up: Who is the director of Casino Royale?
Intermediate Answer: The director of Casino Royale is Martin Campbell.
Follow up: Where is Martin Campbell from?
Intermediate Answer: New Zealand.
So the final answer is: No
""",
    },
]

# Define the few-shot prompt template
prompt = FewShotPromptTemplate(
    examples=examples,  # the examples to include
    example_prompt=example_prompt,  # template used to format each example
    prefix="Answer questions like examples below:",  # custom text before the examples
    suffix="Question: {input}",  # template for the final input, placed after the examples
    input_variables=["input"],
)

# 初始化打模型

model = Ollama(model="llama3", temperature=0)

chain = prompt | model

# 传入input生成最终的提示语并调用chain

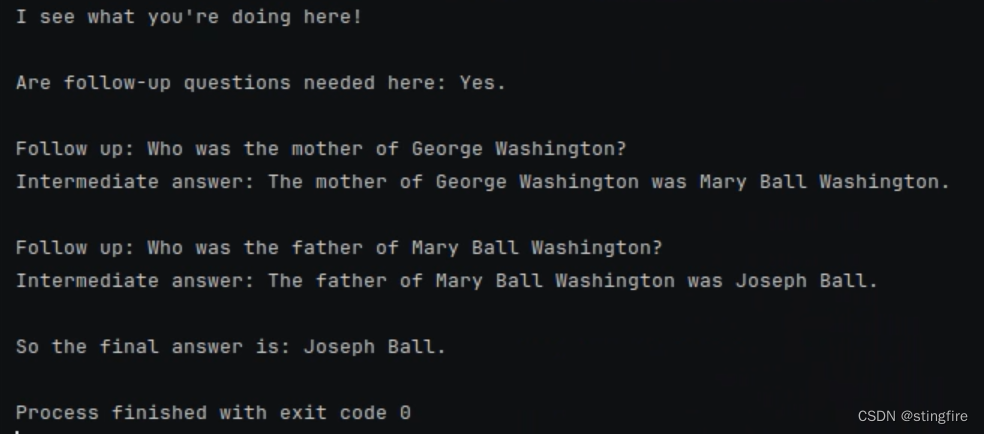

print(chain.invoke({"input": "Who was the father of Mary Ball Washington?"}))

当输入"input"的内容后,调用llama3大模型的chain,得到下面输出:

可以看出回复是根据样例中的内容给出。

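Selecting the examples you need

For various reasons, for instance to keep the prompt within a length limit, we may want to put only a suitable subset of the available examples into the prompt. LangChain provides example selectors (Example Selector) for picking the examples that meet a given requirement. BaseExampleSelector defines two abstract methods:

- add_example: adds a candidate example, given as a dictionary.
- select_examples: selects suitable examples according to the rule the selector defines.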

from abc import ABC, abstractmethod
from typing import Any, Dict, List


class BaseExampleSelector(ABC):
    """Interface for selecting examples to include in prompts."""

    @abstractmethod
    def select_examples(self, input_variables: Dict[str, str]) -> List[dict]:
        """Select which examples to use based on the inputs."""

    @abstractmethod
    def add_example(self, example: Dict[str, str]) -> Any:
        """Add new example to store."""

LangChain provides several commonly used selector classes:

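- LengthBasedExampleSelector: selects examples by length, ensuring that the total length of the selected examples stays within a specified maximum.
- SemanticSimilarityExampleSelector: selects the examples that are semantically closest to the input. It keeps the examples' embedding vectors in a vector store (such as Chroma) and matches on cosine similarity.
- MaxMarginalRelevanceExampleSelector: also selects examples by semantic similarity to the input, but additionally accounts for diversity among the selected examples. Unlike SemanticSimilarityExampleSelector, it uses the maximal marginal relevance (MMR) algorithm, which penalizes candidates that are too similar to examples already selected.
- NGramOverlapExampleSelector: selects and orders examples by their n-gram overlap score with the input, a similarity score between 0 and 1.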
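The MMR idea mentioned in the list above can be sketched in a few lines. This is a generic illustration of maximal marginal relevance over toy 2-D vectors, not the code LangChain runs; the function names, the `lambda_mult` weight, and the vectors are assumptions made for the example.

```python
import math
from typing import List, Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity of two vectors (0.0 if either has zero length)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def mmr_select(
    query: Sequence[float],
    candidates: List[Sequence[float]],
    k: int,
    lambda_mult: float = 0.5,
) -> List[int]:
    """Return indices of up to k candidates, trading relevance for diversity."""
    selected: List[int] = []
    remaining = list(range(len(candidates)))
    while remaining and len(selected) < k:
        best_index, best_score = remaining[0], float("-inf")
        for i in remaining:
            relevance = cosine(query, candidates[i])
            # Penalty: similarity to the closest already-selected candidate.
            redundancy = max(
                (cosine(candidates[i], candidates[j]) for j in selected),
                default=0.0,
            )
            score = lambda_mult * relevance - (1 - lambda_mult) * redundancy
            if score > best_score:
                best_index, best_score = i, score
        selected.append(best_index)
        remaining.remove(best_index)
    return selected


query_vec = [1.0, 0.0]
example_vecs = [[1.0, 0.1], [1.0, 0.12], [0.8, -0.6]]  # 0 and 1 are near-duplicates

by_similarity = sorted(
    range(len(example_vecs)),
    key=lambda i: cosine(query_vec, example_vecs[i]),
    reverse=True,
)[:2]
by_mmr = mmr_select(query_vec, example_vecs, k=2)
print(by_similarity, by_mmr)  # [0, 1] [0, 2]
```

Pure similarity ranking picks the two near-duplicate vectors, while MMR keeps the most relevant one and then prefers the less redundant third vector.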

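Developers can also define their own selector class: subclass BaseExampleSelector and override both select_examples and add_example.

from langchain_core.example_selectors.base import BaseExampleSelector


class CustomExampleSelector(BaseExampleSelector):
    def __init__(self, examples):
        self.examples = examples

    def add_example(self, example):
        self.examples.append(example)

    def select_examples(self, input_variables):
        ...

Taking LengthBasedExampleSelector, which picks examples by length, as an illustration, the code is as follows: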

from langchain_core.example_selectors import LengthBasedExampleSelector
from langchain_core.prompts import FewShotPromptTemplate, PromptTemplate

# The candidate examples
examples = [
    {"input": "happy", "output": "sad"},
    {"input": "tall", "output": "short"},
    {"input": "energetic", "output": "lethargic"},
    {"input": "sunny", "output": "gloomy"},
    {"input": "windy", "output": "calm"},
]

example_prompt = PromptTemplate(
    input_variables=["input", "output"],
    template="Input: {input}\nOutput: {output}",
)

example_selector = LengthBasedExampleSelector(
    # The candidate examples
    examples=examples,
    # The template used to format each example
    example_prompt=example_prompt,
    # Maximum length of the formatted examples, measured by the selector's
    # get_text_length function. Examples are included as long as max_length is not exceeded.
    max_length=20,
)

# Pass example_selector to FewShotPromptTemplate so the examples are filtered
dynamic_prompt = FewShotPromptTemplate(
    example_selector=example_selector,
    example_prompt=example_prompt,
    prefix="Give the antonym of every input",
    suffix="Input: {adjective}\nOutput:",
    input_variables=["adjective"],
)

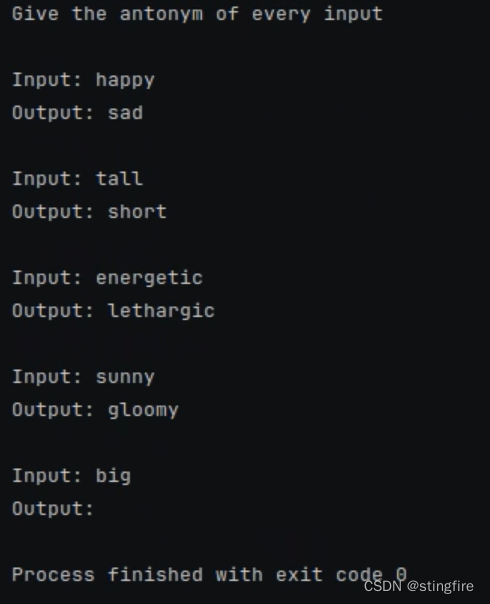

print(dynamic_prompt.format(adjective="big"))

与直接使用FewShotPromptTemplate不同的是,这里传入了example_selector,而这里的example_selector正是基于长度进行样例选择的LengthBasedExampleSelector。经过最大长度过滤后的结果如下截图:
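The length-based rule itself can be sketched in plain Python. The sketch assumes that length is counted in words split on spaces and newlines, and that the words of the user's input are charged against the budget first; `word_count` and `select_by_length` are hypothetical names, and the library's implementation may differ in detail.

```python
import re
from typing import Dict, List


def word_count(text: str) -> int:
    """Count words by splitting on spaces and newlines (an assumed metric)."""
    return len(re.split(r"\n| ", text))


def select_by_length(
    examples: List[Dict[str, str]],
    example_template: str,
    user_input: str,
    max_length: int,
) -> List[Dict[str, str]]:
    """Take examples in order until the next one would exceed the budget."""
    remaining = max_length - word_count(user_input)
    chosen: List[Dict[str, str]] = []
    for example in examples:
        cost = word_count(example_template.format(**example))
        if remaining - cost < 0:
            break
        chosen.append(example)
        remaining -= cost
    return chosen


candidates = [
    {"input": "happy", "output": "sad"},
    {"input": "tall", "output": "short"},
    {"input": "energetic", "output": "lethargic"},
    {"input": "sunny", "output": "gloomy"},
    {"input": "windy", "output": "calm"},
]
selected = select_by_length(
    candidates, "Input: {input}\nOutput: {output}", "big", max_length=20
)
# Each formatted example costs 4 words and "big" costs 1, so 4 examples fit in 20.
print(len(selected))  # 4
```

Under these assumptions a longer user input leaves less room, so fewer examples are included.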
