Building Airflow on Docker

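I followed the official Docker installation guide. The official docs are the best first-hand material for getting started, but everyone's environment is different, so the pitfalls come in all shapes and sizes.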

This post records my troubleshooting techniques and a few major pitfalls:

Techniques

1. If a container shows as `unhealthy`, run `docker inspect <container_id>` to locate the error.

2. If the container is still running but reporting errors, run `docker logs <container_id>` and analyze its logs.
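For an `unhealthy` container, the useful detail is usually the health-check history that `docker inspect` returns under `State.Health.Log`. Below is a minimal sketch that pulls out the most recent failing check. It assumes you pass in the raw JSON text printed by `docker inspect <container_id>`. The function name `last_health_failure` is hypothetical.

```python
import json
from typing import Optional


def last_health_failure(inspect_json: str) -> Optional[str]:
    """Return the output of the most recent failed health check, or None.

    `inspect_json` is the text printed by `docker inspect <container_id>`:
    a JSON array whose objects carry State.Health.Status and State.Health.Log.
    """
    for container in json.loads(inspect_json):
        health = container.get("State", {}).get("Health")
        if not health:
            continue  # this container has no healthcheck defined
        # Log entries are ordered oldest -> newest, so walk them backwards
        for entry in reversed(health.get("Log", [])):
            if entry.get("ExitCode", 0) != 0:
                return entry.get("Output", "").strip()
    return None
```

To feed it, capture the command output, for example with `subprocess.run(["docker", "inspect", cid], capture_output=True, text=True).stdout`. The returned text is what the healthcheck command printed, such as a missing-module traceback.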

The biggest pitfall (the pit of all pits)

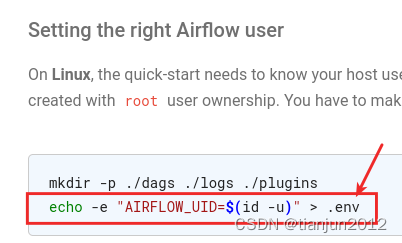

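Yes, this one setting is the culprit: do not run Airflow as root (UID 0). On Ubuntu you normally work as a non-root user, so following the tutorial just works. On CentOS you usually work as root, and you fall into one pit after another. The containers stay `unhealthy` no matter what, and the logs complain about missing dependencies such as celery. Yet when you enter the container with `docker exec -it <container_id> bash`, every dependency is there. I lost a lot of hair over this; don't ask how I know.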

There are two fixes:

1. Set `AIRFLOW_UID` in `.env` to a non-zero value, e.g. 5000.

or

2. Specify that non-zero UID directly in `docker-compose.yaml`.
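Here is a small sketch of fix 1. It mirrors the official docs' `echo -e "AIRFLOW_UID=$(id -u)" > .env`, with one addition: it refuses to write UID 0 and falls back to 50000, which is the default in the official compose file. The helper names `airflow_env_line` and `write_env` are hypothetical.

```python
import os

DEFAULT_AIRFLOW_UID = 50000  # default AIRFLOW_UID in the official docker-compose.yaml


def airflow_env_line(uid: int) -> str:
    """Build the AIRFLOW_UID line for .env, never allowing UID 0 (root)."""
    if uid == 0:
        # Running the containers as root is exactly the pitfall above
        uid = DEFAULT_AIRFLOW_UID
    return f"AIRFLOW_UID={uid}\n"


def write_env(path: str = ".env") -> None:
    """Write .env next to docker-compose.yaml using the current user's UID (Unix only)."""
    with open(path, "w", encoding="utf-8") as f:
        f.write(airflow_env_line(os.getuid()))
```

When the fallback kicks in, the bind-mounted `dags`/`logs`/`plugins` directories must be writable by that UID. The `airflow-init` service's `chown` step takes care of this.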

Minor pitfalls

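1. You cannot add entries to `/etc/hosts` by overriding the file in the Dockerfile, because Docker manages that file for each container. Append them with `extra_hosts` in `docker-compose.yaml` instead.

2. Some systems report permission errors; set `privileged: true` for the services in `docker-compose.yaml`.

3. I changed the Redis port exposed inside the Docker network (to 16379); see `docker-compose.yaml` for details.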
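If the hosts you need already sit in a machine's `/etc/hosts`, you can convert them mechanically into `extra_hosts` entries. These use the `"hostname:IP"` form seen under `airflow-worker` below. This is a sketch; `hosts_to_extra_hosts` is a hypothetical helper, and it skips loopback lines because Docker already provides `localhost`.

```python
from typing import List


def hosts_to_extra_hosts(hosts_text: str) -> List[str]:
    """Convert /etc/hosts-style lines into docker-compose `extra_hosts` entries.

    Each 'IP name [alias ...]' line becomes one 'name:IP' entry per name.
    Comments, blank lines and loopback addresses are skipped.
    """
    entries = []
    for line in hosts_text.splitlines():
        line = line.split("#", 1)[0].strip()  # drop trailing comments
        if not line:
            continue
        ip, *names = line.split()
        if ip.startswith("127.") or ip == "::1":
            continue  # Docker already manages localhost inside the container
        entries.extend(f"{name}:{ip}" for name in names)
    return entries
```

Paste each returned string as one `- "..."` item under `extra_hosts`.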

Installation steps:

1. Extend the official image

Dockerfile

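```dockerfile
FROM apache/airflow:2.5.3-python3.8

USER root

# RUN sed -i 's#http://deb.debian.org#https://mirrors.163.com#g' /etc/apt/sources.list
# Switch the Debian apt sources to the Tencent Cloud mirror
RUN sed -i 's#http://deb.debian.org#https://mirrors.cloud.tencent.com#g' /etc/apt/sources.list

RUN apt-get clean

# gcc and libkrb5-dev are needed to build the `kerberos` Python package; krb5-user provides kinit/klist
RUN apt-get update \
  && apt-get install -y gcc libkrb5-dev krb5-user \
  && apt-get autoremove -yqq --purge \
  && apt-get clean \
  && rm -rf /var/lib/apt/lists/*

# Python packages are installed as the airflow user, not as root
USER airflow

COPY requirements.txt /

RUN pip install --no-cache-dir -r /requirements.txt -i https://mirrors.cloud.tencent.com/pypi/simple

# COPY airflow.cfg ${AIRFLOW_HOME}/

# Note: docker-compose.yaml points Airflow at /opt/airflow/template/ (bind-mounted from ./template),
# so these two copies in ${AIRFLOW_HOME}/ are not the files Airflow actually reads
COPY html_content_template_file ${AIRFLOW_HOME}/
COPY subject_template_file ${AIRFLOW_HOME}/

# COPY is not affected by USER: files are created as root, so writing into /etc works
COPY krb5.conf /etc/
```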

Build command: `docker build -t tian/airflow:2.5.3 .` (note the trailing `.`, which is the build context).

docker-compose.yaml

```yaml
# Licensed to the Apache Software Foundation (ASF) under one
# or more contributor license agreements.  See the NOTICE file
# distributed with this work for additional information
# regarding copyright ownership.  The ASF licenses this file
# to you under the Apache License, Version 2.0 (the
# "License"); you may not use this file except in compliance
# with the License.  You may obtain a copy of the License at
#
#   http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing,
# software distributed under the License is distributed on an
# "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY
# KIND, either express or implied.  See the License for the
# specific language governing permissions and limitations
# under the License.
#

# Basic Airflow cluster configuration for CeleryExecutor with Redis and PostgreSQL.
#
# WARNING: This configuration is for local development. Do not use it in a production deployment.
#
# This configuration supports basic configuration using environment variables or an .env file
# The following variables are supported:
#
# AIRFLOW_IMAGE_NAME           - Docker image name used to run Airflow.
#                                Default: apache/airflow:2.5.3
# AIRFLOW_UID                  - User ID in Airflow containers
#                                Default: 50000
# AIRFLOW_PROJ_DIR             - Base path to which all the files will be volumed.
#                                Default: .
# Those configurations are useful mostly in case of standalone testing/running Airflow in test/try-out mode
#
# _AIRFLOW_WWW_USER_USERNAME   - Username for the administrator account (if requested).
#                                Default: airflow
# _AIRFLOW_WWW_USER_PASSWORD   - Password for the administrator account (if requested).
#                                Default: airflow
# _PIP_ADDITIONAL_REQUIREMENTS - Additional PIP requirements to add when starting all containers.
#                                Use this option ONLY for quick checks. Installing requirements at container
#                                startup is done EVERY TIME the service is started.
#                                A better way is to build a custom image or extend the official image
#                                as described in https://airflow.apache.org/docs/docker-stack/build.html.
#                                Default: ''
#
# Feel free to modify this file to suit your needs.
---
version: '3.8'
x-airflow-common:
  &airflow-common
  # In order to add custom dependencies or upgrade provider packages you can use your extended image.
  # Comment the image line, place your Dockerfile in the directory where you placed the docker-compose.yaml
  # and uncomment the "build" line below, Then run `docker-compose build` to build the images.
  image: ${AIRFLOW_IMAGE_NAME:-tian/airflow:2.5.3}
  # build: .
  environment:
    &airflow-common-env
    AIRFLOW__CORE__DEFAULT_TIMEZONE: Asia/Shanghai  # show times in Beijing time instead of UTC
    AIRFLOW__WEBSERVER__DEFAULT_UI_TIMEZONE: Asia/Shanghai  # show times in Beijing time instead of UTC
    AIRFLOW__CORE__EXECUTOR: CeleryExecutor
    AIRFLOW__DATABASE__SQL_ALCHEMY_CONN: postgresql+psycopg2://airflow:airflow@postgres/airflow
    # For backward compatibility, with Airflow <2.3
    AIRFLOW__CORE__SQL_ALCHEMY_CONN: postgresql+psycopg2://airflow:airflow@postgres/airflow
    AIRFLOW__CELERY__RESULT_BACKEND: db+postgresql://airflow:airflow@postgres/airflow
    AIRFLOW__CELERY__BROKER_URL: redis://:@redis:16379/0  # port must match redis-server --port below
    AIRFLOW__CORE__FERNET_KEY: ''
    AIRFLOW__CORE__DAGS_ARE_PAUSED_AT_CREATION: 'true'
    #AIRFLOW__CORE__LOAD_EXAMPLES: 'true'
    AIRFLOW__CORE__LOAD_EXAMPLES: 'false'
    AIRFLOW__API__AUTH_BACKENDS: 'airflow.api.auth.backend.basic_auth,airflow.api.auth.backend.session'
    # E-mail
    AIRFLOW__EMAIL__EMAIL_BACKEND: airflow.utils.email.send_email_smtp
    AIRFLOW__EMAIL__FROM_EMAIL: airflow<bi_alert@yijiupi.com>
    AIRFLOW__EMAIL__SUBJECT_TEMPLATE: /opt/airflow/template/subject_template_file
    AIRFLOW__EMAIL__HTML_CONTENT_TEMPLATE: /opt/airflow/template/html_content_template_file
    AIRFLOW__SMTP__SMTP_STARTTLS: 'false'
    AIRFLOW__SMTP__SMTP_SSL: 'true'
    AIRFLOW__SMTP__SMTP_HOST: smtp.exmail.qq.com
    AIRFLOW__SMTP__SMTP_PORT: 465
    AIRFLOW__SMTP__SMTP_USER: bi_alert@yijiupi.com
    AIRFLOW__SMTP__SMTP_PASSWORD: '<your-smtp-password>'  # keep the real password out of files you share
    AIRFLOW__SMTP__SMTP_MAIL_FROM: bi_alert@yijiupi.com
    AIRFLOW__WEBSERVER__BASE_URL: http://localhost:8080
    # yamllint disable rule:line-length
    # Use simple http server on scheduler for health checks
    # See https://airflow.apache.org/docs/apache-airflow/stable/administration-and-deployment/logging-monitoring/check-health.html#scheduler-health-check-server
    # yamllint enable rule:line-length
    AIRFLOW__SCHEDULER__ENABLE_HEALTH_CHECK: 'true'
    # WARNING: Use _PIP_ADDITIONAL_REQUIREMENTS option ONLY for a quick checks
    # for other purpose (development, test and especially production usage) build/extend Airflow image.
    _PIP_ADDITIONAL_REQUIREMENTS: ${_PIP_ADDITIONAL_REQUIREMENTS:-}
  volumes:
    - ${AIRFLOW_PROJ_DIR:-.}/dags:/opt/airflow/dags
    - ${AIRFLOW_PROJ_DIR:-.}/logs:/opt/airflow/logs
    - ${AIRFLOW_PROJ_DIR:-.}/plugins:/opt/airflow/plugins
    - ${AIRFLOW_PROJ_DIR:-.}/script:/opt/airflow/script  # other large projects are launched via bash
    - ${AIRFLOW_PROJ_DIR:-.}/template:/opt/airflow/template  # e-mail templates
  # Never fall back to UID 0 (root): that is the biggest pitfall described above.
  # The group stays 0, as the official compose file expects.
  user: "${AIRFLOW_UID:-50000}:0"
  depends_on:
    &airflow-common-depends-on
    redis:
      condition: service_healthy
    postgres:
      condition: service_healthy

services:
  postgres:
    image: postgres:13
    privileged: true
    environment:
      POSTGRES_USER: airflow
      POSTGRES_PASSWORD: airflow
      POSTGRES_DB: airflow
    volumes:
      - postgres-db-volume:/var/lib/postgresql/data
    healthcheck:
      test: ["CMD", "pg_isready", "-U", "airflow"]
      interval: 10s
      retries: 5
      start_period: 5s
    restart: always

  redis:
    image: redis:latest
    command: redis-server --port 16379
    privileged: true
    expose:
      - 16379
    healthcheck:
      test: ["CMD", "redis-cli", "-p", "16379", "ping"]
      interval: 10s
      timeout: 30s
      retries: 50
      start_period: 30s
    restart: always

  airflow-webserver:
    <<: *airflow-common
    command: webserver
    privileged: true
    ports:
      - "8080:8080"
    healthcheck:
      test: ["CMD", "curl", "--fail", "http://localhost:8080/health"]
      interval: 30s
      timeout: 10s
      retries: 5
      start_period: 30s
    restart: always
    depends_on:
      <<: *airflow-common-depends-on
      airflow-init:
        condition: service_completed_successfully

  airflow-scheduler:
    <<: *airflow-common
    command: scheduler
    privileged: true
    healthcheck:
      test: ["CMD", "curl", "--fail", "http://localhost:8974/health"]
      interval: 30s
      timeout: 10s
      retries: 5
      start_period: 30s
    restart: always
    depends_on:
      <<: *airflow-common-depends-on
      airflow-init:
        condition: service_completed_successfully

  airflow-worker:
    <<: *airflow-common
    command: celery worker
    privileged: true
    extra_hosts:
      - "kdc-master:172.16.11.81"
      - "kdc-slave:172.16.11.82"
      - "haproxy.cdh.yjp.com:172.16.11.46"
      - "bi-ml-redis.yjp.com:10.20.1.130"
      - "Mysql-Portrait.yjp.com:10.20.1.59"
      - "wuhu-master1.cdh.yjp.com:172.16.11.151"
      - "wuhu-master2.cdh.yjp.com:172.16.11.152"
      - "wuhu-master3.cdh.yjp.com:172.16.11.153"
      - "in-yjgj-gateway.yjp.com:10.20.4.150"  # alerts are pushed to WeChat through the yjgj gateway
    healthcheck:
      test:
        - "CMD-SHELL"
        - 'celery --app airflow.executors.celery_executor.app inspect ping -d "celery@$${HOSTNAME}"'
      interval: 30s
      timeout: 10s
      retries: 5
      start_period: 30s
    environment:
      <<: *airflow-common-env
      # Required to handle warm shutdown of the celery workers properly
      # See https://airflow.apache.org/docs/docker-stack/entrypoint.html#signal-propagation
      DUMB_INIT_SETSID: "0"
    restart: always
    depends_on:
      <<: *airflow-common-depends-on
      airflow-init:
        condition: service_completed_successfully

  airflow-triggerer:
    <<: *airflow-common
    command: triggerer
    privileged: true
    healthcheck:
      test: ["CMD-SHELL", 'airflow jobs check --job-type TriggererJob --hostname "$${HOSTNAME}"']
      interval: 30s
      timeout: 10s
      retries: 5
      start_period: 30s
    restart: always
    depends_on:
      <<: *airflow-common-depends-on
      airflow-init:
        condition: service_completed_successfully

  airflow-init:
    <<: *airflow-common
    entrypoint: /bin/bash
    # yamllint disable rule:line-length
    command:
      - -c
      - |
        function ver() {
          printf "%04d%04d%04d%04d" $${1//./ }
        }
        airflow_version=$$(AIRFLOW__LOGGING__LOGGING_LEVEL=INFO && gosu airflow airflow version)
        airflow_version_comparable=$$(ver $${airflow_version})
        min_airflow_version=2.2.0
        min_airflow_version_comparable=$$(ver $${min_airflow_version})
        if (( airflow_version_comparable < min_airflow_version_comparable )); then
          echo
          echo -e "\033[1;31mERROR!!!: Too old Airflow version $${airflow_version}!\e[0m"
          echo "The minimum Airflow version supported: $${min_airflow_version}. Only use this or higher!"
          echo
          exit 1
        fi
        if [[ -z "${AIRFLOW_UID}" ]]; then
          echo
          echo -e "\033[1;33mWARNING!!!: AIRFLOW_UID not set!\e[0m"
          echo "If you are on Linux, you SHOULD follow the instructions below to set "
          echo "AIRFLOW_UID environment variable, otherwise files will be owned by root."
          echo "For other operating systems you can get rid of the warning with manually created .env file:"
          echo "    See: https://airflow.apache.org/docs/apache-airflow/stable/howto/docker-compose/index.html#setting-the-right-airflow-user"
          echo
        fi
        one_meg=1048576
        mem_available=$$(($$(getconf _PHYS_PAGES) * $$(getconf PAGE_SIZE) / one_meg))
        cpus_available=$$(grep -cE 'cpu[0-9]+' /proc/stat)
        disk_available=$$(df / | tail -1 | awk '{print $$4}')
        warning_resources="false"
        if (( mem_available < 4000 )) ; then
          echo
          echo -e "\033[1;33mWARNING!!!: Not enough memory available for Docker.\e[0m"
          echo "At least 4GB of memory required. You have $$(numfmt --to iec $$((mem_available * one_meg)))"
          echo
          warning_resources="true"
        fi
        if (( cpus_available < 2 )); then
          echo
          echo -e "\033[1;33mWARNING!!!: Not enough CPUS available for Docker.\e[0m"
          echo "At least 2 CPUs recommended. You have $${cpus_available}"
          echo
          warning_resources="true"
        fi
        if (( disk_available < one_meg * 10 )); then
          echo
          echo -e "\033[1;33mWARNING!!!: Not enough Disk space available for Docker.\e[0m"
          echo "At least 10 GBs recommended. You have $$(numfmt --to iec $$((disk_available * 1024 )))"
          echo
          warning_resources="true"
        fi
        if [[ $${warning_resources} == "true" ]]; then
          echo
          echo -e "\033[1;33mWARNING!!!: You have not enough resources to run Airflow (see above)!\e[0m"
          echo "Please follow the instructions to increase amount of resources available:"
          echo "   https://airflow.apache.org/docs/apache-airflow/stable/howto/docker-compose/index.html#before-you-begin"
          echo
        fi
        # Also create and chown the script/ and template/ directories mounted in x-airflow-common
        mkdir -p /sources/logs /sources/dags /sources/plugins /sources/script /sources/template
        chown -R "${AIRFLOW_UID}:0" /sources/{logs,dags,plugins,script,template}
        exec /entrypoint airflow version
    # yamllint enable rule:line-length
    environment:
      <<: *airflow-common-env
      _AIRFLOW_DB_UPGRADE: 'true'
      _AIRFLOW_WWW_USER_CREATE: 'true'
      _AIRFLOW_WWW_USER_USERNAME: ${_AIRFLOW_WWW_USER_USERNAME:-airflow}
      _AIRFLOW_WWW_USER_PASSWORD: ${_AIRFLOW_WWW_USER_PASSWORD:-airflow}
      _PIP_ADDITIONAL_REQUIREMENTS: ''
    user: "0:0"
    volumes:
      - ${AIRFLOW_PROJ_DIR:-.}:/sources

  airflow-cli:
    <<: *airflow-common
    profiles:
      - debug
    environment:
      <<: *airflow-common-env
      CONNECTION_CHECK_MAX_COUNT: "0"
    # Workaround for entrypoint issue. See: https://github.com/apache/airflow/issues/16252
    command:
      - bash
      - -c
      - airflow

  # You can enable flower by adding "--profile flower" option e.g. docker-compose --profile flower up
  # or by explicitly targeted on the command line e.g. docker-compose up flower.
  # See: https://docs.docker.com/compose/profiles/
  flower:
    <<: *airflow-common
    command: celery flower
    profiles:
      - flower
    ports:
      - "5555:5555"
    healthcheck:
      test: ["CMD", "curl", "--fail", "http://localhost:5555/"]
      interval: 30s
      timeout: 10s
      retries: 5
      start_period: 30s
    restart: always
    depends_on:
      <<: *airflow-common-depends-on
      airflow-init:
        condition: service_completed_successfully

volumes:
  postgres-db-volume:
```

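First create the directories that get mounted into the containers:

`cd ~/airflow && mkdir -p ./dags ./logs ./plugins ./script ./template`

Start command: `docker-compose up -d`

Tear down the containers: `docker-compose down --volumes --remove-orphans`

Tear down the containers and images: `docker-compose down --volumes --rmi all`

(`--volumes` also deletes `postgres-db-volume`, i.e. the Airflow metadata database.)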

A few other files you may need

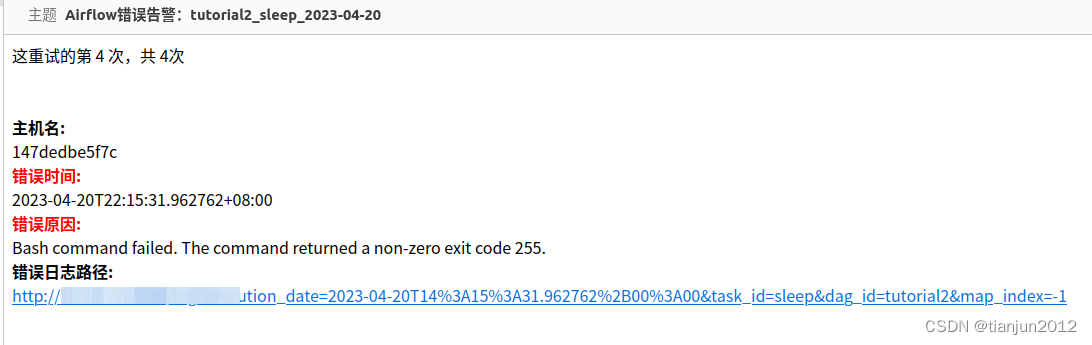

html_content_template_file

```html
This is attempt {{try_number}} of {{max_tries + 1}}<br>
<br>
<br>
<strong>Host:</strong><br> {{ti.hostname}}<br>
<strong><font color="red">Execution date:</font></strong><br>{{ execution_date.in_timezone("Asia/Shanghai")}}<br>
<strong><font color="red">Error:</font></strong><br>{{exception_html}}<br>
<strong>Log URL:</strong><br> <a href="{{ti.log_url}}">{{ti.log_url}}</a><br>
```

subject_template_file

```text
Airflow failure alert: {{dag.dag_id}}_{{task.task_id}}_{{ execution_date.in_timezone("Asia/Shanghai").strftime("%Y-%m-%d") }}
```
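The `in_timezone("Asia/Shanghai")` call matters because the dates in the template context are in UTC. Without it, a run stamped just after midnight Beijing time would carry the previous day's date in the subject. Below is a plain-Python sketch of the same subject line that you can check without Airflow. The function `alert_subject` is hypothetical, and it assumes a fixed UTC+8 offset (China has not used daylight saving time since 1991).

```python
from datetime import datetime, timedelta, timezone

# Fixed UTC+8, standing in for Asia/Shanghai (no DST since 1991)
SHANGHAI = timezone(timedelta(hours=8))


def alert_subject(dag_id: str, task_id: str, execution_date: datetime,
                  prefix: str = "Airflow failure alert") -> str:
    """Mirror subject_template_file: '<prefix>: <dag_id>_<task_id>_<date in Beijing time>'."""
    local_date = execution_date.astimezone(SHANGHAI).strftime("%Y-%m-%d")
    return f"{prefix}: {dag_id}_{task_id}_{local_date}"
```

For example, a UTC timestamp of 2023-04-19 17:56 falls on 2023-04-20 in Beijing, and the subject reflects the local date.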

krb5.conf

```
[libdefaults]
  dns_lookup_realm = false
  ticket_lifetime = 24h
  renew_lifetime = 7d
  forwardable = true
  rdns = false
  # pkinit_anchors = /etc/pki/tls/certs/ca-bundle.crt
  default_realm = CDH.COM
  #default_ccache_name = KEYRING:persistent:%{uid}

[realms]
  CDH.COM = {
    kdc = kdc-master
    kdc = kdc-slave
    admin_server = kdc-master
    default_domain = CDH.COM
  }

[domain_realm]
  .cdh.com = CDH.COM
  cdh.com = CDH.COM
```

requirements.txt

```text
six
bitarray
thrift==0.16.0
thrift_sasl==0.4.3
kerberos==1.3.0
impyla==0.18.0
pandas==1.4.4
numpy==1.23.3
configparser==5.0.2
krbcontext==0.10
kafka-python==2.0.2
PyMySQL==1.0.3
```
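When a container claims a dependency is missing, it helps to check what is actually installed in that container's Python environment. Here is a sketch that compares a requirements file against installed versions, assuming simple `name` or `name==version` lines like the ones above. `check_pins` and `installed_version` are hypothetical helpers. Package names are passed to `importlib.metadata` as written, and how strictly `-`/`_` spellings are matched can vary between Python versions.

```python
from importlib import metadata
from typing import Callable, Dict, Optional


def check_pins(requirements_text: str,
               lookup: Callable[[str], Optional[str]]) -> Dict[str, str]:
    """Return {package: problem} for each requirement that is missing or mis-pinned.

    Only bare names and exact '==' pins are understood.
    """
    problems = {}
    for line in requirements_text.splitlines():
        line = line.split("#", 1)[0].strip()
        if not line:
            continue
        name, _, wanted = line.partition("==")
        name, wanted = name.strip(), wanted.strip()
        found = lookup(name)
        if found is None:
            problems[name] = "not installed"
        elif wanted and found != wanted:
            problems[name] = f"installed {found}, expected {wanted}"
    return problems


def installed_version(name: str) -> Optional[str]:
    """Version of an installed distribution in the current environment, or None."""
    try:
        return metadata.version(name)
    except metadata.PackageNotFoundError:
        return None
```

Inside the worker container, call `check_pins(open('/requirements.txt').read(), installed_version)`; the Dockerfile copies the file to `/requirements.txt`.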

Scheduling and failure alerts (email and WeChat)

1. Shared `utils` module:

```python
import json
import time

import pandas as pd
import pendulum
import requests
from impala.dbapi import connect
from kafka import KafkaProducer
from krbcontext import krbcontext


def read_sql(path):
    """Read the file at `path` (a SQL script) and return its contents."""
    with open(path, 'r', encoding='utf-8') as f:
        return f.read()


def get_data_from_impala(sql, host='haproxy.cdh.yjp.com', port=21050, keytab='/opt/airflow/dags/impala.keytab'):
    """
    Run `sql` on Impala (Kerberos/GSSAPI auth) and return the result as a pandas DataFrame.
    """
    def parse_cols(desc):
        """Extract the Impala column names from cursor.description."""
        return [i[0] for i in desc]

    data_list = []
    cols = []
    # Obtain a Kerberos ticket from the keytab for the duration of the query
    with krbcontext(using_keytab=True, principal='impala',
                    keytab_file=keytab,
                    ccache_file='krb5cc_0'):
        conn = connect(host, port, auth_mechanism='GSSAPI', kerberos_service_name='impala')
        cur = conn.cursor()
        print(sql)
        cur.execute(sql)
        for row in cur:
            # print(row)
            data_list.append(row)
        cols = parse_cols(cur.description)
        cur.close()
        conn.close()
    return pd.DataFrame(data_list, columns=cols)


def df2kafka_dic(df, table_name,
                 bootstrap_servers=('wuhu-master1.cdh.yjp.com:9092',
                                    'wuhu-master2.cdh.yjp.com:9092',
                                    'wuhu-master3.cdh.yjp.com:9092'),
                 topic='ai_wide_table_neo4j'):
    """
    Convert each DataFrame row into a JSON message and push it to Kafka.
    :param df: DataFrame to send
    :param table_name: table name, added to every message as the `tbl_name` field
    :param bootstrap_servers: Kafka brokers (host:port)
    :param topic: target Kafka topic
    :return: None
    """
    producer = KafkaProducer(bootstrap_servers=list(bootstrap_servers),
                             # compression_type='gzip',  # with gzip, messages silently failed to send (no exception)
                             retries=10,
                             linger_ms=0,
                             batch_size=16384 * 10,
                             max_request_size=1024 * 1024 * 10,
                             buffer_memory=1024 * 1024 * 256)
    results = df.to_dict('records')
    i = 0
    for result in results:
        result['tbl_name'] = table_name
        # default=str encodes values json rejects (e.g. Decimal, Timestamp) as strings instead of raising
        js = json.dumps(result, default=str).encode('utf-8')
        # print(js)
        producer.send(topic=topic, value=js)
        i += 1
    # send() is asynchronous: flush so buffered messages are delivered before the task ends
    producer.flush()
    producer.close()
    print('------- rows written to Kafka:', i)
```
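Rows coming back from Impala often contain `Decimal` or datetime values, and the standard `json` module raises `TypeError` on those. Here is a per-row encoder sketch that shows the effect of `default=str` and adds `tbl_name` without mutating the caller's row. `record_to_message` is a hypothetical name; it only covers the encoding step, not the Kafka producer.

```python
import json
from datetime import datetime
from decimal import Decimal


def record_to_message(record: dict, table_name: str) -> bytes:
    """Encode one row (as produced by df.to_dict('records')) as a Kafka message value.

    default=str turns values json cannot encode natively (Decimal, datetime,
    pandas Timestamp, ...) into their string form instead of raising TypeError.
    """
    message = dict(record, tbl_name=table_name)  # copy, so the caller's row is untouched
    return json.dumps(message, default=str).encode("utf-8")


# Example row with types json cannot encode natively
example = record_to_message({"amount": Decimal("9.90"), "dt": datetime(2023, 4, 19)}, "demo_table")
```

Consumers then receive those fields as strings, such as `"9.90"` and `"2023-04-19 00:00:00"`, and must parse them back if they need the original types.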

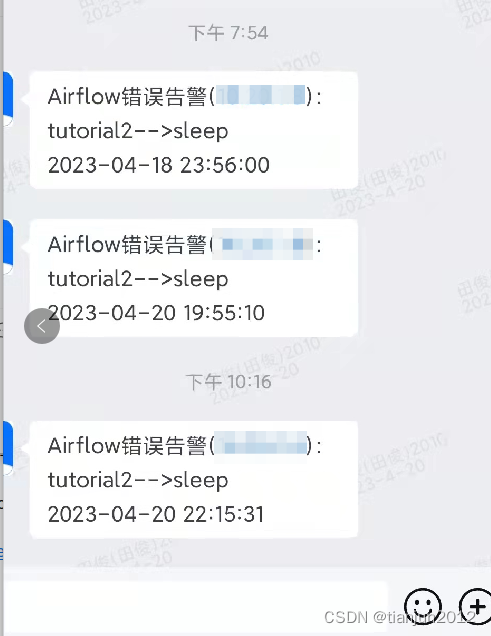

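```python
def send_msg_2_wechat(context):
    """
    on_failure_callback: send a failure alert to WeChat.
    :param context: Airflow task context passed to the callback
    :return: None
    """
    ti = context['task_instance']
    dag_id = ti.dag_id
    task_id = ti.task_id  # ti.task is the operator object, not its id
    execution_date = context['execution_date']
    # Named time_str so it does not shadow the imported `time` module
    time_str = execution_date.in_timezone("Asia/Shanghai").strftime("%Y-%m-%d %H:%M:%S")
    url = 'http://*******'  # endpoint elided in the original
    user_ids = [...]  # user IDs elided in the original
    msg = f'Airflow failure alert: {dag_id}_{task_id}_{time_str}'
    print(msg)
    content = {'content': msg}
    headers = {'x-tenant-id': '9', 'content-type': 'application/json'}
    params = {'userIds': user_ids, 'magType': 'text', 'content': json.dumps(content)}
    # response = requests.post(url=url, data=json.dumps(params), headers=headers)
    # print(response.text)
    print("---- alert sent to WeChat ----")


if __name__ == '__main__':
    # Local smoke test: the callback needs a context, so build a minimal fake one
    from types import SimpleNamespace

    print('--send msg to wechat--')
    fake_ti = SimpleNamespace(dag_id='test_dag', task_id='test_task')
    send_msg_2_wechat({'task_instance': fake_ti, 'execution_date': pendulum.now()})
```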
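Since the actual `requests.post` is commented out above, it can help to check the request body on its own. Note the double encoding: `{'content': msg}` is first serialized to a JSON string, and that string is then embedded in the outer JSON body. The sketch below builds the same headers and body. The field names (`userIds`, `magType`, `x-tenant-id`) are copied from the author's code for their internal gateway. `build_wechat_request` is hypothetical, and the timestamp is omitted for brevity.

```python
import json
from types import SimpleNamespace


def build_wechat_request(context: dict, user_ids: list, tenant_id: str = "9"):
    """Return (headers, body) in the same shape send_msg_2_wechat would post."""
    ti = context["task_instance"]
    msg = f"Airflow failure alert: {ti.dag_id}_{ti.task_id}"
    headers = {"x-tenant-id": tenant_id, "content-type": "application/json"}
    params = {"userIds": user_ids, "magType": "text",
              # inner JSON string embedded in the outer JSON body
              "content": json.dumps({"content": msg})}
    return headers, json.dumps(params)


# Local check without Airflow: a SimpleNamespace stands in for the TaskInstance
fake_context = {"task_instance": SimpleNamespace(dag_id="tutorial2", task_id="sleep")}
headers, body = build_wechat_request(fake_context, user_ids=[1, 2])
```

The receiving side has to call `json.loads` twice (once on the body, once on `content`) to recover the message text.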

- dag-tutorial scheduling test

```python
from datetime import datetime, timedelta
from textwrap import dedent

import pendulum

# The DAG object; we'll need this to instantiate a DAG
from airflow import DAG

# Operators; we need this to operate!
from airflow.operators.bash import BashOperator

from utils import send_msg_2_wechat

with DAG(
    "tutorial2",
    # These args will get passed on to each operator
    # You can override them on a per-task basis during operator initialization
    default_args={
        "depends_on_past": False,
        "email": ["tianjun@yijiupi.com"],
        "email_on_failure": True,
        "email_on_retry": False,
        "retries": 1,
        "retry_delay": timedelta(seconds=10),
        # 'queue': 'bash_queue',
        # 'pool': 'backfill',
        # 'priority_weight': 10,
        # 'end_date': datetime(2016, 1, 1),
        # 'wait_for_downstream': False,
        # 'sla': timedelta(hours=2),
        # 'execution_timeout': timedelta(seconds=300),
        'on_failure_callback': send_msg_2_wechat,
        # 'on_success_callback': some_other_function,
        # 'on_retry_callback': another_function,
        # 'sla_miss_callback': yet_another_function,
        # 'trigger_rule': 'all_success'
    },
    description="A simple tutorial DAG",
    schedule="56 23 * * *",
    start_date=pendulum.datetime(2017, 1, 1, tz=pendulum.timezone("Asia/Chongqing")),
    catchup=False,
    tags=["example"],
) as dag:

    # t1, t2 and t3 are examples of tasks created by instantiating operators
    t1 = BashOperator(
        task_id="print_date",
        bash_command="date",
    )

    t2 = BashOperator(
        task_id="sleep",
        depends_on_past=False,
        bash_command="sleep 5 && exit -1",  # fails on purpose to exercise retries and the alerts
        retries=3,
    )

    t1.doc_md = dedent(
        """\
    #### Task Documentation
    You can document your task using the attributes `doc_md` (markdown),
    `doc` (plain text), `doc_rst`, `doc_json`, `doc_yaml` which gets
    rendered in the UI's Task Instance Details page.
    **Image Credit:** Randall Munroe, [XKCD](https://xkcd.com/license.html)
    """
    )

    dag.doc_md = __doc__  # providing that you have a docstring at the beginning of the DAG; OR
    # dag.doc_md = """
    # This is a documentation placed anywhere
    # """  # otherwise, type it like this
    dag.doc_md = dedent(
        """\
    #### Task Documentation
    You can document your task using the attributes `doc_md` (markdown),
    `doc` (plain text), `doc_rst`, `doc_json`, `doc_yaml` which gets
    rendered in the UI's Task Instance Details page.
    **Image Credit:** Randall Munroe, [XKCD](https://xkcd.com/license.html)
    """
    )

    templated_command = dedent(
        """
    {% for i in range(5) %}
        echo "{{ ds }}"
        echo "{{ macros.ds_add(ds, 7)}}"
    {% endfor %}
    """
    )

    t3 = BashOperator(
        task_id="templated",
        depends_on_past=False,
        bash_command=templated_command,
    )

    t1 >> [t2, t3]
```
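In `templated_command`, `ds` is the run's logical date as `YYYY-MM-DD`, and `macros.ds_add(ds, 7)` shifts that string by seven days. The `t3` task therefore echoes both dates five times. Below is a plain-Python sketch of what the echo lines come out as, for reasoning about the template without running Airflow. `ds_add` here re-implements the documented behaviour of the macro, and `render_templated_command` is hypothetical. Real Jinja rendering also keeps the template's whitespace and blank lines, which this sketch drops.

```python
from datetime import datetime, timedelta


def ds_add(ds: str, days: int) -> str:
    """Same contract as Airflow's macros.ds_add: shift a YYYY-MM-DD string by `days`."""
    shifted = datetime.strptime(ds, "%Y-%m-%d") + timedelta(days=days)
    return shifted.strftime("%Y-%m-%d")


def render_templated_command(ds: str, repeat: int = 5) -> str:
    """Plain-Python equivalent of the echo lines produced by templated_command."""
    lines = []
    for _ in range(repeat):
        lines.append(f'echo "{ds}"')
        lines.append(f'echo "{ds_add(ds, 7)}"')
    return "\n".join(lines)
```

Month and year boundaries are handled by `timedelta`, so adding 7 days to `2023-12-28` gives `2024-01-04`.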

- cdh-kerberos scheduling test

```python
import datetime
from textwrap import dedent

import pendulum
from airflow import DAG
from airflow.operators.python import PythonOperator

from utils import read_sql, get_data_from_impala, df2kafka_dic, send_msg_2_wechat


def get_data_impala_2_kafka(path):
    # Yesterday as YYYYMMDD, substituted into each statement's {date_key} placeholder
    date_key = (datetime.datetime.now() - datetime.timedelta(days=1)).strftime('%Y%m%d')
    # Statements are separated by ';'; the piece after the last ';' is dropped
    for sql in read_sql(path).split(';')[:-1]:
        sql = sql.format(date_key=date_key)
        print(sql)
        df = get_data_from_impala(sql)
        df2kafka_dic(df, table_name='yjp_dm_ai.dm_ai_trd_features_user_bak')


with DAG(
    "ai-feature-user",
    # These args will get passed on to each operator
    # You can override them on a per-task basis during operator initialization
    default_args={
        "depends_on_past": False,
        "email": ["tianjun@yijiupi.com"],
        "email_on_failure": True,
        "email_on_retry": False,
        "retries": 2,
        "retry_delay": datetime.timedelta(minutes=1),
        # 'queue': 'bash_queue',
        # 'pool': 'backfill',
        # 'priority_weight': 10,
        # 'end_date': datetime(2016, 1, 1),
        # 'wait_for_downstream': False,
        # 'sla': timedelta(hours=2),
        # 'execution_timeout': timedelta(seconds=300),
        'on_failure_callback': send_msg_2_wechat,
        # 'on_success_callback': some_other_function,
        # 'on_retry_callback': another_function,
        # 'sla_miss_callback': yet_another_function,
        # 'trigger_rule': 'all_success'
    },
    description="user features",
    schedule="56 01 * * *",
    start_date=pendulum.datetime(2023, 4, 19, tz=pendulum.timezone("Asia/Shanghai")),
    catchup=False,
    tags=["ai-user-features"],
) as dag:

    t1 = PythonOperator(
        task_id='user-features',
        # provide_context=True is not passed: it was an Airflow 1.x option, and Airflow 2
        # rejects unknown operator arguments (the context is passed automatically)
        python_callable=get_data_impala_2_kafka,
        op_kwargs={'path': '/opt/airflow/dags/sql/user_features/user_features.sql'}
    )

    t1.doc_md = dedent(
        """
    #### User-side feature details...
    """
    )

    dag.doc_md = __doc__  # providing that you have a docstring at the beginning of the DAG; OR
    dag.doc_md = """
    User-side feature scheduling
    """  # otherwise, type it like this

    t1
```
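`get_data_impala_2_kafka` derives `date_key` from `now()`. Clearing and re-running an old task would therefore query the wrong day, and `split(';')[:-1]` silently drops a final statement that has no trailing `;`.

The sketch below is one alternative, under stated assumptions. It takes the date from the run's data interval start, converted to Beijing time, and keeps every non-empty statement. Both function names are hypothetical.

Airflow's built-in `ds_nodash` is derived from the logical date in UTC. For a 01:56 Beijing schedule that would be one day earlier, hence the explicit conversion. One possible wiring, not tested here, is to pass `"{{ data_interval_start.in_timezone('Asia/Shanghai').strftime('%Y%m%d') }}"` through the templated `op_kwargs`.

```python
from datetime import datetime, timedelta, timezone
from typing import List

SHANGHAI = timezone(timedelta(hours=8))  # fixed UTC+8, assumed equivalent to Asia/Shanghai


def date_key_from_interval_start(interval_start: datetime) -> str:
    """YYYYMMDD of the run's data interval start, in Beijing time.

    For a daily 01:56 schedule this is the previous calendar day, i.e. what
    now() - 1 day gives on an on-time run, but it stays stable on re-runs.
    """
    return interval_start.astimezone(SHANGHAI).strftime("%Y%m%d")


def render_statements(script: str, date_key: str) -> List[str]:
    """Split a ';'-separated SQL script and fill its {date_key} placeholders.

    Empty pieces are dropped, so a last statement without a trailing ';'
    is kept. Caveat: a ';' inside a string literal would still split.
    """
    return [stmt.strip().format(date_key=date_key)
            for stmt in script.split(";") if stmt.strip()]
```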

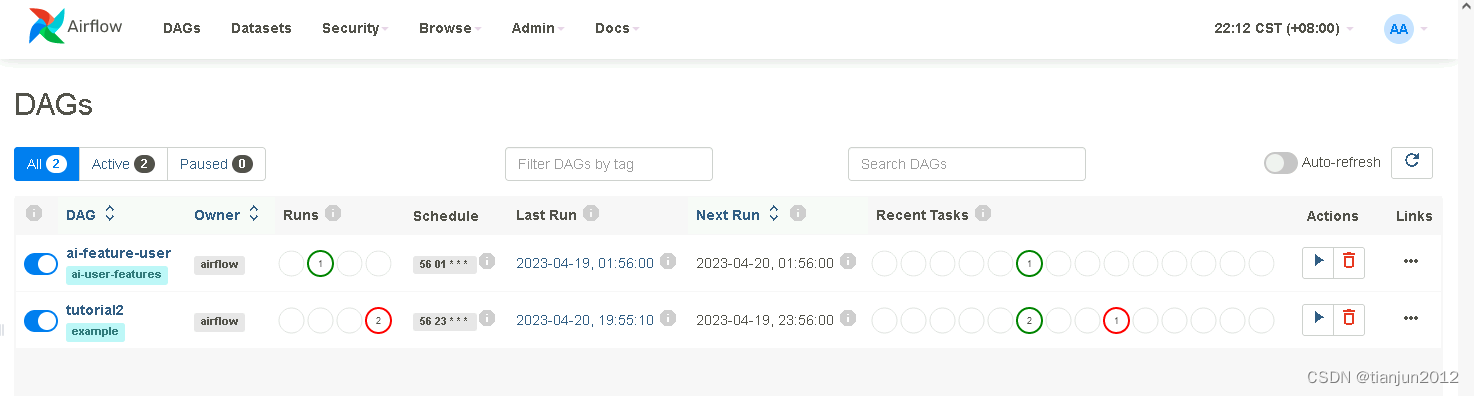

最后截图看看最后的成果:
