The algorithms we mentioned can solve the more general problem of learning to recognize whether a document belongs in one category or another.

Early attempts to filter spam were all rule-based classifiers, however, after learning those rules, spammers stopped exhibiting the obvious behaviors to get around the filters. To solve this problem, we can create separate instances and datasets based on both initially and as we receive more messages for individual users, groups, or sites.

STEP1: the extraction of features

The classifier that you will be building needs features to use for classifying different items. A feature is anything that you can determine as being either present or absent in the item.

STEP2: Training the Classifier

The classifiers learn how to classify a document by being trained. These classifiers are specifically designed to start off very uncertain and increase in certainty as it learns which features are important for making a distinction.

We can easily get the conditional probability -” the probability of A given B ” which is also written as Pr(A|B) . In our case, we can get

However, using only the information it has seen so far makes it incredibly sensitive during early training and to words that appear very rarely. To get around this problem, we’ll need to decide on an assumed probability, which will be used when we have very little information about the feature in question.Besides, we need to decide how much to weight the assumed probability.

we define the new probability as below:

The introduction of Classifiers

A Naive Classifier

Some introduction of naive classifier is discussed in

朴素贝叶斯学习笔记

In our case, we assume that the probability of one word in the document being in a specific category is unrelated to the probability of the other words being in that category. We can easily get:

Pr(C|D)=Pr(D|C)×Pr(C)/Pr(D)

where C means Category and D means Document

The next step in building the naive Bayes classifier is actually deciding in which category a new item belongs. In some applications it’s better for the classifier to admit that it doesn’t know the answer than to decide that the answer is the category with a marginally higher probability.

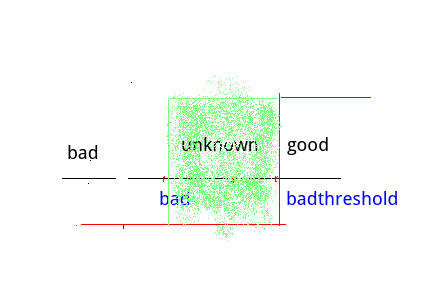

We set a threshold to show this idea.

when Pr(C|D)>Pr(Cbest|D)×threshold we can say this document belongs to “good” category, if not , it belongs to “unknown” category.The Fisher Method

Unlike the naive Bayesian filter, which uses the feature probabilities to create a whole document probability, the Fisher method calculates the probability of a category for each feature in the document, then combines the probabilities and tests to see if the set of probabilities is more or less likely than a random set.

P(Cj|Fi)=P(Fi|Cj)/∑k(Fk|Cj)

where Cj means the j th category,Fk means the k <script id="MathJax-Element-1753" type="math/tex">k</script>th feature.

class classifier:

def __init__(self,getfeatures,filename=None):

self.fc={}

self.cc={}

self.getfeatures=getfeatures

self.thresholds={}

def setthreshold(self,cat,t):

self.thresholds[cat]=t

def getthreshold(self,cat):

if cat not in self.thresholds:

return 1.0

return self.thresholds[cat]

def classify(self,item,default=None):

probs={}

max=0.0

for cat in self.categories():

probs[cat]=self.prob(item,cat)

if probs[cat]>max:

max=probs[cat]

best=cat

for cat in probs:

if cat==best:

continue

if probs[cat]*self.getthreshold(best)>probs[best]:

return default

return best

def incf(self,f,cat):

self.fc.setdefault(f,{})

self.fc[f].setdefault(cat,0)

self.fc[f][cat]+=1

def incc(self,cat):

self.cc.setdefault(cat,0)

self.cc[cat]+=1

def fcount(self,f,cat):

if f in self.fc and cat in self.fc[f]:

return float(self.fc[f][cat])

return 0.0

def catcount(self,cat):

if cat in self.cc:

return float(self.cc[cat])

return 0

def totalcount(self):

return sum(self.cc.values())

def categories(self):

return self.cc.keys()

def train(self,item,cat):

features=self.getfeatures(item)

for f in features:

self.incf(f,cat)

self.incc(cat)

########################################################

def ffcount(self,f):

if f in self.fc:

return sum(self.fc[f].values())

def ttotal(self):

s=0.0

for f in self.fc:

s+=self.ffcount(f)

return s

def docprob(self,item):

features=self.getfeatures(item)

p=1

for f in features:

p*=(self.ffcount(f)/self.ttotal())

return p

########################################################

def fprob(self,f,cat):

if self.catcount(cat)==0:

return 0

return self.fcount(f,cat)/self.catcount(cat)

def weightedprob(self,f,cat,prf,weight=1.0,ap=0.5):

basicprob=prf(f,cat)

totals=sum([self.fcount(f,c) for c in self.categories()])

bp=((weight*ap)+(totals*basicprob))/(weight+totals)

return bp

class naivebayes(classifier):

def docprob(self,item,cat):

features=self.getfeatures(item)

p=1

for f in features:

p*=self.weightedprob(f,cat,self.fprob)

return p

def prob(self,item,cat):

catprob=self.catcount(cat)/self.totalcount()

docprob=self.docprob(item,cat)

return catprob*docprob

class fisherclassifier(classifier):

def cprob(self,f,cat):

clf=self.fprob(f,cat)

if clf==0:

return 0

freqsum=sum([self.fprob(f,c) for c in self.categories()])

p=clf/(freqsum)

return p

def fisherprob(self,item,cat):

p=1

features=self.getfeatures(item)

for f in features:

p*=(self.weightedprob(f,cat,self.cprob))

fscore=-2*math.log(p)

return self.invchi2(fscore,len(features)*2)

def invchi2(self,chi,df):

m=chi/2.0

sum1 =term=math.exp(-m)

for i in range(1,df//2):

term *=m/i

sum1+=term

return min(sum1,1.0)REFERENCE

《Programming Collective Intelligence》

764

764

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?