
Main Concepts

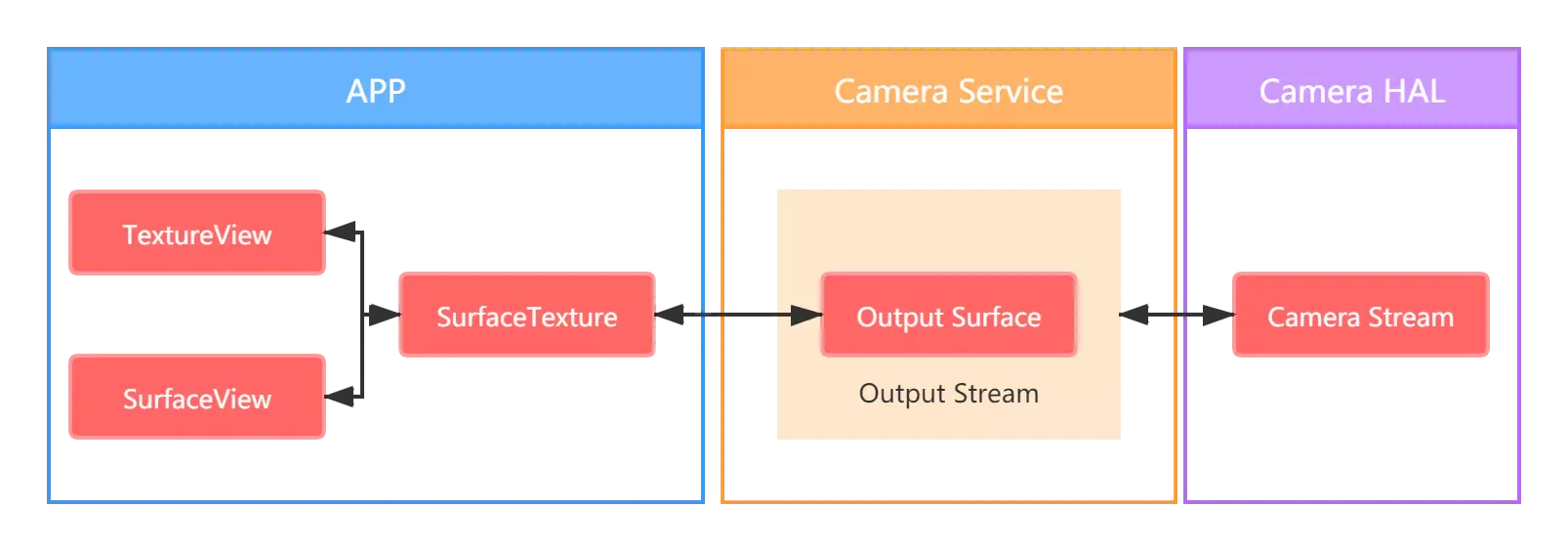

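Implementing the camera preview is the first step toward building more complex features with Camera2, and the key question at this step is how to get data from the camera. The preview rests on four key elements: CameraDevice, CaptureSession, CameraManager, and Surface.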

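In short, a CameraDevice represents a camera, CameraManager is a system service that lets us interact with CameraDevice objects, a Surface is the object the camera service outputs to, and a CaptureSession sets up output from a CameraDevice to one or more Surfaces.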

Ⅰ CameraManager

CameraManager is a system service; just obtain it before you use it.

```kotlin
// private lateinit var cameraManager: CameraManager

cameraManager = getSystemService(Context.CAMERA_SERVICE) as CameraManager
```

Ⅱ CameraDevice

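Each camera is a CameraDevice, and one CameraDevice can output several streams at once, that is, it can send the camera image to multiple Surfaces (which lets us preview, take photos, and record video at the same time). After connecting to a camera with `CameraManager.openCamera`, we receive a CameraDevice object in the `onOpened` callback.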
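The camera id passed to `openCamera` has to be chosen first. As a plain-Kotlin sketch of that choice (no Android APIs, so it can be tried anywhere), the hypothetical `CameraInfo` and `Facing` types below stand in for the ids from `cameraManager.cameraIdList` and the `CameraCharacteristics.LENS_FACING` value of each id. The fallback to the first listed camera is this sketch's own assumption.

```kotlin
// Hypothetical stand-in for the CameraCharacteristics.LENS_FACING values
enum class Facing { FRONT, BACK, EXTERNAL }

// Hypothetical summary of one entry of cameraManager.cameraIdList
data class CameraInfo(val id: String, val facing: Facing)

/**
 * Returns the id of the first camera facing [preferred] (back by default),
 * otherwise the first camera of any kind, or null if the list is empty.
 */
fun pickCameraId(cameras: List<CameraInfo>, preferred: Facing = Facing.BACK): String? =
    cameras.firstOrNull { it.facing == preferred }?.id
        ?: cameras.firstOrNull()?.id
```

On a device the list would be built by mapping each id through `getCameraCharacteristics(id)`. Returning `null` for an empty list lets the caller report an error instead of leaving a `lateinit` id uninitialized.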

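```kotlin
private lateinit var cameraDevice: CameraDevice

private val stateCallback = object : CameraDevice.StateCallback() {
    override fun onOpened(camera: CameraDevice) {
        // The camera is open: keep the reference to create sessions and requests later
        cameraDevice = camera
    }

    // StateCallback is abstract, so these two must also be overridden
    override fun onDisconnected(camera: CameraDevice) {
        camera.close()
    }

    override fun onError(camera: CameraDevice, error: Int) {
        camera.close()
    }
}
```

Ⅲ Surface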

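A Surface is the object the CameraDevice outputs to. To go further and show the preview inside the app, simply use a TextureView, which supports animation, and convert its SurfaceTexture into a Surface.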

Ⅳ CameraCaptureSession

Creating the most basic CameraCaptureSession requires at least one output buffer (a Surface) and a callback (CameraCaptureSession.StateCallback).

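```kotlin
// outputs: List<Surface>, callback: CameraCaptureSession.StateCallback,
// handler: Handler? (callbacks run on its thread; null = the current thread's Looper).
// This overload is deprecated since API 30 in favor of createCaptureSession(SessionConfiguration).
cameraDevice.createCaptureSession(outputs, callback, handler)
```

Basic Framework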

My own rough understanding:

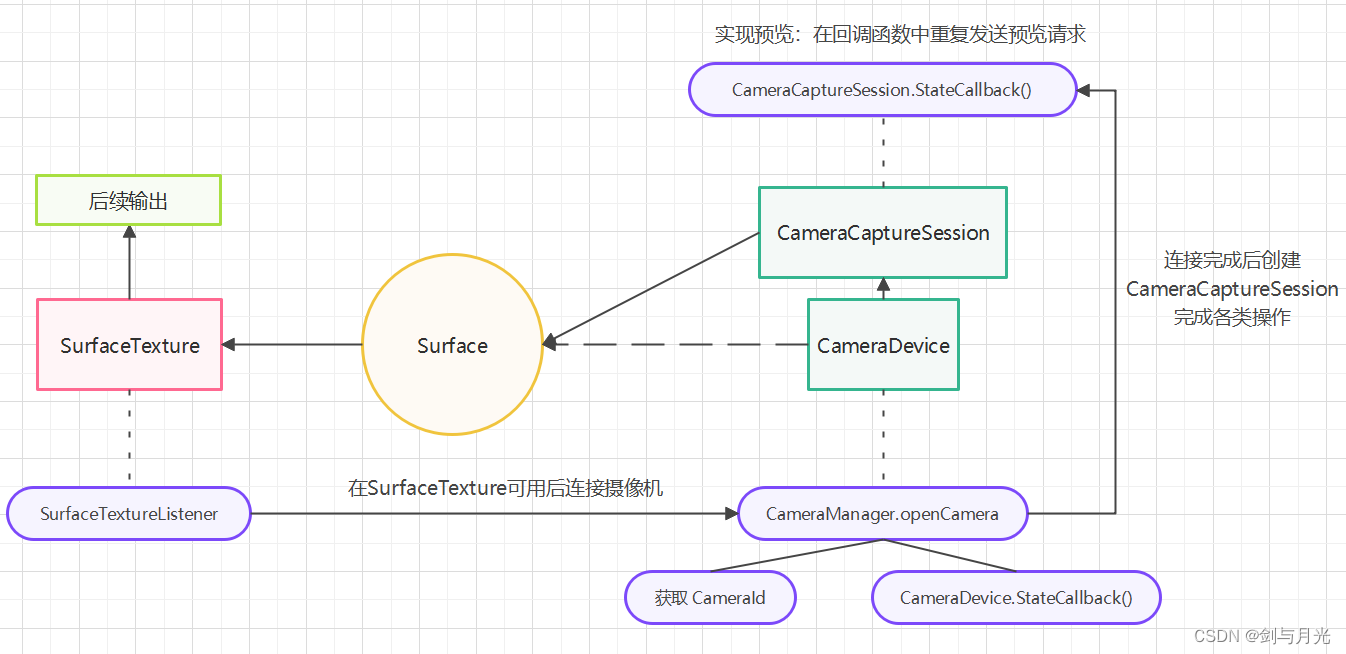

Logically, the backbone is a chain of the inner concepts: the CameraDevice sends data through the CaptureSession to different Surfaces, and the TextureView's SurfaceTexture receives it.
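One rule holds this chain together: a capture request may only target Surfaces that were configured as outputs of the session. The Camera2 reference for `setRepeatingRequest` describes an `IllegalArgumentException` when a request references no Surfaces or Surfaces not configured as outputs. The plain-Kotlin model below (a hypothetical `SessionModel`, with Surfaces represented by name strings) illustrates that constraint; it is not how Camera2 is implemented.

```kotlin
// Hypothetical model of a capture session: Surfaces are identified by names here,
// whereas on Android they would be android.view.Surface objects.
class SessionModel(outputs: List<String>) {
    private val configured = outputs.toSet()

    init {
        // A session needs at least one output Surface
        require(configured.isNotEmpty()) { "a session needs at least one output Surface" }
    }

    // Mirrors the documented rule: a request must target at least one Surface,
    // and every target must be one of this session's configured outputs.
    fun submit(targets: Set<String>): List<String> {
        require(targets.isNotEmpty()) { "request has no target Surface" }
        val unknown = targets - configured
        require(unknown.isEmpty()) { "targets not configured in this session: $unknown" }
        // Each targeted output receives the frame; sorted for a stable result
        return targets.sorted()
    }
}
```

In Camera2 terms, the Surface passed to `addTarget` on the preview request should be one of the Surfaces listed in `createCaptureSession`.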

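As for the implementation: because the SurfaceTexture sits at the receiving end, the camera should only be connected once the SurfaceTexture is available. So the camera can be connected inside the SurfaceTextureListener, and once it is connected, a CameraCaptureSession is created that outputs images to the SurfaceTexture.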
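Because the surface callback and the permission result can arrive in either order (the permission dialog may still be showing when the SurfaceTexture becomes available), opening the camera is really gated on two conditions. Below is a minimal, Android-free sketch of that gate; `PreviewStarter` is a hypothetical name, and it assumes it would be driven from `onSurfaceTextureAvailable` and from the permission result.

```kotlin
// Hypothetical start-up gate: the camera is opened exactly once, and only
// after both preconditions hold, whichever callback happens to arrive last.
class PreviewStarter(private val openCamera: () -> Unit) {
    private var surfaceReady = false
    private var permissionGranted = false
    private var opened = false

    // Would be called from onSurfaceTextureAvailable
    fun onSurfaceAvailable() {
        surfaceReady = true
        tryOpen()
    }

    // Would be called when the CAMERA permission is (or already was) granted
    fun onPermissionGranted() {
        permissionGranted = true
        tryOpen()
    }

    private fun tryOpen() {
        if (surfaceReady && permissionGranted && !opened) {
            opened = true
            openCamera()
        }
    }
}
```

A real activity would also reset `opened` after closing the camera (for example in `onPause`), so the preview can restart when the surface comes back.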

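In addition, the camera permission has to be requested at runtime and checked before use. If you skip that, you can still install the app and, once it crashes, grant the permission manually in the system Settings.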
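Rather than waiting for the crash, the outcome of the runtime request can be mapped to one of three actions. This sketch is plain Kotlin: the two Boolean inputs are what `ContextCompat.checkSelfPermission(...) == PackageManager.PERMISSION_GRANTED` and `ActivityCompat.shouldShowRequestPermissionRationale(...)` would return on a device, while `PermissionAction` and `decidePermissionAction` are hypothetical names. Treating a `false` rationale flag after a denial as "permanently denied" is a common heuristic, not a guarantee.

```kotlin
// Hypothetical outcome of a CAMERA permission request
enum class PermissionAction { START_PREVIEW, EXPLAIN_AND_ASK_AGAIN, OPEN_APP_SETTINGS }

/**
 * granted: whether the CAMERA permission is currently granted.
 * showRationale: whether the system suggests explaining the request.
 * After a denial, showRationale == false usually means the user chose
 * "Don't ask again" (or denied repeatedly), so only Settings can re-enable it.
 */
fun decidePermissionAction(granted: Boolean, showRationale: Boolean): PermissionAction = when {
    granted -> PermissionAction.START_PREVIEW
    showRationale -> PermissionAction.EXPLAIN_AND_ASK_AGAIN
    else -> PermissionAction.OPEN_APP_SETTINGS
}
```

`OPEN_APP_SETTINGS` corresponds to starting an `Intent` with `Settings.ACTION_APPLICATION_DETAILS_SETTINGS` and the app's package URI.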

Code Implementation (Kotlin)

Preparation

1. Declare the camera feature and permission in the AndroidManifest.xml file

```xml
<uses-feature android:name="android.hardware.camera.any" />
<uses-permission android:name="android.permission.CAMERA" />
```

2. In the layout file, create a TextureView and give it an id (the code below uses the id `textureView`)

3. Configure Gradle. I don't fully understand this part myself; you can refer to my configuration (at the end of the article)

MainActivity

**Add the imports**

```kotlin
import android.Manifest
import android.annotation.SuppressLint
import android.content.Context
import android.content.Intent
import android.content.pm.PackageManager
import android.graphics.SurfaceTexture
import android.hardware.camera2.*
import android.net.Uri
import android.os.*
import android.provider.Settings
import android.view.Surface
import android.view.TextureView
import android.widget.Toast
import androidx.appcompat.app.AppCompatActivity
import androidx.core.app.ActivityCompat
import androidx.core.content.ContextCompat

const val CAMERA_REQUEST_RESULT = 1

class MainActivity : AppCompatActivity() {

    private lateinit var textureView: TextureView
    private lateinit var cameraId: String
    private lateinit var cameraManager: CameraManager
    private lateinit var cameraDevice: CameraDevice
    private lateinit var captureRequestBuilder: CaptureRequest.Builder // builds the repeating preview request
    private lateinit var cameraCaptureSession: CameraCaptureSession

    override fun onCreate(savedInstanceState: Bundle?) {
        super.onCreate(savedInstanceState)
        setContentView(R.layout.activity_main)
        supportActionBar?.hide()

        // Initialize fields
        textureView = findViewById(R.id.textureView)
        cameraManager = getSystemService(Context.CAMERA_SERVICE) as CameraManager
        textureView.surfaceTextureListener = surfaceTextureListener

        // Check the permission; only CAMERA is declared in the manifest, so only CAMERA is requested
        if (!wasCameraPermissionWasGiven()) {
            ActivityCompat.requestPermissions(
                this, arrayOf(Manifest.permission.CAMERA), CAMERA_REQUEST_RESULT
            )
        }
    }

    // Choose the camera: the back camera, falling back to the first one listed
    private fun setupCamera() {
        val cameraIds: Array<String> = cameraManager.cameraIdList
        cameraId = cameraIds.firstOrNull { id ->
            cameraManager.getCameraCharacteristics(id)
                .get(CameraCharacteristics.LENS_FACING) == CameraCharacteristics.LENS_FACING_BACK
        } ?: cameraIds.first()
    }

    // Whether the CAMERA permission has been granted
    private fun wasCameraPermissionWasGiven(): Boolean =
        ContextCompat.checkSelfPermission(this, Manifest.permission.CAMERA) ==
            PackageManager.PERMISSION_GRANTED

    // Handle the result of the permission request
    override fun onRequestPermissionsResult(
        requestCode: Int,
        permissions: Array<out String>,
        grantResults: IntArray
    ) {
        super.onRequestPermissionsResult(requestCode, permissions, grantResults)
        if (requestCode != CAMERA_REQUEST_RESULT) return

        if (wasCameraPermissionWasGiven()) {
            // If the SurfaceTexture is already available, its callback already ran
            // without opening the camera, so trigger it again now
            if (textureView.isAvailable) {
                surfaceTextureListener.onSurfaceTextureAvailable(
                    textureView.surfaceTexture!!, textureView.width, textureView.height
                )
            }
        } else {
            Toast.makeText(this, "Camera permission is needed to run this application", Toast.LENGTH_LONG)
                .show()
            // No rationale after a denial usually means "Don't ask again": send the user to Settings
            if (!ActivityCompat.shouldShowRequestPermissionRationale(this, Manifest.permission.CAMERA)) {
                val intent = Intent(Settings.ACTION_APPLICATION_DETAILS_SETTINGS)
                intent.data = Uri.fromParts("package", packageName, null)
                startActivity(intent)
            }
        }
    }

    // Connect to the camera (callers check the permission first)
    @SuppressLint("MissingPermission")
    private fun connectCamera() {
        // A null Handler delivers callbacks on the current thread's Looper (the main thread here)
        cameraManager.openCamera(cameraId, cameraStateCallback, null)
    }

    // When the SurfaceTexture becomes available, set up the camera and open it
    private val surfaceTextureListener = object : TextureView.SurfaceTextureListener {
        override fun onSurfaceTextureAvailable(texture: SurfaceTexture, width: Int, height: Int) {
            if (wasCameraPermissionWasGiven()) {
                setupCamera()
                connectCamera()
            }
        }

        override fun onSurfaceTextureSizeChanged(texture: SurfaceTexture, width: Int, height: Int) {}

        // Returning true lets the TextureView release the SurfaceTexture itself
        override fun onSurfaceTextureDestroyed(texture: SurfaceTexture): Boolean = true

        override fun onSurfaceTextureUpdated(texture: SurfaceTexture) {}
    }

    // Once the camera device is open, create the CaptureSession
    private val cameraStateCallback = object : CameraDevice.StateCallback() {
        override fun onOpened(camera: CameraDevice) {
            cameraDevice = camera
            // By default, textureView.surfaceTexture has the same size as the textureView
            val surfaceTexture = textureView.surfaceTexture ?: return
            // Use one Surface both as the session output and as the request target
            val previewSurface = Surface(surfaceTexture)
            // Build a PREVIEW request; it is sent repeatedly once the session is configured
            captureRequestBuilder = cameraDevice.createCaptureRequest(CameraDevice.TEMPLATE_PREVIEW)
            captureRequestBuilder.addTarget(previewSurface)
            cameraDevice.createCaptureSession(listOf(previewSurface), captureStateCallback, null)
        }

        override fun onDisconnected(camera: CameraDevice) {
            camera.close()
        }

        override fun onError(camera: CameraDevice, error: Int) {
            camera.close()
        }
    }

    private val captureStateCallback = object : CameraCaptureSession.StateCallback() {
        override fun onConfigureFailed(session: CameraCaptureSession) {
            // Session could not be configured; error handling is left out of this example
        }

        // Once configured, send the preview request repeatedly
        override fun onConfigured(session: CameraCaptureSession) {
            cameraCaptureSession = session
            cameraCaptureSession.setRepeatingRequest(captureRequestBuilder.build(), null, null)
        }
    }
}
```
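The activity above does not release the camera when it goes to the background. Since `CameraDevice` and `CameraCaptureSession` both implement `AutoCloseable`, the release step can be sketched against that interface alone. The `PreviewResources` holder and calling `release()` from `onPause()` are assumptions of this sketch; closing the session before the device is a common ordering, not something the code above does.

```kotlin
// Hypothetical holder for the two Camera2 objects the activity keeps.
// CameraCaptureSession and CameraDevice both implement AutoCloseable.
class PreviewResources {
    var session: AutoCloseable? = null
    var device: AutoCloseable? = null

    // Close the session first, then the device, and drop both references
    // so that a second call (e.g. onPause followed by onDestroy) does nothing.
    fun release() {
        session?.close()
        session = null
        device?.close()
        device = null
    }
}
```

With the references cleared, the camera can be reopened from `onSurfaceTextureAvailable` (or from `onResume` when the TextureView is already available) without holding on to a stale device.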
