I. Android camera apps and Surface

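A camera app's preview is usually displayed through a SurfaceView, which acts as the display target:

    Surface surface = mSurfaceView.getHolder().getSurface();

The Surface obtained this way is passed down to the framework layer through the Camera API1/API2 interfaces.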

In API2, still capture is also implemented through a Surface:

    ImageReader captureReader = ImageReader.newInstance(width, height, format, maxNum);
    Surface surface = captureReader.getSurface();

Whether for preview or for capture, the data target is handed to the framework layer in the form of a Surface.

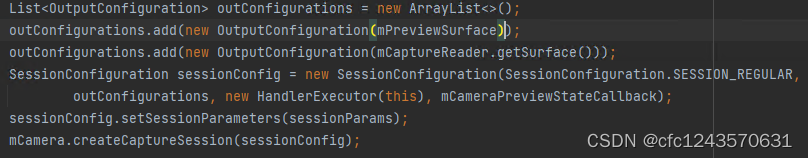

Each Surface is wrapped in an OutputConfiguration, and createCaptureSession is then called to configure the streams.

II. How the app-layer Surface reaches cameraserver

1. Start with two files: OutputConfiguration.java and OutputConfiguration.cpp.


Both the Java and the C++ OutputConfiguration are Parcelable. Parcelable is a serialization interface used to pass data across processes, so the Java OutputConfiguration is eventually unparceled into the C++ OutputConfiguration.
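The parcel round trip can be illustrated with a minimal, self-contained C++ sketch. It is only a toy model under stated assumptions: ToyParcel, ToyOutputConfiguration, roundTrip, and the three fields are hypothetical names chosen for illustration, and the real android::Parcel and OutputConfiguration carry far more state. The point it shows is that the sender and the receiver must write and read the fields in the same order.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Toy stand-in for a parcel: a flat sequence of 32-bit values (hypothetical type).
class ToyParcel {
public:
    void writeInt32(int32_t v) { mData.push_back(v); }

    // Returns false when there is nothing left to read.
    bool readInt32(int32_t* out) {
        if (mPos >= mData.size()) return false;
        *out = mData[mPos++];
        return true;
    }

private:
    std::vector<int32_t> mData;
    std::size_t mPos = 0;  // read cursor
};

// Toy stand-in for OutputConfiguration; the fields are illustrative only.
struct ToyOutputConfiguration {
    int32_t rotation = 0;
    int32_t surfaceSetId = -1;
    int32_t isShared = 0;

    // Sender side: flatten the fields in a fixed order.
    void writeToParcel(ToyParcel* p) const {
        p->writeInt32(rotation);
        p->writeInt32(surfaceSetId);
        p->writeInt32(isShared);
    }

    // Receiver side: read the fields back in exactly the same order.
    bool readFromParcel(ToyParcel* p) {
        return p->readInt32(&rotation) && p->readInt32(&surfaceSetId) &&
               p->readInt32(&isShared);
    }
};

// Simulates one trip across the process boundary.
inline ToyOutputConfiguration roundTrip(const ToyOutputConfiguration& in) {
    ToyParcel parcel;
    in.writeToParcel(&parcel);
    ToyOutputConfiguration out;
    bool ok = out.readFromParcel(&parcel);
    assert(ok);
    (void)ok;
    return out;
}
```

Calling roundTrip on a configuration yields a separate object with the same field values, which is roughly what the receiving side sees after unparceling.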

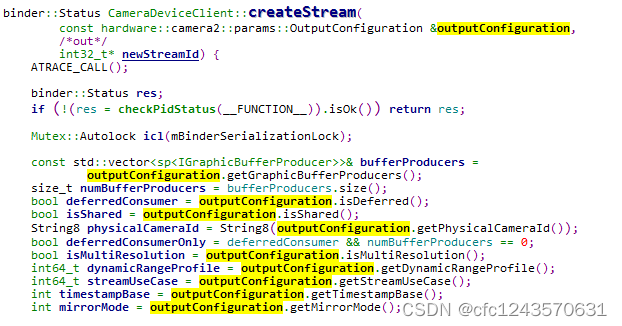

2. After createCaptureSession is called, the call chain runs all the way into the cameraserver module in the framework.

frameworks/av/camera/libcameraservice/api2/CameraDeviceClient.cpp

Stream configuration from the Java layer first reaches createStream in CameraDeviceClient.

The key item here is bufferProducers. Each entry is a surface.graphicBufferProducer, where surface is the native-layer Surface (frameworks/native/libs/gui/view/Surface.cpp) converted from the Java-layer Surface.

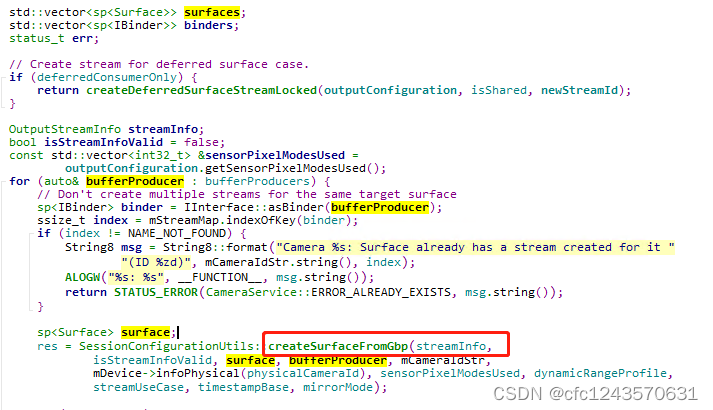

Next, the real Surface (frameworks/native/libs/gui/Surface.cpp) is created from bufferProducers.

Below is the main body of createSurfaceFromGbp, with the unimportant parts omitted.

    binder::Status createSurfaceFromGbp(
            OutputStreamInfo& streamInfo, bool isStreamInfoValid,
            sp<Surface>& surface, const sp<IGraphicBufferProducer>& gbp,
            const String8 &logicalCameraId, const CameraMetadata &physicalCameraMetadata,
            const std::vector<int32_t> &sensorPixelModesUsed, int64_t dynamicRangeProfile,
            int64_t streamUseCase, int timestampBase, int mirrorMode) {
        surface = new Surface(gbp, useAsync);
        ANativeWindow *anw = surface.get();
        int width, height, format;
        android_dataspace dataSpace;
        if ((err = anw->query(anw, NATIVE_WINDOW_WIDTH, &width)) != OK) {
            String8 msg = String8::format("Camera %s: Failed to query Surface width: %s (%d)",
                    logicalCameraId.string(), strerror(-err), err);
            ALOGE("%s: %s", __FUNCTION__, msg.string());
            return STATUS_ERROR(CameraService::ERROR_INVALID_OPERATION, msg.string());
        }
        if ((err = anw->query(anw, NATIVE_WINDOW_HEIGHT, &height)) != OK) {
            String8 msg = String8::format("Camera %s: Failed to query Surface height: %s (%d)",
                    logicalCameraId.string(), strerror(-err), err);
            ALOGE("%s: %s", __FUNCTION__, msg.string());
            return STATUS_ERROR(CameraService::ERROR_INVALID_OPERATION, msg.string());
        }
        if ((err = anw->query(anw, NATIVE_WINDOW_FORMAT, &format)) != OK) {
            String8 msg = String8::format("Camera %s: Failed to query Surface format: %s (%d)",
                    logicalCameraId.string(), strerror(-err), err);
            ALOGE("%s: %s", __FUNCTION__, msg.string());
            return STATUS_ERROR(CameraService::ERROR_INVALID_OPERATION, msg.string());
        }
        if ((err = anw->query(anw, NATIVE_WINDOW_DEFAULT_DATASPACE,
                reinterpret_cast<int*>(&dataSpace))) != OK) {
            String8 msg = String8::format("Camera %s: Failed to query Surface dataspace: %s (%d)",
                    logicalCameraId.string(), strerror(-err), err);
            ALOGE("%s: %s", __FUNCTION__, msg.string());
            return STATUS_ERROR(CameraService::ERROR_INVALID_OPERATION, msg.string());
        }
        ............
        if (!isStreamInfoValid) {
            streamInfo.width = width;
            streamInfo.height = height;
            streamInfo.format = format;
            streamInfo.dataSpace = dataSpace;
            streamInfo.consumerUsage = consumerUsage;
            streamInfo.sensorPixelModesUsed = overriddenSensorPixelModes;
            streamInfo.dynamicRangeProfile = dynamicRangeProfile;
            streamInfo.streamUseCase = streamUseCase;
            streamInfo.timestampBase = timestampBase;
            streamInfo.mirrorMode = mirrorMode;
            return binder::Status::ok();
        }
        .....
    }

3. With the newly created Surface and streamInfo in hand, the createStream flow continues:

    err = mDevice->createStream(surfaces, deferredConsumer, streamInfo.width,
            streamInfo.height, streamInfo.format, streamInfo.dataSpace,
            static_cast<camera_stream_rotation_t>(outputConfiguration.getRotation()),
            &streamId, physicalCameraId, streamInfo.sensorPixelModesUsed, &surfaceIds,
            outputConfiguration.getSurfaceSetID(), isShared, isMultiResolution,
            /*consumerUsage*/0, streamInfo.dynamicRangeProfile, streamInfo.streamUseCase,
            streamInfo.timestampBase, streamInfo.mirrorMode);

A note up front: the buffers behind this Surface are the very buffers that later travel down to the HAL with each request. In other words, the application layer never creates these buffers directly; they are obtained inside cameraserver, through the Surface.

frameworks/av/camera/libcameraservice/device3/Camera3Device.cpp

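createStream mainly creates a Camera3OutputStream object. The consumers argument holds the Surface created above, which is carried into Camera3OutputStream as well. Camera3OutputStream can be thought of simply as the manager of the output data buffers.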
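Stripped of buffer-size computation and error handling, the choice createStream makes among stream classes can be sketched as a small decision function. This is a simplified illustration, not the framework code: StreamKind, ToyFormat, and chooseStreamKind are hypothetical names, and the enum values merely label the branches visible in the createStream excerpt.

```cpp
#include <cassert>
#include <cstddef>

// Labels for the branches of createStream (descriptive names, not framework types).
enum class StreamKind {
    SizedOutput,     // Camera3OutputStream with an explicit max buffer size (BLOB, RAW_OPAQUE)
    SharedOutput,    // Camera3SharedOutputStream fed by several surfaces
    DeferredOutput,  // Camera3OutputStream created without a consumer yet
    PlainOutput      // Camera3OutputStream bound to consumers[0]
};

// Hypothetical format tags standing in for HAL_PIXEL_FORMAT_* values.
enum class ToyFormat { Blob, RawOpaque, Other };

// Checks run in the same order as the if / else-if chain in the excerpt:
// format-specific cases first, then shared, then deferred, then the default.
inline StreamKind chooseStreamKind(ToyFormat format, bool isShared,
                                   std::size_t consumerCount, bool hasDeferredConsumer) {
    if (format == ToyFormat::Blob || format == ToyFormat::RawOpaque) {
        return StreamKind::SizedOutput;
    }
    if (isShared) {
        return StreamKind::SharedOutput;
    }
    if (consumerCount == 0 && hasDeferredConsumer) {
        return StreamKind::DeferredOutput;
    }
    return StreamKind::PlainOutput;
}
```

Because the format checks come first, a BLOB stream takes the sized branch even when isShared is true, which matches the ordering of the branches in the excerpt.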

    status_t Camera3Device::createStream(const std::vector<sp<Surface>>& consumers,
            bool hasDeferredConsumer, uint32_t width, uint32_t height, int format,
            android_dataspace dataSpace, camera_stream_rotation_t rotation, int *id,
            const String8& physicalCameraId, const std::unordered_set<int32_t> &sensorPixelModesUsed,
            std::vector<int> *surfaceIds, int streamSetId, bool isShared, bool isMultiResolution,
            uint64_t consumerUsage, int64_t dynamicRangeProfile, int64_t streamUseCase,
            int timestampBase, int mirrorMode) {
        sp<Camera3OutputStream> newStream;
        if (format == HAL_PIXEL_FORMAT_BLOB) {
            ssize_t blobBufferSize;
            if (dataSpace == HAL_DATASPACE_DEPTH) {
                blobBufferSize = getPointCloudBufferSize(infoPhysical(physicalCameraId));
                if (blobBufferSize <= 0) {
                    SET_ERR_L("Invalid point cloud buffer size %zd", blobBufferSize);
                    return BAD_VALUE;
                }
            } else if (dataSpace == static_cast<android_dataspace>(HAL_DATASPACE_JPEG_APP_SEGMENTS)) {
                blobBufferSize = width * height;
            } else {
                blobBufferSize = getJpegBufferSize(infoPhysical(physicalCameraId), width, height);
                if (blobBufferSize <= 0) {
                    SET_ERR_L("Invalid jpeg buffer size %zd", blobBufferSize);
                    return BAD_VALUE;
                }
            }
            newStream = new Camera3OutputStream(mNextStreamId, consumers[0],
                    width, height, blobBufferSize, format, dataSpace, rotation,
                    mTimestampOffset, physicalCameraId, sensorPixelModesUsed, transport, streamSetId,
                    isMultiResolution, dynamicRangeProfile, streamUseCase, mDeviceTimeBaseIsRealtime,
                    timestampBase, mirrorMode);
        } else if (format == HAL_PIXEL_FORMAT_RAW_OPAQUE) {
            bool maxResolution =
                    sensorPixelModesUsed.find(ANDROID_SENSOR_PIXEL_MODE_MAXIMUM_RESOLUTION) !=
                            sensorPixelModesUsed.end();
            ssize_t rawOpaqueBufferSize = getRawOpaqueBufferSize(infoPhysical(physicalCameraId), width,
                    height, maxResolution);
            if (rawOpaqueBufferSize <= 0) {
                SET_ERR_L("Invalid RAW opaque buffer size %zd", rawOpaqueBufferSize);
                return BAD_VALUE;
            }
            newStream = new Camera3OutputStream(mNextStreamId, consumers[0],
                    width, height, rawOpaqueBufferSize, format, dataSpace, rotation,
                    mTimestampOffset, physicalCameraId, sensorPixelModesUsed, transport, streamSetId,
                    isMultiResolution, dynamicRangeProfile, streamUseCase, mDeviceTimeBaseIsRealtime,
                    timestampBase, mirrorMode);
        } else if (isShared) {
            newStream = new Camera3SharedOutputStream(mNextStreamId, consumers,
                    width, height, format, consumerUsage, dataSpace, rotation,
                    mTimestampOffset, physicalCameraId, sensorPixelModesUsed, transport, streamSetId,
                    mUseHalBufManager, dynamicRangeProfile, streamUseCase, mDeviceTimeBaseIsRealtime,
                    timestampBase, mirrorMode);
        } else if (consumers.size() == 0 && hasDeferredConsumer) {
            newStream = new Camera3OutputStream(mNextStreamId,
                    width, height, format, consumerUsage, dataSpace, rotation,
                    mTimestampOffset, physicalCameraId, sensorPixelModesUsed, transport, streamSetId,
                    isMultiResolution, dynamicRangeProfile, streamUseCase, mDeviceTimeBaseIsRealtime,
                    timestampBase, mirrorMode);
        } else {
            newStream = new Camera3OutputStream(mNextStreamId, consumers[0],
                    width, height, format, dataSpace, rotation,
                    mTimestampOffset, physicalCameraId, sensorPixelModesUsed, transport, streamSetId,
                    isMultiResolution, dynamicRangeProfile, streamUseCase, mDeviceTimeBaseIsRealtime,
                    timestampBase, mirrorMode);
        }
        newStream->setStatusTracker(mStatusTracker);
        newStream->setBufferManager(mBufferManager);
        newStream->setImageDumpMask(mImageDumpMask);
        res = mOutputStreams.add(mNextStreamId, newStream);
        mSessionStatsBuilder.addStream(mNextStreamId);
        *id = mNextStreamId++;
        mNeedConfig = true;
        return OK;

    }

    Camera3OutputStream::Camera3OutputStream(int id,
            sp<Surface> consumer,
            uint32_t width, uint32_t height, int format,
            android_dataspace dataSpace, camera_stream_rotation_t rotation,
            nsecs_t timestampOffset, const String8& physicalCameraId,
            const std::unordered_set<int32_t> &sensorPixelModesUsed, IPCTransport transport,
            int setId, bool isMultiResolution, int64_t dynamicRangeProfile,
            int64_t streamUseCase, bool deviceTimeBaseIsRealtime, int timestampBase,
            int mirrorMode) :
            Camera3IOStreamBase(id, CAMERA_STREAM_OUTPUT, width, height,
                    /*maxSize*/0, format, dataSpace, rotation,
                    physicalCameraId, sensorPixelModesUsed, setId, isMultiResolution,
                    dynamicRangeProfile, streamUseCase, deviceTimeBaseIsRealtime,
                    timestampBase),
            mConsumer(consumer), // At this point the Surface has been handed to the stream
            mTransform(0),
            mTraceFirstBuffer(true),
            mUseBufferManager(false),
            mTimestampOffset(timestampOffset),
            mConsumerUsage(0),
            mDropBuffers(false),
            mMirrorMode(mirrorMode),
            mDequeueBufferLatency(kDequeueLatencyBinSize),
            mIPCTransport(transport) {
    }

4. The buffer source is now in place and has been handed to Camera3OutputStream. What remains is how a buffer is taken out and used, which brings us to the request submission stage.

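Camera3Device has an internal thread, RequestThread, which keeps sending requests to the HAL. (HAL colleagues used to say that preview stutter came from the app submitting requests too slowly. In fact the app does not submit requests continuously; it submits one only when it actively changes parameters.) The two main pieces of this thread are shown below. The focus is on how the buffer is handled, so the request submission path itself is not followed in detail.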
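To make the point about the app not submitting a request per frame more concrete, here is a minimal, self-contained C++ sketch of the idea that a thread can keep reusing a repeating request until a one-shot request arrives. ToyRequestSource and its methods are hypothetical and are not part of Camera3Device; the actual RequestThread logic is considerably more involved, so treat this only as an illustration of the concept.

```cpp
#include <cassert>
#include <deque>
#include <string>

// Toy request source: one-shot captures take priority; otherwise the
// repeating request (if any) is handed out again and again.
class ToyRequestSource {
public:
    // The app calls this once, for example when preview starts or a setting changes.
    void setRepeating(const std::string& request) {
        mRepeating = request;
        mHasRepeating = true;
    }

    // The app calls this for a single capture, such as a still picture.
    void queueCapture(const std::string& request) { mOneShot.push_back(request); }

    // Called once per loop iteration by the (imaginary) request thread.
    // Returns false when there is nothing to send.
    bool next(std::string* out) {
        if (!mOneShot.empty()) {
            *out = mOneShot.front();
            mOneShot.pop_front();
            return true;
        }
        if (mHasRepeating) {
            *out = mRepeating;
            return true;
        }
        return false;
    }

private:
    std::deque<std::string> mOneShot;
    std::string mRepeating;
    bool mHasRepeating = false;
};
```

In this model the app touches the source only twice (setRepeating and queueCapture), while next can be called on every frame by the sending thread.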

    bool Camera3Device::RequestThread::threadLoop() {
        res = prepareHalRequests(); // prepare the request, including output_buffer
        bool submitRequestSuccess = false;
        submitRequestSuccess = sendRequestsBatch(); // submit the request
        return submitRequestSuccess;
    }

    status_t Camera3Device::RequestThread::prepareHalRequests() {
        ......
        res = outputStream->getBuffer(&outputBuffers->editItemAt(j),
                waitDuration,
                captureRequest->mOutputSurfaces[streamId]);
        ......
    }

    status_t Camera3Stream::getBuffer(camera_stream_buffer *buffer,
            nsecs_t waitBufferTimeout,
            const std::vector<size_t>& surface_ids) {
        res = getBufferLocked(buffer, surface_ids);
        return res;
    }

    status_t Camera3OutputStream::getBufferLocked(camera_stream_buffer *buffer,
            const std::vector<size_t>&) {
        ANativeWindowBuffer* anb;
        int fenceFd = -1;
        status_t res;
        res = getBufferLockedCommon(&anb, &fenceFd);
        handoutBufferLocked(*buffer, &(anb->handle), /*acquireFence*/fenceFd,
                /*releaseFence*/-1, CAMERA_BUFFER_STATUS_OK, /*output*/true);
        return OK;
    }

    status_t Camera3OutputStream::getBufferLockedCommon(ANativeWindowBuffer** anb, int* fenceFd) {
        if (!gotBufferFromManager) {
            sp<Surface> consumer = mConsumer;
            if (batchSize == 1) {
                sp<ANativeWindow> anw = consumer;
                res = anw->dequeueBuffer(anw.get(), anb, fenceFd);
            }
        }
    }

What handoutBufferLocked mainly does is the assignment buffer.buffer = handle.

    void Camera3IOStreamBase::handoutBufferLocked(camera_stream_buffer &buffer,
            buffer_handle_t *handle,
            int acquireFence,
            int releaseFence,
            camera_buffer_status_t status,
            bool output) {
        incStrong(this);
        buffer.stream = this;
        buffer.buffer = handle;
        buffer.acquire_fence = acquireFence;
        buffer.release_fence = releaseFence;
        buffer.status = status;
    }

That completes the picture of where the buffers in cameraserver come from.
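The whole path (the stream holds a Surface, dequeues a buffer from it, and writes the handle into the per-request buffer struct) can be condensed into a small, self-contained C++ model. Everything here is a toy under stated assumptions: ToyHandle, ToySurface, ToyStreamBuffer, and ToyOutputStream are hypothetical stand-ins for buffer_handle_t, Surface, camera_stream_buffer, and Camera3OutputStream, and fences, locking, and buffer return are left out.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Toy buffer handle; stands in for a buffer_handle_t owned by the Surface's queue.
struct ToyHandle {
    int id;
};

// Toy producer side of a buffer queue with a fixed pool of slots.
class ToySurface {
public:
    explicit ToySurface(std::size_t slots) {
        for (std::size_t i = 0; i < slots; ++i) {
            mSlots.push_back(ToyHandle{static_cast<int>(i)});
            mFree.push_back(i);
        }
    }

    // Hands out a free slot, or returns false when every slot is in flight.
    bool dequeueBuffer(ToyHandle** out) {
        if (mFree.empty()) return false;
        *out = &mSlots[mFree.back()];
        mFree.pop_back();
        return true;
    }

private:
    std::vector<ToyHandle> mSlots;   // never resized after construction
    std::vector<std::size_t> mFree;  // indices of slots not yet handed out
};

// Toy counterpart of camera_stream_buffer: what travels to the HAL in a request.
struct ToyStreamBuffer {
    ToyHandle* buffer = nullptr;
    int acquireFence = -1;
    int releaseFence = -1;
};

// Toy output stream: it allocates nothing itself and only borrows buffers
// from the consumer surface it was constructed with.
class ToyOutputStream {
public:
    explicit ToyOutputStream(ToySurface* consumer) : mConsumer(consumer) {}

    // Models getBuffer -> dequeueBuffer -> handout in a single step.
    bool getBuffer(ToyStreamBuffer* out) {
        ToyHandle* handle = nullptr;
        if (!mConsumer->dequeueBuffer(&handle)) return false;
        out->buffer = handle;    // the key assignment: buffer.buffer = handle
        out->acquireFence = -1;  // no fences in this toy model
        out->releaseFence = -1;
        return true;
    }

private:
    ToySurface* mConsumer;
};
```

With a two-slot ToySurface, two calls to getBuffer succeed with distinct handles and a third fails until a buffer is returned, which this sketch deliberately does not model.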
