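I previously wrote about building FFmpeg with freetype support so that text can be drawn onto video.

In that article, however, the font was selected by setting the following option in the drawtext filter:

fontfile=zihun152hao-jijiachaojihei.ttf

This is not how one would normally write it:

1. It requires a local .ttf font file, which is inconvenient.

2. Normally we specify a font by name, such as Microsoft YaHei or SimSun, rather than pointing at a specific font file.

What we want is to type a command like the following and have the text drawn onto the video: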

ffmpeg -i 2022-01-16T12-15-37.mp4 -vf "drawtext=fontcolor=red:fontsize=80:font=SimHei:text='你好,我来了':x=100:y=200" output_drawtext.mp4

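Things did not go as hoped: the command fails with an error.

The font option is not supported. To support it, first build the fontconfig library, then add the following option when configuring FFmpeg:

--enable-libfontconfig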

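For building fontconfig, I referred to the following article:

vs2013编译ffmpeg之四 fontconfig、freetype、libiconv、libxml2、fribidi ("Building FFmpeg with VS2013, part 4: fontconfig, freetype, libiconv, libxml2, fribidi")

I built with VS2017. The build is rather troublesome, fontconfig especially. I used the fontconfig from the repository below, which ships a ready-made Visual Studio project.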

https://github.com/ShiftMediaProject/fontconfig

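After building it, configuring FFmpeg still failed, so I also had to modify the fontconfig.pc file. The important line is:

Libs: -L${libdir} -lfontconfig -lws2_32 -lmsvcrt

Here I additionally link ws2_32.lib and msvcrt.lib. Without these two libraries, FFmpeg's configure step fails, and ffbuild/config.log fills up with link errors. The complete fontconfig.pc is: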

prefix=/usr/local

exec_prefix=/usr/local

libdir=/usr/local/lib

includedir=/usr/local/include

Name: Fontconfig

Description: Font configuration and customization library

Version: 2.13.1

Requires:

Requires.private:

Libs: -L${libdir} -lfontconfig -lws2_32 -lmsvcrt

Libs.private: -liconv

Cflags: -I${includedir}

Once FFmpeg has been built with fontconfig, the text-drawing command below runs correctly. The font I used is SimHei (黑体).

ffmpeg -i 2022-01-16T12-15-37.mp4 -vf "drawtext=fontcolor=red:fontsize=80:font=SimHei:text='你好,我来了':x=100:y=200" output_drawtext.mp4
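
The drawtext arguments in the command above are a colon-separated list of key=value pairs, and the same string has to be assembled by hand when the filter is created from code. The following is a minimal sketch of one way to build it, not the method used in the project below. `QuoteFilterValue` and `BuildDrawTextArgs` are hypothetical helper names. The sketch assumes a single level of FFmpeg option parsing (the arguments are handed straight to the filter, not through a shell or a full filtergraph string) and assumes the text contains no `%` or backslash, which drawtext treats specially in its default expansion mode.

```cpp
#include <string>

// Quote a value for one level of FFmpeg option parsing: wrap it in single
// quotes and turn every embedded ' into '\'' (close the quote, emit an
// escaped quote, reopen the quote).
std::string QuoteFilterValue(const std::string &value)
{
    std::string out = "'";
    for (char c : value) {
        if (c == '\'') {
            out += "'\\''";
        } else {
            out += c;
        }
    }
    out += "'";
    return out;
}

// Hypothetical helper: assemble the drawtext argument string with the same
// options as the command line in this article. utf8Text must already be UTF-8.
std::string BuildDrawTextArgs(const std::string &font, const std::string &utf8Text,
                              const std::string &color, int fontSize, int x, int y)
{
    return "fontcolor=" + color +
           ":fontsize=" + std::to_string(fontSize) +
           ":font=" + QuoteFilterValue(font) +
           ":text=" + QuoteFilterValue(utf8Text) +
           ":x=" + std::to_string(x) +
           ":y=" + std::to_string(y);
}
```

Building the string with std::string avoids the fixed-size buffer and the truncation risk that come with a printf-style approach. Quoting the font name as well keeps font names containing spaces in one piece.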

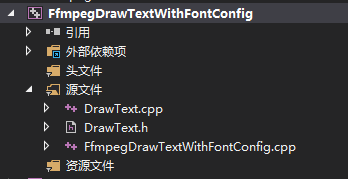

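In addition, I wrote code that adds text to a video file programmatically. The project is made up of the source files listed below.

The contents of FfmpegDrawTextWithFontConfig.cpp are as follows: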

#include <iostream>

#include "DrawText.h"

#include <vector>

#ifdef __cplusplus

extern "C"

{

#endif

#pragma comment(lib, "avcodec.lib")

#pragma comment(lib, "avformat.lib")

#pragma comment(lib, "avutil.lib")

#pragma comment(lib, "avdevice.lib")

#pragma comment(lib, "avfilter.lib")

#pragma comment(lib, "postproc.lib")

#pragma comment(lib, "swresample.lib")

#pragma comment(lib, "swscale.lib")

#ifdef __cplusplus

};

#endif

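// Despite its name, this converts a string in the system ANSI code page (CP_ACP) to UTF-8, going through UTF-16.

std::string Unicode_to_Utf8(const std::string & str)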

{

int nwLen = ::MultiByteToWideChar(CP_ACP, 0, str.c_str(), -1, NULL, 0);

wchar_t * pwBuf = new wchar_t[nwLen + 1];// the +1 is required, otherwise trailing garbage can appear

ZeroMemory(pwBuf, nwLen * 2 + 2);

::MultiByteToWideChar(CP_ACP, 0, str.c_str(), str.length(), pwBuf, nwLen);

int nLen = ::WideCharToMultiByte(CP_UTF8, 0, pwBuf, -1, NULL, NULL, NULL, NULL);

char * pBuf = new char[nLen + 1];

ZeroMemory(pBuf, nLen + 1);

::WideCharToMultiByte(CP_UTF8, 0, pwBuf, nwLen, pBuf, nLen, NULL, NULL);

std::string retStr(pBuf);

delete[]pwBuf;

delete[]pBuf;

pwBuf = NULL;

pBuf = NULL;

return retStr;

}

int main()

{

CDrawText cVideoDrawText;

const char *pFileA = "E:\\learn\\ffmpeg\\FfmpegFilterTest\\x64\\Release\\in-computer.mp4";

const char *pFileOut = "E:\\learn\\ffmpeg\\FfmpegFilterTest\\x64\\Release\\in-computer_drawtext_fontconfig.mp4";

std::string strText = "牛逼哄哄";

strText = Unicode_to_Utf8(strText);

cVideoDrawText.StartDrawText(pFileA, pFileOut, 100, 300, 160, strText);

cVideoDrawText.WaitFinish();

return 0;

}
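
main converts the text before passing it on because drawtext expects UTF-8, while a narrow string literal compiled on Chinese Windows is usually in the local ANSI code page. As a rough, portable sketch (it is not part of the original project), a check like the one below can catch a string that was never converted. `IsValidUtf8` is a hypothetical helper name.

```cpp
#include <cstddef>
#include <string>

// Return true if s is well-formed UTF-8: correct lead/continuation bytes,
// no overlong encodings, no surrogates, nothing above U+10FFFF.
bool IsValidUtf8(const std::string &s)
{
    const unsigned char *p = reinterpret_cast<const unsigned char *>(s.data());
    const std::size_t n = s.size();
    // Smallest code point that legitimately needs 2, 3 or 4 bytes.
    static const unsigned int minCodePoint[5] = {0, 0, 0x80, 0x800, 0x10000};

    std::size_t i = 0;
    while (i < n) {
        const unsigned char c = p[i];
        std::size_t len = 0;
        unsigned int cp = 0;
        if (c < 0x80)                { len = 1; cp = c; }
        else if ((c & 0xE0) == 0xC0) { len = 2; cp = c & 0x1F; }
        else if ((c & 0xF0) == 0xE0) { len = 3; cp = c & 0x0F; }
        else if ((c & 0xF8) == 0xF0) { len = 4; cp = c & 0x07; }
        else return false;                       // stray continuation or invalid lead byte

        if (i + len > n) return false;           // sequence is cut off
        for (std::size_t k = 1; k < len; ++k) {
            if ((p[i + k] & 0xC0) != 0x80) return false;
            cp = (cp << 6) | (p[i + k] & 0x3F);
        }
        if (len > 1 && cp < minCodePoint[len]) return false;  // overlong encoding
        if (cp >= 0xD800 && cp <= 0xDFFF) return false;       // UTF-16 surrogate
        if (cp > 0x10FFFF) return false;
        i += len;
    }
    return true;
}
```

A GBK-encoded Chinese string will usually fail this check, so it can serve as a cheap guard before the text reaches the filter. It cannot prove that the text is the intended one, only that the bytes form valid UTF-8.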

The contents of DrawText.h are as follows:

#pragma once

#include <Windows.h>

#include <string>

#ifdef __cplusplus

extern "C"

{

#endif

#include "libavcodec/avcodec.h"

#include "libavformat/avformat.h"

#include "libswscale/swscale.h"

#include "libswresample/swresample.h"

#include "libavdevice/avdevice.h"

#include "libavutil/audio_fifo.h"

#include "libavutil/avutil.h"

#include "libavutil/fifo.h"

#include "libavutil/frame.h"

#include "libavutil/imgutils.h"

#include "libavfilter/avfilter.h"

#include "libavfilter/buffersink.h"

#include "libavfilter/buffersrc.h"

#ifdef __cplusplus

};

#endif

class CDrawText

{

public:

CDrawText();

~CDrawText();

public:

int StartDrawText(const char *pFileA, const char *pFileOut, int x, int y, int iFontSize, std::string strText);

int WaitFinish();

private:

int OpenFileA(const char *pFileA);

int OpenOutPut(const char *pFileOut);

int InitFilter(const char* filter_desc);

private:

static DWORD WINAPI VideoAReadProc(LPVOID lpParam);

void VideoARead();

static DWORD WINAPI VideoDrawTextProc(LPVOID lpParam);

void VideoDrawText();

private:

AVFormatContext *m_pFormatCtx_FileA = NULL;

AVCodecContext *m_pReadCodecCtx_VideoA = NULL;

AVCodec *m_pReadCodec_VideoA = NULL;

AVCodecContext *m_pCodecEncodeCtx_Video = NULL;

AVFormatContext *m_pFormatCtx_Out = NULL;

AVFifoBuffer *m_pVideoAFifo = NULL;

int m_iVideoWidth = 1920;

int m_iVideoHeight = 1080;

int m_iYuv420FrameSize = 0;

private:

AVFilterGraph* m_pFilterGraph = NULL;

AVFilterContext* m_pFilterCtxSrcVideoA = NULL;

AVFilterContext* m_pFilterCtxSink = NULL;

private:

CRITICAL_SECTION m_csVideoASection;

HANDLE m_hVideoAReadThread = NULL;

HANDLE m_hVideoDrawTextThread = NULL;

};

The contents of DrawText.cpp are as follows:

#include "DrawText.h"

//#include "log/log.h"

CDrawText::CDrawText()

{

InitializeCriticalSection(&m_csVideoASection);

}

CDrawText::~CDrawText()

{

DeleteCriticalSection(&m_csVideoASection);

}

int CDrawText::StartDrawText(const char *pFileA, const char *pFileOut, int x, int y, int iFontSize, std::string strText)

{

int ret = -1;

do

{

ret = OpenFileA(pFileA);

if (ret != 0)

{

break;

}

ret = OpenOutPut(pFileOut);

if (ret != 0)

{

break;

}

char szFilterDesc[512] = { 0 };

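// Leave room for the terminator: _snprintf does not write one when the output is truncated.

_snprintf(szFilterDesc, sizeof(szFilterDesc) - 1,

"fontcolor=blue:fontsize=%d:font=%s:text='%s':x=%d:y=%d",

iFontSize, "SimHei", strText.c_str(), x, y);

// Do not start the worker threads if the filter graph could not be built.

ret = InitFilter(szFilterDesc);

if (ret != 0)

{

break;

}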

m_iYuv420FrameSize = av_image_get_buffer_size(AV_PIX_FMT_YUV420P, m_pReadCodecCtx_VideoA->width, m_pReadCodecCtx_VideoA->height, 1);

// Allocate a FIFO large enough to buffer 30 frames

m_pVideoAFifo = av_fifo_alloc(30 * m_iYuv420FrameSize);

m_hVideoAReadThread = CreateThread(NULL, 0, VideoAReadProc, this, 0, NULL);

m_hVideoDrawTextThread = CreateThread(NULL, 0, VideoDrawTextProc, this, 0, NULL);

} while (0);

return ret;

}

int CDrawText::WaitFinish()

{

int ret = 0;

do

{

if (NULL == m_hVideoAReadThread)

{

break;

}

WaitForSingleObject(m_hVideoAReadThread, INFINITE);

CloseHandle(m_hVideoAReadThread);

m_hVideoAReadThread = NULL;

WaitForSingleObject(m_hVideoDrawTextThread, INFINITE);

CloseHandle(m_hVideoDrawTextThread);

m_hVideoDrawTextThread = NULL;

} while (0);

return ret;

}

int CDrawText::OpenFileA(const char *pFileA)

{

int ret = -1;

do

{

if ((ret = avformat_open_input(&m_pFormatCtx_FileA, pFileA, 0, 0)) < 0) {

printf("Could not open input file.");

break;

}

if ((ret = avformat_find_stream_info(m_pFormatCtx_FileA, 0)) < 0) {

printf("Failed to retrieve input stream information");

break;

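}

// avformat_find_stream_info() returns >= 0 on success, so reset ret: every early exit below must report failure.

ret = -1;

// This sample assumes that stream 0 is the video stream.

if (m_pFormatCtx_FileA->streams[0]->codecpar->codec_type != AVMEDIA_TYPE_VIDEO)

{

break;

}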

m_pReadCodec_VideoA = (AVCodec *)avcodec_find_decoder(m_pFormatCtx_FileA->streams[0]->codecpar->codec_id);

m_pReadCodecCtx_VideoA = avcodec_alloc_context3(m_pReadCodec_VideoA);

if (m_pReadCodecCtx_VideoA == NULL)

{

break;

}

avcodec_parameters_to_context(m_pReadCodecCtx_VideoA, m_pFormatCtx_FileA->streams[0]->codecpar);

m_iVideoWidth = m_pReadCodecCtx_VideoA->width;

m_iVideoHeight = m_pReadCodecCtx_VideoA->height;

m_pReadCodecCtx_VideoA->framerate = m_pFormatCtx_FileA->streams[0]->r_frame_rate;

if (avcodec_open2(m_pReadCodecCtx_VideoA, m_pReadCodec_VideoA, NULL) < 0)

{

break;

}

ret = 0;

} while (0);

return ret;

}

int CDrawText::OpenOutPut(const char *pFileOut)

{

int iRet = -1;

AVStream *pAudioStream = NULL;

AVStream *pVideoStream = NULL;

do

{

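avformat_alloc_output_context2(&m_pFormatCtx_Out, NULL, NULL, pFileOut);

// The context is dereferenced right below, so stop here if it could not be created.

if (!m_pFormatCtx_Out)

{

break;

}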

{

AVCodec* pCodecEncode_Video = (AVCodec *)avcodec_find_encoder(m_pFormatCtx_Out->oformat->video_codec);

m_pCodecEncodeCtx_Video = avcodec_alloc_context3(pCodecEncode_Video);

if (!m_pCodecEncodeCtx_Video)

{

break;

}

pVideoStream = avformat_new_stream(m_pFormatCtx_Out, pCodecEncode_Video);

if (!pVideoStream)

{

break;

}

int frameRate = 10;

m_pCodecEncodeCtx_Video->flags |= AV_CODEC_FLAG_QSCALE;

m_pCodecEncodeCtx_Video->bit_rate = 4000000;

m_pCodecEncodeCtx_Video->rc_min_rate = 4000000;

m_pCodecEncodeCtx_Video->rc_max_rate = 4000000;

m_pCodecEncodeCtx_Video->bit_rate_tolerance = 4000000;

m_pCodecEncodeCtx_Video->time_base.den = frameRate;

m_pCodecEncodeCtx_Video->time_base.num = 1;

m_pCodecEncodeCtx_Video->width = m_iVideoWidth;

m_pCodecEncodeCtx_Video->height = m_iVideoHeight;

//pH264Encoder->pCodecCtx->frame_number = 1;

m_pCodecEncodeCtx_Video->gop_size = 12;

m_pCodecEncodeCtx_Video->max_b_frames = 0;

m_pCodecEncodeCtx_Video->thread_count = 4;

m_pCodecEncodeCtx_Video->pix_fmt = AV_PIX_FMT_YUV420P;

m_pCodecEncodeCtx_Video->codec_id = AV_CODEC_ID_H264;

m_pCodecEncodeCtx_Video->codec_type = AVMEDIA_TYPE_VIDEO;

av_opt_set(m_pCodecEncodeCtx_Video->priv_data, "b-pyramid", "none", 0);

av_opt_set(m_pCodecEncodeCtx_Video->priv_data, "preset", "superfast", 0);

av_opt_set(m_pCodecEncodeCtx_Video->priv_data, "tune", "zerolatency", 0);

if (m_pFormatCtx_Out->oformat->flags & AVFMT_GLOBALHEADER)

m_pCodecEncodeCtx_Video->flags |= AV_CODEC_FLAG_GLOBAL_HEADER;

if (avcodec_open2(m_pCodecEncodeCtx_Video, pCodecEncode_Video, 0) < 0)

{

// Failed to open the encoder; give up

break;

}

}

if (!(m_pFormatCtx_Out->oformat->flags & AVFMT_NOFILE))

{

if (avio_open(&m_pFormatCtx_Out->pb, pFileOut, AVIO_FLAG_WRITE) < 0)

{

break;

}

}

avcodec_parameters_from_context(pVideoStream->codecpar, m_pCodecEncodeCtx_Video);

if (avformat_write_header(m_pFormatCtx_Out, NULL) < 0)

{

break;

}

iRet = 0;

} while (0);

if (iRet != 0)

{

if (m_pCodecEncodeCtx_Video != NULL)

{

avcodec_free_context(&m_pCodecEncodeCtx_Video);

m_pCodecEncodeCtx_Video = NULL;

}

if (m_pFormatCtx_Out != NULL)

{

avformat_free_context(m_pFormatCtx_Out);

m_pFormatCtx_Out = NULL;

}

}

return iRet;

}

DWORD WINAPI CDrawText::VideoAReadProc(LPVOID lpParam)

{

CDrawText *pVideoMerge = (CDrawText *)lpParam;

if (pVideoMerge != NULL)

{

pVideoMerge->VideoARead();

}

return 0;

}

void CDrawText::VideoARead()

{

AVFrame *pFrame;

pFrame = av_frame_alloc();

int y_size = m_pReadCodecCtx_VideoA->width * m_pReadCodecCtx_VideoA->height;

char *pY = new char[y_size];

char *pU = new char[y_size / 4];

char *pV = new char[y_size / 4];

AVPacket packet = { 0 };

int ret = 0;

while (1)

{

av_packet_unref(&packet);

ret = av_read_frame(m_pFormatCtx_FileA, &packet);

if (ret == AVERROR(EAGAIN))

{

continue;

}

else if (ret == AVERROR_EOF)

{

break;

}

else if (ret < 0)

{

break;

}

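// Only stream 0 (the video stream checked in OpenFileA) is decoded; skip audio and any other packets.

if (packet.stream_index != 0)

{

continue;

}

ret = avcodec_send_packet(m_pReadCodecCtx_VideoA, &packet);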

if (ret >= 0)

{

ret = avcodec_receive_frame(m_pReadCodecCtx_VideoA, pFrame);

if (ret == AVERROR(EAGAIN))

{

continue;

}

else if (ret == AVERROR_EOF)

{

break;

}

else if (ret < 0) {

break;

}

while (1)

{

if (av_fifo_space(m_pVideoAFifo) >= m_iYuv420FrameSize)

{

///Y

int contY = 0;

for (int i = 0; i < pFrame->height; i++)

{

memcpy(pY + contY, pFrame->data[0] + i * pFrame->linesize[0], pFrame->width);

contY += pFrame->width;

}

///U

int contU = 0;

for (int i = 0; i < pFrame->height / 2; i++)

{

memcpy(pU + contU, pFrame->data[1] + i * pFrame->linesize[1], pFrame->width / 2);

contU += pFrame->width / 2;

}

///V

int contV = 0;

for (int i = 0; i < pFrame->height / 2; i++)

{

memcpy(pV + contV, pFrame->data[2] + i * pFrame->linesize[2], pFrame->width / 2);

contV += pFrame->width / 2;

}

EnterCriticalSection(&m_csVideoASection);

av_fifo_generic_write(m_pVideoAFifo, pY, y_size, NULL);

av_fifo_generic_write(m_pVideoAFifo, pU, y_size / 4, NULL);

av_fifo_generic_write(m_pVideoAFifo, pV, y_size / 4, NULL);

LeaveCriticalSection(&m_csVideoASection);

break;

}

else

{

Sleep(100);

}

}

}

if (ret == AVERROR(EAGAIN))

{

continue;

}

}

av_frame_free(&pFrame);

delete[] pY;

delete[] pU;

delete[] pV;

}

DWORD WINAPI CDrawText::VideoDrawTextProc(LPVOID lpParam)

{

CDrawText *pVideoMerge = (CDrawText *)lpParam;

if (pVideoMerge != NULL)

{

pVideoMerge->VideoDrawText();

}

return 0;

}

void CDrawText::VideoDrawText()

{

int ret = 0;

DWORD dwBeginTime = ::GetTickCount();

AVFrame *pFrameVideoA = av_frame_alloc();

uint8_t *videoA_buffer_yuv420 = (uint8_t *)av_malloc(m_iYuv420FrameSize);

av_image_fill_arrays(pFrameVideoA->data, pFrameVideoA->linesize, videoA_buffer_yuv420, AV_PIX_FMT_YUV420P, m_pReadCodecCtx_VideoA->width, m_pReadCodecCtx_VideoA->height, 1);

pFrameVideoA->width = m_iVideoWidth;

pFrameVideoA->height = m_iVideoHeight;

pFrameVideoA->format = AV_PIX_FMT_YUV420P;

AVFrame* pFrame_out = av_frame_alloc();

uint8_t *out_buffer_yuv420 = (uint8_t *)av_malloc(m_iYuv420FrameSize);

av_image_fill_arrays(pFrame_out->data, pFrame_out->linesize, out_buffer_yuv420, AV_PIX_FMT_YUV420P, m_iVideoWidth, m_iVideoHeight, 1);

AVPacket packet = { 0 };

int iPicCount = 0;

while (1)

{

if (NULL == m_pVideoAFifo)

{

break;

}

int iVideoASize = av_fifo_size(m_pVideoAFifo);

if (iVideoASize >= m_iYuv420FrameSize)

{

EnterCriticalSection(&m_csVideoASection);

av_fifo_generic_read(m_pVideoAFifo, videoA_buffer_yuv420, m_iYuv420FrameSize, NULL);

LeaveCriticalSection(&m_csVideoASection);

pFrameVideoA->pkt_dts = pFrameVideoA->pts = av_rescale_q_rnd(iPicCount, m_pCodecEncodeCtx_Video->time_base, m_pFormatCtx_Out->streams[0]->time_base, (AVRounding)(AV_ROUND_NEAR_INF | AV_ROUND_PASS_MINMAX));

pFrameVideoA->pkt_duration = 0;

pFrameVideoA->pkt_pos = -1;

ret = av_buffersrc_add_frame(m_pFilterCtxSrcVideoA, pFrameVideoA);

if (ret < 0)

{

break;

}

ret = av_buffersink_get_frame(m_pFilterCtxSink, pFrame_out);

if (ret < 0)

{

//printf("Mixer: failed to call av_buffersink_get_frame_flags\n");

break;

}

pFrame_out->pkt_dts = pFrame_out->pts = av_rescale_q_rnd(iPicCount, m_pCodecEncodeCtx_Video->time_base, m_pFormatCtx_Out->streams[0]->time_base, (AVRounding)(AV_ROUND_NEAR_INF | AV_ROUND_PASS_MINMAX));

pFrame_out->pkt_duration = 0;

pFrame_out->pkt_pos = -1;

pFrame_out->width = m_iVideoWidth;

pFrame_out->height = m_iVideoHeight;

pFrame_out->format = AV_PIX_FMT_YUV420P;

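ret = avcodec_send_frame(m_pCodecEncodeCtx_Video, pFrame_out);

// Write a packet only if the encoder actually produced one; it may return AVERROR(EAGAIN) instead.

if (ret >= 0 && avcodec_receive_packet(m_pCodecEncodeCtx_Video, &packet) >= 0)

{

av_write_frame(m_pFormatCtx_Out, &packet);

av_packet_unref(&packet);

}

// Release the reference handed out by the buffersink before the frame is reused.

av_frame_unref(pFrame_out);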

iPicCount++;

}

else

{

if (m_hVideoAReadThread == NULL)

{

break;

}

Sleep(1);

}

}

av_write_trailer(m_pFormatCtx_Out);

avio_close(m_pFormatCtx_Out->pb);

av_frame_free(&pFrameVideoA);

}

int CDrawText::InitFilter(const char* filter_desc)

{

int ret = 0;

char args_videoA[512];

const char* pad_name_videoA = "in0";

const char* name_drawtext = "drawtext";

AVFilter* filter_src_videoA = (AVFilter *)avfilter_get_by_name("buffer");

AVFilter* filter_sink = (AVFilter *)avfilter_get_by_name("buffersink");

AVFilter *filter_drawtext = (AVFilter *)avfilter_get_by_name("drawtext");

AVFilterInOut* filter_output_videoA = avfilter_inout_alloc();

AVFilterInOut* filter_input = avfilter_inout_alloc();

m_pFilterGraph = avfilter_graph_alloc();

AVRational timeBase;

timeBase.num = 1;

timeBase.den = 10;

AVRational timeAspect;

timeAspect.num = 0;

timeAspect.den = 1;

_snprintf(args_videoA, sizeof(args_videoA),

"video_size=%dx%d:pix_fmt=%d:time_base=%d/%d:pixel_aspect=%d/%d",

m_iVideoWidth, m_iVideoHeight, AV_PIX_FMT_YUV420P,

timeBase.num, timeBase.den,

timeAspect.num,

timeAspect.den);

AVFilterInOut* filter_outputs[1];

do

{

ret = avfilter_graph_create_filter(&m_pFilterCtxSrcVideoA, filter_src_videoA, pad_name_videoA, args_videoA, NULL, m_pFilterGraph);

if (ret < 0)

{

break;

}

AVFilterContext *drawTextFilter_ctx;

ret = avfilter_graph_create_filter(&drawTextFilter_ctx, filter_drawtext, name_drawtext, filter_desc, NULL, m_pFilterGraph);

if (ret < 0)

{

break;

}

ret = avfilter_graph_create_filter(&m_pFilterCtxSink, filter_sink, "out", NULL, NULL, m_pFilterGraph);

if (ret < 0)

{

break;

}

ret = av_opt_set_bin(m_pFilterCtxSink, "pix_fmts", (uint8_t*)&m_pCodecEncodeCtx_Video->pix_fmt, sizeof(m_pCodecEncodeCtx_Video->pix_fmt), AV_OPT_SEARCH_CHILDREN);

ret = avfilter_link(m_pFilterCtxSrcVideoA, 0, drawTextFilter_ctx, 0);

if (ret != 0)

{

break;

}

ret = avfilter_link(drawTextFilter_ctx, 0, m_pFilterCtxSink, 0);

if (ret != 0)

{

break;

}

ret = avfilter_graph_config(m_pFilterGraph, NULL);

if (ret < 0)

{

break;

}

ret = 0;

} while (0);

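avfilter_inout_free(&filter_input);

avfilter_inout_free(&filter_output_videoA);

// avfilter_get_by_name() returns a pointer to a static filter definition, so it must not be freed.

// Dump the graph description (handy when debugging), then release the string.

char* temp = avfilter_graph_dump(m_pFilterGraph, NULL);

av_free(temp);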

return ret;

}
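
VideoARead copies each decoded plane row by row because a decoded frame's linesize may be larger than its visible width, while the FIFO stores tightly packed YUV420P frames. The sketch below isolates that step without any FFmpeg dependency. `PackPlane` and `Yuv420pPackedSize` are hypothetical names, and the size formula is a stand-in for what `av_image_get_buffer_size` is expected to return for YUV420P with an alignment of 1, not a replacement for calling it.

```cpp
#include <cstddef>
#include <cstdint>
#include <cstring>

// Copy one image plane row by row into a tightly packed buffer,
// dropping the padding bytes at the end of each source row.
void PackPlane(const uint8_t *src, int srcStride, int width, int height, uint8_t *dst)
{
    for (int row = 0; row < height; ++row) {
        std::memcpy(dst + static_cast<std::size_t>(row) * width,
                    src + static_cast<std::size_t>(row) * srcStride,
                    static_cast<std::size_t>(width));
    }
}

// Size in bytes of a tightly packed YUV420P frame: one full-resolution Y plane
// plus two chroma planes at half resolution (rounded up for odd dimensions).
std::size_t Yuv420pPackedSize(int width, int height)
{
    const std::size_t lumaSize = static_cast<std::size_t>(width) * height;
    const std::size_t chromaWidth = static_cast<std::size_t>(width + 1) / 2;
    const std::size_t chromaHeight = static_cast<std::size_t>(height + 1) / 2;
    return lumaSize + 2 * chromaWidth * chromaHeight;
}
```

For a 1920x1080 frame this gives 3110400 bytes, so a 30-frame FIFO needs roughly 93 MB. The code in this article uses y_size / 4 and width / 2 for the chroma planes, which matches the formula above only when both dimensions are even.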
