I downloaded Flink 1.13.1, the build for Scala 2.11.

I followed the official tutorial: https://ci.apache.org/projects/flink/flink-docs-release-1.13/docs/try-flink/table_api/

and downloaded the code from https://github.com/apache/flink-playgrounds

First exception:

Error: A JNI error has occurred, please check your installation and try again

Exception in thread "main" java.lang.NoClassDefFoundError: org/apache/flink/table/api/Table

at java.lang.Class.getDeclaredMethods0(Native Method)

at java.lang.Class.privateGetDeclaredMethods(Class.java:2701)

at java.lang.Class.privateGetMethodRecursive(Class.java:3048)

at java.lang.Class.getMethod0(Class.java:3018)

at java.lang.Class.getMethod(Class.java:1784)

at sun.launcher.LauncherHelper.validateMainClass(LauncherHelper.java:650)

at sun.launcher.LauncherHelper.checkAndLoadMain(LauncherHelper.java:632)

Caused by: java.lang.ClassNotFoundException: org.apache.flink.table.api.Table

at java.net.URLClassLoader.findClass(URLClassLoader.java:382)

at java.lang.ClassLoader.loadClass(ClassLoader.java:418)

at sun.misc.Launcher$AppClassLoader.loadClass(Launcher.java:355)

at java.lang.ClassLoader.loadClass(ClassLoader.java:351)

... 7 more

Process finished with exit code 1
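A NoClassDefFoundError like this usually means the class was available at compile time but is absent from the runtime classpath. As a rough check, a small probe along the following lines could be run with the same run configuration as SpendReport. The names ClasspathCheck and missingClasses are made up for this sketch, and the two Flink class names are simply the ones that appear in the stack traces in this post.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

public class ClasspathCheck {

    /** Returns the subset of classNames that cannot be loaded from the current classpath. */
    static List<String> missingClasses(List<String> classNames) {
        List<String> missing = new ArrayList<>();
        ClassLoader loader = ClasspathCheck.class.getClassLoader();
        for (String name : classNames) {
            try {
                // initialize = false: only locate the class, do not run its static initializers
                Class.forName(name, false, loader);
            } catch (ClassNotFoundException | LinkageError e) {
                missing.add(name);
            }
        }
        return missing;
    }

    public static void main(String[] args) {
        // Class names taken from the stack traces; extend the list as needed.
        List<String> wanted = Arrays.asList(
                "org.apache.flink.table.api.Table",
                "org.apache.flink.table.api.TableEnvironment");
        List<String> missing = missingClasses(wanted);
        if (missing.isEmpty()) {
            System.out.println("All probed classes are on the runtime classpath.");
        } else {
            System.out.println("Missing at runtime: " + missing);
        }
    }
}
```

If the probe reports the Table API classes as missing while the project still compiles, a provided or test scope on the corresponding dependency is a likely cause.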

Following a fix found online: in pom.xml, the scope of at least three dependencies (flink-table-api-java and the two others below) has to be changed. Here the scope elements are commented out, so Maven's default compile scope applies:

<dependency>
    <groupId>org.apache.flink</groupId>
    <artifactId>flink-table-api-java</artifactId>
    <version>${flink.version}</version>
    <!--<scope>provided</scope>-->
</dependency>
<dependency>
    <groupId>org.apache.flink</groupId>
    <artifactId>flink-table-api-java-bridge_${scala.binary.version}</artifactId>
    <version>${flink.version}</version>
    <!--<scope>provided</scope>-->
</dependency>
<dependency>
    <groupId>org.apache.flink</groupId>
    <artifactId>flink-table-planner-blink_${scala.binary.version}</artifactId>
    <version>${flink.version}</version>
    <!--<scope>test</scope>-->
</dependency>

Second exception:

Exception in thread "main" org.apache.flink.table.api.NoMatchingTableFactoryException: Could not find a suitable table factory for 'org.apache.flink.table.delegation.ExecutorFactory' in

the classpath.

Reason: No factory implements 'org.apache.flink.table.delegation.ExecutorFactory'.

The following properties are requested:

class-name=org.apache.flink.table.planner.delegation.BlinkExecutorFactory

streaming-mode=true

The following factories have been considered:

org.apache.flink.table.module.CoreModuleFactory

at org.apache.flink.table.factories.TableFactoryService.filterByFactoryClass(TableFactoryService.java:215)

at org.apache.flink.table.factories.TableFactoryService.filter(TableFactoryService.java:176)

at org.apache.flink.table.factories.TableFactoryService.findAllInternal(TableFactoryService.java:164)

at org.apache.flink.table.factories.TableFactoryService.findAll(TableFactoryService.java:121)

at org.apache.flink.table.factories.ComponentFactoryService.find(ComponentFactoryService.java:50)

at org.apache.flink.table.api.internal.TableEnvironmentImpl.create(TableEnvironmentImpl.java:310)

at org.apache.flink.table.api.internal.TableEnvironmentImpl.create(TableEnvironmentImpl.java:280)

at org.apache.flink.table.api.TableEnvironment.create(TableEnvironment.java:94)

at org.apache.flink.playgrounds.spendreport.SpendReport.main(SpendReport.java:38)

Process finished with exit code 1

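This can appear when too few dependencies were changed in the pom in the previous step, or when the Maven update/reimport was forgotten afterwards, so check those first.

If it still fails, the cause may be the Scala version: the Scala version used by the application should match the one Flink was built for.

In my case it worked once I switched to Scala 2.11, the same as Flink.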
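One rough way to spot a Scala mismatch is to look at the Scala suffix in the artifact names on the runtime classpath (for example flink-clients_2.11). The sketch below does that for the current JVM. ScalaSuffixCheck and scalaVersions are hypothetical names, and the check only sees jars whose file name carries a suffix such as _2.11, so it will not notice a mismatched Scala SDK configured in the IDE.

```java
import java.io.File;
import java.util.Set;
import java.util.TreeSet;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

public class ScalaSuffixCheck {

    // Matches the Scala binary version in names such as flink-clients_2.11-1.13.1.jar
    private static final Pattern SUFFIX = Pattern.compile("_(2\\.\\d+)-");

    /** Collects the distinct Scala binary versions found in the jar names of a classpath string. */
    static Set<String> scalaVersions(String classpath, String separator) {
        Set<String> versions = new TreeSet<>();
        for (String entry : classpath.split(Pattern.quote(separator))) {
            String fileName = new File(entry).getName();
            Matcher m = SUFFIX.matcher(fileName);
            if (m.find()) {
                versions.add(m.group(1));
            }
        }
        return versions;
    }

    public static void main(String[] args) {
        Set<String> versions =
                scalaVersions(System.getProperty("java.class.path"), File.pathSeparator);
        System.out.println("Scala binary versions on the classpath: " + versions);
        if (versions.size() > 1) {
            System.out.println("More than one Scala version found; align scala.binary.version in the pom.");
        }
    }
}
```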

The next exception:

Exception in thread "main" org.apache.flink.playgrounds.spendreport.UnimplementedException: This method has not yet been implemented

at org.apache.flink.playgrounds.spendreport.SpendReport.report(SpendReport.java:33)

at org.apache.flink.playgrounds.spendreport.SpendReport.main(SpendReport.java:67)

This one is thrown on purpose by the placeholder method in the original code, so it can be ignored.

Third exception, which appears after modifying the report function as in the official example:

Exception in thread "main" org.apache.flink.table.api.ValidationException: Unable to create a source for reading table 'default_catalog.default_database.transactions'.

Table options are:

'connector'='kafka'

'format'='csv'

'properties.bootstrap.servers'='localhost:9092'

'topic'='transactions'

at org.apache.flink.table.factories.FactoryUtil.createTableSource(FactoryUtil.java:137)

at org.apache.flink.table.planner.plan.schema.CatalogSourceTable.createDynamicTableSource(CatalogSourceTable.java:116)

at org.apache.flink.table.planner.plan.schema.CatalogSourceTable.toRel(CatalogSourceTable.java:82)

at org.apache.calcite.rel.core.RelFactories$TableScanFactoryImpl.createScan(RelFactories.java:495)

at org.apache.calcite.tools.RelBuilder.scan(RelBuilder.java:1099)

at org.apache.calcite.tools.RelBuilder.scan(RelBuilder.java:1123)

at org.apache.flink.table.planner.plan.QueryOperationConverter$SingleRelVisitor.visit(QueryOperationConverter.java:351)

at org.apache.flink.table.planner.plan.QueryOperationConverter$SingleRelVisitor.visit(QueryOperationConverter.java:154)

at org.apache.flink.table.operations.CatalogQueryOperation.accept(CatalogQueryOperation.java:68)

at org.apache.flink.table.planner.plan.QueryOperationConverter.defaultMethod(QueryOperationConverter.java:151)

at org.apache.flink.table.planner.plan.QueryOperationConverter.defaultMethod(QueryOperationConverter.java:133)

at org.apache.flink.table.operations.utils.QueryOperationDefaultVisitor.visit(QueryOperationDefaultVisitor.java:92)

at org.apache.flink.table.operations.CatalogQueryOperation.accept(CatalogQueryOperation.java:68)

at org.apache.flink.table.planner.plan.QueryOperationConverter.lambda$defaultMethod$0(QueryOperationConverter.java:150)

at java.util.Collections$SingletonList.forEach(Collections.java:4824)

at org.apache.flink.table.planner.plan.QueryOperationConverter.defaultMethod(QueryOperationConverter.java:150)

at org.apache.flink.table.planner.plan.QueryOperationConverter.defaultMethod(QueryOperationConverter.java:133)

at org.apache.flink.table.operations.utils.QueryOperationDefaultVisitor.visit(QueryOperationDefaultVisitor.java:47)

at org.apache.flink.table.operations.ProjectQueryOperation.accept(ProjectQueryOperation.java:76)

at org.apache.flink.table.planner.plan.QueryOperationConverter.lambda$defaultMethod$0(QueryOperationConverter.java:150)

at java.util.Collections$SingletonList.forEach(Collections.java:4824)

at org.apache.flink.table.planner.plan.QueryOperationConverter.defaultMethod(QueryOperationConverter.java:150)

at org.apache.flink.table.planner.plan.QueryOperationConverter.defaultMethod(QueryOperationConverter.java:133)

at org.apache.flink.table.operations.utils.QueryOperationDefaultVisitor.visit(QueryOperationDefaultVisitor.java:52)

at org.apache.flink.table.operations.AggregateQueryOperation.accept(AggregateQueryOperation.java:82)

at org.apache.flink.table.planner.plan.QueryOperationConverter.lambda$defaultMethod$0(QueryOperationConverter.java:150)

at java.util.Collections$SingletonList.forEach(Collections.java:4824)

at org.apache.flink.table.planner.plan.QueryOperationConverter.defaultMethod(QueryOperationConverter.java:150)

at org.apache.flink.table.planner.plan.QueryOperationConverter.defaultMethod(QueryOperationConverter.java:133)

at org.apache.flink.table.operations.utils.QueryOperationDefaultVisitor.visit(QueryOperationDefaultVisitor.java:47)

at org.apache.flink.table.operations.ProjectQueryOperation.accept(ProjectQueryOperation.java:76)

at org.apache.flink.table.planner.calcite.FlinkRelBuilder.queryOperation(FlinkRelBuilder.scala:184)

at org.apache.flink.table.planner.delegation.PlannerBase.translateToRel(PlannerBase.scala:198)

at org.apache.flink.table.planner.delegation.PlannerBase$$anonfun$1.apply(PlannerBase.scala:162)

at org.apache.flink.table.planner.delegation.PlannerBase$$anonfun$1.apply(PlannerBase.scala:162)

at scala.collection.TraversableLike$$anonfun$map$1.apply(TraversableLike.scala:234)

at scala.collection.TraversableLike$$anonfun$map$1.apply(TraversableLike.scala:234)

at scala.collection.Iterator$class.foreach(Iterator.scala:891)

at scala.collection.AbstractIterator.foreach(Iterator.scala:1334)

at scala.collection.IterableLike$class.foreach(IterableLike.scala:72)

at scala.collection.AbstractIterable.foreach(Iterable.scala:54)

at scala.collection.TraversableLike$class.map(TraversableLike.scala:234)

at scala.collection.AbstractTraversable.map(Traversable.scala:104)

at org.apache.flink.table.planner.delegation.PlannerBase.translate(PlannerBase.scala:162)

at org.apache.flink.table.api.internal.TableEnvironmentImpl.translate(TableEnvironmentImpl.java:1518)

at org.apache.flink.table.api.internal.TableEnvironmentImpl.executeInternal(TableEnvironmentImpl.java:740)

at org.apache.flink.table.api.internal.TableImpl.executeInsert(TableImpl.java:572)

at org.apache.flink.table.api.internal.TableImpl.executeInsert(TableImpl.java:554)

at org.apache.flink.playgrounds.spendreport.SpendReport.main(SpendReport.java:79)

Caused by: org.apache.flink.table.api.ValidationException: Cannot discover a connector using option: 'connector'='kafka'

at org.apache.flink.table.factories.FactoryUtil.enrichNoMatchingConnectorError(FactoryUtil.java:467)

at org.apache.flink.table.factories.FactoryUtil.getDynamicTableFactory(FactoryUtil.java:441)

at org.apache.flink.table.factories.FactoryUtil.createTableSource(FactoryUtil.java:133)

... 48 more

Caused by: org.apache.flink.table.api.ValidationException: Could not find any factory for identifier 'kafka' that implements 'org.apache.flink.table.factories.DynamicTableFactory' in the classpath.

Available factory identifiers are:

blackhole

datagen

filesystem

print

at org.apache.flink.table.factories.FactoryUtil.discoverFactory(FactoryUtil.java:319)

at org.apache.flink.table.factories.FactoryUtil.enrichNoMatchingConnectorError(FactoryUtil.java:463)

... 50 more

The kafka connector cannot be found. Recalling the earlier fix, I first simply changed the scope of the remaining dependencies as well (only two were left), but that did not help:

<dependency>
    <groupId>org.apache.flink</groupId>
    <artifactId>flink-streaming-scala_${scala.binary.version}</artifactId>
    <version>${flink.version}</version>
    <!--<scope>test</scope>-->
</dependency>
<dependency>
    <groupId>org.apache.flink</groupId>
    <artifactId>flink-clients_${scala.binary.version}</artifactId>
    <version>${flink.version}</version>
    <!--<scope>test</scope>-->
</dependency>

It then turned out that the flink-connector-kafka jar is required, so add it as a pom dependency:

<dependency>
    <groupId>org.apache.flink</groupId>
    <artifactId>flink-connector-kafka_${scala.binary.version}</artifactId>
    <version>${flink.version}</version>
</dependency>

Fourth exception: the class for the csv format cannot be found

Exception in thread "main" org.apache.flink.table.api.ValidationException: Unable to create a source for reading table 'default_catalog.default_database.transactions'.

Table options are:

'connector'='kafka'

'format'='csv'

'properties.bootstrap.servers'='localhost:9092'

'topic'='transactions'

at org.apache.flink.table.factories.FactoryUtil.createTableSource(FactoryUtil.java:137)

at org.apache.flink.table.planner.plan.schema.CatalogSourceTable.createDynamicTableSource(CatalogSourceTable.java:116)

at org.apache.flink.table.planner.plan.schema.CatalogSourceTable.toRel(CatalogSourceTable.java:82)

at org.apache.calcite.rel.core.RelFactories$TableScanFactoryImpl.createScan(RelFactories.java:495)

at org.apache.calcite.tools.RelBuilder.scan(RelBuilder.java:1099)

at org.apache.calcite.tools.RelBuilder.scan(RelBuilder.java:1123)

at org.apache.flink.table.planner.plan.QueryOperationConverter$SingleRelVisitor.visit(QueryOperationConverter.java:351)

at org.apache.flink.table.planner.plan.QueryOperationConverter$SingleRelVisitor.visit(QueryOperationConverter.java:154)

at org.apache.flink.table.operations.CatalogQueryOperation.accept(CatalogQueryOperation.java:68)

at org.apache.flink.table.planner.plan.QueryOperationConverter.defaultMethod(QueryOperationConverter.java:151)

at org.apache.flink.table.planner.plan.QueryOperationConverter.defaultMethod(QueryOperationConverter.java:133)

at org.apache.flink.table.operations.utils.QueryOperationDefaultVisitor.visit(QueryOperationDefaultVisitor.java:92)

at org.apache.flink.table.operations.CatalogQueryOperation.accept(CatalogQueryOperation.java:68)

at org.apache.flink.table.planner.plan.QueryOperationConverter.lambda$defaultMethod$0(QueryOperationConverter.java:150)

at java.util.Collections$SingletonList.forEach(Collections.java:4824)

at org.apache.flink.table.planner.plan.QueryOperationConverter.defaultMethod(QueryOperationConverter.java:150)

at org.apache.flink.table.planner.plan.QueryOperationConverter.defaultMethod(QueryOperationConverter.java:133)

at org.apache.flink.table.operations.utils.QueryOperationDefaultVisitor.visit(QueryOperationDefaultVisitor.java:47)

at org.apache.flink.table.operations.ProjectQueryOperation.accept(ProjectQueryOperation.java:76)

at org.apache.flink.table.planner.plan.QueryOperationConverter.lambda$defaultMethod$0(QueryOperationConverter.java:150)

at java.util.Collections$SingletonList.forEach(Collections.java:4824)

at org.apache.flink.table.planner.plan.QueryOperationConverter.defaultMethod(QueryOperationConverter.java:150)

at org.apache.flink.table.planner.plan.QueryOperationConverter.defaultMethod(QueryOperationConverter.java:133)

at org.apache.flink.table.operations.utils.QueryOperationDefaultVisitor.visit(QueryOperationDefaultVisitor.java:52)

at org.apache.flink.table.operations.AggregateQueryOperation.accept(AggregateQueryOperation.java:82)

at org.apache.flink.table.planner.plan.QueryOperationConverter.lambda$defaultMethod$0(QueryOperationConverter.java:150)

at java.util.Collections$SingletonList.forEach(Collections.java:4824)

at org.apache.flink.table.planner.plan.QueryOperationConverter.defaultMethod(QueryOperationConverter.java:150)

at org.apache.flink.table.planner.plan.QueryOperationConverter.defaultMethod(QueryOperationConverter.java:133)

at org.apache.flink.table.operations.utils.QueryOperationDefaultVisitor.visit(QueryOperationDefaultVisitor.java:47)

at org.apache.flink.table.operations.ProjectQueryOperation.accept(ProjectQueryOperation.java:76)

at org.apache.flink.table.planner.calcite.FlinkRelBuilder.queryOperation(FlinkRelBuilder.scala:184)

at org.apache.flink.table.planner.delegation.PlannerBase.translateToRel(PlannerBase.scala:198)

at org.apache.flink.table.planner.delegation.PlannerBase$$anonfun$1.apply(PlannerBase.scala:162)

at org.apache.flink.table.planner.delegation.PlannerBase$$anonfun$1.apply(PlannerBase.scala:162)

at scala.collection.TraversableLike$$anonfun$map$1.apply(TraversableLike.scala:234)

at scala.collection.TraversableLike$$anonfun$map$1.apply(TraversableLike.scala:234)

at scala.collection.Iterator$class.foreach(Iterator.scala:891)

at scala.collection.AbstractIterator.foreach(Iterator.scala:1334)

at scala.collection.IterableLike$class.foreach(IterableLike.scala:72)

at scala.collection.AbstractIterable.foreach(Iterable.scala:54)

at scala.collection.TraversableLike$class.map(TraversableLike.scala:234)

at scala.collection.AbstractTraversable.map(Traversable.scala:104)

at org.apache.flink.table.planner.delegation.PlannerBase.translate(PlannerBase.scala:162)

at org.apache.flink.table.api.internal.TableEnvironmentImpl.translate(TableEnvironmentImpl.java:1518)

at org.apache.flink.table.api.internal.TableEnvironmentImpl.executeInternal(TableEnvironmentImpl.java:740)

at org.apache.flink.table.api.internal.TableImpl.executeInsert(TableImpl.java:572)

at org.apache.flink.table.api.internal.TableImpl.executeInsert(TableImpl.java:554)

at org.apache.flink.playgrounds.spendreport.SpendReport.main(SpendReport.java:79)

Caused by: org.apache.flink.table.api.ValidationException: Could not find any factory for identifier 'csv' that implements 'org.apache.flink.table.factories.DeserializationFormatFactory' in the classpath.

Available factory identifiers are:

raw

at org.apache.flink.table.factories.FactoryUtil.discoverFactory(FactoryUtil.java:319)

at org.apache.flink.table.factories.FactoryUtil$TableFactoryHelper.discoverOptionalFormatFactory(FactoryUtil.java:751)

at org.apache.flink.table.factories.FactoryUtil$TableFactoryHelper.discoverOptionalDecodingFormat(FactoryUtil.java:649)

at org.apache.flink.streaming.connectors.kafka.table.KafkaDynamicTableFactory.getValueDecodingFormat(KafkaDynamicTableFactory.java:275)

at org.apache.flink.streaming.connectors.kafka.table.KafkaDynamicTableFactory.createDynamicTableSource(KafkaDynamicTableFactory.java:142)

at org.apache.flink.table.factories.FactoryUtil.createTableSource(FactoryUtil.java:134)

... 48 more

Process finished with exit code 1

Add the flink-csv dependency to the pom (groupId org.apache.flink, artifactId flink-csv, version ${flink.version}):

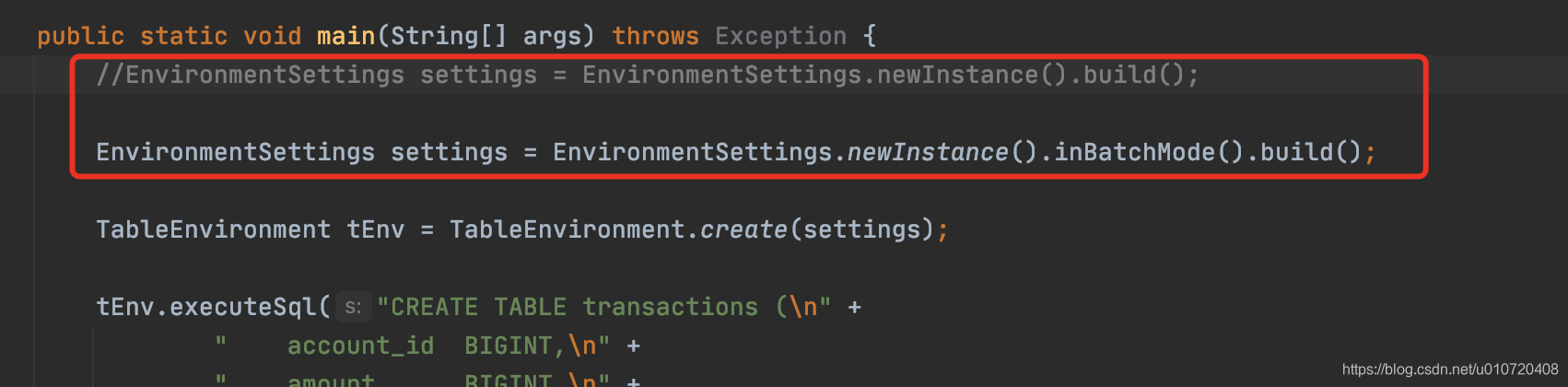
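Both the kafka and the csv error come from the same mechanism: Flink looks up connector and format factories through Java's service-loader files, so a factory is only found if the jar that registers it is on the classpath. The sketch below lists what is registered under META-INF/services/org.apache.flink.table.factories.Factory for the current classpath. ListTableFactories and its methods are names invented for this example, and it only reads the service files; it does not instantiate any factory.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStreamReader;
import java.net.URL;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.Enumeration;
import java.util.List;

public class ListTableFactories {

    // Service file that java.util.ServiceLoader reads for Flink table factories
    static final String SERVICE_FILE =
            "META-INF/services/org.apache.flink.table.factories.Factory";

    /** Extracts class names from service-file lines, skipping blank lines and # comments. */
    static List<String> parseServiceLines(List<String> lines) {
        List<String> names = new ArrayList<>();
        for (String line : lines) {
            int hash = line.indexOf('#');
            String name = (hash >= 0 ? line.substring(0, hash) : line).trim();
            if (!name.isEmpty()) {
                names.add(name);
            }
        }
        return names;
    }

    /** Reads every copy of the service file visible to the given class loader. */
    static List<String> registeredFactories(ClassLoader loader) throws IOException {
        List<String> lines = new ArrayList<>();
        Enumeration<URL> urls = loader.getResources(SERVICE_FILE);
        while (urls.hasMoreElements()) {
            try (BufferedReader reader = new BufferedReader(
                    new InputStreamReader(urls.nextElement().openStream(), StandardCharsets.UTF_8))) {
                String line;
                while ((line = reader.readLine()) != null) {
                    lines.add(line);
                }
            }
        }
        return parseServiceLines(lines);
    }

    public static void main(String[] args) throws IOException {
        for (String name : registeredFactories(ListTableFactories.class.getClassLoader())) {
            System.out.println(name);
        }
    }
}
```

Run from the project's run configuration, the output should gain a Kafka entry once flink-connector-kafka is added and a CSV entry once flink-csv is added. If an expected factory is absent, the dependency is either missing or scoped out of the runtime classpath.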

Fifth exception: an unbounded stream cannot be queried in batch mode

Exception in thread "main" org.apache.flink.table.api.ValidationException: Querying an unbounded table 'default_catalog.default_database.transactions' in batch mode is not allowed. The table source is unbounded.

at org.apache.flink.table.planner.connectors.DynamicSourceUtils.validateScanSourceForBatch(DynamicSourceUtils.java:515)

at org.apache.flink.table.planner.connectors.DynamicSourceUtils.validateScanSource(DynamicSourceUtils.java:462)

at org.apache.flink.table.planner.connectors.DynamicSourceUtils.prepareDynamicSource(DynamicSourceUtils.java:161)

at org.apache.flink.table.planner.connectors.DynamicSourceUtils.convertSourceToRel(DynamicSourceUtils.java:119)

at org.apache.flink.table.planner.plan.schema.CatalogSourceTable.toRel(CatalogSourceTable.java:85)

at org.apache.calcite.rel.core.RelFactories$TableScanFactoryImpl.createScan(RelFactories.java:495)

at org.apache.calcite.tools.RelBuilder.scan(RelBuilder.java:1099)

at org.apache.calcite.tools.RelBuilder.scan(RelBuilder.java:1123)

at org.apache.flink.table.planner.plan.QueryOperationConverter$SingleRelVisitor.visit(QueryOperationConverter.java:351)

at org.apache.flink.table.planner.plan.QueryOperationConverter$SingleRelVisitor.visit(QueryOperationConverter.java:154)

at org.apache.flink.table.operations.CatalogQueryOperation.accept(CatalogQueryOperation.java:68)

at org.apache.flink.table.planner.plan.QueryOperationConverter.defaultMethod(QueryOperationConverter.java:151)

at org.apache.flink.table.planner.plan.QueryOperationConverter.defaultMethod(QueryOperationConverter.java:133)

at org.apache.flink.table.operations.utils.QueryOperationDefaultVisitor.visit(QueryOperationDefaultVisitor.java:92)

at org.apache.flink.table.operations.CatalogQueryOperation.accept(CatalogQueryOperation.java:68)

at org.apache.flink.table.planner.plan.QueryOperationConverter.lambda$defaultMethod$0(QueryOperationConverter.java:150)

at java.util.Collections$SingletonList.forEach(Collections.java:4824)

at org.apache.flink.table.planner.plan.QueryOperationConverter.defaultMethod(QueryOperationConverter.java:150)

at org.apache.flink.table.planner.plan.QueryOperationConverter.defaultMethod(QueryOperationConverter.java:133)

at org.apache.flink.table.operations.utils.QueryOperationDefaultVisitor.visit(QueryOperationDefaultVisitor.java:47)

at org.apache.flink.table.operations.ProjectQueryOperation.accept(ProjectQueryOperation.java:76)

at org.apache.flink.table.planner.plan.QueryOperationConverter.lambda$defaultMethod$0(QueryOperationConverter.java:150)

at java.util.Collections$SingletonList.forEach(Collections.java:4824)

at org.apache.flink.table.planner.plan.QueryOperationConverter.defaultMethod(QueryOperationConverter.java:150)

at org.apache.flink.table.planner.plan.QueryOperationConverter.defaultMethod(QueryOperationConverter.java:133)

at org.apache.flink.table.operations.utils.QueryOperationDefaultVisitor.visit(QueryOperationDefaultVisitor.java:52)

at org.apache.flink.table.operations.AggregateQueryOperation.accept(AggregateQueryOperation.java:82)

at org.apache.flink.table.planner.plan.QueryOperationConverter.lambda$defaultMethod$0(QueryOperationConverter.java:150)

at java.util.Collections$SingletonList.forEach(Collections.java:4824)

at org.apache.flink.table.planner.plan.QueryOperationConverter.defaultMethod(QueryOperationConverter.java:150)

at org.apache.flink.table.planner.plan.QueryOperationConverter.defaultMethod(QueryOperationConverter.java:133)

at org.apache.flink.table.operations.utils.QueryOperationDefaultVisitor.visit(QueryOperationDefaultVisitor.java:47)

at org.apache.flink.table.operations.ProjectQueryOperation.accept(ProjectQueryOperation.java:76)

at org.apache.flink.table.planner.calcite.FlinkRelBuilder.queryOperation(FlinkRelBuilder.scala:184)

at org.apache.flink.table.planner.delegation.PlannerBase.translateToRel(PlannerBase.scala:198)

at org.apache.flink.table.planner.delegation.PlannerBase$$anonfun$1.apply(PlannerBase.scala:162)

at org.apache.flink.table.planner.delegation.PlannerBase$$anonfun$1.apply(PlannerBase.scala:162)

at scala.collection.TraversableLike$$anonfun$map$1.apply(TraversableLike.scala:234)

at scala.collection.TraversableLike$$anonfun$map$1.apply(TraversableLike.scala:234)

at scala.collection.Iterator$class.foreach(Iterator.scala:891)

at scala.collection.AbstractIterator.foreach(Iterator.scala:1334)

at scala.collection.IterableLike$class.foreach(IterableLike.scala:72)

at scala.collection.AbstractIterable.foreach(Iterable.scala:54)

at scala.collection.TraversableLike$class.map(TraversableLike.scala:234)

at scala.collection.AbstractTraversable.map(Traversable.scala:104)

at org.apache.flink.table.planner.delegation.PlannerBase.translate(PlannerBase.scala:162)

at org.apache.flink.table.api.internal.TableEnvironmentImpl.translate(TableEnvironmentImpl.java:1518)

at org.apache.flink.table.api.internal.TableEnvironmentImpl.executeInternal(TableEnvironmentImpl.java:740)

at org.apache.flink.table.api.internal.TableImpl.executeInsert(TableImpl.java:572)

at org.apache.flink.table.api.internal.TableImpl.executeInsert(TableImpl.java:554)

at org.apache.flink.playgrounds.spendreport.SpendReport.main(SpendReport.java:79)

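This happened because I had changed something earlier. Switching back to non-batch (streaming) mode fixes it.

Next exception: the jdbc connector cannot be found: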

Exception in thread "main" org.apache.flink.table.api.ValidationException: Unable to create a sink for writing table 'default_catalog.default_database.spend_report'.

Table options are:

'connector'='jdbc'

'driver'='com.mysql.jdbc.Driver'

'password'='tbds@Tbds.com'

'table-name'='spend_report'

'url'='jdbc:mysql://localhost:3306/sql-demo'

'username'='root'

at org.apache.flink.table.factories.FactoryUtil.createTableSink(FactoryUtil.java:171)

at org.apache.flink.table.planner.delegation.PlannerBase.getTableSink(PlannerBase.scala:367)

at org.apache.flink.table.planner.delegation.PlannerBase.translateToRel(PlannerBase.scala:201)

at org.apache.flink.table.planner.delegation.PlannerBase$$anonfun$1.apply(PlannerBase.scala:162)

at org.apache.flink.table.planner.delegation.PlannerBase$$anonfun$1.apply(PlannerBase.scala:162)

at scala.collection.TraversableLike$$anonfun$map$1.apply(TraversableLike.scala:234)

at scala.collection.TraversableLike$$anonfun$map$1.apply(TraversableLike.scala:234)

at scala.collection.Iterator$class.foreach(Iterator.scala:891)

at scala.collection.AbstractIterator.foreach(Iterator.scala:1334)

at scala.collection.IterableLike$class.foreach(IterableLike.scala:72)

at scala.collection.AbstractIterable.foreach(Iterable.scala:54)

at scala.collection.TraversableLike$class.map(TraversableLike.scala:234)

at scala.collection.AbstractTraversable.map(Traversable.scala:104)

at org.apache.flink.table.planner.delegation.PlannerBase.translate(PlannerBase.scala:162)

at org.apache.flink.table.api.internal.TableEnvironmentImpl.translate(TableEnvironmentImpl.java:1518)

at org.apache.flink.table.api.internal.TableEnvironmentImpl.executeInternal(TableEnvironmentImpl.java:740)

at org.apache.flink.table.api.internal.TableImpl.executeInsert(TableImpl.java:572)

at org.apache.flink.table.api.internal.TableImpl.executeInsert(TableImpl.java:554)

at org.apache.flink.playgrounds.spendreport.SpendReport.main(SpendReport.java:79)

Caused by: org.apache.flink.table.api.ValidationException: Cannot discover a connector using option: 'connector'='jdbc'

at org.apache.flink.table.factories.FactoryUtil.enrichNoMatchingConnectorError(FactoryUtil.java:467)

at org.apache.flink.table.factories.FactoryUtil.getDynamicTableFactory(FactoryUtil.java:441)

at org.apache.flink.table.factories.FactoryUtil.createTableSink(FactoryUtil.java:167)

... 18 more

Caused by: org.apache.flink.table.api.ValidationException: Could not find any factory for identifier 'jdbc' that implements 'org.apache.flink.table.factories.DynamicTableFactory' in the classpath.

Available factory identifiers are:

blackhole

datagen

filesystem

kafka

print

upsert-kafka

at org.apache.flink.table.factories.FactoryUtil.discoverFactory(FactoryUtil.java:319)

at org.apache.flink.table.factories.FactoryUtil.enrichNoMatchingConnectorError(FactoryUtil.java:463)

... 20 more

缺包添加:

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-connector-jdbc_${scala.binary.version}</artifactId>

<version>${flink.version}</version>

</dependency>运行还是包jdbc的错误,还需要 mysql的包,我的主持mysql8的,就找了个引用最多的包弄上

<!-- https://mvnrepository.com/artifact/mysql/mysql-connector-java -->

<dependency>

<groupId>mysql</groupId>

<artifactId>mysql-connector-java</artifactId>

<version>8.0.16</version>

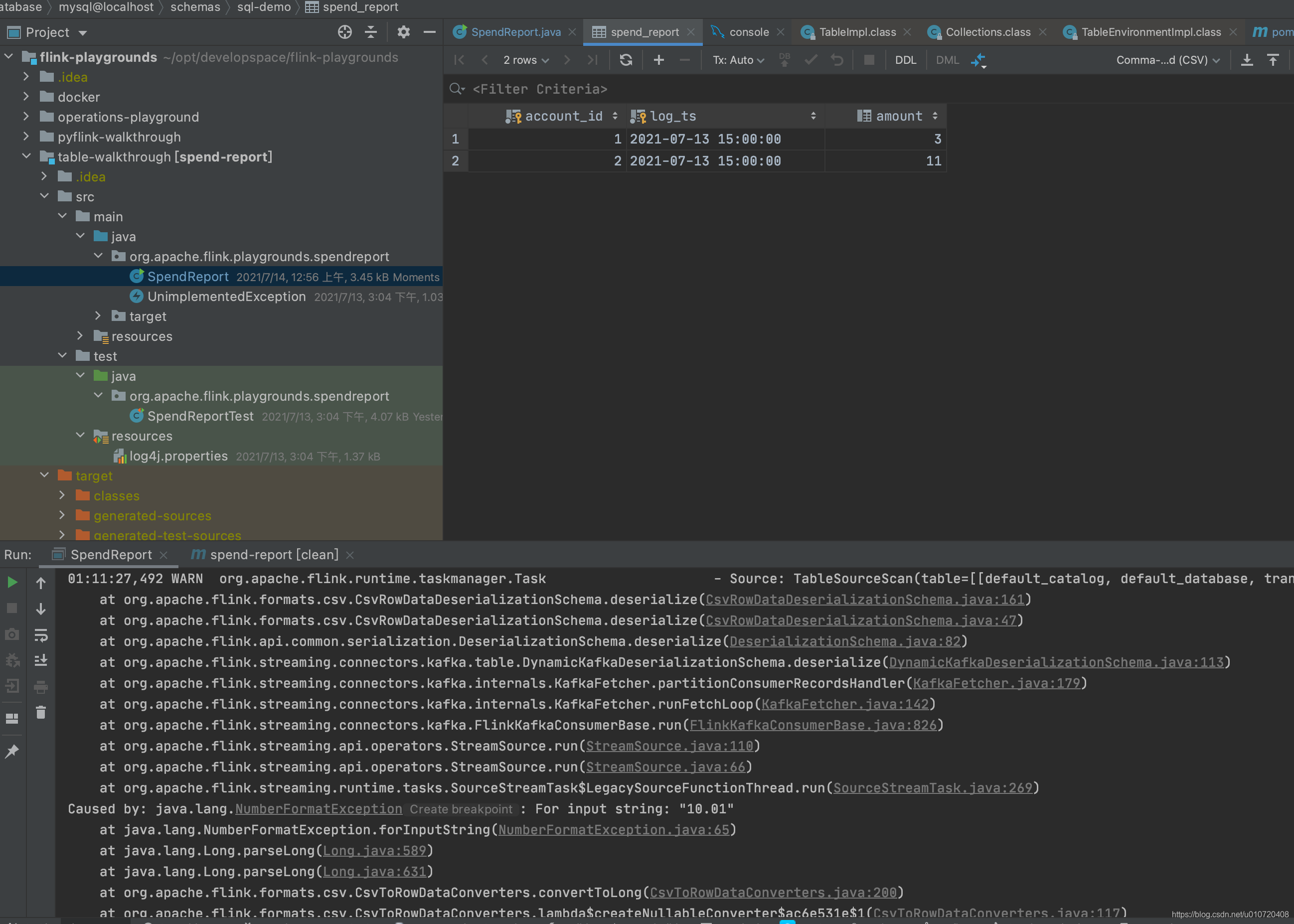

</dependency>然后启动就没啥问题了

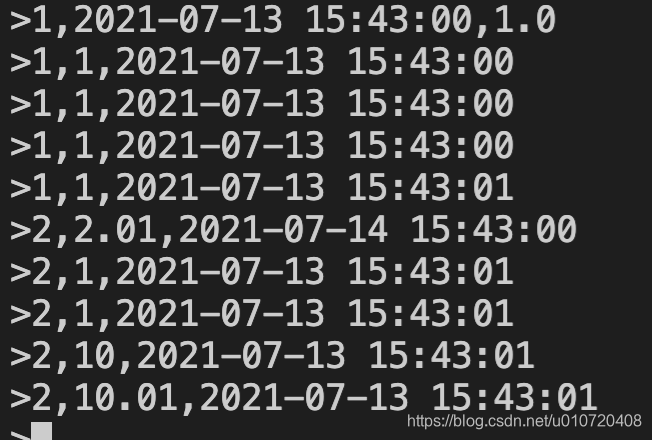

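However, when I added data by hand with kafka-producer, the job reported that the MySQL table could not be found.

It turns out that the CREATE TABLE in the example only registers a mapping to the external table inside Flink. It does not create the table in MySQL, so the table still has to be created in MySQL by hand.

After that, produce data following the example in the code and it works.

Note that amount must be a bigint. Floating-point amounts do not work.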

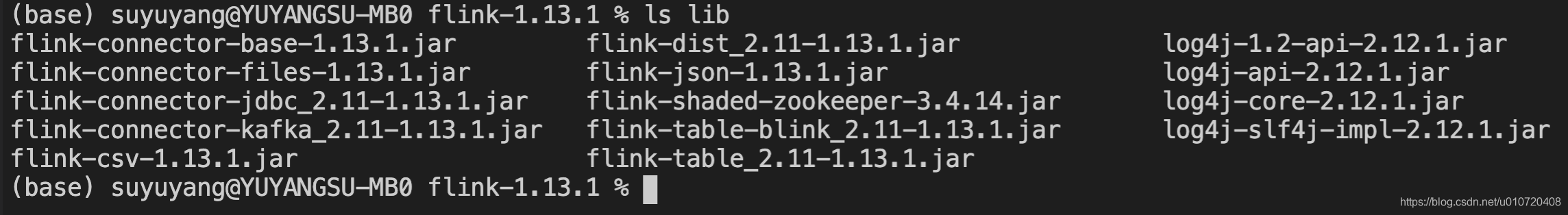
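When typing records into kafka-producer by hand, it may help to generate the lines instead of writing them free-form. The following is a minimal sketch, assuming the transactions table from the tutorial has the columns account_id, amount and transaction_time in that order and that timestamps use the yyyy-MM-dd HH:mm:ss pattern. TransactionCsv and toCsv are names invented here. Taking amount as a long keeps a fractional value from ever reaching the topic.

```java
import java.time.LocalDateTime;
import java.time.format.DateTimeFormatter;

public class TransactionCsv {

    // Assumed timestamp layout for the transaction_time column
    private static final DateTimeFormatter TS =
            DateTimeFormatter.ofPattern("yyyy-MM-dd HH:mm:ss");

    /** Formats one record as account_id,amount,transaction_time with a whole-number amount. */
    static String toCsv(long accountId, long amount, LocalDateTime time) {
        return accountId + "," + amount + "," + time.format(TS);
    }

    public static void main(String[] args) {
        // prints 1,188,2021-06-01 12:00:00
        System.out.println(toCsv(1L, 188L, LocalDateTime.of(2021, 6, 1, 12, 0, 0)));
    }
}
```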

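Tip: the official site recommends the provided scope for these dependencies. With that scope, the dependent jars have to be placed in Flink's lib directory. The reasoning is that with so many dependencies, a fat jar that embeds them all becomes too large, which works against the big-data principle of moving the computation code to the data.

This post records the exceptions I ran into, and how I resolved them, while running the Table API example from the official tutorial with Flink 1.13.1 and Scala 2.11: adjusting dependency scopes in pom.xml, fixing a Scala version mismatch, adding the missing flink-connector-kafka and flink-csv dependencies, and finally handling the unbounded-stream and JDBC connection problems. It summarizes the fix for each exception, including creating the MySQL table by hand and what CREATE TABLE actually means here, and mentions the dependency scope recommended by the official site, which suits the way big-data platforms move code to the data.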
