Setting Up ELK

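ELK is a stack of three open-source projects: Elasticsearch, Logstash, and Kibana. Developed by Elastic, it is a complete enterprise-grade solution for collecting, analyzing, and visualizing logs, with each of the three components handling a different part of the job. The official site is elastic.co. The main advantages of the ELK stack are: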

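- Flexible processing: Elasticsearch provides real-time full-text indexing with powerful search capabilities.

- Simple configuration: the Elasticsearch API uses JSON throughout, Logstash is configured through plugin modules, and the Kibana configuration file is simpler still.

- Efficient retrieval: thanks to its design, every query runs in real time, yet queries over tens of billions of records can still return within seconds.

- Linear cluster scaling: both Elasticsearch and Logstash scale out flexibly and linearly.

- Polished front end: Kibana's interface is visually rich and easy to use.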

Elasticsearch

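Elasticsearch is a highly scalable open-source full-text search and analytics engine. It provides real-time full-text search, is distributed for high availability, exposes an API, and can handle large volumes of log data such as nginx, Tomcat, and system logs.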

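Features of Elasticsearch:

- Real-time search and real-time analytics

- Distributed architecture with real-time file storage

- Document-oriented: every object is a document

- Highly available and easy to scale, with support for clustering, sharding, and replication

- Friendly interface with JSON support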

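Deploying Elasticsearch

Source code: GitHub, elastic/elasticsearch (Free and Open, Distributed, RESTful Search Engine). Elasticsearch is written in Java.

On CentOS, disable the firewall and SELinux; on Ubuntu, disable the firewall. Keep the clocks on all servers synchronized.

Server 1: 172.20.22.24

Server 2: 172.20.22.27

Server 3: 172.20.22.28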

###ubuntu

# apt install -y ntpdate

# rm -f /etc/localtime

# ln -s /usr/share/zoneinfo/Asia/Shanghai /etc/localtime

# hwclock --systohc

# ntpdate -u ntp1.aliyun.com

###Set resource limits (open files and processes)

# vim /etc/security/limits.conf

* soft nofile 500000

* hard nofile 500000

# vim /etc/security/limits.d/20-nproc.conf

* soft nproc 4096

elasticsearch soft nproc unlimited

root soft nproc unlimited
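The limits above only apply to new login sessions, so it can help to confirm them before starting Elasticsearch. The following is a minimal sketch: the helper name limit_ok is hypothetical, and the threshold 500000 is simply the nofile value from the limits.conf lines above.

```shell
#!/bin/sh
# Hypothetical helper: succeed if a limit value meets the required minimum.
# The literal value "unlimited" always passes.
limit_ok() {
  current=$1
  required=$2
  [ "$current" = "unlimited" ] && return 0
  [ "$current" -ge "$required" ]
}

# Compare the open-files limit of the current shell with the configured value.
if limit_ok "$(ulimit -n)" 500000; then
  echo "nofile OK: $(ulimit -n)"
else
  echo "nofile too low: $(ulimit -n) (log in again after editing limits.conf)"
fi
```

Run it in a fresh session as the user that will start the service; a value below the configured one usually means the session predates the change.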

###Install the JDK

# apt install -y openjdk-8-jdk

###Install on every node

# ls -lrt elasticsearch-7.12.1-amd64.deb

# dpkg -i elasticsearch-7.12.1-amd64.deb

###Node 1 configuration file

# grep '^[^#]' /etc/elasticsearch/elasticsearch.yml

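cluster.name: m63-elastic #Cluster name

node.name: node1 #Name of this node within the cluster

path.data: /data/elasticsearch #Data directory

path.logs: /data/elasticsearch #Log directory

bootstrap.memory_lock: true #Lock the process memory at startup so that data is not written to swap

network.host: 172.20.22.24 #Listen IP

http.port: 9200 #Listen port

###Seed hosts used for node discovery in the cluster

discovery.seed_hosts: ["172.20.22.24", "172.20.22.27","172.20.22.28"]

###Nodes eligible to be elected master when the cluster first bootstraps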

cluster.initial_master_nodes: ["172.20.22.24", "172.20.22.27","172.20.22.28"]

action.destructive_requires_name: true

# mkdir /data/elasticsearch -p

# chown -R elasticsearch. /data/elasticsearch

# systemctl start elasticsearch.service

###Node 2

# grep '^[^#]' /etc/elasticsearch/elasticsearch.yml

cluster.name: m63-elastic

node.name: node2

path.data: /data/elasticsearch

path.logs: /data/elasticsearch

network.host: 172.20.22.27

http.port: 9200

discovery.seed_hosts: ["172.20.22.24", "172.20.22.27","172.20.22.28"]

cluster.initial_master_nodes: ["172.20.22.24", "172.20.22.27","172.20.22.28"]

action.destructive_requires_name: true

# mkdir /data/elasticsearch -p

# chown -R elasticsearch. /data/elasticsearch

# systemctl start elasticsearch.service

###Node 3

# grep '^[^#]' /etc/elasticsearch/elasticsearch.yml

cluster.name: m63-elastic

node.name: node3

path.data: /data/elasticsearch

path.logs: /data/elasticsearch

network.host: 172.20.22.28

http.port: 9200

discovery.seed_hosts: ["172.20.22.24", "172.20.22.27","172.20.22.28"]

cluster.initial_master_nodes: ["172.20.22.24", "172.20.22.27","172.20.22.28"]

action.destructive_requires_name: true

# mkdir /data/elasticsearch -p

# chown -R elasticsearch. /data/elasticsearch

# systemctl start elasticsearch.service

Verify in a browser:

http://$IP:9200
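Besides opening the URL in a browser, the cluster state can be read from the _cluster/health endpoint on the command line. The sketch below is an illustration only: the helper name es_health_status and the sample JSON are hypothetical, and the sed expression assumes the response contains a "status" field with a lowercase value such as green, yellow, or red.

```shell
#!/bin/sh
# Hypothetical helper: extract the "status" value from a _cluster/health
# JSON response read on stdin.
es_health_status() {
  sed -n 's/.*"status"[[:space:]]*:[[:space:]]*"\([a-z]*\)".*/\1/p'
}

# Against a running node (needs network access to the cluster):
#   curl -s http://172.20.22.24:9200/_cluster/health | es_health_status

# Offline demonstration with an illustrative sample response.
sample='{"cluster_name":"m63-elastic","status":"green","number_of_nodes":3}'
printf '%s\n' "$sample" | es_health_status
```

With all three nodes joined, a healthy cluster is expected to report green; yellow typically means some replica shards are unassigned.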

Logstash

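Logstash is a data collection engine with real-time pipelining capability. It collects and forwards logs through plugins, supports log filtering, and can parse both plain logs and custom JSON-formatted logs, finally sending the processed logs to Elasticsearch.

Deploying Logstash

Logstash is an open-source data collection engine that scales horizontally. It has the most plugins of any component in ELK, and it can receive data from many different sources and send it, in a unified way, to one or more destinations.

https://github.com/elastic/logstash #GitHub

Documentation: Elastic Stack and Product Documentation | Elastic

Environment preparation: disable the firewall and SELinux, and install a Java runtime.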

# apt install -y openjdk-8-jdk

# ls -lrt logstash-7.12.1-amd64.deb

# dpkg -i logstash-7.12.1-amd64.deb

###Startup test

# /usr/share/logstash/bin/logstash -e 'input { stdin {} } output { stdout {}}' ##Standard input to standard output

hello world!~

{

"@version" => "1",

"@timestamp" => 2022-04-13T06:16:32.212Z,

"host" => "jenkins-slave",

"message" => "hello world!~"

}

###Start from a configuration file

# cd /etc/logstash/conf.d/

# cat test.conf

input {

stdin {}

}

output {

stdout {}

}

###Start with the configuration file specified

# /usr/share/logstash/bin/logstash -f test.conf -t ##Check the configuration file syntax

# /usr/share/logstash/bin/logstash -f test.conf

####Output to Elasticsearch

# cat test.conf

input {

stdin {}

}

output {

#stdout {}

elasticsearch {

hosts => ["172.20.22.24:9200"]

index => "magedu-m63-test-%{+YYYY.MM.dd}"

}

}

# /usr/share/logstash/bin/logstash -f test.conf

version1

version2

version3

test1

test2

test3
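The index setting in the configuration above ends with %{+YYYY.MM.dd}, so each day's events land in their own index, named from the event timestamp in UTC. The following sketch shows which index name to look for; the helper name daily_index is hypothetical and only mimics the naming, it does not talk to Logstash or Elasticsearch.

```shell
#!/bin/sh
# Hypothetical helper: build "<prefix>-<YYYY.MM.dd>".
# The second argument is an optional day; it defaults to today's date in UTC.
daily_index() {
  prefix=$1
  day=${2:-$(date -u +%Y.%m.%d)}
  printf '%s-%s\n' "$prefix" "$day"
}

# Index name for events stamped 13 April 2022.
daily_index magedu-m63-test 2022.04.13

# Index name for events sent today.
daily_index magedu-m63-test

# To list the indices that actually exist on a running node:
#   curl -s 'http://172.20.22.24:9200/_cat/indices?v'
```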

####Check the collected data on the Elasticsearch server

# ls -lrt /data/elasticsearch/nodes/0/indices/

total 4

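drwxr-xr-x 4 elasticsearch elasticsearch 4096 Apr 13 14:36 DyCv8w7mTleuAvlItAJlWA

Kibana

Kibana provides a web interface for viewing data in Elasticsearch. It queries data mainly through the Elasticsearch API and visualizes it in the front end, and it can also generate tables, bar charts, pie charts, and similar views for data in specific formats.

Deploying Kibana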

# ls -lrt kibana-7.12.1-amd64.deb

# dpkg -i kibana-7.12.1-amd64.deb

# grep "^[^$|#]" /etc/kibana/kibana.yml

server.port: 5601

server.host: "172.20.22.24"

elasticsearch.hosts: ["http://172.20.22.27:9200"]

i18n.locale: "zh-CN"

# systemctl restart kibana

Open http://172.20.22.24:5601 in a browser.

Stack Management --> Index Patterns --> Create index pattern

Select the time field, then view the log entries of the corresponding index.

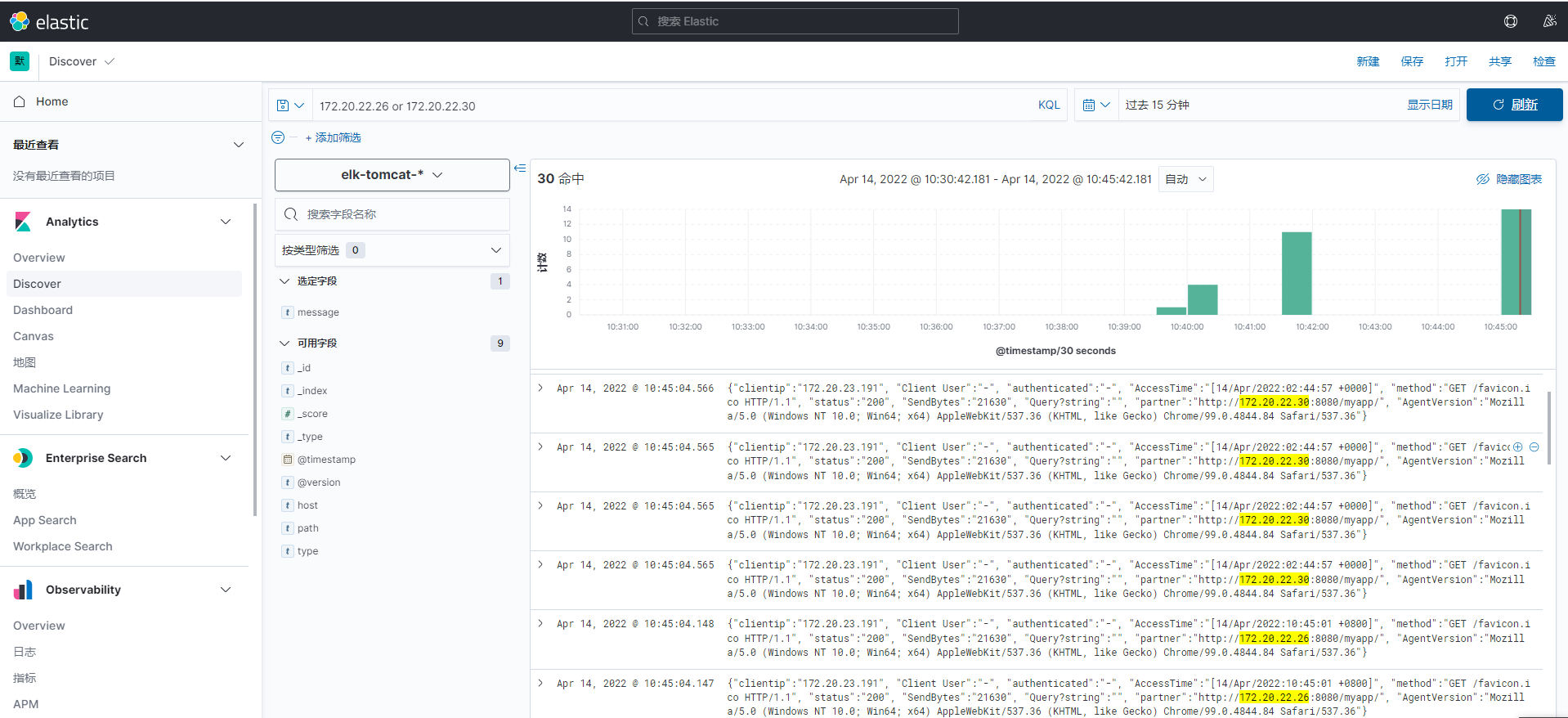

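Collecting Tomcat Logs

Collect the access logs and error logs of the Tomcat servers for real-time statistics, and search and display them in Kibana. Each Tomcat server runs Logstash to collect its logs and forward them to Elasticsearch for analysis, and Kibana presents the results in the front end.

Deploying Tomcat

####tomcat1, 172.20.22.30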

# apt install -y openjdk-8-jdk

# ls -lrt apache-tomcat-8.5.77.tar.gz

-rw-r--r-- 1 root root 10559655 Apr 13 21:44 apache-tomcat-8.5.77.tar.gz

# tar xf apache-tomcat-8.5.77.tar.gz -C /usr/local/src/

# ln -s /usr/local/src/apache-tomcat-8.5.77 /usr/local/tomcat

# cd /usr/local/tomcat

###Change the Tomcat access log format to JSON

# vim conf/server.xml

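....

<Valve className="org.apache.catalina.valves.AccessLogValve" directory="logs"

prefix="tomcat_access_log" suffix=".log"

pattern="{&quot;clientip&quot;:&quot;%h&quot;, &quot;Client User&quot;:&quot;%l&quot;, &quot;authenticated&quot;:&quot;%u&quot;, &quot;AccessTime&quot;:&quot;%t&quot;, &quot;method&quot;:&quot;%r&quot;, &quot;status&quot;:&quot;%s&quot;, &quot;SendBytes&quot;:&quot;%b&quot;, &quot;Query?string&quot;:&quot;%q&quot;, &quot;partner&quot;:&quot;%{Referer}i&quot;, &quot;AgentVersion&quot;:&quot;%{User-Agent}i&quot;}" />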

....
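Because the pattern value sits inside a double-quoted XML attribute, the literal double quotes of the JSON template have to be written as &quot; for server.xml to stay well-formed. The sketch below shows one way to produce the escaped form from a readable template; the helper name xml_escape_attr is hypothetical, and the short template is a shortened illustration rather than the full pattern.

```shell
#!/bin/sh
# Hypothetical helper: escape text read on stdin for use inside a
# double-quoted XML attribute. The ampersand is handled first so that the
# entities added afterwards are not escaped a second time.
xml_escape_attr() {
  sed -e 's/&/\&amp;/g' -e 's/"/\&quot;/g' -e 's/</\&lt;/g'
}

# Shortened JSON template using two of the Tomcat access log placeholders.
template='{"clientip":"%h", "status":"%s"}'
printf '%s\n' "$template" | xml_escape_attr
```

Paste the escaped output between the quotes of pattern="...". Tomcat unescapes the entities when it parses server.xml, so the log lines themselves contain ordinary double quotes.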

# mkdir /usr/local/tomcat/webapps/myapp

# echo "web1 172.20.22.30" > /usr/local/tomcat/webapps/myapp/index.html

# ./bin/catalina.sh start

###Access test

# curl http://172.20.22.30:8080/myapp/

###View the access log

# tail -f /usr/local/tomcat/logs/tomcat_access_log.2022-04-13.log

####tomcat2, 172.20.22.26

# apt install -y openjdk-8-jdk

# ls -lrt apache-tomcat-8.5.77.tar.gz

-rw-r--r-- 1 root root 10559655 Apr 13 21:44 apache-tomcat-8.5.77.tar.gz

# tar xf apache-tomcat-8.5.77.tar.gz -C /usr/local/src/

# ln -s /usr/local/src/apache-tomcat-8.5.77 /usr/local/tomcat

# cd /usr/local/tomcat

###Change the Tomcat access log format to JSON

# vim conf/server.xml

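....

<Valve className="org.apache.catalina.valves.AccessLogValve" directory="logs"

prefix="tomcat_access_log" suffix=".log"

pattern="{&quot;clientip&quot;:&quot;%h&quot;, &quot;Client User&quot;:&quot;%l&quot;, &quot;authenticated&quot;:&quot;%u&quot;, &quot;AccessTime&quot;:&quot;%t&quot;, &quot;method&quot;:&quot;%r&quot;, &quot;status&quot;:&quot;%s&quot;, &quot;SendBytes&quot;:&quot;%b&quot;, &quot;Query?string&quot;:&quot;%q&quot;, &quot;partner&quot;:&quot;%{Referer}i&quot;, &quot;AgentVersion&quot;:&quot;%{User-Agent}i&quot;}" />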

....

# mkdir /usr/local/tomcat/webapps/myapp

# echo "web2 172.20.22.26" > /usr/local/tomcat/webapps/myapp/index.html

# ./bin/catalina.sh start

###Access test

# curl http://172.20.22.26:8080/myapp/

###View the access log

# tail -f /usr/local/tomcat/logs/tomcat_access_log.2022-04-14.log

Deploying Logstash

Install Logstash on the Tomcat servers to collect Tomcat and system logs.

####tomcat1, 172.20.22.30

# ls -lrt logstash-7.12.1-amd64.deb

# dpkg -i logstash-7.12.1-amd64.deb

# vim /etc/systemd/system/logstash.service

...

User=root

Group=root

...

# cd /etc/logstash/conf.d

# cat tomcat.conf

input {

file {

path => "/usr/local/tomcat/logs/tomcat_access_log*.log"

type => "tomcat-log"

start_position => "beginning"

stat_interval => "3"

}

file {

path => "/var/log/syslog"

type => "systemlog"

start_position => "beginning"

stat_interval => "3"

}

}

output {

if [type] == "tomcat-log" {

elasticsearch {

hosts => ["172.20.22.24:9200","172.20.22.27:9200"]

index => "elk-tomcat-%{+YYYY.MM.dd}"

}}

if [type] == "systemlog" {

elasticsearch {

hosts => ["172.20.22.24:9200","172.20.22.27:9200"]

index => "elk-syslog-%{+YYYY.MM.dd}"

}}

}

# /usr/share/logstash/bin/logstash -f tomcat.conf -t

# systemctl daemon-reload

# systemctl start logstash.service

# scp tomcat.conf root@172.20.22.26:/etc/logstash/conf.d/

####tomcat2, 172.20.22.26

# ls -lrt logstash-7.12.1-amd64.deb

# dpkg -i logstash-7.12.1-amd64.deb

# vim /etc/systemd/system/logstash.service

...

User=root

Group=root

...

# systemctl daemon-reload

# systemctl start logstash.service

View the results in Kibana

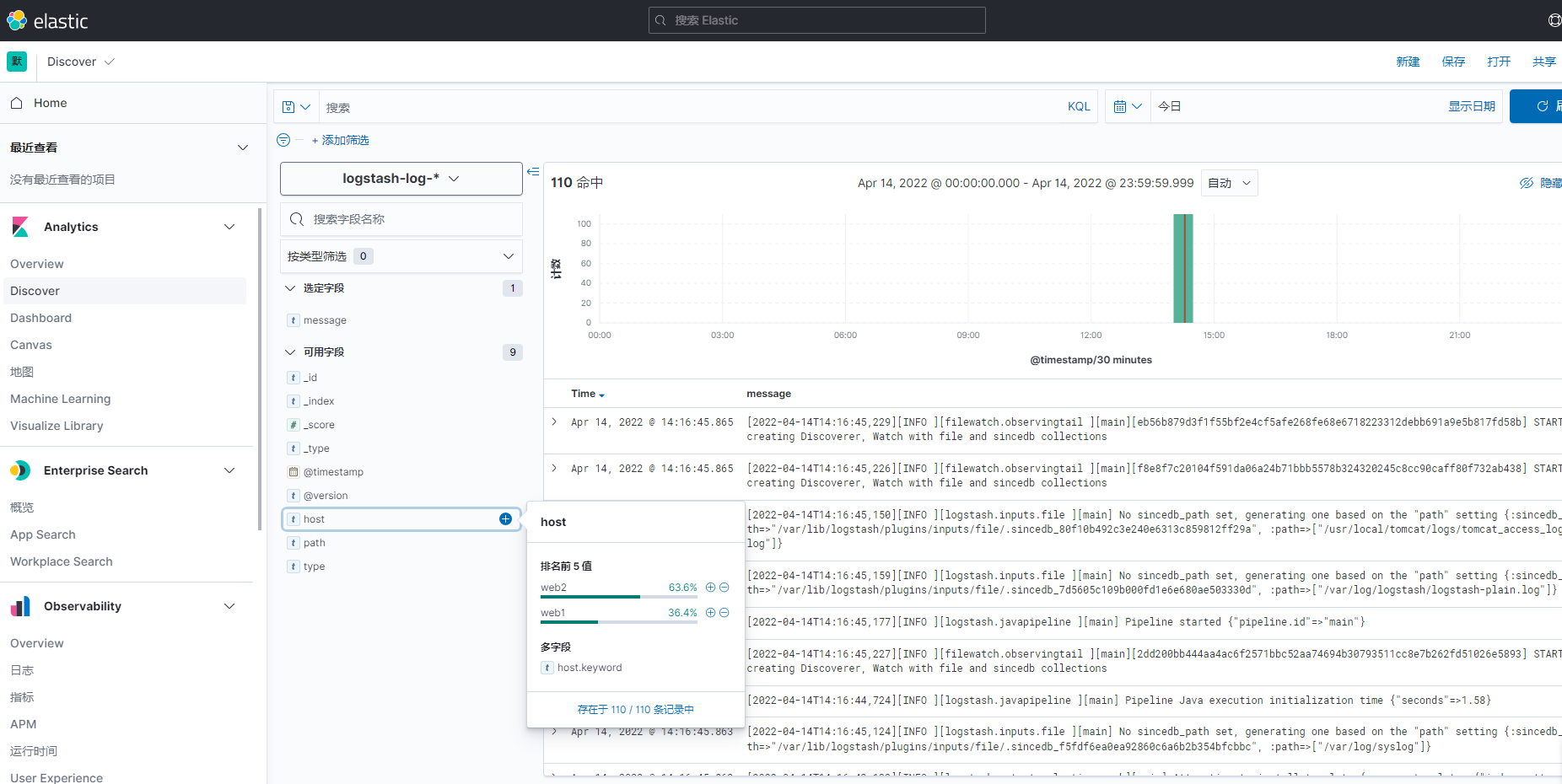

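Collecting Java Logs

Use the multiline codec plugin to match multi-line entries. The plugin merges multiple lines into one event, and its what option specifies whether a matched line is merged with the preceding lines or with the following ones.

Reference: Multiline codec plugin | Logstash Reference [8.1] | Elastic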
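To make the merge rule concrete, here is a rough emulation in awk of the settings used in this section (pattern "^\[", negate true, what previous): any line that does not start with [ is appended to the event before it. This is only an illustration of the rule, not how Logstash implements it; the helper name merge_multiline and the sample log lines are hypothetical, and joined lines are separated by a visible \n marker so that each event prints on one line.

```shell
#!/bin/sh
# Hypothetical helper: group lines into events the way
# pattern => "^\[", negate => true, what => "previous" would.
merge_multiline() {
  awk '
    # A line starting with "[" begins a new event; flush the previous one.
    /^\[/ { if (have) print event; event = $0; have = 1; next }
    # Any other line is a continuation of the current event.
    { event = (have ? event "\\n" $0 : $0); have = 1 }
    END { if (have) print event }
  '
}

# Illustrative input: a stack trace followed by a normal log line.
merge_multiline <<'EOF'
[2022-04-13T14:00:00,000][ERROR][logstash.agent] Failed to execute action
java.lang.IllegalStateException: example
	at org.example.Main.run(Main.java:10)
[2022-04-13T14:00:01,000][INFO ][logstash.runner] Logstash shut down
EOF
```

The four input lines come out as two events: the ERROR line together with its stack trace, and the INFO line on its own.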

Adding the Logstash configuration file

###Collect Logstash's own log, 172.20.22.26

# cd /etc/logstash/conf.d

# cat java.conf

input {

file {

path => "/var/log/logstash/logstash-plain.log"

type => "logstash-log"

start_position => "beginning"

stat_interval => "3"

codec => multiline {

pattern => "^\["

negate => true

what => "previous"

}}

}

output {

if [type] == "logstash-log" {

elasticsearch {

hosts => ["172.20.22.24"]

index => "logstash-log-%{+YYYY.MM.dd}"

}}

}

# /usr/share/logstash/bin/logstash -f java.conf -t

# systemctl restart logstash.service

###Collect Logstash's own log, 172.20.22.30

# cd /etc/logstash/conf.d

# cat java.conf

input {

file {

path => "/var/log/logstash/logstash-plain.log"

type => "logstash-log"

start_position => "beginning"

stat_interval => "3"

codec => multiline {

pattern => "^\["

negate => true

what => "previous"

}}

}

output {

if [type] == "logstash-log" {

elasticsearch {

hosts => ["172.20.22.24"]

index => "logstash-log-%{+YYYY.MM.dd}"

}}

}

# /usr/share/logstash/bin/logstash -f java.conf -t

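# systemctl restart logstash.service

View the collected logs in Kibana

Collecting nginx Logs with Filebeat, Redis, and Logstash

Filebeat collects the logs and sends them to logstash1, logstash1 writes them to Redis, and logstash2 then reads them from Redis and sends them to Elasticsearch.

web1: 172.20.22.30, with nginx, Filebeat, and Logstash deployed

web2: 172.20.22.26, with nginx, Filebeat, and Logstash deployed

Logstash server 2: 172.20.22.23; Redis server: 172.20.23.157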
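In this pipeline, the fields.project value that Filebeat attaches to each event decides which Redis database and list key the first Logstash writes to. The sketch below restates that routing as a lookup, mirroring the conditionals in the Logstash configuration later in this section; the helper name redis_route is hypothetical and exists only to summarize the mapping.

```shell
#!/bin/sh
# Hypothetical helper: print "<redis db> <redis list key>" for a given
# fields.project value, or fail when no output would match.
redis_route() {
  case "$1" in
    filebeat-systemlog)       echo "0 filebeat-redis-systemlog" ;;
    filebeat-nginx-accesslog) echo "1 filebeat-redis-nginx-accesslog" ;;
    filebeat-nginx-errorlog)  echo "1 filebeat-redis-nginx-errorlog" ;;
    *)                        return 1 ;;
  esac
}

# Print the routing table for the three projects used in this section.
for project in filebeat-systemlog filebeat-nginx-accesslog filebeat-nginx-errorlog; do
  printf '%s -> %s\n' "$project" "$(redis_route "$project")"
done
```

An event whose project value matches none of the conditionals is not written anywhere, so the value set in filebeat.yml and the strings tested in the Logstash output section must agree exactly.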

nginx server configuration

Deploying nginx

# wget http://nginx.org/download/nginx-1.18.0.tar.gz

# tar xf nginx-1.18.0.tar.gz

# cd nginx-1.18.0

# ./configure --prefix=/usr/local/nginx --with-http_ssl_module

# make -j4 && make install

# /usr/local/nginx/sbin/nginx

Deploying and configuring Logstash

Send the logs collected by Filebeat to Redis.

# apt install -y openjdk-8-jdk

# dpkg -i logstash-7.12.1-amd64.deb

# cat /etc/logstash/conf.d/beats-to-redis.conf

input {

beats {

port => 5044

codec => "json"

}

beats {

port => 5045

codec => "json"

}

}

output {

if [fields][project] == "filebeat-systemlog" {

redis {

data_type => "list"

key => "filebeat-redis-systemlog"

host => "172.20.23.157"

port => "6379"

db => "0"

password => "12345678"

}}

if [fields][project] == "filebeat-nginx-accesslog" {

redis {

data_type => "list"

key => "filebeat-redis-nginx-accesslog"

host => "172.20.23.157"

port => "6379"

db => "1"

password => "12345678"

}}

if [fields][project] == "filebeat-nginx-errorlog" {

redis {

data_type => "list"

key => "filebeat-redis-nginx-errorlog"

host => "172.20.23.157"

port => "6379"

db => "1"

password => "12345678"

}}

}

# systemctl start logstash

# scp /etc/logstash/conf.d/beats-to-redis.conf root@172.20.22.26:/etc/logstash/conf.d/

Deploying and configuring Filebeat

Filebeat collects the logs and sends them to Logstash.

# dpkg -i filebeat-7.12.1-amd64.deb

# grep -v "#" /etc/filebeat/filebeat.yml | grep "^[^$]"

filebeat.inputs:
- type: log
  enabled: true
  paths:
    - /var/log/syslog
  fields:
    project: filebeat-systemlog
- type: log
  enabled: true
  paths:
    - /usr/local/nginx/logs/access.log
  fields:
    project: filebeat-nginx-accesslog
- type: log
  enabled: true
  paths:
    - /usr/local/nginx/logs/error.log
  fields:
    project: filebeat-nginx-errorlog
filebeat.config.modules:
  path: ${path.config}/modules.d/*.yml
  reload.enabled: false
setup.template.settings:
  index.number_of_shards: 1
setup.kibana:
processors:
  - add_host_metadata:
      when.not.contains.tags: forwarded
  - add_cloud_metadata: ~
  - add_docker_metadata: ~
  - add_kubernetes_metadata: ~
output.logstash:
  hosts: ["172.20.22.30:5044","172.20.22.30:5045"]
  enabled: true
  worker: 2
  compression_level: 3
  loadbalance: true

# systemctl start filebeat

# scp /etc/filebeat/filebeat.yml root@172.20.22.26:/etc/filebeat/

Logstash server configuration

Logstash server 2 (172.20.22.23) sends the logs buffered in Redis to Elasticsearch.

# apt install -y openjdk-8-jdk

# dpkg -i logstash-7.12.1-amd64.deb

# cat /etc/logstash/conf.d/redis-to-es.conf

input {

redis {

data_type => "list"

key => "filebeat-redis-nginx-accesslog"

host => "172.20.23.157"

port => "6379"

db => "1"

password => "12345678"

}

redis {

data_type => "list"

key => "filebeat-redis-nginx-errorlog"

host => "172.20.23.157"

port => "6379"

db => "1"

password => "12345678"

}

redis {

data_type => "list"

key => "filebeat-redis-systemlog"

host => "172.20.23.157"

port => "6379"

db => "0"

password => "12345678"

}

}

output {

if [fields][project] == "filebeat-systemlog" {

elasticsearch {

hosts => ["172.20.22.28:9200"]

index => "filebeat-systemlog-%{+YYYY.MM.dd}"

}}

if [fields][project] == "filebeat-nginx-accesslog" {

elasticsearch {

hosts => ["172.20.22.28:9200"]

index => "filebeat-nginx-accesslog-%{+YYYY.MM.dd}"

}}

if [fields][project] == "filebeat-nginx-errorlog" {

elasticsearch {

hosts => ["172.20.22.28:9200"]

index => "filebeat-nginx-errorlog-%{+YYYY.MM.dd}"

}}

}

# systemctl restart logstash.service

Installing and configuring Redis

Redis server: 172.20.23.157

# yum install -y redis

# vim /etc/redis.conf

####Change the following settings

bind 0.0.0.0

....

save ""

....

requirepass 12345678

....

# systemctl start redis

###Test the connection to Redis

# redis-cli

127.0.0.1:6379> auth 12345678

OK

127.0.0.1:6379> ping

PONG

###Verify the collected logs (run select 1 first to switch to database 1)

127.0.0.1:6379[1]> keys *

1) "filebeat-redis-nginx-accesslog"

2) "filebeat-redis-nginx-errorlog"

127.0.0.1:6379[1]> select 0

OK

127.0.0.1:6379> keys *

1) "filebeat-redis-systemlog"

Verify the generated indices with the head plugin

Verify the collected logs in Kibana
