Custom HPA metrics with prometheus-adapter

Generate a certificate for prometheus-adapter

export PURPOSE=adapter

openssl req -x509 -sha256 -new -nodes -days 365 -newkey rsa:2048 -keyout ${PURPOSE}-ca.key -out ${PURPOSE}-ca.crt -subj "/CN=ca"

Create a secret from the certificate and key just generated

kubectl create secret generic prometheus-adapter-serving-certs --from-file=adapter-ca.crt --from-file=adapter-ca.key -n monitoring

Inspect the generated secret

[root@m1 katy]# kc get secret prometheus-adapter-serving-certs -n monitoring -o yaml

apiVersion: v1

data:

adapter-ca.crt: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSUM3VENDQWRXZ0F3SUJBZ0lKQU8wY21xZFRGdlV3TUEwR0NTcUdTSWIzRFFFQkN3VUFNQTB4Q3pBSkJnTlYKQkFNTUFtTmhNQjRYRFRJeE1EZ3hNVEEzTXpZek1Wb1hEVEl5TURneE1UQTNNell6TVZvd0RURUxNQWtHQTFVRQpBd3dDWTJFd2dnRWlNQTBHQ1NxR1NJYjNEUUVCQVFVQUE0SUJEd0F3Z2dFS0FvSUJBUURqY0djaG5VK0RGS01XCjJUaG9lMHZucndlTnhQMW1RS1RDZ0RwNFV6Yk13RkIzaWhjVTZYSnQ1NHBSbDl1VHJ3NGxreDNleUNMYUlpM0QKTFpkK2tOYStwcHkxWFJxcVdGbEpjSk1WNk4zdy91dzl3bnVFZUdMYUZrOUNlWk5TZm04eTVtOHpKSjhuNDArcApERFRMcElFVUdFdWk0UzRTTDhyMWpjSVM3SHhnUWRsUy9ZYkJ2a2YwN1o2U3lLck80bnFoa0hLQkZMWks0UkFjCjhxQ0ZTYkIvSFVqVTYrcFRPdXBDK2U1VHUxd0tqS1RndVFYOTdyYlB0aUxpSmpNMlEydzFXdy8rTEd2N2NZYVUKNW5VWTdPQ0dvWjh5dklQY2hIU1Y3T2luQnBKYTNpMG5yek0wQkdRMUM5MHJuRm1jK2puYVRWMWgwd2toeHhJTgpGbmVyVkFBSEFnTUJBQUdqVURCT01CMEdBMVVkRGdRV0JCU0lwbjA3OU1oelRXY0VNRmJud25hQzdTdTU2REFmCkJnTlZIU01FR0RBV2dCU0lwbjA3OU1oelRXY0VNRmJud25hQzdTdTU2REFNQmdOVkhSTUVCVEFEQVFIL01BMEcKQ1NxR1NJYjNEUUVCQ3dVQUE0SUJBUUE4MS9ubjVoMmYveG1kNWdJUEJxdXdoTXRnc2VmRlVoSDE3emplMHFSOQpreG1VUGZESnZwbkk0WEkxUmNaMnUwaWUzeEg3dTdPSFZqZUNFdGtwV0xJc0hMamM3c3lIdG5UNktrWXNaR0RnCnVjVlR2bEhIaG5zWnRmaEhxSW4valVIRnJSWXFmQklhVFoxV09hTWVKZm5jZkRSU3JNeHorN0ltRjV2ckRaZHgKYlYxK0FHUUpDKytyeHhrRk9TVmx2azJEV3lHQ3NDVW1YdUlqVmsvSEp5QzBtT09POFZUbjNKaWZwQnFTVXhzSQpodC9XZUR2aEhVQXRMWEtIUXlLWjBtUWVnbWt3V2lwOHpkMFUzTENmRzZsQWZMRlZISldZWVowY1gySEYrY0FSCklFcGQwSEpINjVYTDlwTDRXckQ0dHR2T0M1ZEpzeVljOWZKQmxCbVp2U3psCi0tLS0tRU5EIENFUlRJRklDQVRFLS0tLS0K

adapter-ca.key: LS0tLS1CRUdJTiBQUklWQVRFIEtFWS0tLS0tCk1JSUV3QUlCQURBTkJna3Foa2lHOXcwQkFRRUZBQVNDQktvd2dnU21BZ0VBQW9JQkFRRGpjR2NoblUrREZLTVcKMlRob2Uwdm5yd2VOeFAxbVFLVENnRHA0VXpiTXdGQjNpaGNVNlhKdDU0cFJsOXVUcnc0bGt4M2V5Q0xhSWkzRApMWmQra05hK3BweTFYUnFxV0ZsSmNKTVY2TjN3L3V3OXdudUVlR0xhRms5Q2VaTlNmbTh5NW04ekpKOG40MCtwCkREVExwSUVVR0V1aTRTNFNMOHIxamNJUzdIeGdRZGxTL1liQnZrZjA3WjZTeUtyTzRucWhrSEtCRkxaSzRSQWMKOHFDRlNiQi9IVWpVNitwVE91cEMrZTVUdTF3S2pLVGd1UVg5N3JiUHRpTGlKak0yUTJ3MVd3LytMR3Y3Y1lhVQo1blVZN09DR29aOHl2SVBjaEhTVjdPaW5CcEphM2kwbnJ6TTBCR1ExQzkwcm5GbWMram5hVFYxaDB3a2h4eElOCkZuZXJWQUFIQWdNQkFBRUNnZ0VCQUlHRXZDWkhXRVZVVmorbnVkaStCZzdNL09jOSsvUGo4aStWS0RiblpIaWIKTi9lckd0UGMwVDVITWR5Zk52cldJSjlETlNwdUhITE9MZk5OSGsyRUc5WjhPUmVMQ3FsaElJK1MzU0FIK1lQSgpHQzFmZUVtSzZQZzY1aTM3MytxRmQ3dXJ3RDJHcUYvbHNiS1o4ZUxhTG11TUhsNkdEMTlwK2hGMkJjUVRDZzBoCnY1cURDa21SSUNzVkJ2OTdQY3p3TXk2YTQyaUFHcUJYTERkWHhQSzJ3dUp3bkRKR01vdGVhcHBWbWVkWG91MFIKVkFrNHdYL0hKdWRUT2ZmaXZIRGtSdG5SMXg1YzZNWHNjajZ0ZlRFYUVsUHIvUHNWUDZVbTVPNmRyU201TnQweQorVG9Nb2VsRUdkbmRneTdoQmFaYmZpdlJEN1VzdlR0QlJCd0hjdGJlZmpFQ2dZRUErMzNQcWEyMzFORVdCRUdRCkV2czNzdnMrQ3QzTktUUE40MmM5WkNmc2VzRG95cU1wamhRTWZ6RnFxbFBrMEpSV3RtdncxdER3YTZNYllwTWIKMERObjNmaDJGT0crOEVxcllERCthRUVCYk5YV0wwWng3SXY0cnZhSUFjT3dheXYxZVhlZGNpYWk2VzJiWkVTeApqVmFQM0E1MGErQTVTMDQ4MDNVNFFZRVYzbWtDZ1lFQTU0UTB6b0x6NVpuK2drSUJXSEFUU2Z5VjJMRWh5Z292Ci9MbUZtaUVuZWVCSk96OVJURVl5NGtlc0QwNUhUOFh4Vkc1bHRLWTZtby9QclNnUlE3Ylg5b3FYMVdQSGI3L2IKT2s4alVyNWQyMmVvVVZSZUc2TUJqNmY2V3pHRWVqdnZwY2JYOHp5RXYvaGJzQnhYL1QrbXNQYm93ZkVGajlBKwpmSmZwaGczcS9POENnWUVBMnBualZmUWdaS1pSNHVVeVhLMXRIdkJ3WDNXb2pYWHdNd2hjUHFETlYyNHphMkFrCkVOR3dneWJyTnA2eHQvUVk0M3d6M2lYRHRXd1RzNzEzWWFRdFZxNVB4WnJzSTJaa1RMcUppUWxvT2JndDh1M2kKdk9CMkMyOVRqV1VTQmpZeHE2R2pnOE85dS9XQUtzbmpJNTNvY2psR1RUYWIxcTl0QThsU1d1M2ZtbkVDZ1lFQQpsUkM5dzMzenRoRDZHenFPalRmVVk1MzdpWU03ZzFBZDU3WTRQSzQrTWEzazJQNEN4WDZwZ3FLdE9VbW9oc2VuCmhEcDB4K1VEOU1MRjcvTE5jdkVHaXBwZitxaDlJQW5EQ1A4dGVqaFNURk9vdjN6My93bHNsdWVNUGkxYTVDMDEKTjJNWlptYS8vcTdWc2tYOXJYVFBTa0FnUzhkNVVr
aStBeEQ0N2pTRjZnY0NnWUVBMGlqNEFDWDZKRUhqeFBtSQpBcE1Gd0tOZzRtK2loWG1xem9nN0VRRTVaZ003eTZlS1hFS0VDNnlHVVVURnlFSGpWSHY1QWFlQ1FDQTBJa2ZLCmlTMTFwcEx6VCtHanpGV1FXUTR4Vml0NUpnaE4vRWNtV1ZjdzhLazhWR25IVlYxc25BNjJ6RllJaU44QWxDU0kKbEk1Z2RyYWpKQkdjMWVqdEUrQlVSRFhOYnlrPQotLS0tLUVORCBQUklWQVRFIEtFWS0tLS0tCg==

kind: Secret

metadata:

creationTimestamp: "2021-09-17T10:07:43Z"

name: prometheus-adapter-serving-certs11

namespace: monitoring

resourceVersion: "10403385"

selfLink: /api/v1/namespaces/monitoring/secrets/prometheus-adapter-serving-certs11

uid: 2c8d9e35-1bde-4acf-8297-5ad84d788cd4

type: Opaque

Mount the secret in the prometheus-adapter Deployment

Add the following to the container args:

- --tls-cert-file=/var/run/adapter-cert/adapter-ca.crt

- --tls-private-key-file=/var/run/adapter-cert/adapter-ca.key

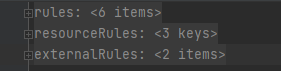
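
For readers who cannot see the screenshot, the mount might look roughly like the fragment below. This is a minimal sketch, not the author's exact manifest: the volume name adapter-cert is hypothetical, and only the mount path and secret name are taken from the commands above.

```yaml
# Fragment of the prometheus-adapter Deployment (sketch, not the full manifest)
spec:
  template:
    spec:
      containers:
      - name: prometheus-adapter
        args:
        - --tls-cert-file=/var/run/adapter-cert/adapter-ca.crt
        - --tls-private-key-file=/var/run/adapter-cert/adapter-ca.key
        volumeMounts:
        - name: adapter-cert              # hypothetical volume name
          mountPath: /var/run/adapter-cert
          readOnly: true
      volumes:
      - name: adapter-cert                # must match the volumeMounts entry
        secret:
          secretName: prometheus-adapter-serving-certs
```

The file names under the mount path come from the keys of the secret (adapter-ca.crt and adapter-ca.key), which is why the args point at those names.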

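Structure of the prometheus-adapter configuration file

The prometheus-adapter configuration file has three parts:

Rules for the memory and CPU resource metrics, under the resourceRules section;

Rules for custom metrics, under the rules section;

Rules for external metrics, under the externalRules section, which is not used here yet.

resourceRules covers only cpu and memory by default; other resource types cannot be added.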
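
As a rough picture of that layout, the top level of the file can be sketched like this. The values are placeholders only and this is not a working configuration; the real content of each section is whatever queries your setup needs.

```yaml
# Top-level skeleton of the adapter configuration (placeholders, not a working config)
rules: []            # custom metric rules
externalRules: []    # external metric rules, unused in this article
resourceRules:       # resource metric rules, limited to cpu and memory
  cpu: {}            # placeholder for the CPU queries
  memory: {}         # placeholder for the memory queries
```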

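Configuration based on Istio request volume

In the rules section of the prometheus-adapter configuration file, configure the request rate averaged over a 5-minute window.

Use name.as to rename the rule to http_requests_5m.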

- seriesQuery: 'istio_requests_total{namespace!="",pod!=""}'
  resources:
    overrides:
      namespace:
        resource: "namespace"
      pod:
        resource: "pod"
  name:
    matches: "^(.*)_total"
    as: "http_requests_5m"
  metricsQuery: 'sum(rate(<<.Series>>{<<.LabelMatchers>>}[5m])) by (<<.GroupBy>>)'

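Configuration based on Istio response time

In the rules section of the prometheus-adapter configuration file, configure the average response time over a 5-minute window.

Use name.as to rename the rule to http_requests_restime_5m.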

- seriesQuery: '{__name__=~"istio_request_duration_milliseconds_.*",namespace!="",pod!="",reporter="destination"}'
  seriesFilters:
  - isNot: .*bucket
  resources:
    overrides:
      namespace:
        resource: namespace
      pod:
        resource: pod
  name:
    matches: ^(.*)
    as: "http_requests_restime_5m"
  metricsQuery: 'sum(rate(istio_request_duration_milliseconds_sum{<<.LabelMatchers>>}[5m]) > 0) by (<<.GroupBy>>) / sum(rate(istio_request_duration_milliseconds_count{<<.LabelMatchers>>}[5m]) > 0) by (<<.GroupBy>>)'

Once the configuration file is updated, restart the prometheus-adapter pod so that the new configuration takes effect.
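
For orientation, here is a sketch of where such rules might live. It assumes the adapter reads its configuration from a ConfigMap mounted into the pod and referenced by the adapter's --config flag; the ConfigMap name adapter-config and the key config.yaml are assumptions, not taken from this setup.

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: adapter-config          # assumed name
  namespace: monitoring
data:
  config.yaml: |                # assumed key; must match the path given to --config
    rules:
    - seriesQuery: 'istio_requests_total{namespace!="",pod!=""}'
      resources:
        overrides:
          namespace:
            resource: "namespace"
          pod:
            resource: "pod"
      name:
        matches: "^(.*)_total"
        as: "http_requests_5m"
      metricsQuery: 'sum(rate(<<.Series>>{<<.LabelMatchers>>}[5m])) by (<<.GroupBy>>)'
```

Editing the ConfigMap alone does not reload the rules in a running adapter, which is why the pod restart is needed.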

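How prometheus-adapter rules interact with kube-controller-manager

First distinguish between resource metrics (resourceRules) and custom metrics (rules).

When creating an HPA object, each entry under spec.metrics must specify a type. Type Resource means the HPA scales on resource metrics (cpu, memory); type Pods means it scales on a per-pod custom metric.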

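HPA based on Pods metrics

kube-controller-manager takes metric.name from the HPA object and asks the custom metrics API (custom.metrics.k8s.io) whether a metric with that name exists. Normally this is a name defined in the prometheus-adapter configuration file, such as the http_requests_5m rule configured earlier.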
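
A minimal sketch of such an HPA object follows. It assumes a cluster that serves autoscaling/v2 (older clusters may need autoscaling/v2beta2, which uses the same fields); the target name, namespace, replica bounds and target value are illustrative values borrowed from the demo in this article, not a recommendation.

```yaml
apiVersion: autoscaling/v2          # assumption: cluster serves autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: sample-app
  namespace: cloudtogo-system
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: sample-app
  minReplicas: 1
  maxReplicas: 10
  metrics:
  - type: Pods                      # per-pod custom metric
    pods:
      metric:
        name: http_requests_5m      # must match name.as in the adapter rule
      target:
        type: AverageValue
        averageValue: 500m          # illustrative: 0.5 requests per second per pod
```

The metric name is the only link between the HPA and the adapter rule, so a mismatch here shows up as a "could not find the metric" event on the HPA.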

HPA based on cpu or memory

When scaling on cpu or memory, kube-controller-manager queries by resource type instead, through the resource metrics API (metrics.k8s.io).
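
For comparison, a resource-based HPA might be sketched as below. It again assumes autoscaling/v2; the 60% utilization target is an arbitrary illustrative value, and utilization targets only work when the target pods declare CPU requests.

```yaml
apiVersion: autoscaling/v2          # assumption: cluster serves autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: sample-app
  namespace: cloudtogo-system
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: sample-app
  minReplicas: 1
  maxReplicas: 10
  metrics:
  - type: Resource                  # resource metric, no rule name involved
    resource:
      name: cpu
      target:
        type: Utilization
        averageUtilization: 60      # illustrative: percent of the pods' CPU requests
```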

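When and by whom <<.LabelMatchers>> and <<.GroupBy>> in the prometheus-adapter configuration are filled in

kube-controller-manager periodically queries the current metric values (every 15s by default, set by the --horizontal-pod-autoscaler-sync-period flag). It uses the HPA object's namespace and spec.scaleTargetRef to find the scale target, and that target's spec.selector.matchLabels to select its pods. prometheus-adapter then fills <<.LabelMatchers>> with matchers for the namespace and the selected pods.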

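<<.GroupBy>> needs no manual handling. According to the prometheus-adapter documentation it is filled with the label of the resource being queried (pod in the rules above), so the query returns one value per pod.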
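
To make the substitution concrete, here is how the template of the http_requests_5m rule might expand for a request covering two pods. The pod names are hypothetical, and the expansion follows the description in the prometheus-adapter documentation (namespace and resource matchers for LabelMatchers, the resource label for GroupBy) rather than output captured from this cluster.

```yaml
# Rule template, as configured earlier:
metricsQuery: 'sum(rate(<<.Series>>{<<.LabelMatchers>>}[5m])) by (<<.GroupBy>>)'

# Illustrative expansion sent to Prometheus (a comment, not a config key):
# sum(rate(istio_requests_total{namespace="cloudtogo-system",pod=~"sample-app-aaaaa|sample-app-bbbbb"}[5m])) by (pod)
```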

Reference

https://itnext.io/horizontal-pod-autoscale-with-custom-metrics-8cb13e9d475

HPA demo

[root@m1 prometheus-adapter]# kc get hpa

NAME         REFERENCE               TARGETS    MINPODS   MAXPODS   REPLICAS   AGE
sample-app   Deployment/sample-app   66m/500m   1         10        1          4m4s

[root@m1 prometheus-adapter]# kd hpa

Name:               sample-app
Namespace:          cloudtogo-system
Labels:             app=sample-app
Annotations:        kubectl.kubernetes.io/last-applied-configuration:
                      {"apiVersion":"autoscaling/v2beta1","kind":"HorizontalPodAutoscaler","metadata":{"annotations":{},"labels":{"app":"sample-app"},"name":"sa...
CreationTimestamp:  Thu, 05 Aug 2021 07:47:42 +0000
Reference:          Deployment/sample-app
Metrics:            ( current / target )
  "http_requests_per_second1" on pods:  66m / 50m
Min replicas:       1
Max replicas:       10
Deployment pods:    10 current / 10 desired
Conditions:
  Type            Status  Reason            Message
  ----            ------  ------            -------
  AbleToScale     True    ReadyForNewScale  recommended size matches current size
  ScalingActive   True    ValidMetricFound  the HPA was able to successfully calculate a replica count from pods metric http_requests_per_second1
  ScalingLimited  True    TooManyReplicas   the desired replica count is more than the maximum replica count
Events:
  Type     Reason                        Age                From                       Message
  ----     ------                        ----               ----                       -------
  Warning  FailedGetPodsMetric           11m (x9 over 13m)  horizontal-pod-autoscaler  unable to get metric http_requests: unable to fetch metrics from custom metrics API: the server could not find the metric http_requests for pods
  Warning  FailedComputeMetricsReplicas  11m (x9 over 13m)  horizontal-pod-autoscaler  invalid metrics (1 invalid out of 1), first error is: failed to get pods metric value: unable to get metric http_requests: unable to fetch metrics from custom metrics API: the server could not find the metric http_requests for pods
  Normal   SuccessfulRescale             9m27s              horizontal-pod-autoscaler  New size: 2; reason: pods metric http_requests_per_second1 above target
  Normal   SuccessfulRescale             8m27s              horizontal-pod-autoscaler  New size: 3; reason: pods metric http_requests_per_second1 above target
  Normal   SuccessfulRescale             7m27s              horizontal-pod-autoscaler  New size: 4; reason: pods metric http_requests_per_second1 above target
  Normal   SuccessfulRescale             6m57s              horizontal-pod-autoscaler  New size: 5; reason: pods metric http_requests_per_second1 above target
  Normal   SuccessfulRescale             6m26s              horizontal-pod-autoscaler  New size: 6; reason: pods metric http_requests_per_second1 above target
  Normal   SuccessfulRescale             5m55s              horizontal-pod-autoscaler  New size: 8; reason: pods metric http_requests_per_second1 above target
  Normal   SuccessfulRescale             5m25s              horizontal-pod-autoscaler  New size: 10; reason: pods metric http_requests_per_second1 above target

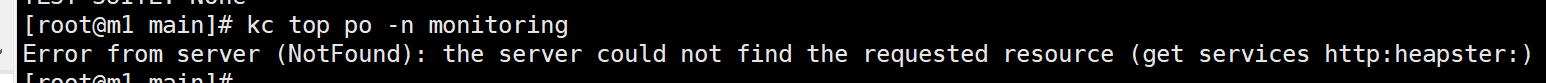

Problems encountered

After deploying prometheus-adapter, running kc top kept failing:

[root@m1 main]# kc top po -n monitoring

Error from server (NotFound): the server could not find the requested resource (get services http:heapster:)

The cause was a missing entry in custom-metrics-apiservice.yaml. The complete custom-metrics-apiservice.yaml is as follows:

apiVersion: apiregistration.k8s.io/v1
kind: APIService
metadata:
  name: v1beta1.metrics.k8s.io
spec:
  group: metrics.k8s.io
  groupPriorityMinimum: 100
  insecureSkipTLSVerify: true
  service:
    name: prometheus-adapter
    namespace: monitoring
  version: v1beta1
  versionPriority: 100
---
apiVersion: apiregistration.k8s.io/v1beta1
kind: APIService
metadata:
  name: v1beta1.custom.metrics.k8s.io
spec:
  service:
    name: prometheus-adapter
    namespace: monitoring
  group: custom.metrics.k8s.io
  version: v1beta1
  insecureSkipTLSVerify: true
  groupPriorityMinimum: 100
  versionPriority: 100
---
apiVersion: apiregistration.k8s.io/v1beta1
kind: APIService
metadata:
  name: v1beta2.custom.metrics.k8s.io
spec:
  service:
    name: prometheus-adapter
    namespace: monitoring
  group: custom.metrics.k8s.io
  version: v1beta2
  insecureSkipTLSVerify: true
  groupPriorityMinimum: 100
  versionPriority: 200
---
apiVersion: apiregistration.k8s.io/v1beta1
kind: APIService
metadata:
  name: v1beta1.external.metrics.k8s.io
spec:
  service:
    name: prometheus-adapter
    namespace: monitoring
  group: external.metrics.k8s.io
  version: v1beta1
  insecureSkipTLSVerify: true
  groupPriorityMinimum: 100
  versionPriority: 100

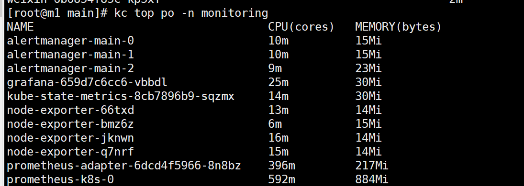

With the complete custom-metrics-apiservice.yaml applied, the kc top command works normally.

References

https://github.com/stefanprodan/k8s-prom-hpa

https://blog.csdn.net/weixin_38320674/article/details/105460033

https://www.cnblogs.com/yuhaohao/p/14109787.html

https://kubernetes.io/docs/tasks/run-application/horizontal-pod-autoscale-walkthrough/
