Goal: use multithreading and logging to collect the URL of every link on a web page and save the linked pages locally

Libraries used:

    import urllib2
    import threading  # multithreading
    import time
    from bs4 import BeautifulSoup
    import logging  # logging

Multithreading code:

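    class myThread(threading.Thread):
        def __init__(self, url, name):
            threading.Thread.__init__(self)
            self.url = url    # page to download
            self.name = name  # output file name, without the extension

        def run(self):
            try:
                logging.info('\nURL: ' + self.url + '\n\nFile name: ' + self.name + '.html\n')
                html = open_url(self.url)
                saveflie(html, self.name)
            except Exception as e:
                # Concatenating a str with an exception raises TypeError, so let logging format it
                logging.error('Failed page %s: %s', self.url, e)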

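Put the code to be executed in the run method. Creating the thread object does not run it; run is invoked in the new thread once start() is called.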
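A minimal sketch of when `run` executes, written for Python 3. The class name `Recorder` and the `events` list are hypothetical names used only for illustration. Constructing the object alone does not execute `run`; the body runs in the new thread once `start()` is called:

```python
import threading

events = []  # hypothetical shared list recording the order of operations


class Recorder(threading.Thread):
    """Illustrative Thread subclass; not part of the crawler itself."""

    def __init__(self, label):
        threading.Thread.__init__(self)
        self.label = label

    def run(self):
        # Executed in the new thread, and only after start() is called
        events.append('run ' + self.label)


t = Recorder('a')
events.append('created')   # run() has not executed yet
t.start()                  # runs run() in a separate thread
t.join()                   # wait until run() has returned
events.append('joined')

print(events)
```

Calling `join()` before reading `events` ensures the worker has finished, which is the same reason the crawler joins every thread before stopping its timer.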

Next, write the open_url and saveflie functions.

The open_url function:

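    def open_url(url):
        # Fetch the page and return the raw response body
        request = urllib2.Request(url)
        html = urllib2.urlopen(request).read()
        return html

The saveflie function:

    def saveflie(html, name):
        # Write the raw bytes to <name>.html; the with block closes the file
        with open(name + '.html', 'wb') as f:
            f.write(html)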
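A small sketch of the saving step, assuming Python 3 and a temporary directory so that it runs without network access. `save_page` and the sample bytes are hypothetical stand-ins for `saveflie` and a downloaded page. It illustrates that the `with` block closes the file by itself, so no explicit `close()` call is needed:

```python
import os
import tempfile


def save_page(html, name, directory):
    """Write raw page bytes to <directory>/<name>.html."""
    path = os.path.join(directory, name + '.html')
    with open(path, 'wb') as f:   # 'wb' because the response body is bytes
        f.write(html)
    # The with block has already closed the file at this point
    return path, f.closed


sample = b'<html><body>hello</body></html>'  # stand-in for a downloaded page
with tempfile.TemporaryDirectory() as tmp:
    saved_path, file_closed = save_page(sample, '1', tmp)
    with open(saved_path, 'rb') as check:
        round_trip = check.read()

print(file_closed, round_trip == sample)
```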

Then use bs4 to extract all the links on a page,

and use multiple threads to open and save each linked page.
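To make the link-extraction step concrete, here is a sketch that runs on an inline HTML string instead of a live page. It targets Python 3 and uses the standard-library `html.parser` as a stand-in for BeautifulSoup, so that it has no third-party dependency. The sample markup, the `/p/...` paths, the `LinkCollector` class, and the `extract_links` helper are all assumptions made for illustration:

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkCollector(HTMLParser):
    """Collect href values from <a> tags that carry a given class name."""

    def __init__(self, wanted_class):
        super().__init__()
        self.wanted_class = wanted_class
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag != 'a':
            return
        attr = dict(attrs)
        classes = (attr.get('class') or '').split()
        if self.wanted_class in classes and attr.get('href'):
            self.hrefs.append(attr['href'])


def extract_links(html, base, wanted_class):
    """Return absolute, de-duplicated links in document order."""
    parser = LinkCollector(wanted_class)
    parser.feed(html)
    seen = set()
    links = []
    for href in parser.hrefs:
        absolute = urljoin(base, href)   # resolves relative paths against base
        if absolute not in seen:
            seen.add(absolute)
            links.append(absolute)
    return links


sample = '''
<a class="j_th_tit " href="/p/1">first</a>
<a class="other" href="/p/2">ignored</a>
<a class="j_th_tit " href="/p/1">duplicate</a>
<a class="j_th_tit " href="/p/3">third</a>
'''
links = extract_links(sample, 'https://tieba.baidu.com', 'j_th_tit')
print(links)
```

Using `urljoin` instead of string concatenation also copes with hrefs that are already absolute, and the `seen` set keeps de-duplication cheap as the list grows.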

Complete code:

    # -*- coding: UTF-8 -*-
    import urllib2
    import threading
    import time
    from bs4 import BeautifulSoup
    import logging

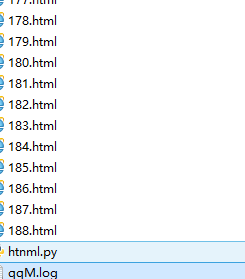

    logging.basicConfig(level=logging.DEBUG,
                        format='%(asctime)s %(levelname)s %(message)s',
                        filename='qqM.log',
                        filemode='w')

    class myThread(threading.Thread):
        def __init__(self, url, name):
            threading.Thread.__init__(self)
            self.url = url
            self.name = name

        def run(self):
            # threadLock.acquire()
            try:
                logging.info('\nURL: ' + self.url + '\n\nFile name: ' + self.name + '.html\n')
                html = open_url(self.url)
                saveflie(html, self.name)
            except Exception as e:
                # Concatenating a str with an exception raises TypeError, so let logging format it
                logging.error('Failed page %s: %s', self.url, e)
            # threadLock.release()

    def open_url(url):
        # threadLock.acquire()
        request = urllib2.Request(url)
        html = urllib2.urlopen(request).read()
        # threadLock.release()
        return html

    def saveflie(html, name):
        # The with block closes the file, so no explicit close() is needed
        with open(name + '.html', 'wb') as f:
            f.write(html)

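    def bs4(html):
        url = []
        soup = BeautifulSoup(html, 'lxml')
        # Collect the href of every <a> tag selected by the 'j_th_tit ' class filter
        for m in soup.find_all('a', 'j_th_tit '):
            if 'href' in m.attrs:
                a = m.attrs['href']
                # Prepend the host to build a full URL
                a = 'https://tieba.baidu.com' + a
                url.append(a)
        return url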

    # Build the URL list
    urlList = []
    urlList1 = []
    threadLock = threading.Lock()

    for i in range(1, 5):
        url = 'https://tieba.baidu.com/f?kw=%E6%9D%8E%E6%AF%85&ie=utf-8&pn=' + str(i * 50)
        html = open_url(url)
        url = bs4(html)
        urlList1.append(url)

    # Flatten the per-page lists and drop duplicate links
    for i in urlList1:
        for z in i:
            print z
            if z not in urlList:
                urlList.append(z)

    print len(urlList)

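    t_start = time.time()
    k = 1
    threads = []
    # One thread per link; the files are named 1.html, 2.html, ...
    for z in urlList:
        thread1 = myThread(z, str(k))
        threads.append(thread1)
        k += 1

    for t in threads:
        # t.setDaemon(True)
        t.start()

    # Wait for every download to finish before stopping the timer
    for i in threads:
        i.join()

    t_end = time.time()
    print 'the thread way take %s s' % (t_end - t_start)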
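The script above starts one thread per URL, so the number of simultaneous threads grows with the number of links. One possible alternative, sketched here for Python 3 with `concurrent.futures.ThreadPoolExecutor`, caps the number of worker threads. To keep it runnable offline, `fake_fetch` is a hypothetical stand-in for `open_url`, and the `example.com` URLs and `max_workers=4` are arbitrary illustrative values:

```python
import logging
from concurrent.futures import ThreadPoolExecutor

logging.basicConfig(level=logging.INFO,
                    format='%(asctime)s %(threadName)s %(levelname)s %(message)s')


def fake_fetch(url):
    """Hypothetical stand-in for open_url: returns bytes without network I/O."""
    if url.endswith('/bad'):
        raise ValueError('simulated download failure')
    return ('<html>' + url + '</html>').encode('utf-8')


def download(job):
    """Fetch one (name, url) pair; return (name, size), or (name, None) on failure."""
    name, url = job
    try:
        html = fake_fetch(url)
        logging.info('fetched %s as %s.html', url, name)
        return name, len(html)
    except Exception as e:
        logging.error('failed page %s: %s', url, e)
        return name, None


urls = ['https://example.com/a', 'https://example.com/bad', 'https://example.com/c']
jobs = [(str(k), url) for k, url in enumerate(urls, 1)]

# At most 4 downloads run concurrently; map() yields results in input order
with ThreadPoolExecutor(max_workers=4) as pool:
    results = list(pool.map(download, jobs))

print(results)
```

Adding `%(threadName)s` to the log format makes it easier to see which worker handled which page when the log lines interleave.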

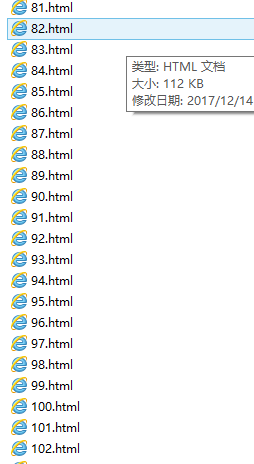

Result:

