Before you begin, make sure Docker Swarm is installed. If it is not, see these articles:

"Installing docker and docker-compose on Linux"

"Deploying a docker swarm cluster on Linux"

1. Server list

| IP | Name | hostname |
|---|---|---|
| 192.168.0.86 | manager86 | manager86 |
| 192.168.0.87 | manager87 | manager87 |
| 192.168.0.88 | manager88 | manager88 |

2. Pull the images on all three servers

docker pull elasticsearch:7.5.0

docker pull kibana:7.5.0

docker pull logstash:7.5.0

3. Raise the Linux vm.max_map_count limit (the maximum number of memory map areas per process)

echo vm.max_map_count=262144 >> /etc/sysctl.conf

sysctl -p
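To confirm the new value is live after `sysctl -p`, a small check like the one below can help. It is only a sketch: the helper names `map_count_ok` and `check_map_count` are hypothetical, and the threshold is the same 262144 written to /etc/sysctl.conf above.

```shell
# Minimum vm.max_map_count configured in the step above.
REQUIRED_MAP_COUNT=262144

# Succeeds when the given value is at least the required minimum.
map_count_ok() {
  [ "$1" -ge "$REQUIRED_MAP_COUNT" ]
}

# Read the live kernel value and report whether it is high enough.
check_map_count() {
  current=$(sysctl -n vm.max_map_count)
  if map_count_ok "$current"; then
    echo "vm.max_map_count=$current (ok)"
  else
    echo "vm.max_map_count=$current (too low, need $REQUIRED_MAP_COUNT)"
    return 1
  fi
}
```

Run `check_map_count` on each of the three servers; a non-zero return means the setting has not taken effect on that host.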

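4. Create the elk user and add it to the docker group

Create the group:

sudo groupadd docker

Create the user with docker as its primary group:

useradd -g docker elk

Set the password:

passwd elk

Refresh group membership:

newgrp docker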

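5. Grant directory permissions

Give the elk user ownership of every Elasticsearch-related directory, because Elasticsearch cannot be started as root.

Any directory that Elasticsearch uses later must also be granted to the elk user. Which directories to grant depends on the paths you actually use.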

6. Open ports

| Port | Purpose | TCP | UDP |
|---|---|---|---|
| 9200 | Elasticsearch service port | √ | × |
| 9300 | Elasticsearch intra-cluster communication port | √ | × |
| 5601 | Kibana service port | √ | × |
| 9600 | Logstash TCP port | √ | × |

firewall-cmd --zone=public --add-port=9200/tcp --permanent

firewall-cmd --zone=public --add-port=9300/tcp --permanent

firewall-cmd --zone=public --add-port=5601/tcp --permanent

firewall-cmd --zone=public --add-port=9600/tcp --permanent

firewall-cmd --reload

7. Deploy the services

7.1. Create the network

docker network create elknet --driver overlay --subnet 172.45.0.0/16 --gateway 172.45.0.1

7.2. Check that the network was created

docker network ls
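Beyond spotting `elknet` in the list, it can be worth checking that the network really is a swarm-scoped overlay. The following sketch wraps `docker network inspect` in a helper whose name, `network_summary`, is hypothetical.

```shell
# Print the driver and scope of the elknet network.
# For an overlay network created on a swarm manager this prints "overlay swarm".
network_summary() {
  docker network inspect elknet --format '{{.Driver}} {{.Scope}}'
}
```

Call `network_summary` on a manager node. Any other output suggests the network was created with a different driver or outside the swarm.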

7.3. Build the ES cluster

Write docker-compose-es.yml on manager86:

    version: '3.6'
    services:
      elasticsearch:
        image: elasticsearch:7.5.0
        hostname: elasticsearch
        environment:
          - node.name=elasticsearch
          - cluster.name=docker-cluster
          - node.master=true
          - bootstrap.memory_lock=false
          - "ES_JAVA_OPTS=-Xms512m -Xmx512m"
          - http.cors.enabled=true
          - http.cors.allow-origin=*
          - http.port=9200
          - transport.tcp.port=9300
          - network.host=0.0.0.0
          - network.publish_host=elasticsearch
          - discovery.seed_hosts=elasticsearch,elasticsearch2,elasticsearch3
          - cluster.initial_master_nodes=elasticsearch,elasticsearch2,elasticsearch3
          - discovery.zen.minimum_master_nodes=2
          - xpack.security.enabled=false
        volumes:
          - esdata1:/usr/share/elasticsearch/data
        ports:
          - 9200:9200
          - 9300:9300
        networks:
          - elknet
        deploy:
          placement:
            constraints:
              - node.labels.hostname == manager86
      elasticsearch2:
        image: elasticsearch:7.5.0
        hostname: elasticsearch2
        environment:
          - node.name=elasticsearch2
          - cluster.name=docker-cluster
          - node.master=true
          - bootstrap.memory_lock=false
          - "ES_JAVA_OPTS=-Xms512m -Xmx512m"
          - http.cors.enabled=true
          - http.cors.allow-origin=*
          - http.port=9200
          - transport.tcp.port=9300
          - network.host=0.0.0.0
          - network.publish_host=elasticsearch2
          - discovery.seed_hosts=elasticsearch,elasticsearch2,elasticsearch3
          - cluster.initial_master_nodes=elasticsearch,elasticsearch2,elasticsearch3
          - discovery.zen.minimum_master_nodes=2
          - xpack.security.enabled=false
        volumes:
          - esdata2:/usr/share/elasticsearch/data
        ports:
          - 9201:9200
          - 9301:9300
        networks:
          - elknet
        deploy:
          placement:
            constraints:
              - node.labels.hostname == manager87
      elasticsearch3:
        image: elasticsearch:7.5.0
        hostname: elasticsearch3
        environment:
          - node.name=elasticsearch3
          - cluster.name=docker-cluster
          - node.master=true
          - bootstrap.memory_lock=false
          - "ES_JAVA_OPTS=-Xms512m -Xmx512m"
          - http.cors.enabled=true
          - http.cors.allow-origin=*
          - http.port=9200
          - transport.tcp.port=9300
          - network.host=0.0.0.0
          - network.publish_host=elasticsearch3
          - discovery.seed_hosts=elasticsearch,elasticsearch2,elasticsearch3
          - cluster.initial_master_nodes=elasticsearch,elasticsearch2,elasticsearch3
          - discovery.zen.minimum_master_nodes=2
          - xpack.security.enabled=false
        volumes:
          - esdata3:/usr/share/elasticsearch/data
        ports:
          - 9202:9200
          - 9302:9300
        networks:
          - elknet
        deploy:
          placement:
            constraints:
              - node.labels.hostname == manager88
    volumes:
      esdata1:
        driver: local
      esdata2:
        driver: local
      esdata3:
        driver: local
    networks:
      elknet:
        external: true
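The placement constraints in this file match on `node.labels.hostname`, which is a custom node label rather than something Swarm sets by itself, so each node needs that label before the stack is deployed. A minimal sketch, assuming the node names in the swarm are the hostnames from the server list (the helper name `label_nodes` is hypothetical):

```shell
# Add a "hostname" label to each swarm node so that the constraint
# "node.labels.hostname == <name>" in docker-compose-es.yml can match.
label_nodes() {
  for node in manager86 manager87 manager88; do
    docker node update --label-add "hostname=${node}" "${node}"
  done
}
```

Run `label_nodes` once on a manager node. If you would rather not maintain labels, the built-in `node.hostname` attribute can be used in the constraint instead, though that means editing the compose file.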

Deploy the stack to the swarm with:

docker stack deploy --compose-file docker-compose-es.yml es

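Here es is the stack name.

List the stacks: docker stack ls

List the services in the stack: docker stack services es

Show the tasks of each service: docker stack ps es

You can check the state of the ES cluster through the Elasticsearch logs or the Elasticsearch API.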
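As one way to check through the API, the sketch below queries the `_cluster/health` endpoint and extracts the `status` field (green, yellow or red). The helper names `health_status` and `check_es_cluster` are hypothetical, and the default host 192.168.0.86 assumes the 9200:9200 port mapping from the compose file.

```shell
# Extract the "status" value from _cluster/health JSON read on stdin.
health_status() {
  sed -n 's/.*"status"[[:space:]]*:[[:space:]]*"\([a-z]*\)".*/\1/p' | head -n 1
}

# Query one host and print the cluster status; the host defaults to manager86.
check_es_cluster() {
  host="${1:-192.168.0.86}"
  curl -s "http://${host}:9200/_cluster/health" | health_status
}
```

Calling `check_es_cluster` should print `green` once all three nodes have joined. For a per-node view, `curl "http://192.168.0.86:9200/_cat/nodes?v"` lists the members.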

7.4. Deploy Kibana and Logstash

Write docker-compose-lk.yml on manager86:

    version: '3.6'
    services:
      logstash:
        image: logstash:7.5.0
        ports:
          - "9600:9600"
          - "5044:5044"
        environment:
          - "LS_JAVA_OPTS=-Xms256m -Xmx256m"
        volumes:
          - ./logstash/logstash.conf:/usr/share/logstash/pipeline/logstash.conf
        networks:
          - elknet
      kibana:
        image: kibana:7.5.0
        healthcheck: # health check: Kibana is reported healthy only while Elasticsearch responds
          test: ["CMD-SHELL", "curl --fail http://elasticsearch:9200/ || exit 1"]
          interval: 10s
          timeout: 5s
          retries: 5
        environment:
          - ELASTICSEARCH_URL=http://elasticsearch:9200 # address used to reach Elasticsearch
          - ELASTICSEARCH_HOSTS=http://elasticsearch:9200 # address used to reach Elasticsearch
          - "I18N_LOCALE=zh-CN" # display the UI in Chinese
        ports:
          - "5601:5601"
        networks:
          - elknet
    networks:
      elknet:
        external: true

Edit the Logstash configuration: TCP input, Elasticsearch output

cd /home/elk/logstash

vim logstash.conf

The configuration is as follows:

    input {
      tcp {
        port => 9600
        codec => json_lines
      }
    }
    output {
      elasticsearch {
        hosts => ["http://elasticsearch:9200"]
        index => "zykj-%{+YYYY.MM.dd}"
        #user => "elastic"
        #password => "changeme"
      }
    }
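Because the input uses the `json_lines` codec, each event must arrive as one JSON object terminated by a newline. Once the stack is running, a quick manual test could look like the sketch below. The helper names `make_event` and `send_event` are hypothetical, `nc` is assumed to be installed, and the default host assumes the 9600:9600 mapping in docker-compose-lk.yml.

```shell
# Build one newline-terminated JSON event, as the json_lines codec expects.
# The message must not contain double quotes or backslashes (no escaping is done).
make_event() {
  printf '{"message":"%s"}\n' "$1"
}

# Send the event to the Logstash TCP input; the host defaults to manager86.
# Some nc variants keep the connection open after stdin closes and may need
# an extra option such as -q 1 or -N to exit.
send_event() {
  make_event "$1" | nc "${2:-192.168.0.86}" 9600
}
```

After `send_event "hello from nc"`, a document should show up in the index for the current day, named according to the `zykj-%{+YYYY.MM.dd}` pattern.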

Copy it to the other two servers:

scp -r ./logstash/ root@192.168.0.87:/home/elk

scp -r ./logstash/ root@192.168.0.88:/home/elk

Grant write permission on the file on all three servers:

chmod 766 logstash.conf

Deploy the stack to the swarm with:

docker stack deploy --compose-file docker-compose-lk.yml lk

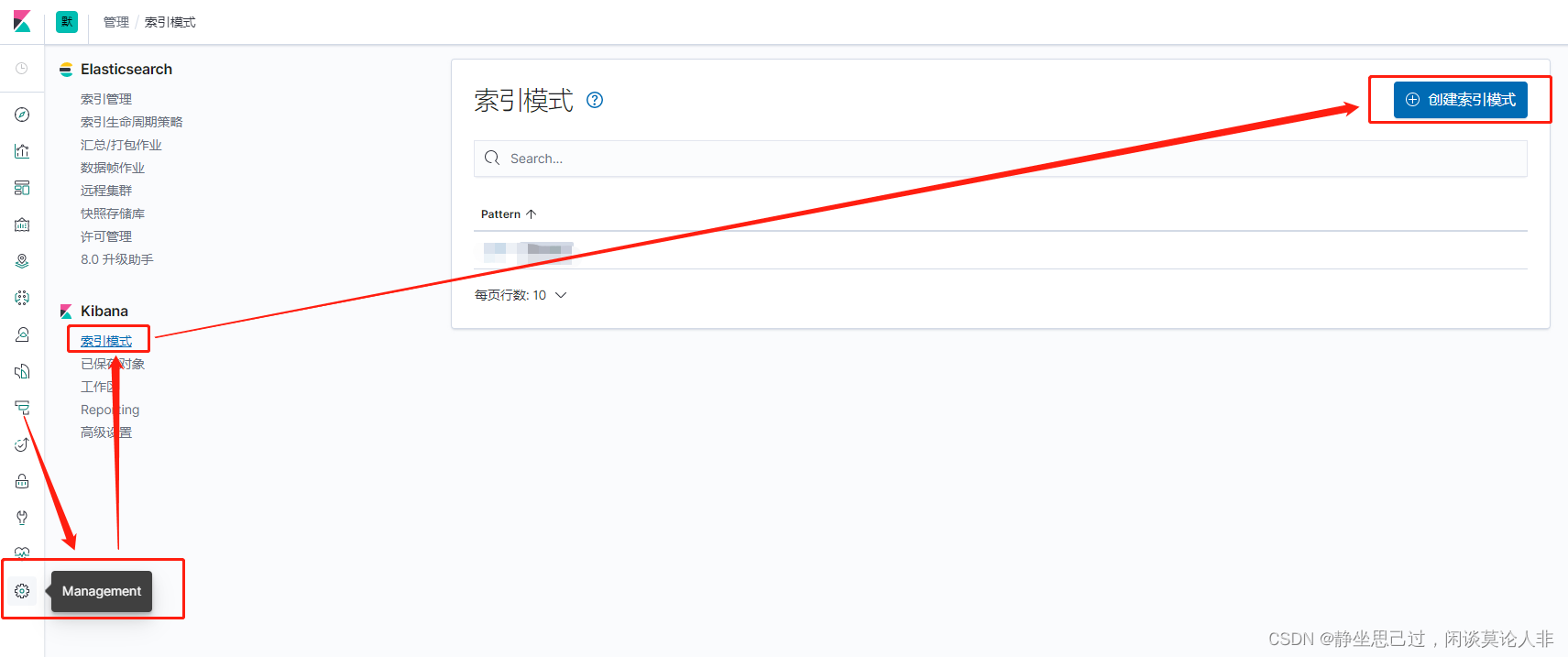

Log in to the Kibana web UI and create the Kibana index pattern.

8. Check the services

Kibana: http://ip:5601

Elasticsearch: http://ip:9200

Logstash: http://ip:9600
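The HTTP endpoints can also be probed from the command line. The sketch below checks Elasticsearch and Kibana only, since the Logstash TCP input is better exercised by sending it a JSON line. The helper names `http_code` and `check_services` are hypothetical.

```shell
# Print the HTTP status code returned by a URL ("000" if it cannot be reached).
http_code() {
  curl -s -o /dev/null -w '%{http_code}' "$1"
}

# Probe Elasticsearch and the Kibana status API on one host.
check_services() {
  host="$1"
  echo "elasticsearch $(http_code "http://${host}:9200")"
  echo "kibana $(http_code "http://${host}:5601/api/status")"
}
```

For example, `check_services 192.168.0.86` prints one line per service; a code of 200 indicates the service answered.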
