#include "stdafx.h"

#include <afxwin.h>

#include <array>

#include <iostream>

#include <map>

#include <vector>

// OpenCV headers

#include "opencv2/opencv.hpp"

#include <opencv2/core/core.hpp>

#include <opencv2/highgui/highgui.hpp>

#include <opencv2/imgproc/imgproc.hpp>

// OpenNI and NiTE headers

#include <OpenNI.h>

#include <NiTE.h>

using namespace std;

using namespace openni;

using namespace nite;

const unsigned int roi_offset=70;

const unsigned int BIN_THRESH_OFFSET =5;

const unsigned int MEDIAN_BLUR_K = 5;

const double GRASPING_THRESH = 0.9;

const cv::Scalar COLOR_BLUE = cv::Scalar(240, 40, 0);

const cv::Scalar COLOR_DARK_GREEN = cv::Scalar(0, 128, 0);

const cv::Scalar COLOR_LIGHT_GREEN = cv::Scalar(0, 255, 0);

const cv::Scalar COLOR_YELLOW = cv::Scalar(0, 128, 200);

const cv::Scalar COLOR_RED = cv::Scalar(0,0,255);

float handDepth ;

float handflag=5;

float opencvframe=3;

struct ConvexityDefect

{

cv::Point start;

cv::Point end;

cv::Point depth_point;

float depth;

};

int main( int argc, char **argv )

{

// Initialize OpenNI

OpenNI::initialize();

// Open the Kinect device

Device mDevice;

mDevice.open( ANY_DEVICE );

// Create the depth stream

VideoStream mDepthStream;

mDepthStream.create( mDevice, SENSOR_DEPTH );

// Configure the depth video mode

VideoMode mDepthMode;

mDepthMode.setResolution( 640, 480 );

mDepthMode.setFps( 30 );

mDepthMode.setPixelFormat( PIXEL_FORMAT_DEPTH_1_MM );

mDepthStream.setVideoMode(mDepthMode);

// Set up the color stream in the same way

VideoStream mColorStream;

mColorStream.create( mDevice, SENSOR_COLOR );

// Configure the color video mode

VideoMode mColorMode;

mColorMode.setResolution( 640, 480 );

mColorMode.setFps( 30 );

mColorMode.setPixelFormat( PIXEL_FORMAT_RGB888 );

mColorStream.setVideoMode( mColorMode);

// Register the depth image to the color image

mDevice.setImageRegistrationMode( IMAGE_REGISTRATION_DEPTH_TO_COLOR );

// Initialize NiTE

NiTE::initialize();

// Create the HandTracker

HandTracker mHandTracker;

if( mHandTracker.create() != nite::STATUS_OK )

{

cerr << "Can't create hand tracker" << endl;

return -1;

}

// Enable gesture detection (GESTURE_WAVE and GESTURE_CLICK; GESTURE_HAND_RAISE is left disabled)

mHandTracker.startGestureDetection( GESTURE_WAVE );

mHandTracker.startGestureDetection( GESTURE_CLICK );

//mHandTracker.startGestureDetection( GESTURE_HAND_RAISE );

//mHandTracker.setSmoothingFactor(0.1f);

// Create the window for the depth image

cv::namedWindow("Depth Image", CV_WINDOW_AUTOSIZE);

// Create the window for the color image

cv::namedWindow( "Color Image", CV_WINDOW_AUTOSIZE );

// Per-hand storage for tracked point coordinates and depth

map< HandId,vector<cv::Point2f> > mapHandData;

map< HandId,float > mapHanddepth;

vector<cv::Point2f> vWaveList;

vector<cv::Point2f> vClickList;

cv::Point2f ptSize( 3, 3 );

array<cv::Scalar,8> aHandColor;

aHandColor[0] = cv::Scalar( 255, 0, 0 );

aHandColor[1] = cv::Scalar( 0, 255, 0 );

aHandColor[2] = cv::Scalar( 0, 0, 255 );

aHandColor[3] = cv::Scalar( 255, 255, 0 );

aHandColor[4] = cv::Scalar( 255, 0, 255 );

aHandColor[5] = cv::Scalar( 0, 255, 255 );

aHandColor[6] = cv::Scalar( 255, 255, 255 );

aHandColor[7] = cv::Scalar( 0, 0, 0 );

// With the environment initialized, start the depth and color streams

mDepthStream.start();

mColorStream.start();

// Get the maximum depth value

int iMaxDepth = mDepthStream.getMaxPixelValue();

// start

while( true )

{

/* POINT pt;

GetCursorPos(&pt);

cerr<<pt.x<<" "<<pt.y<<endl;*/

// Create a cv::Mat for displaying the color image

cv::Mat cImageBGR;

// Read a frame from the color stream

VideoFrameRef mColorFrame;

mColorStream.readFrame( &mColorFrame );

// Wrap the color frame as an OpenCV matrix; note the format is CV_8UC3 (R, G, B)

const cv::Mat mImageRGB( mColorFrame.getHeight(), mColorFrame.getWidth(),

CV_8UC3, (void*)mColorFrame.getData() );

// RGB ==> BGR

cv::cvtColor( mImageRGB, cImageBGR, CV_RGB2BGR );

// Get the hand tracker frame

HandTrackerFrameRef mHandFrame;

if( mHandTracker.readFrame( &mHandFrame ) == nite::STATUS_OK )

{

openni::VideoFrameRef mDepthFrame = mHandFrame.getDepthFrame();

// Wrap the depth data as an OpenCV matrix

const cv::Mat mImageDepth( mDepthFrame.getHeight(), mDepthFrame.getWidth(), CV_16UC1, (void*)mDepthFrame.getData() );

// Convert CV_16UC1 to CV_8U so that the depth image displays more clearly

cv::Mat mScaledDepth, mImageBGR;

mImageDepth.convertTo( mScaledDepth, CV_8U, 255.0 / iMaxDepth );

// Convert the grayscale image to BGR so that colored points and trails can be drawn on it

cv::cvtColor( mScaledDepth, mImageBGR, CV_GRAY2BGR );

// Detect gestures and start tracking a hand at each gesture position

const nite::Array<GestureData>& aGestures = mHandFrame.getGestures();

for( int i = 0; i < aGestures.getSize(); ++ i )

{

const GestureData& rGesture = aGestures[i];

const Point3f& rPos = rGesture.getCurrentPosition();

cv::Point2f rPos2D;

mHandTracker.convertHandCoordinatesToDepth( rPos.x, rPos.y, rPos.z, &rPos2D.x, &rPos2D.y );

HandId mHandID;

if( mHandTracker.startHandTracking( rPos, &mHandID ) != nite::STATUS_OK )

cerr << "Can't track hand" << endl;

}

// Get the palm coordinates

const nite::Array<HandData>& aHands = mHandFrame.getHands();

for( int i = 0; i < aHands.getSize(); ++ i )

{

const HandData& rHand = aHands[i];

HandId uID = rHand.getId();

if( rHand.isNew() )

{

mapHandData.insert( make_pair( uID, vector<cv::Point2f>() ) );

mapHanddepth.insert(make_pair(uID,float()));

}

if( rHand.isTracking() )

{

// Map the palm coordinates onto the color and depth images

const Point3f& rPos = rHand.getPosition();

cv::Point2f rPos2D;

mHandTracker.convertHandCoordinatesToDepth( rPos.x, rPos.y, rPos.z, &rPos2D.x, &rPos2D.y );

handDepth = rPos.z * 255/iMaxDepth;

cv::Point2f aPoint=rPos2D;

cv::circle( cImageBGR, aPoint, 3, cv::Scalar( 0, 0, 255 ), 4 );

cv::circle( mImageBGR, aPoint, 3, cv::Scalar( 0, 0, 255 ), 4 );

// Draw a bounding box around the hand in the color image

cv::Point2f ctlPoint, ctrPoint, cdlPoint, cdrPoint;

ctlPoint.x = aPoint.x - 100;

ctlPoint.y = aPoint.y - 100;

ctrPoint.x = aPoint.x - 100;

ctrPoint.y = aPoint.y + 100;

cdlPoint.x = aPoint.x + 100;

cdlPoint.y = aPoint.y - 100;

cdrPoint.x = aPoint.x + 100;

cdrPoint.y = aPoint.y + 100;

cv::line( cImageBGR, ctlPoint, ctrPoint, cv::Scalar( 255, 0, 0 ), 3 );

cv::line( cImageBGR, ctlPoint, cdlPoint, cv::Scalar( 255, 0, 0 ), 3 );

cv::line( cImageBGR, cdlPoint, cdrPoint, cv::Scalar( 255, 0, 0 ), 3 );

cv::line( cImageBGR, ctrPoint, cdrPoint, cv::Scalar( 255, 0, 0 ), 3 );

// Draw a bounding box around the hand in the depth image

cv::Point2f mtlPoint, mtrPoint, mdlPoint, mdrPoint;

mtlPoint.x = aPoint.x - 100;

mtlPoint.y = aPoint.y - 100;

mtrPoint.x = aPoint.x - 100;

mtrPoint.y = aPoint.y + 100;

mdlPoint.x = aPoint.x + 100;

mdlPoint.y = aPoint.y - 100;

mdrPoint.x = aPoint.x + 100;

mdrPoint.y = aPoint.y + 100;

cv::line( mImageBGR, mtlPoint, mtrPoint, cv::Scalar( 255, 0, 0 ), 3 );

cv::line( mImageBGR, mtlPoint, mdlPoint, cv::Scalar( 255, 0, 0 ), 3 );

cv::line( mImageBGR, mdlPoint, mdrPoint, cv::Scalar( 255, 0, 0 ), 3 );

cv::line( mImageBGR, mtrPoint, mdrPoint, cv::Scalar( 255, 0, 0 ), 3 );

mapHandData[uID].push_back( rPos2D );

mapHanddepth[uID]=handDepth;

}

if( rHand.isLost() ){

mapHandData.erase(uID );

mapHanddepth.erase(uID);

}

}

for( auto itHand = mapHandData.begin(); itHand != mapHandData.end(); ++ itHand )

{

const cv::Scalar& rColor = aHandColor[ itHand->first % aHandColor.size() ];

const vector<cv::Point2f>& rPoints = itHand->second;

for( size_t i = 1; i < rPoints.size(); ++ i )

{

cv::line( mImageBGR, rPoints[i-1], rPoints[i], rColor, 2 );

cv::line( cImageBGR, rPoints[i-1], rPoints[i], rColor, 2 );

}

}

cv::imshow( "Depth Image", mImageBGR );

cv::imshow("Color Image", cImageBGR);

mHandFrame.release();

}

else

{

cerr << "Can't get new frame" << endl;

}

// Press 'q' to exit the loop

if( cv::waitKey( 1 ) == 'q' )

break;

}

mHandTracker.destroy();

mColorStream.destroy();

mDepthStream.destroy();

mDevice.close();

NiTE::shutdown();

OpenNI::shutdown();

return 0;

}
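The loop above appends a point to `mapHandData[uID]` on every tracked frame, so each trail keeps growing for as long as the hand stays tracked. One possible way to cap it is sketched below. `Trail` and `Point2` are hypothetical names that do not appear in the original program; `Point2` stands in for `cv::Point2f` so the example compiles without OpenCV.

```cpp
#include <cassert>
#include <cstddef>
#include <deque>

// Stand-in for cv::Point2f (assumption: keeps the sketch free of OpenCV).
struct Point2 {
    float x;
    float y;
};

// Hypothetical helper: stores only the most recent `capacity` points.
class Trail {
public:
    explicit Trail(std::size_t capacity) : capacity_(capacity) {
        assert(capacity > 0);
    }

    // Append a point and drop the oldest one once the cap is exceeded.
    void push(const Point2& p) {
        points_.push_back(p);
        if (points_.size() > capacity_) {
            points_.pop_front();
        }
    }

    const std::deque<Point2>& points() const { return points_; }

private:
    std::size_t capacity_;
    std::deque<Point2> points_;
};
```

With a structure like this, the per-hand map could hold a `Trail` instead of a `vector<cv::Point2f>`, and the drawing loop would iterate over `points()` in the same way. The cap value is a tuning choice, not something the original specifies.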

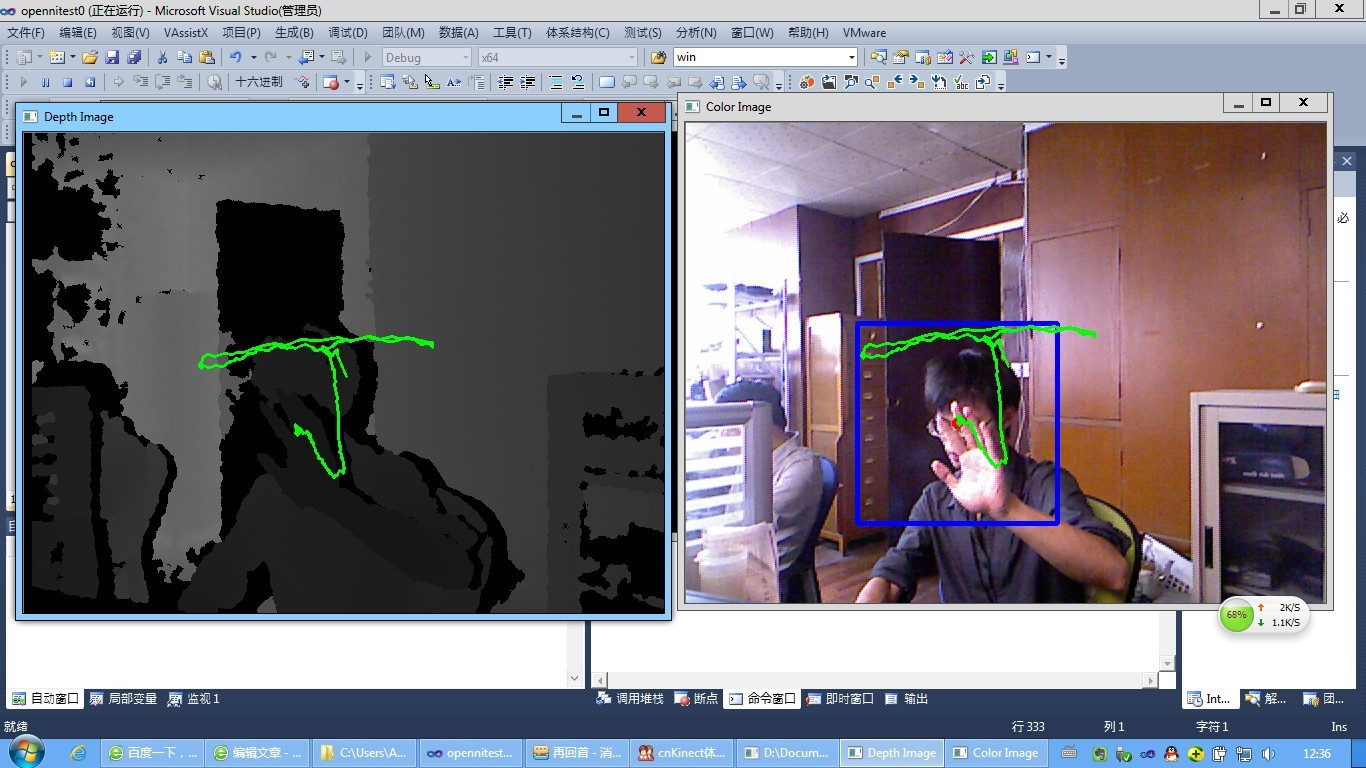

Result: a rectangle is drawn around the hand and the hand's trajectory is traced as a line, simulating drawing.
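The rectangle is built from fixed offsets of 100 pixels around the palm, so near the image border its corners fall outside the 640x480 frame. That is harmless for drawing, but it would matter if the box were later used to crop a region of interest. Below is a minimal sketch of clamping the box to the image. `Box` and `clampedHandBox` are hypothetical names, not part of the original code, and the sketch uses plain integers rather than OpenCV types.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Hypothetical box type with inclusive pixel coordinates.
struct Box {
    int left;
    int top;
    int right;
    int bottom;
};

// Build a square box of half-width `halfSize` around (cx, cy) and clamp it
// to an image of size width x height. The center is clamped first so that
// left <= right and top <= bottom always hold.
Box clampedHandBox(float cx, float cy, int halfSize, int width, int height) {
    assert(width > 0 && height > 0 && halfSize >= 0);
    int x = static_cast<int>(std::lround(cx));
    int y = static_cast<int>(std::lround(cy));
    x = std::min(std::max(x, 0), width - 1);
    y = std::min(std::max(y, 0), height - 1);

    Box box;
    box.left = std::max(0, x - halfSize);
    box.top = std::max(0, y - halfSize);
    box.right = std::min(width - 1, x + halfSize);
    box.bottom = std::min(height - 1, y + halfSize);
    return box;
}
```

In the program above, the call would take `aPoint.x`, `aPoint.y`, a half size of 100, and the frame dimensions. The four corner points used for the `cv::line` calls could then be derived from the returned box.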
