Continued from the NN model chapter.

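What is different in this implementation: the image is no longer fed in all at once as a flat 784-element vector. Instead it is convolved with an n*n kernel, which better preserves the correlation between neighbouring pixels. The benefit shows up directly in the prediction accuracy.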

Define the convolution layer: conv2d
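To make the convolution step concrete, here is a minimal pure-Python sketch (no TensorFlow; the name `conv2d_valid` is hypothetical) of what a single-channel convolution with stride 1 and VALID padding computes. Each output pixel is a weighted sum over a k*k neighbourhood, which is how the layer keeps neighbouring pixels together. Like `tf.nn.conv2d`, it does not flip the kernel (strictly speaking it is cross-correlation). The real layer additionally sums over all input channels and produces one output channel per kernel; this sketch handles a single channel and a single kernel only.

```python
def conv2d_valid(image, kernel):
    """Single-channel 2D convolution, stride 1, VALID padding.

    image and kernel are lists of lists of numbers. As in tf.nn.conv2d,
    the kernel is not flipped.
    """
    ih, iw = len(image), len(image[0])
    kh, kw = len(kernel), len(kernel[0])
    out = []
    for r in range(ih - kh + 1):          # output height = ih - kh + 1
        row = []
        for c in range(iw - kw + 1):      # output width = iw - kw + 1
            # Weighted sum over the kh x kw neighbourhood whose top-left is (r, c)
            s = 0
            for i in range(kh):
                for j in range(kw):
                    s += image[r + i][c + j] * kernel[i][j]
            row.append(s)
        out.append(row)
    return out


# A 4x4 image with a vertical edge between columns 1 and 2.
image = [[0, 0, 1, 1] for _ in range(4)]
# A 2x2 kernel that responds to a dark-to-bright change from left to right.
kernel = [[-1, 1],
          [-1, 1]]
print(conv2d_valid(image, kernel))  # [[0, 2, 0], [0, 2, 0], [0, 2, 0]]
```

Only the output column that straddles the edge lights up, and the 4x4 input shrinks to 3x3, the same (n - k + 1) shrinkage that turns 28*28 into 24*24 with a 5*5 kernel.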

Define the pooling layer: max_pool_2x2
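Likewise, a minimal pure-Python sketch (the function name is reused from the post for clarity, but this is not the TensorFlow op) of 2x2 max pooling with stride 2 and SAME padding on one channel. With SAME padding the output size is ceil(n / 2), and padded positions never win the max, so the sketch simply drops out-of-range positions from the last window.

```python
def max_pool_2x2(feature_map):
    """2x2 max pooling, stride 2, SAME padding, on a single channel.

    Output size is ceil(n / 2) in each dimension. At an odd edge the window
    is truncated instead of padded, which gives the same maximum.
    """
    h, w = len(feature_map), len(feature_map[0])
    out = []
    for r in range(0, h, 2):
        row = []
        for c in range(0, w, 2):
            # Collect the (up to) 2x2 window starting at (r, c)
            window = [feature_map[i][j]
                      for i in range(r, min(r + 2, h))
                      for j in range(c, min(c + 2, w))]
            row.append(max(window))
        out.append(row)
    return out


fm = [[1, 3, 2, 0],
      [4, 2, 1, 5],
      [0, 1, 9, 2],
      [6, 0, 3, 4]]
print(max_pool_2x2(fm))  # [[4, 5], [6, 9]]
```

Each 2x2 block keeps only its strongest response, so width and height are halved while the number of channels is unchanged.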

This implementation follows LeNet-5, a 7-layer design:

conv1 -> pool1 -> conv2 -> pool2 -> fully connection1 -> fully connection2 -> softmax

See the code below for the actual parameter settings.
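As a quick sanity check on those settings, the short script below counts the trainable parameters of each layer using the kernel sizes and layer widths from the TF1 code (5*5 kernels with 20 and 40 channels, then 640 -> 1000 -> 1000 -> 10). It is plain arithmetic: a conv layer has k*k*c_in*c_out weights plus one bias per kernel, and a dense layer has n_in*n_out weights plus n_out biases.

```python
# Layer hyper-parameters taken from the TF1 code in this post.
conv_layers = [  # (name, kernel size, input channels, output channels)
    ("conv1", 5, 1, 20),
    ("conv2", 5, 20, 40),
]
dense_layers = [  # (name, input units, output units)
    ("fc1", 4 * 4 * 40, 1000),
    ("fc2", 1000, 1000),
    ("softmax", 1000, 10),
]

params = {}
for name, k, c_in, c_out in conv_layers:
    params[name] = k * k * c_in * c_out + c_out   # weights + one bias per kernel
for name, n_in, n_out in dense_layers:
    params[name] = n_in * n_out + n_out           # weight matrix + bias vector

for name, count in params.items():
    print(f"{name:8s}{count:>10,d}")
print(f"{'total':8s}{sum(params.values()):>10,d}")
```

The two conv layers need only 520 and 20,040 parameters, while the fully connected layers hold about 98.8% of the 1,672,570 total, which is one reason dropout is applied there.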

Image dimensions: width * height * depth (channel).
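The code turns each flat 784-vector into this 3D form with `tf.reshape(xs, [-1, 28, 28, 1])`. TensorFlow's conv ops default to the NHWC layout, i.e. [batch, height, width, channels]; for square MNIST images the width/height order in the text makes no difference. The sketch below (helper names are hypothetical) shows the row-major index mapping that reshape uses, and why the flat vector hides spatial structure: a pixel's right-hand neighbour is 1 position away, but the pixel below it is 28 positions away.

```python
HEIGHT, WIDTH, CHANNELS = 28, 28, 1


def flat_to_hwc(i):
    """(row, col, channel) of element i of a 784-vector reshaped to [28, 28, 1].

    Row-major order, as in tf.reshape: channel varies fastest, then column,
    then row.
    """
    row, rest = divmod(i, WIDTH * CHANNELS)
    col, ch = divmod(rest, CHANNELS)
    return row, col, ch


def hwc_to_flat(row, col, ch):
    """Inverse mapping: position in the flat vector."""
    return (row * WIDTH + col) * CHANNELS + ch


print(hwc_to_flat(10, 5, 0), hwc_to_flat(10, 6, 0), hwc_to_flat(11, 5, 0))  # 285 286 313
```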

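Convolution, in plain terms: layer by layer the image gets smaller and thicker (smaller width and height, more channels).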

A 5*5 convolution kernel is used.

VALID padding: the image shrinks after convolution, 28 * 28 -> (28 - 5 + 1) * (28 - 5 + 1) = 24 * 24.

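SAME padding: the border of the image is padded with zeros during convolution, so (with stride 1) the output has the same width and height as the input.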
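Both padding modes follow the output-size rules documented for TensorFlow's conv and pooling ops: VALID gives ceil((n - k + 1) / s) and SAME gives ceil(n / s), with SAME adding max((out - 1) * s + k - n, 0) zeros in total, the odd one going to the bottom/right. A small sketch of those rules (function names are hypothetical):

```python
import math


def conv_output_size(n, k, stride, padding):
    """Spatial output size of a TF-style conv/pool along one dimension."""
    if padding == "VALID":
        return math.ceil((n - k + 1) / stride)
    if padding == "SAME":
        return math.ceil(n / stride)
    raise ValueError(f"unknown padding: {padding}")


def same_padding(n, k, stride):
    """Zeros added (before, after) along one dimension for SAME padding."""
    out = math.ceil(n / stride)
    total = max((out - 1) * stride + k - n, 0)
    return total // 2, total - total // 2


print(conv_output_size(28, 5, 1, "VALID"))  # 24
print(conv_output_size(28, 5, 1, "SAME"))   # 28
print(same_padding(28, 5, 1))               # (2, 2): two zero rows/columns on each side
```

So a 5*5 kernel with SAME padding pads a 28*28 image to 32*32, and 32 - 5 + 1 = 28 brings it back to the original size.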

channel: the number of channels (the depth).

Pooling, in plain terms: it halves the image's width and height, reducing dimensionality.

Input image 28*28*1 -> (convolution, VALID) 24 * 24 * 20 -> (max pooling) 12 * 12 * 20 -> .......
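The full chain of shapes can be traced with the same output-size rules, using the layer settings from the TF1 code (5*5 VALID convolutions with 20 and 40 kernels, 2x2 SAME max pooling with stride 2):

```python
import math


def out_size(n, k, stride, padding):
    # TF output-size rule: VALID -> ceil((n - k + 1) / s), SAME -> ceil(n / s)
    if padding == "VALID":
        return math.ceil((n - k + 1) / stride)
    return math.ceil(n / stride)


# (layer name, kernel size, stride, padding, output channels or None to keep depth)
layers = [
    ("conv1", 5, 1, "VALID", 20),
    ("pool1", 2, 2, "SAME", None),
    ("conv2", 5, 1, "VALID", 40),
    ("pool2", 2, 2, "SAME", None),
]

h, w, c = 28, 28, 1
shapes = [("input", (h, w, c))]
for name, k, s, pad, out_c in layers:
    h, w = out_size(h, k, s, pad), out_size(w, k, s, pad)
    c = out_c if out_c is not None else c   # pooling keeps the channel count
    shapes.append((name, (h, w, c)))

for name, (sh, sw, sc) in shapes:
    print(f"{name:6s} {sh} x {sw} x {sc}")
print("flattened:", h * w * c)  # flattened: 640
```

This prints 28x28x1, 24x24x20, 12x12x20, 8x8x40 and 4x4x40, and the final volume flattens to 640 values, which is why the first fully connected layer has 4*4*40 inputs.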

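Fully connected layers: the 4*4*40 volume is reshaped into a 1 * 640 vector (in plain terms, the 3D volume is flattened), fully connected layer 1 maps it to 1 * 1000 -> fully connected layer 2 (1000 -> 1000) -> softmax layer 1000 -> 10 (output).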
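A pure-Python sketch of the flatten and fully connected steps (helper names are hypothetical). Flattening walks the [height][width][channels] volume in row-major order, as `tf.reshape(h_pool2, [-1, 4*4*40])` does for one example. A dense layer is then y = relu(x · W + b), which is what `tf.nn.relu(tf.matmul(x, W) + b)` computes for a batch.

```python
def flatten_hwc(volume):
    """Flatten a [height][width][channels] nested list in row-major order."""
    return [x for row in volume for pixel in row for x in pixel]


def dense(x, weights, bias, relu=True):
    """y = x @ W + b, optionally followed by ReLU; W is given as [n_in][n_out]."""
    y = [sum(x[i] * weights[i][j] for i in range(len(x))) + bias[j]
         for j in range(len(bias))]
    return [max(0.0, v) for v in y] if relu else y


# A 4x4x40 volume shaped like the output of pool2 (values are arbitrary).
volume = [[[float(r + col + ch) for ch in range(40)] for col in range(4)]
          for r in range(4)]
flat = flatten_hwc(volume)
print(len(flat))  # 640

# A tiny 2 -> 3 dense layer: x @ W = [1, -2, 0], plus bias [0, 0, 1], then ReLU.
print(dense([1.0, -2.0], [[1, 0, 2], [0, 1, 1]], [0, 0, 1]))  # [1.0, 0.0, 1.0]
```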

As usual, dropout is applied to the fully connected layers to reduce overfitting.
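The `tf.nn.dropout(h, keep_prob)` calls use inverted dropout: each unit is kept with probability keep_prob and scaled by 1 / keep_prob, otherwise set to 0. The scaling keeps the expected activation unchanged, so at evaluation time the code can simply feed keep_prob = 1. A minimal pure-Python sketch of that rule (the `dropout` helper is hypothetical, not the TensorFlow op):

```python
import random


def dropout(values, keep_prob, rng=random):
    """Inverted dropout: keep each unit with probability keep_prob and
    scale it by 1 / keep_prob; dropped units become 0.0.
    With keep_prob == 1 (evaluation) the input is returned unchanged."""
    if keep_prob == 1:
        return list(values)
    return [v / keep_prob if rng.random() < keep_prob else 0.0 for v in values]


rng = random.Random(0)
print(dropout([1.0] * 10, 0.5, rng))  # each entry is 0.0 or 2.0
```

Because kept units are doubled when keep_prob = 0.5, the average output over many units stays close to the average input.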

```python
import tensorflow as tf  # TensorFlow 1.x API
import numpy as np
from tensorflow.examples.tutorials.mnist import input_data

# read_data_sets already scales the pixels to [0, 1]
mnist = input_data.read_data_sets('MNIST_data', one_hot=True)


# Compute accuracy
def compute_accuracy(v_xs, v_ys):
    # Predict with dropout disabled (keep_prob = 1)
    y_pre = sess.run(prediction, feed_dict={xs: v_xs, keep_prob: 1})
    # Compare the predicted classes with the true classes
    # (done in NumPy so no new graph ops are created on every call)
    correct_prediction = np.equal(np.argmax(y_pre, 1), np.argmax(v_ys, 1))
    return np.mean(correct_prediction.astype(np.float32))


def weight_variable(shape):
    initial = tf.truncated_normal(shape, stddev=0.1)  # small random initial weights
    return tf.Variable(initial)


def bias_variable(shape):
    initial = tf.constant(0.1, shape=shape)
    return tf.Variable(initial)


def conv2d(x, W):
    # strides = [1, x_movement, y_movement, 1]
    # padding: SAME pads with zeros; VALID only convolves inside the image, so the output is smaller
    return tf.nn.conv2d(x, W, strides=[1, 1, 1, 1], padding='VALID')


# Downsample the image
def max_pool_2x2(x):
    return tf.nn.max_pool(x, ksize=[1, 2, 2, 1], strides=[1, 2, 2, 1], padding='SAME')


# Placeholders let us feed in part of the data at a time (None = any batch size)
xs = tf.placeholder(tf.float32, [None, 784])  # 28*28; no /255 needed, pixels are already in [0, 1]
ys = tf.placeholder(tf.float32, [None, 10])
keep_prob = tf.placeholder(tf.float32)

# -1: infer the batch dimension; 1: the image is grayscale, i.e. a single feature map
x_image = tf.reshape(xs, [-1, 28, 28, 1])

# conv1 + pool1
W_conv1 = weight_variable([5, 5, 1, 20])  # 5*5 kernel, 1 input channel, 20 output channels
b_conv1 = bias_variable([20])
h_conv1 = tf.nn.relu(conv2d(x_image, W_conv1) + b_conv1)  # ReLU; output size 24*24*20
h_pool1 = max_pool_2x2(h_conv1)  # output size 12*12*20

# conv2 + pool2
W_conv2 = weight_variable([5, 5, 20, 40])  # 5*5 kernel, 20 input channels, 40 output channels
b_conv2 = bias_variable([40])
h_conv2 = tf.nn.relu(conv2d(h_pool1, W_conv2) + b_conv2)  # ReLU; output size 8*8*40
h_pool2 = max_pool_2x2(h_conv2)  # output size 4*4*40

# fully connection1
W_fc1 = weight_variable([4*4*40, 1000])
b_fc1 = bias_variable([1000])
h_pool2_flat = tf.reshape(h_pool2, [-1, 4*4*40])  # flatten to a 640-element vector
h_fc1 = tf.nn.relu(tf.matmul(h_pool2_flat, W_fc1) + b_fc1)
h_fc1_drop = tf.nn.dropout(h_fc1, keep_prob)

# fully connection2
W_fc2 = weight_variable([1000, 1000])
b_fc2 = bias_variable([1000])
h_fc2 = tf.nn.relu(tf.matmul(h_fc1_drop, W_fc2) + b_fc2)
h_fc2_drop = tf.nn.dropout(h_fc2, keep_prob)

# softmax layer
W_soft = weight_variable([1000, 10])
b_soft = bias_variable([10])
logits = tf.matmul(h_fc2_drop, W_soft) + b_soft
prediction = tf.nn.softmax(logits)  # softmax activation on the output layer
# prediction = add_layer(xs, 784, 10, activation_function=tf.nn.softmax)  # single-layer model from the NN chapter

# Cross-entropy loss, computed from the logits: the hand-written
# -reduce_sum(ys * tf.log(prediction)) gives NaN once a probability underflows to 0
cross_entropy = tf.reduce_mean(
    tf.nn.softmax_cross_entropy_with_logits_v2(labels=ys, logits=logits))
train_step = tf.train.GradientDescentOptimizer(0.03).minimize(cross_entropy)

init = tf.global_variables_initializer()  # replaces the deprecated tf.initialize_all_variables()
sess = tf.Session()
sess.run(init)

iterator_times = -1
while mnist.train.epochs_completed < 40:  # train for 40 epochs
    # training
    batch_xs, batch_ys = mnist.train.next_batch(100)
    sess.run(train_step, feed_dict={xs: batch_xs, ys: batch_ys, keep_prob: 0.5})
    if iterator_times != mnist.train.epochs_completed:
        # to see the step improvement: evaluate once per epoch on the first 1000 test images
        iterator_times += 1
        print(compute_accuracy(
            mnist.test.images[:1000], mnist.test.labels[:1000]))
```

After 40 epochs of training, the highest accuracy reached 99.2% (measured on the first 1000 test images).
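A note on the loss: writing cross-entropy by hand as `-reduce_sum(ys * tf.log(prediction))` breaks once a softmax probability underflows to exactly 0. Then log(0) = -inf, and 0 * -inf = NaN even for the classes the one-hot label ignores. Computing the loss from the logits with the log-sum-exp identity (which is why TensorFlow offers `tf.nn.softmax_cross_entropy_with_logits_v2`) avoids this. The pure-Python sketch below compares the two forms; the helper names are hypothetical, and it assumes the labels sum to 1, as one-hot labels do.

```python
import math


def softmax(logits):
    m = max(logits)                        # subtract the max so exp() cannot overflow
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def naive_cross_entropy(logits, one_hot):
    """Mirrors -reduce_sum(ys * tf.log(softmax(logits))).
    log(0) is taken as -inf (as tf.log returns), and 0 * -inf is NaN."""
    probs = softmax(logits)
    logs = [math.log(p) if p > 0 else -math.inf for p in probs]
    return -sum(y * l for y, l in zip(one_hot, logs))


def stable_cross_entropy(logits, one_hot):
    """-sum(y * log_softmax(z)) = logsumexp(z) - sum(y * z), assuming sum(y) == 1."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - sum(y * z for y, z in zip(one_hot, logits))


# A confident wrong prediction: the true class 0 gets probability ~e^-1000,
# which underflows to 0.0 in double precision.
logits = [0.0, 1000.0, -1000.0]
label = [1.0, 0.0, 0.0]
print(naive_cross_entropy(logits, label))   # nan
print(stable_cross_entropy(logits, label))  # 1000.0
```

For ordinary logits the two functions agree; only the stable form survives extreme values, and a single NaN in the loss would propagate into every weight through the gradients.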

The TensorFlow 2.0 version of the code:

from tensorflow.keras.datasets import mnist

import tensorflow as tf

from tensorflow.keras import layers

(x_train, y_train), (x_test, y_test) = mnist.load_data()

x_train = x_train.reshape(-1, 28, 28, 1).astype('float32') / 255.

x_test = x_test.reshape(-1, 28, 28, 1).astype('float32') / 255.

print(x_train.shape, y_train.shape)

print(x_test.shape, y_test.shape)

keep_prob = 0.5

inputs = tf.keras.Input(shape=[28,28,1], name='img')

print(inputs.shape)

conv1 = layers.Conv2D(20, 3, activation='relu',padding='valid')(inputs)

poo11= layers.MaxPool2D(2, strides=2)(conv1)

conv2 = layers.Conv2D(40, 3, activation='relu',padding='valid')(poo11)

poo12= layers.MaxPool2D(2, strides=2)(conv2)

flat = layers.Flatten()(poo12)

fc1 = layers.Dense(1000, activation='relu')(flat)

drop1 = layers.Dropout(keep_prob)(fc1)

fc2 = layers.Dense(1000, activation='relu')(drop1)

drop2 = layers.Dropout(keep_prob)(fc2)

pred = layers.Dense(10, activation='softmax')(drop2)

model = tf.keras.Model(inputs=inputs, outputs=pred)

model.compile(optimizer=tf.keras.optimizers.SGD(lr=0.3),

loss='sparse_categorical_crossentropy',#目标张量是整形张量

metrics=['accuracy'])

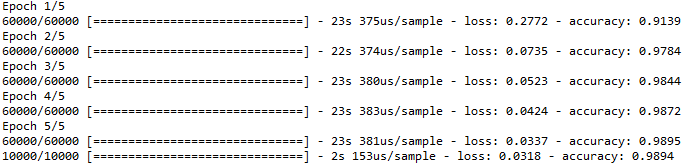

model.fit(x_train, y_train, batch_size=100, epochs=5)

test_score = model.evaluate(x_test, y_test)训练结果如下:
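The TF2 model is compiled with `sparse_categorical_crossentropy` because `mnist.load_data()` returns integer class labels (0 to 9) rather than the one-hot vectors used in the TF1 version. For a single example the two losses are the same number, -log of the probability assigned to the true class. The pure-Python sketch below (hypothetical helper names, not the Keras implementations) illustrates this.

```python
import math


def categorical_crossentropy(one_hot, probs):
    # -sum(y * log p); terms with y == 0 are skipped, so log(0) is never taken
    return -sum(y * math.log(p) for y, p in zip(one_hot, probs) if y)


def sparse_categorical_crossentropy(label, probs):
    # The label is an integer class index, like y_train from mnist.load_data()
    return -math.log(probs[label])


probs = [0.1, 0.7, 0.2]
label = 1
one_hot = [1.0 if i == label else 0.0 for i in range(len(probs))]
print(sparse_categorical_crossentropy(label, probs))
print(categorical_crossentropy(one_hot, probs))
```

Both lines print -log(0.7); the sparse form simply skips building the one-hot vector.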
