Spark's running time does not grow linearly with data size; it grows only slowly as the data gets larger.

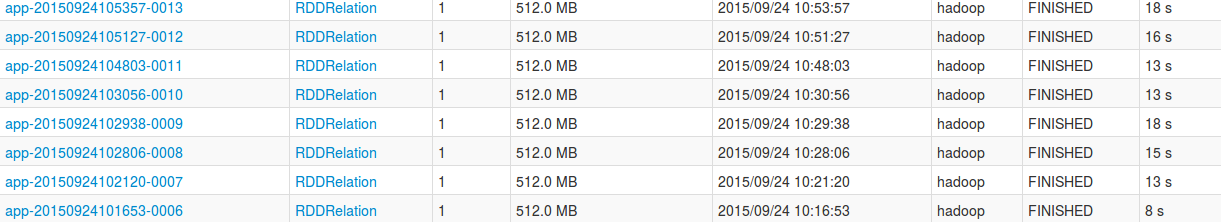

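Even when the data size differs by an order of magnitude, the running time differs by only a few seconds. The timings come from running the program below several times, with 100, 1000, and 10000 records respectively.

However, once the data exceeds a certain size, parallelizing it and registering it as a table still work, but executing the SQL query fails: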

Exception in thread "main" org.apache.spark.SparkException: Job aborted due to stage failure: Serialized task 0:0 was 11446277 bytes, which exceeds max allowed: spark.akka.frameSize (10485760 bytes) - reserved (204800 bytes). Consider increasing spark.akka.frameSize or using broadcast variables for large values.

As the message suggests, large data can be handled either by increasing spark.akka.frameSize (the Akka frame size) or by using broadcast variables.
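The numbers in the error message can be checked with a little arithmetic. The sketch below is plain Scala with no Spark dependency; the object name FrameSizeCheck and its helpers are hypothetical, and treating the limit as exclusive is an assumption. Only the three byte counts come from the message itself.

```scala
// Figures copied from the exception message; everything else is illustrative.
object FrameSizeCheck {
  val frameSizeBytes: Long = 10485760L      // spark.akka.frameSize, i.e. 10 MB
  val reservedBytes: Long = 204800L         // "reserved" in the message
  val serializedTaskBytes: Long = 11446277L // size of serialized task 0:0

  // Largest payload the message allows: frame size minus the reserved part.
  val maxAllowedBytes: Long = frameSizeBytes - reservedBytes

  // Assumption: a task must be strictly smaller than the limit to be sent.
  def fits(taskBytes: Long): Boolean = taskBytes < maxAllowedBytes

  // Hypothetical helper: smallest frame size, in whole MB, that leaves room
  // for the task plus the reserved bytes.
  def minFrameSizeMb(taskBytes: Long): Long = {
    val mb = 1024L * 1024L
    (taskBytes + reservedBytes + mb - 1) / mb
  }

  def main(args: Array[String]): Unit = {
    println(s"max allowed: $maxAllowedBytes bytes")                         // 10280960
    println(s"task fits: ${fits(serializedTaskBytes)}")                     // false
    println(s"overshoot: ${serializedTaskBytes - maxAllowedBytes} bytes")   // 1165317
    println(s"min frame size: ${minFrameSizeMb(serializedTaskBytes)} MB")   // 12
  }
}
```

So this task overshoots the limit by a little over 1 MB, and a frame size of at least 12 MB would be needed for a task of this size.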

    package sql

    /**
     * Created by hadoop on 15-9-24.
     */

    /*
     * Licensed to the Apache Software Foundation (ASF) under one or more
     * contributor license agreements. See the NOTICE file distributed with
     * this work for additional information regarding copyright ownership.
     * The ASF licenses this file to You under the Apache License, Version 2.0
     * (the "License"); you may not use this file except in compliance with
     * the License. You may obtain a copy of the License at
     *
     * http://www.apache.org/licenses/LICENSE-2.0
     *
     * Unless required by applicable law or agreed to in writing, software
     * distributed under the License is distributed on an "AS IS" BASIS,
     * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
     * See the License for the specific language governing permissions and
     * limitations under the License.
     */

    import org.apache.spark.{SparkConf, SparkContext}
    import org.apache.spark.sql.SQLContext
    import org.apache.spark.sql.functions._

    // One method for defining the schema of an RDD is to make a case class with the desired column
    // names and types.
    case class Record(key: Int, value: String)

    object RDDRelation {
      def main(args: Array[String]): Unit = {
        val sparkConf = new SparkConf().setAppName("RDDRelation")
        // Try running on the cluster; this overrides the VM option -Dspark.master=local.
        sparkConf.setMaster("spark://moon:7077")
        val sc = new SparkContext(sparkConf)
        // Added: ship the application jar to the executors.
        sc.addJar("/usr/local/spark/IdeaProjects/out/artifacts/sparkPi/RDDRelation.jar")
        val sqlContext = new SQLContext(sc)

        // Importing the SQL context gives access to all the SQL functions and implicit conversions.
        import sqlContext.implicits._

        // Rows look like [1,val_1].
        val df = sc.parallelize((1 to 1000000).map(i => Record(i, s"val_$i"))).toDF()

        // Any RDD containing case classes can be registered as a table. The schema of the table is
        // automatically inferred using scala reflection.
        df.registerTempTable("records")

        // Once tables have been registered, you can run SQL queries over them.
        println("Result of SELECT *:")
        sqlContext.sql("SELECT * FROM records").collect().foreach(println)

        // Aggregation queries are also supported.
        val count = sqlContext.sql("SELECT COUNT(*) FROM records").collect().head.getLong(0)
        println(s"COUNT(*): $count")

        // The results of SQL queries are themselves RDDs and support all normal RDD functions. The
        // items in the RDD are of type Row, which allows you to access each column by ordinal.
        val rddFromSql = sqlContext.sql("SELECT key, value FROM records WHERE key < 10")

        println("Result of RDD.map:")
        rddFromSql.map(row => s"Key: ${row(0)}, Value: ${row(1)}").collect().foreach(println)

        // Queries can also be written using a LINQ-like Scala DSL.
        df.where($"key" === 1).orderBy($"value".asc).select($"key").collect().foreach(println)

        /*
        // Write out an RDD as a parquet file.
        df.write.parquet("pair.parquet")

        // Read in parquet file. Parquet files are self-describing so the schema is preserved.
        val parquetFile = sqlContext.read.parquet("pair.parquet")

        // Queries can be run using the DSL on parquet files just like the original RDD.
        parquetFile.where($"key" === 1).select($"value".as("a")).collect().foreach(println)

        // These files can also be registered as tables.
        parquetFile.registerTempTable("parquetFile")
        sqlContext.sql("SELECT * FROM parquetFile").collect().foreach(println)
        */

        sc.stop()
      }
    }

/usr/local/jdk1.7/bin/java -Dspark.master=local -Didea.launcher.port=7534 -Didea.launcher.bin.path=/usr/local/spark/idea-IC-141.1532.4/bin -Dfile.encoding=UTF-8 -classpath /usr/local/jdk1.7/jre/lib/management-agent.jar:/usr/local/jdk1.7/jre/lib/jsse.jar:/usr/local/jdk1.7/jre/lib/plugin.jar:/usr/local/jdk1.7/jre/lib/jfxrt.jar:/usr/local/jdk1.7/jre/lib/javaws.jar:/usr/local/jdk1.7/jre/lib/charsets.jar:/usr/local/jdk1.7/jre/lib/jfr.jar:/usr/local/jdk1.7/jre/lib/jce.jar:/usr/local/jdk1.7/jre/lib/rt.jar:/usr/local/jdk1.7/jre/lib/deploy.jar:/usr/local/jdk1.7/jre/lib/resources.jar:/usr/local/jdk1.7/jre/lib/ext/zipfs.jar:/usr/local/jdk1.7/jre/lib/ext/sunjce_provider.jar:/usr/local/jdk1.7/jre/lib/ext/sunpkcs11.jar:/usr/local/jdk1.7/jre/lib/ext/dnsns.jar:/usr/local/jdk1.7/jre/lib/ext/localedata.jar:/usr/local/jdk1.7/jre/lib/ext/sunec.jar:/usr/local/spark/IdeaProjects/target/scala-2.10/classes:/home/hadoop/.sbt/boot/scala-2.10.4/lib/scala-library.jar:/usr/local/spark/spark-1.4.1-bin-hadoop2.4/lib/spark-assembly-1.4.1-hadoop2.4.0.jar:/usr/local/spark/idea-IC-141.1532.4/lib/idea_rt.jar com.intellij.rt.execution.application.AppMain sql.RDDRelation

Using Spark's default log4j profile: org/apache/spark/log4j-defaults.properties

15/09/24 10:59:13 INFO SparkContext: Running Spark version 1.4.1

15/09/24 10:59:14 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

15/09/24 10:59:14 WARN Utils: Your hostname, moon resolves to a loopback address: 127.0.1.1; using 172.18.15.5 instead (on interface wlan0)

15/09/24 10:59:14 WARN Utils: Set SPARK_LOCAL_IP if you need to bind to another address

15/09/24 10:59:14 INFO SecurityManager: Changing view acls to: hadoop

15/09/24 10:59:14 INFO SecurityManager: Changing modify acls to: hadoop

15/09/24 10:59:14 INFO SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(hadoop); users with modify permissions: Set(hadoop)

15/09/24 10:59:15 INFO Slf4jLogger: Slf4jLogger started

15/09/24 10:59:15 INFO Remoting: Starting remoting

15/09/24 10:59:15 INFO Remoting: Remoting started; listening on addresses :[akka.tcp://sparkDriver@172.18.15.5:54134]

15/09/24 10:59:15 INFO Utils: Successfully started service 'sparkDriver' on port 54134.

15/09/24 10:59:15 INFO SparkEnv: Registering MapOutputTracker

15/09/24 10:59:15 INFO SparkEnv: Registering BlockManagerMaster

15/09/24 10:59:15 INFO DiskBlockManager: Created local directory at /tmp/spark-95371dfd-fa49-4da5-bd94-de9e2238ad11/blockmgr-4ca8e8dd-153d-486b-a3fe-2fe6f17eb121

15/09/24 10:59:15 INFO MemoryStore: MemoryStore started with capacity 710.4 MB

15/09/24 10:59:15 INFO HttpFileServer: HTTP File server directory is /tmp/spark-95371dfd-fa49-4da5-bd94-de9e2238ad11/httpd-1b01e978-00b0-4560-9b37-1e330098b014

15/09/24 10:59:15 INFO HttpServer: Starting HTTP Server

15/09/24 10:59:15 INFO Utils: Successfully started service 'HTTP file server' on port 41997.

15/09/24 10:59:15 INFO SparkEnv: Registering OutputCommitCoordinator

15/09/24 10:59:16 INFO Utils: Successfully started service 'SparkUI' on port 4040.

15/09/24 10:59:16 INFO SparkUI: Started SparkUI at http://172.18.15.5:4040

15/09/24 10:59:16 INFO AppClient$ClientActor: Connecting to master akka.tcp://sparkMaster@moon:7077/user/Master...

15/09/24 10:59:16 INFO SparkDeploySchedulerBackend: Connected to Spark cluster with app ID app-20150924105916-0016

15/09/24 10:59:16 INFO AppClient$ClientActor: Executor added: app-20150924105916-0016/0 on worker-20150924091347-172.18.15.5-41804 (172.18.15.5:41804) with 1 cores

15/09/24 10:59:16 INFO SparkDeploySchedulerBackend: Granted executor ID app-20150924105916-0016/0 on hostPort 172.18.15.5:41804 with 1 cores, 512.0 MB RAM

15/09/24 10:59:16 INFO AppClient$ClientActor: Executor updated: app-20150924105916-0016/0 is now LOADING

15/09/24 10:59:16 INFO AppClient$ClientActor: Executor updated: app-20150924105916-0016/0 is now RUNNING

15/09/24 10:59:16 INFO Utils: Successfully started service 'org.apache.spark.network.netty.NettyBlockTransferService' on port 55610.

15/09/24 10:59:16 INFO NettyBlockTransferService: Server created on 55610

15/09/24 10:59:16 INFO BlockManagerMaster: Trying to register BlockManager

15/09/24 10:59:16 INFO BlockManagerMasterEndpoint: Registering block manager 172.18.15.5:55610 with 710.4 MB RAM, BlockManagerId(driver, 172.18.15.5, 55610)

15/09/24 10:59:16 INFO BlockManagerMaster: Registered BlockManager

15/09/24 10:59:16 INFO SparkDeploySchedulerBackend: SchedulerBackend is ready for scheduling beginning after reached minRegisteredResourcesRatio: 0.0

15/09/24 10:59:17 INFO SparkContext: Added JAR /usr/local/spark/IdeaProjects/out/artifacts/sparkPi/RDDRelation.jar at http://172.18.15.5:41997/jars/RDDRelation.jar with timestamp 1443063557092

15/09/24 10:59:19 INFO SparkDeploySchedulerBackend: Registered executor: AkkaRpcEndpointRef(Actor[akka.tcp://sparkExecutor@172.18.15.5:44663/user/Executor#1655787999]) with ID 0

15/09/24 10:59:20 INFO BlockManagerMasterEndpoint: Registering block manager 172.18.15.5:55914 with 265.4 MB RAM, BlockManagerId(0, 172.18.15.5, 55914)

Result of SELECT *:

15/09/24 10:59:20 INFO SparkContext: Starting job: collect at RDDRelation.scala:52

15/09/24 10:59:20 INFO DAGScheduler: Got job 0 (collect at RDDRelation.scala:52) with 2 output partitions (allowLocal=false)

15/09/24 10:59:20 INFO DAGScheduler: Final stage: ResultStage 0(collect at RDDRelation.scala:52)

15/09/24 10:59:20 INFO DAGScheduler: Parents of final stage: List()

15/09/24 10:59:20 INFO DAGScheduler: Missing parents: List()

15/09/24 10:59:20 INFO DAGScheduler: Submitting ResultStage 0 (MapPartitionsRDD[2] at collect at RDDRelation.scala:52), which has no missing parents

15/09/24 10:59:20 INFO MemoryStore: ensureFreeSpace(3040) called with curMem=0, maxMem=744876933

15/09/24 10:59:20 INFO MemoryStore: Block broadcast_0 stored as values in memory (estimated size 3.0 KB, free 710.4 MB)

15/09/24 10:59:20 INFO MemoryStore: ensureFreeSpace(1816) called with curMem=3040, maxMem=744876933

15/09/24 10:59:20 INFO MemoryStore: Block broadcast_0_piece0 stored as bytes in memory (estimated size 1816.0 B, free 710.4 MB)

15/09/24 10:59:20 INFO BlockManagerInfo: Added broadcast_0_piece0 in memory on 172.18.15.5:55610 (size: 1816.0 B, free: 710.4 MB)

15/09/24 10:59:20 INFO SparkContext: Created broadcast 0 from broadcast at DAGScheduler.scala:874

15/09/24 10:59:20 INFO DAGScheduler: Submitting 2 missing tasks from ResultStage 0 (MapPartitionsRDD[2] at collect at RDDRelation.scala:52)

15/09/24 10:59:20 INFO TaskSchedulerImpl: Adding task set 0.0 with 2 tasks

15/09/24 10:59:21 WARN TaskSetManager: Stage 0 contains a task of very large size (11123 KB). The maximum recommended task size is 100 KB.

15/09/24 10:59:21 INFO TaskSetManager: Starting task 0.0 in stage 0.0 (TID 0, 172.18.15.5, PROCESS_LOCAL, 11390355 bytes)

15/09/24 10:59:21 INFO TaskSchedulerImpl: Cancelling stage 0

15/09/24 10:59:21 INFO TaskSchedulerImpl: Stage 0 was cancelled

15/09/24 10:59:21 INFO DAGScheduler: ResultStage 0 (collect at RDDRelation.scala:52) failed in 0.682 s

15/09/24 10:59:21 INFO DAGScheduler: Job 0 failed: collect at RDDRelation.scala:52, took 0.844378 s

Exception in thread "main" org.apache.spark.SparkException: Job aborted due to stage failure: Serialized task 0:0 was 11446277 bytes, which exceeds max allowed: spark.akka.frameSize (10485760 bytes) - reserved (204800 bytes). Consider increasing spark.akka.frameSize or using broadcast variables for large values.

at org.apache.spark.scheduler.DAGScheduler.org$apache$spark$scheduler$DAGScheduler$$failJobAndIndependentStages(DAGScheduler.scala:1273)

at org.apache.spark.scheduler.DAGScheduler$$anonfun$abortStage$1.apply(DAGScheduler.scala:1264)

at org.apache.spark.scheduler.DAGScheduler$$anonfun$abortStage$1.apply(DAGScheduler.scala:1263)

at scala.collection.mutable.ResizableArray$class.foreach(ResizableArray.scala:59)

at scala.collection.mutable.ArrayBuffer.foreach(ArrayBuffer.scala:47)

at org.apache.spark.scheduler.DAGScheduler.abortStage(DAGScheduler.scala:1263)

at org.apache.spark.scheduler.DAGScheduler$$anonfun$handleTaskSetFailed$1.apply(DAGScheduler.scala:730)

at org.apache.spark.scheduler.DAGScheduler$$anonfun$handleTaskSetFailed$1.apply(DAGScheduler.scala:730)

at scala.Option.foreach(Option.scala:236)

at org.apache.spark.scheduler.DAGScheduler.handleTaskSetFailed(DAGScheduler.scala:730)

at org.apache.spark.scheduler.DAGSchedulerEventProcessLoop.onReceive(DAGScheduler.scala:1457)

at org.apache.spark.scheduler.DAGSchedulerEventProcessLoop.onReceive(DAGScheduler.scala:1418)

at org.apache.spark.util.EventLoop$$anon$1.run(EventLoop.scala:48)

15/09/24 10:59:21 INFO SparkContext: Invoking stop() from shutdown hook

15/09/24 10:59:21 INFO SparkUI: Stopped Spark web UI at http://172.18.15.5:4040

15/09/24 10:59:21 INFO DAGScheduler: Stopping DAGScheduler

15/09/24 10:59:21 INFO SparkDeploySchedulerBackend: Shutting down all executors

15/09/24 10:59:21 INFO SparkDeploySchedulerBackend: Asking each executor to shut down

15/09/24 10:59:22 WARN ReliableDeliverySupervisor: Association with remote system [akka.tcp://sparkExecutor@172.18.15.5:44663] has failed, address is now gated for [5000] ms. Reason is: [Disassociated].

15/09/24 10:59:22 INFO MapOutputTrackerMasterEndpoint: MapOutputTrackerMasterEndpoint stopped!

15/09/24 10:59:22 INFO Utils: path = /tmp/spark-95371dfd-fa49-4da5-bd94-de9e2238ad11/blockmgr-4ca8e8dd-153d-486b-a3fe-2fe6f17eb121, already present as root for deletion.

15/09/24 10:59:22 INFO MemoryStore: MemoryStore cleared

15/09/24 10:59:22 INFO BlockManager: BlockManager stopped

15/09/24 10:59:22 INFO BlockManagerMaster: BlockManagerMaster stopped

15/09/24 10:59:22 INFO SparkContext: Successfully stopped SparkContext

15/09/24 10:59:22 INFO OutputCommitCoordinator$OutputCommitCoordinatorEndpoint: OutputCommitCoordinator stopped!

15/09/24 10:59:22 INFO Utils: Shutdown hook called

15/09/24 10:59:22 INFO Utils: Deleting directory /tmp/spark-95371dfd-fa49-4da5-bd94-de9e2238ad11

Process finished with exit code 1
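A possible follow-up, which was not part of the run above: the log shows the job split into only 2 tasks of about 11 MB each, so the parallelized collection itself is what makes each serialized task too large. The sketch below, written against the Spark 1.x API seen in the log, combines two adjustments: raising spark.akka.frameSize (given in MB in Spark 1.x) and passing an explicit slice count to parallelize so that each task carries fewer records. The object name RDDRelationSmallTasks, the value 64 for the frame size, and the value 256 for the slice count are illustrative assumptions, and the master URL is expected to come from spark-submit or -Dspark.master rather than from the code.

```scala
import org.apache.spark.{SparkConf, SparkContext}
import org.apache.spark.sql.SQLContext

// Same shape as the Record case class used in the original program.
case class Record(key: Int, value: String)

object RDDRelationSmallTasks {
  // Illustrative values (assumptions), not taken from the original post.
  val frameSizeMb = "64" // spark.akka.frameSize is expressed in MB in Spark 1.x
  val numSlices = 256    // more slices means fewer records per serialized task

  // Build the configuration; the master is left to spark-submit / -Dspark.master.
  def buildConf(): SparkConf =
    new SparkConf()
      .setAppName("RDDRelationSmallTasks")
      .set("spark.akka.frameSize", frameSizeMb)

  def main(args: Array[String]): Unit = {
    val sc = new SparkContext(buildConf())
    val sqlContext = new SQLContext(sc)
    import sqlContext.implicits._

    // The second argument of parallelize sets the number of slices explicitly.
    val records = (1 to 1000000).map(i => Record(i, s"val_$i"))
    val df = sc.parallelize(records, numSlices).toDF()
    df.registerTempTable("records")

    // An aggregate keeps the driver from printing a million rows.
    val count = sqlContext.sql("SELECT COUNT(*) FROM records").collect().head.getLong(0)
    println(s"COUNT(*): $count")

    sc.stop()
  }
}
```

Either change alone may be enough. Broadcast variables, the other suggestion in the message, target large values captured by task closures and are not shown here.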
