import numpy as np

import tensorflow as tf

import matplotlib.pyplot as plt

from tensorflow.examples.tutorials.mnist import input_data

from tensorflow.models.rnn import rnn, rnn_cell

mnist = input_data.read_data_sets('data/', one_hot=True)

train_img = mnist.train.images

train_lbl = mnist.train.labels

test_img = mnist.test.images

test_lbl = mnist.test.labels

dim_input = 28

dim_hidden = 128

dim_output = 10

nsteps = 28

weights = {
    'i2h': tf.Variable(tf.random_normal([dim_input, dim_hidden], stddev=0.1)),
    'fc': tf.Variable(tf.random_normal([dim_hidden, dim_output], stddev=0.1))}

bias = {
    'i2h': tf.Variable(tf.random_normal([dim_hidden], stddev=0.1)),
    'fc': tf.Variable(tf.random_normal([dim_output], stddev=0.1))}

def _rnn(_x, _init_state, _w, _b, _nstep):
    # (batch_size, nsteps, dim_input) -> (nsteps, batch_size, dim_input),
    # so that each time step's input is (batch_size, dim_input)
    _x = tf.transpose(_x, [1, 0, 2])
    # -> (nsteps*batch_size, dim_input)
    _x = tf.reshape(_x, [-1, dim_input])
    # Optional input-to-hidden embedding (disabled; raw rows go straight to the LSTM)
    # _h = tf.matmul(_x, _w['i2h']) + _b['i2h']
    _h = _x
    # Split into a list of _nstep tensors, each (batch_size, dim_input)
    _h_split = tf.split(0, _nstep, _h)
    with tf.variable_scope('RNN'):
        lstm_cell = rnn_cell.BasicLSTMCell(dim_hidden, forget_bias=1.0)
        _lstm_o, _lstm_s = rnn.rnn(lstm_cell, _h_split, initial_state=_init_state)
    # Classify from the LSTM output at the last time step
    _o = tf.matmul(_lstm_o[-1], _w['fc']) + _b['fc']
    return {
        'x': _x, 'h': _h, 'hsplit': _h_split,
        'lstm_o': _lstm_o, 'lstm_s': _lstm_s, 'o': _o}
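The transpose, reshape, and split steps in `_rnn` can be hard to follow from shapes alone. The following is a minimal NumPy sketch of the same reshaping, not the TensorFlow code itself: the batch size of 4 and the `np.arange` data are illustrative assumptions, and `np.split` stands in for `tf.split`.

```python
import numpy as np

batch_size, nsteps, dim_input = 4, 28, 28  # small illustrative batch (assumption)

# Fake batch: each image is viewed as 28 time steps of 28 pixels each.
x = np.arange(batch_size * nsteps * dim_input, dtype=np.float32)
x = x.reshape(batch_size, nsteps, dim_input)

# (batch_size, nsteps, dim_input) -> (nsteps, batch_size, dim_input)
x_t = np.transpose(x, (1, 0, 2))
# -> (nsteps * batch_size, dim_input)
x_flat = x_t.reshape(-1, dim_input)
# -> list of nsteps arrays, each (batch_size, dim_input)
steps = np.split(x_flat, nsteps, axis=0)

print(len(steps), steps[0].shape)
# Step t should hold row t of every image in the batch.
print(np.array_equal(steps[3], x[:, 3, :]))
```

Transposing before flattening is what makes each chunk of the split correspond to one time step across the whole batch, rather than to one image.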

x = tf.placeholder(tf.float32, [None, nsteps, dim_input], name='input')

y = tf.placeholder(tf.float32, [None, dim_output], name='output')

init_state = tf.placeholder(tf.float32, [None, 2*dim_hidden])

rnn_mnist = _rnn(x, init_state, weights, bias, nsteps)

score = rnn_mnist['o']

state_final = rnn_mnist['lstm_s']

loss = tf.reduce_mean(tf.nn.softmax_cross_entropy_with_logits(score, y))

lr = 0.001

optimizer = tf.train.AdamOptimizer(lr).minimize(loss)

# optimizer = tf.train.GradientDescentOptimizer(lr).minimize(loss)

pred = tf.equal(tf.argmax(score, 1), tf.argmax(y,1))

acc = tf.reduce_mean(tf.cast(pred, tf.float32))
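For reference, the loss and accuracy defined above can be reproduced outside the graph. This is a NumPy sketch of softmax cross-entropy and argmax accuracy on one-hot labels; the function names `softmax_cross_entropy` and `accuracy` and the toy logits are hypothetical, chosen only for illustration.

```python
import numpy as np

def softmax_cross_entropy(logits, onehot):
    # Subtract the row maximum for numerical stability before exponentiating.
    shifted = logits - logits.max(axis=1, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    # Per-example loss: negative log-probability of the true class.
    return -(onehot * log_probs).sum(axis=1)

def accuracy(logits, onehot):
    # Fraction of rows whose highest-scoring class matches the label.
    return np.mean(np.argmax(logits, axis=1) == np.argmax(onehot, axis=1))

# Toy scores for 3 examples and 3 classes (made-up values).
logits = np.array([[2.0, 0.5, 0.1],
                   [0.2, 0.1, 3.0],
                   [1.0, 1.5, 0.3]])
labels = np.array([[1, 0, 0],
                   [0, 0, 1],
                   [1, 0, 0]], dtype=np.float64)

mean_loss = softmax_cross_entropy(logits, labels).mean()
acc_value = accuracy(logits, labels)
print(mean_loss, acc_value)
```

The third example is misclassified (its largest score is for class 1, its label is class 0), so two of three predictions are correct.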

init = tf.initialize_all_variables()

epoch = 20

batch_size = 100

snapshot = 5

save_step = 1

saver = tf.train.Saver()

sess = tf.Session()

sess.run(init)

loss_cache = []

acc_cache = []

state = np.zeros([batch_size, 2*dim_hidden])

for ep in xrange(epoch):
    num_batch = mnist.train.num_examples // batch_size
    avg_loss, avg_acc = 0, 0
    for nb in xrange(num_batch):
        batch_x, batch_y = mnist.train.next_batch(batch_size)
        batch_x = batch_x.reshape(batch_size, nsteps, dim_input)
        out = sess.run([optimizer, acc, loss, state_final], feed_dict={x:batch_x, y:batch_y, init_state: state})
        # state = out[3]
        avg_loss += out[2]/num_batch
        avg_acc += out[1]/num_batch
    # Record per-epoch averages for plotting
    loss_cache.append(avg_loss)
    acc_cache.append(avg_acc)
    if ep % snapshot == 0:
        print 'Epoch: %d, loss: %.4f, acc: %.4f'%(ep, avg_loss, acc_cache[-1])

test_img = test_img.reshape(-1, nsteps, dim_input)

# print test_img.shape

# print test_lbl.shape

# print 'test accuracy:' , sess.run(acc, {x:test_img, y:test_lbl, init_state: np.zeros([test_img.shape[0], 2*dim_hidden])})

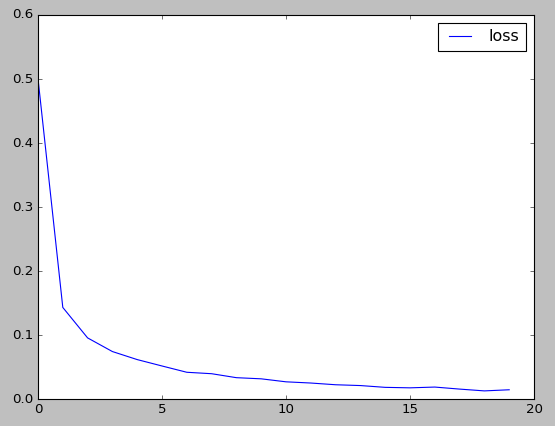

plt.figure(1)

plt.plot(range(len(loss_cache)), loss_cache, 'b-', label='loss')

plt.legend(loc = 'upper right')

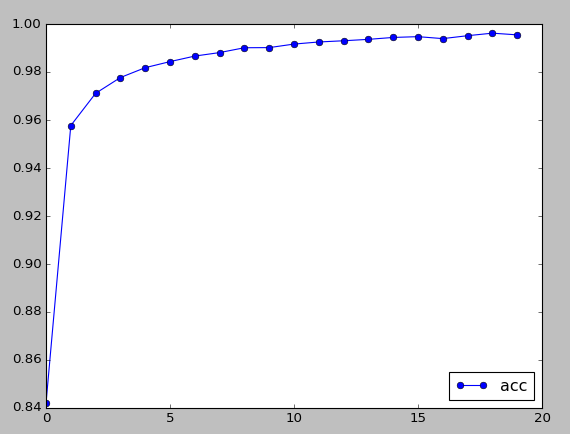

plt.figure(2)

plt.plot(range(len(acc_cache)), acc_cache, 'o-', label='acc')

plt.legend(loc = 'lower right')

plt.show()

Tensorflow: recurrent neural network (mnist basic)
