Week 2: Regular Expressions

http://www.cnblogs.com/moonache/p/5110322.html

Week 3: Networks and Sockets

http://www.cnblogs.com/moonache/p/5112060.html

Week 4: Programs that Surf the Web

http://www.cnblogs.com/moonache/p/5112088.html

BeautifulSoup: http://cuiqingcai.com/1319.html

Official documentation: http://beautifulsoup.readthedocs.io/zh_CN/latest/

Week 5: http://www.cnblogs.com/moonache/p/5112109.html

Week 4 assignments:

Exercise 1. Sum the comment counts found on the page at the following URL:

http://python-data.dr-chuck.net/comments_329805.html

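# Python 2 (raw_input, urllib.urlopen, print statement)
import urllib
from bs4 import BeautifulSoup

url = raw_input("Enter-")
html = urllib.urlopen(url).read()
soup = BeautifulSoup(html, "html.parser")

# Collect every <span> tag; each one holds a single comment count.
tags = soup('span')

total = 0  # named total to avoid shadowing the built-in sum()
for tag in tags:
    total += int(tag.contents[0])
print total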
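For readers without BeautifulSoup installed, the same summing idea can be sketched in Python 3 with only the standard library's `html.parser`. This is a swapped-in technique, not the solution above: the class name `SpanSummer`, the helper `sum_spans`, and the `SAMPLE_HTML` fragment are all hypothetical, and the fragment only assumes that each count sits inside a `<span>`.

```python
from html.parser import HTMLParser


class SpanSummer(HTMLParser):
    """Add up the integer text found inside every <span> element."""

    def __init__(self):
        super().__init__()
        self.in_span = False
        self.total = 0

    def handle_starttag(self, tag, attrs):
        if tag == "span":
            self.in_span = True

    def handle_endtag(self, tag):
        if tag == "span":
            self.in_span = False

    def handle_data(self, data):
        text = data.strip()
        # Only count text that appears between <span> and </span>.
        if self.in_span and text:
            self.total += int(text)


def sum_spans(html):
    """Return the sum of all numbers wrapped in <span> tags."""
    parser = SpanSummer()
    parser.feed(html)
    parser.close()
    return parser.total


# Hypothetical page fragment, not the real assignment data.
SAMPLE_HTML = """
<table>
<tr><td>Ann</td><td><span class="comments">97</span></td></tr>
<tr><td>Bob</td><td><span class="comments">3</span></td></tr>
</table>
"""

if __name__ == "__main__":
    print(sum_spans(SAMPLE_HTML))
```

Running the parser on an inline string like this makes it easy to check the logic before pointing it at a real URL.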

Exercise 2.

In this assignment you will write a Python program that expands on http://www.pythonlearn.com/code/urllinks.py. The program will use urllib to read the HTML from the data files below, extract the href= values from the anchor tags, scan for a tag that is in a particular position relative to the first name in the list, follow that link and repeat the process a number of times and report the last name you find.

We provide two files for this assignment. One is a sample file where we give you the name for your testing and the other is the actual data you need to process for the assignment.

Sample problem: Start at http://python-data.dr-chuck.net/known_by_Fikret.html

Find the link at position 3 (the first name is 1). Follow that link. Repeat this process 4 times. The answer is the last name that you retrieve.

Sequence of names: Fikret Montgomery Mhairade Butchi Anayah

Last name in sequence: Anayah
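The position-and-repeat rule can be tried offline before touching the network. The Python 3 sketch below replaces real pages with a hypothetical dictionary, `PAGES`, that maps each name to the ordered list of names it links to. Only the third entry of each list is taken from the sample sequence above; every other entry is a made-up placeholder, and `follow_links` is an illustrative helper name.

```python
# Hypothetical stand-in for the web: each "page" is its ordered list of links.
# Only the third entry in each list comes from the sample sequence; the other
# names are placeholders invented for this sketch.
PAGES = {
    "Fikret": ["A1", "A2", "Montgomery", "A4"],
    "Montgomery": ["B1", "B2", "Mhairade", "B4"],
    "Mhairade": ["C1", "C2", "Butchi", "C4"],
    "Butchi": ["D1", "D2", "Anayah", "D4"],
}


def follow_links(pages, start, position, count):
    """Follow the link at 1-based `position`, `count` times.

    Returns every name visited, starting with `start`.
    """
    visited = [start]
    current = start
    for _ in range(count):
        # Positions are 1-based in the assignment, list indices are 0-based.
        current = pages[current][position - 1]
        visited.append(current)
    return visited


if __name__ == "__main__":
    names = follow_links(PAGES, "Fikret", position=3, count=4)
    print("Sequence of names:", " ".join(names))
    print("Last name in sequence:", names[-1])
```

The only detail that tends to go wrong is the off-by-one between "position 3" and index 2, which is why the subtraction is isolated on one line.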

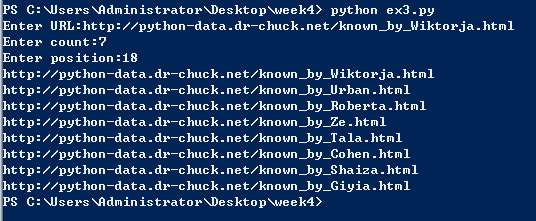

Actual problem: Start at: http://python-data.dr-chuck.net/known_by_Wiktorja.html

Find the link at position 18 (the first name is 1). Follow that link. Repeat this process 7 times. The answer is the last name that you retrieve.

Hint: The first character of the name of the last page that you will load is: G

Approach: this problem calls for iteration, following one link per pass through the loop.

import urllib
from bs4 import BeautifulSoup

url = raw_input("Enter URL:")
count = int(raw_input("Enter count:"))
position = int(raw_input("Enter position:"))

print url
for i in range(count):
    html = urllib.urlopen(url).read()
    soup = BeautifulSoup(html, "html.parser")
    tags = soup('a')
    # position is 1-based, list indices are 0-based
    url = tags[position - 1].get('href')
    print url

The answer is Giyia.

Week 5 assignment:

Extracting Data from XML

In this assignment you will write a Python program somewhat similar to http://www.pythonlearn.com/code/geoxml.py. The program will prompt for a URL, read the XML data from that URL using urllib and then parse and extract the comment counts from the XML data, compute the sum of the numbers in the file.

We provide two files for this assignment. One is a sample file where we give you the sum for your testing and the other is the actual data you need to process for the assignment.

Sample data: http://python-data.dr-chuck.net/comments_42.xml (Sum=2553)

Actual data: http://python-data.dr-chuck.net/comments_329802.xml (Sum ends with 74)

You do not need to save these files to your folder since your program will read the data directly from the URL. Note: Each student will have a distinct data url for the assignment - so only use your own data url for analysis.

Data Format and Approach

The data consists of a number of names and comment counts in XML as follows:

<comment>
  <name>Matthias</name>
  <count>97</count>
</comment>

You are to look through all the <comment> tags, find the <count> values, and sum the numbers. The closest sample code that shows how to parse XML is geoxml.py. But since the nesting of the elements in our data is different than the data we are parsing in that sample code you will have to make real changes to the code.

To make the code a little simpler, you can use an XPath selector string to look through the entire tree of XML for any tag named 'count' with the following line of code:

counts = tree.findall('.//count')

Take a look at the Python ElementTree documentation and look for the supported XPath syntax for details. You could also work from the top of the XML down to the comments node and then loop through the child nodes of the comments node.
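The two approaches mentioned here, the `.//count` XPath search and walking down from the top to the comments node, can be compared on a small inline document. This Python 3 sketch is illustrative only: `SAMPLE_XML` is a hypothetical two-comment document, the root tag name `commentinfo` is an assumption, and the function names are made up for the example.

```python
import xml.etree.ElementTree as ET

# Hypothetical document shaped like the assignment data; the root tag name
# <commentinfo> is an assumption made for this sketch.
SAMPLE_XML = """
<commentinfo>
  <comments>
    <comment><name>Matthias</name><count>97</count></comment>
    <comment><name>Geomer</name><count>97</count></comment>
  </comments>
</commentinfo>
""".strip()


def sum_with_xpath(xml_text):
    """Search the whole tree for <count> elements, at any depth."""
    root = ET.fromstring(xml_text)
    return sum(int(node.text) for node in root.findall(".//count"))


def sum_top_down(xml_text):
    """Walk from the root to <comments>, then loop over its children."""
    root = ET.fromstring(xml_text)
    comments = root.find("comments")
    return sum(int(comment.find("count").text) for comment in comments)


if __name__ == "__main__":
    print(sum_with_xpath(SAMPLE_XML))
    print(sum_top_down(SAMPLE_XML))
```

The XPath form is shorter and does not care how deeply `<count>` is nested, while the top-down form fails loudly if the document structure is not what you expected.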

Sample Execution

$ python solution.py

Enter location: http://python-data.dr-chuck.net/comments_42.xml

Retrieving http://python-data.dr-chuck.net/comments_42.xml

Retrieved 4204 characters

Count: 50

Sum: 2…

Solution 1:

import urllib
import xml.etree.ElementTree as ET

location = raw_input('Enter location:')
print 'Retrieving', location
xml = urllib.urlopen(location).read()
print 'Retrieved %d characters' % len(xml)

# fromstring() returns the root element, so children can be searched from it directly.
commentinfo = ET.fromstring(xml)
comments = commentinfo.findall('comments/comment')

total = 0
for item in comments:
    # item is a <comment> element; the number lives in its <count> child,
    # so int(item.text) would not work here.
    total += int(item.find('count').text)
print total

Solution 2:

import urllib
import xml.etree.ElementTree as ET

location = raw_input('Enter location:')
print 'Retrieving', location
xml = urllib.urlopen(location).read()
print 'Retrieved %d characters' % len(xml)

commentinfo = ET.fromstring(xml)
# './/count' matches every <count> element anywhere below the root.
counts = commentinfo.findall('.//count')

total = 0
for item in counts:
    total += int(item.text)
print total

Week 6 assignments

1. Programming assignment 1: https://pr4e.dr-chuck.com/tsugi/mod/python-data/index.php?PHPSESSID=197f44127af777033ac63a9769a1fab7

Extracting Data from JSON

In this assignment you will write a Python program somewhat similar to http://www.pythonlearn.com/code/json2.py. The program will prompt for a URL, read the JSON data from that URL using urllib and then parse and extract the comment counts from the JSON data, compute the sum of the numbers in the file and enter the sum below:

We provide two files for this assignment. One is a sample file where we give you the sum for your testing and the other is the actual data you need to process for the assignment.

Sample data: http://python-data.dr-chuck.net/comments_42.json (Sum=2553)

Actual data: http://python-data.dr-chuck.net/comments_329806.json (Sum ends with 50)

You do not need to save these files to your folder since your program will read the data directly from the URL. Note: Each student will have a distinct data url for the assignment - so only use your own data url for analysis.

Data Format

The data consists of a number of names and comment counts in JSON as follows:

{
  "comments": [
    {
      "name": "Matthias",
      "count": 97
    },
    {
      "name": "Geomer",
      "count": 97
    }
    …
  ]
}

The closest sample code that shows how to parse JSON and extract a list is json2.py. You might also want to look at geoxml.py to see how to prompt for a URL and retrieve data from a URL.
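As a quick offline check of the parsing step, the data format above can be loaded from an inline string. This is a minimal Python 3 sketch: `SAMPLE_JSON` holds only the two entries shown in the format description, and `summarize` is a hypothetical helper name.

```python
import json

# Only the two entries shown in the data format; the real file has more.
SAMPLE_JSON = """
{
  "comments": [
    {"name": "Matthias", "count": 97},
    {"name": "Geomer", "count": 97}
  ]
}
"""


def summarize(json_text):
    """Return (number of comments, sum of their counts)."""
    info = json.loads(json_text)      # a dict with one key, "comments"
    comments = info["comments"]       # a list of dicts
    return len(comments), sum(item["count"] for item in comments)


if __name__ == "__main__":
    count, total = summarize(SAMPLE_JSON)
    print("Count:", count)
    print("Sum:", total)
```

Because `json.loads` returns ordinary dicts and lists, the counts are already integers and need no `int()` conversion, unlike the XML version.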

Sample Execution

$ python solution.py

Enter location: http://python-data.dr-chuck.net/comments_42.json

Retrieving http://python-data.dr-chuck.net/comments_42.json

Retrieved 2733 characters

Count: 50

Sum: 2…

Solution:

import json
import urllib

url = raw_input("Enter location:")
print 'Retrieving', url
html = urllib.urlopen(url).read()
print 'Retrieved %d characters' % len(html)

info = json.loads(html)
# info is a plain dict, so info.find('comments') raises an AttributeError; index by key instead.
comments = info['comments']
print 'Count:', len(comments)

total = 0
for item in comments:
    total += item['count']
print total

2. Programming assignment 2

Calling a JSON API

In this assignment you will write a Python program somewhat similar to http://www.pythonlearn.com/code/geojson.py. The program will prompt for a location, contact a web service and retrieve JSON for the web service and parse that data, and retrieve the first place_id from the JSON. A place ID is a textual identifier that uniquely identifies a place as within Google Maps.

API End Points

To complete this assignment, you should use this API endpoint that has a static subset of the Google Data:

http://python-data.dr-chuck.net/geojson

This API uses the same parameters (sensor and address) as the Google API. This API also has no rate limit so you can test as often as you like. If you visit the URL with no parameters, you get a list of all of the address values which can be used with this API.

To call the API, you need to provide a sensor=false parameter and the address that you are requesting as the address= parameter that is properly URL encoded using the urllib.urlencode() function as shown in http://www.pythonlearn.com/code/geojson.py
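To see what the URL encoding step produces, here is a small Python 3 sketch. In Python 3 the function lives at `urllib.parse.urlencode` rather than `urllib.urlencode`; the helper names `build_url` and `build_url_percent` are hypothetical.

```python
from urllib.parse import quote, urlencode

SERVICE_URL = "http://python-data.dr-chuck.net/geojson?"


def build_url(address):
    """Default encoding: spaces in the address become '+'."""
    return SERVICE_URL + urlencode({"sensor": "false", "address": address})


def build_url_percent(address):
    """Same query, but with spaces encoded as '%20' via quote_via=quote."""
    params = {"sensor": "false", "address": address}
    return SERVICE_URL + urlencode(params, quote_via=quote)


if __name__ == "__main__":
    print(build_url("South Federal University"))
    # http://python-data.dr-chuck.net/geojson?sensor=false&address=South+Federal+University
    print(build_url_percent("South Federal University"))
    # http://python-data.dr-chuck.net/geojson?sensor=false&address=South%20Federal%20University
```

Both spellings decode to the same address in a query string, so either form should reach the same record.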

Test Data / Sample Execution

You can test to see if your program is working with a location of “South Federal University” which will have a place_id of “ChIJJ8oO7_B_bIcR2AlhC8nKlok”.

$ python solution.py

Enter location: South Federal University

Retrieving http://…

Retrieved 2101 characters

Place id ChIJJ8oO7_B_bIcR2AlhC8nKlok

Turn In

The test data for the example above is at http://python-data.dr-chuck.net/geojson?sensor=false&address=South%20Federal%20University

Please run your program to find the place_id for this location:

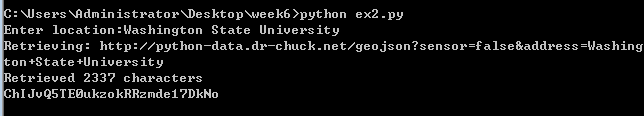

Washington State University

Make sure to enter the name and case exactly as above and enter the place_id and your Python code below. Hint: The first seven characters of the place_id are “ChIJvQ5 …”

Make sure to retrieve the data from the URL specified above and not the normal Google API. Your program should work with the Google API - but the place_id may not match for this assignment.

import urllib
import json

serviceurl = 'http://python-data.dr-chuck.net/geojson?'

address = raw_input('Enter location:')
url = serviceurl + urllib.urlencode({'sensor': 'false', 'address': address})
print 'Retrieving:', url
data = urllib.urlopen(url).read()
print 'Retrieved', len(data), 'characters'

try:
    js = json.loads(str(data))
except ValueError:
    js = None

# Check for None first: "'status' not in None" would raise a TypeError.
if js is None or 'status' not in js or js['status'] != 'OK':
    print '==== Failure To Retrieve ===='
else:
    results = js['results']
    print 'Place id', results[0]['place_id']
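The failure handling is the part of this program most worth testing without a network connection. The Python 3 sketch below isolates it in a hypothetical helper, `extract_place_id`, which returns `None` instead of printing a failure message. `SAMPLE_RESPONSE` is a trimmed, made-up response that keeps only the `status`, `results`, and `place_id` fields the solution reads.

```python
import json


def extract_place_id(text):
    """Return the first place_id in a geojson response, or None on any failure."""
    try:
        js = json.loads(text)
    except ValueError:  # json.JSONDecodeError is a subclass of ValueError
        return None
    if not isinstance(js, dict) or js.get("status") != "OK":
        return None
    results = js.get("results") or []
    if not results:
        return None
    return results[0].get("place_id")


# Trimmed, hypothetical response: only the fields the solution reads.
SAMPLE_RESPONSE = json.dumps({
    "status": "OK",
    "results": [{"place_id": "ChIJJ8oO7_B_bIcR2AlhC8nKlok"}],
})

if __name__ == "__main__":
    print("Place id", extract_place_id(SAMPLE_RESPONSE))
    print(extract_place_id("this is not JSON"))
```

Returning `None` keeps the parsing logic separate from the prompting and printing, so the caller decides how to report a failed lookup.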
