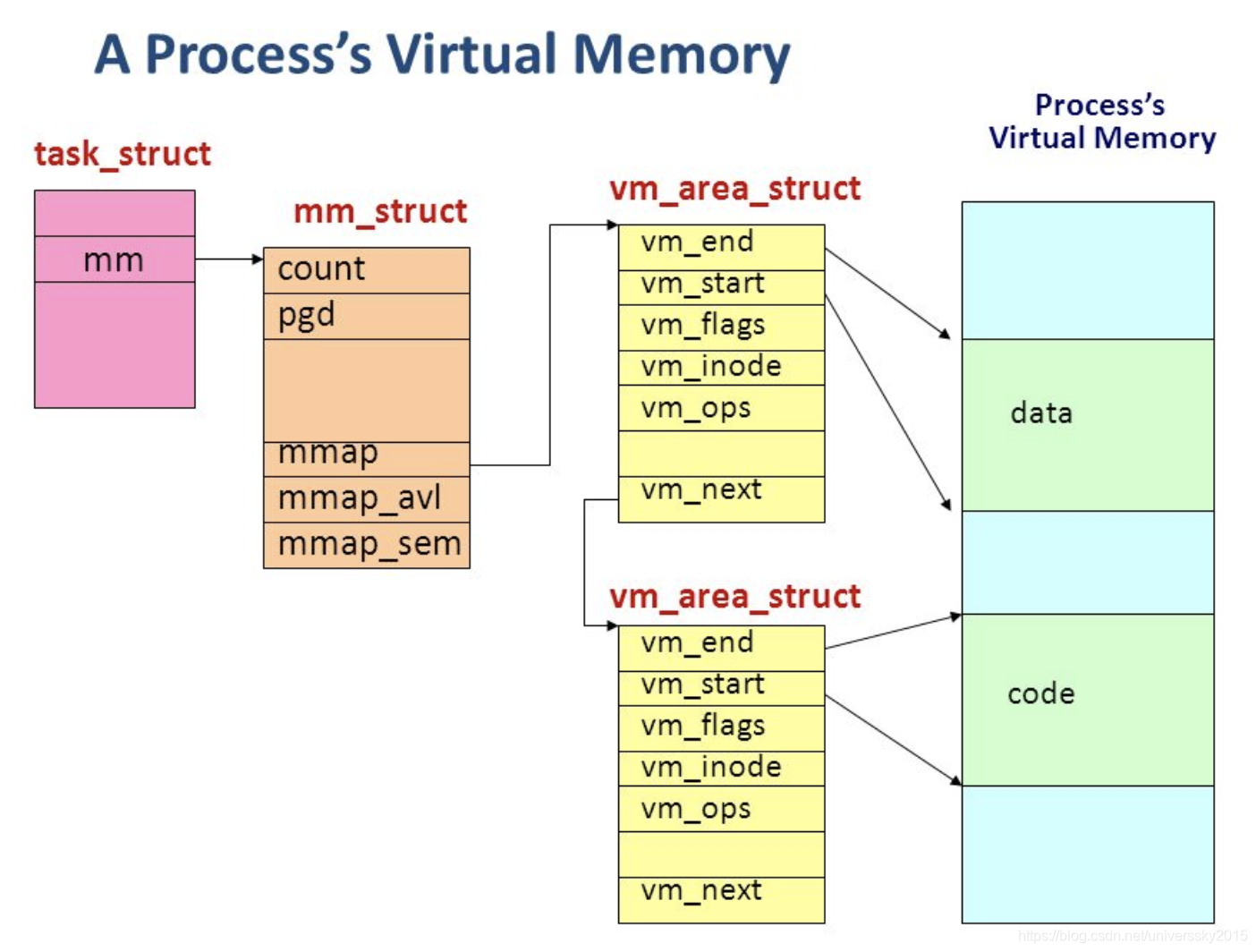

Each process has its own address space –- in modern processors it is implemented as a set of pages which map virtual addresses to a physical memory. When another process has to be executed on CPU, context switch occurs: after it processor special registers point to a new set of page tables, thus new virtual address space is used. Virtual address space also contains all binaries and libraries and saved process counter value, so another process will be executed after context switch. Processes may also have multiple threads. Each thread has independent state, including program counter and stack, thus threads may be executed in parallel, but they all threads share same address space.

Thread and Process

Process tree in Linux

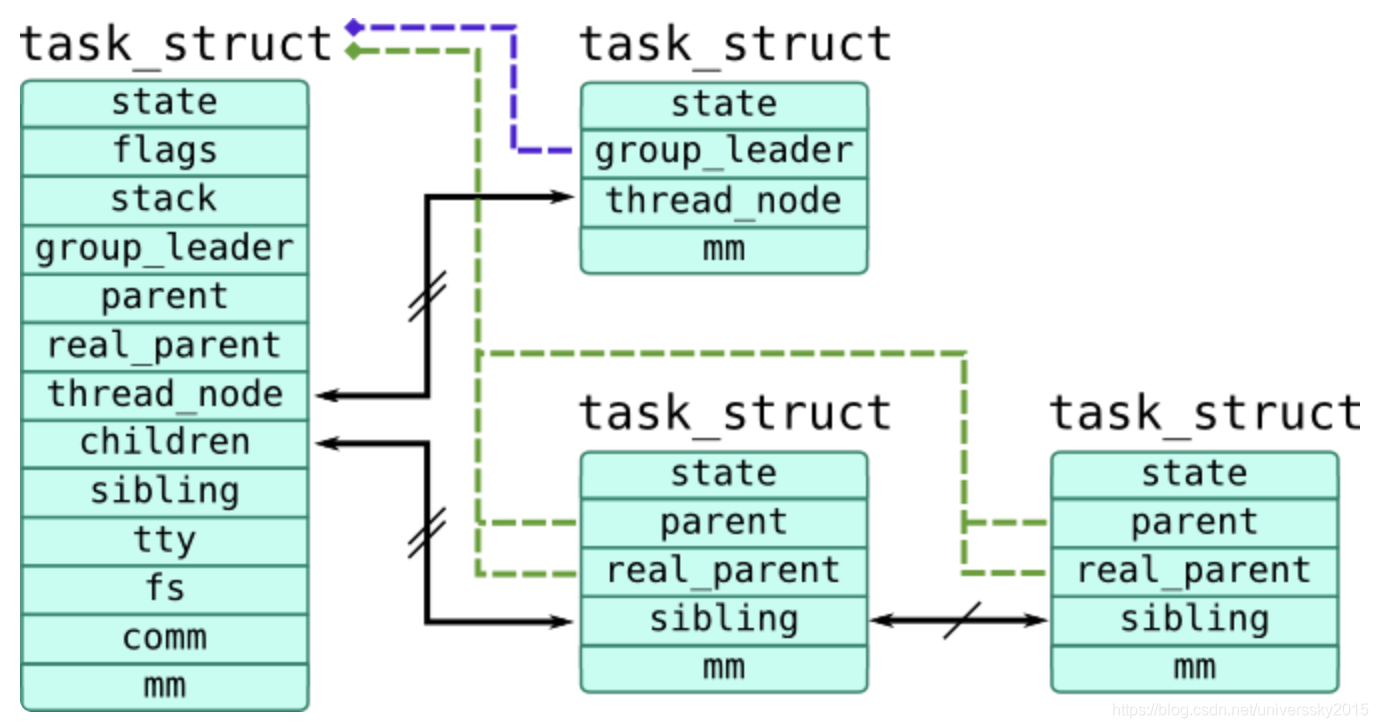

Processes and threads are implemented through universal task_struct structure (defined in include/linux/sched.h), so we will refer in our book as tasks. The first thread in process is called task group leader and all other threads are linked through list node thread_node and contain pointer group_leader which references task_struct of their process, that is , the task_struct of task group leader. Children processes refer to parent process through parent pointer and link through sibling list node. Parent process is linked with its children using children list head.

Relations between task_struct objects are shown in the following picture:

Task which is currently executed on CPU is accessible through current macro which actually calls function to get task from run-queue of CPU where it is called. To get current pointer in SystemTap, use task_current(). You can also get pointer to a task_struct using pid2task() function which accepts PID as its first argument. Task tapset provides several functions similar for functions used as Probe Context. They all get pointer to a task_struct as their argument:

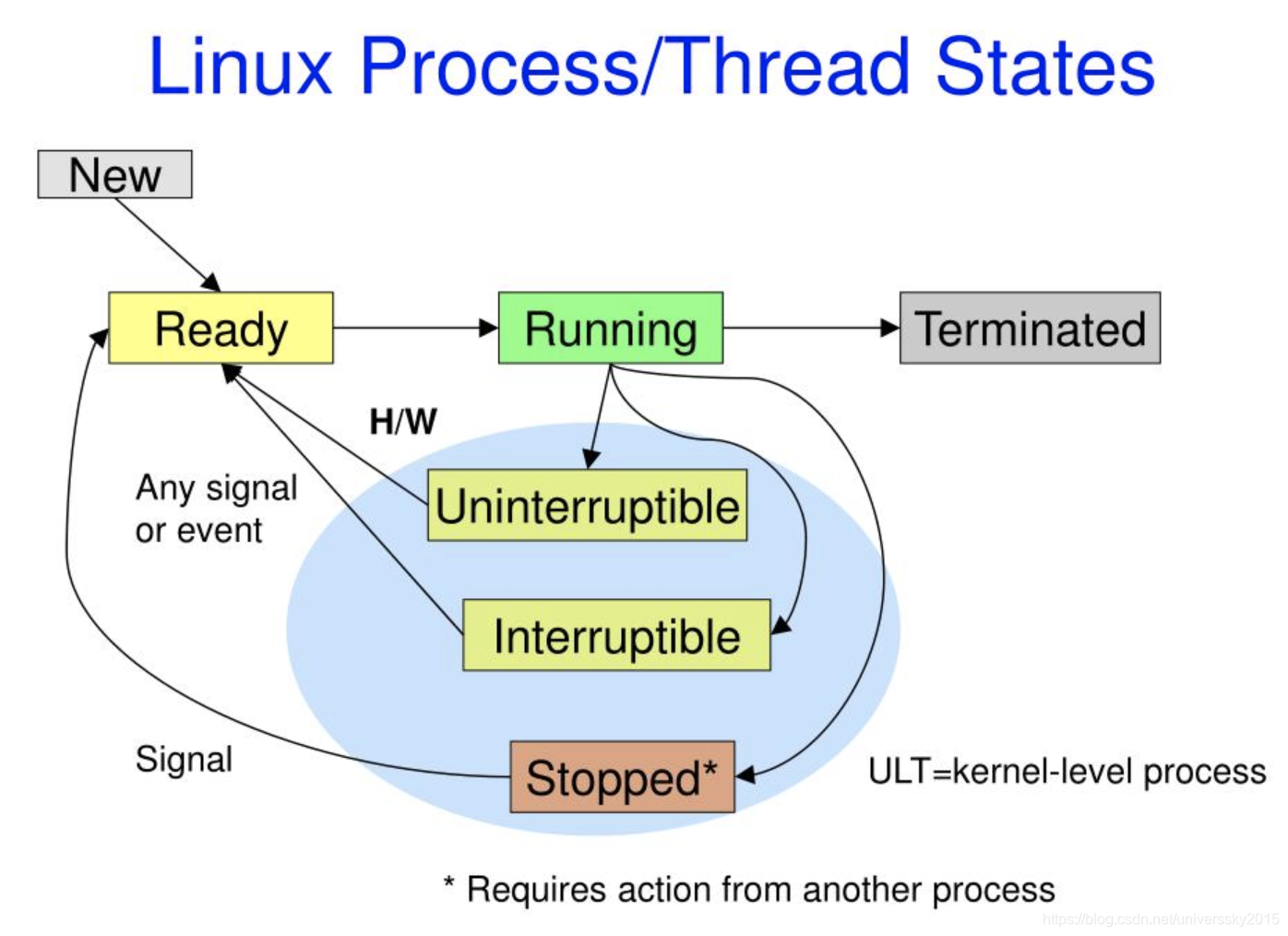

task_pid()andtask_tid()–- ID of the process ID (stored intgidfield) and thread (stored inpidfield) respectively. Note that kernel most of the kernel code doesn't check cachedpidandtgidbut use namespace wrappers.task_parent()–- returns pointer to a parent process, stored inparent/real_parentfieldstask_state()–- returns state bitmask stored instate, such asTASK_RUNNING(0),TASK_INTERRUPTIBLE(1),TASK_UNINTTERRUPTIBLE(2). Last 2 values are for sleeping or waiting tasks –- the difference that only interruptible tasks may receive signals.task_execname()–- reads executable name fromcommfield, which stores base name of executable path. Note thatcommrespects symbolic links.task_cpu()–- returns CPU to which task belongs

There are several other useful fields in task_struct:

mm(pointer tostruct mm_struct) refers to a address space of a process. For example,exe_file(pointer tostruct file) refers to executable file, whilearg_startandarg_endare addresses of first and last byte of argv passed to a process respectivelyfs(pointer tostruct fs_struct) contains filesystem information:pathcontains working directory of a task,rootcontains root directory (alterable usingchrootsystem call)start_timeandreal_start_time(represented asstruct timespecuntil 3.17, replaced withu64nanosecond timestamps) –- monotonic and real start time of a process.files(pointer tostruct files_struct) contains table of files opened by processutimeandstime(cputime_t) contain amount of time spent by CPU in userspace and kernel respectively. They can be accessed through Task Time tapset.

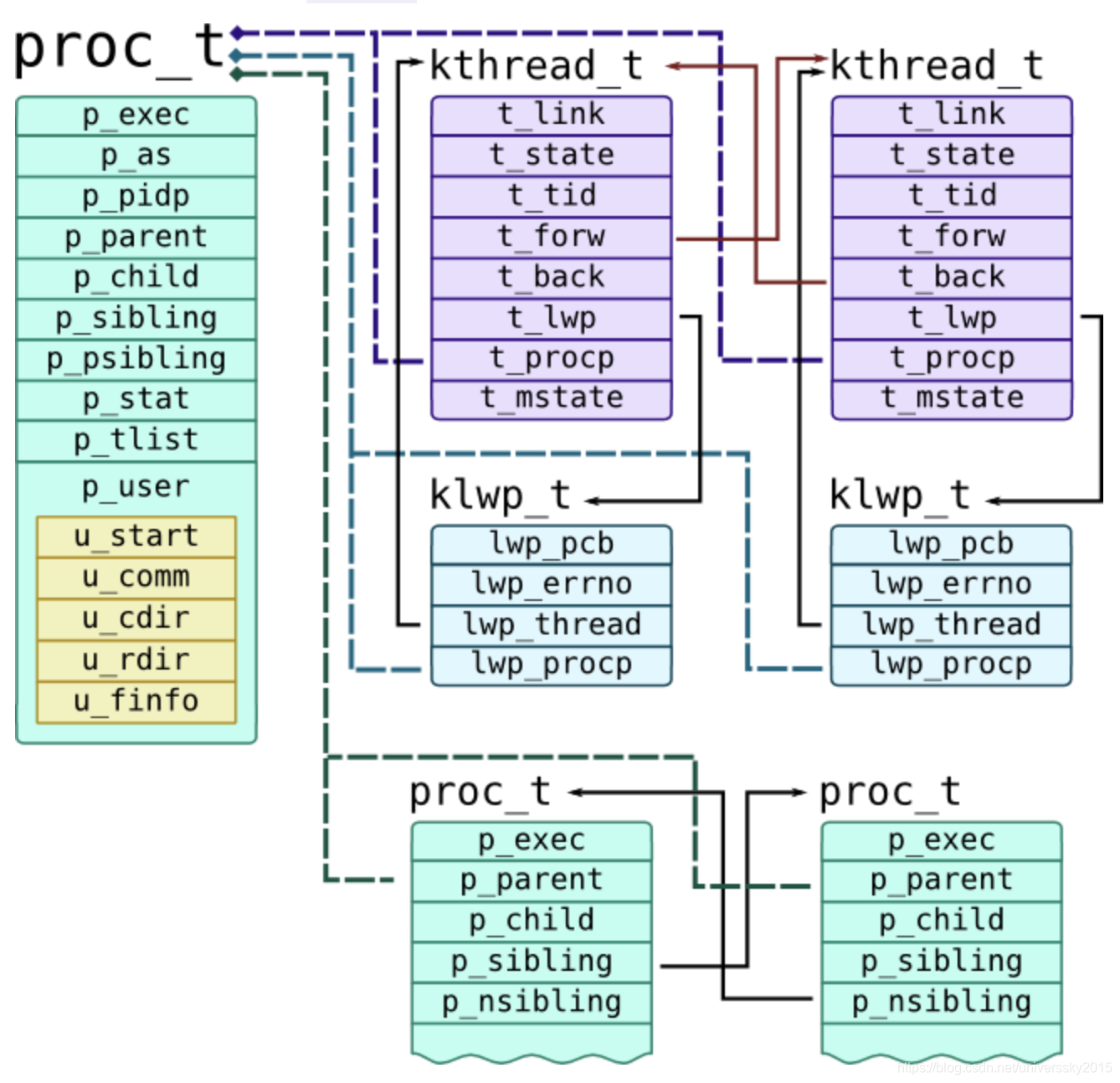

Process tree in Solaris

Solaris Kernel distinguishes threads and processes: on low level all threads represented by kthread_t, which are presented to userspace as Light-Weight Processes (or LWPs) defined as klwp_t. One or multiple LWPs constitute a process proc_t. They all have references to each other, as shown on the following picture:

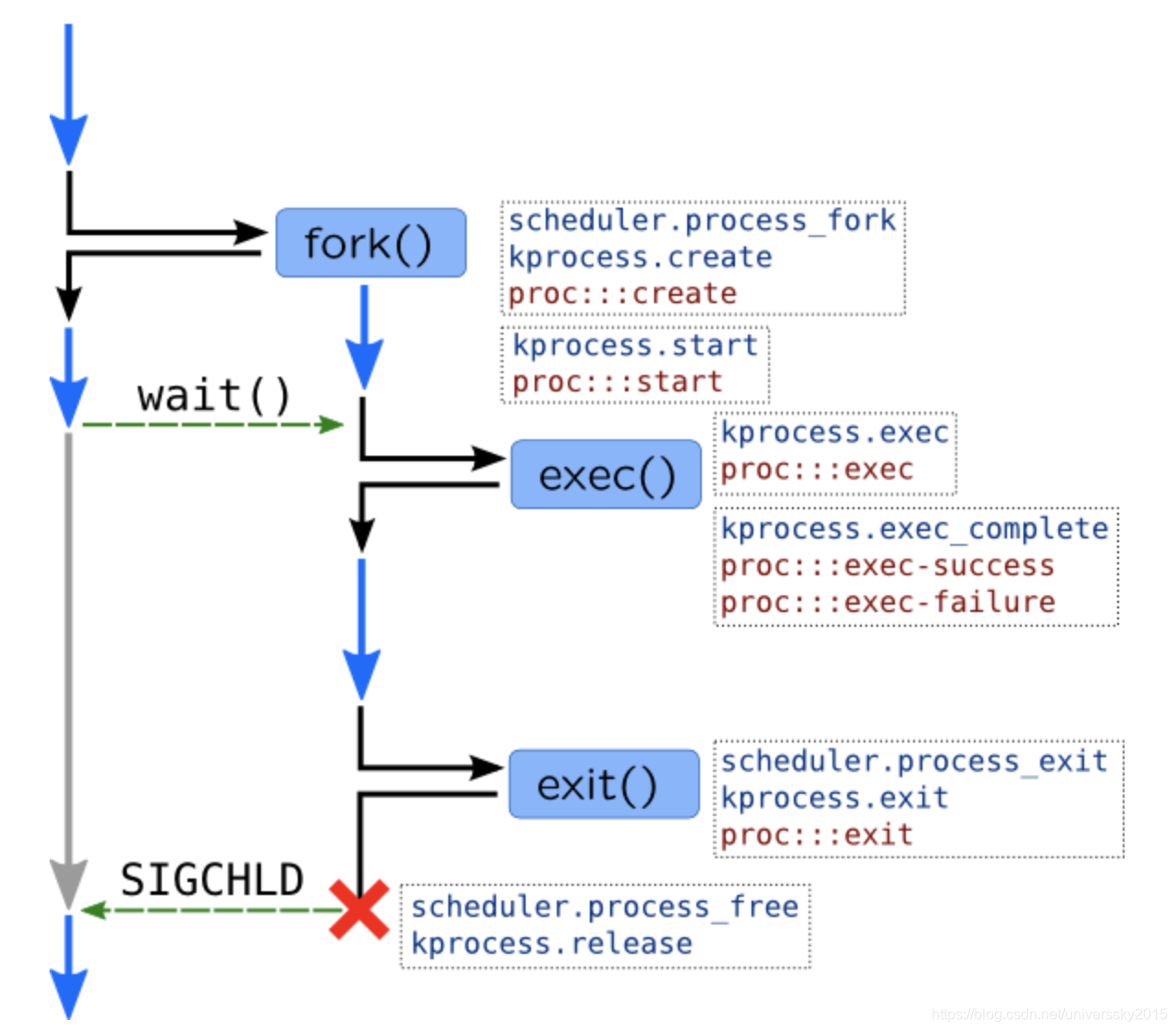

Lifetime of a process

Lifetime of a process and corresponding probes are shown in the following image:

Unlike Windows, in Unix process is spawned in two stages:

- Parent process calls

fork()system call. Kernel creates exact copy of a parent process including address space (which is available in copy-on-write mode) and open files, and gives it a new PID. Iffork()is successful, it will return in the context of two processes (parent and child), with the same instruction pointer. Following code usually closes files in child, resets signals, etc. - Child process calls

execve()system call, which replaces address space of a process with a new one based on binary which is passed toexecve()call.

參考資料:

https://myaut.github.io/dtrace-stap-book/kernel/proc.html

关于进程

(1)定义:

进程(Process)是计算机中的程序关于某数据集合上的一次运行活动,是系统进行资源分配和调度的基本单位,是操作系统结构的基础。

在早期面向进程设计的计算机结构中,进程是程序的基本执行实体;在当代面向线程设计的计算机结构中,进程是线程的容器。程序是指令、数据及其组织形式的描述,进程是程序的实体。

进程的概念主要有两点:

第一,进程是一个实体。每一个进程都有它自己的地址空间,一般情况下,包扩文本区域(text region)、数据区域(data region)和堆栈(stack region)。

文本区域存储处理器执行的代码;数据区域存储变量和进程执行期间使用的动态分配的内存;堆栈区域存储着活动过程调用的指令和本地变量。

第二,进程是一个“执行中的程序”。程序是一个没有生命的实体,只有处理器赋予程序生命时(操作系统执行之),它才能成为一个活动的实体,我们称其为进程。

(2)进程的特征:

- 动态性:进程的实质是程序在多道程序系统中的一次执行过程,进程是动态产生、消亡的;

- 并发性:任何进程都可以同其他进程一起并发执行;

- 独立性:进程是一个能独立运行的基本单位,同时也是系统分配资源和调度的独立单位;

- 异步性:由于进程间的相互制约,使得进程具有执行的间断性,即进程按各自独立的、不可预知的速度向前推进;

(3)进程与程序、线程的区别:

在面向进程设计的系统(如早期的UNIX,Linux 2.4及更早的版本)中,进程是程序的基本执行实体;在面向线程设计的系统(如当代多数操作系统、Linux 2.6及更新的版本)中,进程本身不是基本运行单位,而是线程的容器。简单地来说,进程与程序是动态与静止的区别,进程与程序是多对一的,同样,线程与进程也是多对一的。

3.1 操作系统是如何组织进程的

在Linux系统中, 进程在/linux/include/linux/sched.h 头文件中被定义为task_struct, 它是一个结构体, 一个它的实例化即为一个进程, task_struct由许多元素构成, 下面列举一些重要的元素进行分析。

- 标识符:与进程相关的唯一标识符,用来区别正在执行的进程和其他进程。

- 状态:描述进程的状态,因为进程有挂起,阻塞,运行等好几个状态,所以都有个标识符来记录进程的执行状态。

- 优先级:如果有好几个进程正在执行,就涉及到进程被执行的先后顺序的问题,这和进程优先级这个标识符有关。

- 程序计数器:程序中即将被执行的下一条指令的地址。

- 内存指针:程序代码和进程相关数据的指针。

- 上下文数据:进程执行时处理器的寄存器中的数据。

- I/O状态信息:包括显示的I/O请求,分配给进程的I/O设备和被进程使用的文件列表等。

- 记账信息:包括处理器的时间总和,记账号等等。

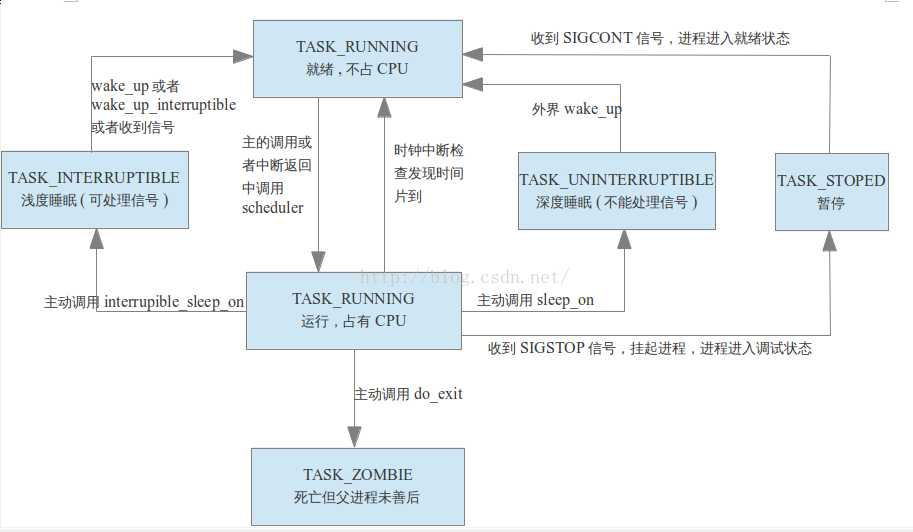

3.1 进程状态(STATE)

在task_struct结构体中, 定义进程的状态语句为

volatile long state; /* -1 unrunnable, 0 runnable, >0 stopped */valatile关键字的作用是确保本条指令不会因编译器的优化而省略, 且要求每次直接读值, 这样保证了对进程实时访问的稳定性。

进程在/linux/include/linux/sched.h 头文件中我们可以找到state的可能取值如下

/*

* Task state bitmask. NOTE! These bits are also

* encoded in fs/proc/array.c: get_task_state().

* We have two separate sets of flags: task->state

* is about runnability, while task->exit_state are

* the task exiting. Confusing, but this way

* modifying one set can‘t modify the other one by

* mistake.

*/

define TASK_RUNNING 0

define TASK_INTERRUPTIBLE 1

define TASK_UNINTERRUPTIBLE 2

define TASK_STOPPED 4

define TASK_TRACED 8/* in tsk->exit_state */

define EXIT_ZOMBIE 16

define EXIT_DEAD 32/* in tsk->state again */

define TASK_NONINTERACTIVE 64

define TASK_DEAD 128

根据state后面的注释, 可以得到当state<0时,表示此进程是处于不可运行的状态, 当state=0时, 表示此进程正处于运行状态, 当state>0时, 表示此进程处于停止运行状态。

以下列举一些state的常用取值

| 状态 | 描述 |

| :---------------------- | :----------------------------------------------------------- |

| 0(TASK_RUNNING) | 进程处于正在运行或者准备运行的状态中 |

| 1(TASK_INTERRUPTIBLE) | 进程处于可中断睡眠状态, 可通过信号唤醒 |

| 2(TASK_UNINTERRUPTIBLE) | 进程处于不可中断睡眠状态, 不可通过信号进行唤醒 |

| 4( TASK_STOPPED) | 进程被停止执行 |

| 8( TASK_TRACED) | 进程被监视 |

| 16( EXIT_ZOMBIE) | 僵尸状态进程, 表示进程被终止, 但是其父程序还未获取其被终止的信息。 |

| 32(EXIT_DEAD) | 进程死亡, 此状态为进程的最终状态 |

3.2 进程标识符(PID)

c pid_t pid; /*进程的唯一表示*/ pid_t tgid; /*进程组的标识符*/

在Linux系统中,一个线程组中的所有线程使用和该线程组的领头线程(该组中的第一个轻量级进程)相同的PID,并被存放在tgid成员中。只有线程组的领头线程的pid成员才会被设置为与tgid相同的值。注意,getpid()系统调用返回的是当前进程的tgid值而不是pid值。(线程是程序运行的最小单位,进程是程序运行的基本单位。)

3.3 进程的标记(FLAGS)

unsigned int flags; /* per process flags, defined below */反应进程状态的信息,但不是运行状态,用于内核识别进程当前的状态,以备下一步操作

flags成员的可能取值如下,这些宏以PF(ProcessFlag)开头

3.4 进程之间的关系

/*

* pointers to (original) parent process, youngest child, younger sibling,

* older sibling, respectively. (p->father can be replaced with

* p->parent->pid)

*/

struct task_struct *real_parent; /* real parent process (when being debugged) */

struct task_struct *parent; /* parent process */

/*

* children/sibling forms the list of my children plus the

* tasks I‘m ptracing.

*/

struct list_head children; /* list of my children */

struct list_head sibling; /* linkage in my parent‘s children list */

struct task_struct *group_leader; /* threadgroup leader */在Linux系统中,所有进程之间都有着直接或间接地联系,每个进程都有其父进程,也可能有零个或多个子进程。拥有同一父进程的所有进程具有兄弟关系。

real_parent指向其父进程,如果创建它的父进程不再存在,则指向PID为1的init进程。 parent指向其父进程,当它终止时,必须向它的父进程发送信号。它的值通常与 real_parent相同。 children表示链表的头部,链表中的所有元素都是它的子进程(进程的子进程链表)。 sibling用于把当前进程插入到兄弟链表中(进程的兄弟链表)。 group_leader指向其所在进程组的领头进程。

3.5 进程调度

3.5.1 优先级

int prio, static_prio, normal_prio;

unsigned int rt_priority;

/*

prio: 用于保存动态优先级

static_prio: 用于保存静态优先级, 可以通过nice系统调用来修改

normal_prio: 它的值取决于静态优先级和调度策略

priort_priority: 用于保存实时优先级

*/3.5.2 调度策略

unsigned int policy;

cpumask_t cpus_allowed;

/*

policy: 表示进程的调度策略

cpus_allowed: 用于控制进程可以在哪个处理器上运行

*/policy表示进程调度策略, 目前主要有以下五种策略

/*

* Scheduling policies

*/

#define SCHED_NORMAL 0 //按优先级进行调度

#define SCHED_FIFO 1 //先进先出的调度算法

#define SCHED_RR 2 //时间片轮转的调度算法

#define SCHED_BATCH 3 //用于非交互的处理机消耗型的进程

#define SCHED_IDLE 5//系统负载很低时的调度算法

字段 描述 所在调度器类 SCHED_NORMAL (也叫SCHED_OTHER)用于普通进程,通过CFS调度器实现。SCHED_BATCH用于非交互的处理器消耗型进程。SCHED_IDLE是在系统负载很低时使用 CFS SCHED_FIFO 先入先出调度算法(实时调度策略),相同优先级的任务先到先服务,高优先级的任务可以抢占低优先级的任务 RT SCHED_RR 轮流调度算法(实时调度策略),后 者提供 Roound-Robin 语义,采用时间片,相同优先级的任务当用完时间片会被放到队列尾部,以保证公平性,同样,高优先级的任务可以抢占低优先级的任务。不同要求的实时任务可以根据需要用sched_setscheduler()API 设置策略 RT SCHED_BATCH SCHED_NORMAL普通进程策略的分化版本。采用分时策略,根据动态优先级(可用nice()API设置),分配 CPU 运算资源。注意:这类进程比上述两类实时进程优先级低,换言之,在有实时进程存在时,实时进程优先调度。但针对吞吐量优化 CFS SCHED_IDLE 优先级最低,在系统空闲时才跑这类进程(如利用闲散计算机资源跑地外文明搜索,蛋白质结构分析等任务,是此调度策略的适用者) CFS

3.6 进程的地址空间

进程都拥有自己的资源,这些资源指的就是进程的地址空间,每个进程都有着自己的地址空间,在task_struct中,有关进程地址空间的定义如下:

struct mm_struct *mm, *active_mm;

/*

mm: 进程所拥有的用户空间内存描述符,内核线程无的mm为NULL

active_mm: active_mm指向进程运行时所使用的内存描述符, 对于普通进程而言,这两个指针变量的值相同。但是内核线程kernel thread是没有进程地址空间的,所以内核线程的tsk->mm域是空(NULL)。但是内核必须知道用户空间包含了什么,因此它的active_mm成员被初始化为前一个运行进程的active_mm值。

*/如果当前内核线程被调度之前运行的也是另外一个内核线程时候,那么其mm和avtive_mm都是NULL

以上即为操作系统是怎么组织进程的一些分析, 有了这些作为基础, 我们就可以进行下一步的分析

4.进程状态之间是如何转换的

关于linux进程状态(STATE)的定义, 取值以及描述都在进程状态中进行了详细的分析, 这里就不做过多的赘述。

下面给出进程的各种状态之间是如何进行互相转换的关系图:

5.进程是如何进行调度的

5.1 与进程调度有关的数据结构

在了解进程是如何进行调度之前, 我们需要先了解一些与进程调度有关的数据结构。

5.1.1 可运行队列(runqueue)

在/kernel/sched.c文件下, 可运行队列被定义为struct rq, 每一个CPU都会拥有一个struct rq, 它主要被用来存储一些基本的用于调度的信息, 包括及时调度和CFS调度。在Linux kernel 2.6中, struct rq是一个非常重要的数据结构, 接下来我们介绍一下它的部分重要字段:

/* 选取出部分字段做注释 */

//runqueue的自旋锁,当对runqueue进行操作的时候,需要对其加锁。由于每个CPU都有一个runqueue,这样会大大减少竞争的机会

spinlock_t lock;

// 此变量是用来记录active array中最早用完时间片的时间

unsigned long expired_timestamp;

//记录该CPU上就绪进程总数,是active array和expired array进程总数和

unsigned long nr_running;

// 记录该CPU运行以来发生的进程切换次数

unsigned long long nr_switches;

// 记录该CPU不可中断状态进程的个数

unsigned long nr_uninterruptible;

// 这部分是rq的最最最重要的部分, 我将在下面仔细分析它们

struct prio_array *active, *expired, arrays[2];5.1.2 优先级数组(prio_array)

Linux kernel 2.6版本中, 在rq中多加了两个按优先级排序的数组active array和expired array 。

这两个队列的结构是struct prio_array, 它被定义在/kernel/sched.c中, 其数据结构为:

struct prio_array {

unsigned int nr_active; //

DECLARE_BITMAP(bitmap, MAX_PRIO+1); /* include 1 bit for delimiter */

/*开辟MAX_PRIO + 1个bit的空间, 当某一个优先级的task正处于TASK_RUNNING状态时, 其优先级对应的二进制位将会被标记为1, 因此当你需要找此时需要运行的最高的优先级时, 只需要找到bitmap的哪一位被标记为1了即可*/

struct list_head queue[MAX_PRIO]; // 每一个优先级都有一个list头

};Active array表示的是CPU选择执行的运行进程队列, 在这个队列里的进程都有时间片剩余, *active指针总是指向它。Expired array则是用来存放在Active array中使用完时间片的进程, *expired指针总是指向它。

一旦在active array里面的某一个普通进程的时间片使用完了, 调度器将重新计算该进程的时间片与优先级, 并将它从active array中删除, 插入到expired array中的相应的优先级队列中 。

当active array内的所有task都用完了时间片, 这时只需要将*active与*expired这两个指针交换下, 即可切换运行队列。

5.2 调度算法(O(1)算法)

5.2.1 介绍O(1)算法

何为O(1)算法: 该算法总能够在有限的时间内选出优先级最高的进程然后执行, 而不管系统中有多少个可运行的进程, 因此命名为O(1)算法。

5.2.2 O(1)算法的原理

在前面我们提到了两个按优先级排序的数组active array和expired array, 这两个数组是实现O(1)算法的关键所在。

O(1)调度算法每次都是选取在active array数组中且优先级最高的进程来运行。

那么该算法如何找到优先级最高的进程呢? 大家还记得前面prio_array内的DECLARE_BITMAP(bitmap, MAX_PRIO+1);字段吗?这里它就发挥出作用了(详情看代码注释), 这里只要找到bitmap内哪一个位被设置为了1, 即可得到当前系统所运行的task的优先级(idx, 通过sehed_find_first_bit()方法实现), 接下来找到idx所对应的进程链表(queue), queue内的所有进程都是目前可运行的并且拥有最高优先级的进程, 接着依次执行这些进程,。

该过程定义在schedule函数中, 主要代码如下:

struct task_struct *prev, *next;

struct list_head *queue;

struct prio_array *array;

int idx;

prev = current;

array = rq->active;

idx = sehed_find_first_bit(array->bitmap); //找到位图中第一个不为0的位的序号

queue = array->queue + idx; //得到对应的队列链表头

next = list_entry(queue->next, struct task_struct, run_list); //得到进程描述符

if (prev != next) //如果选出的进程和当前进程不是同一个,则交换上下文

context_switch();

6. 对该操作系统进程模型的看法

很多年前就有人说Linux必定会取代Windows,已经过去这么多年了,就我所知道的是使用Windows越来越多,放弃Linux的也越来越多。很简单,从桌面端来说,我认为Linux是不能战胜Windows的,Windows是由有积极进取心的商业公司生产出来的,漂亮、迷人、方便。而Linux不是技术不行,而是这种东西做出来,基本上没有人欣赏,旁人很难理解的。但Linux有它的优点,就凭这个我支持。可它不做改变的话,就只能保持着这个状态,在一堆狂热的职业或业余的程序员中间流传着。

https://www.cnblogs.com/kkawf/p/8975612.html

7. 参考资料

- https://blog.csdn.net/bit_clearoff/article/details/54292300

- https://blog.csdn.net/qq_29503203/article/details/54618275

- https://blog.csdn.net/gatieme/article/details/51383272

- https://blog.csdn.net/bullbat/article/details/7160246

- Linux kernel 2.6 源码下载链接

struct task_struct源碼:

linux/include/linux/sched.h 文件的第 631-1333行:

struct task_struct {

#ifdef CONFIG_THREAD_INFO_IN_TASK

/*

* For reasons of header soup (see current_thread_info()), this

* must be the first element of task_struct.

*/

struct thread_info thread_info;

#endif

/* -1 unrunnable, 0 runnable, >0 stopped: */

volatile long state;

/*

* This begins the randomizable portion of task_struct. Only

* scheduling-critical items should be added above here.

*/

randomized_struct_fields_start

void *stack;

refcount_t usage;

/* Per task flags (PF_*), defined further below: */

unsigned int flags;

unsigned int ptrace;

#ifdef CONFIG_SMP

int on_cpu;

struct __call_single_node wake_entry;

#ifdef CONFIG_THREAD_INFO_IN_TASK

/* Current CPU: */

unsigned int cpu;

#endif

unsigned int wakee_flips;

unsigned long wakee_flip_decay_ts;

struct task_struct *last_wakee;

/*

* recent_used_cpu is initially set as the last CPU used by a task

* that wakes affine another task. Waker/wakee relationships can

* push tasks around a CPU where each wakeup moves to the next one.

* Tracking a recently used CPU allows a quick search for a recently

* used CPU that may be idle.

*/

int recent_used_cpu;

int wake_cpu;

#endif

int on_rq;

int prio;

int static_prio;

int normal_prio;

unsigned int rt_priority;

const struct sched_class *sched_class;

struct sched_entity se;

struct sched_rt_entity rt;

#ifdef CONFIG_CGROUP_SCHED

struct task_group *sched_task_group;

#endif

struct sched_dl_entity dl;

#ifdef CONFIG_UCLAMP_TASK

/*

* Clamp values requested for a scheduling entity.

* Must be updated with task_rq_lock() held.

*/

struct uclamp_se uclamp_req[UCLAMP_CNT];

/*

* Effective clamp values used for a scheduling entity.

* Must be updated with task_rq_lock() held.

*/

struct uclamp_se uclamp[UCLAMP_CNT];

#endif

#ifdef CONFIG_PREEMPT_NOTIFIERS

/* List of struct preempt_notifier: */

struct hlist_head preempt_notifiers;

#endif

#ifdef CONFIG_BLK_DEV_IO_TRACE

unsigned int btrace_seq;

#endif

unsigned int policy;

int nr_cpus_allowed;

const cpumask_t *cpus_ptr;

cpumask_t cpus_mask;

#ifdef CONFIG_PREEMPT_RCU

int rcu_read_lock_nesting;

union rcu_special rcu_read_unlock_special;

struct list_head rcu_node_entry;

struct rcu_node *rcu_blocked_node;

#endif /* #ifdef CONFIG_PREEMPT_RCU */

#ifdef CONFIG_TASKS_RCU

unsigned long rcu_tasks_nvcsw;

u8 rcu_tasks_holdout;

u8 rcu_tasks_idx;

int rcu_tasks_idle_cpu;

struct list_head rcu_tasks_holdout_list;

#endif /* #ifdef CONFIG_TASKS_RCU */

#ifdef CONFIG_TASKS_TRACE_RCU

int trc_reader_nesting;

int trc_ipi_to_cpu;

union rcu_special trc_reader_special;

bool trc_reader_checked;

struct list_head trc_holdout_list;

#endif /* #ifdef CONFIG_TASKS_TRACE_RCU */

struct sched_info sched_info;

struct list_head tasks;

#ifdef CONFIG_SMP

struct plist_node pushable_tasks;

struct rb_node pushable_dl_tasks;

#endif

struct mm_struct *mm;

struct mm_struct *active_mm;

/* Per-thread vma caching: */

struct vmacache vmacache;

#ifdef SPLIT_RSS_COUNTING

struct task_rss_stat rss_stat;

#endif

int exit_state;

int exit_code;

int exit_signal;

/* The signal sent when the parent dies: */

int pdeath_signal;

/* JOBCTL_*, siglock protected: */

unsigned long jobctl;

/* Used for emulating ABI behavior of previous Linux versions: */

unsigned int personality;

/* Scheduler bits, serialized by scheduler locks: */

unsigned sched_reset_on_fork:1;

unsigned sched_contributes_to_load:1;

unsigned sched_migrated:1;

unsigned sched_remote_wakeup:1;

#ifdef CONFIG_PSI

unsigned sched_psi_wake_requeue:1;

#endif

/* Force alignment to the next boundary: */

unsigned :0;

/* Unserialized, strictly 'current' */

/* Bit to tell LSMs we're in execve(): */

unsigned in_execve:1;

unsigned in_iowait:1;

#ifndef TIF_RESTORE_SIGMASK

unsigned restore_sigmask:1;

#endif

#ifdef CONFIG_MEMCG

unsigned in_user_fault:1;

#endif

#ifdef CONFIG_COMPAT_BRK

unsigned brk_randomized:1;

#endif

#ifdef CONFIG_CGROUPS

/* disallow userland-initiated cgroup migration */

unsigned no_cgroup_migration:1;

/* task is frozen/stopped (used by the cgroup freezer) */

unsigned frozen:1;

#endif

#ifdef CONFIG_BLK_CGROUP

unsigned use_memdelay:1;

#endif

#ifdef CONFIG_PSI

/* Stalled due to lack of memory */

unsigned in_memstall:1;

#endif

unsigned long atomic_flags; /* Flags requiring atomic access. */

struct restart_block restart_block;

pid_t pid;

pid_t tgid;

#ifdef CONFIG_STACKPROTECTOR

/* Canary value for the -fstack-protector GCC feature: */

unsigned long stack_canary;

#endif

/*

* Pointers to the (original) parent process, youngest child, younger sibling,

* older sibling, respectively. (p->father can be replaced with

* p->real_parent->pid)

*/

/* Real parent process: */

struct task_struct __rcu *real_parent;

/* Recipient of SIGCHLD, wait4() reports: */

struct task_struct __rcu *parent;

/*

* Children/sibling form the list of natural children:

*/

struct list_head children;

struct list_head sibling;

struct task_struct *group_leader;

/*

* 'ptraced' is the list of tasks this task is using ptrace() on.

*

* This includes both natural children and PTRACE_ATTACH targets.

* 'ptrace_entry' is this task's link on the p->parent->ptraced list.

*/

struct list_head ptraced;

struct list_head ptrace_entry;

/* PID/PID hash table linkage. */

struct pid *thread_pid;

struct hlist_node pid_links[PIDTYPE_MAX];

struct list_head thread_group;

struct list_head thread_node;

struct completion *vfork_done;

/* CLONE_CHILD_SETTID: */

int __user *set_child_tid;

/* CLONE_CHILD_CLEARTID: */

int __user *clear_child_tid;

u64 utime;

u64 stime;

#ifdef CONFIG_ARCH_HAS_SCALED_CPUTIME

u64 utimescaled;

u64 stimescaled;

#endif

u64 gtime;

struct prev_cputime prev_cputime;

#ifdef CONFIG_VIRT_CPU_ACCOUNTING_GEN

struct vtime vtime;

#endif

#ifdef CONFIG_NO_HZ_FULL

atomic_t tick_dep_mask;

#endif

/* Context switch counts: */

unsigned long nvcsw;

unsigned long nivcsw;

/* Monotonic time in nsecs: */

u64 start_time;

/* Boot based time in nsecs: */

u64 start_boottime;

/* MM fault and swap info: this can arguably be seen as either mm-specific or thread-specific: */

unsigned long min_flt;

unsigned long maj_flt;

/* Empty if CONFIG_POSIX_CPUTIMERS=n */

struct posix_cputimers posix_cputimers;

#ifdef CONFIG_POSIX_CPU_TIMERS_TASK_WORK

struct posix_cputimers_work posix_cputimers_work;

#endif

/* Process credentials: */

/* Tracer's credentials at attach: */

const struct cred __rcu *ptracer_cred;

/* Objective and real subjective task credentials (COW): */

const struct cred __rcu *real_cred;

/* Effective (overridable) subjective task credentials (COW): */

const struct cred __rcu *cred;

#ifdef CONFIG_KEYS

/* Cached requested key. */

struct key *cached_requested_key;

#endif

/*

* executable name, excluding path.

*

* - normally initialized setup_new_exec()

* - access it with [gs]et_task_comm()

* - lock it with task_lock()

*/

char comm[TASK_COMM_LEN];

struct nameidata *nameidata;

#ifdef CONFIG_SYSVIPC

struct sysv_sem sysvsem;

struct sysv_shm sysvshm;

#endif

#ifdef CONFIG_DETECT_HUNG_TASK

unsigned long last_switch_count;

unsigned long last_switch_time;

#endif

/* Filesystem information: */

struct fs_struct *fs;

/* Open file information: */

struct files_struct *files;

/* Namespaces: */

struct nsproxy *nsproxy;

/* Signal handlers: */

struct signal_struct *signal;

struct sighand_struct __rcu *sighand;

sigset_t blocked;

sigset_t real_blocked;

/* Restored if set_restore_sigmask() was used: */

sigset_t saved_sigmask;

struct sigpending pending;

unsigned long sas_ss_sp;

size_t sas_ss_size;

unsigned int sas_ss_flags;

struct callback_head *task_works;

#ifdef CONFIG_AUDIT

#ifdef CONFIG_AUDITSYSCALL

struct audit_context *audit_context;

#endif

kuid_t loginuid;

unsigned int sessionid;

#endif

struct seccomp seccomp;

/* Thread group tracking: */

u64 parent_exec_id;

u64 self_exec_id;

/* Protection against (de-)allocation: mm, files, fs, tty, keyrings, mems_allowed, mempolicy: */

spinlock_t alloc_lock;

/* Protection of the PI data structures: */

raw_spinlock_t pi_lock;

struct wake_q_node wake_q;

#ifdef CONFIG_RT_MUTEXES

/* PI waiters blocked on a rt_mutex held by this task: */

struct rb_root_cached pi_waiters;

/* Updated under owner's pi_lock and rq lock */

struct task_struct *pi_top_task;

/* Deadlock detection and priority inheritance handling: */

struct rt_mutex_waiter *pi_blocked_on;

#endif

#ifdef CONFIG_DEBUG_MUTEXES

/* Mutex deadlock detection: */

struct mutex_waiter *blocked_on;

#endif

#ifdef CONFIG_DEBUG_ATOMIC_SLEEP

int non_block_count;

#endif

#ifdef CONFIG_TRACE_IRQFLAGS

struct irqtrace_events irqtrace;

unsigned int hardirq_threaded;

u64 hardirq_chain_key;

int softirqs_enabled;

int softirq_context;

int irq_config;

#endif

#ifdef CONFIG_LOCKDEP

# define MAX_LOCK_DEPTH 48UL

u64 curr_chain_key;

int lockdep_depth;

unsigned int lockdep_recursion;

struct held_lock held_locks[MAX_LOCK_DEPTH];

#endif

#ifdef CONFIG_UBSAN

unsigned int in_ubsan;

#endif

/* Journalling filesystem info: */

void *journal_info;

/* Stacked block device info: */

struct bio_list *bio_list;

#ifdef CONFIG_BLOCK

/* Stack plugging: */

struct blk_plug *plug;

#endif

/* VM state: */

struct reclaim_state *reclaim_state;

struct backing_dev_info *backing_dev_info;

struct io_context *io_context;

#ifdef CONFIG_COMPACTION

struct capture_control *capture_control;

#endif

/* Ptrace state: */

unsigned long ptrace_message;

kernel_siginfo_t *last_siginfo;

struct task_io_accounting ioac;

#ifdef CONFIG_PSI

/* Pressure stall state */

unsigned int psi_flags;

#endif

#ifdef CONFIG_TASK_XACCT

/* Accumulated RSS usage: */

u64 acct_rss_mem1;

/* Accumulated virtual memory usage: */

u64 acct_vm_mem1;

/* stime + utime since last update: */

u64 acct_timexpd;

#endif

#ifdef CONFIG_CPUSETS

/* Protected by ->alloc_lock: */

nodemask_t mems_allowed;

/* Seqence number to catch updates: */

seqcount_spinlock_t mems_allowed_seq;

int cpuset_mem_spread_rotor;

int cpuset_slab_spread_rotor;

#endif

#ifdef CONFIG_CGROUPS

/* Control Group info protected by css_set_lock: */

struct css_set __rcu *cgroups;

/* cg_list protected by css_set_lock and tsk->alloc_lock: */

struct list_head cg_list;

#endif

#ifdef CONFIG_X86_CPU_RESCTRL

u32 closid;

u32 rmid;

#endif

#ifdef CONFIG_FUTEX

struct robust_list_head __user *robust_list;

#ifdef CONFIG_COMPAT

struct compat_robust_list_head __user *compat_robust_list;

#endif

struct list_head pi_state_list;

struct futex_pi_state *pi_state_cache;

struct mutex futex_exit_mutex;

unsigned int futex_state;

#endif

#ifdef CONFIG_PERF_EVENTS

struct perf_event_context *perf_event_ctxp[perf_nr_task_contexts];

struct mutex perf_event_mutex;

struct list_head perf_event_list;

#endif

#ifdef CONFIG_DEBUG_PREEMPT

unsigned long preempt_disable_ip;

#endif

#ifdef CONFIG_NUMA

/* Protected by alloc_lock: */

struct mempolicy *mempolicy;

short il_prev;

short pref_node_fork;

#endif

#ifdef CONFIG_NUMA_BALANCING

int numa_scan_seq;

unsigned int numa_scan_period;

unsigned int numa_scan_period_max;

int numa_preferred_nid;

unsigned long numa_migrate_retry;

/* Migration stamp: */

u64 node_stamp;

u64 last_task_numa_placement;

u64 last_sum_exec_runtime;

struct callback_head numa_work;

/*

* This pointer is only modified for current in syscall and

* pagefault context (and for tasks being destroyed), so it can be read

* from any of the following contexts:

* - RCU read-side critical section

* - current->numa_group from everywhere

* - task's runqueue locked, task not running

*/

struct numa_group __rcu *numa_group;

/*

* numa_faults is an array split into four regions:

* faults_memory, faults_cpu, faults_memory_buffer, faults_cpu_buffer

* in this precise order.

*

* faults_memory: Exponential decaying average of faults on a per-node

* basis. Scheduling placement decisions are made based on these

* counts. The values remain static for the duration of a PTE scan.

* faults_cpu: Track the nodes the process was running on when a NUMA

* hinting fault was incurred.

* faults_memory_buffer and faults_cpu_buffer: Record faults per node

* during the current scan window. When the scan completes, the counts

* in faults_memory and faults_cpu decay and these values are copied.

*/

unsigned long *numa_faults;

unsigned long total_numa_faults;

/*

* numa_faults_locality tracks if faults recorded during the last

* scan window were remote/local or failed to migrate. The task scan

* period is adapted based on the locality of the faults with different

* weights depending on whether they were shared or private faults

*/

unsigned long numa_faults_locality[3];

unsigned long numa_pages_migrated;

#endif /* CONFIG_NUMA_BALANCING */

#ifdef CONFIG_RSEQ

struct rseq __user *rseq;

u32 rseq_sig;

/*

* RmW on rseq_event_mask must be performed atomically

* with respect to preemption.

*/

unsigned long rseq_event_mask;

#endif

struct tlbflush_unmap_batch tlb_ubc;

union {

refcount_t rcu_users;

struct rcu_head rcu;

};

/* Cache last used pipe for splice(): */

struct pipe_inode_info *splice_pipe;

struct page_frag task_frag;

#ifdef CONFIG_TASK_DELAY_ACCT

struct task_delay_info *delays;

#endif

#ifdef CONFIG_FAULT_INJECTION

int make_it_fail;

unsigned int fail_nth;

#endif

/*

* When (nr_dirtied >= nr_dirtied_pause), it's time to call

* balance_dirty_pages() for a dirty throttling pause:

*/

int nr_dirtied;

int nr_dirtied_pause;

/* Start of a write-and-pause period: */

unsigned long dirty_paused_when;

#ifdef CONFIG_LATENCYTOP

int latency_record_count;

struct latency_record latency_record[LT_SAVECOUNT];

#endif

/*

* Time slack values; these are used to round up poll() and

* select() etc timeout values. These are in nanoseconds.

*/

u64 timer_slack_ns;

u64 default_timer_slack_ns;

#ifdef CONFIG_KASAN

unsigned int kasan_depth;

#endif

#ifdef CONFIG_KCSAN

struct kcsan_ctx kcsan_ctx;

#ifdef CONFIG_TRACE_IRQFLAGS

struct irqtrace_events kcsan_save_irqtrace;

#endif

#endif

#ifdef CONFIG_FUNCTION_GRAPH_TRACER

/* Index of current stored address in ret_stack: */

int curr_ret_stack;

int curr_ret_depth;

/* Stack of return addresses for return function tracing: */

struct ftrace_ret_stack *ret_stack;

/* Timestamp for last schedule: */

unsigned long long ftrace_timestamp;

/*

* Number of functions that haven't been traced

* because of depth overrun:

*/

atomic_t trace_overrun;

/* Pause tracing: */

atomic_t tracing_graph_pause;

#endif

#ifdef CONFIG_TRACING

/* State flags for use by tracers: */

unsigned long trace;

/* Bitmask and counter of trace recursion: */

unsigned long trace_recursion;

#endif /* CONFIG_TRACING */

#ifdef CONFIG_KCOV

/* See kernel/kcov.c for more details. */

/* Coverage collection mode enabled for this task (0 if disabled): */

unsigned int kcov_mode;

/* Size of the kcov_area: */

unsigned int kcov_size;

/* Buffer for coverage collection: */

void *kcov_area;

/* KCOV descriptor wired with this task or NULL: */

struct kcov *kcov;

/* KCOV common handle for remote coverage collection: */

u64 kcov_handle;

/* KCOV sequence number: */

int kcov_sequence;

/* Collect coverage from softirq context: */

unsigned int kcov_softirq;

#endif

#ifdef CONFIG_MEMCG

struct mem_cgroup *memcg_in_oom;

gfp_t memcg_oom_gfp_mask;

int memcg_oom_order;

/* Number of pages to reclaim on returning to userland: */

unsigned int memcg_nr_pages_over_high;

/* Used by memcontrol for targeted memcg charge: */

struct mem_cgroup *active_memcg;

#endif

#ifdef CONFIG_BLK_CGROUP

struct request_queue *throttle_queue;

#endif

#ifdef CONFIG_UPROBES

struct uprobe_task *utask;

#endif

#if defined(CONFIG_BCACHE) || defined(CONFIG_BCACHE_MODULE)

unsigned int sequential_io;

unsigned int sequential_io_avg;

#endif

#ifdef CONFIG_DEBUG_ATOMIC_SLEEP

unsigned long task_state_change;

#endif

int pagefault_disabled;

#ifdef CONFIG_MMU

struct task_struct *oom_reaper_list;

#endif

#ifdef CONFIG_VMAP_STACK

struct vm_struct *stack_vm_area;

#endif

#ifdef CONFIG_THREAD_INFO_IN_TASK

/* A live task holds one reference: */

refcount_t stack_refcount;

#endif

#ifdef CONFIG_LIVEPATCH

int patch_state;

#endif

#ifdef CONFIG_SECURITY

/* Used by LSM modules for access restriction: */

void *security;

#endif

#ifdef CONFIG_GCC_PLUGIN_STACKLEAK

unsigned long lowest_stack;

unsigned long prev_lowest_stack;

#endif

#ifdef CONFIG_X86_MCE

u64 mce_addr;

__u64 mce_ripv : 1,

mce_whole_page : 1,

__mce_reserved : 62;

struct callback_head mce_kill_me;

#endif

/*

* New fields for task_struct should be added above here, so that

* they are included in the randomized portion of task_struct.

*/

randomized_struct_fields_end

/* CPU-specific state of this task: */

struct thread_struct thread;

/*

* WARNING: on x86, 'thread_struct' contains a variable-sized

* structure. It *MUST* be at the end of 'task_struct'.

*

* Do not put anything below here!

*/

};

1820

1820

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?