Download Faster R-CNN:

git clone --recursive https://github.com/rbgirshick/py-faster-rcnn.git

Enter py-faster-rcnn/lib:

make

The result is shown in the figure below:

Enter py-faster-rcnn/caffe-fast-rcnn:

cp Makefile.config.example Makefile.config

Then configure the Makefile.config file. You can use my Makefile.config below as a reference:

## Refer to http://caffe.berkeleyvision.org/installation.html

# Contributions simplifying and improving our build system are welcome!

# cuDNN acceleration switch (uncomment to build with cuDNN).

USE_CUDNN := 1

# CPU-only switch (uncomment to build without GPU support).

# CPU_ONLY := 1

# uncomment to disable IO dependencies and corresponding data layers

# USE_OPENCV := 0

# USE_LEVELDB := 0

# USE_LMDB := 0

# uncomment to allow MDB_NOLOCK when reading LMDB files (only if necessary)

# You should not set this flag if you will be reading LMDBs with any

# possibility of simultaneous read and write

# ALLOW_LMDB_NOLOCK := 1

# Uncomment if you're using OpenCV 3

# OPENCV_VERSION := 3

# To customize your choice of compiler, uncomment and set the following.

# N.B. the default for Linux is g++ and the default for OSX is clang++

# CUSTOM_CXX := g++

# CUDA directory contains bin/ and lib/ directories that we need.

CUDA_DIR := /usr/local/cuda

# On Ubuntu 14.04, if cuda tools are installed via

# "sudo apt-get install nvidia-cuda-toolkit" then use this instead:

# CUDA_DIR := /usr

# CUDA architecture setting: going with all of them.

# For CUDA < 6.0, comment the *_50 lines for compatibility.

CUDA_ARCH := -gencode arch=compute_30,code=sm_30 \
        -gencode arch=compute_35,code=sm_35 \
        -gencode arch=compute_50,code=sm_50 \
        -gencode arch=compute_50,code=compute_50 \
        -gencode arch=compute_53,code=compute_53 \
        -gencode arch=compute_61,code=compute_61

# BLAS choice:

# atlas for ATLAS (default)

# mkl for MKL

# open for OpenBlas

BLAS := open

# Custom (MKL/ATLAS/OpenBLAS) include and lib directories.

# Leave commented to accept the defaults for your choice of BLAS

# (which should work)!

# BLAS_INCLUDE := /path/to/your/blas

# BLAS_LIB := /path/to/your/blas

# Homebrew puts openblas in a directory that is not on the standard search path

# BLAS_INCLUDE := $(shell brew --prefix openblas)/include

# BLAS_LIB := $(shell brew --prefix openblas)/lib

# This is required only if you will compile the matlab interface.

# MATLAB directory should contain the mex binary in /bin.

MATLAB_DIR := /usr/local/MATLAB/R2013b

# MATLAB_DIR := /Applications/MATLAB_R2012b.app

# NOTE: this is required only if you will compile the python interface.

# We need to be able to find Python.h and numpy/arrayobject.h.

PYTHON_INCLUDE := /usr/include/python2.7 \
        /usr/lib64/python2.7/site-packages/numpy/core/include \
        /usr/lib/python2.7/dist-packages/numpy/core/include

# Anaconda Python distribution is quite popular. Include path:

# Verify anaconda location, sometimes it's in root.

# ANACONDA_HOME := $(HOME)/anaconda

# PYTHON_INCLUDE := $(ANACONDA_HOME)/include \

# $(ANACONDA_HOME)/include/python2.7 \

# $(ANACONDA_HOME)/lib/python2.7/site-packages/numpy/core/include \

# Uncomment to use Python 3 (default is Python 2)

# PYTHON_LIBRARIES := boost_python3 python3.5m

# PYTHON_INCLUDE := /usr/include/python3.5m \

# /usr/lib/python3.5/dist-packages/numpy/core/include

# We need to be able to find libpythonX.X.so or .dylib.

PYTHON_LIB := /usr/lib

# PYTHON_LIB := $(ANACONDA_HOME)/lib

# Homebrew installs numpy in a non standard path (keg only)

# PYTHON_INCLUDE += $(dir $(shell python -c 'import numpy.core; print(numpy.core.__file__)'))/include

# PYTHON_LIB += $(shell brew --prefix numpy)/lib

# Uncomment to support layers written in Python (will link against Python libs)

WITH_PYTHON_LAYER := 1

# Whatever else you find you need goes here.

INCLUDE_DIRS := $(PYTHON_INCLUDE) /usr/local/include

LIBRARY_DIRS := $(PYTHON_LIB) /usr/local/lib /usr/lib

# If Homebrew is installed at a non standard location (for example your home directory) and you use it for general dependencies

INCLUDE_DIRS += /usr/local/hdf5/include

LIBRARY_DIRS += /usr/local/hdf5/lib

# Uncomment to use `pkg-config` to specify OpenCV library paths.

# (Usually not necessary -- OpenCV libraries are normally installed in one of the above $LIBRARY_DIRS.)

# USE_PKG_CONFIG := 1

# N.B. both build and distribute dirs are cleared on `make clean`

BUILD_DIR := build

DISTRIBUTE_DIR := distribute

# Uncomment for debugging. Does not work on OSX due to https://github.com/BVLC/caffe/issues/171

# DEBUG := 1

# The ID of the GPU that 'make runtest' will use to run unit tests.

TEST_GPUID := 0

# enable pretty build (comment to see full commands)

Q ?= @

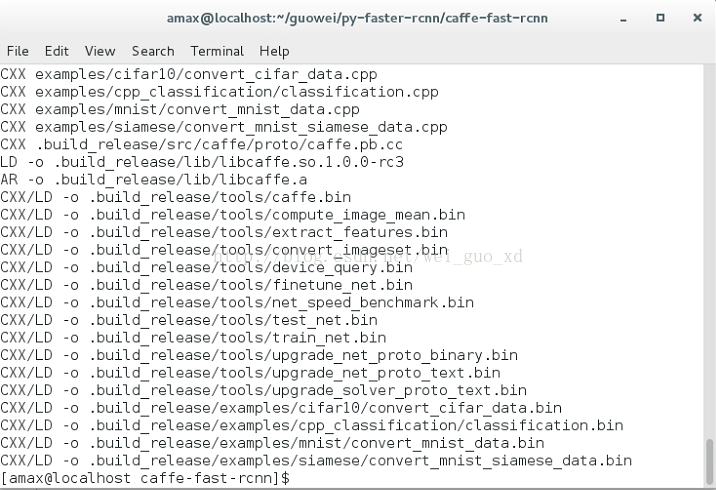
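Before building, it can help to confirm that the settings you meant to enable are really uncommented. The following is a minimal sketch, not part of py-faster-rcnn: `parse_makefile_config` and `check_for_faster_rcnn` are hypothetical helper names, and the parser only understands simple `NAME := value` style lines (it keeps the first assignment it sees and ignores continuation lines). The only check it makes is for `WITH_PYTHON_LAYER := 1`, which the Python layers used later in train.prototxt depend on.

```python
import re


def parse_makefile_config(text):
    """Return {name: value} for uncommented NAME := value / = / ?= / += lines."""
    settings = {}
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#"):
            continue  # skip blanks and commented-out switches
        match = re.match(r"^([A-Za-z_][A-Za-z0-9_]*)\s*[:?+]?=\s*(.*)$", line)
        if match:
            name, value = match.groups()
            # Keep the first assignment; drop a trailing continuation backslash.
            settings.setdefault(name, value.rstrip("\\").strip())
    return settings


def check_for_faster_rcnn(settings):
    """Return a list of likely problems for a Faster R-CNN build."""
    problems = []
    if settings.get("WITH_PYTHON_LAYER") != "1":
        problems.append("WITH_PYTHON_LAYER := 1 is not set")
    return problems


# A shortened sample; in practice read the text from Makefile.config instead.
sample = """
USE_CUDNN := 1
# CPU_ONLY := 1
BLAS := open
WITH_PYTHON_LAYER := 1
INCLUDE_DIRS := $(PYTHON_INCLUDE) /usr/local/include
INCLUDE_DIRS += /usr/local/hdf5/include
"""

settings = parse_makefile_config(sample)
print(settings["BLAS"])
print(check_for_faster_rcnn(settings))
```

To run it against the real file, replace `sample` with `open("Makefile.config").read()`.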

Run: make all -j8

The result is shown in the figure below:

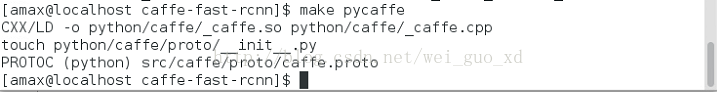

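Run: make pycaffe

The result is shown in the figure below:

Download the Faster R-CNN detectors pre-trained on VOC2007 trainval:

Run:

./data/scripts/fetch_faster_rcnn_models.sh

The downloaded models are shown in the figure:

Run the demo script:

./tools/demo.py

The detection results are shown in the figure below (the script uses VGG16_faster_rcnn_final.caffemodel):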

Download the VOCdevkit training, validation, and test data:

wget http://host.robots.ox.ac.uk/pascal/VOC/voc2007/VOCtrainval_06-Nov-2007.tar

wget http://host.robots.ox.ac.uk/pascal/VOC/voc2007/VOCtest_06-Nov-2007.tar

wget http://host.robots.ox.ac.uk/pascal/VOC/voc2007/VOCdevkit_08-Jun-2007.tar

Extract the archives into the VOCdevkit folder:

tar xvf VOCtrainval_06-Nov-2007.tar

tar xvf VOCtest_06-Nov-2007.tar

tar xvf VOCdevkit_08-Jun-2007.tar

The folder structure is shown in the figure below:

Create a symlink named VOCdevkit2007 that points to VOCdevkit:

cd data

ln -s $VOCdevkit VOCdevkit2007

The resulting layout is shown in the figure below:
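A quick way to confirm the symlink and the extracted data line up is to check for the sub-directories the standard VOC layout contains. This is a small sketch under assumptions: `missing_voc_dirs` is a hypothetical helper, not a py-faster-rcnn function, and the demo builds a throwaway directory instead of touching your real data.

```python
import os
import tempfile

# Sub-directories of VOC<year> found in the standard PASCAL VOC layout.
EXPECTED_SUBDIRS = ("Annotations", "ImageSets/Main", "JPEGImages")


def missing_voc_dirs(devkit_link, year="2007"):
    """Return the expected sub-directories absent under <devkit_link>/VOC<year>."""
    root = os.path.join(devkit_link, "VOC" + year)
    return [d for d in EXPECTED_SUBDIRS
            if not os.path.isdir(os.path.join(root, d))]


# Demo on a temporary directory that deliberately lacks ImageSets/Main.
with tempfile.TemporaryDirectory() as tmp:
    devkit = os.path.join(tmp, "VOCdevkit2007")
    os.makedirs(os.path.join(devkit, "VOC2007", "Annotations"))
    os.makedirs(os.path.join(devkit, "VOC2007", "JPEGImages"))
    demo_missing = missing_voc_dirs(devkit)

print(demo_missing)
```

On a real checkout you would call `missing_voc_dirs("data/VOCdevkit2007")` from the py-faster-rcnn root; an empty list means all three directories were found.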

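Download the models pre-trained on ImageNet:

Run:

./data/scripts/fetch_imagenet_models.sh

The downloaded models are shown in the figure below (ResNet.v2.caffemodel is one I downloaded separately):

Now start training on VOC2007. Run: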

./experiments/scripts/faster_rcnn_end2end.sh [GPU_ID] [NET] [--set ...]

./experiments/scripts/faster_rcnn_end2end.sh 0 ZF pascal_voc   # joint (end-to-end) training

./experiments/scripts/faster_rcnn_alt_opt.sh 0 ZF pascal_voc   # step-by-step (alternating optimization) training

Next, train Faster R-CNN on your own dataset (I use faster_rcnn_end2end.sh to run the training):

First, modify the network definition files:

1. py-faster-rcnn/models/pascal_voc/VGG16/faster_rcnn_end2end/train.prototxt

name: "VGG_ILSVRC_16_layers"

layer {

name: 'input-data'

type: 'Python'

top: 'data'

top: 'im_info'

top: 'gt_boxes'

python_param {

module: 'roi_data_layer.layer'

layer: 'RoIDataLayer'

param_str: "'num_classes': 2" # number of training classes + 1

}

}

layer {

name: 'roi-data'

type: 'Python'

bottom: 'rpn_rois'

bottom: 'gt_boxes'

top: 'rois'

top: 'labels'

top: 'bbox_targets'

top: 'bbox_inside_weights'

top: 'bbox_outside_weights'

python_param {

module: 'rpn.proposal_target_layer'

layer: 'ProposalTargetLayer'

param_str: "'num_classes': 2"

}

}

layer {

name: "cls_score"

type: "InnerProduct"

bottom: "fc7"

top: "cls_score"

param {

lr_mult: 1

}

param {

lr_mult: 2

}

inner_product_param {

num_output: 2

weight_filler {

type: "gaussian"

std: 0.01

}

bias_filler {

type: "constant"

value: 0

}

}

}

layer {

name: "bbox_pred"

type: "InnerProduct"

bottom: "fc7"

top: "bbox_pred"

param {

lr_mult: 1

}

param {

lr_mult: 2

}

inner_product_param {

num_output: 8 # (number of classes + 1) * 4

weight_filler {

type: "gaussian"

std: 0.001

}

bias_filler {

type: "constant"

value: 0

}

}

}

2. py-faster-rcnn/models/pascal_voc/VGG16/faster_rcnn_end2end/test.prototxt

layer {

name: "cls_score"

type: "InnerProduct"

bottom: "fc7"

top: "cls_score"

param {

lr_mult: 1

decay_mult: 1

}

param {

lr_mult: 2

decay_mult: 0

}

inner_product_param {

num_output: 2

weight_filler {

type: "gaussian"

std: 0.01

}

bias_filler {

type: "constant"

value: 0

}

}

}

layer {

name: "bbox_pred"

type: "InnerProduct"

bottom: "fc7"

top: "bbox_pred"

param {

lr_mult: 1

decay_mult: 1

}

param {

lr_mult: 2

decay_mult: 0

}

inner_product_param {

num_output: 8

weight_filler {

type: "gaussian"

std: 0.001

}

bias_filler {

type: "constant"

value: 0

}

}

}
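The numbers edited in the two prototxt files all follow from the class count: `num_classes` and the `cls_score` output are the number of object classes plus one for the background, and the `bbox_pred` output is four box coordinates per class. A small worked sketch of that arithmetic (`head_sizes` is a hypothetical helper written for this illustration):

```python
def head_sizes(object_classes):
    """Compute the prototxt values from a list of object class names (background excluded)."""
    num_classes = len(object_classes) + 1  # +1 for the background class
    return {
        "num_classes": num_classes,               # param_str in both Python layers
        "cls_score_num_output": num_classes,      # one score per class
        "bbox_pred_num_output": 4 * num_classes,  # 4 box coordinates per class
    }


sizes = head_sizes(["aeroplane"])
print(sizes)

# The original 20-class PASCAL VOC setup, for comparison.
voc_sizes = head_sizes(["class_%d" % i for i in range(20)])
print(voc_sizes)
```

With a single object class this gives 2, 2, and 8, matching the values used above; the 20 VOC classes give 21, 21, and 84.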

3. Modify /home/amax/guowei/py-faster-rcnn/lib/datasets/pascal_voc.py

class pascal_voc(imdb):
    def __init__(self, image_set, year, devkit_path=None):
        imdb.__init__(self, 'voc_' + year + '_' + image_set)
        self._year = year
        self._image_set = image_set
        self._devkit_path = self._get_default_path() if devkit_path is None \
                            else devkit_path
        self._data_path = os.path.join(self._devkit_path, 'VOC' + self._year)
        self._classes = ('__background__',  # always index 0
                         'aeroplane')
        self._class_to_ind = dict(zip(self.classes, xrange(self.num_classes)))
        self._image_ext = '.jpg'

Change self._classes to your own classes plus the background class.
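To see what the class tuple and the class-to-index mapping end up looking like, here is a standalone sketch. It is written for Python 3 (so `range` replaces the `xrange` used in the repository code), and `build_class_index` is a hypothetical helper. Normalizing names to lowercase is an assumption on my part: the annotation loader in pascal_voc.py appears to lower-case the name read from each XML file, so verify this against your checkout.

```python
def build_class_index(object_classes):
    """Build the classes tuple (background first) and the name -> index mapping."""
    # Assumption: names are matched in lowercase, so normalize them here.
    cleaned = tuple(name.lower().strip() for name in object_classes)
    classes = ("__background__",) + cleaned  # background is always index 0
    class_to_ind = dict(zip(classes, range(len(classes))))
    return classes, class_to_ind


classes, class_to_ind = build_class_index(["aeroplane"])
print(classes)
print(class_to_ind)
```

The length of `classes` is the same `num_classes` value that goes into the prototxt files.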

4. Modify /home/amax/guowei/py-faster-rcnn/lib/datasets/factory.py

for year in ['2007', '2012', '2001', '2002', '2006', '5000']:
    for split in ['train', 'val', 'trainval', 'test']:
        name = 'voc_{}_{}'.format(year, split)
        __sets[name] = (lambda split=split, year=year: pascal_voc(split, year))

My dataset is named VOC5000, so I added 5000 to the list of years.
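The `lambda split=split, year=year:` form in factory.py matters: default arguments freeze the current loop values, whereas a plain `lambda:` would look the variables up only when called and every entry would return the last combination. The sketch below illustrates the difference; `fake_pascal_voc` is a hypothetical stand-in for the real `pascal_voc` class and only returns the dataset name.

```python
def fake_pascal_voc(split, year):
    """Hypothetical stand-in for datasets.pascal_voc; returns the imdb name only."""
    return "voc_{}_{}".format(year, split)


def build_registries(years, splits):
    """Build two registries: one with bound defaults, one with late-binding lambdas."""
    bound, late = {}, {}
    for year in years:
        for split in splits:
            name = "voc_{}_{}".format(year, split)
            # Defaults capture the values of this iteration (what factory.py does).
            bound[name] = (lambda split=split, year=year: fake_pascal_voc(split, year))
            # No defaults: the variables are read when the lambda is called.
            late[name] = (lambda: fake_pascal_voc(split, year))
    return bound, late


bound, late = build_registries(["2007", "5000"], ["trainval", "test"])
print(bound["voc_2007_trainval"]())  # voc_2007_trainval
print(late["voc_2007_trainval"]())   # voc_5000_test (last loop values)
```

Because pascal_voc.py builds the data path as 'VOC' + year, the year string '5000' also means the data is expected in a folder named VOC5000 inside the devkit directory.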

5. Modify /home/amax/guowei/py-faster-rcnn/experiments/scripts/faster_rcnn_end2end.sh

case $DATASET in

pascal_voc)

TRAIN_IMDB="voc_5000_trainval"

TEST_IMDB="voc_5000_test"

PT_DIR="pascal_voc"

ITERS=4000

;;

Change the training and test datasets to your own, and set the number of iterations to 4000.

Start training by running the following command:

./experiments/scripts/faster_rcnn_end2end.sh 0 VGG16 pascal_voc

My numpy had been updated to the latest version. If you run into errors related to data types, refer to the following link:
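As a rough illustration of this class of error (an assumption about a typical cause, not taken from the link above): newer numpy releases reject float values where an integer index or array size is expected, so a count computed as a float has to be converted to int first. The sketch uses a plain Python list, which enforces the same rule, so that it runs without numpy. `as_count`, `fg_fraction`, and `batch_size` are hypothetical names chosen for the example.

```python
def as_count(value):
    """Convert a float holding a whole number into an int usable as an index or size."""
    as_int = int(round(value))
    if abs(as_int - value) > 1e-6:
        raise ValueError("expected a whole number, got %r" % (value,))
    return as_int


rois = list(range(128))
fg_fraction, batch_size = 0.25, 128
fg_per_image = fg_fraction * batch_size  # 32.0, a float rather than an int

try:
    rois[:fg_per_image]  # float slice index is rejected
    float_index_failed = False
except TypeError:
    float_index_failed = True

selected = rois[:as_count(fg_per_image)]  # works after the explicit conversion
print(float_index_failed, len(selected))
```

If the traceback points at a line that slices, indexes, or reshapes with a computed value, casting that value to int at the point where it is computed is usually the smallest fix.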

The experimental results are shown in the figure below:

Using VGG16 with 4000 iterations, I obtained an accuracy of 78.8%.

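This document describes in detail how to configure Caffe in a Python environment and how to train a deep learning model for object detection with the py-faster-rcnn framework. By following the steps, readers can apply the algorithm to their own datasets.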
