Artificial intelligence (AI) is built on algorithms

8 ways to infuse trust into your AI experiences

At IBM, we’re building software solutions that help our users make smarter decisions faster. In the world of data and artificial intelligence (AI), it all comes down to designing products our users can trust enough to help them make those important decisions.

This focus on trust goes beyond data security and validation; it’s about helping our users understand their data, providing relevant recommendations when they need them, and empowering them to create solutions they can be confident in.

As we designed our end-to-end AI platform, IBM Cloud Pak for Data, as well as a diverse set of AI offerings and solutions in our IBM Watson portfolio, we focused on the following 8 principles for establishing trust within AI experiences.

Explainability

At IBM, we believe that good design does not sacrifice transparency and that imperceptible AI is not ethical AI. When designing for AI, you should never hide the decision-making process, and your users should always be aware that they are interacting with an AI. To do this, you need to bring explainability into every AI experience so that your users understand the conclusions and recommendations made by the AI.

We’ve established a set of ethical guidelines related to designing for AI called Everyday Ethics for AI that outlines fundamental ways for you to bring explainability into your AI experiences.

Allow for questions. A user should be able to ask why an AI is doing what it’s doing on an ongoing basis. This should be clear and upfront in the user interface.

Decision-making processes must be reviewable, especially if the AI is working with highly sensitive personal data such as personally identifiable information, protected health information, and/or biometric data.

When an AI is assisting users with making any highly sensitive decisions, the AI must be able to provide them with a sufficient explanation of recommendations, the data used, and the reasoning behind the recommendations.

Teams should have access to a record of an AI’s decision processes and be amenable to verification of those decision processes.
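
As a rough illustration of the guidelines above, here is a minimal sketch of what a reviewable decision record might look like. The `DecisionRecord` and `DecisionLog` names and their fields are hypothetical, chosen only for this example; they are not part of any IBM product or of Everyday Ethics for AI.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class DecisionRecord:
    """One reviewable AI recommendation (hypothetical structure)."""

    recommendation: str
    data_used: list[str]
    reasoning: str
    created_at: datetime = field(
        default_factory=lambda: datetime.now(timezone.utc)
    )

    def explain(self) -> str:
        """Answer the user's question: why did the AI recommend this?"""
        sources = ", ".join(self.data_used)
        return (
            f"Recommended: {self.recommendation}. "
            f"Based on: {sources}. "
            f"Reasoning: {self.reasoning}"
        )


class DecisionLog:
    """Append-only record of decisions that a team can review later."""

    def __init__(self) -> None:
        self._records: list[DecisionRecord] = []

    def record(self, decision: DecisionRecord) -> DecisionRecord:
        self._records.append(decision)
        return decision

    def review(self) -> list[DecisionRecord]:
        # Return a copy so reviewers cannot alter the stored history.
        return list(self._records)
```

Keeping the recommendation, the data used, and the reasoning together in one record means the same object can back both the in-product "why?" answer and a later team review.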

Fairness

Humans are inherently biased, and since humans build AI systems, there’s a pretty good chance that human bias could be embedded into the systems we create. It’s our responsibility to minimize algorithmic bias through continuous research and data collection that represents a diverse population. Fairness, like explainability, should be standard practice when it comes to infusing products and services with AI. This means that whenever sensitive data is involved, you should design AI experiences that not only minimize bias but also help your users do the same. You can see this in the bias detector within Watson OpenScale, which alerts users to potential bias in data sets.

There’s also some great work being done by AI Fairness 360, a team of developers who have built an open-source toolkit to help teams examine, report, and mitigate discrimination and bias in their machine learning models. The best part? You can use these metrics and datasets to start detecting bias in your own AI experiences today.
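
To give a feel for what a bias metric measures, here is a small sketch of two widely used group-fairness metrics, statistical parity difference and disparate impact, written in plain Python. It does not use the AI Fairness 360 API; the function names and the sample data are assumptions made for this example.

```python
def favorable_rate(outcomes):
    """Fraction of favorable outcomes in a list of 0/1 labels (1 = favorable)."""
    return sum(outcomes) / len(outcomes)


def statistical_parity_difference(unprivileged, privileged):
    """Difference in favorable rates; 0 means both groups fare equally."""
    return favorable_rate(unprivileged) - favorable_rate(privileged)


def disparate_impact(unprivileged, privileged):
    """Ratio of favorable rates; 1 means both groups fare equally.

    Assumes the privileged group has at least one favorable outcome,
    otherwise the ratio is undefined.
    """
    return favorable_rate(unprivileged) / favorable_rate(privileged)


# Made-up model decisions (1 = approved) for two groups of applicants.
privileged_group = [1, 1, 1, 0, 1, 1, 0, 1]      # 6 of 8 approved
unprivileged_group = [1, 0, 0, 1, 0, 0, 1, 0]    # 3 of 8 approved

print(statistical_parity_difference(unprivileged_group, privileged_group))  # -0.375
print(disparate_impact(unprivileged_group, privileged_group))               # 0.5
```

A disparate impact well below 1, as in this made-up data, is the kind of signal a bias detector would surface to the user. A ratio of 0.8 (the "four-fifths rule") is a commonly cited cutoff, though the right threshold depends on the context.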

Voice and Tone

Walking the voice and tone tightrope is a real challenge for anybody designing or writing for AI. It’s all about finding the balance between too technical and overly simplified. The language that you use within your experiences can go a long way when it comes to building trust with your users. We’ve found that it is best to be succinct and value-driven, and use straightforward language. It’s equally important that you don’t personify the AI because…it isn’t a person. At IBM, this means paying close attention to the language we use when our users are directly interacting with Watson. For example, instead of saying “What can I help you with?”, the Watson avatar should lead with something personal and user-focused like, “What do you want help with next?”

Consistency

If you have a suite of products, you expect the same key commands or icons to behave the same way as you move between products. Well, the same goes for AI experiences.

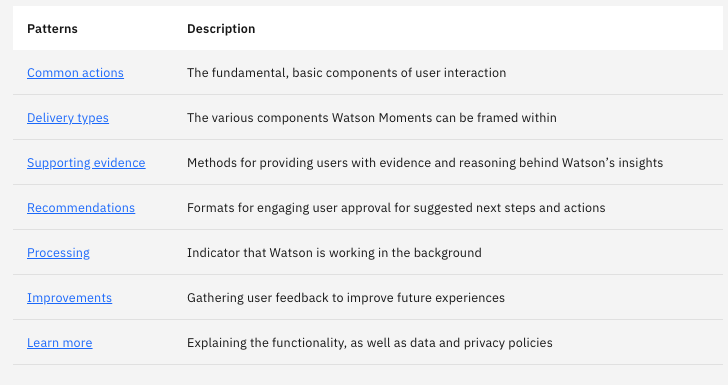

When we’re designing with AI we should intentionally design consistent experiences of common elements from product to product. To do this, you can define and leverage AI design patterns. We’ve established universal patterns that should be applied to any moment where Watson is providing guidance or insight. This consistent look and feel ensures that your users aren’t having to relearn a new language every time they open a product with AI capabilities.

Predictability

Predictability is established through consistency. As you continue to deliver these transparent and easily recognizable AI moments, you’ll get to the point where your users grow accustomed to AI working alongside them. Ultimately, the need to overtly highlight these experiences will diminish because your users will start to understand the possibilities and limits of AI. When you iterate on your experiences, always consider the future and how your users’ understanding of and comfort with AI will evolve over time. It’s your job to guide them along the path toward AI maturity, meet them where they’re at, and avoid as many unknowns and surprises as possible.

Learnability

To continuously educate your users and meet them where they’re at along their journey to AI, we recommend leaning on the principles of progressive disclosure. As designers, it’s our job to account for our users’ needs and serve up relevant guidance or content when they need it. We’ve found that most users crave a high-level understanding of what’s going on, but not all of them want to delve into the mechanics of AI. From in-product guidance to expert-level documentation, be sure to consider the moments where your users might need to dive deeper and those times when the complexity might just be too much.

Clarity

When you’re designing for trust within AI experiences, it’s important to think about the unique ways AI can be used to help your users accomplish their goals. One way to do this is to think about clarity. Ask yourself: “How can AI help our users see beyond the obvious?” and on the design side: “What do we need to do to make sure everything within this AI experience is clear and consumable?” Oftentimes, this all comes down to seeing beyond the obvious and translating complex insights into plain language.

At IBM, this sense of clarity is extremely relevant within Cognos Analytics, an analytics experience in which our users explore, visualize, and share insights from their data. With a little help from AI, advanced pattern detection points out interesting relationships our users might not have known were there. And every visualization is accompanied by statistical insights that are presented in plain language.

Confidence

In the world of AI and machine learning, accuracy is key. As you design solutions, always be sure to showcase accuracy and relevancy, so your users can clearly understand how confident the model is in the prediction it made. For a model that contains two output classes (cat vs. tiger) a confidence score of 51% is slightly better than flipping a coin, but a confidence score of 99% indicates that the model is very certain in the judgment that is provided. Knowing the model’s confidence will help your users gauge how much trust they should place in the recommendation.
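
To make the cat vs. tiger example concrete, here is a minimal sketch of how a product might turn a model’s class probabilities into a label that users can read at a glance. The function names and the 60% and 90% thresholds are illustrative assumptions, not values from any IBM product.

```python
def top_prediction(probabilities):
    """Return (label, confidence) for the most probable class."""
    label = max(probabilities, key=probabilities.get)
    return label, probabilities[label]


def describe_confidence(confidence, low=0.6, high=0.9):
    """Map a confidence score to a plain-language band.

    The default thresholds are assumptions chosen for illustration.
    """
    if confidence >= high:
        return "high"
    if confidence >= low:
        return "moderate"
    return "low"


def summarize(probabilities):
    """Build the text shown next to a prediction."""
    label, confidence = top_prediction(probabilities)
    band = describe_confidence(confidence)
    return f"{label} ({confidence:.0%} confidence, {band})"


print(summarize({"cat": 0.51, "tiger": 0.49}))  # cat (51% confidence, low)
print(summarize({"cat": 0.01, "tiger": 0.99}))  # tiger (99% confidence, high)
```

Showing the score next to a plain-language band lets users who want the number see it, while everyone else can still judge how much weight to give the recommendation.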

Don’t do it alone

When it comes to delivering AI experiences, or any experience for that matter, a never-ending commitment to co-creation is the key to creating something that your users will trust. This means working alongside your users to identify and design for their real needs. At IBM, we’ve developed a robust user research practice that’s centered around co-creation with our users.

And the only way to successfully guide your users along their journey to AI is through collaboration, innovation, and trust.

Arin Bhowmick (@arinbhowmick) is Vice President and Chief Design Officer, IBM Cloud, Data and AI, based in San Francisco, California. The above article is personal and does not necessarily represent IBM’s positions, strategies or opinions.

Translated from: https://medium.com/design-ibm/designing-for-ai-trust-ae0342d2b270
