“Our intelligence is what makes us human, and AI is an extension of that quality”. -Yann LeCun, Professor at NYU

Introduction to Generative AI and Markov Chains

Generative AI is a popular topic in the field of Machine Learning and Artificial Intelligence, whose task, as the name suggests, is to generate new data.

There are quite a few ways to train such AI models, for example with Recurrent Neural Networks, Generative Adversarial Networks, or Markov Chains.

In this article, we are going to look at Markov Chains and understand how they work. We won’t dive deep into the mathematics behind them, as this article is simply meant to get you comfortable with the concept of Markov Chains.

Markov Chains are models that describe a sequence of possible events in which the probability of the next event depends only on the present state the working agent is in.
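
Formally, this "depends only on the present state" condition is called the Markov property. If $X_n$ denotes the state at step $n$ (notation introduced here for illustration), the probability of the next state given the entire history equals its probability given only the current state:

$$P(X_{n+1} = x \mid X_n = x_n, X_{n-1} = x_{n-1}, \dots, X_0 = x_0) = P(X_{n+1} = x \mid X_n = x_n)$$

In the text-generation example below, a state is simply the current word.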

This may sound confusing, but it’ll become much clearer as we go along in this article. We will be covering the following topics:

- Concept of Markov Chains

- Application of Markov Chains in Generative AI

- Limitations of Markov Chains

Concept of Markov Chains

A Markov Chain model predicts a sequence of datapoints following a given input. The generated sequence is built one element at a time, and each element is chosen according to its probability of occurring immediately after the preceding data. The length of the input sequence (how many preceding datapoints the model considers) depends on the order of the Markov Chain, which is explained later in this article.

To explain it simply, let’s take the example of a Text Generation AI. This AI can construct sentences if you give it a test word and specify the number of words the sentence must contain.

Before going further, let’s first understand how a Markov Chain model for text generation is designed. Suppose you want to make an AI that generates stories in the style of a certain author. You would start by collecting a bunch of stories by this author. Your training code will read this text and form a vocabulary, i.e., a list of the unique words used in the entire text.
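
Here is a minimal sketch of the vocabulary step. It assumes naive tokenization (lowercase the text, then split on whitespace), whereas a real project would likely use a proper tokenizer. `build_vocabulary` is a hypothetical helper name and does not come from the original article.

```python
def build_vocabulary(text: str) -> list[str]:
    """Return the unique words of `text`, in order of first appearance."""
    words = text.lower().split()       # naive tokenization: lowercase, split on whitespace
    return list(dict.fromkeys(words))  # dicts keep insertion order, so this dedupes stably


corpus = "the cat sat on the mat and the cat slept"
print(build_vocabulary(corpus))
# ['the', 'cat', 'sat', 'on', 'mat', 'and', 'slept']
```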

After this, a key-value pair is created for each word. The key is the word itself, and the value is a list of all words that have occurred immediately after it. This entire collection of key-value pairs is basically your Markov Chain model.
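
In Python, this collection of key-value pairs maps naturally onto a dictionary. The minimal sketch below uses the same naive whitespace tokenization assumption as before. The helper name `build_chain` is illustrative only.

```python
from collections import defaultdict


def build_chain(text: str) -> dict[str, list[str]]:
    """Map each word to the list of words that immediately follow it in `text`.

    Duplicates are kept on purpose: a word that follows the key twice
    appears twice, so picking uniformly from the list later reproduces
    the observed frequencies.
    """
    words = text.lower().split()
    chain = defaultdict(list)
    # zip pairs every word with its successor; the last word has no successor
    for current, nxt in zip(words, words[1:]):
        chain[current].append(nxt)
    return dict(chain)


model = build_chain("the cat sat on the mat and the cat slept")
print(model["the"])  # ['cat', 'mat', 'cat']
```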

Now, let’s get on with our example of a Text Generation AI. Here’s a snippet of an example model.

This is just a snippet. For the sake of simplicity, I have shown key-value pairs for only 4 words.

Now, you pass it a test word, say “the”. As you can see from the image, the words that have appeared after “the” are “new”, “apple”, “dog”, “cat”, “chair” and “hair”. Since each of them has occurred exactly once, any of them is equally likely to appear right after “the”.
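
The equal chance comes from counting. Each successor's probability is the number of times it appears in the key's list, divided by the list's length. The small sketch below applies this to the six words listed above. `next_word_probabilities` is a hypothetical helper.

```python
from collections import Counter
from fractions import Fraction


def next_word_probabilities(successors: list[str]) -> dict[str, Fraction]:
    """Turn a key's list of observed successors into exact probabilities."""
    counts = Counter(successors)
    total = len(successors)
    return {word: Fraction(count, total) for word, count in counts.items()}


followers_of_the = ["new", "apple", "dog", "cat", "chair", "hair"]
print(next_word_probabilities(followers_of_the))
# each of the six words maps to Fraction(1, 6)
```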

The code will randomly pick a word from this list. Let’s say it picked “apple”. Now you’ve got part of a sentence: “the apple”. The exact same process is then repeated on the word “apple” to get the next word. Let’s say it is “is”.

The partial sentence you now have is “the apple is”. Similarly, the process is run on the word “is”, and so on, until you get a sentence containing your desired number of words (which is the number of times the loop runs). Here’s a simplified chart of it all.
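
The whole generation loop fits in a few lines. This sketch assumes the model is a dictionary that maps each word to the list of words seen after it. The toy model is hypothetical and built only from the words in this example, and `generate` is an illustrative name.

```python
import random


def generate(model: dict[str, list[str]], seed: str, num_words: int, rng=random) -> str:
    """Generate up to `num_words` words (seed included), starting from `seed`."""
    sentence = [seed]
    current = seed
    while len(sentence) < num_words:
        successors = model.get(current)
        if not successors:  # dead end: this word was never followed by anything
            break
        current = rng.choice(successors)  # uniform pick; duplicates act as weights
        sentence.append(current)
    return " ".join(sentence)


# Hypothetical toy model using only the words from the example above
toy_model = {
    "the": ["new", "apple", "dog", "cat", "chair", "hair"],
    "apple": ["is"],
    "is": ["delicious"],
}
print(generate(toy_model, "the", 4))  # e.g. "the apple is delicious"
```

Passing a seeded `random.Random` instance as `rng` makes runs reproducible. The early `break` handles a word that never had a successor in the training text, which the simple description above does not cover.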

As you can see, our output from the test word “the” is “the apple is delicious”. A sentence like “the chair has juice” could also be formed, assuming “has” is one of the values in the list for the key “chair”.

How sensible the generated sentences are depends directly on the amount of data you used for training. The more data you have, the larger the vocabulary your model builds and the more word-to-word transitions it observes.

One important thing to note is that the more often a particular word follows a certain test word in your training data, the more likely it is to follow that word in your final output.

For example, suppose the phrase “the apple” occurs 100 times in your training data and “the chair” occurs 50 times. In your final output, for the test word “the”, “apple” then has a higher probability of occurring than “chair”.

This follows from basic probability rules: each word’s chance of being picked is proportional to how often it was observed after the test word.
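
As a worked example, assume for simplicity that “apple” and “chair” are the only words ever observed after “the”. Each word’s probability is its count divided by the total count:

$$P(\text{apple} \mid \text{the}) = \frac{100}{100 + 50} = \frac{2}{3}, \qquad P(\text{chair} \mid \text{the}) = \frac{50}{100 + 50} = \frac{1}{3}$$

So “apple” is twice as likely as “chair”. If every occurrence is stored in the key’s list, duplicates included, then picking uniformly at random from that list yields exactly these odds.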

Now, let’s look at a term we came across earlier in this section: the order of a Markov Chain.

Order of a Markov Chain

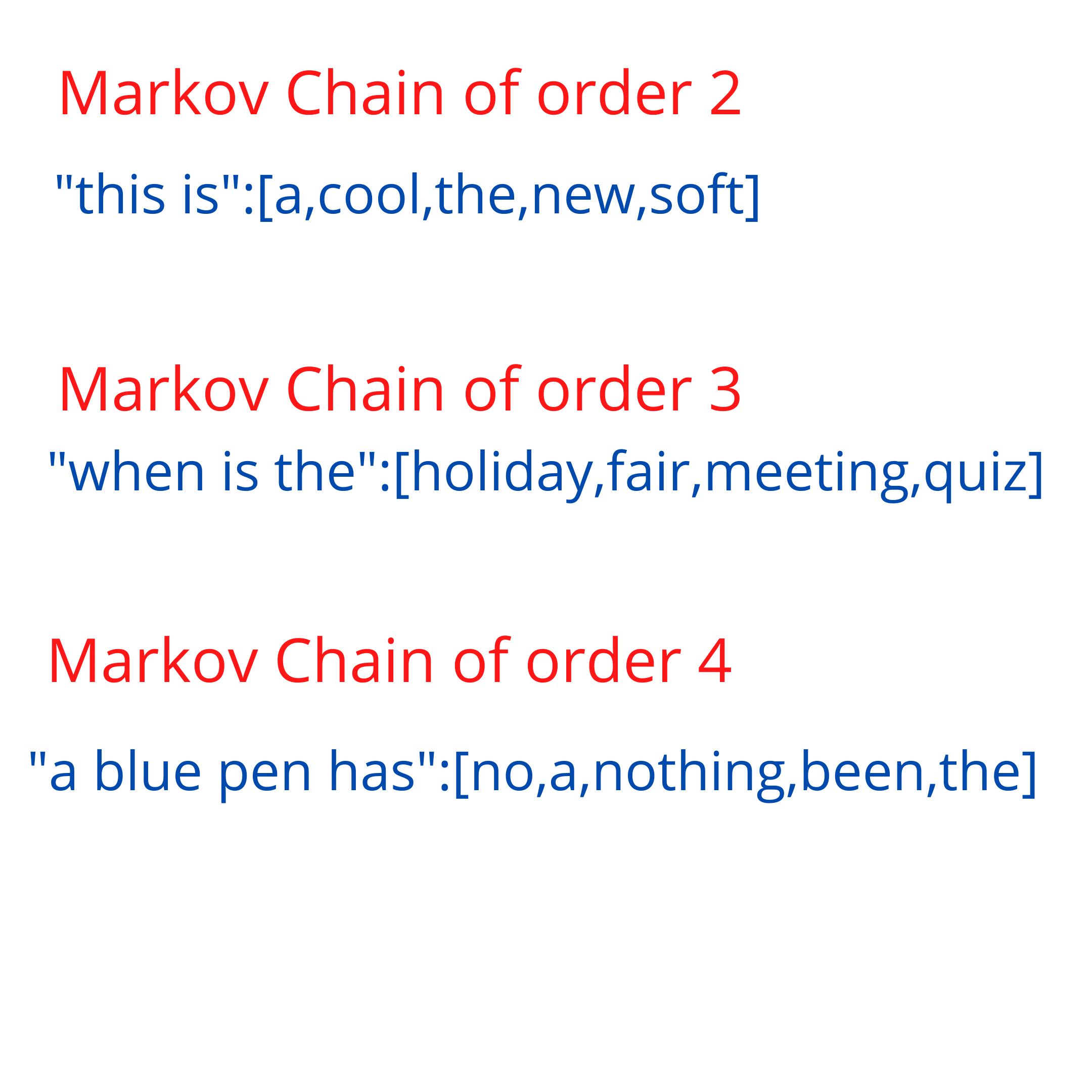

The order of a Markov Chain is basically how much “memory” your model has. For example, in a Text Generation AI, your model could look at, say, 4 words and then predict the next word. This “4” is the “memory” of your model, or the order of your Markov Chain.

The design of your Markov Chain model depends on this order. Let’s take a look at some snippets from models of different orders.
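
One way to picture this is the minimal sketch below, which again assumes naive whitespace tokenization. In an order-n model, each key becomes a tuple of the n most recent words instead of a single word. `build_chain_of_order` is a hypothetical name. With `order=1`, it reduces to the word-to-successors dictionary described earlier, except that keys are one-element tuples.

```python
from collections import defaultdict


def build_chain_of_order(text: str, order: int) -> dict[tuple[str, ...], list[str]]:
    """Map each run of `order` consecutive words to the words that follow it."""
    words = text.lower().split()
    chain = defaultdict(list)
    for i in range(len(words) - order):
        key = tuple(words[i : i + order])    # the model's "memory": the last `order` words
        chain[key].append(words[i + order])  # the word that came right after that run
    return dict(chain)


text = "the cat sat on the mat and the cat slept"
print(build_chain_of_order(text, 1)[("the",)])        # ['cat', 'mat', 'cat']
print(build_chain_of_order(text, 2)[("the", "cat")])  # ['sat', 'slept']
```

Higher orders tend to give more coherent output. With a small corpus, however, each longer key is seen only a few times, so the output tends to copy the training text more closely.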

This is the basic concept and working of Markov Chains.

Let’s take a look at some ways you can apply Markov Chains in your Generative AI projects.

Application of Markov Chains in Generative AI

“Talking to yourself afterwards is ‘The Road To Success’. Discussing the Challenges in the room makes you believe in them after a while”

—Generated by TweetMakersAI

Markov Chains are a great way to start writing ML code. Training is quite fast and light enough for an average CPU, since it amounts to a single pass over the text that records which words follow which.

Although you won’t be able to build complex projects such as the face generation made by NVIDIA, there’s still a lot you can do with Markov Chains in text generation.

They work well for text generation because not much effort is required to make the sentences make sense. The rule of thumb (as with most ML algorithms) is that the more relevant data you have, the better your results will be.

Here are a few applications of text generation with Markov Chains:

Chat Bot: With a huge dataset of conversations about a particular topic, you could develop your own chatbot using Markov Chains. Although Markov Chains require a seed (test word) to begin generating text, various NLP techniques can be used to extract the seed from the user’s message. Neural Networks undoubtedly work best for chatbots, but Markov Chains are a good way for a beginner to get familiar with both concepts: Markov Chains and chatbots.

Story Writing: Say your language teacher asked you to write a story. Wouldn’t it be fun to come up with a story inspired by your favourite author? This is one of the easiest things to do with Markov Chains. You can gather a large dataset of all the stories and books written by an author (or by several authors, if you want to mix different writing styles) and train a Markov Chain model on them. You will be surprised by the results it generates. It is a fun activity that I highly recommend for Markov Chain beginners.

There are countless things you can do in Text Generation with Markov Chains if you use your imagination.

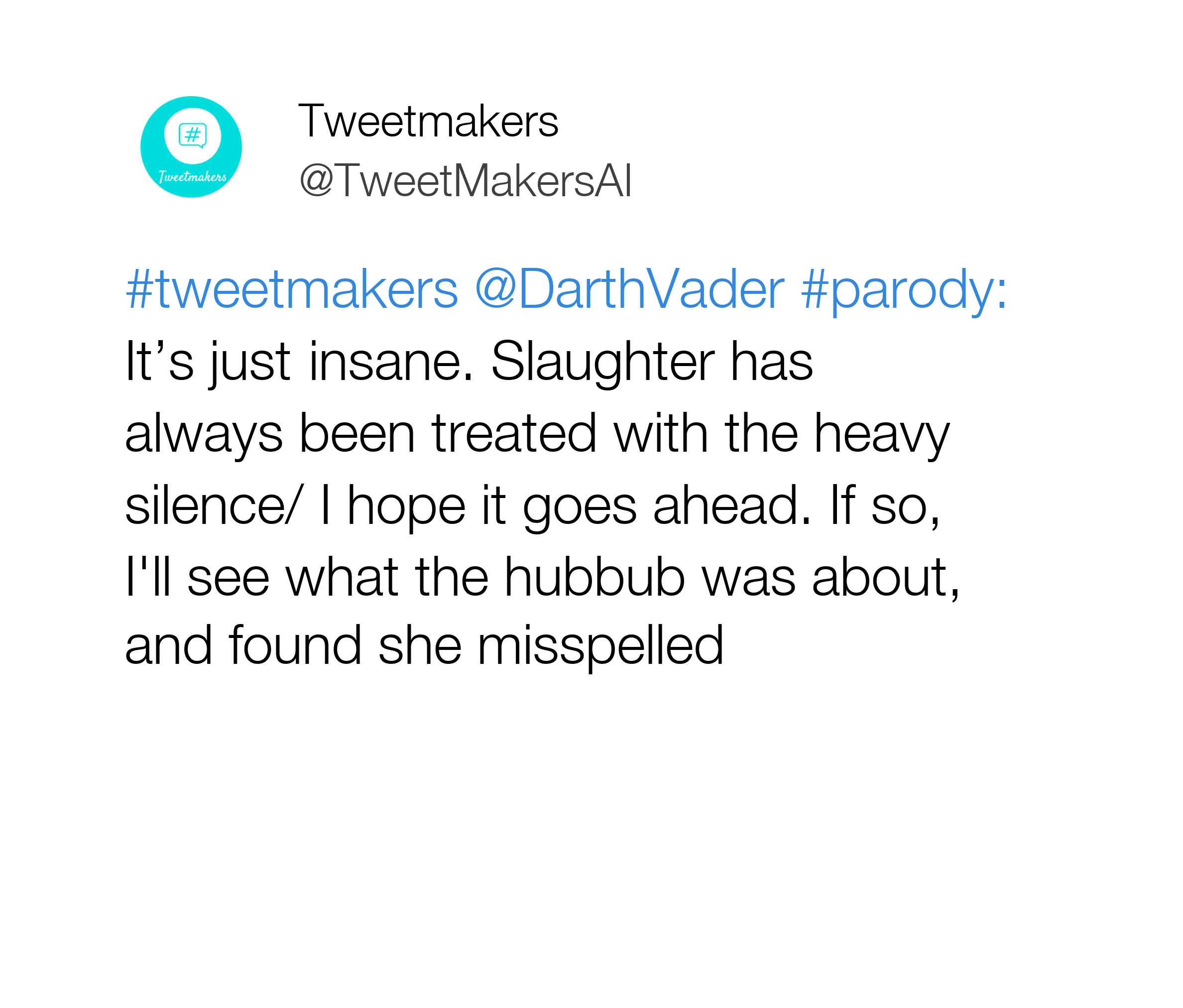

A fun project that uses Generative AI is TweetMakers, a site that generates fake tweets in the style of certain Twitter users.

As an AI enthusiast and a meme lover, I believe meme creation is going to be a major application of Generative AI. Check out my blog about the sites that have already started doing so.

Although there’s a lot you can do with Markov Chains, they do have certain limitations. Let’s have a look at a few of them.

Limitations of Markov Chains

In text generation, Markov Chains can play a huge role. However, they come with some restrictions:

The seed should exist in the training data: the seed (test word or phrase) that you pass in order to generate a sentence must exist in the key-value pair collection of your Markov model. This is because the chain picks the next word based on which words have occurred after the seed, and how often. A word never seen during training has no list of successors to pick from. This is the reason most Text Generation AI bots don’t take any user input and instead select a seed from the existing data.
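
The sketch below shows that behaviour, assuming the word-to-successors dictionary described earlier. The user's seed is used only if it is a key in the model. Otherwise, a seed is drawn from the training data. `choose_seed` and the toy model are hypothetical.

```python
import random
from typing import Optional


def choose_seed(model: dict[str, list[str]], user_seed: Optional[str] = None, rng=random) -> str:
    """Return the user's seed if the model knows it, else a random key from the model."""
    if user_seed is not None and user_seed.lower() in model:
        return user_seed.lower()
    # An unseen word has no entry, so there would be nothing to sample after it;
    # fall back to a word that does appear as a key in the training data.
    return rng.choice(list(model))


# Hypothetical toy model: each key maps to the words seen right after it
model = {"the": ["apple", "chair"], "apple": ["is"], "is": ["delicious"]}
print(choose_seed(model, "The"))    # prints: the
print(choose_seed(model, "zebra"))  # prints a random key: the, apple, or is
```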

Might Generate Incomplete Sentences: Markov Chains cannot understand whether a sentence is complete or not. They simply generate one word per loop iteration, for as many iterations as you run. For example, a sentence like “This is a new” can be generated, which is clearly incomplete. Although the chain itself cannot tell you whether a sentence is complete, various NLP techniques can be used to get a complete sentence as output.
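
One cheap stand-in is to cut the generated text after its last sentence-ending word. This is a simple heuristic, not one of the NLP techniques mentioned above. It assumes punctuation stays attached to words during training, as it does with plain whitespace splitting, so a token like “delicious.” marks the end of a sentence. `trim_to_sentence` is a hypothetical helper.

```python
from typing import Optional


def trim_to_sentence(generated: str, enders: str = ".!?") -> Optional[str]:
    """Cut generated text after its last sentence-ending word.

    Returns None if no word ends a sentence, so the caller can
    generate again instead of showing a fragment.
    """
    words = generated.split()
    for i in range(len(words) - 1, -1, -1):  # scan backwards for the last ender
        if words[i][-1] in enders:
            return " ".join(words[: i + 1])
    return None


print(trim_to_sentence("the apple is delicious. this is a new"))
# the apple is delicious.
print(trim_to_sentence("this is a new"))
# None
```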

Markov Chains are a basic method for text generation. Although their output can be used directly for various purposes, you will inevitably have to do some post-processing on it to achieve complex tasks.

Conclusion

Markov Chains are a great way to get started with Generative AI, with a lot of potential to accomplish a wide variety of tasks.

Generative AI is a popular topic in ML/AI, so anyone looking to build a career in this field would do well to get into it. For absolute beginners, Markov Chains are the way to go.

I hope this article was helpful and you enjoyed it :)

Source: https://medium.com/swlh/machine-learning-algorithms-markov-chains-8e62290bfe12
