An Explanation for eXplainable AI (XAI)

Artificial intelligence (AI) has been integrated into every part of our lives. A chatbot, enabled by advanced natural language processing (NLP), pops up to assist you while you surf a webpage. A voice recognition system can authenticate you in order to unlock your account. A drone or driverless car can carry out operations or reach areas that are impossible for humans. Machine learning (ML) predictions are used in all kinds of decision making. A broad range of industries, such as manufacturing, healthcare, finance, law enforcement, and education, rely more and more on AI-enabled systems.

However, most people do not know how AI systems make their decisions. Many algorithms, though they achieve a high level of precision, do not make it easy to understand how a recommendation is made. This is especially true of deep learning models. As humans, we must be able to fully understand how decisions are made so that we can trust the decisions of AI systems. We need ML models to function as expected, to produce transparent explanations, and to make their inner workings visible. Explainable AI (XAI) is an important research area and has been guiding the development of AI. It enables humans to understand models so that they can effectively manage the benefits AI systems provide, while maintaining a high level of prediction accuracy.

Explainable AI answers the following questions to build users' trust in AI systems:

- Why does the model predict that result?

- What are the reasons for a prediction?

- What is the prediction interval?

- How does the model work?

In this article I am going to walk you through the advancements that address the above questions.

Two emerging trends in AI are “Explainable AI” and “Differential Privacy”. On Explainable AI, Dataman has published a series of articles including “An Explanation for eXplainable AI”, “Explain Your Model with the SHAP Values”, “Explain Your Model with LIME”, and “Explain Your Model with Microsoft’s InterpretML”. Differential Privacy is an important research branch in AI. It has brought a fundamental change to AI and continues to reshape AI development. On Differential Privacy, Dataman has published “You Can Be Identified by Your Netflix Watching History” and “What Is Differential Privacy?”, with more to come in the future.

(A) Explainable AI with SHAP

To provide model transparency, Lundberg and Lee (2016) introduced SHAP (SHapley Additive exPlanations). The article “Explain Your Model with the SHAP Values” offers the following analogy:

Is your highly-trained model easy to understand? If you ask me to swallow a black pill without telling me what’s in it, I certainly don’t want to swallow it. The interpretability of a model is like a label on a drug bottle. We need to make our effective pill transparent for easy adoption.

SHAP offers three salient propositions:

The first one is global interpretability — the collective SHAP values can show how much each predictor contributes, either positively or negatively, to the target variable. This is like the variable importance plot but it is able to show the positive or negative relationship for each variable with the target (see the SHAP value plot below).

The second one is local interpretability — each observation gets its own set of SHAP values (see the individual SHAP value plot below). This greatly increases its transparency. We can explain why a case receives its prediction and the contributions of the predictors. Traditional variable importance algorithms only show the results across the entire population but not on each individual case. The local interpretability enables us to pinpoint and contrast the impacts of the factors.

The third one is that SHAP values can be calculated for any tree-based model, while other methods use linear regression or logistic regression models as surrogate models.
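
As a minimal sketch of both kinds of interpretability, consider the simplest case in which SHAP values have a closed form: a linear model with independent features, where the SHAP value of feature i in one observation is w_i * (x_i - mean of x_i). Each row gets its own SHAP values (local interpretability), and averaging their absolute values per feature gives a global ranking. The weights, feature names, and data below are hypothetical; for tree-based or other models, the shap library estimates these values rather than using this formula.

```python
from statistics import mean

# Hypothetical linear model: prediction = BIAS + sum(w_i * x_i).
# Feature names, weights, and data are made up for illustration only.
FEATURES = ["alcohol", "volatile_acidity", "sulphates"]
WEIGHTS = [0.30, -1.20, 0.80]
BIAS = 2.0


def predict(row):
    return BIAS + sum(w * x for w, x in zip(WEIGHTS, row))


def linear_shap(data):
    """SHAP values for a linear model, assuming independent features:
    phi_i = w_i * (x_i - mean of feature i over the background data)."""
    means = [mean(col) for col in zip(*data)]
    base_value = predict(means)  # for a linear model this equals the average prediction
    shap_rows = [[w * (x - m) for w, x, m in zip(WEIGHTS, row, means)] for row in data]
    return base_value, shap_rows


data = [
    [9.5, 0.70, 0.55],
    [12.8, 0.30, 0.85],
    [10.2, 0.50, 0.65],
    [11.0, 0.45, 0.75],
]
base, shap_rows = linear_shap(data)

# Local interpretability: each row's SHAP values add up to (prediction - base value).
for row, phis in zip(data, shap_rows):
    assert abs(base + sum(phis) - predict(row)) < 1e-9

# Global interpretability: rank features by mean |SHAP value| over all rows.
importance = {f: mean(abs(r[i]) for r in shap_rows) for i, f in enumerate(FEATURES)}
for name, score in sorted(importance.items(), key=lambda kv: -kv[1]):
    print(f"{name}: {score:.3f}")
```

In this toy data, the high volatile acidity in the first row receives a negative SHAP value, which is the kind of negative relationship a SHAP value plot displays.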

Global interpretability: a SHAP value plot can show positive or negative relationships of the predictors with the target variable. Figure 1 plots every observation in the training data as a dot.

This SHAP value plot presents to us:

Feature importance: Variables are ranked in descending order.

Impact: The horizontal location shows whether the effect of that value is associated with a higher or lower prediction.

Original value: Color shows whether that variable is high (in red) or low (in blue) for that observation.

Correlation: A high level of “alcohol” content has a high and positive impact on the quality rating. The “high” comes from the red color, and the “positive” impact is shown on the X-axis. Similarly, we can say that “volatile acidity” is negatively correlated with the target variable.

Global interpretability: how about showing the marginal effect that one or two features have on the predicted outcome? J. H. Friedman (2001), an instrumental contributor to machine learning techniques, called such a plot a partial dependence plot. It tells whether the relationship between the target and a feature is linear, monotonic, or more complex. Figure II shows an approximately linear, positive trend between “alcohol” and the target variable, and that “alcohol” interacts frequently with “sulphates”.
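
Friedman's partial dependence can be computed from any fitted model: for each grid value v of a feature, overwrite that feature with v in every row, predict, and average the predictions. A minimal sketch follows; the model function and data are hypothetical stand-ins, not the wine-quality model behind Figure II.

```python
from statistics import mean


def model(row):
    """Hypothetical fitted model: (alcohol, sulphates) -> quality score.
    The alcohol * sulphates term makes the effect of alcohol depend on sulphates."""
    alcohol, sulphates = row
    return 1.5 + 0.35 * alcohol + 0.8 * sulphates + 0.1 * alcohol * sulphates


def partial_dependence(predict, data, feature_idx, grid):
    """Average prediction when feature `feature_idx` is forced to each grid value,
    with all other features left at their observed values."""
    curve = []
    for v in grid:
        modified = [row[:feature_idx] + [v] + row[feature_idx + 1:] for row in data]
        curve.append(mean(predict(r) for r in modified))
    return curve


data = [[9.5, 0.55], [12.8, 0.85], [10.2, 0.65], [11.0, 0.75]]
grid = [9.0, 10.0, 11.0, 12.0, 13.0]
pd_alcohol = partial_dependence(model, data, feature_idx=0, grid=grid)
for v, p in zip(grid, pd_alcohol):
    print(f"alcohol={v:>4}: average predicted quality {p:.3f}")
```

The averaged curve is a straight line here because this toy model is linear in alcohol once sulphates are averaged out; a non-linear model would produce a bent or non-monotonic curve.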

Local interpretability, the ability to explain each prediction, is a very important promise of explainable AI. We need to explain the reasons for a prediction. This is delivered by a force plot: each prediction gets its own force plot that explains the reasons behind it.

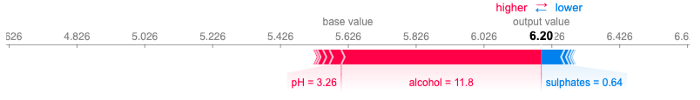

The force plot in Figure III shows that the prediction settled at 6.20. This is because the red features (Alcohol and pH) push the prediction to the right (higher), while the blue features (Sulphates) push it to the left (lower).

If you want to implement the code and understand more details, please read “Explain Your Model with the SHAP Values” and “Explain Any Models with the SHAP Values — Use the KernelExplainer”, in which I walk you through how to create these explainable results. SHAP builds on ML algorithms. If you want to go deeper into machine learning algorithms, you can check my post “My Lecture Notes on Random Forest, Gradient Boosting, Regularization, and H2O.ai”.

(B) Explainable AI with LIME

To make AI explainable, two types of confidence are required: confidence in our model and confidence in our predictions. In the seminal work “‘Why Should I Trust You?’: Explaining the Predictions of Any Classifier” (KDD 2016), the authors explain that we need to build two types of trust.

Trusting the overall model: the user gains enough trust that the model will behave in reasonable ways when deployed. Although accuracy metrics (such as AUC, the area under the ROC curve) are computed on multiple validation datasets during the modeling stage to mimic real-world data, real-world data often differ significantly from them. Besides using accuracy metrics, we therefore also need to examine explanations of individual predictions.
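
AUC, the accuracy metric mentioned above, has a simple probabilistic reading: it is the probability that a randomly chosen positive case receives a higher score than a randomly chosen negative case, with ties counting one half. A minimal sketch on hypothetical validation labels and scores:

```python
from itertools import product


def auc(labels, scores):
    """AUC as the fraction of (positive, negative) pairs ranked correctly; ties count 0.5."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative case")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p, n in product(pos, neg))
    return wins / (len(pos) * len(neg))


# Hypothetical labels and model scores on a validation set.
labels = [1, 1, 0, 1, 0, 0]
scores = [0.9, 0.7, 0.6, 0.4, 0.3, 0.1]
print(f"AUC = {auc(labels, scores):.3f}")
```

A single summary number like this says nothing about why any one case was scored as it was, which is why the individual explanations are still needed.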

Trusting an individual prediction: the user trusts an individual prediction enough to act upon it. No user wants to accept a model prediction on blind faith, especially when the consequences can be catastrophic.

They proposed a novel technique called Local Interpretable Model-Agnostic Explanations (LIME) that can explain the predictions of any classifier in “an interpretable and faithful manner, by learning an interpretable model locally around the prediction”. Their approach is to first gain users' trust in individual predictions and then in the model as a whole.
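
The core recipe in that description (perturb the instance, query the black-box model, weight the samples by proximity, and fit a simple model locally) can be sketched in plain Python. This is a simplified illustration, not the `lime` package: the black-box function, the Gaussian perturbation scale, and the exponential kernel width are all assumptions, and the real LIME additionally selects a sparse subset of features.

```python
import math
import random


def black_box(x):
    """Hypothetical non-linear model standing in for any classifier or regressor."""
    return math.sin(x[0]) + x[1] ** 2


def solve(a, b):
    """Solve the small linear system a @ w = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    w = [0.0] * n
    for r in range(n - 1, -1, -1):
        w[r] = (m[r][n] - sum(m[r][c] * w[c] for c in range(r + 1, n))) / m[r][r]
    return w


def lime_explain(predict, x, n_samples=2000, scale=0.1, kernel_width=0.25, seed=0):
    """Fit a proximity-weighted linear surrogate around x; returns [intercept, coef_1, ...]."""
    rng = random.Random(seed)
    d = len(x)
    ztwz = [[0.0] * (d + 1) for _ in range(d + 1)]  # accumulates Z^T W Z
    ztwy = [0.0] * (d + 1)                          # accumulates Z^T W y
    for _ in range(n_samples):
        z = [xi + rng.gauss(0.0, scale) for xi in x]   # perturbed neighbour of x
        dist2 = sum((zi - xi) ** 2 for zi, xi in zip(z, x))
        weight = math.exp(-dist2 / kernel_width ** 2)  # closer samples count more
        feats = [1.0] + z
        y = predict(z)
        for i in range(d + 1):
            ztwy[i] += weight * feats[i] * y
            for j in range(d + 1):
                ztwz[i][j] += weight * feats[i] * feats[j]
    return solve(ztwz, ztwy)  # weighted least-squares coefficients


x0 = [0.5, 1.0]
intercept, *coefs = lime_explain(black_box, x0)
print("local coefficients:", [round(c, 2) for c in coefs])
```

The local coefficients approximate the slope of the black box near `x0` (about cos(0.5) and 2 here), even though the global model is non-linear; that local view is what LIME reports for one prediction.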

This reminds me of the time a friend of mine walked me through his new house. He excitedly showed me the exterior of the house, each room, and every device in the closets. He saw great value in the house and had great confidence in it. We data science enthusiasts hope our AI systems can earn the same kind of trust.

A model should be easily interpretable. We often have the impression that a linear model is more interpretable than an ML model. Is that true? Consider a linear model with forty variables. Is it easy to explain? Not really.

Although this model has forty variables, for an individual prediction there may be only a few variables influencing its predicted value. The interpretation should make sense from an individual prediction’s view. The authors of LIME call this local fidelity. Features that are globally important may not be important in the local context, and vice versa. Because of this, it could be the case that only a handful of variables directly relate to a local (individual) prediction, even if a model has hundreds of variables globally.

Figure IV presents how LIME explains an individual prediction. The prediction of 5.770 results from the combined positive and negative driving forces.

This data point has sulphates > 0.73, the strongest influencer, which drives the prediction to the right (higher). The next influencer is total sulfur dioxide, which pushes the prediction to the left (lower). We can explain each influencer in the same way.
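
Conditions such as “sulphates > 0.73” come from discretizing continuous features: by default, the `lime` package's tabular explainer bins continuous features at their training-data quartiles, so each explanation term is phrased as an interval. A minimal sketch of that idea, assuming hypothetical sulphate values (so the cut points will not match 0.73) and using Python's `statistics.quantiles`, whose interpolation may differ slightly from lime's:

```python
from statistics import quantiles


def quartile_condition(name, train_values, value):
    """Describe `value` by the quartile bin it falls into, in a LIME-like wording.
    Cut points are the training-data quartiles from statistics.quantiles(n=4)."""
    q1, q2, q3 = quantiles(train_values, n=4)
    if value <= q1:
        return f"{name} <= {q1:.2f}"
    if value <= q2:
        return f"{q1:.2f} < {name} <= {q2:.2f}"
    if value <= q3:
        return f"{q2:.2f} < {name} <= {q3:.2f}"
    return f"{name} > {q3:.2f}"


# Hypothetical training values for the sulphates feature.
train_sulphates = [0.45, 0.50, 0.55, 0.58, 0.62, 0.65, 0.70, 0.78, 0.85, 0.95]
print(quartile_condition("sulphates", train_sulphates, 0.90))
```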

How is LIME different from SHAP? The Shapley value of a feature is the average of its marginal contributions across all permutations of the features. Because Shapley values consider all possible permutations, SHAP is a unified approach that provides global and local consistency and interpretability. Its cost, however, is time: computing exact values requires evaluating all permutations. In contrast, LIME (Local Interpretable Model-agnostic Explanations) builds sparse linear models around an individual prediction in its local vicinity. So LIME is effectively one member of the family of additive feature attribution methods that SHAP unifies, as documented in Lundberg and Lee (2016).
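
The permutation definition can be computed exactly for a handful of features, which also shows why the cost explodes: d features mean d! orderings. In this sketch, a “missing” feature is filled in from a single baseline row, which is one of several possible conventions (an assumption here; the shap library averages over background data). The model and inputs are hypothetical.

```python
from itertools import permutations
from math import factorial


def model(x):
    """Hypothetical model with an interaction between features 0 and 1."""
    return 2.0 * x[0] + 1.0 * x[1] + 3.0 * x[0] * x[1] - 0.5 * x[2]


def coalition_value(predict, x, baseline, coalition):
    """Prediction when features in `coalition` take x's values and the rest take baseline's."""
    mixed = [x[i] if i in coalition else baseline[i] for i in range(len(x))]
    return predict(mixed)


def shapley_values(predict, x, baseline):
    """Average marginal contribution of each feature over all d! feature orderings."""
    d = len(x)
    phi = [0.0] * d
    for order in permutations(range(d)):
        included = set()
        before = coalition_value(predict, x, baseline, included)
        for i in order:
            included.add(i)
            after = coalition_value(predict, x, baseline, included)
            phi[i] += after - before  # marginal contribution of feature i in this ordering
            before = after
    return [p / factorial(d) for p in phi]


x = [1.0, 2.0, 4.0]
baseline = [0.0, 0.0, 0.0]
phi = shapley_values(model, x, baseline)
print("Shapley values:", phi)
print("sum:", sum(phi), "f(x) - f(baseline):", model(x) - model(baseline))
```

For this input the result is [5.0, 5.0, -2.0]: the interaction term's contribution of 6 is split evenly between the two interacting features, and the values sum to f(x) - f(baseline) = 8.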

If you want to run LIME yourself with a step-by-step tutorial, see the post “Explain Your Model with LIME”.

(C) Explainable AI with Microsoft’s InterpretML

The InterpretML module, developed by a team at Microsoft, offers prediction accuracy and model interpretability in an integrated API. If you use scikit-learn as your main modeling tool, you will find that InterpretML offers a unified, scikit-learn-style API. It leverages many libraries such as Plotly, LIME, SHAP, and SALib, so it is already compatible with them. Its Explainable Boosting Machine (EBM) is a very accessible algorithm based on Generalized Additive Models (GAMs). The terms in a GAM are added together like those of a linear model, but each term can be a non-linear function of its feature. You can find more tutorials on GAMs in “Explain Your Model with Microsoft’s InterpretML” and “Business Forecasting with Facebook’s Prophet”.
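
The additive structure of a GAM, and the round-robin idea behind EBM (boost one feature at a time with a small learning rate so that each feature gets its own shape function), can be sketched in plain Python. This is a simplified illustration under stated assumptions, not InterpretML's implementation: each shape function here is a piecewise-constant lookup over fixed, hypothetical bins, updated with binned residual means instead of small trees, and the data and learning rate are made up.

```python
import math
import random


def bin_index(value, edges):
    """Index of the bin containing `value`, given sorted interior bin edges."""
    return sum(value > e for e in edges)


def predict(row, intercept, shapes, edges):
    """Additive prediction: intercept plus one shape-function value per feature."""
    return intercept + sum(shapes[j][bin_index(row[j], edges[j])] for j in range(len(row)))


def fit_additive(X, y, edges, rounds=100, learning_rate=0.2):
    """Round-robin boosting of piecewise-constant shape functions, one feature at a time."""
    n, d = len(X), len(X[0])
    bins = [[bin_index(row[j], edges[j]) for j in range(d)] for row in X]  # precomputed
    intercept = sum(y) / n
    shapes = [[0.0] * (len(edges[j]) + 1) for j in range(d)]
    pred = [intercept] * n
    for _ in range(rounds):
        for j in range(d):
            k = len(shapes[j])
            sums, counts = [0.0] * k, [0] * k
            for i in range(n):  # residuals of the current model, grouped by feature-j bin
                sums[bins[i][j]] += y[i] - pred[i]
                counts[bins[i][j]] += 1
            # Small step toward each bin's mean residual (shrinkage, as in boosting).
            steps = [learning_rate * s / c if c else 0.0 for s, c in zip(sums, counts)]
            for b in range(k):
                shapes[j][b] += steps[b]
            for i in range(n):
                pred[i] += steps[bins[i][j]]
    return intercept, shapes


def explain_local(row, intercept, shapes, edges):
    """Per-feature contributions to one prediction, largest magnitude first."""
    contrib = {j: shapes[j][bin_index(row[j], edges[j])] for j in range(len(row))}
    return intercept, sorted(contrib.items(), key=lambda kv: -abs(kv[1]))


# Hypothetical data: y depends non-linearly on x0 and linearly on x1.
rng = random.Random(1)
X = [[rng.uniform(-3, 3), rng.uniform(0, 1)] for _ in range(500)]
y = [math.sin(a) + 2.0 * b + rng.gauss(0, 0.1) for a, b in X]
edges = [[-2.0, -1.0, 0.0, 1.0, 2.0], [0.25, 0.5, 0.75]]

intercept, shapes = fit_additive(X, y, edges)
print("shape function of x0 by bin:", [round(s, 2) for s in shapes[0]])
print("local explanation of row 0:", explain_local(X[0], intercept, shapes, edges))
```

Because the model is a sum of per-feature terms, the global view is just each shape function, and a local explanation is just the list of terms for one row. With the real library, the corresponding entry points are `ExplainableBoostingRegressor` and `ExplainableBoostingClassifier` in `interpret.glassbox`, with `explain_global()` and `explain_local()`.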

Explainable AI also means you can easily explore each variable, as in exploratory data analysis. InterpretML offers the interface in Figure V to show the histogram of each variable.

Figure VI presents the Pearson correlation of each variable with the target variable.
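
The Pearson correlation is the covariance of a feature with the target divided by the product of their standard deviations, so it captures only linear association. A minimal sketch with hypothetical values (Python 3.10+ also ships this as `statistics.correlation`):

```python
import math


def pearson(xs, ys):
    """Pearson correlation: cov(x, y) / (std(x) * std(y))."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)


# Hypothetical feature and target values.
alcohol = [9.4, 9.8, 10.5, 11.2, 12.0, 12.8]
quality = [5, 5, 6, 6, 7, 7]
print(f"r(alcohol, quality) = {pearson(alcohol, quality):.3f}")
```

A correlation near zero does not rule out a strong non-linear relationship, which is one reason the model-based views of each variable are still useful.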

Global Interpretability: Once a model is built, you can show the overall variable importance, as in Figure VII. You can also show the Partial Dependence Plot (PDP) for each variable, as in Figure VIII.

Local interpretability: The interpretation of each prediction is important in Explainable AI. InterpretML lets you explain each prediction, as in Figure IX, which shows that the predicted value of 5.60 is driven by the listed influencers, ranked in descending order of impact.

All of the above can be put together in an elegant dashboard. I think this is the best part, and it is irresistible!

If you want to implement the code and understand the details, read the article “Explain Your Model with Microsoft’s InterpretML”, which provides a gentle mathematical background and then shows you how to conduct the modeling.

(D) Explainable AI Can Include Prediction Intervals

I believe prediction intervals are an important piece of information in the development of Explainable AI. Most applied machine learning techniques deliver only the mean prediction, and prediction intervals were not incorporated into machine learning techniques until recent years. Important advances have been made in Quantile Gradient Boosting and Quantile Random Forests. Why do we need prediction intervals besides the mean predictions? Prediction intervals provide the range of the predicted values. In financial risk management, prediction intervals for the high range can help risk managers mitigate risks. In science, a predicted battery life of between 100 and 110 hours can inform users when to take action. Figure XI shows that the intervals become wider for large values. It is therefore helpful to model a range of percentiles of the target variable.
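
For gradient boosting, targeting a percentile instead of the mean is done by swapping squared error for the quantile (pinball) loss, which penalizes under-prediction with weight q and over-prediction with weight 1 - q. (Quantile random forests work differently, by reading quantiles off the training targets in the leaves.) A minimal sketch showing that, among constant predictions, the pinball loss is minimized at the q-th quantile of the data; the battery lifetimes are hypothetical.

```python
def pinball_loss(y_true, y_pred, q):
    """Average quantile (pinball) loss for quantile level q in (0, 1)."""
    total = 0.0
    for y, p in zip(y_true, y_pred):
        diff = y - p
        # Under-prediction (diff >= 0) costs q per unit; over-prediction costs (1 - q).
        total += q * diff if diff >= 0 else (q - 1) * diff
    return total / len(y_true)


def best_constant(values, q):
    """Constant prediction (searched over the observed values) with the lowest pinball loss."""
    return min(values, key=lambda c: pinball_loss(values, [c] * len(values), q))


# Hypothetical observed battery lifetimes (hours).
lifetimes = [96, 99, 100, 101, 102, 103, 104, 105, 107, 110, 112]
for q in (0.1, 0.5, 0.9):
    print(f"q={q}: best constant prediction = {best_constant(lifetimes, q)}")
```

Here the q = 0.1 and q = 0.9 minimizers are 99 and 110 hours, which bracket the median of 103. Training one model per quantile level in the same way gives the lower and upper ends of a prediction interval; in scikit-learn, `GradientBoostingRegressor(loss="quantile", alpha=q)` is one way to do this.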

In “A Tutorial on Quantile Regression, Quantile Random Forests, and Quantile GBM”, I show that Quantile GBM can build several models for a range of percentiles of the target variable, as in Figure XII. The variable importance charts vary across these models. Quantile GBM therefore provides much more insight into global interpretability than the standard GBM, which targets only the conditional mean.

Figure XIII shows how prediction intervals are achieved.

If you are interested in producing quantile measures for Random Forests or GBM, the article “A Tutorial on Quantile Regression, Quantile Random Forests, and Quantile GBM” gives you more detail.

Model Interpretability Does Not Mean Causality

It is important to point out that model interpretability does not imply causality. To prove causality, you need different techniques. In the “identify causality” series of articles, I demonstrate econometric techniques that identify causality. Those articles cover the following techniques: Regression Discontinuity (see “Identify Causality by Regression Discontinuity”), Difference in Differences (DiD) (see “Identify Causality by Difference in Differences”), Fixed-Effects Models (see “Identify Causality by Fixed-Effects Models”), and Randomized Controlled Trials with Factorial Design (see “Design of Experiments for Your Change Management”).

In a modeling project, you explore the data, train the models, compare model performance, and then examine the predictions globally and locally; you get all of this in the InterpretML module. It is a one-stop shop and easy to use. However, I want to remind you that no machine can replace the creativity of feature engineering. Check out “A Data Scientist’s Toolkit to Encode Categorical Variables to Numeric”, “Avoid These Deadly Modeling Mistakes that May Cost You a Career”, “Feature Engineering for Healthcare Fraud Detection”, and “Feature Engineering for Credit Card Fraud Detection”. Or you can bookmark “Dataman Learning Paths — Build Your Skills, Drive Your Career” for all articles.

Source: https://medium.com/analytics-vidhya/an-explanation-for-explainable-ai-xai-d56ae3dacd13
