Multi-Class Text Classification with Scikit-Learn

Scikit-Learn is an easy-to-use Python library for applying machine learning algorithms. It also includes tools for statistical modeling tasks such as regression, classification, and clustering.

Let's compare LogisticRegression, RandomForest, Naive Bayes, and a linear Support Vector Machine for multi-class text classification.

Our task is to assign a product category to a given review (the classification report at the end lists five: Dresses, Bottoms, Tops, Jackets, and Intimate). This is a multi-class text classification problem, and we want to know which algorithm gives the highest accuracy.

First, we load the libraries.

import pandas as pd
from sklearn import metrics
from sklearn.ensemble import RandomForestClassifier
from sklearn.feature_extraction.text import CountVectorizer, TfidfTransformer, TfidfVectorizer
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score, train_test_split
from sklearn.naive_bayes import MultinomialNB
from sklearn.svm import LinearSVC

Data

You can find the dataset on Kaggle: Women's Clothing E-Commerce Reviews. It contains customer reviews of several products.

data = pd.read_csv('Women Clothing E-Commerce Review.csv')

Let's look at the dataset.

data.info

<bound method DataFrame.info of
      id  Clothing_ID  Age  Title  \
0      2  1077         60   Some major design flaws
1      3  1049         50   My favorite buy!
2     16  1065         34   You need to be at least average height, or taller
3     17   853         41   Looks great with white pants
4     18  1120         32   Super cute and cozy
..   ...   ...        ...   ...
321  416   872         35   I love this shirt
322  417  1083         35   Flimsy
323  418   831         40   Super comfy
324  419  1080         32   Pretty pattern, weird fit
325  420  1077         68   Easy and stylish

     ReviewText                                          Rating  Recommended  \
0    I had such high hopes for this dress and reall...   3       0
1    I love, love, love this jumpsuit. it's fun, fl...   5       1
2    Material and color is nice. the leg opening i...    3       1
3    Took a chance on this blouse and so glad i did...   5       1
4    A flattering, super cozy coat. will work well...    5       1
..   ...                                                 ...     ...
321  I really love this lace-up shirt, but i only l...   5       1
322  I love byron lars dresses, and this design is ...   2       0
323  Love this blouse, it;s super comfy, looks awes...   5       1
324  I fell in love with this dress when i saw it o...   3       1
325  I was hesitant based on the reviews, but i'm g...   5       1

     Positive Feedback Count  Division Name   product  Class_Name
0    0                        General         Dresses  Dresses
1    0                        General Petite  Bottoms  Pants
2    2                        General         Bottoms  Pants
3    0                        General         Tops     Blouses
4    0                        General         Jackets  Outerwear
..   ...                      ...             ...      ...
321  14                       General         Tops     Knits
322  2                        General         Dresses  Dresses
323  0                        General         Tops     Blouses
324  1                        General         Dresses  Dresses
325  2                        General         Dresses  Dresses

[326 rows x 11 columns]>

Note that data.info is referenced here without parentheses, so the output is the repr of the bound method, which embeds a preview of the DataFrame. Calling data.info() instead prints the per-column dtype and non-null summary.

Before training and prediction, it is important to check for missing values.

data.isna().sum()

id                         0
Clothing_ID                0
Age                        0
Title                      0
ReviewText                 0
Rating                     0
Recommended                0
Positive Feedback Count    0
Division Name              0
product                    0
Class_Name                 0
dtype: int64

So it seems the data has no missing values.
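For readers who want to see what the check reports when values are in fact missing, here is a minimal sketch on a made-up three-row frame. The toy values and the choice to drop incomplete rows with dropna are assumptions for illustration, not part of the original workflow.

```python
import pandas as pd

# Toy frame standing in for the review data (values are made up).
toy = pd.DataFrame({
    "ReviewText": ["Love it", None, "Too small"],
    "product": ["Tops", "Dresses", None],
})

# Count missing entries per column, as in the article.
missing_per_column = toy.isna().sum()

# One possible remedy: drop rows that lack either the text or the label.
cleaned = toy.dropna(subset=["ReviewText", "product"])

print(missing_per_column)
print(len(cleaned), "complete rows remain")
```

Dropping rows is only one option; for a text classifier, a review without text or without a label cannot be used for training anyway.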

col = ['ReviewText', 'product']
data = data[col].copy()  # copy so that adding a column later does not write to a view of the original frame

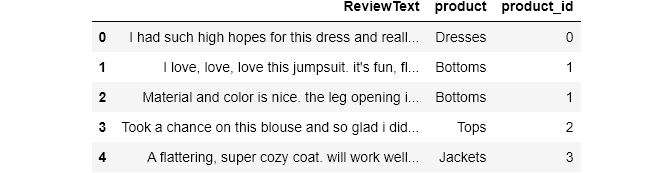

In this tutorial we need only two columns: "ReviewText" and "product". We will also assign a numeric ID to each product category.

data['product_id'] = data['product'].factorize()[0]  # integer code per category, in order of first appearance
product_id_data = data[['product', 'product_id']].drop_duplicates().sort_values('product_id')
product_to_id = dict(product_id_data.values)  # category name -> id
id_to_product = dict(product_id_data[['product_id', 'product']].values)  # id -> category name
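To make the encoding step concrete, the sketch below runs the same factorize logic on a made-up five-row frame. The toy category values are assumptions chosen for illustration.

```python
import pandas as pd

# Toy labels (made up) with repeated categories.
toy = pd.DataFrame({"product": ["Dresses", "Bottoms", "Bottoms", "Tops", "Dresses"]})

# factorize returns integer codes plus the unique values in order of first appearance.
codes, uniques = toy["product"].factorize()
toy["product_id"] = codes

# Build lookup tables in both directions, as in the article.
pairs = toy[["product", "product_id"]].drop_duplicates().sort_values("product_id")
product_to_id = dict(pairs.values)
id_to_product = dict(pairs[["product_id", "product"]].values)

print(list(codes))
print(product_to_id)
print(id_to_product)
```

Because codes follow the order of first appearance, the mapping depends on row order; keeping both dictionaries around makes it easy to turn predicted IDs back into names.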

Let's look at the new data.

data.head()

We also need to vectorize the text and remove stop words.

tfidf = TfidfVectorizer(sublinear_tf=True, min_df=5, norm='l2', encoding='latin-1', ngram_range=(1, 2), stop_words='english')
features = tfidf.fit_transform(data.ReviewText).toarray()  # dense matrix: one row per review, one column per unigram/bigram
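The effect of these vectorizer settings is easier to see on a tiny corpus. The sketch below is an illustration under assumptions: the three sentences are made up, and min_df is lowered to 1 because a toy corpus has no term that appears in five documents.

```python
import numpy as np
from sklearn.feature_extraction.text import TfidfVectorizer

# Made-up reviews for illustration only.
docs = [
    "love this dress, the dress fits nicely",
    "these pants fit nicely",
    "love the soft fabric of this top",
]

# Same options as the article, except min_df=1 for the tiny corpus.
tfidf = TfidfVectorizer(sublinear_tf=True, min_df=1, norm="l2",
                        ngram_range=(1, 2), stop_words="english")
features = tfidf.fit_transform(docs)  # sparse matrix, one row per document

# Stop words such as "the" are removed before bigrams are formed,
# so "love dress" becomes a feature while "the" does not.
vocabulary = tfidf.vocabulary_
row_norms = np.linalg.norm(features.toarray(), axis=1)

print(sorted(vocabulary))
print(row_norms)
```

With norm='l2' every non-empty row has unit length, which keeps long and short reviews on a comparable scale.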

Set the labels.

labels = data.product_id

Split the data.

X_train, X_test, y_train, y_test = train_test_split(data['ReviewText'], data['product'], random_state=0)
count_vect = CountVectorizer()
X_train_counts = count_vect.fit_transform(X_train)  # raw term counts, fitted on the training split only
tfidf_transformer = TfidfTransformer()
X_train_tfidf = tfidf_transformer.fit_transform(X_train_counts)  # counts re-weighted by TF-IDF
clf = MultinomialNB().fit(X_train_tfidf, y_train)  # Naive Bayes baseline trained on the raw review text

Next, we evaluate the accuracy of the machine learning algorithms.

models = [
    RandomForestClassifier(n_estimators=200, max_depth=3, random_state=0),
    LinearSVC(),  # Linear Support Vector Classification
    MultinomialNB(),  # Naive Bayes classifier for multinomial models
    LogisticRegression(random_state=0),
]
CV = 10  # number of cross-validation folds
entries = []
for model in models:
    model_name = model.__class__.__name__
    # requires: from sklearn.model_selection import cross_val_score
    accuracies = cross_val_score(model, features, labels, scoring='accuracy', cv=CV)
    for fold_idx, accuracy in enumerate(accuracies):
        entries.append((model_name, fold_idx, accuracy))
cv_df = pd.DataFrame(entries, columns=['model_name', 'fold_idx', 'accuracy'])

Let's look at the mean accuracy of each model.

cv_df.groupby('model_name').accuracy.mean()

model_name
LinearSVC                 0.760985
LogisticRegression        0.632292
MultinomialNB             0.558428
RandomForestClassifier    0.546117
Name: accuracy, dtype: float64

So LinearSVC obtains the best mean accuracy of the four algorithms. Let's continue with LinearSVC.
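One caveat about the comparison above: the TF-IDF features were fitted on the full dataset before cross-validation, so each validation fold influenced the vocabulary and IDF weights. A possible variation, sketched here on made-up data, is to wrap the vectorizer and classifier in a scikit-learn Pipeline so the vectorizer is refitted inside every fold. The toy texts, the labels, and cv=3 are assumptions for illustration; this is not the procedure the article used.

```python
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.svm import LinearSVC

# Made-up reviews: six per class so that 3-fold stratified CV has data in every fold.
texts = [
    "beautiful dress for summer", "this dress fits perfectly", "lovely floral dress",
    "dress runs small", "elegant evening dress", "dress fabric feels cheap",
    "soft cotton top", "this top is too short", "great top for work",
    "top shrank after washing", "cute casual top", "top has nice sleeves",
]
labels = ["Dresses"] * 6 + ["Tops"] * 6

# The vectorizer is fitted on the training part of each fold only.
pipeline = make_pipeline(TfidfVectorizer(stop_words="english"), LinearSVC())
scores = cross_val_score(pipeline, texts, labels, scoring="accuracy", cv=3)

print(scores)
print(scores.mean())
```

On a dataset as small as the one in this tutorial the difference may be minor, but the pipeline form gives a cleaner estimate of how the model behaves on unseen reviews.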

model = LinearSVC()
X_train, X_test, y_train, y_test, indices_train, indices_test = train_test_split(features, labels, data.index, test_size=0.33, random_state=0)
model.fit(X_train, y_train)
y_pred = model.predict(X_test)
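Once a model like this is fitted, classifying a new review means transforming the text with the already fitted vectorizer and mapping the predicted ID back to a category name. The sketch below shows that flow end to end on made-up data; the toy reviews and the sample sentence are assumptions, and the vectorizer uses default settings apart from stop-word removal to keep the example small.

```python
import pandas as pd
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.svm import LinearSVC

# Made-up training data for illustration.
toy = pd.DataFrame({
    "ReviewText": [
        "this dress is stunning", "the dress fits well", "lovely summer dress",
        "these pants are too long", "comfortable pants for work", "pants fit great",
    ],
    "product": ["Dresses", "Dresses", "Dresses", "Bottoms", "Bottoms", "Bottoms"],
})
toy["product_id"] = toy["product"].factorize()[0]
id_to_product = dict(toy[["product_id", "product"]].drop_duplicates().values)

tfidf = TfidfVectorizer(stop_words="english")
X = tfidf.fit_transform(toy["ReviewText"])
model = LinearSVC().fit(X, toy["product_id"])

# New text must go through transform (not fit_transform) so it is
# mapped onto the vocabulary learned from the training data.
new_reviews = ["I wore this dress to a wedding"]
predicted_ids = model.predict(tfidf.transform(new_reviews))
predicted_names = [id_to_product[i] for i in predicted_ids]

print(predicted_names)
```

Calling fit_transform on new text would rebuild the vocabulary and produce features the model was never trained on, which is why only transform is used at prediction time.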

Finally, we print the classification report, which shows precision, recall, and F1-score for each class.

print(metrics.classification_report(y_test, y_pred, target_names=data['product'].unique()))

              precision    recall  f1-score   support
     Dresses       0.92      0.86      0.89        14
     Bottoms       0.71      0.45      0.56        22
        Tops       0.68      0.93      0.78        56
     Jackets       1.00      0.44      0.62         9
    Intimate       0.00      0.00      0.00         7
    accuracy                           0.72       108
   macro avg       0.66      0.54      0.57       108
weighted avg       0.70      0.72      0.69       108

That is all. I look forward to hearing any feedback or questions.

Translated from: https://medium.com/swlh/multi-class-text-classification-using-scikit-learn-a9bacb751048
