Redis Topologies

Redis, an open-source in-memory data structure store, is a significant piece of modern architecture. It is less often used as a general-purpose data store, but in the caching landscape it is a clear winner.

Lyft recently shared updated numbers (YouTube video) for their Redis workloads in 2020:

- 171 clusters

- ~3,600 instances

- 65 million QPS at peak

It is very impressive how much the Redis ecosystem has evolved while the core technology has remained simple. Big credit to Salvatore Sanfilippo, the creator of Redis.

A minimal core leaves plenty of room for different setups. In this article we review several topologies, each with its own high-availability and data-safety characteristics.

The article is introductory. If you are very experienced with Redis, you won't find much new material. But if you are new, it can help you navigate the variety of setup scenarios so that you can then dig deeper. It also sets the stage for future writing on the topic.

Replicated setup

This is a very simple setup. There is a single master and one or more replicas. Each replica tries to maintain an exact copy of the data stored on the master node.

Redis replication is asynchronous and non-blocking. The master keeps processing incoming commands while replicas sync, so the potential impact on performance is very small.

Benefits:

- Increased data safety. If the master goes down, you still have your data on other nodes.

- Read scalability. You can distribute the read workload across your replicas. Keep in mind that this setup gives no consistency guarantees, which can be perfectly fine for some workloads.

- Higher performance / lower latency on the master. With this setup, it is possible to turn off disk persistence on the master. Do this with great care and read the official documentation first. At minimum, disable automatic restarts of the master, because a master that restarts with an empty dataset will propagate that empty state to its replicas.

While this setup is easy and straightforward, it comes with deficiencies:

- It doesn't provide high availability.

- Clients must be topology-aware to gain read scalability. They need to know where to direct reads and where to direct writes.
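To make "topology-aware" concrete, here is a minimal sketch of the routing decision such a client has to make. The class name `ReplicatedRouter`, the addresses, and the hard-coded set of read commands are assumptions made for illustration; a real driver would rely on its own command metadata and connection handling.

```python
import itertools

# Illustrative subset of read-only commands (an assumption for this sketch);
# a real client would not rely on a hard-coded list.
READ_COMMANDS = {"GET", "MGET", "EXISTS", "TTL", "HGET", "HGETALL", "LRANGE", "SMEMBERS"}


class ReplicatedRouter:
    """Picks a node address for a command in a single-master setup."""

    def __init__(self, master, replicas):
        self.master = master
        # Round-robin over replicas; fall back to the master if there are none.
        self._replica_cycle = itertools.cycle(replicas or [master])

    def node_for(self, command):
        # Reads may go to a replica (possibly stale); writes must go to the master.
        if command.upper() in READ_COMMANDS:
            return next(self._replica_cycle)
        return self.master


router = ReplicatedRouter("10.0.0.1:6379", ["10.0.0.2:6379", "10.0.0.3:6379"])
print(router.node_for("SET"))  # 10.0.0.1:6379
print(router.node_for("GET"))  # 10.0.0.2:6379
```

Because replication is asynchronous, a read routed to a replica can return slightly stale data, which is the consistency trade-off mentioned in the benefits list.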

If you don't have smart clients that know where to route traffic, there is a long-standing setup that uses HAProxy:

    # Default TCP timeouts, used by the frontend ft_redis and the backend bk_redis.
    # "timeout connect" is the max time to wait for a connection attempt to a server to succeed.
    # "timeout server" and "timeout client" are how long each side may stay idle
    # before it is expected to acknowledge or send data.
    defaults REDIS
        mode tcp
        timeout connect 3s
        timeout server 6s
        timeout client 6s

    # Listening socket that accepts client connections and hands them
    # to the backend bk_redis.
    frontend ft_redis
        bind *:6378 name redis
        default_backend bk_redis

    # TCP health check that only passes on a node reporting role:master,
    # so incoming connections are forwarded to the master only.
    backend bk_redis
        option tcp-check
        tcp-check connect
        tcp-check send PING\r\n
        tcp-check expect string +PONG
        tcp-check send info\ replication\r\n
        tcp-check expect string role:master
        tcp-check send QUIT\r\n
        tcp-check expect string +OK
        server redis_6379 localhost:6379 check inter 1s
        server redis_6380 localhost:6380 check inter 1s

It uses a health-check mechanism to ping the Redis nodes so that it can identify the master and direct traffic to it.

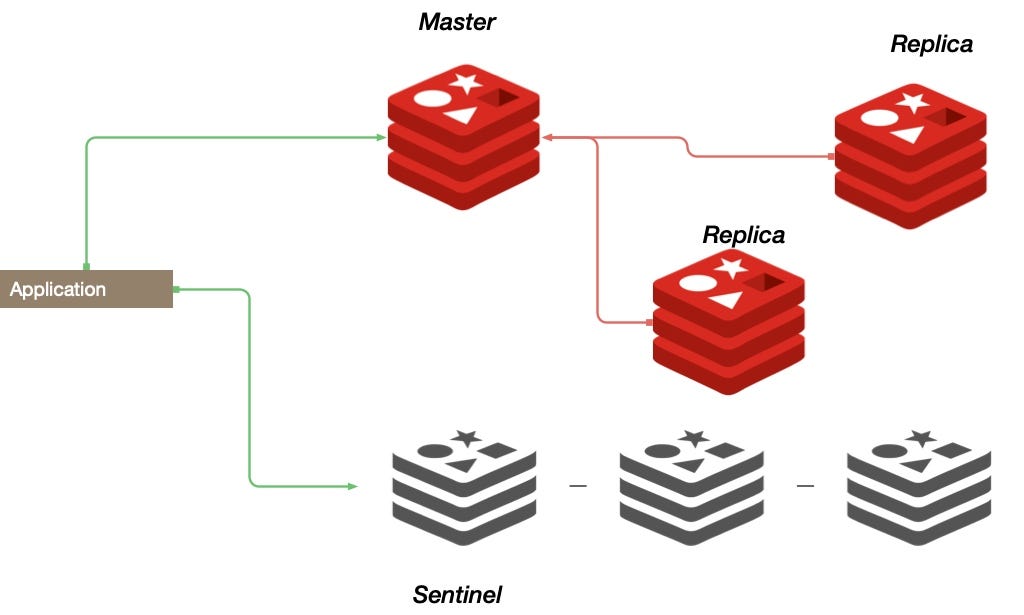
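The health check boils down to reading each node's `INFO replication` reply and looking for `role:master`. The sketch below shows that check on canned reply text rather than a live connection; the helper names `parse_info` and `pick_master` and the sample addresses are assumptions for illustration.

```python
def parse_info(payload: str) -> dict:
    """Parse the text body of a Redis INFO reply into a dict of strings."""
    fields = {}
    for line in payload.splitlines():
        line = line.strip()
        # Skip blank lines and section headers such as "# Replication".
        if not line or line.startswith("#"):
            continue
        key, _, value = line.partition(":")
        fields[key] = value
    return fields


def pick_master(info_by_node: dict):
    """Return the address of the first node reporting role:master, or None."""
    for address, payload in info_by_node.items():
        if parse_info(payload).get("role") == "master":
            return address
    return None


# Canned replies standing in for what each node would send back.
replies = {
    "127.0.0.1:6380": "# Replication\r\nrole:slave\r\nmaster_host:127.0.0.1\r\nmaster_port:6379\r\n",
    "127.0.0.1:6379": "# Replication\r\nrole:master\r\nconnected_slaves:1\r\n",
}
print(pick_master(replies))  # 127.0.0.1:6379
```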

Redis Sentinel

Redis Sentinel builds on replication and provides high availability for Redis without human intervention.

Sentinel constantly monitors the master and its replicas and checks whether they are working as expected. If something goes wrong, it can run an automatic failover and promote a replica to be the new master.

In addition, Sentinel serves as a configuration provider (service discovery) for connecting clients. After a failover, clients get the updated address of the master node.

A setup should include multiple Sentinel nodes so that it remains fault-tolerant if some of them go down.

Sentinel nodes cooperate to agree on the state of the cluster (e.g. what to do when a failure is detected).

Example of a Sentinel configuration (sentinel.conf):

    sentinel monitor mymaster 127.0.0.1 6379 2
    sentinel down-after-milliseconds mymaster 60000
    sentinel failover-timeout mymaster 180000
    sentinel parallel-syncs mymaster 1

This states that the Sentinel node monitors a master group called mymaster with a quorum of 2. The general form of the first line is:

    sentinel monitor <master-group-name> <ip> <port> <quorum>

There is no need to specify replicas; they are identified through auto-discovery.
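The role of the quorum can be sketched in a few lines. This is a simplified model, not Sentinel's actual implementation: the quorum is the number of Sentinels that must agree the master is unreachable, while starting a failover additionally requires a majority of all Sentinels to authorize a leader. The function names are made up for this example.

```python
def is_objectively_down(down_reports: int, quorum: int) -> bool:
    """The master counts as objectively down once `quorum` Sentinels agree."""
    return down_reports >= quorum


def can_start_failover(down_reports: int, quorum: int,
                       reachable_sentinels: int, total_sentinels: int) -> bool:
    """Failover also needs a majority of all Sentinels to authorize a leader."""
    majority = total_sentinels // 2 + 1
    return (is_objectively_down(down_reports, quorum)
            and reachable_sentinels >= majority)


# Three Sentinels with quorum 2: two reachable Sentinels can detect and fail over.
print(can_start_failover(2, 2, reachable_sentinels=2, total_sentinels=3))  # True
# Five Sentinels with quorum 2: two can detect the failure, but they are not a majority.
print(can_start_failover(2, 2, reachable_sentinels=2, total_sentinels=5))  # False
```

This is why an odd number of Sentinels (three or five) on independent hosts is a common choice: a minority partition can notice a problem but cannot promote a new master on its own.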

Benefits:

- High availability. Built on top of Redis replication, this setup allows for automatic failover.

- Service discovery. Sentinels serve as a configuration provider for clients, making them topology-aware.

Deficiencies:

- This setup usually involves many nodes with different roles (e.g. 5 nodes: master, replica, 3 Sentinels). That brings significant complexity, while all writes still go to a single master.

- The client driver carries the burden of implementing routing for a Sentinel setup, and not all drivers support it.

Redis Cluster

This is where things start getting more interesting. Redis Cluster was introduced in 2015 with version 3.0. It is the first out-of-the-box Redis architecture that allows writes to scale.

Highlights:

- Horizontally scalable. Adding more nodes increases capacity.

- Data is sharded automatically.

- Fault-tolerant.

To distribute data, Redis Cluster uses the notion of hash slots. There are 16384 hash slots in total, and every node in the cluster is responsible for a subset of them.
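As a rough illustration of how a key maps to a slot: Redis Cluster takes the CRC16 (XMODEM variant) of the key modulo 16384. The sketch below uses Python's `binascii.crc_hqx`, which computes the same CRC when started from 0. It also handles hash tags, a Cluster feature not covered in this article: if the key contains a non-empty `{...}` section, only that section is hashed, which lets related keys land in the same slot. The function name `key_slot` is chosen for this example.

```python
import binascii

HASH_SLOTS = 16384


def key_slot(key: str) -> int:
    """Compute the Redis Cluster hash slot for a key."""
    data = key.encode("utf-8")
    start = data.find(b"{")
    if start != -1:
        end = data.find(b"}", start + 1)
        # Only a non-empty tag between the first '{' and the next '}' counts.
        if end != -1 and end > start + 1:
            data = data[start + 1:end]
    # crc_hqx with an initial value of 0 is CRC16/XMODEM.
    return binascii.crc_hqx(data, 0) % HASH_SLOTS


print(key_slot("foo"))  # 12182
# Keys sharing a hash tag map to the same slot, so multi-key operations can work.
print(key_slot("{user1000}.following") == key_slot("{user1000}.followers"))  # True
```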

So when a request is sent for a given key, the client computes the hash slot for that key and sends the request to the appropriate server. This means a Redis Cluster client implementation must be cluster-aware. It has to:

- update its hash slot mapping periodically

- handle redirects itself (when the cluster topology changes, the client's view of the slots can be temporarily out of sync)
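Redirect handling can be sketched as follows. When a client asks the wrong node, the node replies with an error such as `MOVED 3999 127.0.0.1:6381` (the slot has a new permanent owner) or `ASK 3999 127.0.0.1:6381` (retry this one request elsewhere while the slot is migrating). The `SlotCache` class below is a hypothetical, heavily simplified stand-in for what a real driver does.

```python
class SlotCache:
    """Client-side view of which node owns which hash slot."""

    def __init__(self):
        self._slot_to_node = {}

    def node_for_slot(self, slot):
        return self._slot_to_node.get(slot)

    def handle_error(self, message: str):
        """Process a redirect error; return the (host, port) to retry on, or None."""
        parts = message.split()
        if len(parts) != 3 or parts[0] not in ("MOVED", "ASK"):
            return None  # Not a redirect; the caller handles it as a normal error.
        kind, slot, address = parts
        host, _, port = address.rpartition(":")
        node = (host, int(port))
        if kind == "MOVED":
            # MOVED is permanent: remember the new owner for future requests.
            self._slot_to_node[int(slot)] = node
        # ASK is a one-off redirect during migration: retry there, but do not cache.
        return node


cache = SlotCache()
print(cache.handle_error("MOVED 3999 127.0.0.1:6381"))  # ('127.0.0.1', 6381)
print(cache.node_for_slot(3999))                        # ('127.0.0.1', 6381)
```

A real client would also send `ASKING` before retrying after an `ASK` redirect and would usually refresh its whole slot map after a `MOVED`, rather than patching a single slot.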

Benefits:

- simpler operations (fewer moving parts)

- high availability out of the box

- horizontal scalability

Deficiencies:

- clients need to be more complex and must sync the cluster state themselves

- caveats around multi-key operations (since the keys involved can live on different nodes)

There is a good talk (YouTube video) about moving to Redis Cluster by Ryan Luecke from Box.

Redis [standalone] with Envoy

(or Twemproxy in the old days)

(Envoy Proxy doc)

This is the kind of setup we are running at Whisk (https://whisk.com). We like it very much for its operational simplicity and good fault-tolerance characteristics.

Note that this setup is not suitable if you plan to use Redis as a persistent data store. It is a perfect fit for a cache, though, and can be good enough for features like rate limiting.

I recommend watching the video (YouTube link) from Lyft, as it explains the concept very well.

The idea and setup are very simple:

- you have a bunch of standalone Redis nodes that know nothing about each other

- you have a set of Envoy proxies in front of them that know how to distribute traffic (which key belongs to which Redis node)

This gives you great operational simplicity. Your client can route traffic to any of the Envoy nodes and it will be handled. The failure of any Redis or Envoy node is easy to tolerate in this setup. In the "Redis as a cache" scenario, the worst case is that you need to recompute the value for a key.

With our Envoy proxies running in Kubernetes close to the app services, we have a very reliable and fast setup, and we have barely experienced any issues.

Envoy does hash-based partitioning. During initialization, and whenever the topology changes, Envoy computes an integer value for each Redis node and associates it with that node. When a request comes in, Envoy hashes its key to an integer and picks the node whose value is the closest match.
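The general idea of this kind of partitioning can be sketched with a small consistent-hash ring. This is not Envoy's implementation, only an illustration of the technique: the `HashRing` class, the MD5-based hash, and the number of points per node are all assumptions made for the example.

```python
import bisect
import hashlib


def _hash(value: str) -> int:
    """Map a string to a 64-bit integer (MD5 is used only for spreading keys)."""
    return int.from_bytes(hashlib.md5(value.encode("utf-8")).digest()[:8], "big")


class HashRing:
    """Consistent-hash ring: each node owns several integer points on the ring."""

    def __init__(self, nodes, points_per_node=100):
        self._ring = sorted(
            (_hash(f"{node}#{i}"), node)
            for node in nodes
            for i in range(points_per_node)
        )
        self._points = [point for point, _ in self._ring]

    def node_for(self, key: str) -> str:
        # First point at or after the key's hash, wrapping around at the end.
        index = bisect.bisect_left(self._points, _hash(key)) % len(self._ring)
        return self._ring[index][1]


nodes = ["redis-0:6379", "redis-1:6379", "redis-2:6379"]
ring = HashRing(nodes)
print(ring.node_for("user:42"))  # one of the three nodes, stable across runs
```

The useful property for a cache is that removing a node only remaps the keys that lived on it; keys on the surviving nodes stay where they were, so losing a node costs only that node's share of cached values.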

Benefits:

- Operational simplicity. Very easy to set up and maintain, with fewer moving parts.

- Fault tolerance. You can lose or replace any node with minimal impact.

- Horizontal scalability. Almost no overhead when scaling out and adding more servers.

- Simple clients. Only load balancing or a good, reliable connection to Envoy is necessary.

- Metrics are exposed out of the box.

Limitations:

- Only fits certain use cases (e.g. cache) where potentially losing a small portion of the data is acceptable.

Summary

We outlined some of the basic Redis topologies. Each has its own characteristics, benefits, and shortcomings, and which one to choose depends on your scenario.

If your scenario is a "best-effort cache", I'd highly recommend considering the setup with Envoy. Otherwise, if you need safety guarantees for your data, Redis Cluster could be a good choice: it has matured and has successful case studies.

In future articles we might look deeper into the Envoy setup we are running at Whisk and compare the performance of different JVM drivers.

Resources

Redis Replication guide: https://redis.io/topics/replication

Redis Sentinel guide: https://redis.io/topics/sentinel

Redis Cluster by Ryan Luecke: https://www.youtube.com/watch?v=NymIgA7Wa78

Redis Cluster tutorial: https://redis.io/topics/cluster-tutorial

Redis + Envoy by Lyft: https://www.youtube.com/watch?v=U4WspAKekqM

Envoy Proxy Redis doc: https://www.envoyproxy.io/docs/envoy/latest/intro/arch_overview/other_protocols/redis

Original article: https://medium.com/dev-genius/redis-topologies-d9e16a7fa8e0
