多云部署架构

介绍 (Introduction)

The solutions team at Volterra designs and maintains many potential use cases of the Volterra platform to demonstrate its potential value. These use cases are often made into instructional guides that customers use to get first hands-on experience with Volterra.

Volterra的解决方案团队设计并维护了Volterra平台的许多潜在用例,以展示其潜在价值。 这些用例通常被制作成指导性指南,供客户用来获得Volterra的第一手动手经验。

The solutions team wanted a quicker, automated method of deploying our use cases on demand internally with as little human input required as possible. Our deployments covered a hybrid multi-cloud environment using both IaaS platforms (AWS, Azure and GCP) and private data center environments (VMware vSphere and Vagrant KVM). We wanted to simplify the management of these environments by providing a single tool that could create deployments in any environment without the need for individual users to create additional access accounts or manage additional user credentials for each virtualization platform or IaaS provider. This tool also needed to scale to support multiple concurrent users and deployments.

解决方案团队希望有一种更快,自动化的方法来按需在内部部署用例,而所需的人工输入尽可能少。 我们的部署涵盖了使用IaaS平台(AWS,Azure和GCP)和私有数据中心环境(VMware vSphere和Vagrant KVM)的混合多云环境。 我们想通过提供一个单一工具来简化这些环境的管理,该工具可以在任何环境中创建部署,而无需单个用户为每个虚拟化平台或IaaS提供商创建额外的访问帐户或管理其他用户凭据。 该工具还需要扩展以支持多个并发用户和部署。

In summation, the central problem we wished to solve was to create an automated solutions use case deployment tool, capable of scaling and handling concurrent deployments into hybrid multi-cloud environments with as little user input as possible.

总之,我们希望解决的中心问题是创建一个自动化的解决方案用例部署工具,该工具能够在尽可能少的用户输入的情况下将并发部署扩展和处理到混合多云环境中。

碳改项目(AC) (The Altered-Carbon Project (AC))

To this end we created a project called Altered-Carbon, often shortened to AC. The AC project is a dynamic GitLab pipeline capable of creating over 20 different Volterra solution use cases. Through pipeline automation, we created single command “push button deployments” allowing users to easily deploy multiple use case clusters on demand or through scheduled daily cron jobs.

为此,我们创建了一个名为Alterned-Carbon的项目,通常简称为AC。 AC项目是一个动态的GitLab管道,能够创建20多种不同的Volterra解决方案用例。 通过管道自动化,我们创建了单个命令“按钮部署”,使用户可以根据需要或通过计划的日常cron作业轻松部署多个用例集群。

For reference, in AC we refer to each deployment as a STACK and each unique use case as a SLEEVE.

作为参考,在AC中,我们将每个部署称为STACK ,将每个唯一用例称为SLEEVE 。

项目发展 (Project Development)

We began the development of the AC project by automating the use case hello-cloud into the first SLEEVE. The hello-cloud use case creates a Volterra site in AWS and Azure, then combines these sites into a Volterra VK8s (virtual Kubernetes) cluster and deploys a 10 pod application across both sites using the VK8s. We began the process by creating additional terraform templates and shell scripts utilizing the Volterra API in order to create a fully automated workflow GitLab that CI/CD pipelines could manage. Then we set to work on making this deployment methodology reusable and scalable.

我们通过我自动化用例你好云n要第一套筒开始交流项目的开发。 hello-cloud用例在AWS和Azure中创建了一个Volterra站点,然后将这些站点组合到一个Volterra VK8(虚拟Kubernetes)集群中,并使用VK8在两个站点之间部署了一个10 Pod应用程序。 我们首先通过使用Volterra API创建其他terraform模板和shell脚本来创建该流程,以创建CI / CD管道可以管理的全自动工作流程GitLab。 然后,我们着手努力使这种部署方法可重用和可扩展。

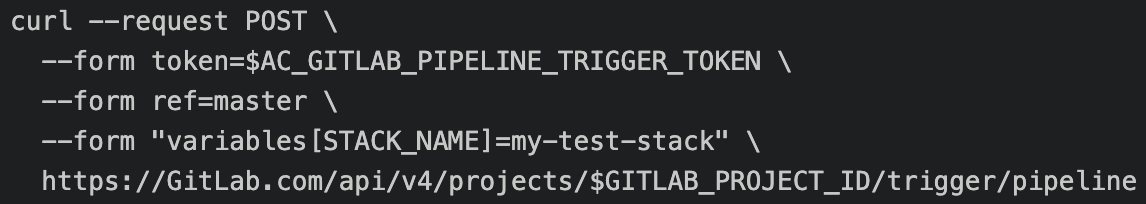

Adding unique naming conventions was the next issue we tackled in our design. To allow for multiple deployments of the use case on a single Volterra tenant environment, we needed to ensure our resources created in each STACK had unique names and would not try to create resources with names duplicating other STACKs in the same Volterra tenant environment. To solve possible naming convention conflicts we began to incorporate the idea of unique user-provided environmental variables in pipeline triggers that would become central to the project. The environmental variable STACK_NAME was introduced and used by terraform to include a user-defined string in the names of all resources associated with a specific STACK. Instead of triggering on commit, the AC pipelines jobs trigger conditions were set to only run when triggered by a GitLab users API call using a GitLab Project CI Trigger Token allowing the pipeline to be controlled by human input or external API calls. By using API calls similar to the following example, it allowed users to accomplish our goal of creating multiple deployments without resource conflicts with a single command.

添加独特的命名约定是我们在设计中解决的下一个问题。 为了在单个Volterra租户环境中允许用例的多个部署,我们需要确保在每个STACK中创建的资源具有唯一的名称,并且不要尝试在相同的Volterra租户环境中创建名称与其他STACK重复的资源。 为了解决可能的命名约定冲突,我们开始将用户提供的唯一环境变量的想法纳入管道触发器中,这将成为该项目的核心。 terraform引入并使用了环境变量STACK_NAME ,以在与特定STACK关联的所有资源的名称中包括用户定义的字符串。 除了在提交时触发外,AC管道作业触发条件设置为仅在使用GitLab项目CI触发令牌通过GitLab用户API调用触发时才运行,从而允许通过人工输入或外部API调用来控制管道。 通过使用类似于以下示例的API调用,它使用户可以实现我们创建多个部署的目标,而无需使用单个命令就可以避免资源冲突。

Then we endeavored to create additional deployment options from this model. The AWS and Azure site deployments of hello-cloud were also individual use cases we wished to deploy independently with AC. This caused us to run into our first major issue with GitLab. In GitLab CI/CD pipelines, all jobs in a projects pipeline are connected. This was counterintuitive, as many jobs needed in our hello-cloud deployment would not be needed in our AWS or Azure site deployments. We essentially wanted one GitLab Project CI pipeline to contain multiple independent pipelines that could be triggered on demand with separate sets of CI jobs when they were required.

然后,我们努力根据该模型创建其他部署选项。 hello-cloud的AWS和Azure站点部署也是我们希望与AC独立部署的个别用例。 这使我们遇到了GitLab的第一个主要问题。 在GitLab CI / CD管道中,项目管道中的所有作业都已连接。 这是违反直觉的,因为在我们的AWS或Azure站点部署中不需要在hello-cloud部署中需要的许多工作。 我们本质上希望一个GitLab项目CI管道包含多个独立的管道,这些管道可以根据需要在需要时使用单独的CI作业集触发。

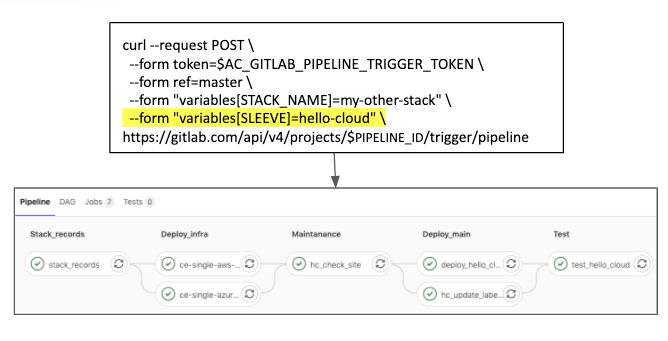

To solve this issue, we introduced the environmental variable SLEEVE into the pipeline structure that incorporated GitLab CI/CD only/except options. This allowed CI jobs triggered on any pipeline to be limited based on the value of the SLEEVE provided in the pipeline trigger. Finally, we had our initial 3 SLEEVE options: simple-aws, simple-azure and hello-cloud. Each SLEEVE would define what use case a user wished to deploy (thus controlling the CI jobs in a triggered pipeline) and a STACK_NAME to name the resources created by any triggered pipeline. The following command structure incorporating both environmental variables served as the most basic AC command that is still used today:

为解决此问题,我们将环境变量SLEEVE引入了仅包含GitLab CI / CD / except选项的管道结构。 这允许根据管道触发器中提供的SLEEVE的值来限制在任何管道上触发的CI作业。 最后,我们有最初的3个SLEEVE选项:简单aws,简单azure和hello-cloud。 每个SLEEVE都将定义用户希望部署的用例(从而控制触发管道中的CI作业)和STACK_NAME来命名由任何触发管道创建的资源。 结合了两个环境变量的以下命令结构是当今仍在使用的最基本的AC命令:

The following image shows a visualization of how changing the SLEEVE environmental variable will change the jobs triggered in each run of the AC pipeline.

下图显示了更改SLEEVE环境变量将如何更改在交流管道的每次运行中触发的作业的可视化效果。

SLEEVE “simple-aws” pipeline:

覆盖“简单法”管道:

SLEEVE “hello-cloud” pipeline:

扩展“ hello-cloud”管道:

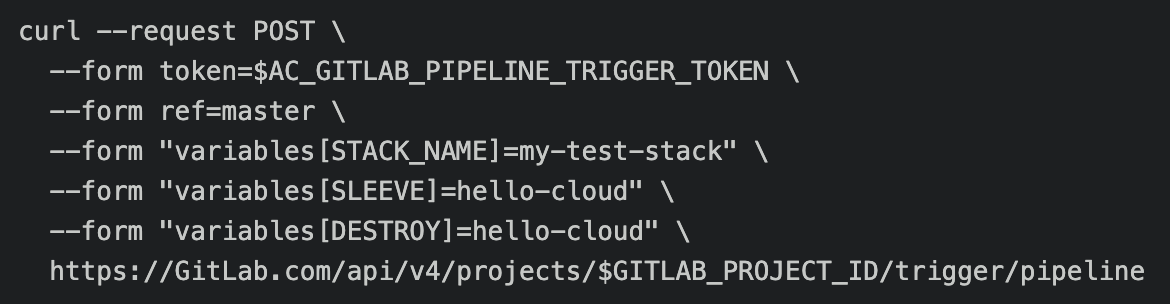

We also introduced additional jobs that would be triggered if the environmental variable DESTROY was provided in any pipeline trigger. This would provide a reverse option to remove the resources created by AC. The following is an example of that would remove the resources of an existing STACK:

我们还介绍了如果在任何管道触发器中提供了环境变量DESTROY就会触发的其他作业。 这将提供一个反向选项,以删除AC创建的资源。 下面是一个示例,该示例将删除现有堆栈的资源:

Other environmental variables were stored in GitLab with default values that could be overridden by adding values into the trigger command. For example, the API URL of our Volterra tenant environments was stored in the VOLTERRA_TENANT environmental variable. If a user added the VOLTERRA_TENANT environmental variable to their API command, this would override the default value and redirect the deployment to the desired location. This proved to be very important to our internal testing ability as the solutions team manages dozens of Volterra tenant environments and needs the ability to switch between them based on the task at hand:

其他环境变量以默认值存储在GitLab中,可以通过将值添加到触发命令中来覆盖这些默认值。 例如,我们的Volterra租户环境的API URL存储在VOLTERRA_TENANT环境变量中。 如果用户将VOLTERRA_TENANT环境变量添加到其API命令中,则它将覆盖默认值并将部署重定向到所需位置。 事实证明,这对于我们的内部测试能力非常重要,因为解决方案团队管理着数十个Volterra租户环境,并且需要能够根据手头的任务在它们之间进行切换:

These optional environment variables could be used to add a greater level of control onto deployments when needed, but allowed a more simplistic default deployment option for users who did not want to manage this additional overhead. It also allowed us to easily switch between deployments on staging and production environments which would prove essential for our largest AC consumer.

这些可选的环境变量可在需要时用于在部署上添加更高级别的控制,但对于不想管理此额外开销的用户,则允许使用更为简单的默认部署选项。 这也使我们能够轻松地在登台和生产环境的部署之间进行切换,这对于我们最大的AC用户而言至关重要。

解决方案用例:每晚回归测试 (Solutions Use Case: Nightly Regression Testing)

As mentioned before, each SLEEVE in AC represented a Volterra use case that would often be customers’ first interaction with the product. Ensuring these use cases were functional and bug-free was key to providing a strong first impression of the product. Before AC was created, testing the use cases to confirm that they were functional and up to date with the latest Volterra software and API versions was a time consuming task. The manual portions required of each use case created a limitation on regression testing, which was not undertaken often enough and was prone to human error.

如前所述,AC中的每个SLEEVE都代表一个Volterra用例,这通常是客户与产品的首次交互。 确保这些用例具有功能性和无缺陷是提供良好的产品第一印象的关键。 在创建AC之前,测试用例以确认它们的功能和最新Volterra软件和API版本的更新是一项耗时的任务。 每个用例所需的手册部分对回归测试造成了限制,这种限制没有足够频繁地进行,并且容易出现人为错误。

However with AC automation, daily scheduled jobs could be used to trigger a deployment of any specific use case with a SLEEVE and then remove the resources created after the deployment had either completed or failed to deploy. This was used on both staging and production environments to test if recent changes in either had affected the use case deployment or caused bugs in the Volterra software. We would then be able to update bugs found in our use case guides or catch Volterra software bugs quickly and submit resolution tickets.

但是,使用AC自动化,可以使用每日计划的作业触发带有SLEEVE的任何特定用例的部署,然后删除在部署完成或部署失败后创建的资源。 在登台环境和生产环境中都使用了此功能,以测试最近的更改是否影响了用例部署或是否导致Volterra软件中的错误。 然后,我们将能够更新在用例指南中找到的错误,或Swift捕获Volterra软件的错误并提交解决方案。

We created a separate repository and pipeline with scheduled jobs that would use the GitLab API trigger commands to concurrently generate multiple stacks using different SLEEVEs. Each smoke test job would start by triggering the creation of a stack with an independent AC pipeline. The smoke test job would then get the pipeline ID from the stdout of the pipeline trigger call and the GitLab API to monitor the status of the pipeline it triggered. When the pipeline completed (either with a success or failure) it would then run the destroy pipeline on the same STACK it created to remove the resources after the test.

我们使用计划的作业创建了一个单独的存储库和管道,这些作业将使用GitLab API触发命令来使用不同的SLEEVE同时生成多个堆栈。 每个烟雾测试作业都将通过触发具有独立AC管道的烟囱来开始。 然后,烟雾测试作业将从管道触发调用的stdout和GitLab API中获取管道ID,以监视其触发的管道的状态。 管道完成(成功或失败)后,它将在创建的同一堆栈上运行销毁管道,以在测试后删除资源。

The following image details this process and shows the jobs it triggers in AC for its creation and destruction commands:

下图详细描述了此过程,并显示了在AC中为其创建和销毁命令触发的作业:

When a smoke test pipeline failed, we were able to provide the environmental variables that could be used in an AC trigger to reproduce the issue. This could be provided in our technical issue tickets allowing our developers to easily recreate the failed deployments. Then as more SLEEVES were completed, we added more and more jobs to the CI pipelines allowing more test coverage. To further improve the visibility of these tests we added a Slack integration and had the smoke test jobs send the success or failure message of each pipeline run with links and details to the corresponding CI web pages in both the Altered-Carbon and Smoke-Test projects.

当烟雾测试管道失败时,我们能够提供可在交流触发中使用的环境变量来重现该问题。 这可以在我们的技术发行单中提供,从而使我们的开发人员可以轻松地重新创建失败的部署。 然后,随着更多SLEEVES的完成,我们向CI管道添加了越来越多的作业,从而实现了更多的测试覆盖范围。 为了进一步提高这些测试的可见性,我们添加了Slack集成,并通过烟雾测试作业将每个管道的成功或失败消息以及链接和详细信息发送到Alterned-Carbon和Smoke-Test项目中的相应CI网页。 。

堆栈记录保存和管道Web URL导航 (Stack Record Keeping and Pipeline Web URL Navigation)

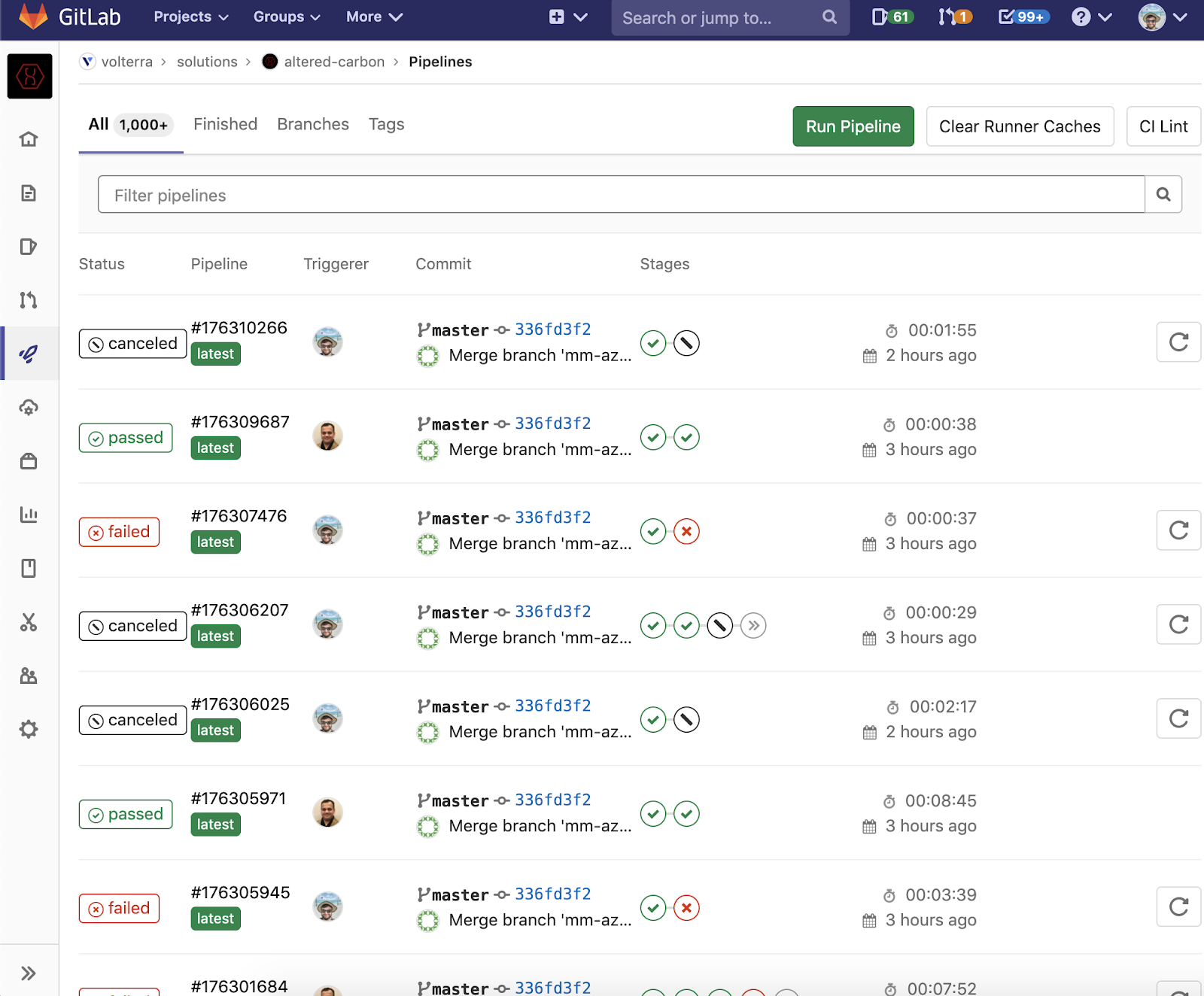

The complexity of the project increased as AC evolved, added additional users from the solutions team and created more and more stacks. This began to create fundamental problems when navigating the GitLab Pipeline web UI. We were using GitLab pipelines in a very untraditional way, which rendered the GitLab pipeline web UI difficult to use in tracing individual pipeline runs that related to the STACKs we were creating.

随着AC的发展,项目的复杂性增加,解决方案团队增加了更多用户,并创建了越来越多的堆栈。 浏览GitLab Pipeline Web UI时,这开始产生根本性的问题。 我们以非常传统的方式使用GitLab管道,这使得GitLab管道Web UI难以用于跟踪与我们所创建的STACK相关的各个管道运行。

GitLab pipelines that manage deployments through GitOps workflows seem best suited when it is used against a static defined set of clusters. In this case, each run of a GitLab pipeline would affect the same clusters and resources every time. The deployment history of these clusters in this case is the pipeline history visualized in the GitLab web UI. However, AC is dynamic and handles a constantly changing set of resources where each pipeline run can utilize a totally different set of jobs, managing different STACKs of resources, in different virtualization providers. This differentiation created by the SLEEVE and STACK conventions meant that it is very difficult to determine which pipeline corresponds to which stack. For example, we can take a look at the GitLab CI/CD pipeline web UI for AC:

通过GitOps工作流管理部署的GitLab管道似乎最适合用于静态定义的集群集。 在这种情况下,每次运行GitLab管道都会影响相同的集群和资源。 在这种情况下,这些集群的部署历史记录是在GitLab Web UI中可视化的管道历史记录。 但是,AC是动态的,可以处理不断变化的资源集,其中每个管道运行可以利用完全不同的作业集,在不同的虚拟化提供程序中管理不同的STACK资源。 SLEEVE和STACK约定创建的这种区分意味着很难确定哪个管道对应于哪个堆栈。 例如,我们可以看一下AC的GitLab CI / CD管道Web UI:

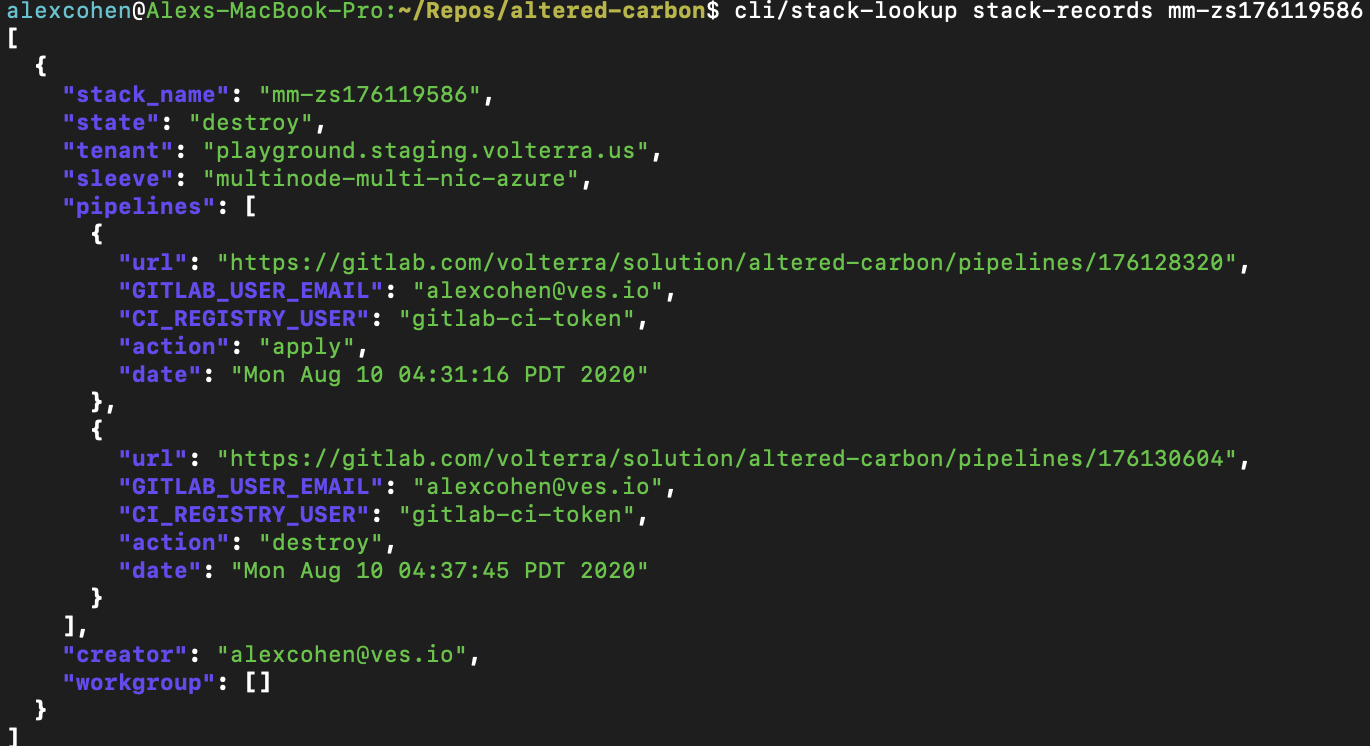

From this view, we cannot determine which STACK or SLEEVE any single pipeline is changing without viewing each and every pipeline individually. When this is running hundreds of pipelines a day it can be tedious to find the specific pipeline run that either created or destroyed any one STACK in particular or locate specific details about said STACK. To solve this problem early on in the AC development, we added a simple record keeping system. A job would run before any pipeline called stack-records. This job would collect details on the stack upon creation and generate a json file that would be uploaded to our S3 storage bucket used to store our tfstate backups. Below we see an example of a stack record:

从这个角度看,如果不单独查看每个管道,我们将无法确定单个管道正在更改哪个STACK或SLEEVE。 当它每天运行数百条管道时,查找特别创建或销毁任何一个STACK的特定管道运行或查找有关该STACK的特定细节可能很繁琐。 为了在AC开发早期解决此问题,我们添加了一个简单的记录保存系统。 作业将在任何称为stack-record的管道之前运行。 这项工作将在创建时收集堆栈上的详细信息,并生成一个json文件,该文件将上传到用于存储tfstate备份的S3存储桶中。 下面我们看到一个堆栈记录的示例:

The stack-record.json files include details of each stack such as:

stack-record.json文件包含每个堆栈的详细信息,例如:

- Which Volterra tenant environment was used. 使用哪个Volterra租户环境。

- What SLEEVE was used. 使用了什么SLEEVE。

- If the STACK was either currently running in the “apply” state or if its resources were removed with the “destroy” state. 如果STACK当前处于“应用”状态,或者其资源已被“破坏”状态删除。

- Which GitLab user created the stack. 哪个GitLab用户创建了堆栈。

- A workgroup listing other users with access to change a STACK. 工作组列出了有权更改堆栈的其他用户。

- And most importantly, a pipelines array that would include the web url of each pipeline run that ran against the stack, who triggered the pipeline, if the pipeline either applied or destroyed a stack and when the pipeline was triggered. 最重要的是,如果管道应用或破坏了堆栈以及何时触发了管道,则管道数组将包括与管道相撞的每个管道运行的Web url,谁触发了管道。

This provided a recorded history of all pipeline URLs associated with any one stack and a simple CLI script that can access these files via S3 API calls was created to simplify the process further. Our users consuming AC could use these documents to track the history of stacks and view when these stacks were changed by viewing the stack records.

这提供了与任何一个堆栈关联的所有管道URL的记录历史记录,并创建了一个可以通过S3 API调用访问这些文件的简单CLI脚本,以进一步简化流程。 我们使用AC的用户可以使用这些文档来跟踪堆栈的历史记录,并通过查看堆栈记录来查看何时更改了这些堆栈。

Stack records also allowed us to implement certain levels of user control and error catching over the pipelines we deploy. For instance, a change made to the Volterra Software after the creation of AC forced us to begin limiting site cluster names (a value derived from the STACK_NAME value) used in Volterra site creation to a limit of 17 characters. So we added a check onto the stack records job that would cause pipelines to fail before running any deployment steps if the STACK_NAME violated the character limit. Other custom controls were added such as the addition of user permission levels in AC that limit which users have access to change specific stacks controlled by AC.

堆栈记录还使我们能够对部署的管道实施一定级别的用户控制和错误捕获。 例如,创建AC后对Volterra软件进行的更改迫使我们开始将Volterra网站创建中使用的网站集群名称(从STACK_NAME值派生的值)限制为最多17个字符。 因此,我们在堆栈记录作业中添加了一项检查,如果STACK_NAME违反了字符数限制,这将导致管道在运行任何部署步骤之前失败。 添加了其他自定义控件,例如在AC中添加了用户权限级别,以限制哪些用户有权更改由AC控制的特定堆栈。

Admin level permissions where the top two developers of AC have the ability to create or destroy any stack for debugging purposes.

管理员级别的权限,AC的前两名开发人员可以创建或破坏任何堆栈以进行调试。

The owner or creator level is for a GitLab user who initially creates a STACK. Their GitLab email value is recorded in the stack records as the creator and has the ability to create or destroy a stack.

所有者或创建者级别适用于最初创建堆栈的GitLab用户。 他们的GitLab电子邮件值作为创建者记录在堆栈记录中,并且具有创建或销毁堆栈的能力。

Then we have workgroup level permissions, the GitLab user email can be added into the stack record workgroup array granting users other than the creator the ability to apply or destroy a STACK.

然后,我们拥有工作组级别的权限,可以将GitLab用户电子邮件添加到堆栈记录工作组数组中,从而为创建者以外的用户授予应用或销毁堆栈的能力。

- A user without any of these permission levels will not be able to change a stack. If such a user attempts to change a stack they have no permission over then the stack-records job will fail before any resources are deployed. 没有任何这些权限级别的用户将无法更改堆栈。 如果此类用户尝试更改堆栈,则他们没有权限,那么在部署任何资源之前,堆栈记录作业将失败。

内部使用和当前测试范围 (Internal Use and Current Test Coverage)

Today AC has become central to the solutions team providing most of our regression testing and automation. We find its main uses are regression smoke testing, scale testing, product billing tests and for simplified deployments used in product demos.

今天,AC已成为解决方案团队的核心,提供了我们大多数的回归测试和自动化功能。 我们发现它的主要用途是回归烟雾测试,规模测试,产品账单测试以及用于产品演示的简化部署。

The automated deployments have found their greatest consumer in our nightly regression tests. Each night we test each of our SLEEVES against a production and staging environment by triggering a deployment and then tearing down the resources created. When changes occur we are quickly able to detect them and submit bug reports to update our guides. Our current test coverage includes:

在我们的每晚回归测试中,自动化部署已经找到了最大的用户。 每天晚上,我们通过触发部署然后拆除创建的资源,针对生产和登台环境测试每个SLEEVES。 发生更改时,我们能够Swift检测到它们并提交错误报告以更新我们的指南。 我们目前的测试范围包括:

- Secure Kubernetes Gateway. 安全的Kubernetes网关

- Web Application Security. Web应用程序安全性。

- Network Edge Applications that deploy our standard 10 pod “hipster-co” application and a more lightweight single pod application. 部署我们的标准10容器“ hipster-co”应用程序和更轻量的单容器应用程序的网络边缘应用程序。

- Hello-Cloud. 你好,云。

- CE single node single network interface volterra sites across AWS, GCP, Azure, VSphere and KVM/Vagrant. 跨AWS,GCP,Azure,VSphere和KVM / Vagrant的CE单节点单网络接口volterra站点。

- CE single node double network interface volterra sites across AWS, GCP, Azure, VSphere and KVM/Vagrant. 跨AWS,GCP,Azure,VSphere和KVM / Vagrant的CE单节点双网络接口volterra站点。

- Scaled CE single node single network interface volterra sites deployments (using GCP, VSphere and KVM), creating a number of ce single node single nic sites to test Volterra scaling abilities. 扩展的CE单节点单网络接口volterra站点部署(使用GCP,VSphere和KVM),创建了许多ce单节点单nic站点以测试Volterra扩展能力。

- CE single node multi network interface volterra sites across AWS, GCP, Azure, VSphere and KVM/Vagrant. 跨AWS,GCP,Azure,VSphere和KVM / Vagrant的CE单节点多网络接口volterra站点。

- CE multi node single network interface volterra sites across AWS, GCP, Azure, VSphere and KVM/Vagrant. 跨AWS,GCP,Azure,VSphere和KVM / Vagrant的CE多节点单网络接口volterra站点。

- CE multi node multi network interface volterra sites across AWS, GCP, Azure, VSphere and KVM/Vagrant. 跨AWS,GCP,Azure,VSphere和KVM / Vagrant的CE多节点多网络接口volterra站点。

We also have specialized scale testing sleeves that automate the process of deploying up to 400 sites at once to test out the scaling capabilities of Volterra software and has been tested using GCP, vSphere and KVM.

我们还拥有专门的规模测试套件,可自动完成一次部署多达400个站点的过程,以测试Volterra软件的扩展功能,并且已经使用GCP,vSphere和KVM进行了测试。

The rapid automated deployment of use cases allows solutions team members to focus on other tasks, improving internal efficiency. The solutions team often uses AC to deploy dozens of KVM, GCP and vSphere sites for recording video demos saving us time in creating Volterra sites to use in creating more complex infrastructure, building on top of the automation we have in place. This is also used for daily cron jobs that test the billing features of the Volterra platform by automating deploying AWS, web app security, secure Kubernetes gateway and network edge application use cases on a specialized Volterra tenant that records billing information.

用例的快速自动化部署使解决方案团队成员可以专注于其他任务,从而提高内部效率。 解决方案团队经常使用AC部署数十个KVM,GCP和vSphere站点来录制视频演示,从而在我们现有的自动化基础上,为我们节省创建Volterra站点以用于创建更复杂的基础结构的时间。 这也用于日常cron作业,通过在专门记录帐单信息的Volterra租户上自动部署AWS,Web应用程序安全性,安全的Kubernetes网关和网络边缘应用程序用例来测试Volterra平台的帐单功能。

未来计划和路线图 (Future Plans and Roadmap)

Our use of AC is already yielding very successful results and there are still many more features and improvements to add onto the project in the near future.

我们对AC的使用已经产生了非常成功的结果,并且在不久的将来,还有更多功能和改进可以添加到项目中。

The biggest addition to the project is the constant addition of new SLEEVE options to cover additional use case deployments. For each new SLEEVE added, we add a new job to our nightly regression smoke tests providing more coverage for solutions deployment projects. Most previous sleeves focused on single node and single network interface use cases but we have recently expanded our SLEEVE coverage to multi node Volterra site clusters and multi-network interface use cases across AWS, Azure, GCP, VMWare and KVM/Vagrant platforms.

该项目最大的增加是不断增加新的SLEEVE选项,以涵盖其他用例部署。 对于添加的每个新SLEEVE,我们都会在每晚回归烟雾测试中添加新工作,从而为解决方案部署项目提供更多覆盖范围。 以前的大多数研究都集中在单节点和单个网络接口的用例上,但我们最近将SLEEVE的范围扩展到了跨AWS,Azure,GCP,VMWare和KVM / Vagrant平台的多节点Volterra网站群集和多网络接口用例。

We also seek to improve our stack record system. We will be increasing the level of detail in our AC records and adding improved search functionalities with the incorporation of RDS database stores for our records. The goal is that we will be able to provide faster searches of our AC environment and more selective search functionality such as stack lookups based on stack state, stack creators, etc. Using these records to construct a custom UI to more efficiently visualize deployments created with AC is also on our roadmap.

我们还寻求改善我们的堆栈记录系统。 我们将通过添加RDS数据库存储来增加AC记录中详细信息的级别,并增加改进的搜索功能。 目标是我们将能够提供更快的交流环境搜索和更多选择性搜索功能,例如基于堆栈状态,堆栈创建者等的堆栈查找。使用这些记录来构建自定义UI,以更有效地可视化使用AC也在我们的路线图上。

多云部署架构

7007

7007

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?