Image Classification with TFRecords

Introduction to TFRecords

TFRecords store a sequence of binary records that are read linearly. The format is useful for storing data because it can be read efficiently. The TensorFlow tutorial on TFRecord and tf.train.Example covers the format in more detail.

When working with large volumes of data, a binary storage format can have a significant impact on the performance of the input pipeline and, as a consequence, on the training time of your model. Binary data takes up less space on disk, takes less time to copy, and can be read much more efficiently. This is especially true if your data is stored on spinning disks, whose read/write performance is much lower than that of SSDs.

Apart from performance, TFRecords are optimized for use with TensorFlow in several ways. First, the format makes it easy to combine multiple datasets and integrates seamlessly with the data import and preprocessing functionality provided by the library. This is an advantage especially for datasets that are too large to fit in memory, because only the data required at a given time (e.g. a batch) is loaded from disk and processed. Another major advantage is that TFRecords can store sequence data, for instance a time series or word encodings, in a way that allows very efficient and (from a coding perspective) convenient import of this type of data.
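To make the point about sequence data concrete, here is a minimal sketch using tf.train.SequenceExample. The toy time series, the feature names `label` and `values`, and the choice to store the label as context are illustrative assumptions, not part of the original article.

```python
import tensorflow as tf

# Hypothetical toy data: one short time series with a single integer label.
series = [0.1, 0.2, 0.3, 0.4]
series_label = 1

# A SequenceExample separates per-sequence context from per-step features.
sequence_example = tf.train.SequenceExample(
    context=tf.train.Features(feature={
        'label': tf.train.Feature(
            int64_list=tf.train.Int64List(value=[series_label])),
    }),
    feature_lists=tf.train.FeatureLists(feature_list={
        'values': tf.train.FeatureList(feature=[
            tf.train.Feature(float_list=tf.train.FloatList(value=[v]))
            for v in series
        ]),
    }),
)
serialized = sequence_example.SerializeToString()

# Parsing mirrors the structure used when writing.
context, sequence = tf.io.parse_single_sequence_example(
    serialized,
    context_features={'label': tf.io.FixedLenFeature([], tf.int64)},
    sequence_features={
        'values': tf.io.FixedLenSequenceFeature([], tf.float32),
    },
)
parsed_label = int(context['label'].numpy())
parsed_values = sequence['values'].numpy()
```

The serialized string can be written with tf.io.TFRecordWriter in the same way as the image examples later in this article; the sequence length does not have to be fixed in advance.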

The next few sections cover converting an image dataset to TFRecords, loading the data, training a model, and making predictions.

Data Download

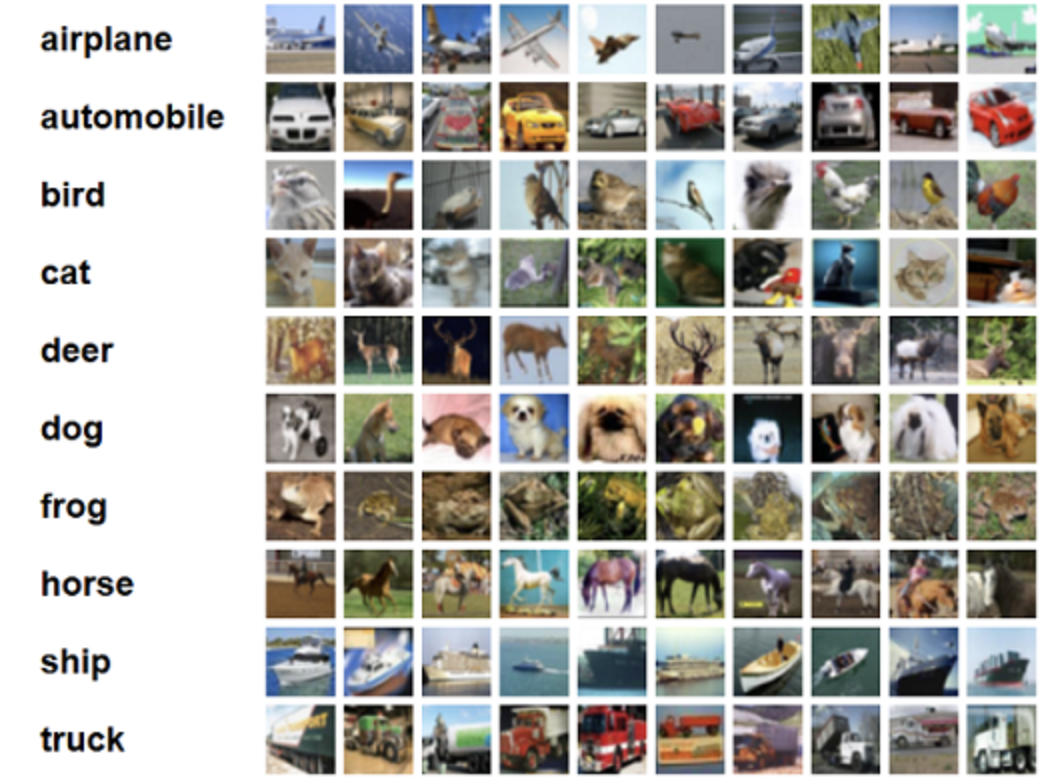

For this illustration, I use the widely used CIFAR-10 image dataset. More details are available on the CIFAR-10 dataset page. The code snippet below loads CIFAR-10 directly from TensorFlow.

    import os

    import matplotlib.pyplot as plt
    import numpy as np
    import tensorflow as tf
    from tensorflow.keras.datasets import cifar10

    # Download (on first use) and load CIFAR-10 as NumPy arrays.
    (x_train, y_train), (x_test, y_test) = cifar10.load_data()

    print('x_train shape:', x_train.shape)
    print(x_train.shape[0], 'train samples')
    print(x_test.shape[0], 'test samples')

Convert dataset to TFRecords

A TFRecord file stores data as a sequence of binary strings, so we need to specify the structure of our data before writing it to the file.

We use tf.train.Example for this. To write an image to a TFRecord, we need the image itself and its corresponding label. In the code snippet below, we define two features, image and label, within the tf.train.Example.

The image feature stores the image array as bytes and the label feature stores the label as an int. You could also store the label as a string, or store additional information such as height, width, and depth.
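As an illustration of the optional fields mentioned above, the sketch below stores a string label and the image dimensions alongside the image bytes, then parses them back. The helper names (`_bytes_feature`, `_int64_feature`, `make_example`), the dummy image, and the label 'frog' are hypothetical and only serve the example.

```python
import numpy as np
import tensorflow as tf


def _bytes_feature(value):
    # Wraps a bytes object in a tf.train.Feature.
    return tf.train.Feature(bytes_list=tf.train.BytesList(value=[value]))


def _int64_feature(value):
    # Wraps a Python int in a tf.train.Feature.
    return tf.train.Feature(int64_list=tf.train.Int64List(value=[value]))


def make_example(image, label_name):
    """Builds an Example holding the image bytes, its shape, and a string label."""
    height, width, depth = image.shape
    return tf.train.Example(features=tf.train.Features(feature={
        'image': _bytes_feature(image.tobytes()),
        'label': _bytes_feature(label_name.encode('utf-8')),
        'height': _int64_feature(height),
        'width': _int64_feature(width),
        'depth': _int64_feature(depth),
    }))


# A dummy 32x32 RGB image stands in for a real CIFAR-10 sample.
dummy_image = np.zeros((32, 32, 3), dtype=np.uint8)
serialized = make_example(dummy_image, 'frog').SerializeToString()

feature_description = {
    'image': tf.io.FixedLenFeature([], tf.string),
    'label': tf.io.FixedLenFeature([], tf.string),
    'height': tf.io.FixedLenFeature([], tf.int64),
    'width': tf.io.FixedLenFeature([], tf.int64),
    'depth': tf.io.FixedLenFeature([], tf.int64),
}
parsed = tf.io.parse_single_example(serialized, feature_description)

# The stored shape lets the reader rebuild the array without hard-coding 32x32x3.
shape = tf.stack([parsed['height'], parsed['width'], parsed['depth']])
restored = tf.reshape(tf.io.decode_raw(parsed['image'], tf.uint8), shape)
```

Storing the shape is mainly useful when images differ in size; for CIFAR-10, where every image is 32x32x3, the two-feature layout used in this article is sufficient.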

    # Create the output directories if they do not exist yet.
    os.makedirs('./data/validation', exist_ok=True)
    os.makedirs('./data/train', exist_ok=True)


    def write_tfrecords(x, y, filename):
        # The context manager closes the writer so all records are flushed to disk.
        with tf.io.TFRecordWriter(filename) as writer:
            # CIFAR-10 labels have shape (n, 1); flatten them to plain integers.
            for image, label in zip(x, y.flatten()):
                example = tf.train.Example(features=tf.train.Features(
                    feature={
                        'image': tf.train.Feature(bytes_list=tf.train.BytesList(value=[image.tobytes()])),
                        'label': tf.train.Feature(int64_list=tf.train.Int64List(value=[int(label)])),
                    }))
                writer.write(example.SerializeToString())


    write_tfrecords(x_test, y_test, './data/validation/validation.tfrecords')
    write_tfrecords(x_train, y_train, './data/train/train.tfrecords')

Load the dataset

The process of reading TFRecords is straightforward:

- Read the TFRecord file using tf.data.TFRecordDataset.

- Define the features you expect in the TFRecord using tf.io.FixedLenFeature and tf.io.VarLenFeature, matching what was defined when building the tf.train.Example.

- Parse one tf.train.Example (one record) at a time using tf.io.parse_single_example.

- Shuffle the dataset and extract batches of batch_size.

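    # Not shown in the original; inferred from the features written by write_tfrecords.
    image_feature_description = {
        'image': tf.io.FixedLenFeature([], tf.string),
        'label': tf.io.FixedLenFeature([], tf.int64),
    }


    def _parse_image_function(example_proto):
        # Parse a single serialized tf.train.Example into a dict of tensors.
        features = tf.io.parse_single_example(example_proto, image_feature_description)
        # Decode the raw bytes back into a 32x32x3 uint8 image.
        image = tf.io.decode_raw(features['image'], tf.uint8)
        image.set_shape([3 * 32 * 32])
        image = tf.reshape(image, [32, 32, 3])
        # One-hot encode the label for categorical cross-entropy.
        label = tf.cast(features['label'], tf.int32)
        label = tf.one_hot(label, 10)
        return image, label

Visualize input images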
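The calls that follow use a read_dataset helper that this article does not show. Here is a minimal sketch of what it might look like. The signature is inferred from those calls, and several details are assumptions: the meaning of the arguments (epochs, batch size, directory, file stem), the `<channel>/<channel_name>.tfrecords` file layout, the shuffle buffer size, and the use of drop_remainder. The block carries its own parse function (`_parse`) so that it runs on its own.

```python
import os

import tensorflow as tf

# Assumed to mirror the features written earlier: raw image bytes plus an int64 label.
FEATURES = {
    'image': tf.io.FixedLenFeature([], tf.string),
    'label': tf.io.FixedLenFeature([], tf.int64),
}


def _parse(example_proto):
    # Decode one serialized Example into a 32x32x3 image and a one-hot label.
    features = tf.io.parse_single_example(example_proto, FEATURES)
    image = tf.reshape(tf.io.decode_raw(features['image'], tf.uint8), [32, 32, 3])
    label = tf.one_hot(tf.cast(features['label'], tf.int32), 10)
    return image, label


def read_dataset(epochs, batch_size, channel, channel_name):
    """Hypothetical helper: reads <channel>/<channel_name>.tfrecords into batches."""
    filename = os.path.join(channel, channel_name + '.tfrecords')
    dataset = tf.data.TFRecordDataset([filename])
    dataset = dataset.map(_parse, num_parallel_calls=tf.data.AUTOTUNE)
    # Repeat the data `epochs` times, then shuffle and batch it.
    dataset = dataset.repeat(epochs)
    dataset = dataset.shuffle(buffer_size=10 * batch_size)
    dataset = dataset.batch(batch_size, drop_remainder=True)
    # Prefetch so that reading overlaps with training.
    return dataset.prefetch(tf.data.AUTOTUNE)
```

Because the dataset is built lazily, only the records needed for the current batch (plus the shuffle and prefetch buffers) are held in memory. Passing several file names to tf.data.TFRecordDataset is one way to combine multiple TFRecord files into a single dataset.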

    train_dataset = read_dataset(10, 100, 'data/train', 'train')
    validation_dataset = read_dataset(10, 100, 'data/validation', 'validation')


    def show_batch(image_batch, label_batch):
        # Plot the batch on a 10x10 grid (one cell per image in a batch of 100).
        plt.figure(figsize=(50, 50))
        for n in range(min(100, len(image_batch))):
            ax = plt.subplot(10, 10, n + 1)
            # Scale pixel values from [0, 255] to [0, 1] for display.
            plt.imshow(image_batch[n] / 255.0)
            plt.axis("off")
        plt.show()


    image_batch, label_batch = next(iter(train_dataset))
    show_batch(image_batch.numpy(), label_batch.numpy())

Model Training and Prediction

Since we are using a relatively small, balanced dataset with 10 classes, we wrote a custom model architecture similar to LeNet.

Our evaluation metric is accuracy, but you could choose other metrics such as average precision or area under the ROC curve.
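As a rough illustration of the alternative metrics, the sketch below evaluates a handful of made-up predictions with Keras metric objects. The labels and probabilities are hypothetical, and the area under the precision-recall curve is used here as a common stand-in for average precision rather than an exact equivalent.

```python
import numpy as np
import tensorflow as tf

# Hypothetical one-hot labels and predicted probabilities: 4 samples, 3 classes.
y_true = np.array([[1, 0, 0],
                   [0, 1, 0],
                   [0, 0, 1],
                   [0, 1, 0]], dtype=np.float32)
y_pred = np.array([[0.8, 0.1, 0.1],
                   [0.2, 0.7, 0.1],
                   [0.1, 0.2, 0.7],
                   [0.3, 0.6, 0.1]], dtype=np.float32)

metrics = {
    'accuracy': tf.keras.metrics.CategoricalAccuracy(),
    # With default settings, AUC pools all classes into one binary problem.
    'roc_auc': tf.keras.metrics.AUC(curve='ROC'),
    'pr_auc': tf.keras.metrics.AUC(curve='PR'),
}

results = {}
for name, metric in metrics.items():
    metric.update_state(y_true, y_pred)
    results[name] = float(metric.result())

print(results)
```

The same metric objects can be passed to model.compile through its metrics argument in place of, or in addition to, 'accuracy'.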

    from tensorflow.keras.constraints import max_norm
    from tensorflow.keras.layers import (Activation, BatchNormalization, Conv2D,
                                         Dense, Dropout, Flatten, MaxPooling2D)
    from tensorflow.keras.models import Sequential
    from tensorflow.keras.optimizers import SGD

    # CIFAR-10 images are 32x32 RGB and fall into 10 classes.
    HEIGHT, WIDTH, DEPTH = 32, 32, 3
    NUM_CLASSES = 10


    def keras_model_fn(learning_rate, optimizer):
        # Note: the optimizer argument is unused; SGD is always used.
        model = Sequential()

        # Block 1: two 32-filter convolutions, then pooling and dropout.
        model.add(Conv2D(32, (3, 3), padding='same', name='inputs', input_shape=(HEIGHT, WIDTH, DEPTH)))
        model.add(BatchNormalization())
        model.add(Activation('relu'))
        model.add(Conv2D(32, (3, 3)))
        model.add(BatchNormalization())
        model.add(Activation('relu'))
        model.add(MaxPooling2D(pool_size=(2, 2)))
        model.add(Dropout(0.2))

        # Block 2: two 64-filter convolutions.
        model.add(Conv2D(64, (3, 3), padding='same'))
        model.add(BatchNormalization())
        model.add(Activation('relu'))
        model.add(Conv2D(64, (3, 3)))
        model.add(BatchNormalization())
        model.add(Activation('relu'))
        model.add(MaxPooling2D(pool_size=(2, 2)))
        model.add(Dropout(0.3))

        # Block 3: two 128-filter convolutions.
        model.add(Conv2D(128, (3, 3), padding='same'))
        model.add(BatchNormalization())
        model.add(Activation('relu'))
        model.add(Conv2D(128, (3, 3)))
        model.add(BatchNormalization())
        model.add(Activation('relu'))
        model.add(MaxPooling2D(pool_size=(2, 2)))
        model.add(Dropout(0.4))

        # Classifier head: one dense layer followed by a softmax over the classes.
        model.add(Flatten())
        model.add(Dense(512, kernel_constraint=max_norm(2.)))
        model.add(Activation('relu'))
        model.add(Dropout(0.5))
        model.add(Dense(NUM_CLASSES))
        model.add(Activation('softmax'))

        opt = SGD(learning_rate=learning_rate)
        model.compile(loss='categorical_crossentropy',
                      optimizer=opt,
                      metrics=['accuracy'])
        return model

The next step is to fit the model, save it, and predict on the test set.

    import logging

    import numpy as np
    import sklearn.metrics

    # `model` is the compiled network returned by keras_model_fn; `epochs`, `args`,
    # and METRIC_ACCURACY come from the surrounding training script (not shown).
    # batch_size is not passed to fit() because train_dataset is already batched.
    model.fit(x=train_dataset,
              epochs=epochs)

    # Saving trained model
    model.save(args.model_output + '/1')

    # Collect the batched validation set into NumPy arrays.
    test_x, test_y = [], []
    for images, labels in validation_dataset:
        test_x.append(images.numpy())
        test_y.append(labels.numpy())
    test_x = np.concatenate(test_x)
    test_y = np.concatenate(test_y)

    # Use the model to predict the labels
    test_predictions = model.predict(test_x)
    test_y_pred = np.argmax(test_predictions, axis=1)
    test_y_true = np.argmax(test_y, axis=1)

    # Evaluating model accuracy and logging it as a scalar for TensorBoard hyperparameter visualization.
    accuracy = sklearn.metrics.accuracy_score(test_y_true, test_y_pred)
    tf.summary.scalar(METRIC_ACCURACY, accuracy, step=1)

    logging.info('Test accuracy:{}'.format(accuracy))

Conclusion

Although converting raw data to TFRecords may seem arduous, it is worth the effort. A few notable advantages are:

- TFRecords can occupy less disk space than raw JPEGs, PNGs, or CSVs.

- TFRecords improve I/O performance: they take less time to copy and can be read much more efficiently from disk.

- TensorFlow provides seamless integration of TFRecords with input pipelines.

- TFRecords can store sequential data.

- No change is required in the model definition or fit statements when working with TFRecords.

- As a consequence of improved I/O performance, TFRecords reduce training time.

Translated from: https://towardsdatascience.com/how-to-train-an-image-classifier-on-tfrecord-files-97a98a6a1d7a
