English and Natural Language Processing

Natural Language Processing (NLP) is growing in use and plays a vital role in many systems, from resume parsing in hiring to automated telephone services. You can also find it in everyday technology such as chatbots, virtual assistants, and modern spam detection. However, the development and deployment of NLP technology is not as equitable as it may appear.

To put this into perspective, although more than 7,000 languages are spoken around the world, the vast majority of NLP work focuses on just seven key languages: English, Chinese, Urdu, Farsi, Arabic, French, and Spanish.

Even among these seven languages, most technological advances have been made in English-based NLP systems. For example, optical character recognition (OCR) is still less capable for non-English languages. And anyone who has used an online machine translation service knows how severe the limitations become once you venture beyond the key languages listed above.

How Are NLP Pipelines Developed?

To understand the language disparity in NLP, it helps to first understand how these systems are developed. A typical pipeline starts by gathering and labeling data. A large dataset is essential here, because data is needed both to train and to test the model.

When a pipeline is developed for a language with little available data, it helps if the language has strong, regular patterns. Small datasets can also be augmented with techniques such as synonym replacement (swapping words for near-synonyms to create new variants of existing sentences), back-translation (translating sentences into another language and back to produce similarly phrased sentences that bulk up the dataset), and replacing words with related words of the same part of speech.
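
As a rough illustration of the first technique, the sketch below expands a small dataset with synonym replacement. The synonym table `SYNONYMS` and the helpers `synonym_replace` and `augment` are hypothetical; a real pipeline for a low-resource language would need a synonym source built for that language, and this sketch does not attempt back-translation or part-of-speech-based replacement.

```python
import random

# Hypothetical, hand-built synonym table (English only for readability).
# A real pipeline would need a lexicon for the target language.
SYNONYMS = {
    "quick": ["fast", "rapid"],
    "happy": ["glad", "cheerful"],
    "big": ["large", "huge"],
}


def synonym_replace(sentence, synonyms, n_replacements=1, rng=None):
    """Return a copy of `sentence` with up to `n_replacements` words swapped
    for a randomly chosen synonym. Lookup is case-insensitive and the
    replacement is inserted exactly as it appears in the table."""
    rng = rng or random.Random()
    words = sentence.split()
    # Positions of words that have at least one known synonym.
    candidates = [i for i, w in enumerate(words) if w.lower() in synonyms]
    rng.shuffle(candidates)
    for i in candidates[:n_replacements]:
        words[i] = rng.choice(synonyms[words[i].lower()])
    return " ".join(words)


def augment(sentences, synonyms, variants_per_sentence=2, seed=0):
    """Return the original sentences followed by new, de-duplicated
    synonym-replaced variants of each one."""
    rng = random.Random(seed)  # fixed seed keeps the augmented set reproducible
    augmented = list(sentences)
    for sentence in sentences:
        for _ in range(variants_per_sentence):
            variant = synonym_replace(sentence, synonyms, rng=rng)
            # Skip unchanged sentences and variants we already produced.
            if variant not in augmented:
                augmented.append(variant)
    return augmented


if __name__ == "__main__":
    data = ["the quick dog looks happy", "a big house"]
    for line in augment(data, SYNONYMS):
        print(line)
```

Because each call replaces only one word by default, the variants stay close to the originals; raising `n_replacements` trades fidelity for variety.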

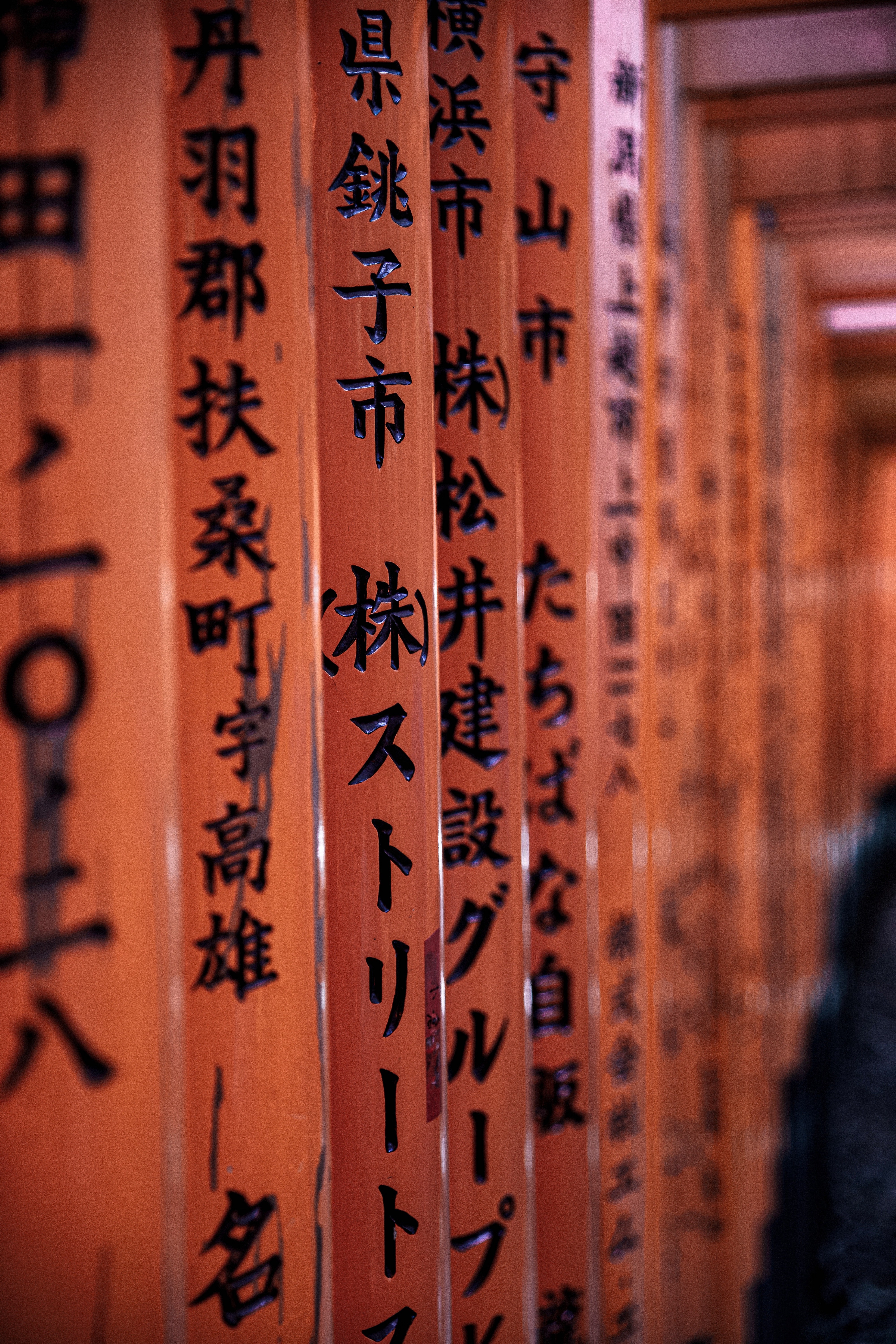

Language data also requires significant cleaning. When a non-English language with special characters, such as Chinese, is used, proper Unicode normalization is typically required. Normalization converts text into one consistent representation, so that characters that look identical but can be stored as different sequences of code points (for example, an accented letter stored as a single code point or as a base letter followed by a combining accent) are encoded the same way. This reduces the risk of processing errors. The issue is amplified for languages like Croatian that rely heavily on accents to change the meaning of a word: in Croatian, a single accent can turn a positive word into a negative one. Therefore, these terms have to be encoded manually to ensure a robust dataset.
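
Python's standard `unicodedata` module shows what normalization buys you. The Croatian letter "č" can be stored either as one precomposed code point or as "c" plus a combining caron; the two render identically but compare unequal until both are normalized to the same form (NFC here). The compatibility form NFKC additionally folds full-width Latin letters and digits, which are common in Chinese text, into their ASCII equivalents. The helper name `normalize_text` is a hypothetical choice for illustration.

```python
import unicodedata


def normalize_text(text, form="NFC"):
    """Normalize `text` to one Unicode normalization form (NFC, NFD, NFKC or NFKD)."""
    return unicodedata.normalize(form, text)


precomposed = "\u010d"   # "č" as one code point: LATIN SMALL LETTER C WITH CARON
decomposed = "c\u030c"   # "c" followed by COMBINING CARON; also renders as "č"

print(precomposed == decomposed)          # False: different code-point sequences
print(len(precomposed), len(decomposed))  # 1 2
print(normalize_text(precomposed) == normalize_text(decomposed))  # True after NFC

# Full-width "ＮＬＰ２０２０", written with escapes to avoid copy-paste confusion.
fullwidth = "\uff2e\uff2c\uff30\uff12\uff10\uff12\uff10"
print(normalize_text(fullwidth, "NFKC"))  # NLP2020
```

NFC is usually the safer default for accented text because it keeps distinct characters distinct. NFKC is lossier (it also rewrites full-width punctuation such as the full-width comma into ASCII), so it is worth checking whether that is acceptable for a given dataset.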

Finally, the dataset can be divided into training and test splits and passed through the machine learning process of feature engineering, modeling, evaluation, and refinement.
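
A minimal sketch of the split step in plain Python, assuming the dataset is a list of `(text, label)` pairs; the function name `split_dataset`, the sample data, and the 80/20 ratio are illustrative choices. Libraries such as scikit-learn offer an equivalent `train_test_split` function.

```python
import random


def split_dataset(examples, test_fraction=0.2, seed=42):
    """Shuffle a copy of `examples` and return (train, test) lists.

    A fixed seed keeps the split reproducible between runs."""
    if not 0 < test_fraction < 1:
        raise ValueError("test_fraction must be strictly between 0 and 1")
    shuffled = list(examples)  # copy so the caller's list is not reordered
    random.Random(seed).shuffle(shuffled)
    n_test = round(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]


# Hypothetical labelled examples: (sentence, sentiment) pairs.
examples = [(f"sentence {i}", "pos" if i % 2 else "neg") for i in range(10)]
train, test = split_dataset(examples)
print(len(train), len(test))  # 8 2
```

Keeping the test split untouched until evaluation is what makes the later "evaluation and refinement" steps meaningful.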

One commonly used NLP tool is Google’s Bidirectional Encoder Representations from Transformers (BERT), which is purported to develop a “state of the art” model in about 30 minutes on a single Tensor Processing Unit (TPU). Its GitHub page reports that the 100 languages with the largest Wikipedias are supported, but the system has actually been evaluated and refined on only 15 languages. While BERT technically supports more languages, lower accuracy and a lack of proper testing limit how widely the technology can be applied. Other NLP tools, such as Word2Vec and the Natural Language Toolkit (NLTK), have similar limitations.
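
The gap between "supported" and "evaluated" languages is easier to see when metrics are always reported per language. The sketch below assumes evaluation results are available as `(language, gold_label, predicted_label)` tuples keyed by ISO 639-1 codes; the function names and the sample records are hypothetical and are not taken from BERT's own evaluation.

```python
from collections import defaultdict


def accuracy_by_language(records):
    """Compute accuracy separately for each language.

    `records` is an iterable of (language, gold_label, predicted_label) tuples."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for lang, gold, pred in records:
        total[lang] += 1
        correct[lang] += int(gold == pred)
    return {lang: correct[lang] / total[lang] for lang in total}


def untested_languages(supported, records):
    """Return the supported languages that have no evaluation records at all."""
    tested = {lang for lang, _, _ in records}
    return sorted(set(supported) - tested)


# Hypothetical results for English (en) and Croatian (hr);
# Swahili (sw) is listed as supported but has no test data.
records = [
    ("en", "pos", "pos"),
    ("en", "neg", "neg"),
    ("hr", "pos", "neg"),
    ("hr", "neg", "neg"),
]
print(accuracy_by_language(records))                    # {'en': 1.0, 'hr': 0.5}
print(untested_languages(["en", "hr", "sw"], records))  # ['sw']
```

Reporting the untested list next to the scores makes it explicit when a language is nominally supported but has never been measured.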

In summary, the NLP pipeline is a challenge for less widely used languages. Their datasets are smaller, often need augmentation, and take time and effort to clean. The less access you have to native-language resources, the less data is available for building an NLP pipeline. This makes the barrier to entry for less widely used languages very high, and in many cases prohibitively so.

The Importance of Diverse Language Support in NLP

There are three overarching perspectives that support expanding NLP to more languages:

- The reinforcement of social disadvantages

- Normative biases

- Language expansion to improve ML technology

Let’s look at each in more detail:

Reinforcement of Social Disadvantages

From a societal perspective, it is important to keep in mind that technology is only accessible when its tools are available in your language. At a basic level, the lack of spell-check tools disadvantages people who speak and write less common languages, and this disparity grows further up the technology chain.

What’s more, psychological research suggests that the language you speak shapes the way you think. A built-in language preference in the systems that drive the internet therefore also embeds the societal norms of the dominant languages.

In practice, well-supported systems keep thriving, while introducing new elements into a deeply ingrained program is hard. This means that if NLP continues to develop without incorporating a diverse range of languages, it will be even harder to add them in the future, endangering the world’s linguistic diversity.

Normative Biases

English and English-adjacent languages are not representative of other world languages, as they have grammatical structures that many languages do not share. Yet by mostly supporting English, the internet and other technologies are progressively treating English as the normal, default language setting.

When an otherwise language-agnostic system is trained on English, it learns the norms and structures of that specific language, along with all the cultural implications that come with that limitation. This one-sided approach will only become more apparent as NLP is applied to more intelligent processes that serve an international audience.

Language Expansion to Improve ML Technology

When we apply machine learning techniques to only a handful of languages, we build implicit bias into the systems. As machine learning and NLP continue to advance while supporting only a few languages, we not only make it harder to introduce new languages but also risk making it fundamentally impossible to do so.

For example, subword tokenization performs very poorly on languages that feature reduplication (repeating all or part of a word to change its meaning), a feature common to many languages, such as Afrikaans, Irish, Punjabi, and Armenian.
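
To make "subword tokenization" concrete, here is a toy version of byte-pair encoding (BPE), one common subword method: it repeatedly merges the most frequent adjacent pair of symbols in a training corpus. The corpus, the function names, and the omission of end-of-word markers are simplifying assumptions, and the sketch only illustrates the mechanism; it does not reproduce the performance problem described above. What it does show is that segmentation is driven purely by corpus frequency, and to the tokenizer a reduplicated form is just a longer string.

```python
from collections import Counter


def get_pair_counts(vocab):
    """Count adjacent symbol pairs in a {tuple_of_symbols: frequency} vocabulary."""
    pairs = Counter()
    for symbols, freq in vocab.items():
        for a, b in zip(symbols, symbols[1:]):
            pairs[(a, b)] += freq
    return pairs


def merge_pair(pair, vocab):
    """Replace every occurrence of `pair` with one merged symbol."""
    merged = {}
    for symbols, freq in vocab.items():
        out, i = [], 0
        while i < len(symbols):
            if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
                out.append(symbols[i] + symbols[i + 1])
                i += 2
            else:
                out.append(symbols[i])
                i += 1
        merged[tuple(out)] = merged.get(tuple(out), 0) + freq
    return merged


def learn_bpe(word_counts, num_merges):
    """Learn an ordered list of merges from {word: frequency}."""
    vocab = {tuple(word): freq for word, freq in word_counts.items()}
    merges = []
    for _ in range(num_merges):
        pairs = get_pair_counts(vocab)
        if not pairs:
            break
        best = max(pairs, key=pairs.get)  # ties go to the pair seen first
        merges.append(best)
        vocab = merge_pair(best, vocab)
    return merges


def apply_bpe(word, merges):
    """Segment `word` by replaying the learned merges in order."""
    symbols = tuple(word)
    for pair in merges:
        (symbols,) = merge_pair(pair, {symbols: 1}).keys()
    return list(symbols)


# Hypothetical toy corpus of word frequencies.
corpus = {"low": 5, "lower": 2, "newest": 6, "widest": 3}
merges = learn_bpe(corpus, num_merges=10)
print(apply_bpe("lowlow", merges))      # ['low', 'low']
print(apply_bpe("lowerlower", merges))  # ['low', 'e', 'r', 'low', 'e', 'r']
```

Here "lowlow" happens to split into two copies of a frequent base, while "lowerlower" falls into six pieces because "lower" was never merged into a single unit. In neither case does the tokenizer record that the word is a repetition; that is left for the model to learn from data.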

Languages also follow a variety of word-order norms, which tend to trip up the common neural models used in English-based NLP.

What Can Be Done?

In the current discourse around NLP, when the words “natural language” are used, the general assumption is that the researcher is working with an English dataset. To break out of this mold and raise awareness of other languages, we should first of all always name the language being worked on. This practice of always stating the language a researcher is working on is informally known as the Bender Rule.

Simple awareness of the issue, of course, is not enough. However, being mindful of it can help in developing more broadly applicable tools.

When looking to introduce more languages into an NLP pipeline, it is also important to consider the size of the dataset. If you are creating a new dataset, a significant portion of your budget should go toward creating a dataset in another language. Of course, further research into optimizing current cleaning and annotation tools for other languages is also vital to broadening NLP technologies around the globe.