PyTorch Regression

The PyTorch Lightning framework was built to make deep learning research faster. Why write endless engineering boilerplate? Why limit your training to a single GPU? How can you easily reproduce your old experiments?

Our mission is to minimize engineering cognitive load and maximize efficiency, giving you the latest AI features and engineering best practices so your models scale at lightning speed. All automated Lightning features are rigorously tested with every change, which reduces the room for potential errors.

To make the Lightning experience even more comprehensive, we want to share implementations that meet the same Lightning standards. PyTorch Lightning Bolts is a collection of PyTorch Lightning implementations of popular models that are well tested and optimized for speed on multiple GPUs and TPUs.

In this article, we’ll give you a quick glimpse of the new Lightning Bolts collection and how you can use it to try crazy research ideas with just a few lines of code!

From Lightning comes Bolts

We’re happy to introduce Lightning Bolts, a new community-built deep learning research and production toolbox, featuring a collection of well-established and SOTA models and components, pre-trained weights, callbacks, loss functions, datasets, and data modules.

Everything is implemented in Lightning, tested daily, benchmarked, documented, and works on CPUs, GPUs, and TPUs, including with 16-bit precision.

What separates Bolts from all the other libraries out there is that Bolts is built by and used by AI researchers. This means every single Bolts component is modularized so that it can be easily extended or mixed with arbitrary parts of the rest of the code base. Bolts models are designed for you to explore new research ideas: just subclass, override, and train!

Here are a few tools available:

- Multi-GPU and TPU-enabled linear regression and logistic regression.

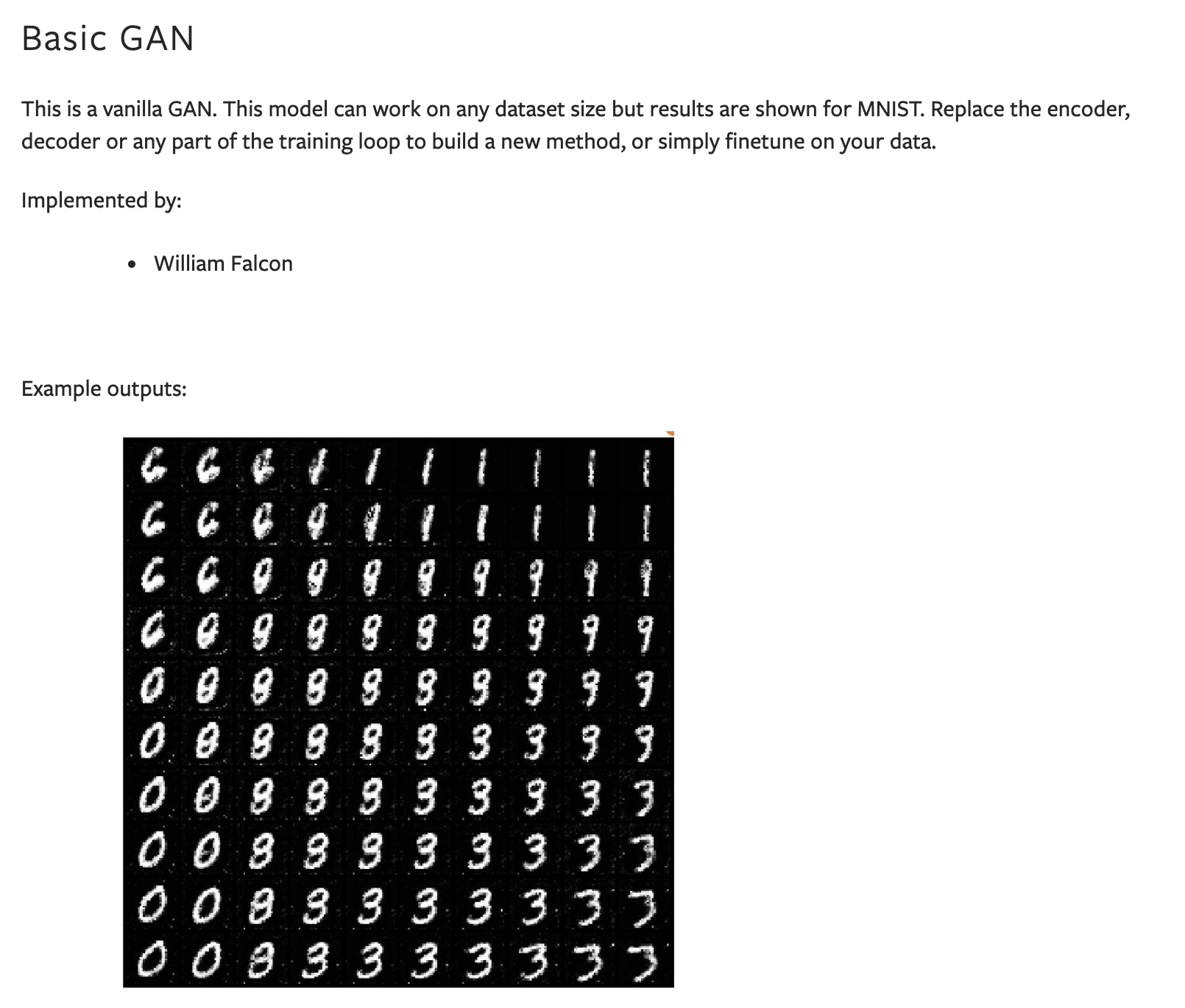

- Popular models such as GANs, VAEs, SimCLR, and CPC (we have the first verified implementation of CPC v2 outside of DeepMind!).

- Full datasets that specify the transforms and the train, test, and validation splits automatically.

Lightning Bolts Use Cases

Tested Baselines

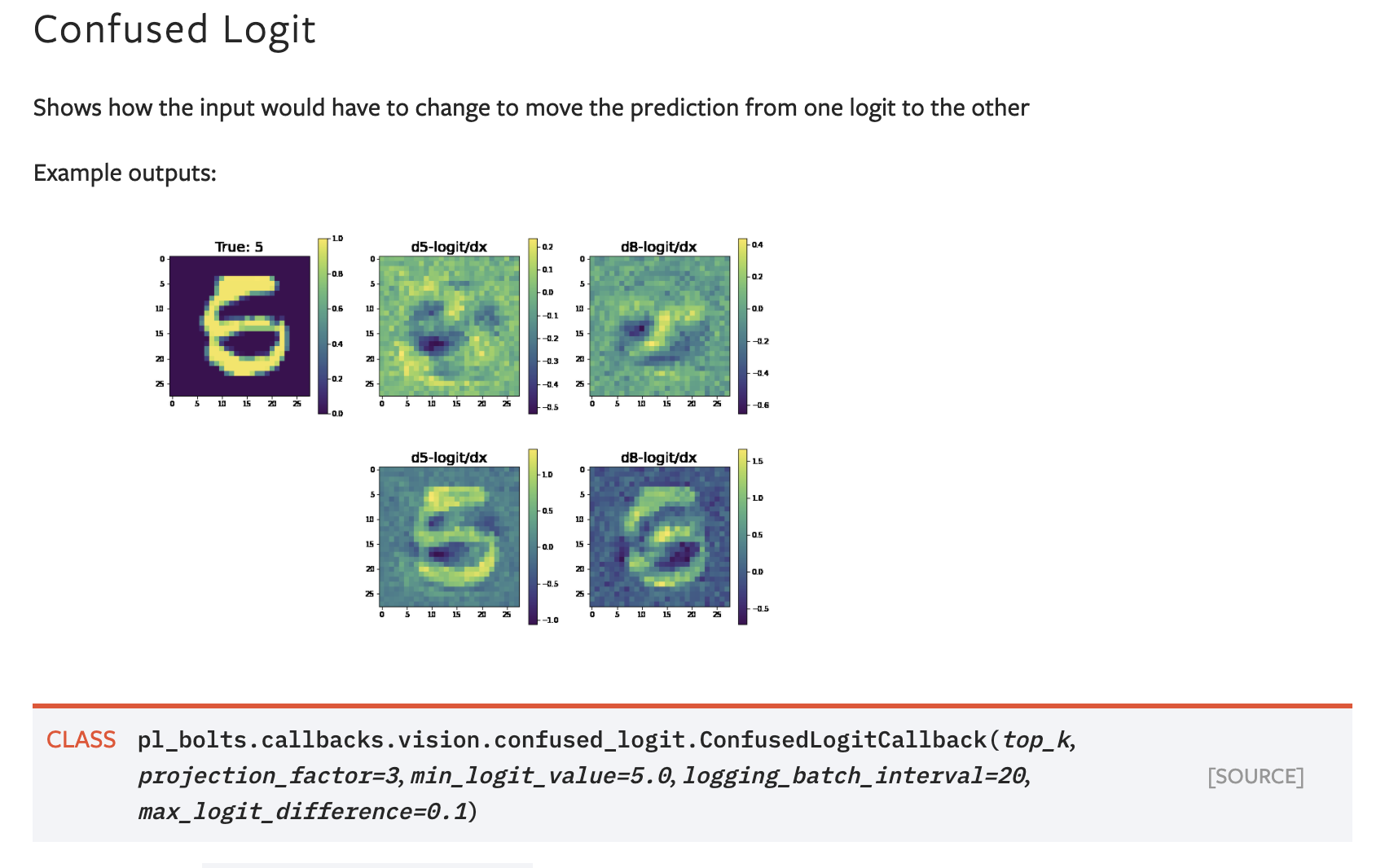

Bolts has rigorously tested and benchmarked baselines. From VAEs to GANs to GPT to self-supervised models — you don’t have to spend months implementing the baselines to try new ideas. Instead, subclass one of ours and try your idea!

Predicting on your data

You can use Bolts pre-trained weights on most of the standard datasets. This is useful when you don’t have enough data, time, or money to do your own training. It can also be used as a benchmark to improve or test your own model.

For example, you could use a pre-trained VAE to generate features for an image dataset and compare them to the features produced by CPC.

Subclass and train

Bolts modules are written to be easily extended for research. For example, you can subclass SimCLR and make changes to the NT-Xent loss.
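
To make the NT-Xent loss mentioned above concrete, here is a minimal pure-Python sketch of the normalized temperature-scaled cross-entropy loss. It is an illustration only, not the Bolts implementation: the function names `l2_normalize` and `nt_xent_loss`, the default temperature of 0.5, and the toy two-dimensional embeddings are all assumptions made for this example. In a subclass of the SimCLR module you would swap in your own variant of a function like this.

```python
import math


def l2_normalize(vec):
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec]


def nt_xent_loss(view_a, view_b, temperature=0.5):
    """NT-Xent loss over N positive pairs (view_a[i], view_b[i]).

    Hypothetical helper for illustration; not part of Lightning Bolts.
    """
    n = len(view_a)
    z = [l2_normalize(v) for v in view_a + view_b]  # 2N embeddings in one list
    total = 0.0
    for i in range(2 * n):
        positive = (i + n) % (2 * n)  # index of the other view of the same sample
        # Temperature-scaled cosine similarity to every other embedding (self excluded).
        logits = {
            k: sum(a * b for a, b in zip(z[i], z[k])) / temperature
            for k in range(2 * n)
            if k != i
        }
        # Numerically stable log-sum-exp over the denominator terms.
        peak = max(logits.values())
        log_denominator = peak + math.log(
            sum(math.exp(v - peak) for v in logits.values())
        )
        total += log_denominator - logits[positive]
    return total / (2 * n)


# Two views that agree per sample versus two views whose pairing is swapped.
aligned = nt_xent_loss([[1.0, 0.0], [0.0, 1.0]], [[1.0, 0.0], [0.0, 1.0]])
mismatched = nt_xent_loss([[1.0, 0.0], [0.0, 1.0]], [[0.0, 1.0], [1.0, 0.0]])
print(f"aligned pairs: {aligned:.4f}, mismatched pairs: {mismatched:.4f}")
```

The loss is lower when the two views of each sample point in the same direction, which is the behavior a modified loss would typically need to preserve.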

Mix and Match

The beauty of Bolts is that it’s easy to plug and play with your Lightning modules or any PyTorch data set. Mix and match data, modules, and components as you please!

    model = ImageGPT(datamodule=FashionMNISTDataModule(PATH))

Or pass in any dataset of your choice:

    from pytorch_lightning import Trainer
    from torch.utils.data import DataLoader

    model = ImageGPT()
    Trainer().fit(
        model,
        train_dataloader=DataLoader(…),
        val_dataloaders=DataLoader(…),  # this keyword is plural in Trainer.fit
    )

And train on any hardware accelerator, using all of the Lightning Trainer flags!

    import pytorch_lightning as pl

    model = ImageGPT(datamodule=FashionMNISTDataModule(PATH))

    # CPUs
    pl.Trainer().fit(model)

    # GPUs
    pl.Trainer(gpus=8).fit(model)

    # TPUs
    pl.Trainer(tpu_cores=8).fit(model)

For more details, read the docs.

Linear regression on multiple GPUs and TPUs

Lightning Bolts includes a collection of non-deep learning algorithms that can train on multiple GPUs and TPUs.

Here’s an example running logistic regression on ImageNet on 2 GPUs with 16-bit precision.

This is the summary for the above setup:

- 1,281,167 images (in ImageNet)

- 150,528 input features (224x224x3 pixels)

- 512 batch size (256 per GPU)

- 16-bit precision

- 2 V100 GPUs

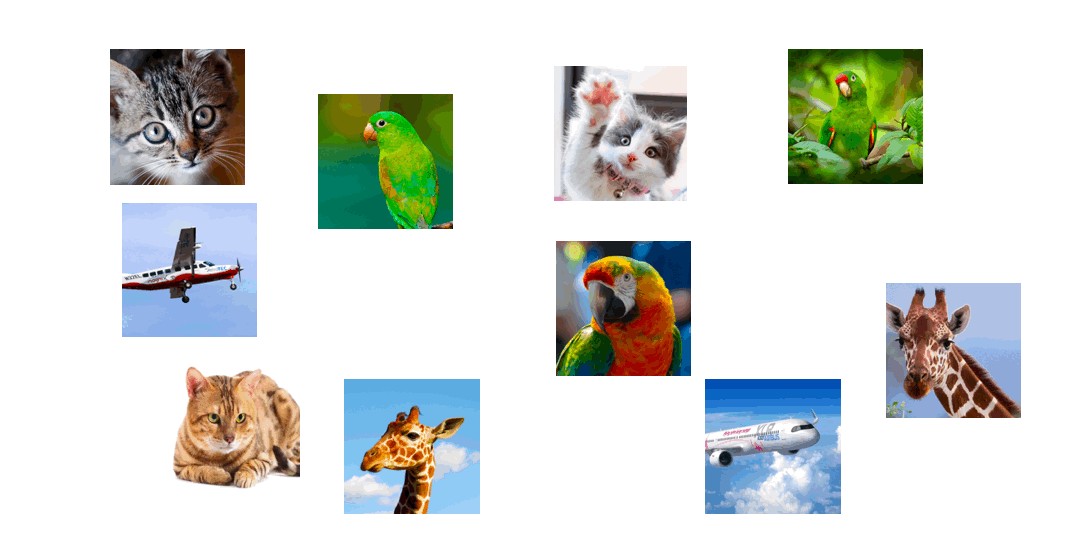
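
The numbers in the summary above can be checked with a few lines of arithmetic. The sketch below is a back-of-the-envelope calculation, not part of Bolts. It assumes the standard 1,000-class ImageNet label set (the article does not state the number of classes) and that the final partial batch of each epoch is kept.

```python
import math

# Figures taken from the setup summary.
IMAGE_HEIGHT, IMAGE_WIDTH, CHANNELS = 224, 224, 3
NUM_TRAIN_IMAGES = 1_281_167
BATCH_SIZE = 512
NUM_GPUS = 2

# Assumption: standard ImageNet-1k label set, not stated in the article.
NUM_CLASSES = 1000

# Each image is flattened into one long feature vector.
input_features = IMAGE_HEIGHT * IMAGE_WIDTH * CHANNELS

# The global batch is split evenly across the GPUs.
per_gpu_batch = BATCH_SIZE // NUM_GPUS

# Optimizer steps needed to see every image once (last partial batch kept).
steps_per_epoch = math.ceil(NUM_TRAIN_IMAGES / BATCH_SIZE)

# Multinomial logistic regression is one weight matrix plus one bias vector.
num_parameters = input_features * NUM_CLASSES + NUM_CLASSES

print(f"input features:  {input_features:,}")
print(f"per-GPU batch:   {per_gpu_batch}")
print(f"steps per epoch: {steps_per_epoch:,}")
print(f"parameters:      {num_parameters:,}")
```

Under these assumptions, even this "non-deep" model carries roughly 150 million parameters, which is why spreading the batch across several GPUs is useful.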

Self-Supervised Learning Models

Bolts houses a collection of many of the current state-of-the-art self-supervised algorithms.

In their recent paper, “A Framework For Contrastive Self-Supervised Learning And Designing A New Approach,” William Falcon and Kyunghyun Cho explore and implement many of the latest self-supervised learning algorithms. Those implementations are available in Bolts under the self-supervised learning suite.

You can use self-supervised learning algorithms to extract image features, to train on unlabeled data, or to mix and match parts of the models to create your own new method:

    from pl_bolts.models.self_supervised import CPCV2
    from pl_bolts.losses.self_supervised_learning import FeatureMapContrastiveTask

    # Use an AMDIM-style contrastive task inside a CPC v2 model
    amdim_task = FeatureMapContrastiveTask(comparisons='01, 11, 02', bidirectional=True)
    model = CPCV2(contrastive_task=amdim_task)

You can find Bolts implementations for:

- MOCO-V2

- SimCLR (check out our video series on SimCLR, which explores the paper in detail and implements it from scratch)
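
The `comparisons='01, 11, 02'` argument in the CPCV2 snippet above is easier to reason about once it is expanded into index pairs. The sketch below shows one plausible reading, assuming each two-digit token names an (anchor feature map, positive feature map) index pair. `parse_comparisons` is a hypothetical helper written for this example and is not the Bolts API; check the Bolts documentation for the exact semantics, including what `bidirectional` adds.

```python
def parse_comparisons(spec):
    """Expand a spec such as '01, 11, 02' into (anchor_map, positive_map) index pairs.

    Hypothetical helper for illustration; assumes single-digit, zero-indexed maps.
    """
    pairs = []
    for token in spec.split(","):
        token = token.strip()
        if len(token) != 2 or not token.isdigit():
            raise ValueError(f"expected a two-digit token, got {token!r}")
        pairs.append((int(token[0]), int(token[1])))
    return pairs


pairs = parse_comparisons("01, 11, 02")
for anchor_map, positive_map in pairs:
    print(f"compare anchor map {anchor_map} with positive map {positive_map}")
```

Read this way, the spec above asks for three comparisons between feature maps taken from different depths of the encoder.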

Contribute to Bolts!

Bolts models and components are built by the Lightning community. The Lightning team guarantees that contributions are:

- Rigorously tested (on CPUs, GPUs, TPUs).

- Rigorously documented.

- Standardized via PyTorch Lightning.

- Optimized for reproducibility.

- Checked for correctness.

Want to get your implementation tested on CPUs, GPUs, TPUs, and mixed precision, and help us grow? Consider contributing your model to Bolts (you can even do it from your own repo) to make it available to the Lightning community!

You can find a list of model suggestions in our GitHub issues. Your models don’t have to be state-of-the-art to get added to Bolts; they just need to be well designed and tested. If you have any ideas, start a discussion on our Slack channel.
