Note this is roughly based on a presentation I made back in February at the Boston Data Science Meetup Group. You can find the full slide deck here. I have also included some more recent experiences and insights as well as answers to common questions that I have encountered.

请注意,这大致基于我2月份在波士顿数据科学聚会组织所做的演讲。 您可以在此处找到完整的幻灯片。 我还提供了一些最新的经验和见解,以及对我遇到的常见问题的解答。

背景 (Background)

When I first started my river forecasting research, I envisioned using just a notebook. However, it became clear to me that effectively tracking experiments and optimizing hyper-parameters would play a crucial role in the success of any river flow model; particularly, as I wanted to forecast river flows for over 9,000+ rivers around the United States. This led me on the track to develop flow forecast which is now a multi-purpose deep learning for time series framework.

刚开始进行河流预报研究时,我设想只使用笔记本电脑。 但是,对我来说很清楚,有效跟踪实验和优化超参数将对任何河流流量模型的成功发挥至关重要的作用。 特别是因为我想预测美国周围超过9000条以上的河流流量。 这使我走上了开发流量预测的轨道,该流程现在已成为时间序列框架的多功能深度学习。

重现性 (Reproducibility)

One of the biggest challenges in machine learning (particularly deep learning) is being able to reproduce experiment results. Several others have touched on this issue, so I will not spend too much time discussing it in detail. For a good overview of why reproducibility is important see Joel Grus’s talk and slide deck. The TLDR: is that in order to build upon prior research we need to make sure that it worked in the first place. Similarly, to deploy models we have to be able to easily find the artifacts of the best “one.”

机器学习(尤其是深度学习)中最大的挑战之一是如何重现实验结果。 其他几个人已经谈到了这个问题,所以我不会花太多时间详细讨论它。 有关为什么重现性很重要的概述,请参阅Joel Grus的演讲和幻灯片。 TLDR:是为了基于先前的研究,我们需要确保它首先起作用。 同样,要部署模型,我们必须能够轻松找到最佳“一个”的工件。

One of my first recommendations to enable reproducible experiments is NOT to use Jupyter Notebooks or Colab (at least not in their entirety). Jupyter Notebooks and Colab are great for rapidly prototyping a model or leveraging an existing code-base (as I will discuss in a second) to run experiments, but not for building out your models or other features.

我能够进行可重复实验的第一个建议是不要使用Jupyter Notebooks或Colab(至少不要全部使用)。 Jupyter Notebooks和Colab非常适合快速建立模型原型或利用现有代码库(如我稍后将讨论)来运行实验,但不适用于构建模型或其他功能。

编写高质量代码 (Write High Quality Code)

Write unit tests (preferably as you are writing the code)

编写单元测试(最好是在编写代码时)

I have found test driven development very effective in the machine learning space. One of the first things people ask me is how do I write tests for a model that I don’t what its outputs will be? Fundamentally your unit tests should fall into one of the four categories:

我发现测试驱动的开发在机器学习领域非常有效。 人们首先问我的第一件事是,我如何为模型编写测试,而不知道模型的输出是什么? 从根本上讲,您的单元测试应该属于以下四类之一:

(a) Test that your model’s returned representations are the proper size.

(a)测试模型返回的表示形式是否正确。

(b) Test that your models initialize properly for the parameters you specify and that the right parameters are trainable

(b)测试您的模型是否针对您指定的参数正确初始化,并且正确的参数是可训练的

Another relatively simple unit test is make sure that model initializes in the way you expect it to and the proper parameters are trainable.

另一个相对简单的单元测试是确保模型按照您期望的方式初始化,并且正确的参数是可训练的。

(c ) Test the logic of custom loss functions and training loops:

(c)测试自定义损失函数和训练循环的逻辑:

People often ask me how to do this? I’ve found the best way is to create a dummy model with a known result to test the correctness of custom loss functions, metrics, and training loops. For instance you could create a PyTorch model that only returns 0. Then use that to write a unit test that checks if the loss and the training loop are correct.

人们经常问我该怎么做? 我发现最好的方法是创建一个具有已知结果的虚拟模型,以测试自定义损失函数,指标和训练循环的正确性。 例如,您可以创建一个仅返回0的PyTorch模型。然后使用该模型编写一个单元测试,以检查损失和训练循环是否正确。

(d) Test the logic of data pre-processing/loaders

(d)测试数据预处理/加载器的逻辑

Another major thing to cover is to make sure your data loaders are outputting data in the format you expect and handling problematic values. Problems with data quality are a huge issue with machine learning so it is important to make sure your data loaders are properly tested. For instance, you should write tests to check NaN/Null values are filled or the rows are dropped.

要涵盖的另一项主要内容是确保数据加载器以期望的格式输出数据并处理有问题的值。 数据质量问题是机器学习的一个大问题,因此确保正确测试数据加载器非常重要。 例如,您应该编写测试以检查是否填充了NaN / Null值或删除了行。

Finally, I recommend using tools CodeCov and Codefactor. They are useful for automatically determining you code test coverage.

最后,我建议使用工具CodeCov和Codefactor。 它们对于自动确定您的代码测试覆盖率很有用。

Recommended tools: Travis-CI, CodeCov

2. Utilize integration tests for end-to-end code coverage

2.利用集成测试来覆盖端到端代码

Having unit tests is good, but it is also important to make sure your code runs properly in an end-to-end fashion. For instance, sometimes I’ve found a single model’s unit tests run, only to find out the way I was passing the configuration file to the model didn’t work. As a result I now add integration tests for every new model I add to the repository. The integration tests can also demonstrate how to use your models. For instance, the configuration files I use for my integration tests I often leverage as the backbone of my full parameter sweeps.

进行单元测试是很好的,但是确保您的代码以端到端的方式正确运行也很重要。 例如,有时我发现运行单个模型的单元测试,只是发现我将配置文件传递给模型的方式不起作用。 结果,我现在为添加到存储库中的每个新模型添加集成测试。 集成测试还可以演示如何使用模型。 例如,我经常将用于集成测试的配置文件用作完整参数扫描的基础。

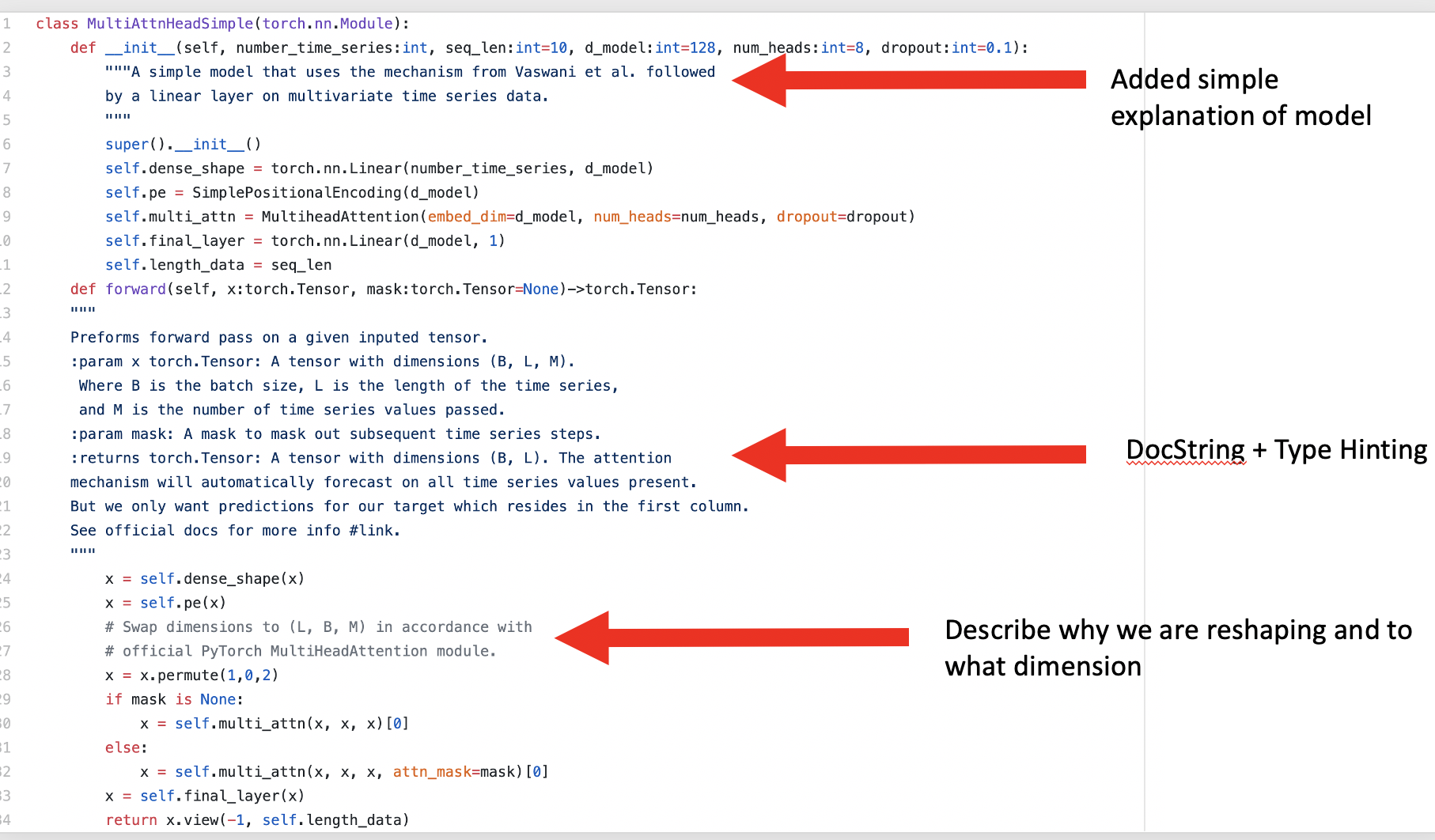

3. Utilize both type hints and document strings:

3.同时使用类型提示和文档字符串:

Having both type hints and document strings greatly increases readability; particularly when you are passing around tensors. When I’m coding I frequently have to look back at the doc-strings to remember what shape my tensors are. Without them I have to manually print the shape. which wastes a lot of time and potentially adds garbage that you later forget to remove.

同时具有类型提示和文档字符串可大大提高可读性; 特别是当您绕过张量时。 在编码时,我经常不得不回顾文档字符串,以记住张量是什么形状。 没有它们,我必须手动打印形状。 这会浪费大量时间,并可能添加垃圾,以后您将其删除。

4. Create good documentation

4.创建好的文档

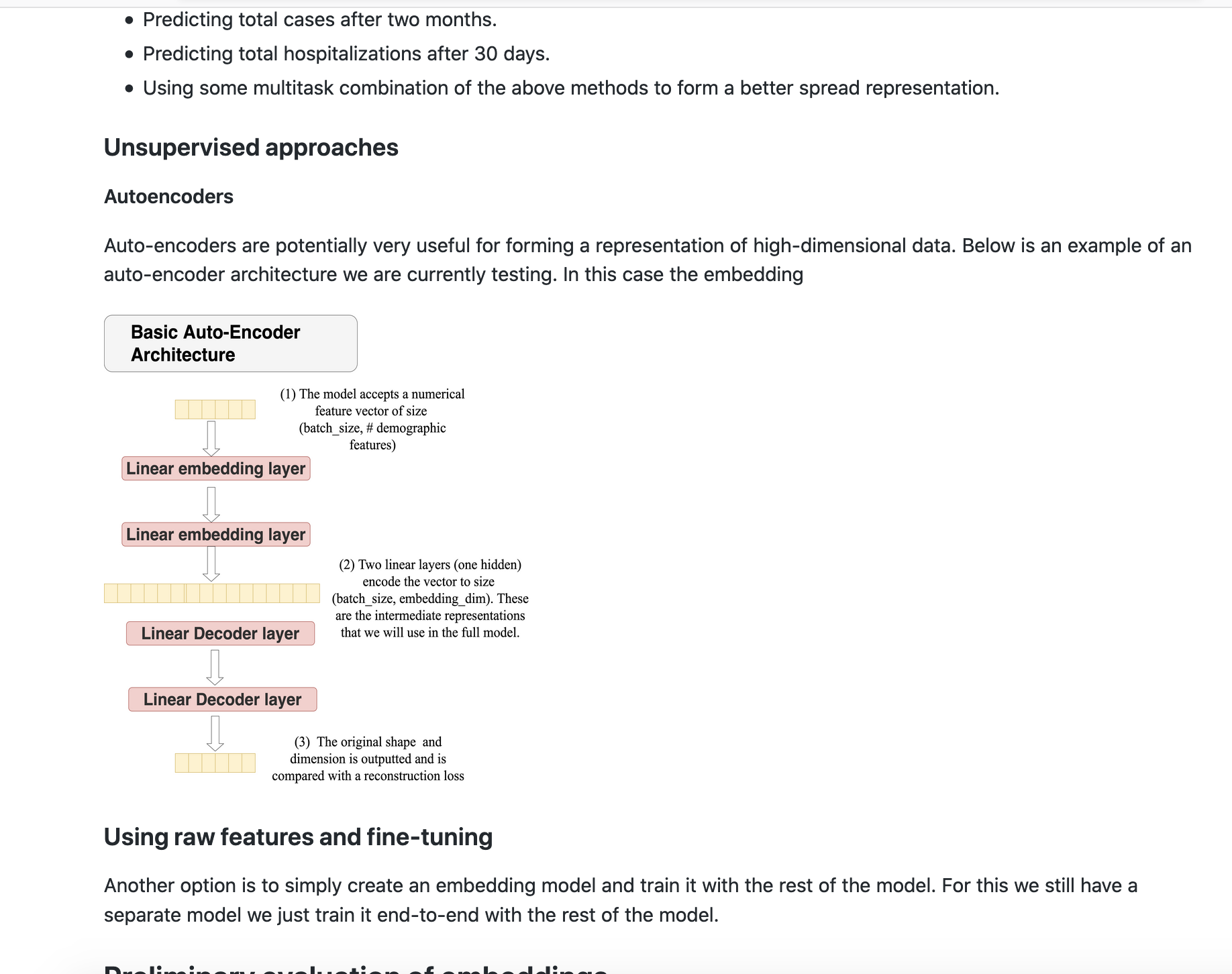

I’ve found the best time to create documentation for machine learning projects is while I’m writing code or even before. Often laying out the architectural design of ML models and how they will interface with existing classes saves me considerable time when implementing the code as well as forces me to think critically about the ML decisions I make. Additionally, once the implementation is complete you already have good start on informing your peers/researchers on how to use your model.

我发现为机器学习项目创建文档的最佳时间是在编写代码时,甚至之前。 通常,布局ML模型的体系结构设计以及它们与现有类的接口方式将为我节省实施代码时的大量时间,并迫使我认真考虑所做出的ML决策。 此外,一旦实现完成,您就已经有了一个良好的开端,可以告知同行/研究人员如何使用模型。

Of course you will need to add some things like specifics of what parameters you pass and their types. For documentation, I generally record broader architectural decisions in Confluence (or GH Wiki pages if unavailable) whereas specifics about the code/parameters I include in ReadTheDocs. As we will talk about in Part II. having good initial documentation also makes it pretty simple to add model results and explain why your design works.

当然,您将需要添加一些东西,例如传递的参数及其类型的细节。 对于文档,我通常在Confluence (或GH Wiki页面,如果不可用)中记录更广泛的体系结构决策,而我在ReadTheDocs中包含的代码/参数的细节。 正如我们将在第二部分中讨论的那样。 拥有良好的初始文档也使添加模型结果并解释设计为何有效非常简单。

Tools: ReadTheDocs, Confluence

工具: ReadTheDocs , Confluence

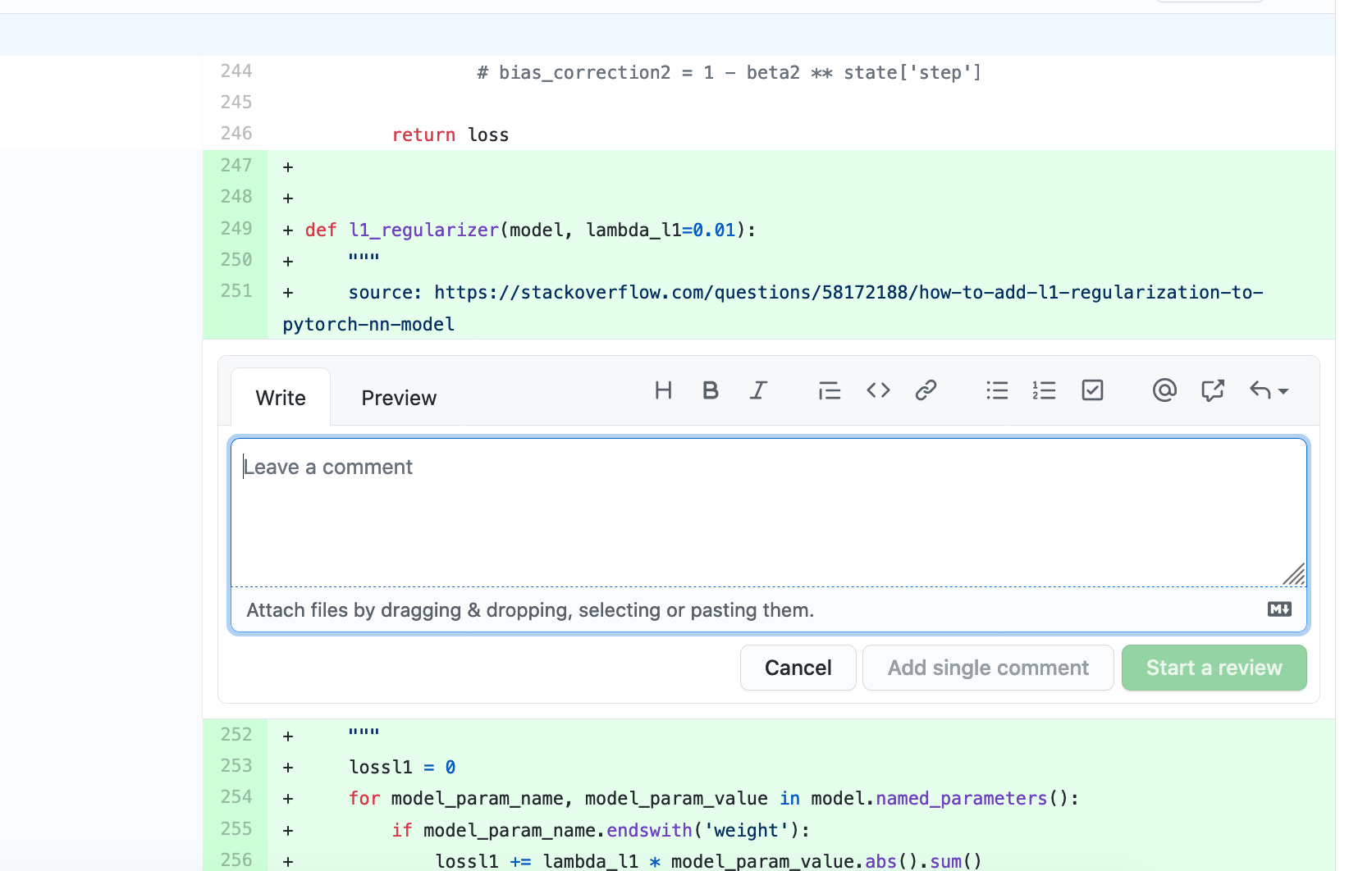

5. Leverage peer reviews

5.利用同行评审

Peer review is another critical step in making sure your code is correct before you run your experiments. Often times, a second pair of eyes can help you avoid all sorts of problems. This is another good reason not to use Jupyter Notebooks as reviewing notebook diffs is almost impossible.

同行评审是在运行实验之前确保代码正确的另一个关键步骤。 通常,第二只眼睛可以帮助您避免各种问题。 这是不使用Jupyter笔记本电脑的另一个很好的理由,因为几乎不可能检查笔记本电脑的差异。

As a peer reviewer it is also important to take time to go through the code line by line and comment where you don’t understand something. I often see reviewers just quickly approve all changes.

作为同行评审者,花时间逐行浏览代码并在您不了解的地方添加注释也很重要。 我经常看到审阅者很快就批准了所有更改。

A recent example: While adding meta-data for DA-RNN, I encountered a bug. This section of code did have an integration test but unfortunately it lacked a comprehensive unit test. As a result several of the experiments that I ran and that I thought used meta-data turned out did not. The problem was very simple; on line 23 I forget to include meta-data while calling the encoder.

最近的一个示例:在为DA-RNN添加元数据时,我遇到了一个错误。 这部分代码的确进行了集成测试,但不幸的是,它缺少全面的单元测试。 结果,我进行了一些我认为使用了元数据的实验,结果却没有。 问题很简单。 在第23行,我忘记了在调用编码器时包含元数据。

This problem likely could have been averted by writing a unit tests to check that the parameters of the meta-data model wereupdated on the forward pass or by having a test to check that the result with and without the meta-data were not equal.

通过编写单元测试以检查元数据模型的参数是否在正向传递时进行更新,或者通过进行测试以检查有无元数据的结果不相等,可能可以避免此问题。

In Part II of this series I will talk about how to actually make your experiments reproducible now that you have high quality and (mostly) bug free code. Specifically, I will look at things like data versioning, logging experiment results, and tracking parameters. So

在本系列的第二部分中,我将讨论既然您拥有高质量且(大多数)无错误的代码,那么如何使您的实验可重现。 具体来说,我将研究数据版本控制,记录实验结果和跟踪参数。 所以

相关文章和资源:(Relevant Articles and Resources:)

Unit testing machine learning code

1485

1485

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?